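0. Install aws-cli (link)

1. Install kubectl (link)

2. Install eksctl (link)

3. Create an IAM user for eksctl, grant permissions, and create the cluster (link)

The list of individual permissions is long, so for now I attached the AWS managed policy AdministratorAccess and went ahead with that.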
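For reference, attaching the managed policy from the CLI might look like the sketch below. The user name `eksctl-user` is a hypothetical placeholder, since the post does not name the IAM user, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
# Sketch: attach the AWS managed AdministratorAccess policy to an IAM user.
# IAM_USER is a hypothetical name; the original post does not state it.
IAM_USER="${IAM_USER:-eksctl-user}"
POLICY_ARN="arn:aws:iam::aws:policy/AdministratorAccess"

# Print the command by default; execute it only when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Attach the policy, then list attached policies to confirm it took effect.
run aws iam attach-user-policy --user-name "$IAM_USER" --policy-arn "$POLICY_ARN"
run aws iam list-attached-user-policies --user-name "$IAM_USER"
```

AdministratorAccess is much broader than eksctl needs, so this shortcut is best kept to a short-lived test account.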

root@elkhost:~# cat cluster.yml

apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: elk-test
  region: ap-southeast-1

nodeGroups:
  - name: ng-1
    instanceType: t3.medium
    desiredCapacity: 2
    volumeSize: 10

root@elkhost:~# eksctl create cluster -f cluster.yml

2022-12-16 19:20:57 [ℹ] eksctl version 0.122.0

2022-12-16 19:20:57 [ℹ] using region ap-southeast-1

2022-12-16 19:20:57 [ℹ] setting availability zones to [ap-southeast-1a ap-southeast-1b ap-southeast-1c]

2022-12-16 19:20:57 [ℹ] subnets for ap-southeast-1a - public:192.168.0.0/19 private:192.168.96.0/19

2022-12-16 19:20:57 [ℹ] subnets for ap-southeast-1b - public:192.168.32.0/19 private:192.168.128.0/19

2022-12-16 19:20:57 [ℹ] subnets for ap-southeast-1c - public:192.168.64.0/19 private:192.168.160.0/19

...

...

2022-12-16 19:38:39 [ℹ] nodegroup "ng-1" has 2 node(s)

2022-12-16 19:38:39 [ℹ] node "ip-192-168-61-186.ap-southeast-1.compute.internal" is ready

2022-12-16 19:38:39 [ℹ] node "ip-192-168-7-191.ap-southeast-1.compute.internal" is ready

2022-12-16 19:38:42 [ℹ] kubectl command should work with "/root/.kube/config", try 'kubectl get nodes'

2022-12-16 19:38:42 [✔] EKS cluster "elk-test" in "ap-southeast-1" region is ready

4. Deploy ECK with the Quick Start (link)
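Before installing the operator, it may be worth confirming that both worker nodes are actually Ready, as the eksctl log suggests. The sketch below assumes the kubeconfig written by eksctl is the active one; it prints the commands by default and runs them only with `DRY_RUN=0`.

```shell
# Sketch: confirm the worker nodes are Ready before installing ECK.

# Print the command by default; execute it only when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Block until every node reports the Ready condition (give up after 5 minutes).
run kubectl wait --for=condition=Ready nodes --all --timeout=300s

# Show the nodes with their instance type label to compare against cluster.yml.
run kubectl get nodes -L node.kubernetes.io/instance-type
```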

root@elkhost:~# kubectl create -f https://download.elastic.co/downloads/eck/2.5.0/crds.yaml

customresourcedefinition.apiextensions.k8s.io/agents.agent.k8s.elastic.co created

customresourcedefinition.apiextensions.k8s.io/apmservers.apm.k8s.elastic.co created

customresourcedefinition.apiextensions.k8s.io/beats.beat.k8s.elastic.co created

customresourcedefinition.apiextensions.k8s.io/elasticmapsservers.maps.k8s.elastic.co created

customresourcedefinition.apiextensions.k8s.io/elasticsearchautoscalers.autoscaling.k8s.elastic.co created

customresourcedefinition.apiextensions.k8s.io/elasticsearches.elasticsearch.k8s.elastic.co created

customresourcedefinition.apiextensions.k8s.io/enterprisesearches.enterprisesearch.k8s.elastic.co created

customresourcedefinition.apiextensions.k8s.io/kibanas.kibana.k8s.elastic.co created

root@elkhost:~# kubectl apply -f https://download.elastic.co/downloads/eck/2.5.0/operator.yaml

namespace/elastic-system created

serviceaccount/elastic-operator created

secret/elastic-webhook-server-cert created

configmap/elastic-operator created

clusterrole.rbac.authorization.k8s.io/elastic-operator created

clusterrole.rbac.authorization.k8s.io/elastic-operator-view created

clusterrole.rbac.authorization.k8s.io/elastic-operator-edit created

clusterrolebinding.rbac.authorization.k8s.io/elastic-operator created

service/elastic-webhook-server created

statefulset.apps/elastic-operator created

validatingwebhookconfiguration.admissionregistration.k8s.io/elastic-webhook.k8s.elastic.co created

5. Create the Amazon EBS CSI driver IAM role for the service account (link)

root@elkhost:~# eksctl utils associate-iam-oidc-provider --region=ap-southeast-1 --cluster=elk-test --approve

2022-12-17 13:10:05 [ℹ] will create IAM Open ID Connect provider for cluster "elk-test" in "ap-southeast-1"

2022-12-17 13:10:06 [✔] created IAM Open ID Connect provider for cluster "elk-test" in "ap-southeast-1"

root@elkhost:~# eksctl create iamserviceaccount --name ebs-csi-controller-sa --namespace kube-system --cluster elk-test --attach-policy-arn arn:aws:iam::aws:policy/service-role/AmazonEBSCSIDriverPolicy --approve --role-only --role-name AmazonEKS_EBS_CSI_DriverRole

2022-12-17 13:10:15 [ℹ] 1 iamserviceaccount (kube-system/ebs-csi-controller-sa) was included (based on the include/exclude rules)

2022-12-17 13:10:15 [!] serviceaccounts that exist in Kubernetes will be excluded, use --override-existing-serviceaccounts to override

2022-12-17 13:10:15 [ℹ] 1 task: { create IAM role for serviceaccount "kube-system/ebs-csi-controller-sa" }

2022-12-17 13:10:15 [ℹ] building iamserviceaccount stack "eksctl-elk-test-addon-iamserviceaccount-kube-system-ebs-csi-controller-sa"

2022-12-17 13:10:15 [ℹ] deploying stack "eksctl-elk-test-addon-iamserviceaccount-kube-system-ebs-csi-controller-sa"

2022-12-17 13:10:16 [ℹ] waiting for CloudFormation stack "eksctl-elk-test-addon-iamserviceaccount-kube-system-ebs-csi-controller-sa"

6. Manage the Amazon EBS CSI driver as an Amazon EKS add-on (link)

root@elkhost:~# eksctl create addon --name aws-ebs-csi-driver --cluster elk-test --service-account-role-arn arn:aws:iam::~~~~:role/AmazonEKS_EBS_CSI_DriverRole --force

2022-12-17 13:22:47 [ℹ] Kubernetes version "1.23" in use by cluster "elk-test"

2022-12-17 13:22:47 [ℹ] using provided ServiceAccountRoleARN "arn:aws:iam::~~~~:role/AmazonEKS_EBS_CSI_DriverRole"

2022-12-17 13:22:47 [ℹ] creating addon

Deploy a sample application and verify that the CSI driver works:

https://docs.aws.amazon.com/ko_kr/eks/latest/userguide/ebs-sample-app.html
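Independently of the sample application, a quick way to see whether the add-on came up is to look at its status and at the driver workloads in `kube-system`. This is a minimal sketch that assumes the cluster name `elk-test` from the steps above; it prints the commands by default and runs them only with `DRY_RUN=0`.

```shell
# Sketch: check that the EBS CSI driver add-on is installed and running.
CLUSTER="elk-test"

# Print the command by default; execute it only when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Add-on status as reported by EKS.
run eksctl get addon --name aws-ebs-csi-driver --cluster "$CLUSTER"

# The driver runs as a controller Deployment plus a per-node DaemonSet.
run kubectl get deployment ebs-csi-controller -n kube-system
run kubectl get daemonset ebs-csi-node -n kube-system
```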

7. Create the StorageClass and PersistentVolume resources

root@elkhost:~# cat stc.yml

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: es-stc
  annotations:
    storageclass.kubernetes.io/is-default-class: "false"
provisioner: kubernetes.io/aws-ebs
parameters:
  type: gp2
  fsType: ext4

root@elkhost:~# cat pv.yml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-pv
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  awsElasticBlockStore:
    fsType: ext4
    volumeID: aws://ap-southeast-1/vol-~~~~
  capacity:
    storage: 10Gi
  persistentVolumeReclaimPolicy: Delete
  storageClassName: es-stc

root@elkhost:~# kubectl apply -f stc.yml

storageclass.storage.k8s.io/es-stc created

root@elkhost:~# kubectl apply -f pv.yml

persistentvolume/es-pv created

8. Deploy Elasticsearch (link)

root@elkhost:~# cat es.yml

apiVersion: elasticsearch.k8s.elastic.co/v1
kind: Elasticsearch
metadata:
  name: quickstart
spec:
  version: 8.5.3
  nodeSets:
  - name: default
    count: 1
    podTemplate:
      spec:
        containers:
        - name: elasticsearch
          resources:
            requests:
              memory: 2Gi
              cpu: 1
            limits:
              memory: 2Gi
    volumeClaimTemplates:
    - metadata:
        name: elasticsearch-data
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 10Gi
        storageClassName: es-stc

root@elkhost:~# kubectl apply -f es.yml

elasticsearch.elasticsearch.k8s.elastic.co/quickstart created

root@elkhost:~# kubectl get es --watch

NAME         HEALTH    NODES   VERSION   PHASE             AGE
quickstart   unknown           8.5.3     ApplyingChanges   23s
quickstart   unknown   1       8.5.3     ApplyingChanges   39s
quickstart   unknown   1       8.5.3     ApplyingChanges   42s
quickstart   unknown   1       8.5.3     ApplyingChanges   43s
quickstart   unknown   1       8.5.3     ApplyingChanges   44s
quickstart   green     1       8.5.3     Ready             44s

9. Deploy Kibana (link)
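Before deploying Kibana, it can be useful to confirm that Elasticsearch answers authenticated requests and that its data volume claim is bound. The sketch below follows the ECK naming conventions (`<name>-es-elastic-user` for the password secret, `<name>-es-http` for the HTTP service); the helper function names, the `RUN_CHECK` switch, and the 3-second wait are my own assumptions, not part of the original steps.

```shell
# Sketch: fetch the elastic user's password and query cluster health.
ES_NAME="quickstart"

# ECK stores the built-in elastic user's password in <name>-es-elastic-user.
elastic_secret() { echo "$1-es-elastic-user"; }

# ECK exposes the Elasticsearch HTTP API through the <name>-es-http service.
es_service() { echo "$1-es-http"; }

check_es() {
  # The PVC created from volumeClaimTemplates should show STATUS "Bound".
  kubectl get pvc "elasticsearch-data-${ES_NAME}-es-default-0"

  # Decode the password straight from the secret.
  password=$(kubectl get secret "$(elastic_secret "$ES_NAME")" \
    -o go-template='{{.data.elastic | base64decode}}')

  # Forward the service locally in the background, then query it.
  kubectl port-forward "service/$(es_service "$ES_NAME")" 9200 >/dev/null 2>&1 &
  pf_pid=$!
  sleep 3

  # -k skips verification of ECK's self-signed certificate.
  curl -sk -u "elastic:${password}" "https://localhost:9200/_cluster/health?pretty"

  kill "$pf_pid"
}

# Touch the live cluster only when explicitly requested.
if [ "${RUN_CHECK:-0}" = "1" ]; then
  check_es
fi
```

The same password is what the Kibana login page expects for the `elastic` user.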

root@elkhost:~# cat kb.yml

apiVersion: kibana.k8s.elastic.co/v1
kind: Kibana
metadata:
  name: quickstart
spec:
  version: 8.5.3
  count: 1
  elasticsearchRef:
    name: quickstart
  config:
    csp.strict: false
  http:
    tls:
      selfSignedCertificate:
        disabled: true
  podTemplate:
    spec:
      containers:
      - name: kibana
        env:
        - name: NODE_OPTIONS
          value: "--max-old-space-size=1024"
        resources:
          requests:
            memory: 0.5Gi
            cpu: 0.5
          limits:
            memory: 1Gi
            cpu: 1

root@elkhost:~# kubectl apply -f kb.yml

kibana.kibana.k8s.elastic.co/quickstart created

root@elkhost:~# kubectl get kb --watch

NAME         HEALTH   NODES   VERSION   AGE
quickstart   red              8.5.3     9s
quickstart   green    1       8.5.3     21s

10. Deploy an Ingress for external access and test the connection

root@elkhost:~# cat ingress.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: "kibana-ingress"
  annotations:
    cert-manager.io/cluster-issuer: "letsencrypt-production"
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/ssl-passthrough: "true"
spec:
  ingressClassName: nginx
  tls:
  - hosts:
    - <dongdorrong domain address>
    secretName: kibana-tls
  rules:
  - host: <dongdorrong domain address>
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: quickstart-kb-http
            port:
              number: 5601

root@elkhost:~# kubectl apply -f ingress.yml

ingress.networking.k8s.io/kibana-ingress created

root@elkhost:~# kubectl get ingress kibana-ingress

NAME             CLASS   HOSTS                          ADDRESS   PORTS     AGE
kibana-ingress   nginx   <dongdorrong domain address>             80, 443   18s

root@elkhost:~# kubectl get all

NAME                                 READY   STATUS    RESTARTS   AGE
pod/quickstart-es-default-0          1/1     Running   0          7m54s
pod/quickstart-kb-595499cd98-8jzm7   1/1     Running   0          5m49s

NAME                                  TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
service/kubernetes                    ClusterIP   10.100.0.1       <none>        443/TCP    88m
service/quickstart-es-default         ClusterIP   None             <none>        9200/TCP   7m55s
service/quickstart-es-http            ClusterIP   10.100.241.6     <none>        9200/TCP   7m57s
service/quickstart-es-internal-http   ClusterIP   10.100.109.170   <none>        9200/TCP   7m57s
service/quickstart-es-transport       ClusterIP   None             <none>        9300/TCP   7m57s
service/quickstart-kb-http            ClusterIP   10.100.10.93     <none>        5601/TCP   5m50

To connect the domain through the Ingress, I changed the Kibana service type from ClusterIP to NodePort and set its external IP to the public IP of the node where the pod is running.

root@elkhost:~# kubectl edit svc quickstart-kb-http

service/quickstart-kb-http edited
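The interactive edit above can also be expressed as a one-line patch, which is easier to repeat or script. This is only a sketch of the service type change; it does not set the external IP, and it prints the commands by default (the printed form loses the JSON quoting), running them only with `DRY_RUN=0`.

```shell
# Sketch: switch the Kibana service to NodePort without an interactive edit.
SVC="quickstart-kb-http"

# Print the command by default; execute it only when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Merge-patch only the service type; all other fields stay untouched.
run kubectl patch service "$SVC" --type merge -p '{"spec":{"type":"NodePort"}}'

# Print the node port that Kubernetes allocated for the first service port.
run kubectl get service "$SVC" -o jsonpath='{.spec.ports[0].nodePort}'
```

ECK also allows the service type to be declared on the Kibana resource itself under `spec.http.service.spec.type`, which keeps the setting in kb.yml instead of in a manual change to an operator-managed service.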

root@elkhost:~#

root@elkhost:~# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/quickstart-es-default-0 1/1 Running 0 13m

pod/quickstart-kb-595499cd98-8jzm7 1/1 Running 0 11m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 94m

service/quickstart-es-default ClusterIP None <none> 9200/TCP 13m

service/quickstart-es-http ClusterIP 10.100.241.6 <none> 9200/TCP 13m

service/quickstart-es-internal-http ClusterIP 10.100.109.170 <none> 9200/TCP 13m

service/quickstart-es-transport ClusterIP None <none> 9300/TCP 13m

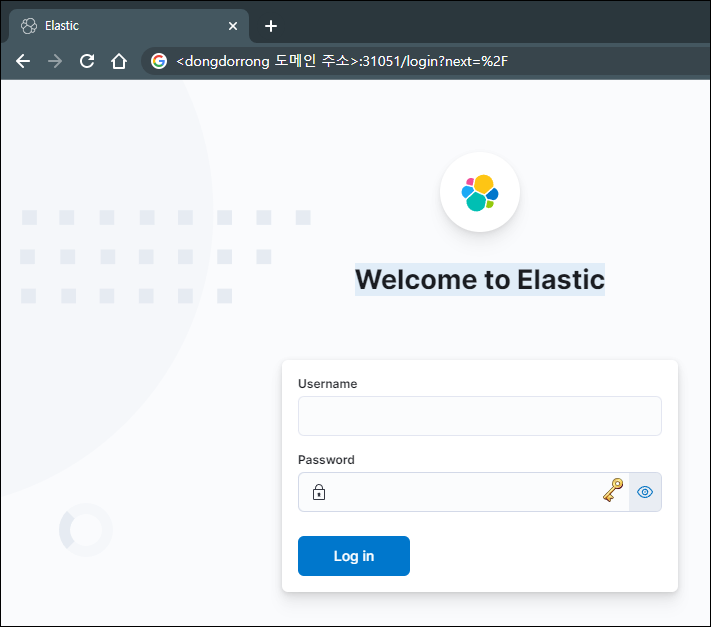

service/quickstart-kb-http NodePort 10.100.10.93 <none> 5601:31051/TCP 11m서비스 타입이 변경되어 NodePort가 반영된 것을 확인하였다. Route53으로 Ingress에 적용한 도메인으로 레코드를 추가 등록하고 접속해보니, 아래와 같이 정상 접속되는 것을 확인했다.

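I had wanted to try access through the AWS Load Balancer Controller, but as someone working with EKS for the first time, it was not easy. I should gather the tedious initial setup steps and turn them into Terraform or CloudFormation.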