🥕 Google Colab을 활용해서 가상 인플루언서 얼굴을 만들어 보겠습니다!

2019년 11월에 NeuroIPS에서 발표된 First Order Motion Model for Image Animation 페이퍼에서 발표된 기술&코드를 포함

프로그램 자세한 코드 및 내용 -> https://url.kr/275tyo

📌 코드

🌳 버전세팅 및 깃허브 코드 불러오기

#버전 확인

# %를 사용하는 매직커멘드를 통해 버전 변경

# %tensorflow_version 1.x

#keras load_model에서 오류 발생할 때

!pip install h5py==2.10.0 #:: 다운그레이드 실시로 문제 해결

import h5py

print(h5py.__version__)🌳 인공지능 신경망 파일 및 함수 설정

🌱 import 설정 및

#임시로 각주

%tensorflow_version 1.x

import os

import cv2

import math

import pickle

import imageio

import warnings

import PIL.Image

import numpy as np

from PIL import Image

import tensorflow as tf

from random import randrange

import moviepy.editor as mpy

from google.colab import drive

from google.colab import files

import matplotlib.pyplot as plt

from IPython.display import clear_output

from moviepy.video.io.ffmpeg_writer import FFMPEG_VideoWriter

%matplotlib inline

warnings.filterwarnings("ignore")

def get_watermarked(pil_image: Image) -> Image:

try:

image = cv2.cvtColor(np.array(pil_image), cv2.COLOR_RGB2BGR)

(h, w) = image.shape[:2]

image = np.dstack([image, np.ones((h, w), dtype="uint8") * 255])

pct = 0.08

full_watermark = cv2.imread('/content/BabyGAN/media/logo.png', cv2.IMREAD_UNCHANGED)

(fwH, fwW) = full_watermark.shape[:2]

wH = int(pct * h*2)

wW = int((wH * fwW) / fwH*0.1)

watermark = cv2.resize(full_watermark, (wH, wW), interpolation=cv2.INTER_AREA)

overlay = np.zeros((h, w, 4), dtype="uint8")

(wH, wW) = watermark.shape[:2]

overlay[h - wH - 10 : h - 10, 10 : 10 + wW] = watermark

output = image.copy()

cv2.addWeighted(overlay, 0.5, output, 1.0, 0, output)

rgb_image = cv2.cvtColor(output, cv2.COLOR_BGR2RGB)

return Image.fromarray(rgb_image)

except: return pil_image

def generate_final_images(latent_vector, direction, coeffs, i):

new_latent_vector = latent_vector.copy()

new_latent_vector[:8] = (latent_vector + coeffs*direction)[:8]

new_latent_vector = new_latent_vector.reshape((1, 18, 512))

generator.set_dlatents(new_latent_vector)

img_array = generator.generate_images()[0]

img = PIL.Image.fromarray(img_array, 'RGB')

if size[0] >= 512: img = get_watermarked(img)

img_path = "/content/BabyGAN/for_animation/" + str(i) + ".png"

img.thumbnail(animation_size, PIL.Image.ANTIALIAS)

img.save(img_path)

face_img.append(imageio.imread(img_path))

clear_output()

return img

def generate_final_image(latent_vector, direction, coeffs):

new_latent_vector = latent_vector.copy()

new_latent_vector[:8] = (latent_vector + coeffs*direction)[:8]

new_latent_vector = new_latent_vector.reshape((1, 18, 512))

generator.set_dlatents(new_latent_vector)

img_array = generator.generate_images()[0]

img = PIL.Image.fromarray(img_array, 'RGB')

if size[0] >= 512: img = get_watermarked(img)

img.thumbnail(size, PIL.Image.ANTIALIAS)

img.save("face.png")

if download_image == True: files.download("face.png")

return img

def plot_three_images(imgB, fs = 10):

f, axarr = plt.subplots(1,3, figsize=(fs,fs))

axarr[0].imshow(Image.open('/content/BabyGAN/aligned_images/father_01.png'))

axarr[0].title.set_text("Father's photo")

axarr[1].imshow(imgB)

axarr[1].title.set_text("Child's photo")

axarr[2].imshow(Image.open('/content/BabyGAN/aligned_images/mother_01.png'))

axarr[2].title.set_text("Mother's photo")

plt.setp(plt.gcf().get_axes(), xticks=[], yticks=[])

plt.show()

!rm -rf sample_data

!git clone https://github.com/tg-bomze/BabyGAN.git

%cd /content/BabyGAN

!mkdir aligned_images data father_image mother_image

import config

import dnnlib

import dnnlib.tflib as tflib

from encoder.generator_model import Generator

age_direction = np.load('/content/BabyGAN/ffhq_dataset/latent_directions/age.npy')

horizontal_direction = np.load('/content/BabyGAN/ffhq_dataset/latent_directions/angle_horizontal.npy')

vertical_direction = np.load('/content/BabyGAN/ffhq_dataset/latent_directions/angle_vertical.npy')

eyes_open_direction = np.load('/content/BabyGAN/ffhq_dataset/latent_directions/eyes_open.npy')

gender_direction = np.load('/content/BabyGAN/ffhq_dataset/latent_directions/gender.npy')

smile_direction = np.load('/content/BabyGAN/ffhq_dataset/latent_directions/smile.npy')

clear_output()🌳 구글 드라이브 설정 및 GAN 깃허브 코드 가져오기

drive.mount('/content/drive')

clear_output()

if os.path.isdir('/content/drive/My Drive/BabyGAN'):

print("0%/100% Copying has started")

!cp '/content/drive/My Drive/BabyGAN/finetuned_resnet.h5' '/content/BabyGAN/data'

!cp '/content/drive/My Drive/BabyGAN/vgg16_weights_tf_dim_ordering_tf_kernels_notop.h5' '/content/BabyGAN'

print("50%/100% Checkpoints copied")

!cp '/content/drive/My Drive/BabyGAN/karras2019stylegan-ffhq-1024x1024.pkl' '/content/BabyGAN'

!cp '/content/drive/My Drive/BabyGAN/vgg16_zhang_perceptual.pkl' '/content/BabyGAN'

print("90%/100% Weights copied")

!cp '/content/drive/My Drive/BabyGAN/shape_predictor_68_face_landmarks.dat.bz2' '/content/BabyGAN'

print("100%/100% Dictionary copied")

clear_output()

print("Done!")

else: raise ValueError('Please read the instructions in the block description and follow all 3 points correctly!')🌳 가상인플루언서는 BAbyGAN 소스를 활용해 만든다

사진 두개 설정 -> 두 사진의 얼굴을 추출한 후 특징을 합쳐서 가상얼굴 만든다

🌱 사진 선택1

!rm -rf /content/BabyGAN/father_image/*.*

#@markdown *이미지 **url** 이나 로컬환경에 있는 사진을 업로드 하세요.*

url = '' #@param {type:"string"}

if url == '':

uploaded = list(files.upload().keys())

if len(uploaded) > 1: raise ValueError('You cannot upload more than one image at a time!')

fat = uploaded[0]

else:

try:

!wget $url

fat = url.split('/')[-1]

except BaseException:

print("Something wrong. Try uploading a photo from your computer")

FATHER_FILENAME = "father." + fat.split(".")[-1]

os.rename(fat, FATHER_FILENAME)

father_path = "/content/BabyGAN/father_image/" + FATHER_FILENAME

!mv -f $FATHER_FILENAME $father_path

!python align_images.py /content/BabyGAN/father_image /content/BabyGAN/aligned_images

clear_output()

if os.path.isfile('/content/BabyGAN/aligned_images/father_01.png'):

pil_father = Image.open('/content/BabyGAN/aligned_images/father_01.png')

(fat_width, fat_height) = pil_father.size

resize_fat = max(fat_width, fat_height)/256

display(pil_father.resize((int(fat_width/resize_fat), int(fat_height/resize_fat))))

else: raise ValueError('No face was found or there is more than one in the photo.')

🌱 사진 선택2

!rm -rf /content/BabyGAN/mother_image/*.*

#@markdown *이미지 **url** 이나 로컬환경에 있는 사진을 업로드 하세요.*

url = '' #@param {type:"string"}

if url == '':

uploaded = list(files.upload().keys())

if len(uploaded) > 1: raise ValueError('You cannot upload more than one image at a time!')

mot = uploaded[0]

else:

try:

!wget $url

mot = url.split('/')[-1]

except BaseException:

print("Something wrong. Try uploading a photo from your computer")

MOTHER_FILENAME = "mother." + mot.split(".")[-1]

os.rename(mot, MOTHER_FILENAME)

mother_path = "/content/BabyGAN/mother_image/" + MOTHER_FILENAME

!mv -f $MOTHER_FILENAME $mother_path

!python align_images.py /content/BabyGAN/mother_image /content/BabyGAN/aligned_images

clear_output()

if os.path.isfile('/content/BabyGAN/aligned_images/mother_01.png'):

pil_mother = Image.open('/content/BabyGAN/aligned_images/mother_01.png')

(mot_width, mot_height) = pil_mother.size

resize_mot = max(mot_width, mot_height)/256

display(pil_mother.resize((int(mot_width/resize_mot), int(mot_height/resize_mot))))

else: raise ValueError('No face was found or there is more than one in the photo.')

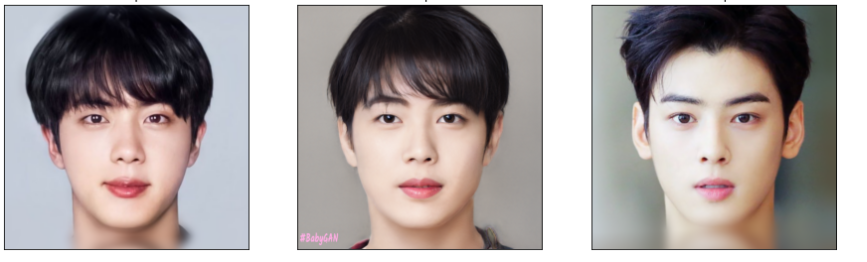

### 🌳 사진 합성하여 가상의 얼굴 만들기

genes_influence = 0.31 #@param {type:"slider", min:0.01, max:0.99, step:0.01}

style = "Default" #@param ["Default", "Father's photo", "Mother's photo"]

if style == "Father's photo":

lr = ((np.arange(1,model_scale+1)/model_scale)**genes_influence).reshape((model_scale,1))

rl = 1-lr

hybrid_face = (lr*first_face) + (rl*second_face)

elif style == "Mother's photo":

lr = ((np.arange(1,model_scale+1)/model_scale)**(1-genes_influence)).reshape((model_scale,1))

rl = 1-lr

hybrid_face = (rl*first_face) + (lr*second_face)

else: hybrid_face = ((1-genes_influence)*first_face)+(genes_influence*second_face)

#@markdown **Virtual human's approximate age:**

person_age = 30 #@param {type:"slider", min:10, max:50, step:1}

intensity = -((person_age/5)-6)

#@markdown ---

#@markdown **Download the final image?**

download_image = False #@param {type:"boolean"}

#@markdown **Resolution of the downloaded image:**

resolution = "1024" #@param [256, 512, 1024]

size = int(resolution), int(resolution)

face = generate_final_image(hybrid_face, age_direction, intensity)

plot_three_images(face, fs = 15)🥕 왼쪽 사진 & 오른쪽 사진 GAN알고리즘으로 합쳐져서 가상의 얼굴 생성(가운데 사진)

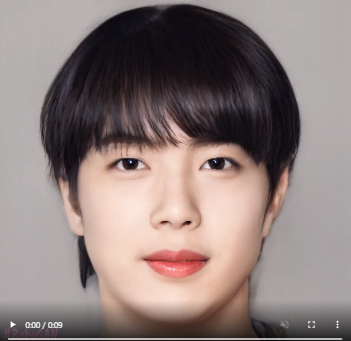

🌳 가상인플루언서 얼굴 제작 결과

아래 사진 클릭하면 영상 나옵니다!

📌 License

- 2019년 11월에 NeuroIPS에서 발표된 First Order Motion Model for Image Animation 페이퍼에서 발표된 기술&코드를 포함

안녕하세요. 이미지 불러오는 코드에서 이미지의 얼굴 인식을 실패하는 오류가 나는데 혹시 해결방법을 얻을 수 있을까요?