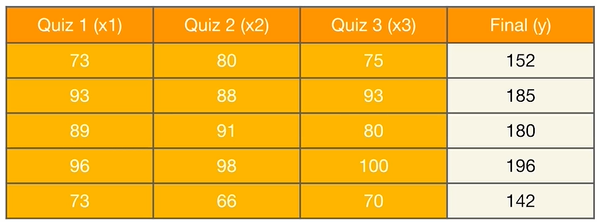

What is Multivariate Linear Regression?

Computing a single predicted value from multiple input features.

For example, predicting the final exam score from three quiz scores.

dataset

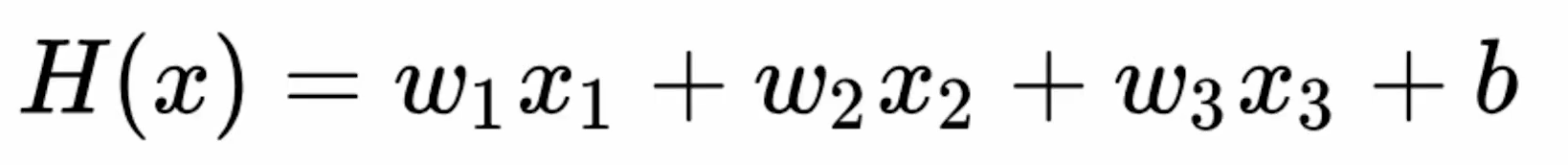

Hypothesis Function

There are three input variables, so there are three weights as well!
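Written out as a formula, the hypothesis for three inputs could look like the following. This is a sketch that reuses the names from the code in this section (`w1`, `w2`, `w3`, `b`); the subscripted symbols are just notation chosen here.

```latex
H(x) = w_1 x_1 + w_2 x_2 + w_3 x_3 + b
```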

Hypothesis Function: Naive

A simple definition of the hypothesis:

    hypothesis = x1_train * w1 + x2_train * w2 + x3_train * w3 + b

But what if x is a vector of length 1000? Writing out one term per input does not scale.

    import torch
    import torch.optim as optim

    # Data
    x1_train = torch.FloatTensor([[73], [93], [89], [96], [73]])
    x2_train = torch.FloatTensor([[80], [88], [91], [98], [66]])
    x3_train = torch.FloatTensor([[75], [93], [90], [100], [70]])
    y_train = torch.FloatTensor([[152], [185], [180], [196], [142]])

    # Initialize the model
    w1 = torch.zeros(1, requires_grad=True)
    w2 = torch.zeros(1, requires_grad=True)
    w3 = torch.zeros(1, requires_grad=True)
    b = torch.zeros(1, requires_grad=True)

    # Set up the optimizer
    optimizer = optim.SGD([w1, w2, w3, b], lr=1e-5)

    nb_epochs = 1000
    for epoch in range(nb_epochs + 1):

        # Compute H(x)
        hypothesis = x1_train * w1 + x2_train * w2 + x3_train * w3 + b

        # Compute the cost
        cost = torch.mean((hypothesis - y_train) ** 2)

        # Improve H(x) using the cost
        optimizer.zero_grad()
        cost.backward()
        optimizer.step()

        # Log every 100 epochs
        if epoch % 100 == 0:
            print('Epoch {:4d}/{} w1: {:.3f} w2: {:.3f} w3: {:.3f} b: {:.3f} Cost: {:.6f}'.format(
                epoch, nb_epochs, w1.item(), w2.item(), w3.item(), b.item(), cost.item()
            ))

Output of the recorded run (its w2 column shows the same values as w3):

    Epoch    0/1000 w1: 0.294 w2: 0.297 w3: 0.297 b: 0.003 Cost: 29661.800781
    Epoch  100/1000 w1: 0.674 w2: 0.676 w3: 0.676 b: 0.008 Cost: 1.563634
    Epoch  200/1000 w1: 0.679 w2: 0.677 w3: 0.677 b: 0.008 Cost: 1.497603
    Epoch  300/1000 w1: 0.684 w2: 0.677 w3: 0.677 b: 0.008 Cost: 1.435026
    Epoch  400/1000 w1: 0.689 w2: 0.678 w3: 0.678 b: 0.008 Cost: 1.375730
    Epoch  500/1000 w1: 0.694 w2: 0.678 w3: 0.678 b: 0.009 Cost: 1.319503
    Epoch  600/1000 w1: 0.699 w2: 0.679 w3: 0.679 b: 0.009 Cost: 1.266215
    Epoch  700/1000 w1: 0.704 w2: 0.679 w3: 0.679 b: 0.009 Cost: 1.215693
    Epoch  800/1000 w1: 0.709 w2: 0.679 w3: 0.679 b: 0.009 Cost: 1.167821
    Epoch  900/1000 w1: 0.713 w2: 0.680 w3: 0.680 b: 0.009 Cost: 1.122419
    Epoch 1000/1000 w1: 0.718 w2: 0.680 w3: 0.680 b: 0.009 Cost: 1.079375

Hypothesis Function: Matrix

Compute everything at once with matmul():

a. it is more concise,

b. the code does not need to change when the length of x changes, and

c. it is also faster.

    hypothesis = x_train.matmul(W) + b # or .mm or @
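To make the equivalence concrete, here is a minimal sketch that checks the matrix form against the term-by-term form on the quiz data. The parameter values are hypothetical, picked only for illustration, and are not trained weights.

```python
import torch

# Quiz scores, one row per student and one column per quiz.
x_train = torch.FloatTensor([[73, 80, 75],
                             [93, 88, 93],
                             [89, 91, 90],
                             [96, 98, 100],
                             [73, 66, 70]])

# Hypothetical parameter values for illustration only (not trained).
W = torch.tensor([[0.5], [1.0], [0.25]])
b = torch.tensor([2.0])

# Naive form: one term per input column, each slice keeps shape (5, 1).
x1, x2, x3 = x_train[:, 0:1], x_train[:, 1:2], x_train[:, 2:3]
naive = x1 * W[0] + x2 * W[1] + x3 * W[2] + b

# Matrix form: (5, 3) @ (3, 1) -> (5, 1); b is broadcast over the rows.
matrix = x_train.matmul(W) + b

print(matrix.shape)                   # torch.Size([5, 1])
print(torch.allclose(naive, matrix))  # True
```

If a fourth quiz were added, only the shapes of `x_train` and `W` would change; the `matmul` line would stay the same.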

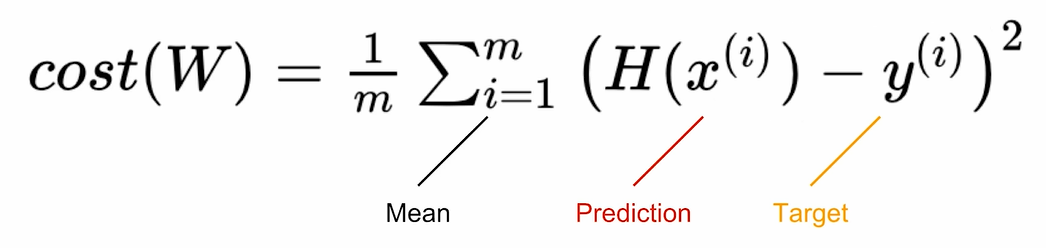

Cost function: MSE

The same formula as in Simple Linear Regression!

cost = torch.mean((hypothesis - y_train) ** 2)
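As a formula, the line above corresponds to the mean squared error. The notation here is an assumption made for this sketch: m is the number of training examples, and x^{(i)}, y^{(i)} are the i-th input row and target.

```latex
cost(W, b) = \frac{1}{m} \sum_{i=1}^{m} \left( H\left(x^{(i)}\right) - y^{(i)} \right)^2
```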

Gradient Descent with torch.optim

    import torch
    import torch.optim as optim

    x_train = torch.FloatTensor([[73, 80, 75],
                                 [93, 88, 93],
                                 [89, 91, 90],
                                 [96, 98, 100],
                                 [73, 66, 70]])
    y_train = torch.FloatTensor([[152], [185], [180], [196], [142]])

    # Initialize the model
    W = torch.zeros((3, 1), requires_grad=True)
    b = torch.zeros(1, requires_grad=True)

    # Set up the optimizer
    optimizer = optim.SGD([W, b], lr=1e-5)

    nb_epochs = 20
    for epoch in range(nb_epochs + 1):

        # Compute H(x)
        hypothesis = x_train.matmul(W) + b # or .mm or @

        # Compute the cost
        cost = torch.mean((hypothesis - y_train) ** 2)

        # Improve H(x) using the cost
        optimizer.zero_grad()
        cost.backward()
        optimizer.step()

        # Log every epoch
        print('Epoch {:4d}/{} hypothesis: {} Cost: {:.6f}'.format(
            epoch, nb_epochs, hypothesis.squeeze().detach(), cost.item()
        ))

Output:

    Epoch    0/20 hypothesis: tensor([0., 0., 0., 0., 0.]) Cost: 29661.800781
    Epoch    1/20 hypothesis: tensor([67.2578, 80.8397, 79.6523, 86.7394, 61.6605]) Cost: 9298.520508
    Epoch    2/20 hypothesis: tensor([104.9128, 126.0990, 124.2466, 135.3015, 96.1821]) Cost: 2915.713135
    Epoch    3/20 hypothesis: tensor([125.9942, 151.4381, 149.2133, 162.4896, 115.5097]) Cost: 915.040527
    Epoch    4/20 hypothesis: tensor([137.7968, 165.6247, 163.1911, 177.7112, 126.3307]) Cost: 287.936005
    Epoch    5/20 hypothesis: tensor([144.4044, 173.5674, 171.0168, 186.2332, 132.3891]) Cost: 91.371017
    Epoch    6/20 hypothesis: tensor([148.1035, 178.0144, 175.3980, 191.0042, 135.7812]) Cost: 29.758139
    Epoch    7/20 hypothesis: tensor([150.1744, 180.5042, 177.8508, 193.6753, 137.6805]) Cost: 10.445305
    Epoch    8/20 hypothesis: tensor([151.3336, 181.8983, 179.2240, 195.1707, 138.7440]) Cost: 4.391228
    Epoch    9/20 hypothesis: tensor([151.9824, 182.6789, 179.9928, 196.0079, 139.3396]) Cost: 2.493135
    Epoch   10/20 hypothesis: tensor([152.3454, 183.1161, 180.4231, 196.4765, 139.6732]) Cost: 1.897688
    Epoch   11/20 hypothesis: tensor([152.5485, 183.3610, 180.6640, 196.7389, 139.8602]) Cost: 1.710541
    Epoch   12/20 hypothesis: tensor([152.6620, 183.4982, 180.7988, 196.8857, 139.9651]) Cost: 1.651413
    Epoch   13/20 hypothesis: tensor([152.7253, 183.5752, 180.8742, 196.9678, 140.0240]) Cost: 1.632387
    Epoch   14/20 hypothesis: tensor([152.7606, 183.6184, 180.9164, 197.0138, 140.0571]) Cost: 1.625923
    Epoch   15/20 hypothesis: tensor([152.7802, 183.6427, 180.9399, 197.0395, 140.0759]) Cost: 1.623412
    Epoch   16/20 hypothesis: tensor([152.7909, 183.6565, 180.9530, 197.0538, 140.0865]) Cost: 1.622141
    Epoch   17/20 hypothesis: tensor([152.7968, 183.6643, 180.9603, 197.0618, 140.0927]) Cost: 1.621253
    Epoch   18/20 hypothesis: tensor([152.7999, 183.6688, 180.9644, 197.0662, 140.0963]) Cost: 1.620500
    Epoch   19/20 hypothesis: tensor([152.8014, 183.6715, 180.9666, 197.0686, 140.0985]) Cost: 1.619770
    Epoch   20/20 hypothesis: tensor([152.8020, 183.6731, 180.9677, 197.0699, 140.1000]) Cost: 1.619033

- The cost keeps getting smaller
- H(x) keeps getting closer to y
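To see what `optimizer.step()` does in the loop above, the sketch below takes one update with `optim.SGD` and the same update written by hand. It assumes plain SGD with no momentum or weight decay, where each parameter becomes `p - lr * p.grad`. The `_manual` names are introduced here for illustration.

```python
import torch
import torch.optim as optim

x_train = torch.FloatTensor([[73, 80, 75],
                             [93, 88, 93],
                             [89, 91, 90],
                             [96, 98, 100],
                             [73, 66, 70]])
y_train = torch.FloatTensor([[152], [185], [180], [196], [142]])
lr = 1e-5

# Path 1: let optim.SGD apply the update.
W = torch.zeros((3, 1), requires_grad=True)
b = torch.zeros(1, requires_grad=True)
optimizer = optim.SGD([W, b], lr=lr)
cost = torch.mean((x_train.matmul(W) + b - y_train) ** 2)
optimizer.zero_grad()   # clear any gradients left from a previous step
cost.backward()         # fill W.grad and b.grad
optimizer.step()        # apply p <- p - lr * p.grad

# Path 2: the same single update written out by hand.
W_manual = torch.zeros((3, 1), requires_grad=True)
b_manual = torch.zeros(1, requires_grad=True)
cost_manual = torch.mean((x_train.matmul(W_manual) + b_manual - y_train) ** 2)
cost_manual.backward()
with torch.no_grad():   # the update itself must not be tracked by autograd
    W_manual -= lr * W_manual.grad
    b_manual -= lr * b_manual.grad

print(torch.allclose(W, W_manual), torch.allclose(b, b_manual))  # True True
```

In a multi-epoch loop the hand-written version would also have to reset the gradients each iteration, which is the job `optimizer.zero_grad()` does in the original code.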

nn.Module

    import torch.nn as nn

    class MultivariateLinearRegressionModel(nn.Module):
        def __init__(self):
            super().__init__()
            self.linear = nn.Linear(3, 1)

        def forward(self, x):
            return self.linear(x)

- nn.Linear(3, 1)
  - Input dimension: 3
  - Output dimension: 1
- The hypothesis is computed in forward()!
- PyTorch computes the gradients automatically in backward()
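As a quick check of what `nn.Linear(3, 1)` holds, the sketch below inspects its parameters and reproduces its output with an explicit matrix product. The two-row input is just a sample taken from the quiz data, and the layer's initial weights are random, so no specific output values are shown.

```python
import torch
import torch.nn as nn

linear = nn.Linear(3, 1)  # 3 input features -> 1 output

# nn.Linear stores its weight as (out_features, in_features).
print(linear.weight.shape)  # torch.Size([1, 3])
print(linear.bias.shape)    # torch.Size([1])

x = torch.FloatTensor([[73, 80, 75],
                       [93, 88, 93]])

# The layer computes x @ weight.T + bias, i.e. the matrix-form hypothesis.
by_layer = linear(x)
by_hand = x.matmul(linear.weight.t()) + linear.bias
print(torch.allclose(by_layer, by_hand, atol=1e-4))  # True
```

Note the weight is stored transposed relative to the hand-written `W` of shape (3, 1) used earlier.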

F.mse_loss

    import torch.nn.functional as F

    # Compute the cost
    cost = F.mse_loss(prediction, y_train)

- Uses a loss function provided by torch.nn.functional
- Easy to swap for another loss! (l1_loss, smooth_l1_loss, etc.)
- Helpful when debugging
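The sketch below illustrates both points on a tiny example: `F.mse_loss` matches the hand-written mean squared error, and switching to another loss is a one-line change. The prediction values are hypothetical, chosen so the arithmetic is easy to follow.

```python
import torch
import torch.nn.functional as F

# Hypothetical predictions and targets; the errors are -2, 0 and +1.
prediction = torch.FloatTensor([[150.], [185.], [181.]])
y = torch.FloatTensor([[152.], [185.], [180.]])

# Hand-written MSE versus the library function (default reduction is 'mean').
manual_mse = torch.mean((prediction - y) ** 2)
mse = F.mse_loss(prediction, y)
print(torch.allclose(manual_mse, mse))  # True, both are (4 + 0 + 1) / 3

# Swapping the loss only changes the function name.
l1 = F.l1_loss(prediction, y)                # mean absolute error: (2 + 0 + 1) / 3
smooth_l1 = F.smooth_l1_loss(prediction, y)  # quadratic near zero, linear for large errors
print(l1.item())  # 1.0
```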

Full Code with torch.optim

    import torch
    import torch.nn.functional as F
    import torch.optim as optim

    # Data
    x_train = torch.FloatTensor([[73, 80, 75],
                                 [93, 88, 93],
                                 [89, 91, 90],
                                 [96, 98, 100],
                                 [73, 66, 70]])
    y_train = torch.FloatTensor([[152], [185], [180], [196], [142]])

    # Initialize the model (the class defined in the nn.Module section)
    model = MultivariateLinearRegressionModel()

    # Set up the optimizer
    optimizer = optim.SGD(model.parameters(), lr=1e-5)

    nb_epochs = 20
    for epoch in range(nb_epochs + 1):

        # Compute H(x)
        prediction = model(x_train)

        # Compute the cost
        cost = F.mse_loss(prediction, y_train)

        # Improve H(x) using the cost
        optimizer.zero_grad()
        cost.backward()
        optimizer.step()

        # Log every epoch
        print('Epoch {:4d}/{} Cost: {:.6f}'.format(
            epoch, nb_epochs, cost.item()
        ))