Miniproject: Team 4

Cloud infrastructure orchestration: tooling built on AWS

| Team member | 강재민 | 김효진 | 박민선 | 박지연 | 임재헌 |
|---|---|---|---|---|---|
| Role | Ansible setup | Jenkins setup | Terraform setup | Documentation | Ansible setup |

1. Project overview

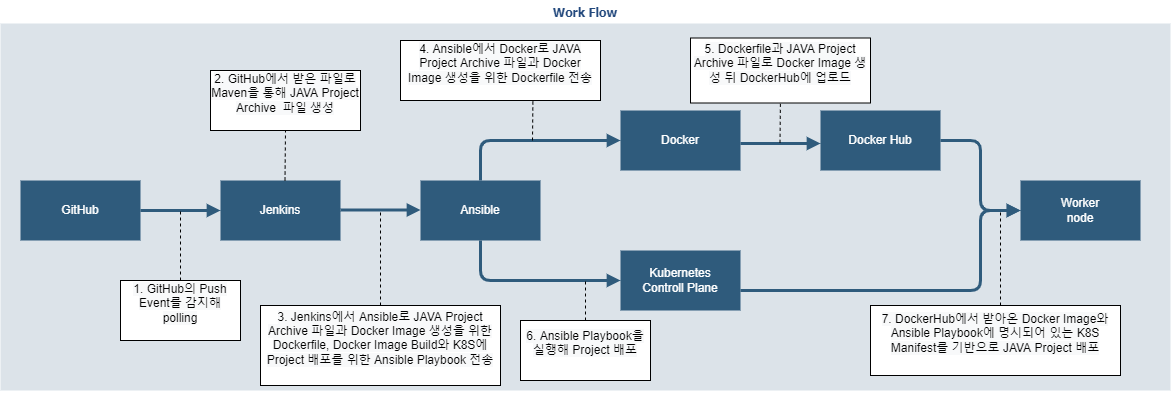

1-1. WorkFlow

1-2. Tech stack and tools

| Stack and tools | |
|---|---|
| Jenkins | |
| Ansible | |
| Terraform | |
| Docker | |
| Kubernetes | |
| Git | |

2. 프로젝트 구성

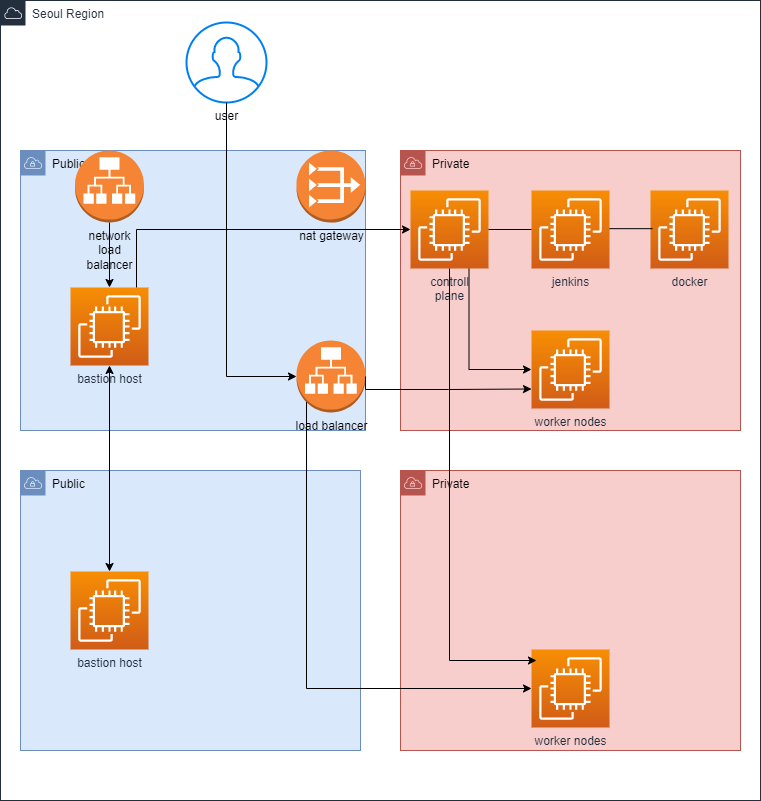

초기 Architecture 설계도

네트워크 | IPv4 CIDR |

|---|---|

| VPC | 10.0.0.0/16 |

| public subnet | 10.0.10.0/24 |

| private subnet | 10.0.30.0/24 |

| Public | Private | |

|---|---|---|

| Jenkins | 13.125.140.70 | 10.0.10.206 |

| Ansible | 13.209.20.29 | 10.0.10.209 |

| Docker | 3.38.107.141 | 10.0.10.95 |

| Kubernetes | 3.38.192.209 | 10.0.10.248 |
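The two tables above can be cross-checked mechanically. The following is a minimal sketch, assuming only Python's standard `ipaddress` module; the helper names `subnets_outside_vpc` and `hosts_outside` are hypothetical and not part of the project. It confirms that both subnets sit inside the VPC range and that every server's private IP belongs to the public subnet.

```python
import ipaddress

# Values copied from the network and server tables in this section.
VPC = ipaddress.ip_network("10.0.0.0/16")
SUBNETS = {
    "public": ipaddress.ip_network("10.0.10.0/24"),
    "private": ipaddress.ip_network("10.0.30.0/24"),
}
PRIVATE_IPS = {
    "Jenkins": "10.0.10.206",
    "Ansible": "10.0.10.209",
    "Docker": "10.0.10.95",
    "Kubernetes": "10.0.10.248",
}


def subnets_outside_vpc(vpc, subnets):
    """Return the names of subnets that are not contained in the VPC range."""
    return [name for name, net in subnets.items() if not net.subnet_of(vpc)]


def hosts_outside(subnet, hosts):
    """Return the names of hosts whose address is not inside the subnet."""
    return [name for name, ip in hosts.items()
            if ipaddress.ip_address(ip) not in subnet]


if __name__ == "__main__":
    # Both lists are empty when the tables are consistent.
    print(subnets_outside_vpc(VPC, SUBNETS))
    print(hosts_outside(SUBNETS["public"], PRIVATE_IPS))
```

All four servers resolve to the 10.0.10.0/24 public subnet, which is why a single subnet is enough for the EC2 instances in this setup.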

3. Base infrastructure for CI/CD

3-1. Kubernetes cluster configuration

| | kubeadm | kubelet | kubectl |
|---|---|---|---|
| Version | 1.22.8 | 1.22.8 | 1.22.8 |

| Item | Value |
|---|---|
| Installation method | kubeadm |
| pod-network-cidr | 172.16.0.0/16 |
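The pod network range should not collide with the VPC range used for the nodes. A short sketch of that check, assuming Python's standard `ipaddress` module (the function name `cidrs_overlap` is hypothetical):

```python
import ipaddress


def cidrs_overlap(a, b):
    """Return True when the two CIDR blocks share at least one address."""
    return ipaddress.ip_network(a).overlaps(ipaddress.ip_network(b))


if __name__ == "__main__":
    vpc_cidr = "10.0.0.0/16"      # VPC range from section 2
    pod_cidr = "172.16.0.0/16"    # pod-network-cidr from the table above
    print(cidrs_overlap(vpc_cidr, pod_cidr))
```

With the values in this report the two ranges are disjoint, so pod addresses cannot shadow node addresses.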

3-2. Build and deployment server (Jenkins, Ansible, Docker) configuration

3-2-1) Base Terraform configuration

vi provider.tf : infrastructure provider

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 3.0"

}

}

}

provider "aws" {

region = "ap-northeast-2"

}

vi vpc.tf : creates 1 availability zone, 2 public subnets, and 1 private subnet

module "project1_vpc" {

source = "terraform-aws-modules/vpc/aws"

name = "project1_vpc"

cidr = "10.0.0.0/16"

azs = ["ap-northeast-2a"]

public_subnets = ["10.0.10.0/24", "10.0.20.0/24"]

private_subnets = ["10.0.30.0/24"]

create_database_subnet_group = true

create_igw = true

enable_nat_gateway = true

single_nat_gateway = true

}

vi ec2.tf : creates 4 EC2 instances

resource "aws_key_pair" "project1_key" {

key_name = "project1_key"

public_key = file("/home/vagrant/.ssh/id_rsa.pub")

}

resource "aws_instance" "jenkins" {

ami = "ami-058165de3b7202099"

availability_zone = module.project1_vpc.azs[0]

instance_type = "t2.medium"

vpc_security_group_ids = [aws_security_group.all-sg.id]

subnet_id = module.project1_vpc.public_subnets[0] # 10.0.10.0/24, the subnet the private IPs in section 2 belong to

key_name = aws_key_pair.project1_key.key_name

tags = {

Name = "jenkins"

}

}

resource "aws_instance" "ansible" {

ami = "ami-058165de3b7202099"

availability_zone = module.project1_vpc.azs[0]

instance_type = "t2.micro"

vpc_security_group_ids = [aws_security_group.all-sg.id]

subnet_id = module.project1_vpc.public_subnets[0] # only indices 0 and 1 exist; 10.0.10.0/24 matches the IPs in section 2

key_name = aws_key_pair.project1_key.key_name

tags = {

Name = "ansible"

}

}

resource "aws_instance" "docker" {

ami = "ami-058165de3b7202099"

availability_zone = module.project1_vpc.azs[0]

instance_type = "t2.micro"

vpc_security_group_ids = [aws_security_group.all-sg.id]

subnet_id = module.project1_vpc.public_subnets[0] # only indices 0 and 1 exist; 10.0.10.0/24 matches the IPs in section 2

key_name = aws_key_pair.project1_key.key_name

tags = {

Name = "docker"

}

}

resource "aws_instance" "k8s" {

ami = "ami-058165de3b7202099"

availability_zone = module.project1_vpc.azs[0]

instance_type = "t2.medium"

vpc_security_group_ids = [aws_security_group.all-sg.id]

subnet_id = module.project1_vpc.public_subnets[0] # only indices 0 and 1 exist; 10.0.10.0/24 matches the IPs in section 2

key_name = aws_key_pair.project1_key.key_name

tags = {

Name = "k8s"

}

}

vi sg.tf : opens all ports

resource "aws_security_group" "all-sg" {

name = "all-sg"

description = "Allow all "

vpc_id = module.project1_vpc.vpc_id

ingress {

cidr_blocks = ["0.0.0.0/0"]

from_port = 0

to_port = 0

protocol = "-1"

}

egress {

cidr_blocks = ["0.0.0.0/0"]

from_port = 0

protocol = "-1"

to_port = 0

}

}

3-2-2) Ansible configuration

- bastionhost

-- hosts.ini

-- playbook

--- installJenkins.yml

--- installDoker.yaml

--- installk8s.yaml

3-2-3) Jenkins build

- hosts: jenkins_host

tasks:

- shell: sudo apt-get update

ignore_errors: yes

- shell: sudo apt install -y openjdk-11-jdk

- shell: curl -fsSL https://pkg.jenkins.io/debian-stable/jenkins.io.key | sudo tee /usr/share/keyrings/jenkins-keyring.asc > /dev/null

- shell: echo "deb [signed-by=/usr/share/keyrings/jenkins-keyring.asc] https://pkg.jenkins.io/debian-stable binary/" | sudo tee /etc/apt/sources.list.d/jenkins.list > /dev/null

- shell: sudo apt-get update

ignore_errors: yes

- command: apt install -y fontconfig jenkins

- command: apt install -y maven

3-2-4) Docker build

- name: Docker VM Provisioning

hosts: docker_host

gather_facts: false

tasks:

- command: apt update

# update the list of available packages and their versions

- command: apt install -y ca-certificates curl gnupg lsb-release

# install the prerequisite packages for the Docker installation

- command: apt install -y python3-pip

# install pip for python3 along with the dependencies needed to build Python modules

- shell: curl https://get.docker.com | sh

# install Docker

- shell: usermod -aG docker ubuntu

# add the ubuntu user to the docker group

- pip:

name:

- docker

- docker-compose

# install the docker and docker-compose Python packages with pip

3-2-5) Ansible build

- name: Ansible VM Provisioning

hosts: ansible_host

gather_facts: false

tasks:

- command: apt update

- command: apt install -y ca-certificates curl gnupg lsb-release

- command: apt install -y python3-pip

- shell: curl https://get.docker.com | sh

- shell: usermod -aG docker ubuntu

- pip:

name:

- docker

- docker-compose

- command: apt install -y ansible

- command: apt install -y python3-pip

- shell: sed -i 's/PasswordAuthentication no/PasswordAuthentication yes/g' /etc/ssh/sshd_config

- shell: pip install openshift==0.11

- shell: echo 'ubuntu:ubuntu' | chpasswd

- shell: sudo systemctl restart ssh

- shell: mkdir /home/ubuntu/.kube

- shell: curl -LO https://dl.k8s.io/release/v1.22.8/bin/linux/amd64/kubectl

- shell: sudo install kubectl /usr/local/bin/

#- shell: scp ~/.kube/config .kube/config

3-2-6) k8s build

- name: Control-Plane VM Provisioning

hosts: controlplane_host

gather_facts: false

tasks:

- command: apt update

- command: apt install -y ca-certificates curl gnupg lsb-release

- command: apt install -y python3-pip

- shell: curl https://get.docker.com | sh

- shell: usermod -aG docker ubuntu

- pip:

name:

- docker

- docker-compose

- command: apt-get install -y apt-transport-https ca-certificates curl

- shell: curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg

- shell: echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

- command: apt-get update

- shell: sudo apt-get install kubeadm=1.22.8-00 kubelet=1.22.8-00 kubectl=1.22.8-00 -y

- copy:

src: "/home/ubuntu/daemon.json"

dest: "/etc/docker/"

- shell: sudo systemctl restart docker

- shell: sudo systemctl daemon-reload && sudo systemctl restart kubelet

# register the node IP; a variable set in one shell task is not visible to the next task

- shell: ip addr | tail -n 8 | head -n 1 | cut -f 6 -d' ' | cut -f 1 -d '/'

register: ipaddr

- shell: sudo kubeadm init --control-plane-endpoint "{{ ipaddr.stdout }}" --pod-network-cidr 172.16.0.0/16 --apiserver-advertise-address "{{ ipaddr.stdout }}"

- shell: mkdir -p /home/ubuntu/.kube

- shell: sudo cp -i /etc/kubernetes/admin.conf /home/ubuntu/.kube/config

- shell: sudo chown ubuntu:ubuntu /home/ubuntu/.kube/config

- fetch:

src: "/home/ubuntu/.kube/config"

dest: "/home/ubuntu/.kube/config"

flat: yes

#- shell: kubectl create -f https://projectcalico.docs.tigera.io/manifests/tigera-operator.yaml

- shell: curl https://projectcalico.docs.tigera.io/manifests/custom-resources.yaml -O

- replace:

path: /home/ubuntu/custom-resources.yaml

regexp: 192.168

replace: 172.16

#- shell: kubectl create -f custom-resources.yaml

3-2-7) worker node build

- name: Control-Plane VM Provisioning

hosts: controlplane_host

gather_facts: false

tasks:

- command: apt update

- command: apt install -y ca-certificates curl gnupg lsb-release

- command: apt install -y python3-pip

- shell: curl https://get.docker.com | sh

- shell: usermod -aG docker ubuntu

- pip:

name:

- docker

- docker-compose

- command: apt-get install -y apt-transport-https ca-certificates curl

- shell: curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg

- shell: echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

- command: apt-get update

- shell: sudo apt-get install kubeadm=1.22.8-00 kubelet=1.22.8-00 kubectl=1.22.8-00 -y

- copy:

src: "/home/ubuntu/daemon.json"

dest: "/etc/docker/"

- shell: sudo systemctl restart docker

- shell: sudo systemctl daemon-reload && sudo systemctl restart kubelet

#!/bin/sh

sudo kubeadm join 10.0.10.248:6443 --token u3adz9.flbop6nslkaupqrq --discovery-token-ca-cert-hash sha256:70f23d516ea80a39c784d129bddb13d6f71a96865b97acac573054443183b355

4. CI/CD implementation

4-1. Ansible playbooks for deploying Pods to the Kubernetes cluster

4-1-1) Kubernetes playbook

vi docker_build_and_push.yaml : builds the image and pushes it to DockerHub

- name: Docker Image Build and Push

hosts: docker_host

gather_facts: false

tasks:

- command: docker image build -t repush/cicdproject:"{{ lookup('env', 'BUILD_NUMBER') }}" ~/

- command: docker login -u repush -p "{{ lookup('env', 'TOKEN') }}"

- command: docker push repush/cicdproject:"{{ lookup('env', 'BUILD_NUMBER') }}"

- command: docker logout

- First command: builds the image; the version tag comes from a variable (the build number, so tags never collide)

- Second command: logs in to DockerHub; the token comes from a variable

- Third command: pushes the image, using the same build-number tag

- Fourth command: logs out of DockerHub
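The tagging scheme described above can be sketched in a few lines. This is an illustration only: `image_reference` is a hypothetical helper, and the fallback value `"1"` for local runs is an assumption. The repository name and the `BUILD_NUMBER` environment variable are the ones used in the playbook.

```python
import os


def image_reference(repository, build_number):
    """Return 'repository:build_number', the tag format used by the playbook."""
    if not str(build_number).isdigit():
        # Jenkins build numbers are integers; anything else is likely a mistake.
        raise ValueError(f"unexpected build number: {build_number!r}")
    return f"{repository}:{build_number}"


if __name__ == "__main__":
    # BUILD_NUMBER is set by Jenkins for each build; "1" is an assumed fallback.
    print(image_reference("repush/cicdproject", os.environ.get("BUILD_NUMBER", "1")))
```

Because Jenkins increments the build number on every run, each push produces a new tag and the Deployment can reference an exact image version.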

vi kube_deploy.yaml : k8s Deployment and Service

- hosts: ansible_host

gather_facts: no

tasks:

#- command: kubectl apply -f java-hello-world/kube_manifest/

- name: Create Deployment # deploy the containers

k8s:

state: present

definition:

apiVersion: apps/v1

kind: Deployment

metadata:

name: java-hello

namespace: default

spec:

replicas: 6 # number of Pods

selector:

matchLabels:

app: java-hello

template:

metadata:

labels:

app: java-hello

spec:

containers:

- name: java-hello

image: "repush/cicdproject:{{ lookup('env', 'BUILD_NUMBER') }}" # image pushed to DockerHub

imagePullPolicy: Always

ports:

- containerPort: 8080 # port the container listens on

- name: Create Service # deploy the Service

k8s:

state: present

definition:

apiVersion: v1

kind: Service

metadata:

name: java-hello-svc

namespace: default

spec:

type: NodePort

selector:

app: java-hello

ports:

- port: 80

targetPort: 8080

nodePort: 31313 # port exposed on the node

4-2. Dockerfile for the Docker image build

4-2-1) Dockerfile

vi Dockerfile

# use the tomcat:9.0-jre11-openjdk image as the base

FROM tomcat:9.0-jre11-openjdk

# copy the WAR file from the current path into /usr/local/tomcat/webapps inside the image

COPY webapp.war /usr/local/tomcat/webapps

4-3. Jenkins job configuration for CI/CD

4-3-1) Plugins used

- Maven Integration plugin 3.19

- Maven Invoker plugin 2.4

- Publish Over SSH 1.24

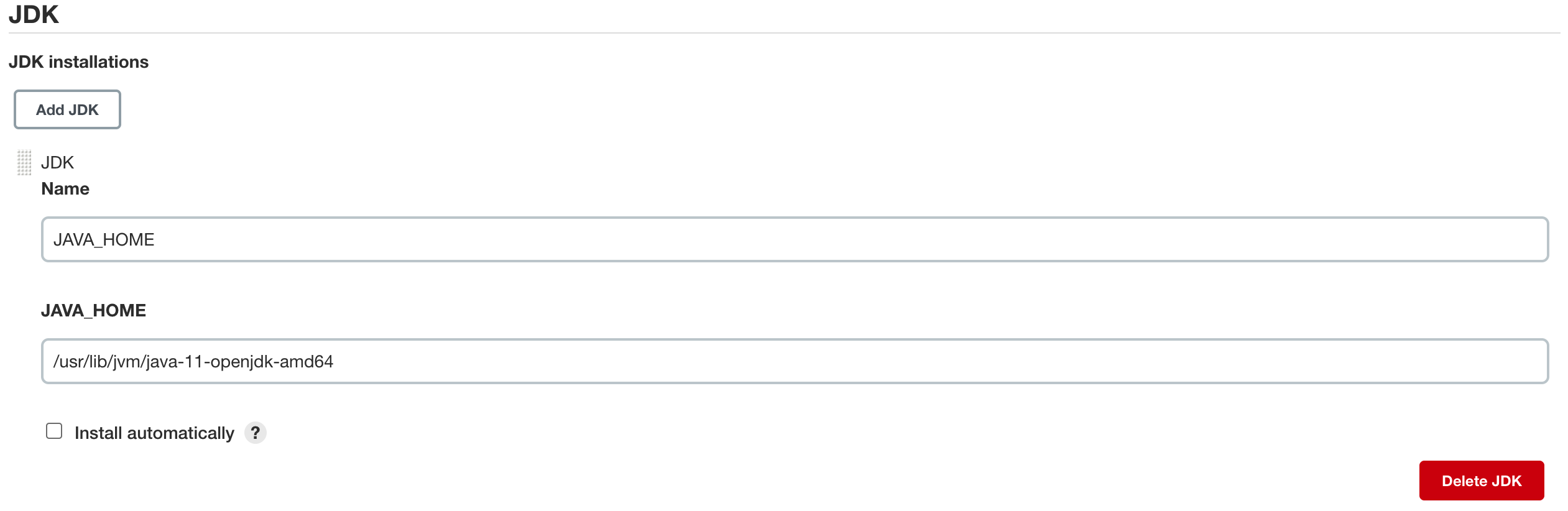

4-3-2) JDK environment variable setup for the Java project build

Manage Jenkins - Global Tool Configuration - JDK

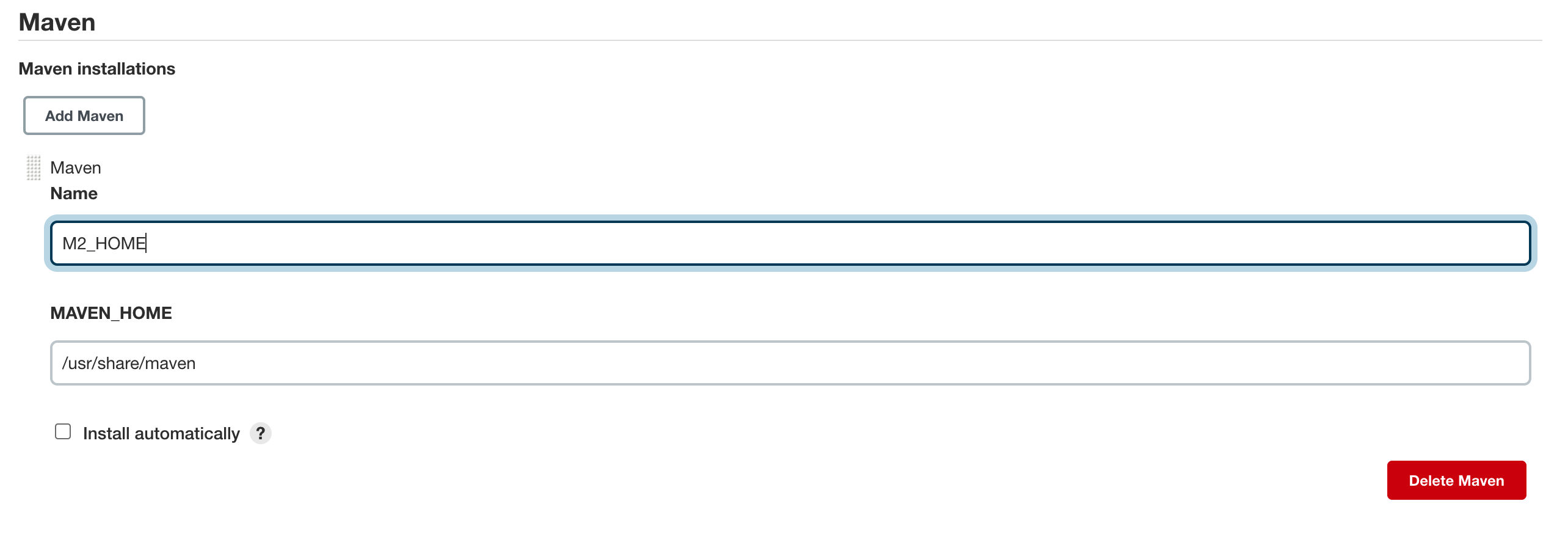

4-3-3) Maven environment variable setup for the Java project build

Manage Jenkins - Global Tool Configuration - Maven

4-3-4) Jenkins job settings for CI/CD

New Item - Pipeline

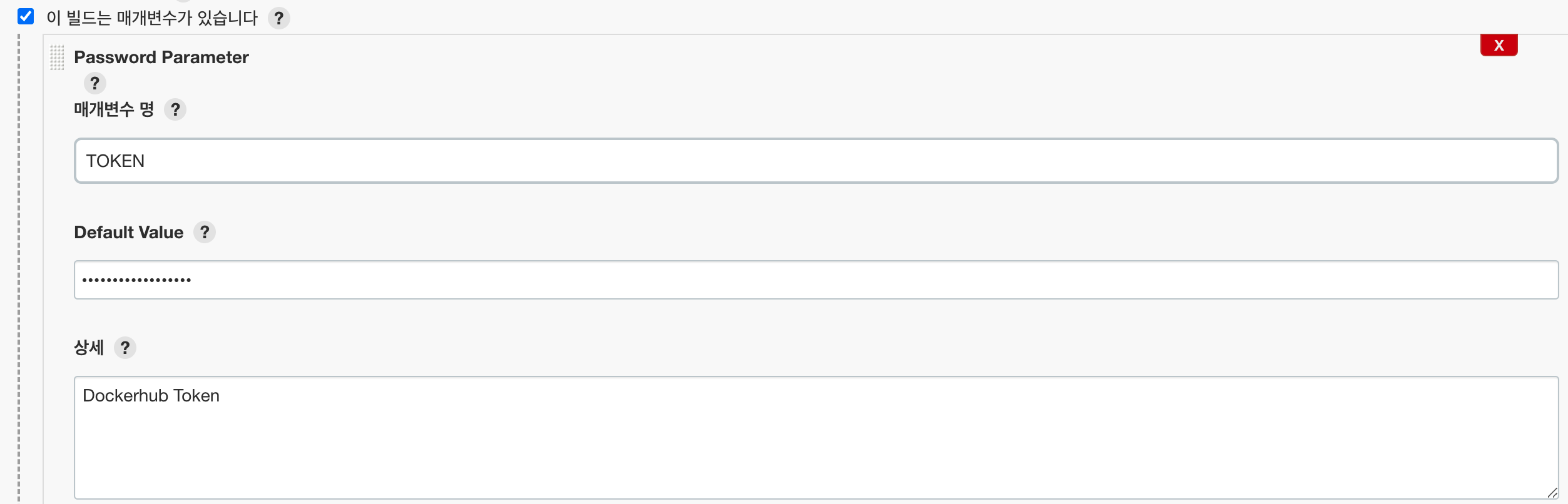

1) Login token for DockerHub

General

- Default Value: the DockerHub account's token

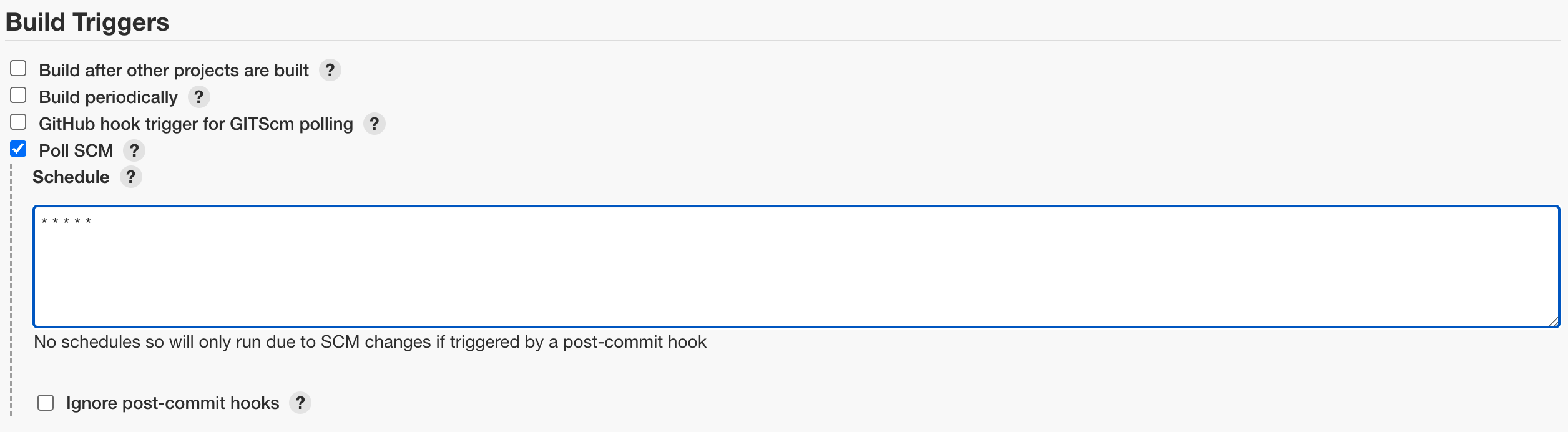

2) Build trigger for detecting changes on the project branch

Build Triggers

- Polls GitHub every minute to detect pushes

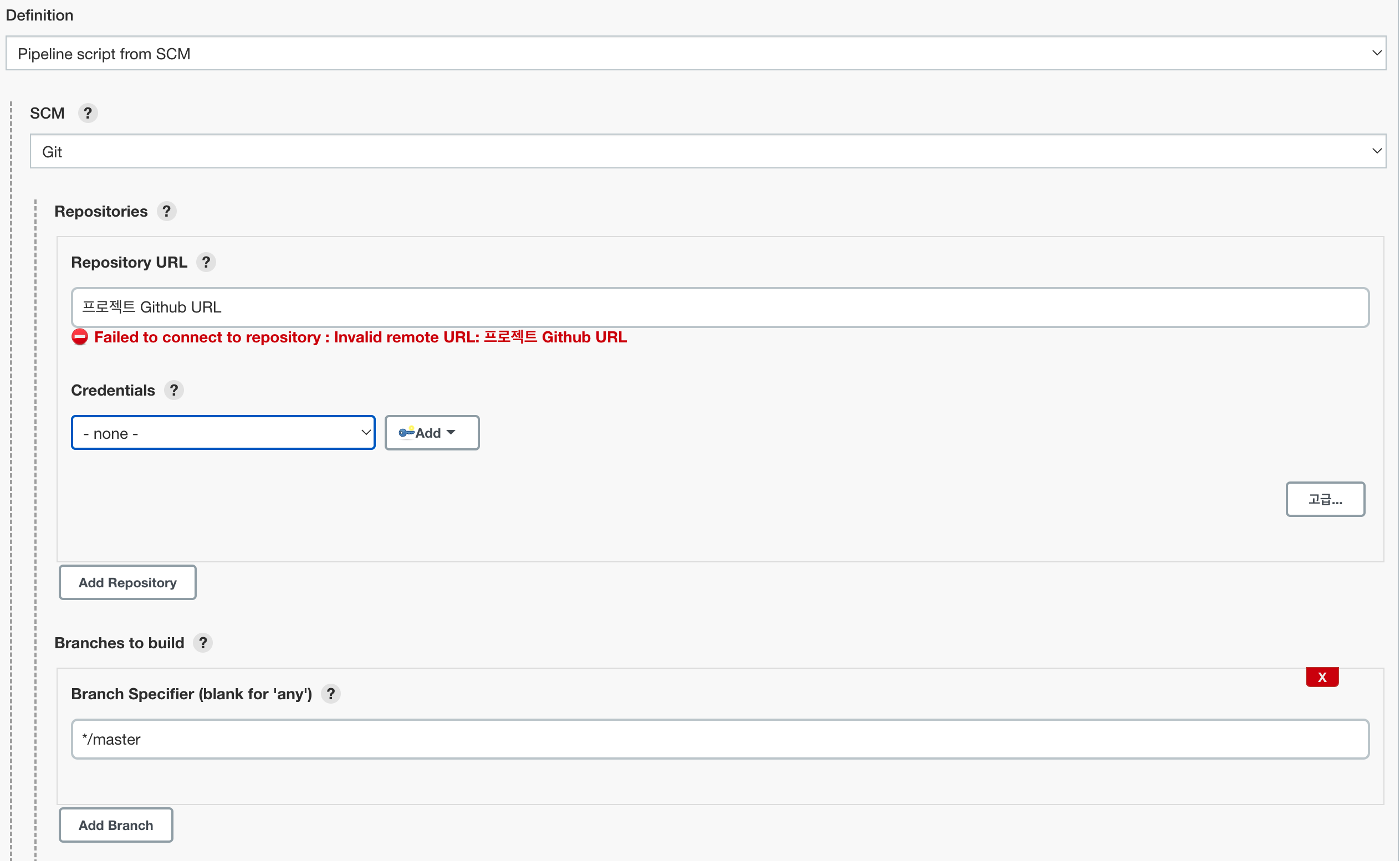

3) Pipeline and Jenkinsfile configuration

Pipeline - Definition - SCM

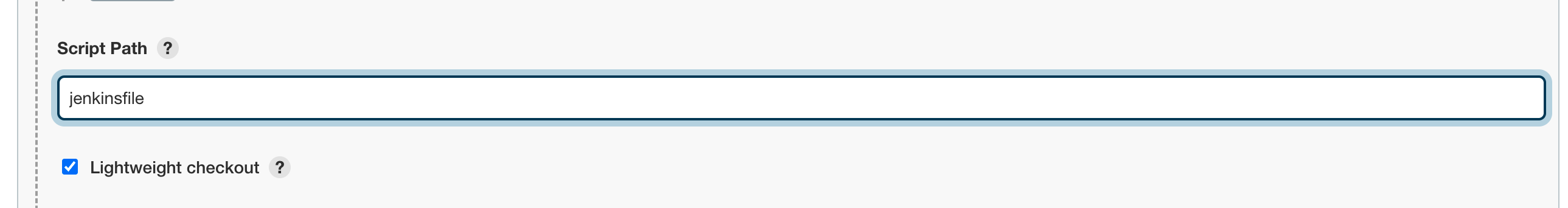

Pipeline - Definition - Script Path

- Relative path of the Jenkinsfile inside the GitHub repository

Jenkinsfile stages

- Java Build stage: builds the Java project archive (WAR) with Maven

- Docker Image Build With Remote Ansible Server AND Remote Docker Server Using Publish Over SSH Module: the Publish Over SSH plugin sends the files needed for the Docker image build to the Ansible server, which forwards them to the Docker server. It also sends the Ansible playbooks that build the Docker image and deploy the project to the Ansible server and runs them there.

Jenkinsfile

pipeline {

agent any

tools {

// Use the Maven installation configured as "M2_HOME" and add it to the path.

maven "M2_HOME"

}

stages {

stage('Java Build') {

steps {

// Run Maven on a Unix agent.

sh "mvn -Dmaven.test.failure.ignore=true clean package -f pom.xml"

}

}

stage('Docker Image Build With Remote Ansible Server AND Remote Docker Server Using Publish Over SSH Module') {

steps {

sshPublisher(publishers: [sshPublisherDesc(configName: 'ansible-host', transfers: [sshTransfer(cleanRemote: false, excludes: '', execCommand: '', execTimeout: 120000, flatten: false, makeEmptyDirs: false, noDefaultExcludes: false, patternSeparator: '[, ]+', remoteDirectory: 'java-hello-world', remoteDirectorySDF: false, removePrefix: 'webapp/target/', sourceFiles: 'webapp/target/webapp.war'), sshTransfer(cleanRemote: false, excludes: '', execCommand: '', execTimeout: 120000, flatten: false, makeEmptyDirs: false, noDefaultExcludes: false, patternSeparator: '[, ]+', remoteDirectory: 'java-hello-world', remoteDirectorySDF: false, removePrefix: 'docker/', sourceFiles: 'docker/Dockerfile'), sshTransfer(cleanRemote: false, excludes: '', execCommand: '''scp java-hello-world/Dockerfile 13.125.234.12:~/

scp java-hello-world/webapp.war 13.125.234.12:~/

TOKEN=`echo $TOKEN` BUILD_NUMBER=`echo $BUILD_NUMBER` ansible-playbook java-hello-world/docker_build_and_push.yaml

BUILD_NUMBER=`echo $BUILD_NUMBER` ansible-playbook java-hello-world/kube_deploy.yaml''', execTimeout: 120000, flatten: false, makeEmptyDirs: false, noDefaultExcludes: false, patternSeparator: '[, ]+', remoteDirectory: 'java-hello-world', remoteDirectorySDF: false, removePrefix: 'playbook/', sourceFiles: 'playbook/*.yaml')], usePromotionTimestamp: false, useWorkspaceInPromotion: false, verbose: true)])

}

}

}

}

5. Results

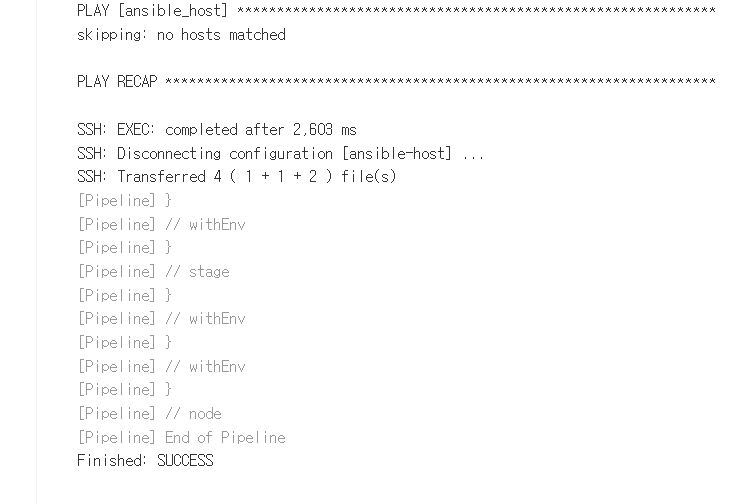

5-1. Jenkins build

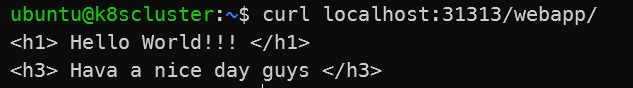

5-2. Access from a local machine

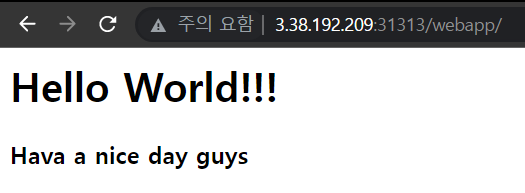

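5-3. Access from a website

6. Conclusion

6-1. As-is

- GitOps-style CI/CD through Jenkins worked in this project.

- Build automation with Terraform and Ansible was put in place to a reasonable degree.

6-2. To-be

- Several commands in the Ansible playbooks failed to run.

- The playbooks currently rely mostly on shell and command; using dedicated modules would have given a more polished result.

- Deploying the web service with a load balancer and auto scaling worked in the GUI environment, but not on the infrastructure built with IaC.

- The security group is currently left wide open; it should be tightened.

- Adding worker nodes automatically through auto scaling also worked in the GUI environment but not on the infrastructure built with IaC.

- Auto scaling the bastion host behind a Network Load Balancer, so that SSH goes through a domain-name endpoint, worked in the GUI environment but was not built in the IaC version for lack of time.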
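As a starting point for the security-group item above, the sketch below builds a per-port ingress allow-list instead of the open rule in sg.tf. Ports 6443 and 31313 come from this report (the kubeadm join endpoint and the Service nodePort); 22 for SSH and 8080 for the Jenkins web UI are assumed defaults, and `ingress_rules` is a hypothetical helper that was not part of the project. The dictionary keys mirror the arguments of the `ingress` block so the result could be copied back into Terraform by hand.

```python
# Hypothetical allow-list. 6443 and 31313 appear in this report;
# 22 (SSH) and 8080 (Jenkins default HTTP port) are assumptions.
ALLOWED_TCP_PORTS = {
    22: "SSH (Ansible, Publish Over SSH)",
    8080: "Jenkins web UI",
    6443: "Kubernetes API server",
    31313: "java-hello-svc NodePort",
}


def ingress_rules(ports, cidr="0.0.0.0/0"):
    """Build one rule per port, using the same fields as the ingress block in sg.tf."""
    return [
        {
            "description": description,
            "from_port": port,
            "to_port": port,
            "protocol": "tcp",
            "cidr_blocks": [cidr],
        }
        for port, description in sorted(ports.items())
    ]


if __name__ == "__main__":
    for rule in ingress_rules(ALLOWED_TCP_PORTS):
        print(rule)
```

Narrowing `cidr` to the team's own address range would tighten the rules further; which ports each server really needs would have to be confirmed against the running services.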