1. color 데이터 이용한 와인 분류

1. data 준비 & histogram

import & load data

import pandas as pd

red_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-red.csv'

white_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-white.csv'

red_wine = pd.read_csv(red_url, sep=';')

white_wine = pd.read_csv(white_url, sep=';')- color 컬럼 추가 및 concat

red_wine['color'] = 1.

white_wine['color'] = 0.

wine = pd.concat([red_wine, white_wine])

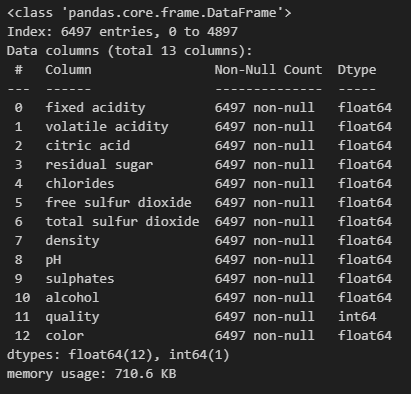

wine.info()

histogram

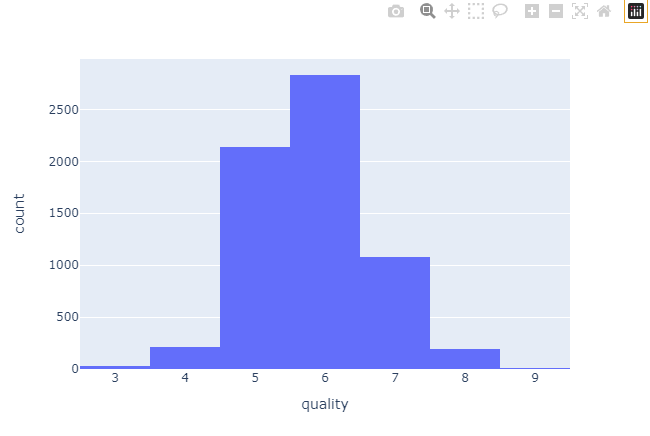

- 전체 quality histogram

import plotly.express as px

fig = px.histogram(wine, x='quality')

fig.show()-

5~6등급의 와인이 가장 많음

-

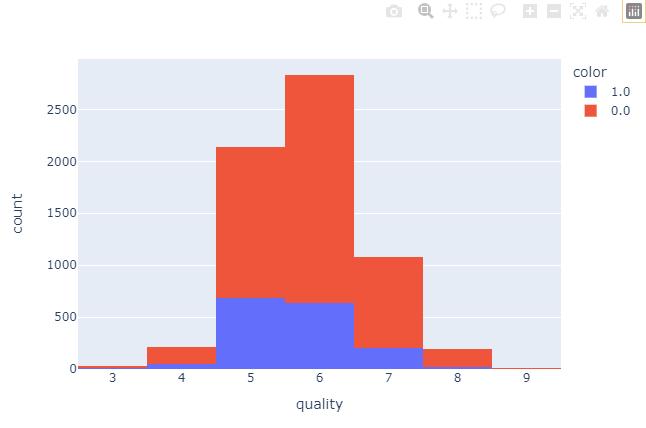

레드/화이트 와인별 등급 histogram

fig = px.histogram(wine, x='quality', color='color')

fig.show()- 화이트가 레드보다 훨씬 많음

- 레드는 5~6등급 와인이 비슷하며, 화이트는 6등급 와인이 더 많음

2. 레드/화이트 분리

- 레드/화이트 분리

X = wine.drop(['color'], axis=1)

y = wine['color']- train / test 값으로 분리

from sklearn.model_selection import train_test_split

import numpy as np

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state = 13)

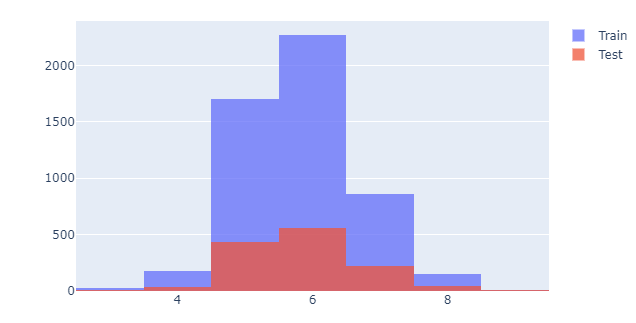

np.unique(y_train, return_counts=True)-> (array([0., 1.]), array([3913, 1284], dtype=int64))- 훈련용과 테스트용이 레드/화이트 와인에 따라 어느정도 구분되었을지 확인 - histogram

import plotly.graph_objects as go

fig = go.Figure()

fig.add_trace(go.Histogram(x=X_train['quality'], name='Train'))

fig.add_trace(go.Histogram(x=X_test['quality'], name='Test'))

fig.update_layout(barmode='overlay')

fig.update_traces(opacity=0.75)

fig.show()

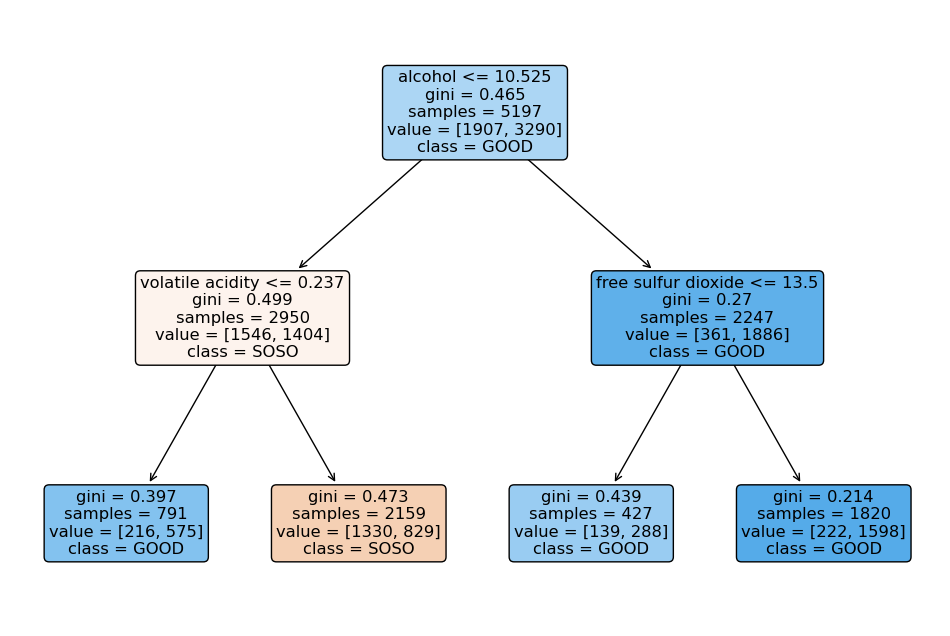

3. 결정나무 훈련

decisiontree 학습

from sklearn.tree import DecisionTreeClassifier

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, y_train)

학습 결과

from sklearn.metrics import accuracy_score

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Train Acc : ', accuracy_score(y_train, y_pred_tr))

print('Test Acc : ', accuracy_score(y_test, y_pred_test))-> Train Acc : 0.9553588608812776

Test Acc : 0.9569230769230769

4. 데이터 전처리 - MinMaxScaler & StandardScaler

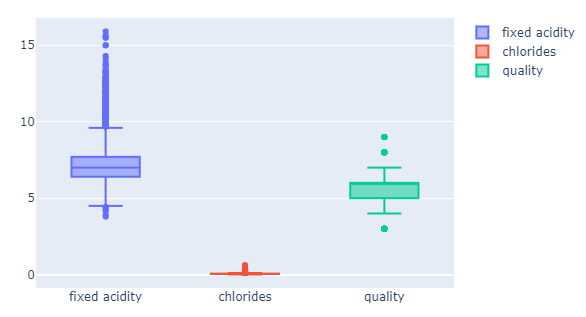

기존 값 boxplot

fig = go.Figure()

fig.add_trace(go.Box(y=X['fixed acidity'], name='fixed acidity'))

fig.add_trace(go.Box(y=X['chlorides'], name='chlorides'))

fig.add_trace(go.Box(y=X['quality'], name='quality'))

fig.show()

- 컬럼들의 최대/최소 범위 각각 다르고, 평균과 분산이 각각 다르게 나타남

- 특성(feature)의 편향 문제는 최적의 모델을 찾는데 방해가 될 수 있음

-> 이때 사용해야 하는 것이 min/max와 standard!

- 참고

• 결정나무에서는 이런 전처리는 의미를 가지지 않는다.

• 주로 Cost Function을 최적화할 때 유효할 때가 있다.

• MinMaxScaler와 StandardScaler 중 어떤 것이 좋을지는 해봐야 안다.

min/max, standard scaler fit

from sklearn.preprocessing import MinMaxScaler, StandardScaler

MMS = MinMaxScaler()

SS = StandardScaler()

SS.fit(X)

MMS.fit(X)

X_ss = SS.transform(X)

X_mms = MMS.transform(X)

X_ss_pd = pd.DataFrame(X_ss, columns=X.columns)

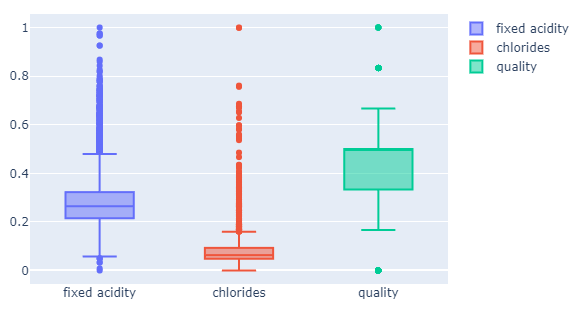

X_mms_pd = pd.DataFrame(X_mms, columns=X.columns)min/max boxplot

- 최대-최소를 1과 0으로 강제로 맞추는 것

fig = go.Figure()

fig.add_trace(go.Box(y=X_mms_pd['fixed acidity'], name='fixed acidity'))

fig.add_trace(go.Box(y=X_mms_pd['chlorides'], name='chlorides'))

fig.add_trace(go.Box(y=X_mms_pd['quality'], name='quality'))

fig.show()

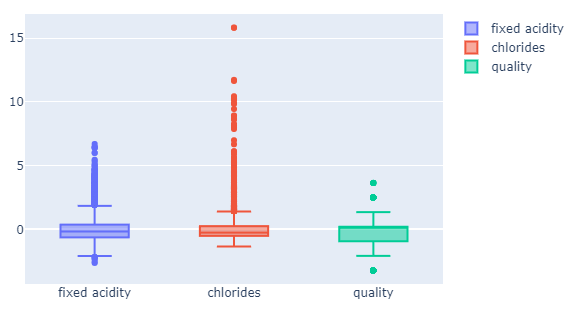

standard boxplot

• 평균을 0으로 표준편차를 1로 맞추는 것

fig = go.Figure()

fig.add_trace(go.Box(y=X_ss_pd['fixed acidity'], name='fixed acidity'))

fig.add_trace(go.Box(y=X_ss_pd['chlorides'], name='chlorides'))

fig.add_trace(go.Box(y=X_ss_pd['quality'], name='quality'))

fig.show()

accuracy

- 결정나무에서는 이런 전처리는 거의 효과가 없다.

minmax scaler accuracy

X_train, X_test, y_train, y_test = train_test_split(X_mms_pd, y, test_size=0.2, random_state=13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, y_train)

y_pred_tr = wine_tree.predict(X_train)

t_pred_test = wine_tree.predict(X_test)

print('Train Acc : ', accuracy_score(y_train, y_pred_tr))

print('Test Acc : ', accuracy_score(y_test, y_pred_test))-> Train Acc : 0.9553588608812776

Test Acc : 0.9569230769230769standard scaler accuracy

X_train, X_test, y_train, y_test = train_test_split(X_ss_pd, y, test_size=0.2, random_state=13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, y_train)

y_pred_tr = wine_tree.predict(X_train)

t_pred_test = wine_tree.predict(X_test)

print('Train Acc : ', accuracy_score(y_train, y_pred_tr))

print('Test Acc : ', accuracy_score(y_test, y_pred_test))-> Train Acc : 0.9553588608812776

Test Acc : 0.9569230769230769

5. zip

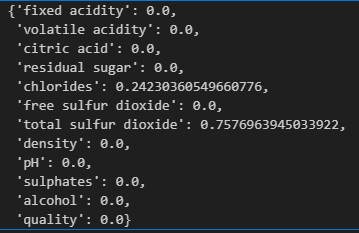

- 어떤 변수가 가장 큰 영향을 미쳤는지 확인

dict(zip(X_train.columns, wine_tree.feature_importances_))- total sulfur dioxide가 0.76으로 가장 큰 영향 미침

2. taste 데이터 이용한 와인 분류

1. taste column 생성

wine['taste'] = [1. if grade>5 else 0. for grade in wine['quality']]

wine.info()

2. decision tree & accuracy

- decision tree

X = wine.drop(['taste'], axis= 1)

y = wine['taste']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state= 13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, y_train)- accuracy

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Train Acc : ', accuracy_score(y_train, y_pred_tr))

print('Test Acc : ', accuracy_score(y_test, y_pred_test))-> Train Acc : 1.0

Test Acc : 1.0-

정확도가 1.0이 나오면 무조건! 의심해봐야 함

-

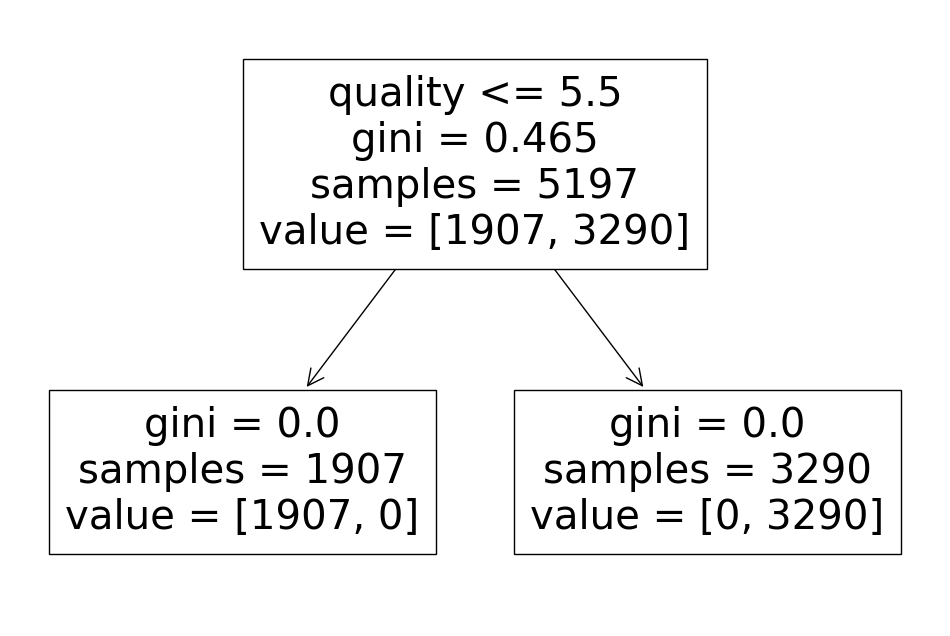

sklearn tree 확인

import matplotlib.pyplot as plt

import sklearn.tree as tree

plt.figure(figsize=(12,8))

tree.plot_tree(wine_tree, feature_names=X.columns.tolist());

-> quality 열로 taste 열을 만들었으니 quality열을 제거했어야 함

3. decision tree & accuracy(quality열 삭제)

- decision tree

X = wine.drop(['taste', 'quality'], axis= 1)

y = wine['taste']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state= 13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, y_train)- accuracy

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Train Acc : ', accuracy_score(y_train, y_pred_tr))

print('Test Acc : ', accuracy_score(y_test, y_pred_test))-> Train Acc : 0.7294593034442948

Test Acc : 0.7161538461538461

- sklearn tree 확인

import matplotlib.pyplot as plt

import sklearn.tree as tree

plt.figure(figsize=(12,8))

tree.plot_tree(wine_tree, feature_names=X.columns.tolist(),

class_names = ['SOSO', 'GOOD'],

rounded=True, filled=True);