abs : https://arxiv.org/abs/2012.01211

code (not released yet) : https://github.com/chaofengc/Face-SPARNet

abstract

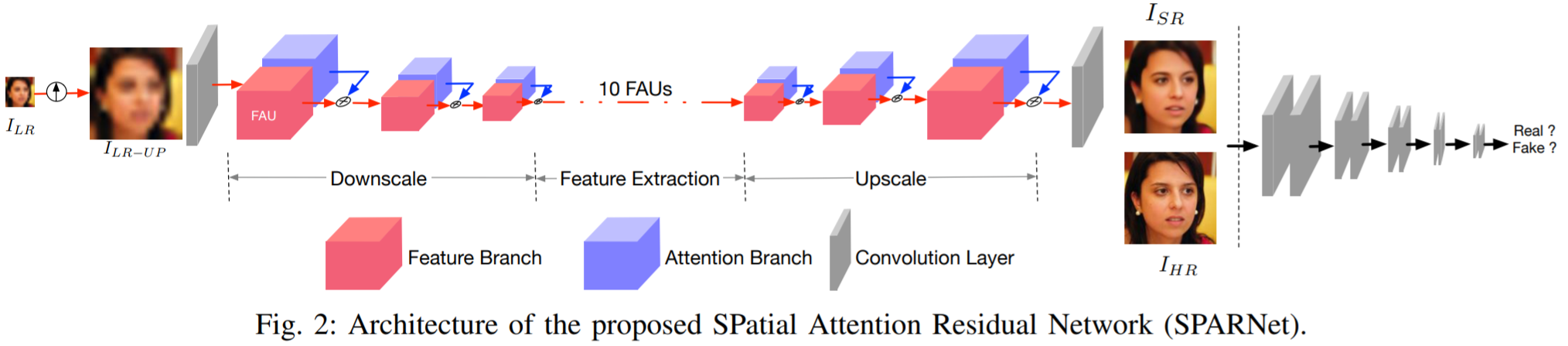

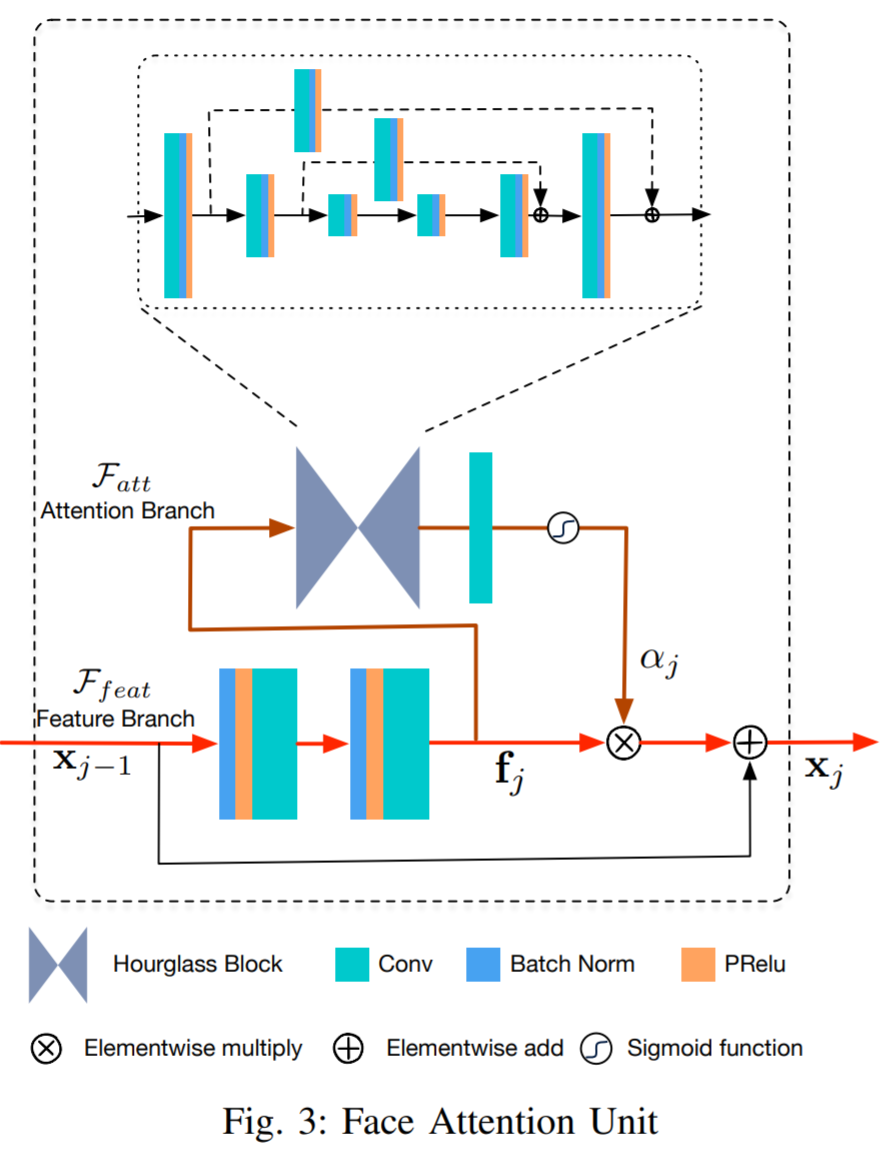

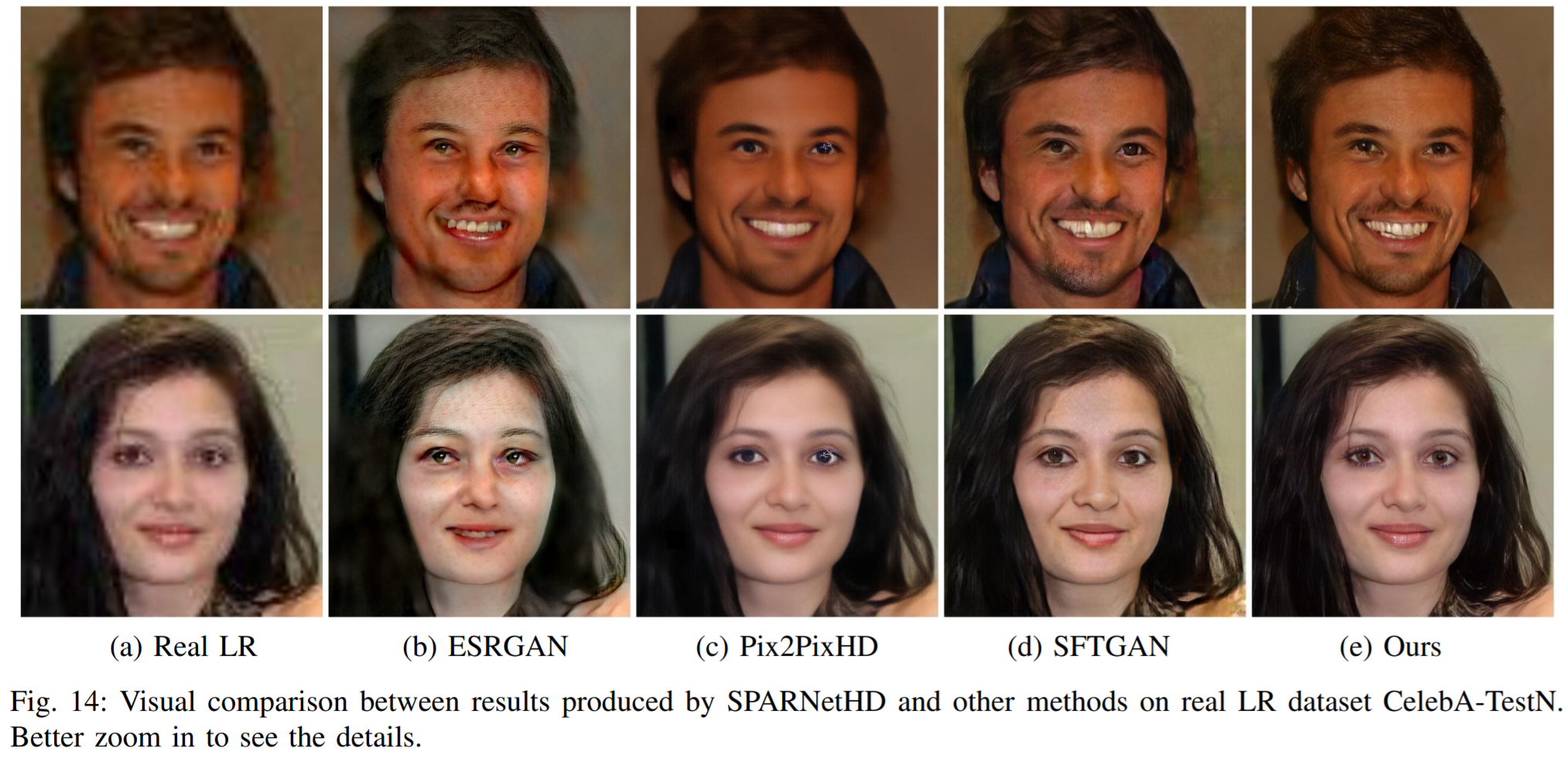

General image super-resolution techniques have difficulties in recovering detailed face structures when applied to low resolution face images. Recent deep learning based methods tailored for face images have achieved improved performance by being jointly trained with additional tasks such as face parsing and landmark prediction. However, multi-task learning requires extra manually labeled data. Besides, most of the existing works can only generate relatively low resolution face images (e.g., 128×128), and their applications are therefore limited. In this paper, we introduce a novel SPatial Attention Residual Network (SPARNet) built on our newly proposed Face Attention Units (FAUs) for face super-resolution. Specifically, we introduce a spatial attention mechanism to the vanilla residual blocks. This enables the convolutional layers to adaptively bootstrap features related to the key face structures and pay less attention to those less feature-rich regions. This makes the training more effective and efficient as the key face structures only account for a very small portion of the face image. Visualization of the attention maps shows that our spatial attention network can capture the key face structures well even for very low resolution faces (e.g., 16×16). Quantitative comparisons on various kinds of metrics (including PSNR, SSIM, identity similarity, and landmark detection) demonstrate the superiority of our method over current state-of-the-art methods. We further extend SPARNet with multi-scale discriminators, named SPARNetHD, to produce high resolution results (i.e., 512×512). We show that SPARNetHD trained with synthetic data can not only produce high quality and high resolution outputs for synthetically degraded face images, but also shows good generalization ability to real world low quality face images.
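The abstract only says that an FAU adds spatial attention to a vanilla residual block. A minimal NumPy sketch of that idea follows, under assumptions that are not taken from the paper: the residual branch uses 1×1 convolutions instead of 3×3 ones, the attention branch is a single 1×1 projection plus sigmoid (the real branch is probably deeper), and the per-pixel mask scales the residual features before the identity shortcut is added. The names `face_attention_unit` and `init_params` are hypothetical.

```python
import numpy as np


def conv1x1(x, w, b):
    """1x1 convolution on a (C_in, H, W) map; w has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x) + b[:, None, None]


def relu(x):
    return np.maximum(x, 0.0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def face_attention_unit(x, params):
    """Residual block gated by a spatial attention mask (simplified, hypothetical FAU).

    x: feature map of shape (C, H, W).
    Returns (output, mask); mask has shape (H, W) with values in (0, 1).
    """
    # Residual branch: conv -> ReLU -> conv (1x1 here to keep the sketch short).
    r = relu(conv1x1(x, params["w1"], params["b1"]))
    r = conv1x1(r, params["w2"], params["b2"])
    # Attention branch: project residual features to one channel, squash to (0, 1).
    mask = sigmoid(conv1x1(r, params["wa"], params["ba"]))[0]
    # Pixels with a high mask value (e.g. eyes, mouth) keep more of the residual.
    return x + r * mask[None, :, :], mask


def init_params(channels, rng):
    """Random small weights for a `channels`-channel block (illustrative only)."""
    return {
        "w1": rng.normal(scale=0.1, size=(channels, channels)),
        "b1": np.zeros(channels),
        "w2": rng.normal(scale=0.1, size=(channels, channels)),
        "b2": np.zeros(channels),
        "wa": rng.normal(scale=0.1, size=(1, channels)),
        "ba": np.zeros(1),
    }


rng = np.random.default_rng(0)
x = rng.normal(size=(8, 16, 16))  # 8 channels on a 16x16 grid
out, mask = face_attention_unit(x, init_params(8, rng))
print(out.shape, mask.shape)  # (8, 16, 16) (16, 16)
```

The mask is one value per spatial position shared across channels, which is what distinguishes spatial attention from channel attention; visualizing `mask` corresponds to the attention maps the abstract mentions.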

Problem

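- Existing face SR methods rely on auxiliary tasks such as face parsing and facial landmark prediction, performed before or jointly with the SR step.

- This requires extra labeled data, and most of these methods only produce low resolution outputs (e.g., 128×128), which limits their use.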

Proposed

Loss

L1 + adversarial + feature matching (from pix2pixHD: High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs, CVPR 2018) + perceptual
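How the four terms combine can be sketched in NumPy as below. This is an illustration under stated assumptions, not the paper's exact loss: the adversarial term uses the least-squares (LSGAN) generator form purely as an example, feature matching and perceptual terms are plain layer averages of L1 distances (pix2pixHD weights layers and discriminators slightly differently), and the weights `w_pix`, `w_adv`, `w_fm`, `w_pct` default to hypothetical values of 1.0. Discriminator outputs and VGG-style features are passed in as precomputed arrays.

```python
import numpy as np


def l1(a, b):
    return float(np.mean(np.abs(a - b)))


def lsgan_generator_loss(d_fake_outputs):
    """Adversarial term (LSGAN form, an assumption), averaged over discriminator scales."""
    return float(np.mean([np.mean((d - 1.0) ** 2) for d in d_fake_outputs]))


def feature_matching_loss(feats_fake, feats_real):
    """pix2pixHD-style feature matching: L1 between intermediate discriminator features.

    feats_*: one list per discriminator scale, each a list of feature arrays.
    """
    total, count = 0.0, 0
    for disc_fake, disc_real in zip(feats_fake, feats_real):
        for f, r in zip(disc_fake, disc_real):
            total += l1(f, r)
            count += 1
    return total / count


def perceptual_loss(vgg_fake, vgg_real):
    """L1 between features of a fixed pretrained network, averaged over layers."""
    return float(np.mean([l1(f, r) for f, r in zip(vgg_fake, vgg_real)]))


def total_loss(sr, hr, d_fake_outputs, feats_fake, feats_real, vgg_fake, vgg_real,
               w_pix=1.0, w_adv=1.0, w_fm=1.0, w_pct=1.0):
    """Weighted sum of the four generator terms (weights are hypothetical)."""
    return (w_pix * l1(sr, hr)
            + w_adv * lsgan_generator_loss(d_fake_outputs)
            + w_fm * feature_matching_loss(feats_fake, feats_real)
            + w_pct * perceptual_loss(vgg_fake, vgg_real))
```

Feature matching needs the discriminator's intermediate activations for both the real and the generated image, which is one reason it pairs naturally with the multi-scale discriminators of SPARNetHD.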

Pros

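- Exploits facial structure information, yet can be trained without extra labels (e.g., parsing maps or landmarks) in the image dataset.

- Uses attention to focus on the regions that matter (here, the facial components), improving results there.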

Cons

- It claims "high resolution", but the output is only 512×512.

- In the end, the message boils down to "using attention makes it work well."

Experiments

Notes

- PSNR and SSIM are also shown as 2D error-map heatmaps, so the improved regions can be checked visually.

- Besides PSNR and SSIM, the standard SR metrics, they add task-specific metrics: landmark detection and identity similarity, each measured with an existing pretrained model.
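A rough NumPy sketch of these metrics on grayscale arrays: a per-pixel squared-error map (the quantity behind a PSNR heatmap), global PSNR, a local SSIM map, and identity similarity. Assumptions: the SSIM map uses a uniform 7×7 window over valid positions only (the original SSIM definition uses a Gaussian window), and identity similarity is taken to be the cosine similarity between embeddings from some pretrained face recognition model, which these notes do not name. Function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def squared_error_map(sr, hr):
    """Per-pixel squared error (H x W); plot it as a heatmap."""
    return (sr.astype(np.float64) - hr.astype(np.float64)) ** 2


def psnr(sr, hr, max_val=255.0):
    """Global PSNR in dB; infinite for identical images."""
    mse = np.mean(squared_error_map(sr, hr))
    return float("inf") if mse == 0 else float(10.0 * np.log10(max_val ** 2 / mse))


def ssim_map(x, y, win=7, max_val=255.0):
    """Local SSIM over a uniform win x win window (valid positions only)."""
    c1, c2 = (0.01 * max_val) ** 2, (0.03 * max_val) ** 2
    xw = sliding_window_view(x.astype(np.float64), (win, win))
    yw = sliding_window_view(y.astype(np.float64), (win, win))
    mx, my = xw.mean(axis=(-2, -1)), yw.mean(axis=(-2, -1))
    vx, vy = xw.var(axis=(-2, -1)), yw.var(axis=(-2, -1))
    cov = ((xw - mx[..., None, None]) * (yw - my[..., None, None])).mean(axis=(-2, -1))
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))


def identity_similarity(emb_sr, emb_hr):
    """Cosine similarity between face-recognition embeddings of the SR and HR faces."""
    return float(np.dot(emb_sr, emb_hr) / (np.linalg.norm(emb_sr) * np.linalg.norm(emb_hr)))
```

Averaging `ssim_map` gives a scalar SSIM, and rendering it (or `squared_error_map`) as an image shows where one method beats another, which is the kind of visualization noted above.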