Purpose

This document summarizes what I did while following along with the page below.

https://www.startdataengineering.com/post/data-engineering-project-for-beginners-batch-edition/

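The original post is the more accurate reference, so read it first if you can. Here, to practice docker-compose and related tools, I build the environment myself instead of using the post's git repository.

Approach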

Data

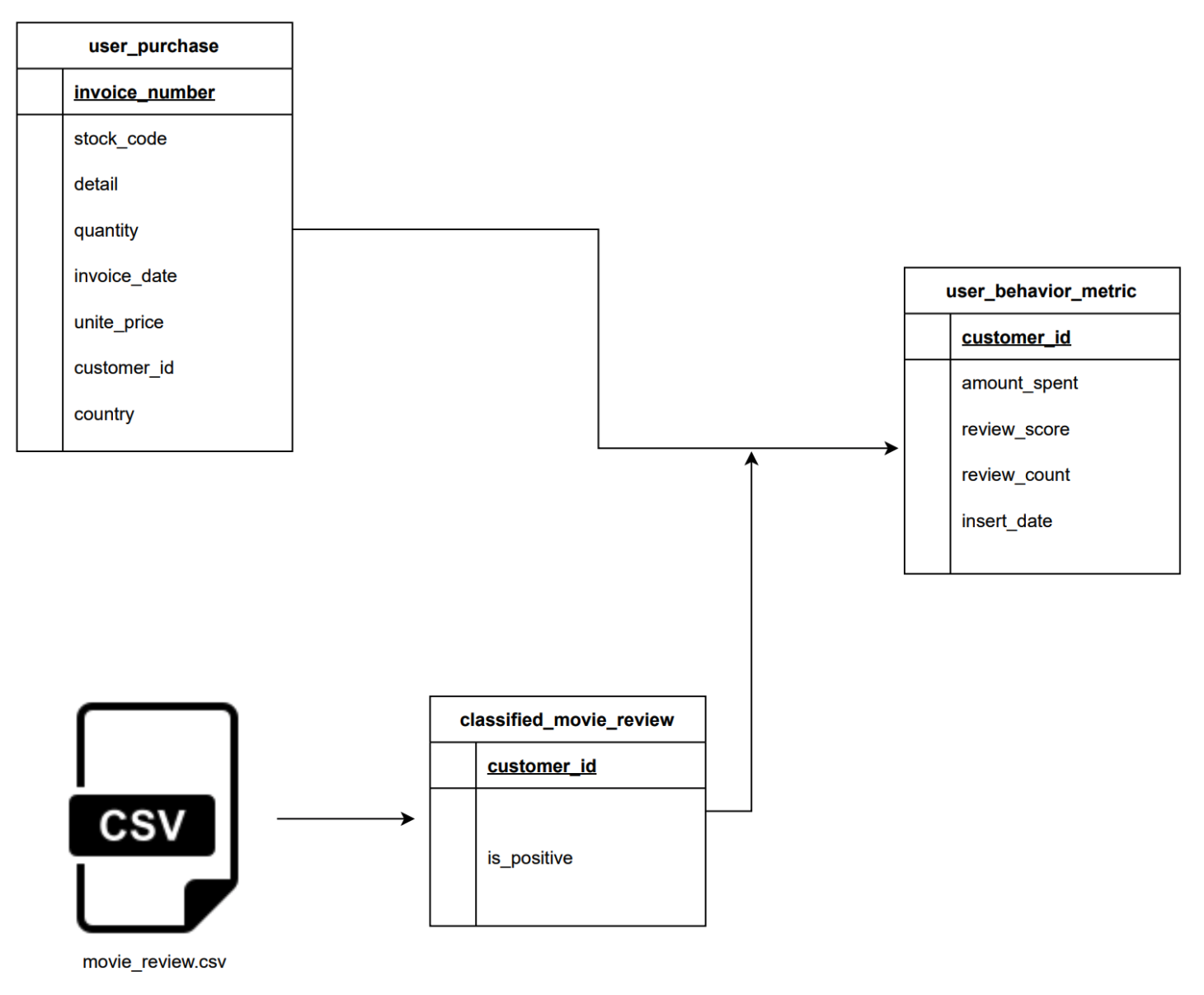

- user_behavior_metric: OLAP table with user purchase information

- movie_review.csv: Data sent every day by an external data vendor.

Source: https://www.startdataengineering.com/post/data-engineering-project-for-beginners-batch-edition/

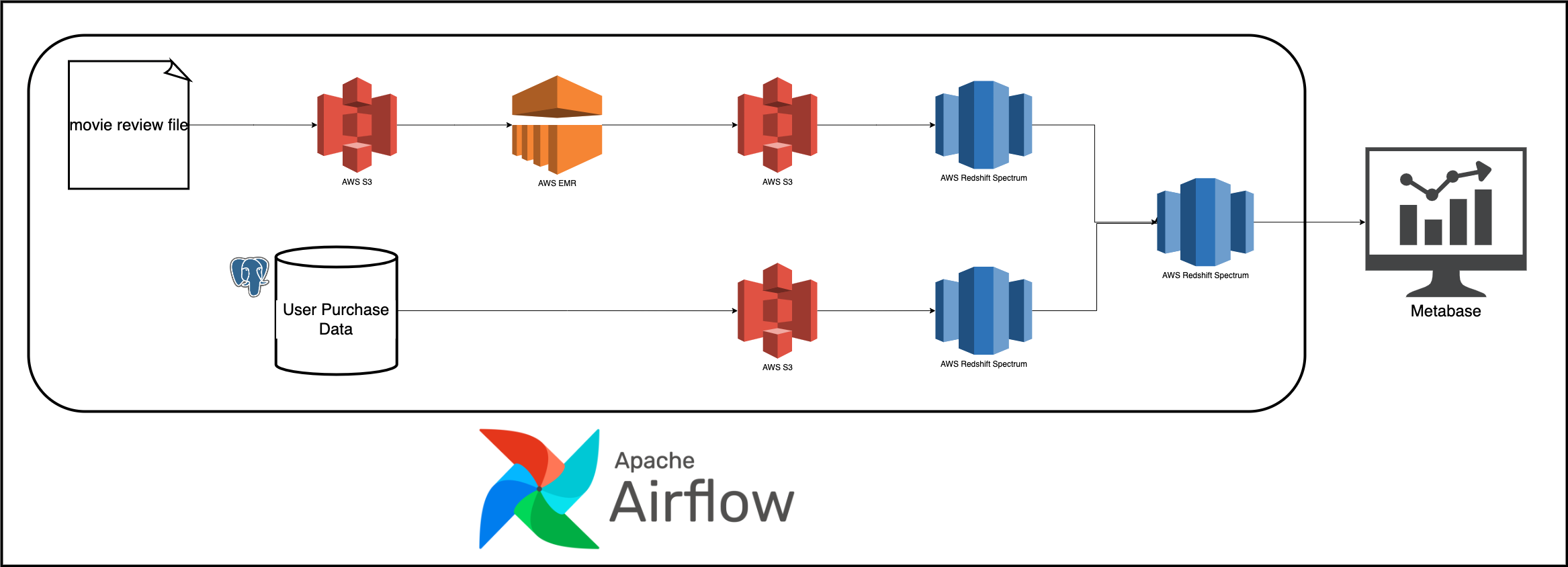

Design

- Airflow: data orchestration

- Spark: movie review classification

Source: https://www.startdataengineering.com/post/data-engineering-project-for-beginners-batch-edition/
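As a rough illustration of the review classification step, here is a minimal plain-Python sketch. It is not Spark and not the original project's code. The column names `cid` and `review_str`, the sample rows, and the rule "a review is positive if it contains the word good" are all assumptions made for this example.

```python
import csv
import io

# Hypothetical sample standing in for movie_review.csv; the column names
# `cid` and `review_str` are assumptions, not taken from these notes.
SAMPLE_CSV = """cid,review_str
1,A good movie with a strong cast
2,Too long and boring
3,Good soundtrack but a weak plot
"""


def classify_reviews(csv_text: str, keyword: str = "good") -> list[dict]:
    """Flag each review as positive when it contains `keyword` (case-insensitive)."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return [
        {
            "cid": int(row["cid"]),
            "positive_review": keyword in row["review_str"].lower(),
        }
        for row in rows
    ]


if __name__ == "__main__":
    for result in classify_reviews(SAMPLE_CSV):
        print(result)
```

In the real pipeline this logic would run as a Spark job scheduled by Airflow; the sketch only shows the shape of the input and output.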

local

The local environment is built with Docker from the following components.

- Airflow

- PostgreSQL

- Metabase

docker-compose.yaml

    version: '3'

    x-airflow-common:
      &airflow-common
      image: ${AIRFLOW_IMAGE_NAME:-apache/airflow:2.4.0}
      environment:
        &airflow-common-env
        AIRFLOW__CORE__EXECUTOR: LocalExecutor
        AIRFLOW__CORE__SQL_ALCHEMY_CONN: postgresql+psycopg2://airflow:airflow@postgres/airflow
        AIRFLOW__CORE__FERNET_KEY: ''
        AIRFLOW__CORE__DAGS_ARE_PAUSED_AT_CREATION: 'true'
        AIRFLOW__CORE__LOAD_EXAMPLES: 'false'
        AIRFLOW__API__AUTH_BACKEND: 'airflow.api.auth.backend.basic_auth'
        AIRFLOW_CONN_POSTGRES_DEFAULT: postgres://airflow:airflow@postgres:5432/airflow
        _PIP_ADDITIONAL_REQUIREMENTS: ${_PIP_ADDITIONAL_REQUIREMENTS:- black flake8 mypy isort moto[all] pytest pytest-mock apache-airflow-client}
      volumes:
        - ./dags:/opt/airflow/dags
        - ./logs:/opt/airflow/logs
        - ./plugins:/opt/airflow/plugins
        - ./test:/opt/airflow/test
        - ./data:/opt/airflow/data
        - ./temp:/opt/airflow/temp
      user: "${AIRFLOW_UID:-50000}:${AIRFLOW_GID:-50000}"
      depends_on:
        postgres:
          condition: service_healthy

    services:
      postgres:
        container_name: postgres
        image: postgres:14
        environment:
          POSTGRES_USER: airflow
          POSTGRES_PASSWORD: airflow
          POSTGRES_DB: airflow
        volumes:
          - ./data:/input_data
          - ./temp:/temp
          - ./pgsetup:/docker-entrypoint-initdb.d
        healthcheck:
          test: [ "CMD", "pg_isready", "-U", "airflow" ]
          interval: 5s
          retries: 5
        restart: always
        ports:
          - "5432:5432"

      airflow-webserver:
        <<: *airflow-common
        container_name: webserver
        command: webserver
        ports:
          - 8080:8080
        healthcheck:
          test:
            [
              "CMD",
              "curl",
              "--fail",
              "http://localhost:8080/health"
            ]
          interval: 10s
          timeout: 10s
          retries: 5
        restart: always

      airflow-scheduler:
        <<: *airflow-common
        container_name: scheduler
        command: scheduler
        healthcheck:
          test:
            [
              "CMD-SHELL",
              'airflow jobs check --job-type SchedulerJob --hostname "$${HOSTNAME}"'
            ]
          interval: 10s
          timeout: 10s
          retries: 5
        restart: always

      airflow-init:
        <<: *airflow-common
        command: version
        environment:
          <<: *airflow-common-env
          _AIRFLOW_DB_UPGRADE: 'true'
          _AIRFLOW_WWW_USER_CREATE: 'true'
          _AIRFLOW_WWW_USER_USERNAME: ${_AIRFLOW_WWW_USER_USERNAME:-airflow}
          _AIRFLOW_WWW_USER_PASSWORD: ${_AIRFLOW_WWW_USER_PASSWORD:-airflow}

      dashboard:
        image: metabase/metabase
        container_name: dashboard
        ports:
          - "3000:3000"

    volumes:
      postgres-db-volume:

run

compose-up

    docker compose up airflow-init && docker compose up --build -d

result

    $ docker ps
    CONTAINER ID   IMAGE                  COMMAND                  CREATED          STATUS                            PORTS                    NAMES
    a35bd2c328c5   apache/airflow:2.4.0   "/usr/bin/dumb-init …"   15 seconds ago   Up 4 seconds                      8080/tcp                 data-engineering-project-beginner-airflow-init-1
    6ac663363c8e   apache/airflow:2.4.0   "/usr/bin/dumb-init …"   15 seconds ago   Up 4 seconds (health: starting)   8080/tcp                 scheduler
    177ccec7cd0a   apache/airflow:2.4.0   "/usr/bin/dumb-init …"   15 seconds ago   Up 4 seconds (health: starting)   0.0.0.0:8080->8080/tcp   webserver
    ae88f198c979   postgres:14            "docker-entrypoint.s…"   15 seconds ago   Up 12 seconds (healthy)           0.0.0.0:5432->5432/tcp   postgres
    e5daa340c84f   metabase/metabase      "/app/run_metabase.sh"   16 seconds ago   Up 12 seconds                     0.0.0.0:3000->3000/tcp   dashboard
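Besides reading the `docker ps` output, one quick way to confirm the stack is reachable from the host is to probe the published ports. The following is a minimal sketch, assuming the services are published on localhost with the host ports from the compose file (5432, 8080, 3000). The helper names `port_is_open` and `check_services` are made up for this example.

```python
import socket

# Host ports published in docker-compose.yaml (postgres, webserver, dashboard).
SERVICE_PORTS = {"postgres": 5432, "webserver": 8080, "dashboard": 3000}


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution errors.
        return False


def check_services(ports: dict = SERVICE_PORTS, host: str = "localhost") -> dict:
    """Map each service name to whether its port accepts connections."""
    return {name: port_is_open(host, port) for name, port in ports.items()}


if __name__ == "__main__":
    for name, is_up in check_services().items():
        print(f"{name}: {'up' if is_up else 'down'}")
```

An open port only shows that something is listening; the `healthy` status reported by `docker ps` remains the better signal that Airflow itself has finished starting.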

compose-down

    docker compose down

AWS deploy

There goes my money...

AWS credentials / CLI

CLI and config setup

    $ sudo apt install awscli
    $ aws configure
    AWS Access Key ID [****************JHGE]:
    AWS Secret Access Key [****************oEr4]:
    Default region name [ap-northeast-2]:
    Default output format [json]:

setup

This part is complicated. I plan to go through it once more later.

See setup_infra.sh and the link below.

Result after the setup finished:

airflow

emr

metabase & redshift

Conclusion

This was hard... it looks like some AWS knowledge is needed.