What is Ingress?

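Ingress is a Kubernetes resource for routing network traffic from outside the cluster to Services inside it. It provides features such as host (domain)-based routing, path-based routing, TLS termination, and load balancing.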

What is an Ingress Controller?

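An Ingress Controller is the component that puts Ingress resources into effect; an Ingress object by itself does nothing. The controller runs inside the cluster, interprets the Ingress objects users create, and configures a proxy server with the corresponding routing rules.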

Let's look at how this works using the best-known implementation, the NGINX Ingress Controller (ingress-nginx).

Installing the Ingress Controller

The ingress controller is installed with a NodePort-type Service.

YAML file (Helm values)

cat <<EOT > ingress-nginx-values.yaml
controller:
  service:
    type: NodePort
    nodePorts:
      http: 30080
      https: 30443
  nodeSelector:
    kubernetes.io/hostname: "k3s-s"
  metrics:
    enabled: true
    serviceMonitor:
      enabled: true
EOT

Deploying ingress-nginx-controller

These are the deployed ingress-nginx-controller resources:

(⎈|default:N/A) root@k3s-s:~# kubectl get pod -n ingress -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx-controller-7c68d6f654-brpgm 1/1 Running 0 65s 192.168.10.10 k3s-s <none> <none>

(⎈|default:N/A) root@k3s-s:~# kubectl get svc,ep -n ingress -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/ingress-nginx-controller NodePort 10.10.200.211 <none> 80:30080/TCP,443:30443/TCP 77s app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

service/ingress-nginx-controller-admission ClusterIP 10.10.200.203 <none> 443/TCP 77s app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

service/ingress-nginx-controller-metrics ClusterIP 10.10.200.72 <none> 10254/TCP 77s app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

NAME ENDPOINTS AGE

endpoints/ingress-nginx-controller 192.168.10.10:443,192.168.10.10:80 77s

endpoints/ingress-nginx-controller-admission 192.168.10.10:8443 77s

endpoints/ingress-nginx-controller-metrics 192.168.10.10:10254 77s

(⎈|default:N/A) root@k3s-s:~# kc get svc -n ingress ingress-nginx-controller

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.10.200.157 <none> 80:30080/TCP,443:30443/TCP 12m

(⎈|default:N/A) root@k3s-s:~# kc describe svc -n ingress ingress-nginx-controller

Name: ingress-nginx-controller

Namespace: ingress

Labels: app.kubernetes.io/component=controller

app.kubernetes.io/instance=ingress-nginx

app.kubernetes.io/managed-by=Helm

app.kubernetes.io/name=ingress-nginx

app.kubernetes.io/part-of=ingress-nginx

app.kubernetes.io/version=1.11.2

helm.sh/chart=ingress-nginx-4.11.2

Annotations: meta.helm.sh/release-name: ingress-nginx

meta.helm.sh/release-namespace: ingress

Selector: app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

Type: NodePort

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.10.200.157

IPs: 10.10.200.157

Port: http 80/TCP

TargetPort: http/TCP

NodePort: http 30080/TCP

Endpoints: 172.16.0.4:80 #ingress-nginx-controller pod IP

Port: https 443/TCP

TargetPort: https/TCP

NodePort: https 30443/TCP

Endpoints: 172.16.0.4:443 #ingress-nginx-controller pod IP

Session Affinity: None

External Traffic Policy: Local

Events: <none>

How the Ingress Controller works

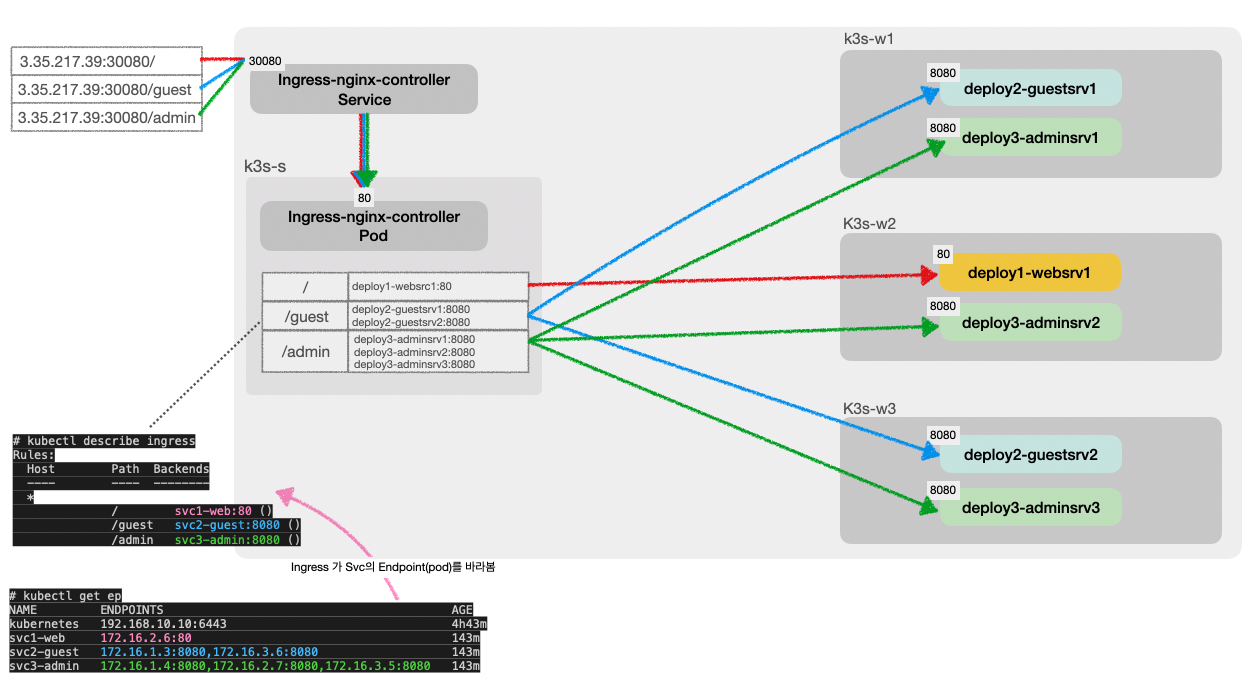

To observe the controller in action, deploy some Services, Deployments, and an Ingress.

Once ingress-nginx-controller is running, creating an Ingress makes the controller generate nginx.conf from it, so that NGINX can proxy the configured routes.

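When a request matches a path configured in nginx.conf, ingress-nginx-controller sends it directly to one of the Endpoints (Pod IP and port) of the matching Service, which it keeps track of by watching them, rather than going through the Service's ClusterIP.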

(⎈|default:N/A) root@k3s-s:~# kubectl get pod,svc,ep

NAME READY STATUS RESTARTS AGE

pod/deploy1-websrv-5c6b88bd77-r4b5k 1/1 Running 0 11m

pod/deploy2-guestsrv-649875f78b-2fl9v 1/1 Running 0 11m

pod/deploy2-guestsrv-649875f78b-w9s8q 1/1 Running 0 11m

pod/deploy3-adminsrv-7c8f8b8c87-5pf7g 1/1 Running 0 11m

pod/deploy3-adminsrv-7c8f8b8c87-76sjm 1/1 Running 0 11m

pod/deploy3-adminsrv-7c8f8b8c87-qq2fj 1/1 Running 0 11m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.10.200.1 <none> 443/TCP 151m

service/svc1-web ClusterIP 10.10.200.245 <none> 9001/TCP 11m

service/svc2-guest NodePort 10.10.200.148 <none> 9002:30832/TCP 11m

service/svc3-admin ClusterIP 10.10.200.241 <none> 9003/TCP 11m

NAME ENDPOINTS AGE

endpoints/kubernetes 192.168.10.10:6443 151m

endpoints/svc1-web 172.16.2.6:80 11m

endpoints/svc2-guest 172.16.1.3:8080,172.16.3.6:8080 11m

endpoints/svc3-admin 172.16.1.4:8080,172.16.2.7:8080,172.16.3.5:8080 11m

(⎈|default:N/A) root@k3s-s:~# kc describe ingress ingress-1

Host Path Backends

---- ---- --------

*

/ svc1-web:80 ()

/guest svc2-guest:8080 ()

/admin svc3-admin:8080 ()
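The Ingress backends above reference ports 80 and 8080, while the Services listen on 9001, 9002 and 9003; 80 and 8080 are their targetPorts (compare the ServicePort entries in the controller logs further down). Routing still works, which suggests this controller accepts a backend port that matches the Service's port number, its targetPort, or its port name. The sketch below models that matching rule with hypothetical simplified types; treat it as an assumption about the behavior, not the controller's code. The Ingress API defines backend.service.port as the Service's port, so other controllers may not accept a targetPort there.

```go
package main

import (
	"fmt"
	"strconv"
)

// ServicePort is a hypothetical, trimmed-down version of corev1.ServicePort.
type ServicePort struct {
	Name       string
	Port       int
	TargetPort string // a number or a named port, kept as a string
}

// matchServicePort returns the Service port that a backend port refers to,
// accepting the Service port number, the targetPort, or the port name.
func matchServicePort(ports []ServicePort, backendPort string) (ServicePort, bool) {
	for _, sp := range ports {
		if strconv.Itoa(sp.Port) == backendPort ||
			sp.TargetPort == backendPort ||
			sp.Name == backendPort {
			return sp, true
		}
	}
	return ServicePort{}, false
}

func main() {
	// Values taken from the svc1-web ServicePort in the controller logs.
	svc1 := []ServicePort{{Name: "web-port", Port: 9001, TargetPort: "80"}}
	sp, ok := matchServicePort(svc1, "80")
	fmt.Println(sp.Name, sp.Port, ok) // web-port 9001 true
}
```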

(⎈|default:N/A) root@k3s-s:~# kubectl exec deploy/ingress-nginx-controller -n ingress -it -- cat /etc/nginx/nginx.conf | grep location -A5

location = /guest {

set $namespace "default";

set $ingress_name "ingress-1";

set $service_name "svc2-guest";

set $service_port "8080";

set $location_path "/guest";

set $global_rate_limit_exceeding n;

rewrite_by_lua_block {

lua_ingress.rewrite({

force_ssl_redirect = false,

...

--

location = /admin {

set $namespace "default";

set $ingress_name "ingress-1";

set $service_name "svc3-admin";

set $service_port "8080";

set $location_path "/admin";

set $global_rate_limit_exceeding n;

rewrite_by_lua_block {

lua_ingress.rewrite({

force_ssl_redirect = false,

--

location / {

set $namespace "default";

set $ingress_name "ingress-1";

set $service_name "svc1-web";

set $service_port "80";

set $location_path "/";

set $global_rate_limit_exceeding n;

rewrite_by_lua_block {

lua_ingress.rewrite({

force_ssl_redirect = false,

Checking how ingress-nginx-controller tracks Service Endpoints

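The ingress-nginx-controller source shows that, at log verbosity level 3 (klog.V(3)), the controller logs each time it collects the Endpoints of a Service. The snippet below is from endpoints.go; in the 1.11.2 controller used here, a similar message comes from endpointslices.go, as the log output further down shows.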

// ingress-nginx/internal/ingress/controller/endpoints.go
func getEndpoints(s *corev1.Service, port *corev1.ServicePort, proto corev1.Protocol,
	getServiceEndpoints func(string) (*corev1.Endpoints, error)) []ingress.Endpoint {
	...
	klog.V(3).Infof("Getting Endpoints for Service %q and port %v", svcKey, port.String())
	...
}

Let's redeploy ingress-nginx-controller with log level 3 and check the logs.

(⎈|default:N/A) root@k3s-s:~# cat ingress-nginx-values.yaml
controller:
  service:
    type: NodePort
    nodePorts:
      http: 30080
      https: 30443
  nodeSelector:
    kubernetes.io/hostname: "k3s-s"
  metrics:
    enabled: true
    serviceMonitor:
      enabled: true
  extraArgs:  # added
    v: "3"    # added

(⎈|default:N/A) root@k3s-s:~# helm upgrade ingress-nginx ingress-nginx/ingress-nginx -f ingress-nginx-values.yaml --namespace ingress --version 4.11.2

(⎈|default:N/A) root@k3s-s:~# kubectl get pod -n ingress

NAME READY STATUS RESTARTS AGE

ingress-nginx-controller-5b8b86dff5-5stvf 1/1 Running 0 11m

(⎈|default:N/A) root@k3s-s:~# kubectl logs ingress-nginx-controller-5b8b86dff5-5stvf -n ingress

These lines show the controller creating an upstream for each Service and looking up that Service's Endpoints:

# default-svc1-web-80

I1012 03:21:29.189148 7 controller.go:1075] Creating upstream "default-svc1-web-80"

I1012 03:21:29.189160 7 controller.go:1190] Obtaining ports information for Service "default/svc1-web"

I1012 03:21:29.189175 7 endpointslices.go:79] Getting Endpoints from endpointSlices for Service "default/svc1-web" and port &ServicePort{Name:web-port,Protocol:TCP,Port:9001,TargetPort:{0 80 },NodePort:0,AppProtocol:nil,}

I1012 03:21:29.189188 7 endpointslices.go:166] Endpoints found for Service "default/svc1-web": [{172.16.2.6 80 &ObjectReference{Kind:Pod,Namespace:default,Name:deploy1-websrv-5c6b88bd77-r4b5k,UID:0792180e-010c-48d6-961b-282ca789ca58,APIVersion:,ResourceVersion:,FieldPath:,}}]

# default-svc2-guest-8080

I1012 03:21:29.189213 7 controller.go:1075] Creating upstream "default-svc2-guest-8080"

I1012 03:21:29.189218 7 controller.go:1190] Obtaining ports information for Service "default/svc2-guest"

I1012 03:21:29.189226 7 endpointslices.go:79] Getting Endpoints from endpointSlices for Service "default/svc2-guest" and port &ServicePort{Name:guest-port,Protocol:TCP,Port:9002,TargetPort:{0 8080 },NodePort:30832,AppProtocol:nil,}

I1012 03:21:29.189245 7 endpointslices.go:166] Endpoints found for Service "default/svc2-guest": [{172.16.3.6 8080 &ObjectReference{Kind:Pod,Namespace:default,Name:deploy2-guestsrv-649875f78b-2fl9v,UID:c485d507-259b-4dea-aa4e-d6f8c9e57557,APIVersion:,ResourceVersion:,FieldPath:,}} {172.16.1.3 8080 &ObjectReference{Kind:Pod,Namespace:default,Name:deploy2-guestsrv-649875f78b-w9s8q,UID:cf983782-0a43-4f5b-911a-ff8838d94801,APIVersion:,ResourceVersion:,FieldPath:,}}]

# default-svc3-admin-8080

I1012 03:21:29.189258 7 controller.go:1075] Creating upstream "default-svc3-admin-8080"

I1012 03:21:29.189263 7 controller.go:1190] Obtaining ports information for Service "default/svc3-admin"

I1012 03:21:29.189270 7 endpointslices.go:79] Getting Endpoints from endpointSlices for Service "default/svc3-admin" and port &ServicePort{Name:admin-port,Protocol:TCP,Port:9003,TargetPort:{0 8080 },NodePort:0,AppProtocol:nil,}

I1012 03:21:29.189279 7 endpointslices.go:166] Endpoints found for Service "default/svc3-admin": [{172.16.3.5 8080 &ObjectReference{Kind:Pod,Namespace:default,Name:deploy3-adminsrv-7c8f8b8c87-76sjm,UID:789812d4-ee0a-4e5b-9e79-62b495bdb40c,APIVersion:,ResourceVersion:,FieldPath:,}} {172.16.1.4 8080 &ObjectReference{Kind:Pod,Namespace:default,Name:deploy3-adminsrv-7c8f8b8c87-5pf7g,UID:dfb70e2a-549d-499f-8435-a625b20b7889,APIVersion:,ResourceVersion:,FieldPath:,}} {172.16.2.7 8080 &ObjectReference{Kind:Pod,Namespace:default,Name:deploy3-adminsrv-7c8f8b8c87-qq2fj,UID:5a511298-8b6e-433a-a33c-2e1898d7f5e0,APIVersion:,ResourceVersion:,FieldPath:,}}]

The next lines show ingress-nginx-controller routing each path (/, /guest, /admin) to its upstream, based on the deployed Ingress. The / location already existed (pointing at upstream-default-backend), so it is replaced, while /guest and /admin are added.

I1012 03:21:29.189307 7 controller.go:811] Replacing location "/" for server "_" with upstream "upstream-default-backend" to use upstream "default-svc1-web-80" (Ingress "default/ingress-1")

I1012 03:21:29.189328 7 controller.go:831] Adding location "/guest" for server "_" with upstream "default-svc2-guest-8080" (Ingress "default/ingress-1")

I1012 03:21:29.189340 7 controller.go:831] Adding location "/admin" for server "_" with upstream "default-svc3-admin-8080" (Ingress "default/ingress-1")

Endpoint watch permissions of the Ingress Controller

The controller can track Service endpoints because its ClusterRole, ingress-nginx, grants it list/watch permission on endpoints and endpointslices.discovery.k8s.io.
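RBAC is purely additive: a request is allowed as soon as any rule lists both the resource and the verb, and there are no deny rules. The Go sketch below checks a few of the ingress-nginx ClusterRole rules from the output that follows. The Rule type and allows function are illustrative only, not client-go APIs, and the sketch ignores API groups, resource names, and wildcards.

```go
package main

import "fmt"

// Rule is an illustrative subset of an RBAC PolicyRule.
type Rule struct {
	Resources []string
	Verbs     []string
}

func contains(list []string, s string) bool {
	for _, v := range list {
		if v == s {
			return true
		}
	}
	return false
}

// allows reports whether any rule grants verb on resource.
// RBAC has no deny rules, so one matching rule is enough.
func allows(rules []Rule, resource, verb string) bool {
	for _, r := range rules {
		if contains(r.Resources, resource) && contains(r.Verbs, verb) {
			return true
		}
	}
	return false
}

func main() {
	// A few rules from the ingress-nginx ClusterRole shown below.
	rules := []Rule{
		{Resources: []string{"endpointslices.discovery.k8s.io"}, Verbs: []string{"list", "watch", "get"}},
		{Resources: []string{"endpoints"}, Verbs: []string{"list", "watch"}},
		{Resources: []string{"services"}, Verbs: []string{"get", "list", "watch"}},
	}
	fmt.Println(allows(rules, "endpointslices.discovery.k8s.io", "watch")) // true
	fmt.Println(allows(rules, "endpoints", "get"))                         // false
}
```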

(⎈|default:N/A) root@k3s-s:~# kc describe clusterroles ingress-nginx

Name: ingress-nginx

Labels: app.kubernetes.io/instance=ingress-nginx

app.kubernetes.io/managed-by=Helm

app.kubernetes.io/name=ingress-nginx

app.kubernetes.io/part-of=ingress-nginx

app.kubernetes.io/version=1.11.2

helm.sh/chart=ingress-nginx-4.11.2

Annotations: meta.helm.sh/release-name: ingress-nginx

meta.helm.sh/release-namespace: ingress

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

--------- ----------------- -------------- -----

events [] [] [create patch]

services [] [] [get list watch]

ingressclasses.networking.k8s.io [] [] [get list watch]

ingresses.networking.k8s.io [] [] [get list watch]

nodes [] [] [list watch get]

endpointslices.discovery.k8s.io [] [] [list watch get]

configmaps [] [] [list watch]

endpoints [] [] [list watch]

namespaces [] [] [list watch]

pods [] [] [list watch]

secrets [] [] [list watch]

leases.coordination.k8s.io [] [] [list watch]

ingresses.networking.k8s.io/status [] [] [update]

In short, ingress-nginx-controller configures traffic routing from the settings written in the Ingress, watches the Endpoints of each backend Service, and sends traffic directly to those Endpoints.

Testing access through the Ingress

By default, ingress-nginx-controller balances requests across a Service's endpoints with round robin.
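Round robin hands each new request to the next endpoint in the list and wraps around at the end. The minimal Go sketch below (the RoundRobin type and distribute helper are hypothetical; ingress-nginx does its balancing in Lua inside NGINX) reproduces the kind of split seen in the tests that follow: 50/50 over two guest Pods and 34/33/33 over three admin Pods for 100 requests.

```go
package main

import "fmt"

// RoundRobin cycles through a fixed list of endpoints.
type RoundRobin struct {
	endpoints []string
	next      int
}

// Pick returns the next endpoint and advances the cursor.
func (rr *RoundRobin) Pick() string {
	ep := rr.endpoints[rr.next]
	rr.next = (rr.next + 1) % len(rr.endpoints)
	return ep
}

// distribute sends n requests and counts how many each endpoint received.
func distribute(endpoints []string, n int) map[string]int {
	rr := &RoundRobin{endpoints: endpoints}
	counts := make(map[string]int)
	for i := 0; i < n; i++ {
		counts[rr.Pick()]++
	}
	return counts
}

func main() {
	// svc3-admin endpoints from the kubectl output above.
	admin := []string{"172.16.1.4:8080", "172.16.2.7:8080", "172.16.3.5:8080"}
	fmt.Println(distribute(admin, 100))
	// map[172.16.1.4:8080:34 172.16.2.7:8080:33 172.16.3.5:8080:33]
}
```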

Let's call the backend Services through the paths configured in the Ingress.

(⎈|default:N/A) root@k3s-s:~# MYIP=$(curl -s ipinfo.io/ip)

(⎈|default:N/A) root@k3s-s:~# echo $MYIP

3.35.217.39

ggyul 🐵

# MYIP=3.35.217.39

ggyul 🐵

# curl -s $MYIP:30080

...

<title>Welcome to nginx!</title>

ggyul 🐵

# curl -s $MYIP:30080/guest

Hello Kubernetes bootcamp! | Running on: deploy2-guestsrv-649875f78b-w9s8q | v=1

ggyul 🐵

# for i in {1..100}; do curl -s $MYIP:30080/guest ; done | sort | uniq -c | sort -nr

50 Hello Kubernetes bootcamp! | Running on: deploy2-guestsrv-649875f78b-w9s8q | v=1

50 Hello Kubernetes bootcamp! | Running on: deploy2-guestsrv-649875f78b-2fl9v | v=1

ggyul 🐵

# curl -s $MYIP:30080/admin

Hostname: deploy3-adminsrv-7c8f8b8c87-76sjm

Pod Information:

-no pod information available-

Server values:

server_version=nginx: 1.13.0 - lua: 10008

Request Information:

client_address=172.16.0.7

method=GET

real path=/admin

query=

request_version=1.1

request_uri=http://3.35.217.39:8080/admin

Request Headers:

accept=*/*

host=3.35.217.39:30080

user-agent=curl/7.79.1

x-forwarded-for=175.116.31.155

x-forwarded-host=3.35.217.39:30080

x-forwarded-port=80

x-forwarded-proto=http

x-forwarded-scheme=http

x-real-ip=175.116.31.155

x-request-id=63a5cdff6192f4430ba75ec92ad418bd

x-scheme=http

Request Body:

-no body in request-

ggyul 🐵

# for i in {1..100}; do curl -s $MYIP:30080/admin | grep Hostname ; done | sort | uniq -c | sort -nr

34 Hostname: deploy3-adminsrv-7c8f8b8c87-qq2fj

33 Hostname: deploy3-adminsrv-7c8f8b8c87-76sjm

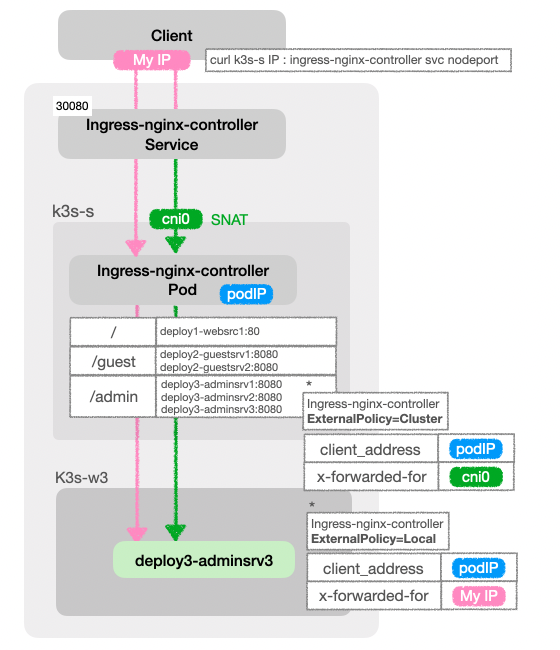

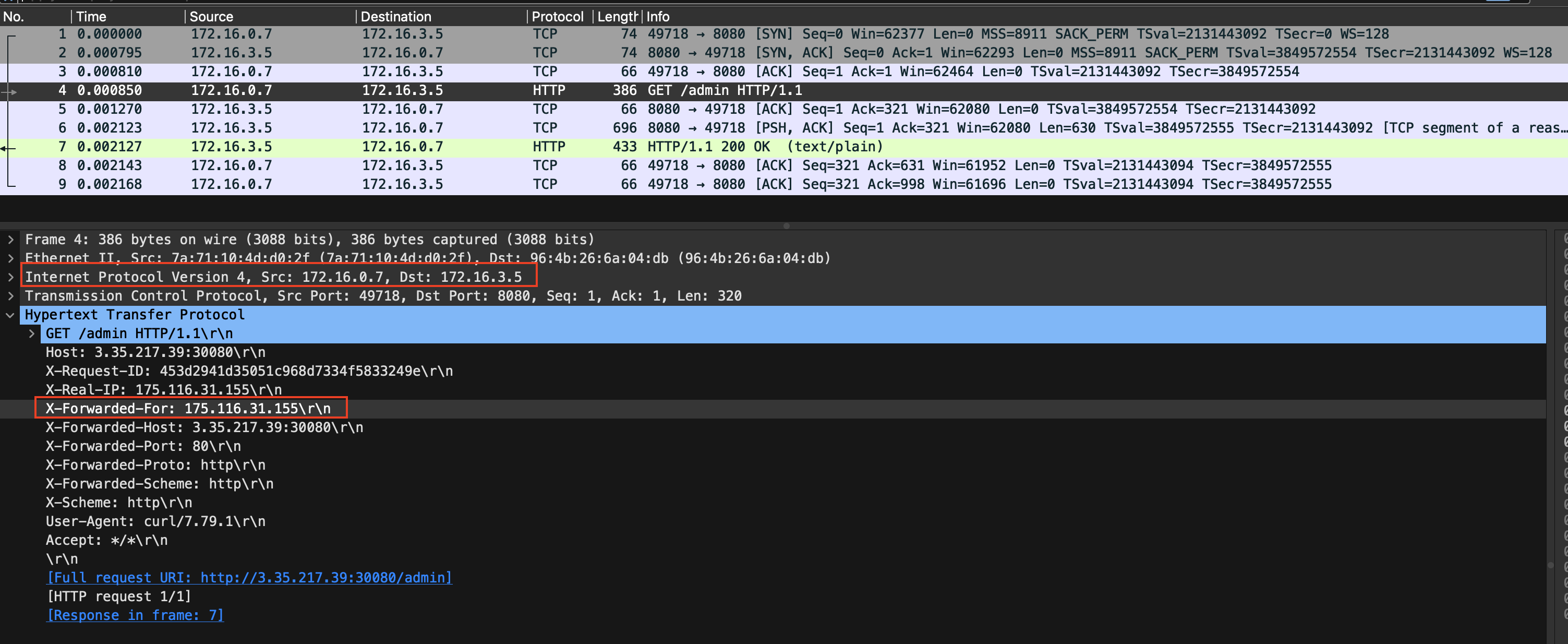

33 Hostname: deploy3-adminsrv-7c8f8b8c87-5pf7g/admin 으로 호출을 하였을 때, client_address와 x-forwarded-for값을 확인할 수 있다.

- ingress-nginx-controller 서비스에 externalTrafficPolicy를 local로 설정했을 경우 client_address는 ingress-nginx-controller pod ip이고, x-forwarded-for은 호출을 하는 Client IP이다.

- ingress-nginx-controller 서비스에 externalTrafficPolicy를 default로 설정했을 경우 client_address는 ingress-nginx-controller pod ip이고, x-forwarded-for은 Control-Plane의 cni0이다.

(⎈|default:N/A) root@k3s-s:~# kubectl get pod -n ingress -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx-controller-5b8b86dff5-5stvf 1/1 Running 0 144m 172.16.0.7 k3s-s <none> <none>

ggyul 🐵

# curl -s $MYIP:30080/admin | egrep '(client_address|x-forwarded-for)'

client_address=172.16.0.7

x-forwarded-for=175.116.31.155

(⎈|default:N/A) root@k3s-s:~# kubectl patch svc -n ingress ingress-nginx-controller -p '{"spec":{"externalTrafficPolicy": "Cluster"}}'

service/ingress-nginx-controller patched

ggyul 🐵

# curl -s $MYIP:30080/admin | egrep '(client_address|x-forwarded-for)'

client_address=172.16.0.7

x-forwarded-for=172.16.0.1

- Because ingress-nginx-controller acts as a proxy between the client and the backend Service, the backend sees the controller as the sender of the request, so client_address is the ingress-nginx-controller pod IP.

- For x-forwarded-for: when the ingress-nginx-controller Service has externalTrafficPolicy: Local, a request arriving at k3s-s is delivered only to the ingress-nginx-controller pod on that node. It is not SNATed, the original source IP is preserved, and the IP of the calling client is returned.

- When externalTrafficPolicy is Cluster, the request is SNATed on k3s-s, so the returned address belongs to k3s-s instead: here 172.16.0.1, the node's cni0 bridge IP.
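A reverse proxy fills X-Forwarded-For from the source address of the connection it accepts, so whatever SNAT did to that address is what the backend ends up seeing. Go's net/http/httputil.ReverseProxy, for example, appends that address to any X-Forwarded-For value already present; nginx's exact handling depends on its configuration. The sketch below reimplements just the Go append rule (forwardedFor is a hypothetical helper) using the addresses from the two tests above.

```go
package main

import (
	"fmt"
	"net"
	"strings"
)

// forwardedFor returns the X-Forwarded-For value a proxy would send upstream,
// given the header it received (possibly empty) and the connection's remote
// address ("ip:port"). It mirrors the append rule of httputil.ReverseProxy.
func forwardedFor(prior, remoteAddr string) (string, error) {
	ip, _, err := net.SplitHostPort(remoteAddr)
	if err != nil {
		return "", err
	}
	if strings.TrimSpace(prior) == "" {
		return ip, nil
	}
	return prior + ", " + ip, nil
}

func main() {
	// externalTrafficPolicy: Local -> the source address is the real client.
	local, _ := forwardedFor("", "175.116.31.155:63439")
	// externalTrafficPolicy: Cluster -> the source was SNATed to k3s-s's cni0 IP
	// (the source port here is illustrative).
	cluster, _ := forwardedFor("", "172.16.0.1:41522")
	fmt.Println(local)   // 175.116.31.155
	fmt.Println(cluster) // 172.16.0.1
}
```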

Packet capture

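Next, check the IP addresses used when the controller talks to the backend Pods over flannel VXLAN. The capture runs on vetha126644e, the host-side veth of the ingress-nginx-controller pod, filtered on backend port 8080; the pcap file is then copied to the local machine for analysis.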

(⎈|default:N/A) root@k3s-s:~# tcpdump -i vetha126644e tcp port 8080 -w /tmp/ingress-nginx.pcap

ggyul 🐵

# curl -s $MYIP:30080/admin

ggyul 🐵

# sftp -i kp-bgr.pem ubuntu@$MYIP

Connected to 3.35.217.39.

sftp> get /tmp/ingress-nginx.pcap

Fetching /tmp/ingress-nginx.pcap to ingress-nginx.pcap

/tmp/ingress-nginx.pcap 100% 2095 201.2KB/s 00:00

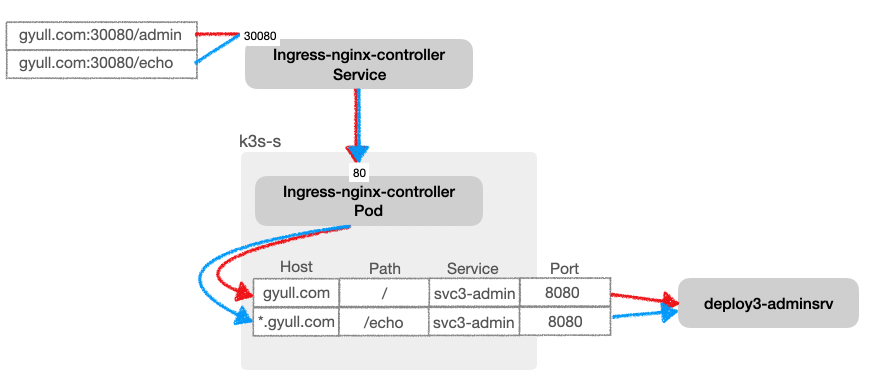

Host-based routing

yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-2
spec:
  ingressClassName: nginx
  rules:
  - host: gyull.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: svc3-admin
            port:
              number: 8080
  - host: "*.gyull.com"
    http:
      paths:
      - path: /echo
        pathType: Prefix
        backend:
          service:
            name: svc3-admin
            port:
              number: 8080

Checking the resources

(⎈|default:N/A) root@k3s-s:~# kubectl describe ingress ingress-2

Name: ingress-2

...

Rules:

Host Path Backends

---- ---- --------

gyull.com

/ svc3-admin:8080 ()

*.gyull.com

/echo svc3-admin:8080 ()

...

Access check
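Before sending requests, it helps to recall how the two rules are matched. Per the Kubernetes Ingress documentation, a wildcard host such as *.gyull.com matches exactly one extra DNS label (test.gyull.com, but not gyull.com or a.b.gyull.com), and pathType: Prefix compares whole /-separated path elements, so /echo covers /echo and /echo/5 but not /echoo. The Go sketch below encodes those two rules; hostMatches, prefixMatches, and route are hypothetical helpers, not controller code, and "200" simply means some rule matched.

```go
package main

import (
	"fmt"
	"strings"
)

// hostMatches applies Ingress host matching: an exact match, or a wildcard
// "*.example.com" that covers exactly one additional DNS label.
func hostMatches(ruleHost, host string) bool {
	if !strings.HasPrefix(ruleHost, "*.") {
		return ruleHost == host
	}
	suffix := ruleHost[1:] // e.g. ".gyull.com"
	if !strings.HasSuffix(host, suffix) {
		return false
	}
	label := strings.TrimSuffix(host, suffix)
	return label != "" && !strings.Contains(label, ".")
}

// prefixMatches applies pathType: Prefix, comparing whole path elements.
func prefixMatches(rulePath, path string) bool {
	if rulePath == "/" {
		return true
	}
	rulePath = strings.TrimSuffix(rulePath, "/")
	return path == rulePath || strings.HasPrefix(path, rulePath+"/")
}

type rule struct{ host, path string }

// route returns the index of the first matching rule, or -1 (a 404).
func route(rules []rule, host, path string) int {
	for i, r := range rules {
		if hostMatches(r.host, host) && prefixMatches(r.path, path) {
			return i
		}
	}
	return -1
}

func main() {
	rules := []rule{{"gyull.com", "/"}, {"*.gyull.com", "/echo"}}
	for _, host := range []string{"gyull.com", "test.gyull.com"} {
		for _, p := range []string{"/", "/gyull", "/echo", "/echo/5"} {
			status := 404
			if route(rules, host, p) >= 0 {
				status = 200 // assumes the backend itself answers 200
			}
			fmt.Println(host, p, status)
		}
	}
}
```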

Setting up the domains

ggyul 🐵

# MYDOMAIN1=gyull.com

ggyul 🐵

# MYDOMAIN2=test.gyull.com

ggyul 🐵

# echo $MYIP $MYDOMAIN1 $MYDOMAIN2

3.35.217.39 gyull.com test.gyull.com

ggyul 🐵

# echo "$MYIP $MYDOMAIN1" | sudo tee -a /etc/hosts

echo "$MYIP $MYDOMAIN2" | sudo tee -a /etc/hosts

Accessing MYDOMAIN1

ggyul 🐵

# curl -o /dev/null -s -w "%{http_code}\n" $MYDOMAIN1:30080/

200

ggyul 🐵

# curl -o /dev/null -s -w "%{http_code}\n" $MYDOMAIN1:30080/gyull

200

ggyul 🐵

# curl -o /dev/null -s -w "%{http_code}\n" $MYDOMAIN1:30080/echo

200

ggyul 🐵

# curl -o /dev/null -s -w "%{http_code}\n" $MYDOMAIN1:30080/echo/5

200

Accessing MYDOMAIN2

ggyul 🐵

# curl -o /dev/null -s -w "%{http_code}\n" $MYDOMAIN2:30080/

404

ggyul 🐵

# curl -o /dev/null -s -w "%{http_code}\n" $MYDOMAIN2:30080/gyull

404

ggyul 🐵

# curl -o /dev/null -s -w "%{http_code}\n" $MYDOMAIN2:30080/echo

200

ggyul 🐵

# curl -o /dev/null -s -w "%{http_code}\n" $MYDOMAIN2:30080/echo/5

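200

gyull.com matches the first rule (/ with pathType: Prefix), so every path returns 200. test.gyull.com matches only *.gyull.com, which routes only /echo and its subpaths, so / and /gyull return 404.

Canary deployment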

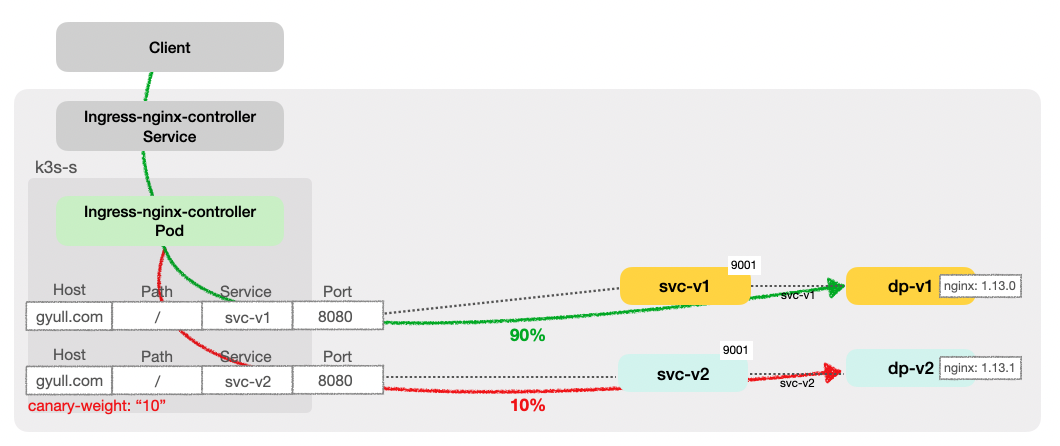

In the NGINX Ingress Controller, canary deployment is implemented through Ingress annotations. Traffic is split dynamically between the existing Service and a new canary Service, which makes rolling out a new version safer and less risky.

Code that turns a canary Ingress into an upstream

The ingress-nginx code that recognizes a canary Ingress and creates its upstream is shown below.

Parsing the Ingress → returning the canary config

// ingress-nginx/internal/ingress/annotations/canary/main.go
const (
	canaryAnnotation                = "canary"
	canaryWeightAnnotation          = "canary-weight" // the canary-weight annotation
	canaryWeightTotalAnnotation     = "canary-weight-total"
	canaryByHeaderAnnotation        = "canary-by-header"
	canaryByHeaderValueAnnotation   = "canary-by-header-value"
	canaryByHeaderPatternAnnotation = "canary-by-header-pattern"
	canaryByCookieAnnotation        = "canary-by-cookie"
)

func (c canary) Parse(ing *networking.Ingress) (interface{}, error) {
	...
	// Read the canary-weight annotation value into config.
	config.Weight, err = parser.GetIntAnnotation(canaryWeightAnnotation, ing, c.annotationConfig.Annotations)
	...
	// WeightTotal defaults to 100.
	config.WeightTotal, err = parser.GetIntAnnotation(canaryWeightTotalAnnotation, ing, c.annotationConfig.Annotations)
	if err != nil {
		if errors.IsValidationError(err) {
			klog.Warningf("%s is invalid, defaulting to '100'", canaryWeightTotalAnnotation)
		}
		config.WeightTotal = 100
	}
	...
}

Creating the upstream

// ingress-nginx/internal/ingress/controller/controller.go
func (n *NGINXController) createUpstreams(data []*ingress.Ingress, du *ingress.Backend) map[string]*ingress.Backend {
	...
	// configure traffic shaping for canary
	if anns.Canary.Enabled {
		upstreams[defBackend].NoServer = true
		// Attach a traffic-shaping policy built from the parsed canary settings (weight, etc.) to this upstream.
		upstreams[defBackend].TrafficShapingPolicy = newTrafficShapingPolicy(&anns.Canary)
	}
	...
}

// newTrafficShapingPolicy creates new ingress.TrafficShapingPolicy instance using canary configuration
func newTrafficShapingPolicy(cfg *canary.Config) ingress.TrafficShapingPolicy {
	// Copy the parsed weight and header/cookie rules into the policy.
	return ingress.TrafficShapingPolicy{
		Weight:        cfg.Weight,
		WeightTotal:   cfg.WeightTotal,
		Header:        cfg.Header,
		HeaderValue:   cfg.HeaderValue,
		HeaderPattern: cfg.HeaderPattern,
		Cookie:        cfg.Cookie,
	}
}

Checking the resources

(⎈|default:N/A) root@k3s-s:~# kubectl get svc,ep,pod

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.10.200.1 <none> 443/TCP 5m51s

service/svc-v1 ClusterIP 10.10.200.183 <none> 9001/TCP 5m18s

service/svc-v2 ClusterIP 10.10.200.225 <none> 9001/TCP 5m18s

NAME ENDPOINTS AGE

endpoints/kubernetes 192.168.10.10:6443 5m51s

endpoints/svc-v1 172.16.1.5:8080,172.16.2.11:8080,172.16.3.9:8080 5m18s

endpoints/svc-v2 172.16.1.6:8080,172.16.2.10:8080,172.16.3.10:8080 5m18s

NAME READY STATUS RESTARTS AGE

pod/dp-v1-8684d45558-fzdt4 1/1 Running 0 5m18s

pod/dp-v1-8684d45558-gvxt9 1/1 Running 0 5m18s

pod/dp-v1-8684d45558-k2qfg 1/1 Running 0 5m18s

pod/dp-v2-7757c4bdc-8kf24 1/1 Running 0 5m18s

pod/dp-v2-7757c4bdc-96m7j 1/1 Running 0 5m18s

pod/dp-v2-7757c4bdc-fwx54 1/1 Running 0 5m18s

(⎈|default:N/A) root@k3s-s:~# for pod in $(kubectl get pod -o wide -l app=svc-v1 |awk 'NR>1 {print $6}'); do curl -s $pod:8080 | egrep '(Hostname|nginx)'; done

Hostname: dp-v1-8684d45558-fzdt4

server_version=nginx: 1.13.0 - lua: 10008

Hostname: dp-v1-8684d45558-gvxt9

server_version=nginx: 1.13.0 - lua: 10008

Hostname: dp-v1-8684d45558-k2qfg

server_version=nginx: 1.13.0 - lua: 10008

(⎈|default:N/A) root@k3s-s:~# for pod in $(kubectl get pod -o wide -l app=svc-v2 |awk 'NR>1 {print $6}'); do curl -s $pod:8080 | egrep '(Hostname|nginx)'; done

Hostname: dp-v2-7757c4bdc-8kf24

server_version=nginx: 1.13.1 - lua: 10008

Hostname: dp-v2-7757c4bdc-96m7j

server_version=nginx: 1.13.1 - lua: 10008

Hostname: dp-v2-7757c4bdc-fwx54

server_version=nginx: 1.13.1 - lua: 10008

Canary access test

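With a total weight of 100, about 90% of the traffic goes to the Pods behind ingress-canary-v1 (nginx 1.13.0) and about 10% to the Pods behind ingress-canary-v2 (nginx 1.13.1). The split is statistical rather than exact: 100 requests happened to split exactly 90/10, while 1,000 requests split 876/124.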

ggyul 🐵

# for i in {1..100}; do curl -s $MYDOMAIN1:30080 | grep nginx ; done | sort | uniq -c | sort -nr

90 server_version=nginx: 1.13.0 - lua: 10008

10 server_version=nginx: 1.13.1 - lua: 10008

ggyul 🐵

# for i in {1..1000}; do curl -s $MYDOMAIN1:30080 | grep nginx ; done | sort | uniq -c | sort -nr

876 server_version=nginx: 1.13.0 - lua: 10008

124 server_version=nginx: 1.13.1 - lua: 10008

HTTPS handling

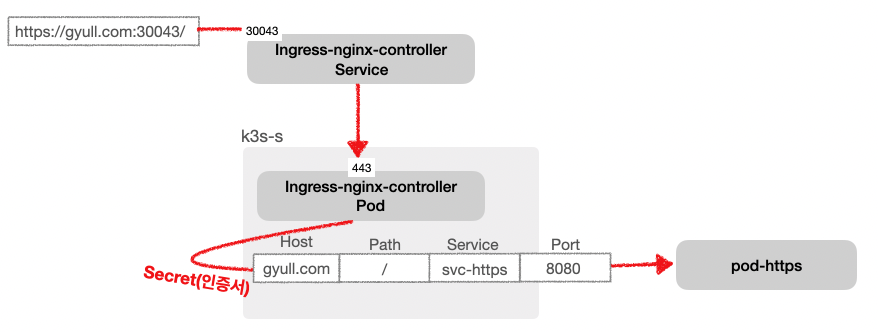

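HTTPS is enabled by referencing a TLS Secret (certificate and key) from the Ingress's spec.tls section; the controller then terminates TLS for the listed hosts.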
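TLS termination means the proxy decrypts HTTPS with the Secret's certificate and forwards plain HTTP to the backend; since no backend-protocol annotation is set here, svc-https on port 8080 is reached over HTTP. The Go sketch below reproduces that shape with net/http/httptest: a TLS listener, whose built-in self-signed test certificate stands in for secret-https, placed in front of a plain HTTP backend via httputil.ReverseProxy. It illustrates the pattern only; it is not how ingress-nginx is implemented, and startEdge and fetch are hypothetical helpers.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"net/http/httputil"
	"net/url"
)

// startEdge starts a plain HTTP backend and a TLS-terminating reverse proxy
// in front of it. It returns the proxy server and a cleanup function.
func startEdge() (*httptest.Server, func()) {
	backend := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		// r.TLS is nil here: the backend only ever sees plain HTTP.
		fmt.Fprintf(w, "backend saw TLS=%v", r.TLS != nil)
	}))
	target, _ := url.Parse(backend.URL) // httptest URLs always parse
	proxy := httptest.NewTLSServer(httputil.NewSingleHostReverseProxy(target))
	return proxy, func() {
		proxy.Close()
		backend.Close()
	}
}

// fetch sends one HTTPS request through the proxy and returns the body.
func fetch(proxy *httptest.Server) (string, error) {
	// proxy.Client() trusts the proxy's self-signed test certificate.
	resp, err := proxy.Client().Get(proxy.URL + "/")
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	return string(body), err
}

func main() {
	proxy, stop := startEdge()
	defer stop()
	body, err := fetch(proxy)
	if err != nil {
		panic(err)
	}
	fmt.Println(body) // backend saw TLS=false
}
```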

yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: https
spec:
  ingressClassName: nginx
  tls:
  - hosts:
    - gyull.com
    secretName: secret-https
  rules:
  - host: gyull.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: svc-https
            port:
              number: 8080

Checking the resources

(⎈|default:N/A) root@k3s-s:~# kubectl describe ingress

Name: https

Labels: <none>

Namespace: default

Address: 10.10.200.170

Ingress Class: nginx

Default backend: <default>

TLS:

secret-https terminates gyull.com

Rules:

Host Path Backends

---- ---- --------

gyull.com

/ svc-https:8080 (172.16.3.11:8080)

(⎈|default:N/A) root@k3s-s:~# kubectl get svc,ep,pod

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.10.200.1 <none> 443/TCP 3m11s

service/svc-https ClusterIP 10.10.200.182 <none> 8080/TCP 3m4s

NAME ENDPOINTS AGE

endpoints/kubernetes 192.168.10.10:6443 3m11s

endpoints/svc-https 172.16.3.11:8080 3m4s

NAME READY STATUS RESTARTS AGE

pod/pod-https 1/1 Running 0 3m4s

Creating the certificate

(⎈|default:N/A) root@k3s-s:~# MYDOMAIN1=gyull.com

(⎈|default:N/A) root@k3s-s:~# openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=$MYDOMAIN1/O=$MYDOMAIN1"

(⎈|default:N/A) root@k3s-s:~# tree

.

├...

├── tls.crt

└── tls.key

(⎈|default:N/A) root@k3s-s:~# kubectl create secret tls secret-https --key tls.key --cert tls.crt

secret/secret-https created

(⎈|default:N/A) root@k3s-s:~# kubectl get secrets secret-https

NAME TYPE DATA AGE

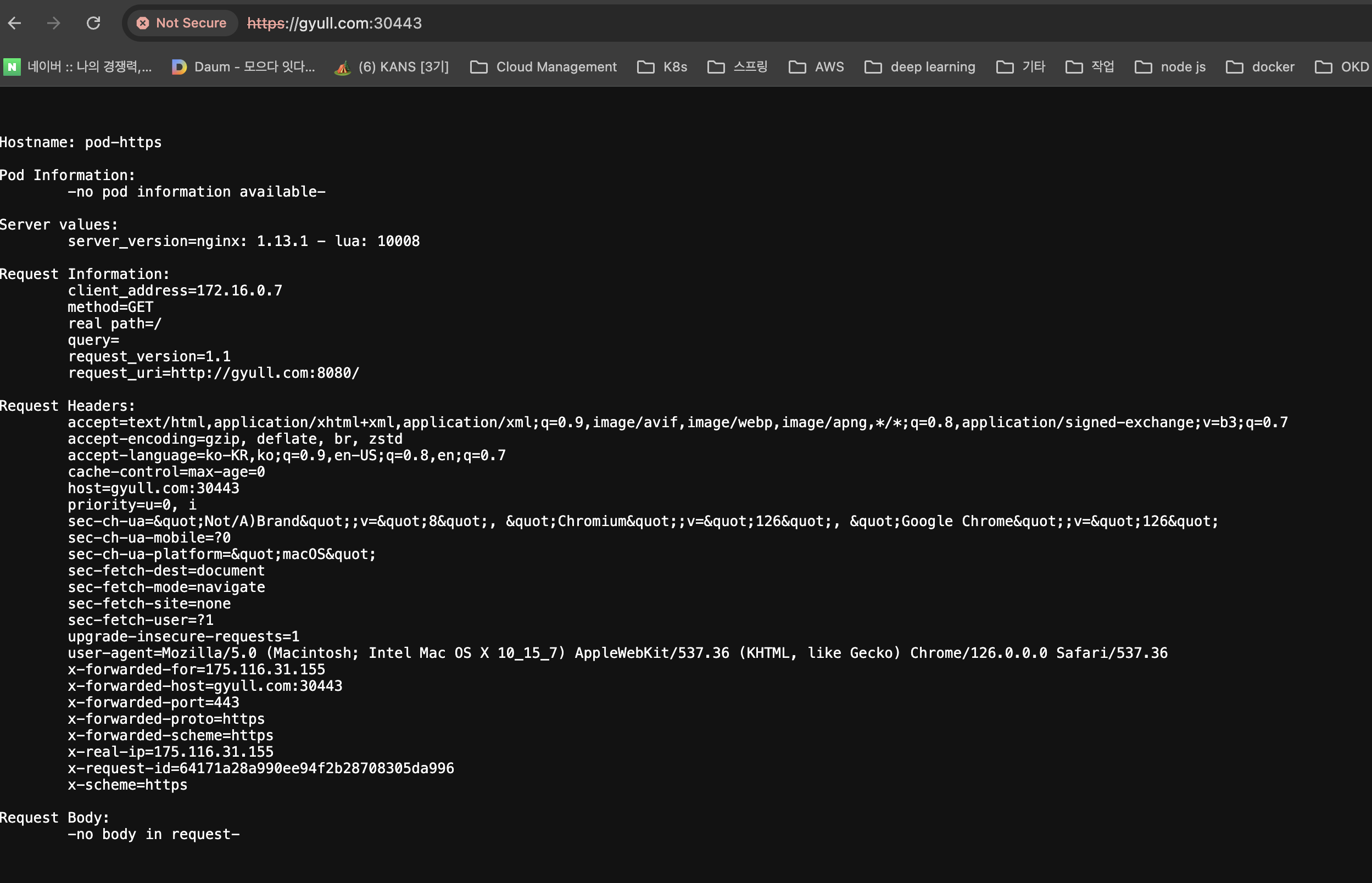

secret-https kubernetes.io/tls 2 9s 접속 확인

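Packet capture

On the ingress-nginx-controller pod's veth (vetha126644e), the HTTPS traffic shows up on port 443. Captures filtered on the NodePorts ($IngHttp = 30080, $IngHttps = 30443) see nothing, because the node has already translated (DNAT) the NodePort to the pod's own port before the packet reaches the pod interface.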

(⎈|default:N/A) root@k3s-s:~# export IngHttp=$(kubectl get service -n ingress ingress-nginx-controller -o jsonpath='{.spec.ports[0].nodePort}')

(⎈|default:N/A) root@k3s-s:~# export IngHttps=$(kubectl get service -n ingress ingress-nginx-controller -o jsonpath='{.spec.ports[1].nodePort}')

(⎈|default:N/A) root@k3s-s:~# tcpdump -i vetha126644e tcp port $IngHttp -nn

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on vetha126644e, link-type EN10MB (Ethernet), snapshot length 262144 bytes

^C

0 packets captured

0 packets received by filter

0 packets dropped by kernel

(⎈|default:N/A) root@k3s-s:~# tcpdump -i vetha126644e tcp port $IngHttps -nn

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on vetha126644e, link-type EN10MB (Ethernet), snapshot length 262144 bytes

^C

0 packets captured

0 packets received by filter

0 packets dropped by kernel

(⎈|default:N/A) root@k3s-s:~# tcpdump -i vetha126644e tcp port 443 -nn

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on vetha126644e, link-type EN10MB (Ethernet), snapshot length 262144 bytes

00:03:10.784942 IP 175.116.31.155.63439 > 172.16.0.7.443: Flags [S], seq 1383706545, win 65535, options [mss 1460,nop,wscale 6,nop,nop,TS val 723061224 ecr 0,sackOK,eol], length 0

00:03:10.784958 IP 172.16.0.7.443 > 175.116.31.155.63439: Flags [S.], seq 814443817, ack 1383706546, win 62293, options [mss 8911,sackOK,TS val 4107631988 ecr 723061224,nop,wscale 7], length 0

00:03:10.789822 IP 175.116.31.155.63439 > 172.16.0.7.443: Flags [.], ack 1, win 2058, options [nop,nop,TS val 723061229 ecr 4107631988], length 0

00:03:10.790540 IP 175.116.31.155.63439 > 172.16.0.7.443: Flags [.], seq 1:1449, ack 1, win 2058, options [nop,nop,TS val 723061229 ecr 4107631988], length 1448

00:03:10.790544 IP 175.116.31.155.63439 > 172.16.0.7.443: Flags [P.], seq 1449:1879, ack 1, win 2058, options [nop,nop,TS val 723061229 ecr 4107631988], length 430

00:03:10.790554 IP 172.16.0.7.443 > 175.116.31.155.63439: Flags [.], ack 1449, win 476, options [nop,nop,TS val 4107631994 ecr 723061229], length 0

00:03:10.790575 IP 172.16.0.7.443 > 175.116.31.155.63439: Flags [.], ack 1879, win 473, options [nop,nop,TS val 4107631994 ecr 723061229], length 0

00:03:10.791059 IP 172.16.0.7.443 > 175.116.31.155.63439: Flags [P.], seq 1:255, ack 1879, win 473, options [nop,nop,TS val 4107631994 ecr 723061229], length 254

00:03:10.796129 IP 175.116.31.155.63439 > 172.16.0.7.443: Flags [.], ack 255, win 2054, options [nop,nop,TS val 723061235 ecr 4107631994], length 0

00:03:10.796132 IP 175.116.31.155.63439 > 172.16.0.7.443: Flags [P.], seq 1879:1909, ack 255, win 2054, options [nop,nop,TS val 723061235 ecr 4107631994], length 30