Bag of Words

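Bag of Words is a way of representing text numerically. It ignores word order entirely and looks only at how often each word occurs (its frequency).

The name means, literally, a bag that holds words.

What matters is not the order of the words but how many times each word appears.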

How it is built

- Assign a unique integer index to each word.

- Build a vector that records, at each index position, how many times that word token occurs.
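The two steps above can be sketched in plain Python. This is a minimal illustration under simple assumptions (whitespace tokenization, indices assigned in sorted order), not scikit-learn's implementation; `build_vocab` and `bow_vector` are hypothetical helper names. Unlike `CountVectorizer`'s default token pattern, it keeps single-character tokens such as 뭐.

```python
from collections import Counter


def build_vocab(docs):
    """Step 1: give each distinct token a unique integer index (sorted order)."""
    tokens = sorted({tok for doc in docs for tok in doc.split()})
    return {tok: idx for idx, tok in enumerate(tokens)}


def bow_vector(doc, vocab):
    """Step 2: position vocab[tok] holds how many times tok occurs in doc."""
    vec = [0] * len(vocab)
    for tok, cnt in Counter(doc.split()).items():
        if tok in vocab:  # tokens outside the vocabulary are ignored
            vec[vocab[tok]] = cnt
    return vec


docs = ['나는 배가 고프다 배가', '내일 점심 뭐 먹지']
vocab = build_vocab(docs)
print(vocab)
print([bow_vector(d, vocab) for d in docs])  # [[1, 1, 0, 0, 0, 2, 0], [0, 0, 1, 1, 1, 0, 1]]
```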

Drawbacks of Bag of Words

Sparse representation

- If the corpus is large, document vectors can have tens of thousands of dimensions or more, and most entries in most document vectors will be 0.
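One common way to cope with sparsity is to store only the non-zero entries. The sketch below is a plain-Python illustration with a hypothetical 10,000-word vocabulary; `to_sparse` and `zero_fraction` are illustrative names. In scikit-learn, `CountVectorizer.transform` already returns a SciPy sparse matrix, which is why the CountVectorizer example in this section calls `.toarray()` before printing.

```python
def to_sparse(dense):
    """Keep only the non-zero entries of a count vector as {index: count}."""
    return {i: v for i, v in enumerate(dense) if v != 0}


def zero_fraction(dense):
    """Fraction of entries in the vector that are zero."""
    return sum(1 for v in dense if v == 0) / len(dense)


dense = [0] * 10_000          # hypothetical vocabulary of 10,000 words
dense[3], dense[4821] = 1, 2  # a short document that uses only two of them
print(to_sparse(dense))       # {3: 1, 4821: 2}
print(zero_fraction(dense))   # 0.9998
```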

Purely frequency-based approach

- A stopword such as "the" appears frequently in almost any English document. If documents 1, 2, and 3 all have a high count for "the", that alone should not lead us to judge them similar.
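A small illustration of this pitfall, using cosine similarity between raw count vectors. The two sentences and the one-word stopword list are assumptions made up for this example: the documents share nothing but "the", yet their raw counts look fairly similar until the stopword is removed.

```python
import math
from collections import Counter


def cosine(a, b):
    """Cosine similarity between two {word: count} dictionaries."""
    dot = sum(v * b.get(k, 0) for k, v in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


doc1 = Counter("the cat chased the mouse under the table".split())
doc2 = Counter("the bank raised the rate for the year".split())
stopwords = {"the"}  # assumed stopword list for this example

with_stopword = cosine(doc1, doc2)
without_stopword = cosine({w: c for w, c in doc1.items() if w not in stopwords},
                          {w: c for w, c in doc2.items() if w not in stopwords})
print(round(with_stopword, 3))  # 0.643
print(without_stopword)         # 0.0
```

This is one motivation for TF-IDF below, which down-weights words that occur in many documents.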

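```python
from sklearn.feature_extraction.text import CountVectorizer

text_data = ['나는 배가 고프다 배가',
             '내일 점심 뭐 먹지',
             '내일 공부를 해야겠다',
             '점심 먹고 열심히 공부해야지']

counter_vectorizer = CountVectorizer()
# Learn the vocabulary (word -> column index). The default token_pattern keeps
# only tokens of 2+ characters, so the single-character word '뭐' is dropped.
counter_vectorizer.fit(text_data)
print(counter_vectorizer.vocabulary_)
print()
# transform() returns a sparse matrix; toarray() turns it into a dense count matrix
print(counter_vectorizer.transform(text_data).toarray())
```

```
{'나는': 3, '배가': 7, '고프다': 0, '내일': 4, '점심': 9, '먹지': 6, '공부를': 1, '해야겠다': 10, '먹고': 5, '열심히': 8, '공부해야지': 2}

[[1 0 0 1 0 0 0 2 0 0 0]
 [0 0 0 0 1 0 1 0 0 1 0]
 [0 1 0 0 1 0 0 0 0 0 1]
 [0 0 1 0 0 1 0 0 1 1 0]]
```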

TF-IDF

A TF-IDF Vectorizer extracts features from text data using a specific weight called TF-IDF.

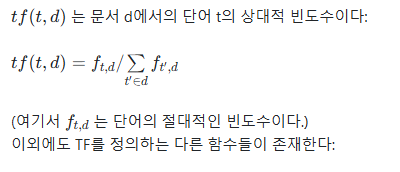

TF-IDF is the product of TF and IDF. Below, d denotes a document, t a term (word), and n the total number of documents.

TF (Term Frequency), tf(t, d), is the number of times term t occurs within a single document d.

Source: https://namu.wiki/w/TF-IDF

DF (Document Frequency), df(t), is the number of documents that contain term t. It does not care how many times t appears within each document; it counts only how many documents t appears in.

For example, suppose 바나나 (banana) appears in documents 2 and 3 out of three documents. Then the df of 바나나 is 2. It does not matter that 바나나 appears twice in document 3; even if it appeared 100 times in document 2 and 200 times in document 3, its df would still be 2.
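The difference between TF and DF can be checked directly. This sketch (hypothetical helper `document_frequency`, whitespace tokenization assumed) counts each document at most once, so repeating 바나나 inside a document does not change its DF.

```python
def document_frequency(term, docs):
    """Number of documents containing `term` at least once; repeats inside a document are ignored."""
    return sum(1 for doc in docs if term in doc.split())


docs = ['사과 먹고 싶다',
        '바나나 먹고 싶다',
        '길고 노란 바나나 바나나']
print(document_frequency('바나나', docs))   # 2
print(docs[2].split().count('바나나'))      # 2, which is the TF of 바나나 in document 3

# Even 100 and 200 repetitions still give a DF of 2
many = ['사과', '바나나 ' * 100, '바나나 ' * 200]
print(document_frequency('바나나', many))   # 2
```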

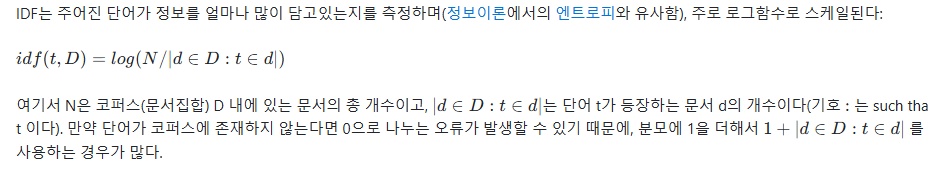

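IDF (Inverse Document Frequency) is built from the inverse of DF: the fewer documents a term appears in, the larger its IDF. In practice the logarithm of n / df is used rather than the plain inverse, and the code below also adds 1 to df in the denominator, idf(t) = log(n / (1 + df(t))), so that it never divides by zero.

Source: https://namu.wiki/w/TF-IDF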

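If IDF were simply n / df without a log, it would grow in direct proportion to the total number of documents n, so values could become very large. The log is used to keep them in check.

Frequent words such as stopwords can occur tens of times more often than moderately common words, and those moderately common words can in turn occur hundreds of times more often than truly rare words. Without the log, rare words would therefore receive enormous weights; taking the log narrows these gaps.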
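A quick numeric comparison, assuming a hypothetical corpus of one million documents: between a term that appears in 1 document and one that appears in 100,000, the raw ratio n/df differs by a factor of 100,000, while log(n/df) differs only by a factor of about 6. The function names are illustrative.

```python
import math


def idf_raw(n, df):
    """Inverse of document frequency without a log: n / df."""
    return n / df


def idf_log(n, df):
    """Log-damped version: log(n / df)."""
    return math.log(n / df)


n = 1_000_000  # hypothetical number of documents
for df in (1, 10, 1_000, 100_000):
    print(f"df={df:>7}  n/df={idf_raw(n, df):>12,.0f}  log(n/df)={idf_log(n, df):6.2f}")

# Ratio between the rarest and the most common term in this table
print(idf_raw(n, 1) / idf_raw(n, 100_000))
print(idf_log(n, 1) / idf_log(n, 100_000))
```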

Because TF-IDF multiplies these two values, a term scores high when it occurs often in a given document but rarely in the other documents.
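Written out as formulas, using the notation above (d a document, t a term, n the number of documents) and matching the Python code below (natural logarithm, 1 added to df in the denominator). Other libraries smooth IDF differently, so exact values vary. For example, with n = 4 and df(먹고) = 2, idf(먹고) = ln(4/3) ≈ 0.287682, which is the value the code prints.

$$
\mathrm{tf}(t,d) = \text{number of times } t \text{ occurs in } d,
\qquad
\mathrm{df}(t) = \bigl|\{\, d : t \in d \,\}\bigr|
$$

$$
\mathrm{idf}(t) = \ln\frac{n}{1+\mathrm{df}(t)},
\qquad
\text{tf-idf}(t,d) = \mathrm{tf}(t,d)\cdot\mathrm{idf}(t)
$$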

```python
from math import log

import pandas as pd

docs = ['먹고 싶은 사과',
        '먹고 싶은 바나나',
        '길고 노란 바나나 바나나',
        '나는 과일을 좋아해요']

# Vocabulary: all distinct whitespace-separated tokens, sorted
vocab = sorted(set(w for doc in docs for w in doc.split()))
print(vocab)
'''
['과일을', '길고', '나는', '노란', '먹고', '바나나', '사과', '싶은', '좋아해요']
'''

N = len(docs)  # total number of documents


def tf(t, d):  # t: term, d: document (sentence)
    # Count whole tokens; d.count(t) on the raw string would also match substrings
    return d.split().count(t)


def idf(t):
    # df: number of documents that contain t at least once
    df = sum(1 for doc in docs if t in doc.split())
    return log(N / (1 + df))


def tfidf(t, d):
    return tf(t, d) * idf(t)


# TF matrix: one row per document, one column per vocabulary term
result = [[tf(t, d) for t in vocab] for d in docs]
tf_data = pd.DataFrame(result, columns=vocab)
print(tf_data)
'''
  과일을 길고 나는 노란 먹고 바나나 사과 싶은 좋아해요
0 0 0 0 0 1 0 1 1 0
1 0 0 0 0 1 1 0 1 0
2 0 1 0 1 0 2 0 0 0
3 1 0 1 0 0 0 0 0 1
'''

# IDF of each vocabulary term
result2 = [idf(t) for t in vocab]
idf_data = pd.DataFrame(result2, index=vocab, columns=['IDF'])
print()
print(idf_data)
'''
IDF
과일을 0.693147
길고 0.693147
나는 0.693147
노란 0.693147
먹고 0.287682
바나나 0.287682
사과 0.693147
싶은 0.287682
좋아해요 0.693147
'''
print()

# TF-IDF matrix
result3 = [[tfidf(t, d) for t in vocab] for d in docs]
tf_idf_data = pd.DataFrame(result3, columns=vocab)
print(tf_idf_data)
'''
  과일을 길고 나는 ... 사과 싶은 좋아해요
0 0.000000 0.000000 0.000000 ... 0.693147 0.287682 0.000000
1 0.000000 0.000000 0.000000 ... 0.000000 0.287682 0.000000
2 0.000000 0.693147 0.000000 ... 0.000000 0.000000 0.000000
3 0.693147 0.000000 0.693147 ... 0.000000 0.000000 0.693147

[4 rows x 9 columns]
'''
```
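One side effect of the `log(N / (1 + df))` form used above is worth checking: in this four-document corpus, a term appearing in three documents gets an IDF of exactly 0, and a term appearing in all four gets a negative IDF. The sketch below compares it with the smoothed formula that scikit-learn's `TfidfVectorizer` uses by default (`smooth_idf=True`), which always stays at or above 1. The function names are hypothetical.

```python
from math import log


def idf_as_above(n, df):
    """IDF as in the code above: log(n / (1 + df))."""
    return log(n / (1 + df))


def idf_smooth(n, df):
    """Smoothed IDF, scikit-learn's default: log((1 + n) / (1 + df)) + 1."""
    return log((1 + n) / (1 + df)) + 1


n = 4  # four documents, as in the example above
for df in range(1, n + 1):
    print(f"df={df}: log(n/(1+df))={idf_as_above(n, df):+.4f}  smooth={idf_smooth(n, df):.4f}")
```

Note that scikit-learn additionally L2-normalizes each document row by default, so its TF-IDF matrix will not match the table above even with the same IDF.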

Word2Vec

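A one-hot vector obtained through one-hot encoding has a 1 only at the index of the word it represents and 0 everywhere else. A representation in which most values of a vector or matrix are 0 is called a sparse representation.

The drawback of this representation is that it cannot express any meaningful similarity between word vectors. The alternative is to vectorize the meaning of words in a multi-dimensional space, which is called a distributed representation. Using distributed representations to vectorize the semantic similarity between words is called word embedding, and the resulting vectors are called embedding vectors.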
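A quick check of this limitation: the cosine similarity between any two different one-hot vectors is always 0, because they never share a non-zero position. The three-word vocabulary is a hypothetical example.

```python
import math


def one_hot(index, size):
    """Vector of length `size` with 1.0 at `index` and 0.0 elsewhere."""
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


vocab = ['king', 'queen', 'apple']  # hypothetical vocabulary
vecs = {w: one_hot(i, len(vocab)) for i, w in enumerate(vocab)}
print(cosine(vecs['king'], vecs['queen']))  # 0.0
print(cosine(vecs['king'], vecs['apple']))  # 0.0
```

"king" is exactly as far from "queen" as from "apple", which is the gap a distributed representation is meant to close.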

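Distributed representations are built on the distributional hypothesis: words that appear in similar contexts have similar meanings.

For example, 강아지 (puppy) tends to appear together with words such as 귀엽다 (cute), 예쁘다 (pretty), and 애교 (charm). If the words in such texts are vectorized according to the distributional hypothesis, those word vectors end up with similar values.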
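The distributional hypothesis can be illustrated by counting which words appear next to each other. This sketch uses a made-up toy corpus (고양이 "cat" and 주식 "stock" are added for contrast) and a window of one word on each side; `cooccurrence` is a hypothetical helper. 강아지 and 고양이 end up with identical context counts, while 주식 shares none of them. Word2Vec learns dense vectors from this kind of context signal rather than storing the raw counts.

```python
from collections import Counter, defaultdict


def cooccurrence(sentences, window=1):
    """For each word, count the words appearing within `window` positions of it."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
                if j != i:
                    counts[word][tokens[j]] += 1
    return counts


sentences = ['강아지 귀엽다', '고양이 귀엽다', '강아지 애교', '고양이 애교', '주식 하락']
co = cooccurrence(sentences)
print(dict(co['강아지']))  # {'귀엽다': 1, '애교': 1}
print(dict(co['고양이']))  # {'귀엽다': 1, '애교': 1}
print(dict(co['주식']))    # {'하락': 1}
```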

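A distributed representation is learned from text under the distributional hypothesis, and it spreads a word's meaning across several dimensions of a relatively low-dimensional vector. With this kind of representation, meaningful similarities between word vectors can be computed. Word2Vec is a representative method for learning such representations.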

```python
import numpy as np
import torch
import torch.nn.functional as F

corpus = [
    'he is a king',
    'she is a queen',
    'he is a man',
    'she is a woman',
    'warsaw is poland capital',
    'berlin is germany capital',
    'paris is france capital'
]


def tokenize_corpus(corpus):
    # Whitespace tokenization
    return [x.split() for x in corpus]


tokenized_corpus = tokenize_corpus(corpus)
print(tokenized_corpus)
'''
[['he', 'is', 'a', 'king'], ['she', 'is', 'a', 'queen'], ['he', 'is', 'a', 'man'], ['she', 'is', 'a', 'woman'], ['warsaw', 'is', 'poland', 'capital'], ['berlin', 'is', 'germany', 'capital'], ['paris', 'is', 'france', 'capital']]
'''

# Vocabulary in order of first appearance
vocabulary = []
for sentence in tokenized_corpus:
    for token in sentence:
        if token not in vocabulary:
            vocabulary.append(token)
print(vocabulary)
'''
['he', 'is', 'a', 'king', 'she', 'queen', 'man', 'woman', 'warsaw', 'poland', 'capital', 'berlin', 'germany', 'paris', 'france']
'''

word2idx = {w: idx for idx, w in enumerate(vocabulary)}
idx2word = {idx: w for idx, w in enumerate(vocabulary)}
print(word2idx)
'''
{'he': 0, 'is': 1, 'a': 2, 'king': 3, 'she': 4, 'queen': 5, 'man': 6, 'woman': 7, 'warsaw': 8, 'poland': 9, 'capital': 10, 'berlin': 11, 'germany': 12, 'paris': 13, 'france': 14}
'''
print(idx2word)
'''
{0: 'he', 1: 'is', 2: 'a', 3: 'king', 4: 'she', 5: 'queen', 6: 'man', 7: 'woman', 8: 'warsaw', 9: 'poland', 10: 'capital', 11: 'berlin', 12: 'germany', 13: 'paris', 14: 'france'}
'''

# Build skip-gram (center word, context word) index pairs
window_size = 2
idx_pairs = []
for sentence in tokenized_corpus:
    indices = [word2idx[word] for word in sentence]
    # indices per sentence: [0, 1, 2, 3], [4, 1, 2, 5], [0, 1, 2, 6], [4, 1, 2, 7],
    #                       [8, 1, 9, 10], [11, 1, 12, 10], [13, 1, 14, 10]
    for center_word_pos in range(len(indices)):
        for w in range(-window_size, window_size + 1):
            context_word_pos = center_word_pos + w
            # Skip positions outside the sentence and the center word itself
            if context_word_pos < 0 or context_word_pos >= len(indices) or center_word_pos == context_word_pos:
                continue
            context_word_idx = indices[context_word_pos]
            idx_pairs.append([indices[center_word_pos], context_word_idx])

idx_pairs = np.array(idx_pairs)
print(idx_pairs)
'''
[[ 0 1]
 [ 0 2]
 [ 1 0]
 [ 1 2]
 [ 1 3]
 [ 2 0]
 [ 2 1]
 [ 2 3]
 [ 3 1]
 [ 3 2]
 [ 4 1]
 [ 4 2]
 [ 1 4]
 [ 1 2]
 [ 1 5]
 [ 2 4]
 [ 2 1]
 [ 2 5]
 [ 5 1]
 [ 5 2]
 [ 0 1]
 [ 0 2]
 [ 1 0]
 [ 1 2]
 [ 1 6]
 [ 2 0]
 [ 2 1]
 [ 2 6]
 [ 6 1]
 [ 6 2]
 [ 4 1]
 [ 4 2]
 [ 1 4]
 [ 1 2]
 [ 1 7]
 [ 2 4]
 [ 2 1]
 [ 2 7]
 [ 7 1]
 [ 7 2]
 [ 8 1]
 [ 8 9]
 [ 1 8]
 [ 1 9]
 [ 1 10]
 [ 9 8]
 [ 9 1]
 [ 9 10]
 [10 1]
 [10 9]
 [11 1]
 [11 12]
 [ 1 11]
 [ 1 12]
 [ 1 10]
 [12 11]
 [12 1]
 [12 10]
 [10 1]
 [10 12]
 [13 1]
 [13 14]
 [ 1 13]
 [ 1 14]
 [ 1 10]
 [14 13]
 [14 1]
 [14 10]
 [10 1]
 [10 14]]
'''

vocabulary_size = len(vocabulary)
print(vocabulary_size)
'''
15
'''
print()


def get_input_layer(word_idx):
    # One-hot input vector for the center word
    x = torch.zeros(vocabulary_size).float()
    x[word_idx] = 1.0
    return x


embedding_dims = 5
# w1: input -> hidden (its rows are the word embeddings); w2: hidden -> output scores
w1 = torch.randn(vocabulary_size, embedding_dims, requires_grad=True)
w2 = torch.randn(embedding_dims, vocabulary_size, requires_grad=True)
total_epochs = 100
learning_rate = 0.001

for epoch in range(total_epochs):
    loss_val = 0
    for data, target in idx_pairs:
        x_data = get_input_layer(data)
        y_target = torch.tensor([target], dtype=torch.long)
        z1 = torch.matmul(x_data, w1)   # (embedding_dims,)
        z2 = torch.matmul(z1, w2)       # (vocabulary_size,)
        log_softmax = F.log_softmax(z2, dim=0)
        loss = F.nll_loss(log_softmax.view(1, -1), y_target)
        loss_val += loss.item()
        loss.backward()
        # Plain SGD update, done without autograd tracking
        with torch.no_grad():
            w1 -= learning_rate * w1.grad
            w2 -= learning_rate * w2.grad
            w1.grad.zero_()
            w2.grad.zero_()
    if epoch % 10 == 0:
        print(f'epoch:{epoch+1}, loss:{loss_val/len(idx_pairs):.4f}')
'''
epoch:1, loss:4.1737
epoch:11, loss:3.8398
epoch:21, loss:3.6084
epoch:31, loss:3.4370
epoch:41, loss:3.3047
epoch:51, loss:3.1985
epoch:61, loss:3.1100
epoch:71, loss:3.0334
epoch:81, loss:2.9654
epoch:91, loss:2.9036
'''
print()

# The embedding of a word is its row of w1 (one-hot vector times w1)
word1 = 'he'
word2 = 'king'
word1vector = torch.matmul(get_input_layer(word2idx[word1]), w1)
print(word1vector)
'''
tensor([-1.1517, 0.1347, 0.5270, 0.3812, -2.1801],
       grad_fn=<SqueezeBackward4>)
'''
word2vector = torch.matmul(get_input_layer(word2idx[word2]), w1)
print(word2vector)
'''
tensor([ 1.0917, 1.0610, -1.6004, -1.2996, 1.6947],
       grad_fn=<SqueezeBackward4>)
'''
```

munge

```python
import re
from argparse import Namespace

import nltk.data
import pandas as pd

args = Namespace(
    raw_dataset_txt='data/books/frankenstein.txt',
    window_size=5,
    train_proportion=0.7,
    val_proportion=0.15,
    test_proportion=0.15,
    output_munged_csv='data/books/fankenstein_with_splits2.csv',
    seed=1337
)

# Split the book into sentences with NLTK's pretrained Punkt model
# (the model must be downloaded first, e.g. nltk.download('punkt'))
tokenizer = nltk.data.load('tokenizers/punkt/english.pickle')
with open(args.raw_dataset_txt) as fp:
    book = fp.read()
sentences = tokenizer.tokenize(book)
# print(sentences)
print(len(sentences))
'''
3430
'''
print(sentences[100])
'''
This letter will reach England by a merchantman now on
its homeward voyage from Archangel; more fortunate than I, who may not
see my native land, perhaps, for many years.
'''
print()


def preprocess_text(text):
    # Lowercase and collapse whitespace
    text = ' '.join(word.lower() for word in text.split())
    # Put spaces around punctuation so it becomes a separate token
    text = re.sub(r'([.,!?])', r' \1 ', text)
    # Replace every other non-letter character with a space
    text = re.sub(r'[^a-zA-Z.,!?]+', r' ', text)
    return text


cleaned_sentences = [preprocess_text(sentence) for sentence in sentences]
print(cleaned_sentences[0])
'''
project gutenberg s frankenstein , by mary wollstonecraft godwin shelley this ebook is for the use of anyone anywhere at no cost and with almost no restrictions whatsoever .
'''
print()

MASK_TOKEN = '<MASK>'
flatten = lambda outer_list: [item for inner_list in outer_list for item in inner_list]
# Pad every sentence with window_size MASK tokens on both sides and take all
# (2 * window_size + 1)-grams, so each real token appears once at the center
windows = flatten(list(nltk.ngrams([MASK_TOKEN] * args.window_size + sentence.split() + [MASK_TOKEN] * args.window_size,
                                   args.window_size * 2 + 1))
                  for sentence in cleaned_sentences)
print(windows[:5])
'''
[('<MASK>', '<MASK>', '<MASK>', '<MASK>', '<MASK>', 'project', 'gutenberg', 's', 'frankenstein', ',', 'by'), ('<MASK>', '<MASK>', '<MASK>', '<MASK>', 'project', 'gutenberg', 's', 'frankenstein', ',', 'by', 'mary'), ('<MASK>', '<MASK>', '<MASK>', 'project', 'gutenberg', 's', 'frankenstein', ',', 'by', 'mary', 'wollstonecraft'), ('<MASK>', '<MASK>', 'project', 'gutenberg', 's', 'frankenstein', ',', 'by', 'mary', 'wollstonecraft', 'godwin'), ('<MASK>', 'project', 'gutenberg', 's', 'frankenstein', ',', 'by', 'mary', 'wollstonecraft', 'godwin', 'shelley')]
'''
print(len(windows))
'''
87009
'''

# Each window becomes one (context, target) row: target is the center token,
# context is every non-mask token around it
data = []
for window in windows:
    target_token = window[args.window_size]
    context = []
    for i, token in enumerate(window):
        if token == MASK_TOKEN or i == args.window_size:
            continue  # skip padding and the center token itself
        context.append(token)
    data.append([' '.join(context), target_token])

cbow_data = pd.DataFrame(data, columns=['context', 'target'])
print(cbow_data.head())
'''
                                             context        target
0                     gutenberg s frankenstein , by       project
1                project s frankenstein , by mary     gutenberg
2  project gutenberg frankenstein , by mary wolls...             s
3  project gutenberg s , by mary wollstonecraft g...  frankenstein
4  project gutenberg s frankenstein by mary wolls...             ,
'''

n = len(cbow_data)
print(n)
'''
87009
'''
print()


def get_split(row_num):
    # Contiguous split: first 70% train, next 15% val, remaining 15% test
    if row_num < n * args.train_proportion:
        return 'train'
    elif row_num < n * (args.train_proportion + args.val_proportion):
        return 'val'
    else:
        return 'test'


cbow_data['split'] = cbow_data.apply(lambda row: get_split(row.name), axis=1)
print(cbow_data.head())
'''
                                             context        target  split
0                     gutenberg s frankenstein , by       project  train
1                project s frankenstein , by mary     gutenberg  train
2  project gutenberg frankenstein , by mary wolls...             s  train
3  project gutenberg s , by mary wollstonecraft g...  frankenstein  train
4  project gutenberg s frankenstein by mary wolls...             ,  train
'''
print(cbow_data.tail())
'''
                                     context  target split
87004  to our email newsletter to about new ebooks .    hear  test
87005  our email newsletter to hear new ebooks .       about  test
87006  email newsletter to hear about ebooks .           new  test
87007  newsletter to hear about new .                 ebooks  test
87008  to hear about new ebooks                            .  test
'''

cbow_data.to_csv(args.output_munged_csv, index=False)
```
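The padding-and-window step above can also be written without NLTK. This sketch (hypothetical `cbow_pairs` helper) pads a single tokenized sentence with `<MASK>` on both sides, slides a window of `2 * window_size + 1` tokens, and keeps the non-mask neighbours as context; it is meant to mirror the `nltk.ngrams` logic, not to replace the script. For the tail `to hear about new ebooks .`, the last pair has the same context and target as the last row of `cbow_data` shown above.

```python
MASK_TOKEN = '<MASK>'


def cbow_pairs(tokens, window_size):
    """Return (context, target) pairs for every token of one sentence."""
    padded = [MASK_TOKEN] * window_size + tokens + [MASK_TOKEN] * window_size
    pairs = []
    for center in range(window_size, window_size + len(tokens)):
        window = padded[center - window_size:center + window_size + 1]
        # Drop padding and the center position itself, keep the order of the rest
        context = [tok for i, tok in enumerate(window)
                   if i != window_size and tok != MASK_TOKEN]
        pairs.append((' '.join(context), window[window_size]))
    return pairs


pairs = cbow_pairs('to hear about new ebooks .'.split(), window_size=5)
print(pairs[0])   # ('hear about new ebooks .', 'to')
print(pairs[-1])  # ('to hear about new ebooks', '.')
```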

embedding model training

```python
import json
import os
from argparse import Namespace

import numpy as np
import pandas as pd
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torch.utils.data import Dataset, DataLoader


class Vocabulary(object):
    """Processes text and builds the vocabulary used to map tokens to indices."""

    def __init__(self, token_to_idx=None, mask_token='<MASK>', add_unk=True, unk_token='<UNK>'):
        if token_to_idx is None:
            token_to_idx = {}
        self._token_to_idx = token_to_idx
        self._idx_to_token = {idx: token for token, idx in self._token_to_idx.items()}

        self._add_unk = add_unk
        self._unk_token = unk_token
        self._mask_token = mask_token

        # The mask token is added first, so mask_index is 0 (used as padding_idx below)
        self.mask_index = self.add_token(self._mask_token)
        self.unk_index = -1
        if add_unk:
            self.unk_index = self.add_token(unk_token)

    def to_serializable(self):
        return {'token_to_idx': self._token_to_idx,
                'add_unk': self._add_unk,
                'unk_token': self._unk_token,
                'mask_token': self._mask_token}

    @classmethod
    def from_serializable(cls, contents):
        return cls(**contents)

    def add_token(self, token):
        """Add the token if it is new and return its index."""
        if token in self._token_to_idx:
            index = self._token_to_idx[token]
        else:
            index = len(self._token_to_idx)
            self._token_to_idx[token] = index
            self._idx_to_token[index] = token
        return index

    def add_many(self, tokens):
        return [self.add_token(token) for token in tokens]

    def lookup_token(self, token):
        """Return the token's index, or unk_index for unknown tokens when UNK is enabled."""
        if self.unk_index >= 0:
            return self._token_to_idx.get(token, self.unk_index)
        else:
            return self._token_to_idx[token]

    def lookup_index(self, index):
        if index not in self._idx_to_token:
            raise KeyError("the index (%d) is not in the Vocabulary" % index)
        return self._idx_to_token[index]

    def __str__(self):
        return "<Vocabulary(size=%d)>" % len(self)

    def __len__(self):
        return len(self._token_to_idx)


class CBOWVectorizer(object):
    """Converts a context string into a fixed-length vector of token indices."""

    def __init__(self, cbow_vocab):
        self.cbow_vocab = cbow_vocab

    def vectorize(self, context, vector_length=-1):
        indices = [self.cbow_vocab.lookup_token(token) for token in context.split(' ')]
        if vector_length < 0:
            vector_length = len(indices)

        # Fill with the token indices, then pad the rest with the mask index
        out_vector = np.zeros(vector_length, dtype=np.int64)
        out_vector[:len(indices)] = indices
        out_vector[len(indices):] = self.cbow_vocab.mask_index
        return out_vector

    @classmethod
    def from_dataframe(cls, cbow_df):
        cbow_vocab = Vocabulary()
        for index, row in cbow_df.iterrows():
            for token in row.context.split(' '):
                cbow_vocab.add_token(token)
            cbow_vocab.add_token(row.target)
        return cls(cbow_vocab)

    @classmethod
    def from_serializable(cls, contents):
        cbow_vocab = Vocabulary.from_serializable(contents['cbow_vocab'])
        return cls(cbow_vocab=cbow_vocab)

    def to_serializable(self):
        return {'cbow_vocab': self.cbow_vocab.to_serializable()}


class CBOWDataset(Dataset):
    def __init__(self, cbow_df, vectorizer):
        self.cbow_df = cbow_df
        self._vectorizer = vectorizer

        # Length of the longest context; every context vector is padded to this length
        measure_len = lambda context: len(context.split(" "))
        self._max_seq_length = max(map(measure_len, cbow_df.context))

        self.train_df = self.cbow_df[self.cbow_df.split == 'train']
        self.train_size = len(self.train_df)
        self.val_df = self.cbow_df[self.cbow_df.split == 'val']
        self.validation_size = len(self.val_df)
        self.test_df = self.cbow_df[self.cbow_df.split == 'test']
        self.test_size = len(self.test_df)

        self._lookup_dict = {'train': (self.train_df, self.train_size),
                             'val': (self.val_df, self.validation_size),
                             'test': (self.test_df, self.test_size)}
        self.set_split('train')

    @classmethod
    def load_dataset_and_make_vectorizer(cls, cbow_csv):
        # The vocabulary is built from the training split only
        cbow_df = pd.read_csv(cbow_csv)
        train_cbow_df = cbow_df[cbow_df.split == 'train']
        return cls(cbow_df, CBOWVectorizer.from_dataframe(train_cbow_df))

    @classmethod
    def load_dataset_and_load_vectorizer(cls, cbow_csv, vectorizer_filepath):
        cbow_df = pd.read_csv(cbow_csv)
        vectorizer = cls.load_vectorizer_only(vectorizer_filepath)
        return cls(cbow_df, vectorizer)

    @staticmethod
    def load_vectorizer_only(vectorizer_filepath):
        with open(vectorizer_filepath) as fp:
            return CBOWVectorizer.from_serializable(json.load(fp))

    def save_vectorizer(self, vectorizer_filepath):
        with open(vectorizer_filepath, "w") as fp:
            json.dump(self._vectorizer.to_serializable(), fp)

    def get_vectorizer(self):
        return self._vectorizer

    def set_split(self, split="train"):
        self._target_split = split
        self._target_df, self._target_size = self._lookup_dict[split]

    def __len__(self):
        return self._target_size

    def __getitem__(self, index):
        row = self._target_df.iloc[index]
        context_vector = self._vectorizer.vectorize(row.context, self._max_seq_length)
        target_index = self._vectorizer.cbow_vocab.lookup_token(row.target)
        return {'x_data': context_vector,
                'y_target': target_index}

    def get_num_batches(self, batch_size):
        return len(self) // batch_size


def generate_batches(dataset, batch_size, shuffle=True, drop_last=True, device="cpu"):
    """Wrap a DataLoader and move every tensor in each batch to `device`."""
    dataloader = DataLoader(dataset=dataset, batch_size=batch_size,
                            shuffle=shuffle, drop_last=drop_last)
    for data_dict in dataloader:
        out_data_dict = {}
        for name, tensor in data_dict.items():
            out_data_dict[name] = tensor.to(device)
        yield out_data_dict


class CBOWClassifier(nn.Module):  # simplified CBOW model
    def __init__(self, vocabulary_size, embedding_size, padding_idx=0):
        super(CBOWClassifier, self).__init__()
        self.embedding = nn.Embedding(num_embeddings=vocabulary_size,
                                      embedding_dim=embedding_size,
                                      padding_idx=padding_idx)  # index used for padding (the mask token)
        self.fc1 = nn.Linear(in_features=embedding_size,
                             out_features=vocabulary_size)

    def forward(self, x_in, apply_softmax=False):
        # x_in: (batch, seq_len), e.g. (32, 10) -> embeddings (32, 10, 50)
        # -> summed over the context words: (32, 50)
        # training=self.training turns dropout off after classifier.eval()
        x_embedded_sum = F.dropout(self.embedding(x_in).sum(dim=1), p=0.3,
                                   training=self.training)
        y_out = self.fc1(x_embedded_sum)
        if apply_softmax:
            y_out = F.softmax(y_out, dim=1)
        return y_out


def make_train_state(args):
    return {'stop_early': False,
            'early_stopping_step': 0,
            'early_stopping_best_val': 1e8,
            'learning_rate': args.learning_rate,
            'epoch_index': 0,
            'train_loss': [],
            'train_acc': [],
            'val_loss': [],
            'val_acc': [],
            'test_loss': -1,
            'test_acc': -1,
            'model_filename': args.model_state_file}


def update_train_state(args, model, train_state):
    # Always save the model after the first epoch
    if train_state['epoch_index'] == 0:
        torch.save(model.state_dict(), train_state['model_filename'])
        train_state['early_stopping_best_val'] = train_state['val_loss'][-1]
        train_state['stop_early'] = False

    # Afterwards, save the model only when the validation loss improves
    elif train_state['epoch_index'] >= 1:
        loss_t = train_state['val_loss'][-1]

        # Loss did not improve: count one more non-improving epoch
        if loss_t >= train_state['early_stopping_best_val']:
            train_state['early_stopping_step'] += 1
        # Loss improved: record the new best, save the model, reset the counter
        else:
            train_state['early_stopping_best_val'] = loss_t
            torch.save(model.state_dict(), train_state['model_filename'])
            train_state['early_stopping_step'] = 0

        # Stop early after too many epochs without improvement
        train_state['stop_early'] = train_state['early_stopping_step'] >= args.early_stopping_criteria

    return train_state


def compute_accuracy(y_pred, y_target):
    _, y_pred_indices = y_pred.max(dim=1)
    n_correct = torch.eq(y_pred_indices, y_target).sum().item()
    return n_correct / len(y_pred_indices) * 100


def set_seed_everywhere(seed, cuda):
    np.random.seed(seed)
    torch.manual_seed(seed)
    if cuda:
        torch.cuda.manual_seed_all(seed)


def handle_dirs(dirpath):
    if not os.path.exists(dirpath):
        os.makedirs(dirpath)


args = Namespace(
    cbow_csv="data/books/frankenstein_with_splits.csv",
    vectorizer_file="vectorizer.json",
    model_state_file="model.pth",
    save_dir="model_storage/day3/cbow",
    embedding_size=50,
    seed=1337,
    num_epochs=100,
    learning_rate=0.0001,
    batch_size=32,
    early_stopping_criteria=5,
    cuda=True,
    catch_keyboard_interrupt=True,
    reload_from_files=False,
    expand_filepaths_to_save_dir=True
)

if args.expand_filepaths_to_save_dir:
    args.vectorizer_file = os.path.join(args.save_dir,
                                        args.vectorizer_file)
    args.model_state_file = os.path.join(args.save_dir,
                                         args.model_state_file)
    print("File paths: ")
    print("\t{}".format(args.vectorizer_file))
    print("\t{}".format(args.model_state_file))

# Check CUDA availability
if not torch.cuda.is_available():
    args.cuda = False
args.device = torch.device("cuda" if args.cuda else "cpu")
print("Using CUDA: {}".format(args.cuda))

# Set seeds for reproducibility
set_seed_everywhere(args.seed, args.cuda)

# Create the save directory if needed
handle_dirs(args.save_dir)

dataset = CBOWDataset.load_dataset_and_make_vectorizer(args.cbow_csv)
dataset.save_vectorizer(args.vectorizer_file)
vectorizer = dataset.get_vectorizer()

classifier = CBOWClassifier(vocabulary_size=len(vectorizer.cbow_vocab),
                            embedding_size=args.embedding_size)
classifier = classifier.to(args.device)

loss_func = nn.CrossEntropyLoss()
optimizer = optim.Adam(classifier.parameters(), lr=args.learning_rate)
scheduler = optim.lr_scheduler.ReduceLROnPlateau(optimizer=optimizer,
                                                 mode='min', factor=0.5,
                                                 patience=1)
train_state = make_train_state(args)

try:
    for epoch_index in range(args.num_epochs):
        train_state['epoch_index'] = epoch_index

        # Training pass
        dataset.set_split('train')
        batch_generator = generate_batches(dataset,
                                           batch_size=args.batch_size,
                                           device=args.device)
        running_loss = 0.0
        running_acc = 0.0
        classifier.train()
        print('epoch:', epoch_index + 1)

        for batch_index, batch_dict in enumerate(batch_generator):
            optimizer.zero_grad()
            y_pred = classifier(x_in=batch_dict['x_data'])
            loss = loss_func(y_pred, batch_dict['y_target'])
            loss_t = loss.item()
            running_loss += (loss_t - running_loss) / (batch_index + 1)  # running mean
            loss.backward()
            optimizer.step()
            acc_t = compute_accuracy(y_pred, batch_dict['y_target'])
            running_acc += (acc_t - running_acc) / (batch_index + 1)

        train_state['train_loss'].append(running_loss)
        train_state['train_acc'].append(running_acc)

        # Prepare the validation split and batch generator; reset loss and accuracy to 0
        dataset.set_split('val')
        batch_generator = generate_batches(dataset,
                                           batch_size=args.batch_size,
                                           device=args.device)
        running_loss = 0.
        running_acc = 0.
        classifier.eval()

        with torch.no_grad():
            for batch_index, batch_dict in enumerate(batch_generator):
                y_pred = classifier(x_in=batch_dict['x_data'])
                loss = loss_func(y_pred, batch_dict['y_target'])
                loss_t = loss.item()
                running_loss += (loss_t - running_loss) / (batch_index + 1)
                acc_t = compute_accuracy(y_pred, batch_dict['y_target'])
                running_acc += (acc_t - running_acc) / (batch_index + 1)

        train_state['val_loss'].append(running_loss)
        train_state['val_acc'].append(running_acc)

        train_state = update_train_state(args=args, model=classifier,
                                         train_state=train_state)
        scheduler.step(train_state['val_loss'][-1])
        if train_state['stop_early']:
            break

except KeyboardInterrupt:
    print("Exiting loop")
```
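The early-stopping rule in `update_train_state` can be checked in isolation. This sketch is a plain-Python restatement (hypothetical `early_stopping_epochs` helper) of the corrected logic: keep the best validation loss seen so far, reset the counter on improvement, and stop once `patience` epochs in a row (playing the role of `args.early_stopping_criteria`) fail to beat it. If the best value were never updated from its initial 1e8, every epoch would count as an improvement and training would never stop early.

```python
def early_stopping_epochs(val_losses, patience):
    """Return (best_epoch, stop_epoch); stop_epoch is None if training never stops early."""
    best_loss = float('inf')
    best_epoch = None
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # Improvement: remember it (this is where the model would be saved)
            best_loss, best_epoch, bad_epochs = loss, epoch, 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                return best_epoch, epoch
    return best_epoch, None


print(early_stopping_epochs([5.0, 4.0, 4.5, 4.2, 4.1], patience=3))  # (1, 4)
print(early_stopping_epochs([3.0, 2.0, 1.0], patience=2))            # (2, None)
```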

using the embedding model

import os

from argparse import Namespace

from collections import Counter

import json

import re

import string

import numpy as np

import pandas as pd

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

from torch.utils.data import Dataset, DataLoader

class Vocabulary(object):

""" 매핑을 위해 텍스트를 처리하고 어휘 사전을 만드는 클래스 """

##############################################################################

def __init__(self, token_to_idx=None, mask_token='<MASK>', add_unk=True, unk_token='<UNK>'):

if token_to_idx is None:

token_to_idx = {}

self._token_to_idx = token_to_idx

self._idx_to_token = {idx: token for token, idx in self._token_to_idx.items()}

self._add_unk = add_unk

self._unk_token = unk_token

self._mask_token = mask_token

self.mask_index = self.add_token(self._mask_token)

self.unk_index = -1

if add_unk:

self.unk_index = self.add_token(unk_token)

##############################################################################

def to_serializable(self):

return {'token_to_idx': self._token_to_idx,

'add_unk': self._add_unk,

'unk_token': self._unk_token,

'mask_token': self._mask_token}

@classmethod

def from_serializable(cls, contents):

return cls(**contents)

def add_token(self, token):

if token in self._token_to_idx:

index = self._token_to_idx[token]

else:

index = len(self._token_to_idx)

self._token_to_idx[token] = index

self._idx_to_token[index] = token

return index

def add_many(self, tokens):

return [self.add_token(token) for token in tokens]

def lookup_token(self, token):

if self.unk_index >= 0:

return self._token_to_idx.get(token, self.unk_index)

else:

return self._token_to_idx[token]

def lookup_index(self, index):

if index not in self._idx_to_token:

raise KeyError("the index (%d) is not in the Vocabulary" % index)

return self._idx_to_token[index]

def __str__(self):

return "<Vocabulary(size=%d)>" % len(self)

def __len__(self):

return len(self._token_to_idx)

class CBOWVectorizer(object):

def __init__(self, cbow_vocab):

self.cbow_vocab = cbow_vocab

def vectorize(self, context, vector_length=-1):

##############################################################################

indices = [self.cbow_vocab.lookup_token(token) for token in context.split(' ')]

if vector_length < 0:

vector_length = len(indices)

out_vector = np.zeros(vector_length, dtype=np.int64)

out_vector[:len(indices)] = indices

out_vector[len(indices):] = self.cbow_vocab.mask_index

return out_vector

##############################################################################

@classmethod

def from_dataframe(cls, cbow_df):

cbow_vocab = Vocabulary()

for index, row in cbow_df.iterrows():

for token in row.context.split(' '):

cbow_vocab.add_token(token)

cbow_vocab.add_token(row.target)

return cls(cbow_vocab)

@classmethod

def from_serializable(cls, contents):

cbow_vocab = Vocabulary.from_serializable(contents['cbow_vocab'])

return cls(cbow_vocab=cbow_vocab)

def to_serializable(self):

return {'cbow_vocab': self.cbow_vocab.to_serializable()}

class CBOWDataset(Dataset):

def __init__(self, cbow_df, vectorizer):

self.cbow_df = cbow_df

self._vectorizer = vectorizer

measure_len = lambda context: len(context.split(" "))

self._max_seq_length = max(map(measure_len, cbow_df.context)) #가장 긴 문장 길이 추출

self.train_df = self.cbow_df[self.cbow_df.split=='train']

self.train_size = len(self.train_df)

self.val_df = self.cbow_df[self.cbow_df.split=='val']

self.validation_size = len(self.val_df)

self.test_df = self.cbow_df[self.cbow_df.split=='test']

self.test_size = len(self.test_df)

self._lookup_dict = {'train': (self.train_df, self.train_size),

'val': (self.val_df, self.validation_size),

'test': (self.test_df, self.test_size)}

self.set_split('train')

@classmethod

def load_dataset_and_make_vectorizer(cls, cbow_csv):

cbow_df = pd.read_csv(cbow_csv)

train_cbow_df = cbow_df[cbow_df.split=='train']

return cls(cbow_df, CBOWVectorizer.from_dataframe(train_cbow_df))

@classmethod

def load_dataset_and_load_vectorizer(cls, cbow_csv, vectorizer_filepath):

cbow_df = pd.read_csv(cbow_csv)

vectorizer = cls.load_vectorizer_only(vectorizer_filepath)

return cls(cbow_df, vectorizer)

@staticmethod

def load_vectorizer_only(vectorizer_filepath):

with open(vectorizer_filepath) as fp:

return CBOWVectorizer.from_serializable(json.load(fp))

def save_vectorizer(self, vectorizer_filepath):

with open(vectorizer_filepath, "w") as fp:

json.dump(self._vectorizer.to_serializable(), fp)

def get_vectorizer(self):

return self._vectorizer

def set_split(self, split="train"):

self._target_split = split

self._target_df, self._target_size = self._lookup_dict[split]

def __len__(self):

return self._target_size

def __getitem__(self, index):

row = self._target_df.iloc[index]

context_vector = self._vectorizer.vectorize(row.context, self._max_seq_length)

target_index = self._vectorizer.cbow_vocab.lookup_token(row.target)

return {'x_data': context_vector,

'y_target': target_index}

def get_num_batches(self, batch_size):

return len(self) // batch_size

def generate_batches(dataset, batch_size, shuffle=True, drop_last=True, device="cpu"):

dataloader = DataLoader(dataset=dataset, batch_size=batch_size,

shuffle=shuffle, drop_last=drop_last)

for data_dict in dataloader:

out_data_dict = {}

for name, tensor in data_dict.items():

out_data_dict[name] = data_dict[name].to(device)

yield out_data_dict

class CBOWClassifier(nn.Module): # Simplified cbow Model

##############################################################################

def __init__(self, vocabulary_size, embedding_size, padding_idx=0):

super(CBOWClassifier, self).__init__()

self.embedding = nn.Embedding(num_embeddings=vocabulary_size,

embedding_dim=embedding_size,

padding_idx=padding_idx)

self.fc1 = nn.Linear(in_features=embedding_size,

out_features=vocabulary_size)

##############################################################################

def forward(self, x_in, apply_softmax=False):

x_embedded_sum = F.dropout(self.embedding(x_in).sum(dim=1), 0.3)

y_out = self.fc1(x_embedded_sum)

if apply_softmax:

y_out = F.softmax(y_out, dim=1)

return y_out

def make_train_state(args):

return {'stop_early': False,

'early_stopping_step': 0,

'early_stopping_best_val': 1e8,

'learning_rate': args.learning_rate,

'epoch_index': 0,

'train_loss': [],

'train_acc': [],

'val_loss': [],

'val_acc': [],

'test_loss': -1,

'test_acc': -1,

'model_filename': args.model_state_file}

def update_train_state(args, model, train_state):

if train_state['epoch_index'] == 0:

torch.save(model.state_dict(), train_state['model_filename'])

train_state['stop_early'] = False

# 성능이 향상되면 모델을 저장합니다

elif train_state['epoch_index'] >= 1:

loss_tm1, loss_t = train_state['val_loss'][-2:]

# 손실이 나빠지면

if loss_t >= train_state['early_stopping_best_val']:

# 조기 종료 단계 업데이트

train_state['early_stopping_step'] += 1

# 손실이 감소하면

else:

# 최상의 모델 저장

if loss_t < train_state['early_stopping_best_val']:

torch.save(model.state_dict(), train_state['model_filename'])

# 조기 종료 단계 재설정

train_state['early_stopping_step'] = 0

# 조기 종료 여부 확인

train_state['stop_early'] = train_state['early_stopping_step'] >= args.early_stopping_criteria

return train_state

def compute_accuracy(y_pred, y_target):
    _, y_pred_indices = y_pred.max(dim=1)
    n_correct = torch.eq(y_pred_indices, y_target).sum().item()
    return n_correct / len(y_pred_indices) * 100

def set_seed_everywhere(seed, cuda):
    np.random.seed(seed)
    torch.manual_seed(seed)
    if cuda:
        torch.cuda.manual_seed_all(seed)

def handle_dirs(dirpath):
    if not os.path.exists(dirpath):
        os.makedirs(dirpath)

args = Namespace(
    cbow_csv="data/books/frankenstein_with_splits.csv",
    vectorizer_file="vectorizer.json",
    model_state_file="model.pth",
    save_dir="model_storage/day3/cbow",
    embedding_size=50,
    seed=1337,
    num_epochs=100,
    learning_rate=0.0001,
    batch_size=32,
    early_stopping_criteria=5,
    cuda=True,
    catch_keyboard_interrupt=True,
    reload_from_files=False,
    expand_filepaths_to_save_dir=True
)

if args.expand_filepaths_to_save_dir:
    args.vectorizer_file = os.path.join(args.save_dir,
                                        args.vectorizer_file)
    args.model_state_file = os.path.join(args.save_dir,
                                         args.model_state_file)
    print("File paths: ")
    print("\t{}".format(args.vectorizer_file))
    print("\t{}".format(args.model_state_file))

# Check CUDA availability
if not torch.cuda.is_available():
    args.cuda = False
args.device = torch.device("cuda" if args.cuda else "cpu")
print("Using CUDA: {}".format(args.cuda))

# Set the seed for reproducibility
set_seed_everywhere(args.seed, args.cuda)

# Handle directories
handle_dirs(args.save_dir)

dataset = CBOWDataset.load_dataset_and_make_vectorizer(args.cbow_csv)
dataset.save_vectorizer(args.vectorizer_file)
vectorizer = dataset.get_vectorizer()

##############################################################################
classifier = CBOWClassifier(vocabulary_size=len(vectorizer.cbow_vocab),
                            embedding_size=args.embedding_size)
##############################################################################

loss_func = nn.CrossEntropyLoss()
optimizer = optim.Adam(classifier.parameters(), lr=args.learning_rate)
scheduler = optim.lr_scheduler.ReduceLROnPlateau(optimizer=optimizer,
                                                 mode='min', factor=0.5,
                                                 patience=1)
train_state = make_train_state(args)

# Load the saved best weights; map_location lets a checkpoint saved on GPU load on CPU
classifier.load_state_dict(torch.load(train_state['model_filename'],
                                      map_location=args.device))
classifier = classifier.to(args.device)

dataset.set_split('test')
batch_generator = generate_batches(dataset,
                                   batch_size=args.batch_size,
                                   device=args.device)
classifier.eval()

target_words = ['frankenstein', 'monster', 'science', 'lonely', 'sickness']
embeddings = classifier.embedding.weight.data

def result_print(results):
    for word, distance in results:
        print('[%.2f] = %s' % (distance, word))

def get_closest(target_word, word_to_idx, embeddings, n=5):
    # Euclidean distance from the target word's vector to every other word's vector
    word_embedding = embeddings[word_to_idx[target_word.lower()]]
    distances = []
    for word, index in word_to_idx.items():
        if word == '<MASK>' or word == target_word:
            continue
        distances.append((word, torch.dist(word_embedding, embeddings[index]).item()))
    # The target itself is already skipped, so the n smallest distances are the n nearest words
    results = sorted(distances, key=lambda x: x[1])[:n]
    return results

word_to_idx = vectorizer.cbow_vocab._token_to_idx
# print(word_to_idx)

for target_word in target_words:
    print(f'=================================={target_word}=====================================')
    if target_word not in word_to_idx:
        print('not in vocabulary')
        continue
    result_print(get_closest(target_word, word_to_idx, embeddings, n=7))
    print()
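`get_closest` ranks words with `torch.dist`, which by default is the Euclidean (p=2) distance between two vectors. The same ranking can be sketched without PyTorch using `math.dist` (Python 3.8+). The helper `closest_words` and the toy words and 2-D vectors below are invented for illustration, not taken from the trained Frankenstein embeddings.

```python
import math
from typing import Dict, List, Tuple


def closest_words(target: str, word_to_idx: Dict[str, int],
                  embeddings: List[List[float]], n: int = 5) -> List[Tuple[str, float]]:
    """Return the n words whose vectors have the smallest Euclidean distance to the target's."""
    target_vec = embeddings[word_to_idx[target]]
    distances = [
        (word, math.dist(target_vec, embeddings[idx]))
        for word, idx in word_to_idx.items()
        if word not in ('<MASK>', target)  # skip the padding token and the word itself
    ]
    distances.sort(key=lambda pair: pair[1])
    return distances[:n]


# Invented 2-D "embeddings", only to show the ranking
word_to_idx = {'<MASK>': 0, 'monster': 1, 'creature': 2, 'fiend': 3, 'science': 4}
embeddings = [[0.0, 0.0], [1.0, 1.0], [1.0, 1.5], [2.0, 1.0], [5.0, -3.0]]

result = closest_words('monster', word_to_idx, embeddings, n=2)
print(result)  # [('creature', 0.5), ('fiend', 1.0)]
```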

Surname RNN model

from argparse import Namespace
import os
import json

import numpy as np
import pandas as pd
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torch.utils.data import Dataset, DataLoader

class Vocabulary(object):
    def __init__(self, token_to_idx=None):
        if token_to_idx is None:
            token_to_idx = {}
        self._token_to_idx = token_to_idx
        self._idx_to_token = {idx: token
                              for token, idx in self._token_to_idx.items()}

    def to_serializable(self):
        return {'token_to_idx': self._token_to_idx}

    @classmethod
    def from_serializable(cls, contents):
        return cls(**contents)

    def add_token(self, token):
        if token in self._token_to_idx:
            index = self._token_to_idx[token]
        else:
            index = len(self._token_to_idx)
            self._token_to_idx[token] = index
            self._idx_to_token[index] = token
        return index

    def add_many(self, tokens):
        return [self.add_token(token) for token in tokens]

    def lookup_token(self, token):
        return self._token_to_idx[token]

    def lookup_index(self, index):
        if index not in self._idx_to_token:
            raise KeyError("the index (%d) is not in the Vocabulary" % index)
        return self._idx_to_token[index]

    def __str__(self):
        return "<Vocabulary(size=%d)>" % len(self)

    def __len__(self):
        return len(self._token_to_idx)

class SequenceVocabulary(Vocabulary):
    ################################################################################
    def __init__(self, token_to_idx=None, unk_token="<UNK>",
                 mask_token="<MASK>", begin_seq_token="<BEGIN>",
                 end_seq_token='<END>'):
        super(SequenceVocabulary, self).__init__(token_to_idx)
        self._mask_token = mask_token
        self._unk_token = unk_token
        self._begin_seq_token = begin_seq_token
        self._end_seq_token = end_seq_token

        self.mask_index = self.add_token(self._mask_token)
        self.unk_index = self.add_token(self._unk_token)
        self.begin_seq_index = self.add_token(self._begin_seq_token)
        self.end_seq_index = self.add_token(self._end_seq_token)
    ################################################################################

    def to_serializable(self):
        contents = super(SequenceVocabulary, self).to_serializable()
        contents.update({'unk_token': self._unk_token,
                         'mask_token': self._mask_token,
                         'begin_seq_token': self._begin_seq_token,
                         'end_seq_token': self._end_seq_token})
        return contents

    def lookup_token(self, token):
        if self.unk_index >= 0:
            return self._token_to_idx.get(token, self.unk_index)
        else:
            return self._token_to_idx[token]

class SurnameVectorizer(object):
    """Creates and manages the vocabularies."""
    def __init__(self, char_vocab, nationality_vocab):
        self.char_vocab = char_vocab
        self.nationality_vocab = nationality_vocab

    def vectorize(self, surname, vector_length=-1):
        #####################################################################
        indices = [self.char_vocab.begin_seq_index]
        indices.extend(self.char_vocab.lookup_token(token)
                       for token in surname)
        indices.append(self.char_vocab.end_seq_index)

        if vector_length < 0:
            vector_length = len(indices)

        out_vector = np.zeros(vector_length, dtype=np.int64)
        out_vector[:len(indices)] = indices
        out_vector[len(indices):] = self.char_vocab.mask_index
        return out_vector, len(indices)
        #####################################################################

    @classmethod
    def from_dataframe(cls, surname_df):
        #####################################################################
        char_vocab = SequenceVocabulary()
        nationality_vocab = Vocabulary()
        #####################################################################
        for index, row in surname_df.iterrows():
            for char in row.surname:
                char_vocab.add_token(char)
            nationality_vocab.add_token(row.nationality)
        return cls(char_vocab, nationality_vocab)

    @classmethod
    def from_serializable(cls, contents):
        char_vocab = SequenceVocabulary.from_serializable(contents['char_vocab'])
        nat_vocab = Vocabulary.from_serializable(contents['nationality_vocab'])
        return cls(char_vocab=char_vocab, nationality_vocab=nat_vocab)

    def to_serializable(self):
        return {'char_vocab': self.char_vocab.to_serializable(),
                'nationality_vocab': self.nationality_vocab.to_serializable()}
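A plain-Python sketch of what `SurnameVectorizer.vectorize` produces, assuming the special-token order that `SequenceVocabulary` creates on an empty vocabulary (`<MASK>`=0, `<UNK>`=1, `<BEGIN>`=2, `<END>`=3) and a hypothetical three-character vocabulary. The function name `vectorize_surname` is illustrative only. The returned length counts `<BEGIN>` and `<END>` but not the padding, which is what `column_gather` later relies on.

```python
from typing import Dict, List, Tuple

MASK, UNK, BEGIN, END = 0, 1, 2, 3  # order in which SequenceVocabulary adds its special tokens


def vectorize_surname(surname: str, char_to_idx: Dict[str, int],
                      vector_length: int = -1) -> Tuple[List[int], int]:
    indices = [BEGIN]
    indices.extend(char_to_idx.get(ch, UNK) for ch in surname)  # unseen characters map to <UNK>
    indices.append(END)
    if vector_length < 0:
        vector_length = len(indices)
    # Assumes vector_length >= len(indices); the rest is filled with <MASK>
    padded = indices + [MASK] * (vector_length - len(indices))
    return padded, len(indices)


char_to_idx = {'K': 4, 'i': 5, 'm': 6}
print(vectorize_surname('Kim', char_to_idx, vector_length=8))  # ([2, 4, 5, 6, 3, 0, 0, 0], 5)
print(vectorize_surname('Kix', char_to_idx))                   # ([2, 4, 5, 1, 3], 5)
```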

class SurnameDataset(Dataset):
    def __init__(self, surname_df, vectorizer):
        self.surname_df = surname_df
        self._vectorizer = vectorizer
        # +2 for the <BEGIN> and <END> tokens
        self._max_seq_length = max(map(len, self.surname_df.surname)) + 2

        self.train_df = self.surname_df[self.surname_df.split=='train']
        self.train_size = len(self.train_df)
        self.val_df = self.surname_df[self.surname_df.split=='val']
        self.validation_size = len(self.val_df)
        self.test_df = self.surname_df[self.surname_df.split=='test']
        self.test_size = len(self.test_df)

        self._lookup_dict = {'train': (self.train_df, self.train_size),
                             'val': (self.val_df, self.validation_size),
                             'test': (self.test_df, self.test_size)}
        self.set_split('train')

        # Class weights: inverse frequency of each nationality in the training split,
        # ordered by the nationality's vocabulary index
        class_counts = self.train_df.nationality.value_counts().to_dict()
        def sort_key(item):
            return self._vectorizer.nationality_vocab.lookup_token(item[0])
        sorted_counts = sorted(class_counts.items(), key=sort_key)
        frequencies = [count for _, count in sorted_counts]
        self.class_weights = 1.0 / torch.tensor(frequencies, dtype=torch.float32)

    @classmethod
    def load_dataset_and_make_vectorizer(cls, surname_csv):
        surname_df = pd.read_csv(surname_csv)
        train_surname_df = surname_df[surname_df.split=='train']
        return cls(surname_df, SurnameVectorizer.from_dataframe(train_surname_df))

    @classmethod
    def load_dataset_and_load_vectorizer(cls, surname_csv, vectorizer_filepath):
        surname_df = pd.read_csv(surname_csv)
        vectorizer = cls.load_vectorizer_only(vectorizer_filepath)
        return cls(surname_df, vectorizer)

    @staticmethod
    def load_vectorizer_only(vectorizer_filepath):
        with open(vectorizer_filepath) as fp:
            return SurnameVectorizer.from_serializable(json.load(fp))

    def save_vectorizer(self, vectorizer_filepath):
        with open(vectorizer_filepath, "w") as fp:
            json.dump(self._vectorizer.to_serializable(), fp)

    def get_vectorizer(self):
        return self._vectorizer

    def set_split(self, split="train"):
        self._target_split = split
        self._target_df, self._target_size = self._lookup_dict[split]

    def __len__(self):
        return self._target_size

    def __getitem__(self, index):
        ############################################################################
        row = self._target_df.iloc[index]
        surname_vector, vec_length = self._vectorizer.vectorize(row.surname, self._max_seq_length)
        nationality_index = self._vectorizer.nationality_vocab.lookup_token(row.nationality)
        return {'x_data': surname_vector,
                'y_target': nationality_index,
                'x_length': vec_length}
        ############################################################################

    def get_num_batches(self, batch_size):
        return len(self) // batch_size
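The class weights built in `SurnameDataset.__init__` are the reciprocal of each nationality's count in the training split, sorted by vocabulary index so that weight `i` lines up with class `i` when passed to `nn.CrossEntropyLoss`. A minimal sketch with made-up labels (the helper name `inverse_frequency_weights` is hypothetical):

```python
from collections import Counter
from typing import Dict, List


def inverse_frequency_weights(labels: List[str], label_to_idx: Dict[str, int]) -> List[float]:
    counts = Counter(labels)
    # Order the counts by each class's vocabulary index so weight[i] belongs to class i
    ordered = sorted(counts.items(), key=lambda item: label_to_idx[item[0]])
    return [1.0 / count for _, count in ordered]


labels = ['English'] * 4 + ['Korean'] * 2 + ['Czech'] * 1
label_to_idx = {'Korean': 0, 'English': 1, 'Czech': 2}
print(inverse_frequency_weights(labels, label_to_idx))  # [0.5, 0.25, 1.0]
```

Like the original, this assumes every class in the vocabulary appears at least once in the labels; that holds in the notes because `nationality_vocab` is built from the same training split.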

def generate_batches(dataset, batch_size, shuffle=True, drop_last=True, device="cpu"):
    dataloader = DataLoader(dataset=dataset, batch_size=batch_size,
                            shuffle=shuffle, drop_last=drop_last)
    for data_dict in dataloader:
        out_data_dict = {}
        for name, tensor in data_dict.items():
            out_data_dict[name] = data_dict[name].to(device)
        yield out_data_dict

def column_gather(y_out, x_lengths):
    # Surnames have different numbers of characters (sequence lengths), so for each item
    # in the batch pick the output at its last real time step; the positions after it
    # are <MASK> padding and carry no meaning.
    #####################################################################
    x_lengths = x_lengths.long().detach().cpu().numpy() - 1
    out = []
    for batch_index, column_index in enumerate(x_lengths):
        out.append(y_out[batch_index, column_index])  # column_index: last valid step
    return torch.stack(out)
    #####################################################################
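The indexing in `column_gather` can be pictured with plain lists: for batch item `b` with length `L`, keep the output at step `L - 1`. The helper `gather_last_valid` and the string placeholders for hidden states are illustrative only.

```python
from typing import List


def gather_last_valid(outputs: List[List[str]], lengths: List[int]) -> List[str]:
    """outputs[b][t] is the RNN output for batch item b at step t; keep step lengths[b] - 1."""
    return [outputs[b][length - 1] for b, length in enumerate(lengths)]


outputs = [['h0', 'h1', 'h2', 'h3'],    # length 4: no padding
           ['g0', 'g1', 'pad', 'pad']]  # length 2: the last two steps are <MASK> positions
print(gather_last_valid(outputs, [4, 2]))  # ['h3', 'g1']
```

In PyTorch the loop can likely be replaced by advanced indexing, `y_out[torch.arange(y_out.size(0)), x_lengths - 1]`, assuming `x_lengths` is a LongTensor on the same device as `y_out`; this returns one `(hidden_size,)` row per batch item, the same as stacking the rows in the loop.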

class RNN(nn.Module):
    def __init__(self, input_size, hidden_size, batch_first=False):
        #####################################################################
        super(RNN, self).__init__()
        self.rnn_cell = nn.RNNCell(input_size, hidden_size)
        self.batch_first = batch_first
        self.hidden_size = hidden_size
        #####################################################################

    def _initial_hidden(self, batch_size):
        return torch.zeros((batch_size, self.hidden_size))

    def forward(self, x_in, initial_hidden=None):
        #####################################################################
        if self.batch_first:
            batch_size, seq_size, feat_size = x_in.size()
            x_in = x_in.permute(1, 0, 2)
        else:
            seq_size, batch_size, feat_size = x_in.size()

        hiddens = []
        if initial_hidden is None:
            initial_hidden = self._initial_hidden(batch_size)
            initial_hidden = initial_hidden.to(x_in.device)

        hidden_t = initial_hidden
        for t in range(seq_size):
            hidden_t = self.rnn_cell(x_in[t], hidden_t)
            hiddens.append(hidden_t)

        hiddens = torch.stack(hiddens)
        if self.batch_first:
            hiddens = hiddens.permute(1, 0, 2)
        return hiddens
        #####################################################################
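`nn.RNNCell` with its default `tanh` nonlinearity computes h' = tanh(W_ih x + b_ih + W_hh h + b_hh), and `RNN.forward` applies that one cell per time step while carrying the hidden state forward. Below is a list-based sketch of the same unrolled loop with a 1-dimensional input and hidden state; the weights are chosen by hand rather than learned, and the helper names are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rnn_cell(x: Vector, h: Vector, w_ih: Matrix, b_ih: Vector,
             w_hh: Matrix, b_hh: Vector) -> Vector:
    # h' = tanh(W_ih x + b_ih + W_hh h + b_hh), elementwise over the hidden units
    return [math.tanh(xi + bi + hh + bh)
            for xi, bi, hh, bh in zip(matvec(w_ih, x), b_ih, matvec(w_hh, h), b_hh)]


def run_rnn(xs: List[Vector], hidden_size: int, w_ih: Matrix, b_ih: Vector,
            w_hh: Matrix, b_hh: Vector) -> List[Vector]:
    h = [0.0] * hidden_size  # zero initial state, as in RNN._initial_hidden
    hiddens = []
    for x in xs:  # one cell application per time step, same weights at every step
        h = rnn_cell(x, h, w_ih, b_ih, w_hh, b_hh)
        hiddens.append(h)
    return hiddens


# One input feature, one hidden unit; the signal from step 0 fades as zeros follow
hiddens = run_rnn([[1.0], [0.0], [0.0]], hidden_size=1,
                  w_ih=[[1.0]], b_ih=[0.0], w_hh=[[1.0]], b_hh=[0.0])
print([round(h[0], 4) for h in hiddens])
```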

class SurnameClassifier(nn.Module):
    #####################################################################
    def __init__(self, embedding_size, num_embeddings, num_classes,
                 rnn_hidden_size, batch_first=True, padding_idx=0):
        super(SurnameClassifier, self).__init__()
        self.emb = nn.Embedding(num_embeddings=num_embeddings,
                                embedding_dim=embedding_size,
                                padding_idx=padding_idx)
        self.rnn = RNN(input_size=embedding_size,
                       hidden_size=rnn_hidden_size,
                       batch_first=batch_first)
        self.fc1 = nn.Linear(in_features=rnn_hidden_size,
                             out_features=rnn_hidden_size)
        self.fc2 = nn.Linear(in_features=rnn_hidden_size,
                             out_features=num_classes)
    #####################################################################

    def forward(self, x_in, x_lengths=None, apply_softmax=False):
        '''
        :param x_in: input tensor of character indices
        :param x_lengths: length of each sequence in the batch (used to find the last vector of each sequence)
        '''
        #####################################################################
        x_embedded = self.emb(x_in)
        y_out = self.rnn(x_embedded)

        if x_lengths is not None:
            y_out = column_gather(y_out, x_lengths)
        else:
            y_out = y_out[:, -1, :]

        # Dropout is active only in training mode (self.training)
        y_out = F.relu(self.fc1(F.dropout(y_out, 0.5, training=self.training)))
        y_out = self.fc2(F.dropout(y_out, 0.5, training=self.training))

        if apply_softmax:
            y_out = F.softmax(y_out, dim=1)
        return y_out
        #####################################################################

def set_seed_everywhere(seed, cuda):
    np.random.seed(seed)
    torch.manual_seed(seed)
    if cuda:
        torch.cuda.manual_seed_all(seed)

def handle_dirs(dirpath):
    if not os.path.exists(dirpath):
        os.makedirs(dirpath)

args = Namespace(
    surname_csv="data/surnames/surnames_with_splits.csv",
    vectorizer_file="vectorizer.json",
    model_state_file="model.pth",
    save_dir="model_storage/day3/surname_classification",
    char_embedding_size=100,
    rnn_hidden_size=64,
    num_epochs=100,
    learning_rate=1e-3,
    batch_size=64,
    seed=1337,
    early_stopping_criteria=5,
    cuda=True,
    catch_keyboard_interrupt=True,
    reload_from_files=False,
    expand_filepaths_to_save_dir=True,
)

# Check CUDA availability
if not torch.cuda.is_available():
    args.cuda = False
args.device = torch.device("cuda" if args.cuda else "cpu")
print("Using CUDA: {}".format(args.cuda))

if args.expand_filepaths_to_save_dir:
    args.vectorizer_file = os.path.join(args.save_dir,
                                        args.vectorizer_file)
    args.model_state_file = os.path.join(args.save_dir,
                                         args.model_state_file)

# Set the seed for reproducibility
set_seed_everywhere(args.seed, args.cuda)

# Handle directories
handle_dirs(args.save_dir)

dataset = SurnameDataset.load_dataset_and_make_vectorizer(args.surname_csv)
dataset.save_vectorizer(args.vectorizer_file)
vectorizer = dataset.get_vectorizer()

#####################################################################
classifier = SurnameClassifier(embedding_size=args.char_embedding_size,
                               num_embeddings=len(vectorizer.char_vocab),
                               num_classes=len(vectorizer.nationality_vocab),
                               rnn_hidden_size=args.rnn_hidden_size,
                               padding_idx=vectorizer.char_vocab.mask_index)
#####################################################################

def make_train_state(args):
    return {'stop_early': False,
            'early_stopping_step': 0,
            'early_stopping_best_val': 1e8,
            'learning_rate': args.learning_rate,
            'epoch_index': 0,
            'train_loss': [],
            'train_acc': [],
            'val_loss': [],
            'val_acc': [],
            'test_loss': -1,
            'test_acc': -1,
            'model_filename': args.model_state_file}

def update_train_state(args, model, train_state):
    # Save the model at least once
    if train_state['epoch_index'] == 0:
        torch.save(model.state_dict(), train_state['model_filename'])
        train_state['stop_early'] = False

    # Save the model if performance improved
    elif train_state['epoch_index'] >= 1:
        loss_tm1, loss_t = train_state['val_loss'][-2:]

        # If the loss got worse (or did not change)
        if loss_t >= loss_tm1:
            # Advance the early-stopping counter
            train_state['early_stopping_step'] += 1

        # If the loss decreased
        else:
            # Save the best model
            if loss_t < train_state['early_stopping_best_val']:
                torch.save(model.state_dict(), train_state['model_filename'])
                train_state['early_stopping_best_val'] = loss_t

            # Reset the early-stopping counter
            train_state['early_stopping_step'] = 0

        # Decide whether to stop early
        train_state['stop_early'] = \
            train_state['early_stopping_step'] >= args.early_stopping_criteria

    return train_state
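This `update_train_state` counts consecutive epochs whose validation loss is not lower than the *previous* epoch's, and saves a checkpoint only when the loss also beats the best value seen so far. The helper `simulate_early_stopping` below is a hypothetical re-implementation of that rule on a plain list of losses, handy for checking by hand when training would stop.

```python
from typing import List, Optional, Tuple


def simulate_early_stopping(val_losses: List[float],
                            criteria: int) -> Tuple[Optional[int], List[int]]:
    """Replay the surname update_train_state rule; return (stop_epoch, saved_epochs)."""
    best_val, step, saved = 1e8, 0, []
    for epoch, loss_t in enumerate(val_losses):
        if epoch == 0:
            saved.append(epoch)  # the model is always saved once
            continue
        loss_tm1 = val_losses[epoch - 1]
        if loss_t >= loss_tm1:
            step += 1  # no improvement over the previous epoch
        else:
            if loss_t < best_val:  # new best: save a checkpoint
                saved.append(epoch)
                best_val = loss_t
            step = 0
        if step >= criteria:
            return epoch, saved
    return None, saved


losses = [1.0, 0.8, 0.9, 0.95, 0.7, 0.75, 0.8, 0.85]
print(simulate_early_stopping(losses, criteria=3))  # (7, [0, 1, 4])
```

With `criteria=3`, epoch 4 sets a new best and resets the counter, then three consecutive increases stop training at epoch 7, leaving the epoch-4 checkpoint on disk. Note that a drop which does not beat the best loss (for example `[1.0, 0.5, 0.9, 0.8]`) still resets the counter without saving.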

def compute_accuracy(y_pred, y_target):
    _, y_pred_indices = y_pred.max(dim=1)
    n_correct = torch.eq(y_pred_indices, y_target).sum().item()
    return n_correct / len(y_pred_indices) * 100

classifier = classifier.to(args.device)
dataset.class_weights = dataset.class_weights.to(args.device)

loss_func = nn.CrossEntropyLoss(dataset.class_weights)
optimizer = optim.Adam(classifier.parameters(), lr=args.learning_rate)
scheduler = optim.lr_scheduler.ReduceLROnPlateau(optimizer=optimizer,
                                                 mode='min', factor=0.5,
                                                 patience=1)

train_state = make_train_state(args)

try:
    for epoch_index in range(args.num_epochs):
        train_state['epoch_index'] = epoch_index

        # Iterate over the training set
        dataset.set_split('train')
        batch_generator = generate_batches(dataset,
                                           batch_size=args.batch_size,
                                           device=args.device)
        running_loss = 0.0
        running_acc = 0.0
        classifier.train()
        print('epoch:', epoch_index + 1)

        for batch_index, batch_dict in enumerate(batch_generator):
            optimizer.zero_grad()
            y_pred = classifier(x_in=batch_dict['x_data'],
                                x_lengths=batch_dict['x_length'])
            loss = loss_func(y_pred, batch_dict['y_target'])
            running_loss += (loss.item() - running_loss) / (batch_index + 1)
            loss.backward()
            optimizer.step()
            acc_t = compute_accuracy(y_pred, batch_dict['y_target'])
            running_acc += (acc_t - running_acc) / (batch_index + 1)

        train_state['train_loss'].append(running_loss)
        train_state['train_acc'].append(running_acc)

        # Iterate over the validation set
        dataset.set_split('val')
        batch_generator = generate_batches(dataset,
                                           batch_size=args.batch_size,
                                           device=args.device)
        running_loss = 0.
        running_acc = 0.
        classifier.eval()

        with torch.no_grad():  # no gradients are needed for validation
            for batch_index, batch_dict in enumerate(batch_generator):
                y_pred = classifier(x_in=batch_dict['x_data'],
                                    x_lengths=batch_dict['x_length'])
                loss = loss_func(y_pred, batch_dict['y_target'])
                running_loss += (loss.item() - running_loss) / (batch_index + 1)
                acc_t = compute_accuracy(y_pred, batch_dict['y_target'])
                running_acc += (acc_t - running_acc) / (batch_index + 1)

        train_state['val_loss'].append(running_loss)
        train_state['val_acc'].append(running_acc)
        print('val loss:{:.4f} val_acc:{:.4f}\n'.format(running_loss, running_acc))

        train_state = update_train_state(args=args, model=classifier,
                                         train_state=train_state)
        scheduler.step(train_state['val_loss'][-1])

        if train_state['stop_early']:
            break

except KeyboardInterrupt:
    print("Training loop interrupted")
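The update `running_loss += (loss.item() - running_loss) / (batch_index + 1)` keeps the arithmetic mean of all batch values seen so far without storing them: after batch k the running value equals the average of the first k+1 values. A quick plain-Python check of that identity (the helper `running_mean` is illustrative only):

```python
from typing import List


def running_mean(values: List[float]) -> List[float]:
    running, history = 0.0, []
    for i, v in enumerate(values):
        running += (v - running) / (i + 1)  # same update as running_loss / running_acc
        history.append(running)
    return history


print(running_mean([2.0, 4.0, 6.0, 8.0]))  # [2.0, 3.0, 4.0, 5.0]
```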