How to Build an Agent Without LangChain (Scott Logic)

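- While using LangChain and LangGraph, I realized that I did not fully understand the pipeline and needed to implement it myself, so I looked for an approach outside the LangChain and LangGraph platforms.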

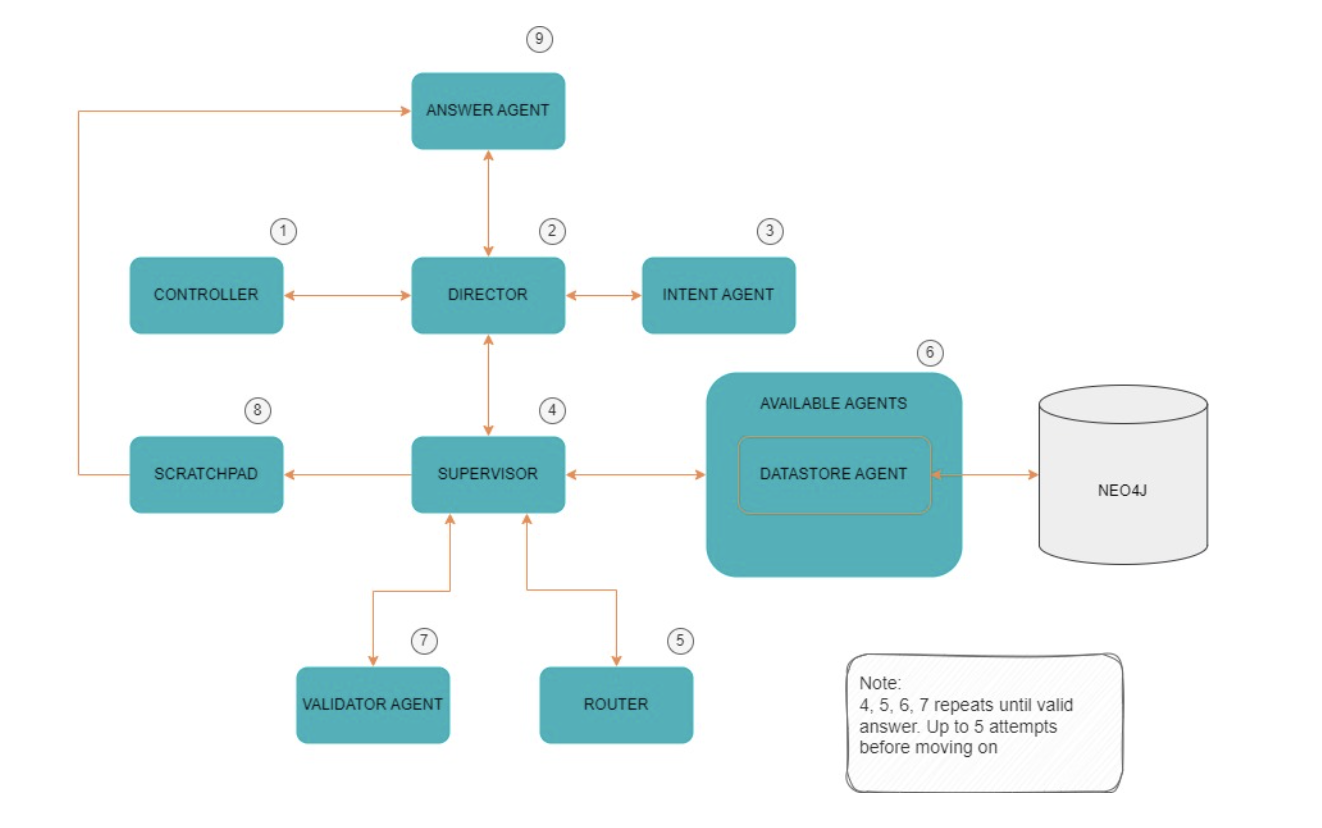

Key Flow

- Controller: The entry point to the backend, where we set up our chat endpoint via FastAPI. The chat endpoint delegates to the director to get the response for the user.

- Director: No LLM involved. The director handles the user’s query, breaking it down into solvable questions for the supervisor and returning the final response to the controller.

- Intent Agent: An LLM call is used to break up the user’s original query into its intent, ensuring more reliable and repeatable results.

- Supervisor: No LLM involved here. The supervisor loops through the questions it needs to solve:

- Router: Decides the best next step. With a list of available agents, the current task to solve, and the history of results, the router makes an LLM call to decide which agent should solve the task.

- Available Agents: The supervisor invokes the chosen agent to get a response. For example, a datastore agent would be chosen for a data-driven question. It would then use an LLM to generate a Cypher query to retrieve the data and return the response to the supervisor.

- Validator Agent: With a response from the chosen agent, the supervisor asks the validator agent whether the answer is sufficient. An LLM call is used to determine whether the answer is satisfactory.

- Scratchpad: A simple object that the supervisor uses to record every answer to each question.

- Answer Agent: With each result written to the scratchpad, the director can then invoke the answer agent to summarise the scratchpad, resulting in the final response for the user.
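The flow above can be sketched in plain Python without any framework. This is a minimal sketch under stated assumptions, not the Scott Logic implementation: every function name, every prompt string, the `max_attempts` retry limit, and the router's fallback to the first agent are hypothetical. `fake_llm` is a deterministic stub standing in for a real model client, and the datastore agent only generates a Cypher string without running it. The FastAPI controller is left out so the example depends only on the standard library.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical stand-in for a real LLM client: takes a prompt, returns text.
LLM = Callable[[str], str]
Agent = Callable[[LLM, str], str]


@dataclass
class Scratchpad:
    """Records every answer the supervisor collects, one entry per question."""
    entries: List[Dict[str, str]] = field(default_factory=list)

    def write(self, question: str, agent: str, answer: str) -> None:
        self.entries.append({"question": question, "agent": agent, "answer": answer})


def intent_agent(llm: LLM, query: str) -> List[str]:
    """One LLM call that splits the user's query into solvable questions."""
    raw = llm(f"INTENT: split into one question per line:\n{query}")
    return [line.strip() for line in raw.splitlines() if line.strip()]


def router(llm: LLM, agents: Dict[str, Agent], task: str, history: List[Dict[str, str]]) -> str:
    """One LLM call that picks which agent should solve the task."""
    choice = llm(f"ROUTE: agents={sorted(agents)} history={history} task={task}").strip()
    # Assumption: fall back to the first agent if the model names an unknown one.
    return choice if choice in agents else next(iter(agents))


def validator_agent(llm: LLM, task: str, answer: str) -> bool:
    """One LLM call that judges whether the answer is satisfactory."""
    verdict = llm(f"VALIDATE: task={task} answer={answer} (reply yes or no)")
    return verdict.strip().lower().startswith("yes")


def supervisor(llm: LLM, agents: Dict[str, Agent], questions: List[str],
               max_attempts: int = 2) -> Scratchpad:
    """No LLM call of its own: loops over the questions and coordinates agents."""
    pad = Scratchpad()
    for question in questions:
        for _ in range(max_attempts):
            name = router(llm, agents, question, pad.entries)
            answer = agents[name](llm, question)
            if validator_agent(llm, question, answer):
                pad.write(question, name, answer)
                break
        # If every attempt fails validation, the question stays unanswered.
    return pad


def answer_agent(llm: LLM, query: str, pad: Scratchpad) -> str:
    """One LLM call that summarises the scratchpad into the final response."""
    return llm(f"ANSWER: summarise for '{query}': {pad.entries}")


def director(llm: LLM, agents: Dict[str, Agent], query: str) -> str:
    """No LLM call of its own: intent -> supervisor -> answer."""
    questions = intent_agent(llm, query)
    pad = supervisor(llm, agents, questions)
    return answer_agent(llm, query, pad)


def datastore_agent(llm: LLM, task: str) -> str:
    """Asks the LLM for a Cypher query; a real agent would then execute it."""
    cypher = llm(f"QUERY: write a Cypher query for: {task}")
    return f"(result of running: {cypher})"


def fake_llm(prompt: str) -> str:
    """Deterministic stub so the sketch runs without a model."""
    if prompt.startswith("INTENT"):
        return "How many users signed up?"
    if prompt.startswith("ROUTE"):
        return "datastore"
    if prompt.startswith("VALIDATE"):
        return "yes"
    if prompt.startswith("QUERY"):
        return "MATCH (u:User) RETURN count(u)"
    return "42 users signed up."  # reply to the ANSWER prompt


if __name__ == "__main__":
    print(director(fake_llm, {"datastore": datastore_agent}, "How are signups going?"))
```

Keeping the director and supervisor as ordinary functions means the only LLM calls are the ones in the intent, router, worker, validator, and answer agents, which makes each step easy to log and test in isolation. In a real service, the controller's chat endpoint would simply call `director` with a real client in place of `fake_llm`.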

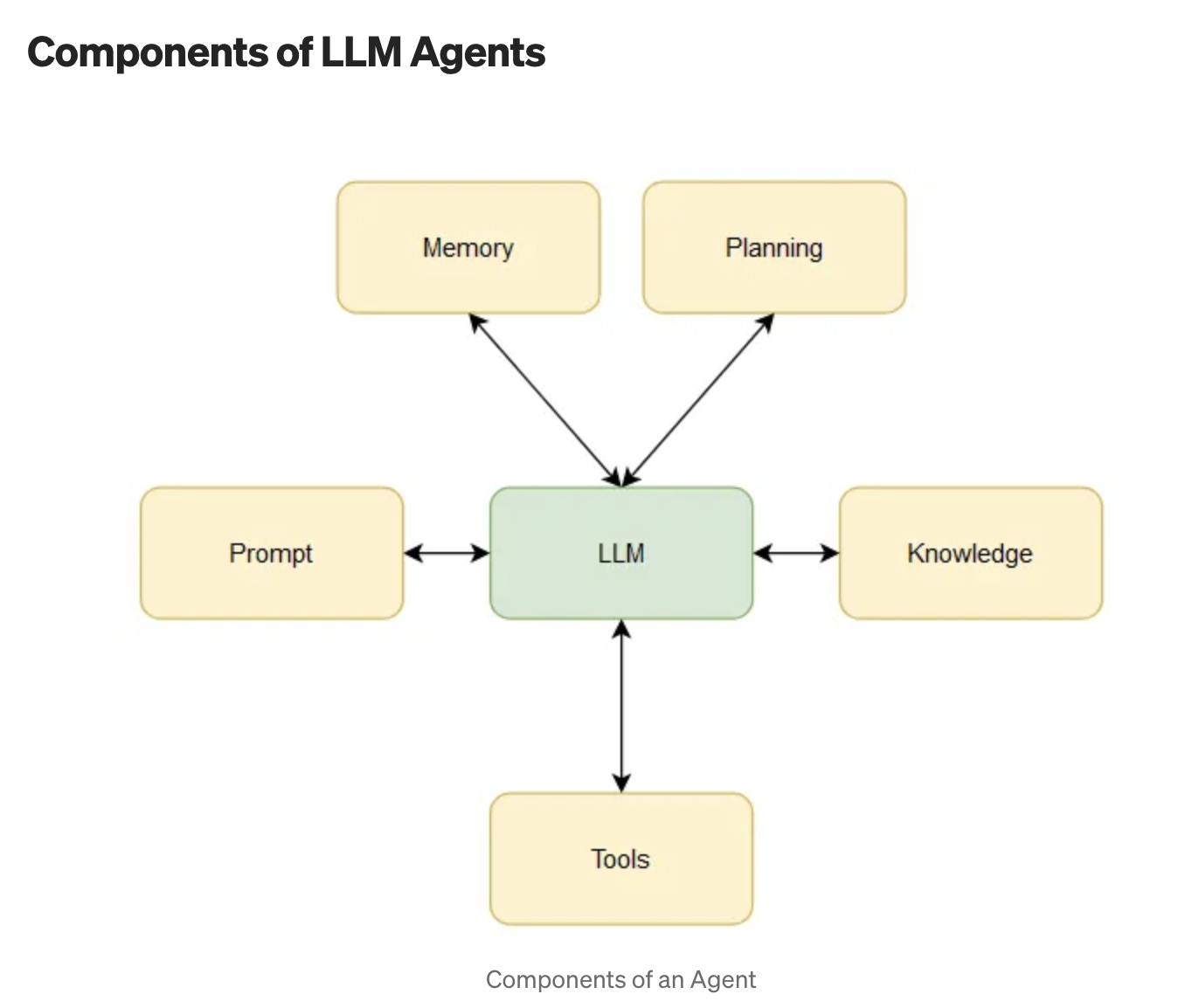

Definition of an LLM Agent (Medium Blog)

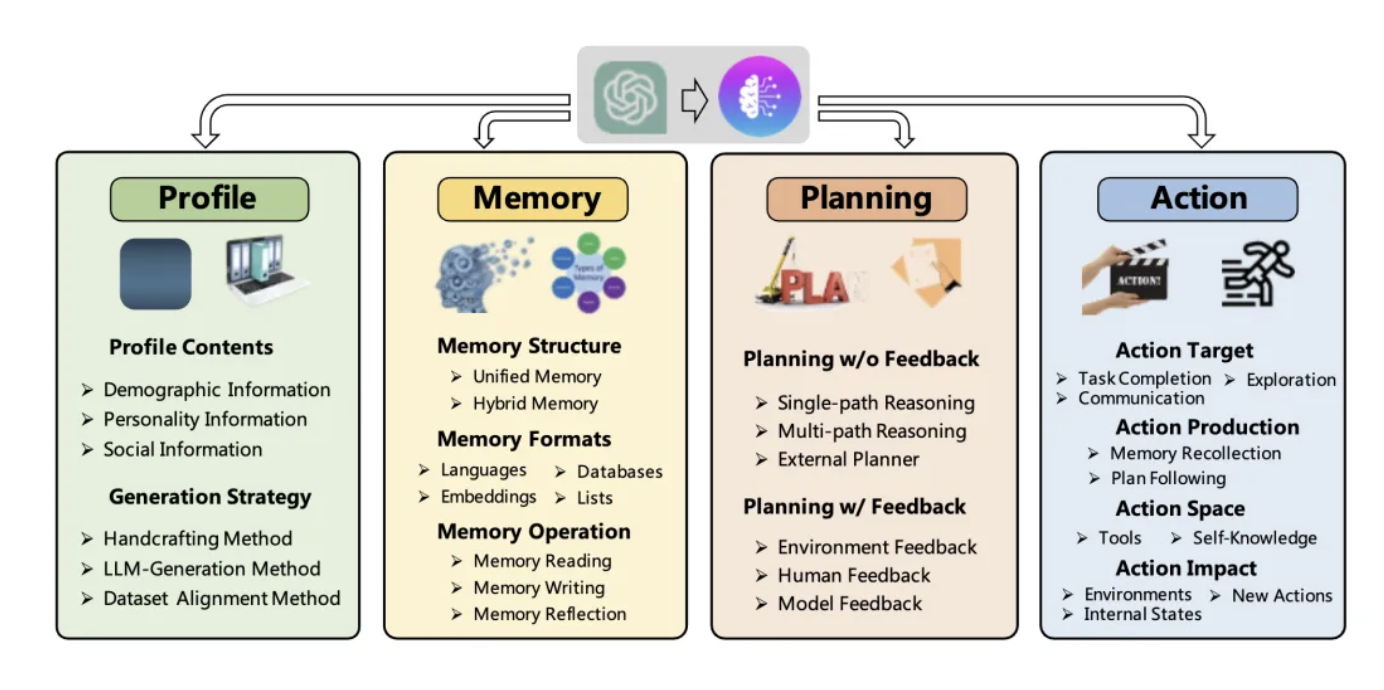

Agent Orchestration (Medium Blog)

REFERENCE

- Building a Multi Agent Chatbot Without LangChain (Scott Logic)

- What is an LLM Agent and how does it work? (Blogpost by Keremaydin)

- Understand the LLM Agent Orchestration (Blogpost by Haiping Chen)

USEFUL LINKS