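Transfer Learning

- A learning approach that "transfers" knowledge acquired under one set of conditions so it can be reused in a different situation

- Can partly compensate for a shortage of training data

- Reduces training time

- Makes it possible to apply a model trained in simulation to the real world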
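
As a rough illustration of the points above, the sketch below reuses a frozen feature extractor and trains only a new output layer. It is a minimal sketch under stated assumptions, not the book's code: `backbone` is a small stand-in for a network pretrained on another task, and the layer sizes, `head`, and the random batch are all hypothetical.

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)

# Stand-in for a feature extractor pretrained on another task (hypothetical;
# in practice this would be a real model with loaded weights).
backbone = nn.Sequential(nn.Linear(8, 16), nn.ReLU())

# Freeze the transferred knowledge: these weights are not updated.
for param in backbone.parameters():
    param.requires_grad = False

# New task-specific layer; only this part is trained.
head = nn.Linear(16, 3)
model = nn.Sequential(backbone, head)

optimizer = optim.SGD(head.parameters(), lr=0.1)
loss_fn = nn.CrossEntropyLoss()

# Small random batch standing in for the (scarce) target-task data.
x = torch.randn(4, 8)
y = torch.tensor([0, 1, 2, 1])

backbone_before = [p.clone() for p in backbone.parameters()]
head_before = [p.clone() for p in head.parameters()]

# One training step: gradients flow only into the new head.
optimizer.zero_grad()
loss = loss_fn(model(x), y)
loss.backward()
optimizer.step()

backbone_unchanged = all(
    torch.equal(a, b) for a, b in zip(backbone_before, backbone.parameters())
)
head_changed = any(
    not torch.equal(a, b) for a, b in zip(head_before, head.parameters())
)
print(backbone_unchanged, head_changed)
```

Because only the small head is optimized, each step touches far fewer parameters than training the whole network, which is one reason less data and less time can be enough.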

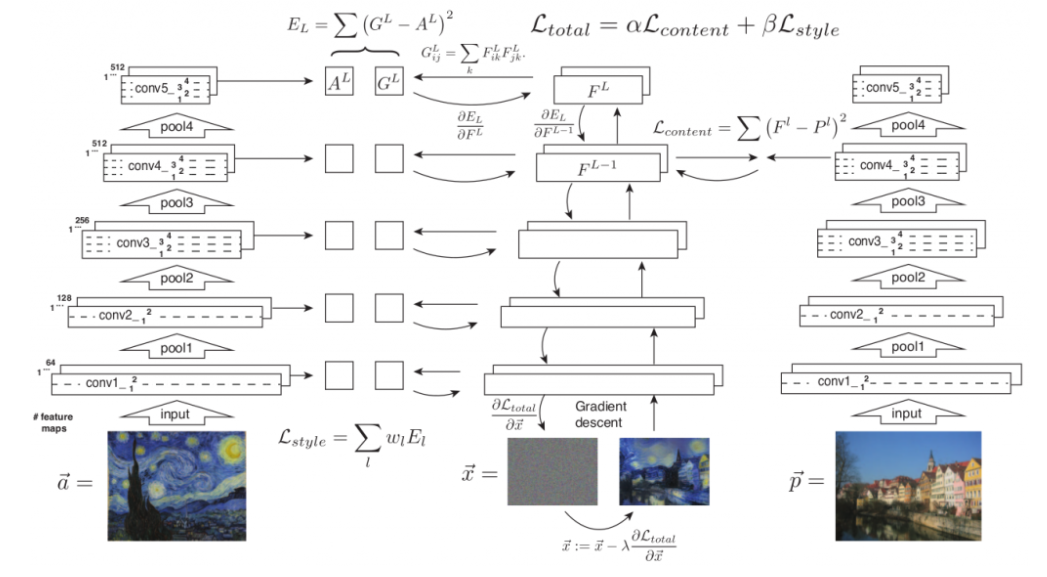

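Style Transfer

- A clear-cut example of transfer learning: the features a pretrained model learns for recognizing objects are general enough to apply regardless of the task, so the knowledge in the trained model can be transferred to a different task

Overall structure of style transfer model training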
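
Reading the objective off the training script later in these notes, the structure can be summarized as follows. The network stays frozen and only the pixels of the generated image are optimized. The notation is introduced here for illustration and is not from the book: $x$ is the generated image, $c$ the content image, $s$ the style image, $F_i(\cdot)$ the activations at the $i$-th output of the frozen ResNet reshaped to $n_i \times (h_i w_i)$, and $n_i$ the channel count of that output.

```latex
\begin{aligned}
G(F) &= F F^{\top} && \text{(Gram matrix of a feature map)} \\
L_{\text{style}}(x) &= \sum_{i=0}^{5} \frac{1}{n_i^{2}}\,
    \operatorname{MSE}\bigl(G(F_i(x)),\, G(F_i(s))\bigr),
    && (n_0,\dots,n_5) = (64, 64, 256, 512, 1024, 2048) \\
L_{\text{content}}(x) &= \operatorname{MSE}\bigl(F_4(x),\, F_4(c)\bigr) \\
L_{\text{total}}(x) &= 1000\, L_{\text{style}}(x) + L_{\text{content}}(x)
\end{aligned}
```

The factor 1000 and the content layer index 4 correspond to the constants used in the script (`content_layer_num = 4`).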

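L-BFGS Algorithm

- A quasi-Newton optimization algorithm that uses second-derivative (curvature) information in addition to the gradient

- Newton's method: iterates toward an x where f(x)=0; for optimization it is applied to the derivative, so it converges to a point where the gradient is zero

- Instead of computing the second derivatives exactly, BFGS approximates them to speed up computation, taking O(n**2) time per update; the limited-memory variant (L-BFGS) keeps only a few recent updates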
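
To make the Newton step concrete, here is a minimal pure-Python sketch. The helper `newton_root` and both example functions are hypothetical and chosen only for illustration. It first finds a root of f, then minimizes a function by applying the same iteration to its derivative.

```python
def newton_root(f, df, x0, tol=1e-12, max_iter=50):
    """Find x with f(x) = 0 by iterating x <- x - f(x) / df(x)."""
    x = x0
    for _ in range(max_iter):
        step = f(x) / df(x)
        x -= step
        if abs(step) < tol:
            break
    return x

# Root finding: solve x**2 - 2 = 0, starting from x0 = 1.
root = newton_root(lambda x: x * x - 2, lambda x: 2 * x, x0=1.0)

# Minimization: apply the same iteration to the derivative, g'(x) = 0.
# Here g(x) = (x - 3)**2 + 1, so g'(x) = 2 * (x - 3) and g''(x) = 2.
minimizer = newton_root(lambda x: 2 * (x - 3), lambda x: 2.0, x0=0.0)

print(root, minimizer)
```

In the minimization case `df` plays the role of the second derivative. Quasi-Newton methods such as BFGS avoid computing it exactly and build an approximation from successive gradients instead.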

Code Implementation
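
Before the full script, a small sketch of the closure pattern that `optim.LBFGS` expects may help. The toy problem (pulling `x` toward a fixed `target`) and all names in it are hypothetical. The point is only that the optimizer is handed a function that recomputes the loss and its gradient.

```python
import torch
import torch.optim as optim

# Hypothetical toy problem: find x minimizing ||x - target||^2.
target = torch.tensor([1.0, -2.0, 3.0])
x = torch.zeros(3, requires_grad=True)

optimizer = optim.LBFGS([x], lr=1.0, max_iter=20)

def closure():
    # LBFGS may evaluate the loss several times per step,
    # so the loss and its gradient are recomputed in here.
    optimizer.zero_grad()
    loss = ((x - target) ** 2).sum()
    loss.backward()
    return loss

for _ in range(5):
    optimizer.step(closure)

final_loss = ((x.detach() - target) ** 2).sum().item()
print(x.detach(), final_loss)
```

The script below follows the same pattern, with the generated image in place of `x` and the style and content losses in place of the squared distance.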

# https://discuss.pytorch.org/t/how-to-extract-features-of-an-image-from-a-trained-model/119/3

# https://github.com/leongatys/PytorchNeuralStyleTransfer

import torch
import torch.nn as nn
import torch.optim as optim
import torch.utils as utils
import torch.utils.data as data
import torchvision.models as models
import torchvision.utils as v_utils
import torchvision.transforms as transforms
from torch.autograd import Variable
import matplotlib.pyplot as plt
from PIL import Image

# hyperparameters
content_dir = "./image/content/Neckarfront_origin.jpg"
style_dir = "./image/style/monet.jpg"
content_layer_num = 4
image_size = 512
epoch = 10000

# import pretrained resnet50 model
# (newer torchvision releases prefer the `weights=` argument over `pretrained=True`)
# check what layers are in the model
resnet = models.resnet50(pretrained=True)
for name, module in resnet.named_children():
    print(name)

# resnet without fully connected layers
# return activations in each layers
class Resnet(nn.Module):
    def __init__(self):
        super(Resnet,self).__init__()
        # slices of the pretrained resnet50 loaded above
        self.layer0 = nn.Sequential(*list(resnet.children())[0:1])
        self.layer1 = nn.Sequential(*list(resnet.children())[1:4])
        self.layer2 = nn.Sequential(*list(resnet.children())[4:5])
        self.layer3 = nn.Sequential(*list(resnet.children())[5:6])
        self.layer4 = nn.Sequential(*list(resnet.children())[6:7])
        self.layer5 = nn.Sequential(*list(resnet.children())[7:8])

    def forward(self,x):
        out_0 = self.layer0(x)
        out_1 = self.layer1(out_0)
        out_2 = self.layer2(out_1)
        out_3 = self.layer3(out_2)
        out_4 = self.layer4(out_3)
        out_5 = self.layer5(out_4)
        return out_0, out_1, out_2, out_3, out_4, out_5

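# read & preprocess image
def image_preprocess(img_dir):
    # force 3 channels so grayscale or RGBA files also work
    img = Image.open(img_dir).convert("RGB")
    transform = transforms.Compose([
        # transforms.Scale was renamed to transforms.Resize
        transforms.Resize(image_size),
        transforms.CenterCrop(image_size),
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.40760392, 0.45795686, 0.48501961],
                             std=[1,1,1]),
    ])
    # add a batch dimension: (1, 3, image_size, image_size)
    img = transform(img).view((-1,3,image_size,image_size))
    return img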

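# image post process
def image_postprocess(tensor):
    # undo the mean subtraction applied in image_preprocess
    transform = transforms.Normalize(mean=[-0.40760392, -0.45795686, -0.48501961],
                                     std=[1,1,1])
    img = transform(tensor.clone())
    # clamp to [0, 1], the range save_image expects by default
    img[img>1] = 1
    img[img<0] = 0
    return img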

# show image given a tensor as input
def imshow(tensor):
    image = tensor.clone().cpu()
    image = image.view(3, image_size, image_size)
    image = transforms.ToPILImage()(image)
    plt.imshow(image)
    plt.show()

# gram matrix
class GramMatrix(nn.Module):
    def forward(self, input):
        b,c,h,w = input.size()
        # flatten the spatial dimensions: (b, c, h*w)
        F = input.view(b, c, h*w)
        # channel-by-channel inner products: (b, c, c)
        G = torch.bmm(F, F.transpose(1,2))
        return G

# gram matrix mean squared error
class GramMSELoss(nn.Module):
    def forward(self, input, target):
        out = nn.MSELoss()(GramMatrix()(input), target)
        return(out)

# initialize resnet and put on gpu

# model is not updated so .requires_grad = False

resnet = Resnet().cuda()

for param in resnet.parameters():
    param.requires_grad = False

# get content and style image & image to be generated

content = Variable(image_preprocess(content_dir), requires_grad=False).cuda()

style = Variable(image_preprocess(style_dir), requires_grad=False).cuda()

generated = Variable(content.data.clone(),requires_grad=True)

v_utils.save_image(image_postprocess(image_preprocess(content_dir)),"./content.png")

v_utils.save_image(image_postprocess(image_preprocess(style_dir)),"./style.png")

# set targets and style weights

style_target = list(GramMatrix().cuda()(i) for i in resnet(style))

content_target = resnet(content)[content_layer_num]

style_weight = [1/n**2 for n in [64,64,256,512,1024,2048]]

# set LBFGS optimizer

# change the image through training

optimizer = optim.LBFGS([generated])

iteration = [0]

while iteration[0] < epoch:
    def closure():
        optimizer.zero_grad()
        out = resnet(generated)
        style_loss = [GramMSELoss().cuda()(out[i],style_target[i])*style_weight[i] for i in range(len(style_target))]
        content_loss = nn.MSELoss().cuda()(out[content_layer_num],content_target)
        # content_loss is a single scalar tensor, so it is added directly
        total_loss = 1000 * sum(style_loss) + content_loss
        total_loss.backward()
        if iteration[0] % 100 == 0:
            print(total_loss.item())
            v_utils.save_image(image_postprocess(generated.data),"./gen_{}.png".format(iteration[0]))
        iteration[0] += 1
        return total_loss
    optimizer.step(closure)

***Summary of ch. 8 of 파이토치 첫걸음 (by 최건호)