1) Deep CNNs are limited when only minimal training data is available; the paper raises this as the issue.

New 3-phase model for decision support of CNN transfer learning (learning mechanism), data augmentation (data pre-processing), and hyperparameter optimization (learning mechanism).

1) Data augmentation

-

AlexNet, VGG16, CNN, and MLP models (check which ones were actually used)

2) Optimal combination of parameters (HPO) -

A random-based search strategy

Introduction

1) For large-scale datasets such as ImageNet: dropout, deep CNN

-

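Drawbacks of CNNs

(1) Weight parameter learning requires labeled training samples. - When the weight values move from the current state to the next step, Markov-style, the last output changes depending on the criterion used: either how the state value of each weight is transformed, or whether it is handled against a threshold. This is usually treated as a strength of CNNs, and the paper seems to simply take it for granted.

(2) The paper counts it as a drawback that a GPU is taken for granted in the learning process. However, in the current AI market, models keep evolving toward CNNs and SNNs despite the resource cost of CNNs compared with DNNs, precisely because they deliver high prediction accuracy and strong results on embedded systems. Treating this as a drawback seems a bit odd to me.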

Medical data -> high accuracy. The challenge lies in algorithms for image-based medical decisions combined with AI methods.

HPO (Hyperparameter Optimization) - Extract features from processed images automatically

1) Optimal classification results of hyperparameters for different models: AlexNet, VGG16, CNN, and multilayer perceptron (MLP).

2) Data augmentation technique to enrich the training datasets

3) MPII human pose dataset - When the weight values move from the current state to the next step, Markov-style, the last output changes depending on the criterion used: either how the state value of each weight is transformed, or whether it is handled against a threshold. This is usually treated as a strength of CNNs, and the paper seems to simply take it for granted.

MPII human pose dataset

25,000 images from online videos

Data-set split (75%, 15%, 10%)
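The split can be sketched as follows. This is only an illustration: the notes give the percentages but not what each part is used for, so the train / validation / test naming is an assumption, and `split_indices` is a hypothetical helper rather than anything from the paper.

```python
import random

def split_indices(n_samples, percents=(75, 15, 10), seed=0):
    """Shuffle sample indices and cut them into three consecutive parts.

    Assumption: the three parts are used as train / validation / test.
    Integer arithmetic is used so the part sizes are exact.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_first = n_samples * percents[0] // 100
    n_second = n_samples * percents[1] // 100
    first = indices[:n_first]
    second = indices[n_first:n_first + n_second]
    third = indices[n_first + n_second:]  # whatever remains
    return first, second, third

# 25,000 images, as stated for the MPII human pose dataset above
train_idx, val_idx, test_idx = split_indices(25_000)
print(len(train_idx), len(val_idx), len(test_idx))  # 18750 3750 2500
```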

1) The data is cycled through in mini-batches, one mini-batch per optimization iteration (the mini-batch size is determined by the batch size)
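A minimal sketch of this mini-batch cycling, assuming the samples are reshuffled once per pass and the last batch may be smaller. `iterate_minibatches` is a hypothetical name, and the integer "samples" stand in for images.

```python
import random

def iterate_minibatches(samples, batch_size, seed=0):
    """Yield the samples in shuffled mini-batches of at most batch_size."""
    order = list(range(len(samples)))
    random.Random(seed).shuffle(order)
    for start in range(0, len(order), batch_size):
        yield [samples[i] for i in order[start:start + batch_size]]

data = list(range(10))  # stand-in for 10 training images
batches = list(iterate_minibatches(data, batch_size=4))

# Each batch would feed one optimization iteration.
for step, batch in enumerate(batches):
    print(step, len(batch))
```

With 10 samples and a batch size of 4, one pass over the data takes 3 iterations, so the batch size directly sets the number of optimization steps per pass.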

Model

-

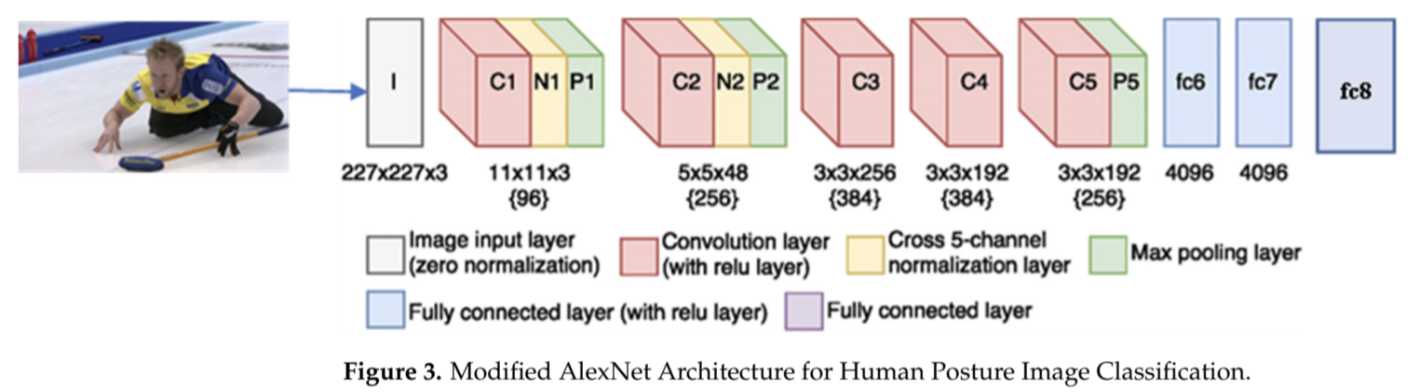

AlexNet

- Input layer, convolutional layer, pooling layer, fully connected layer.

-

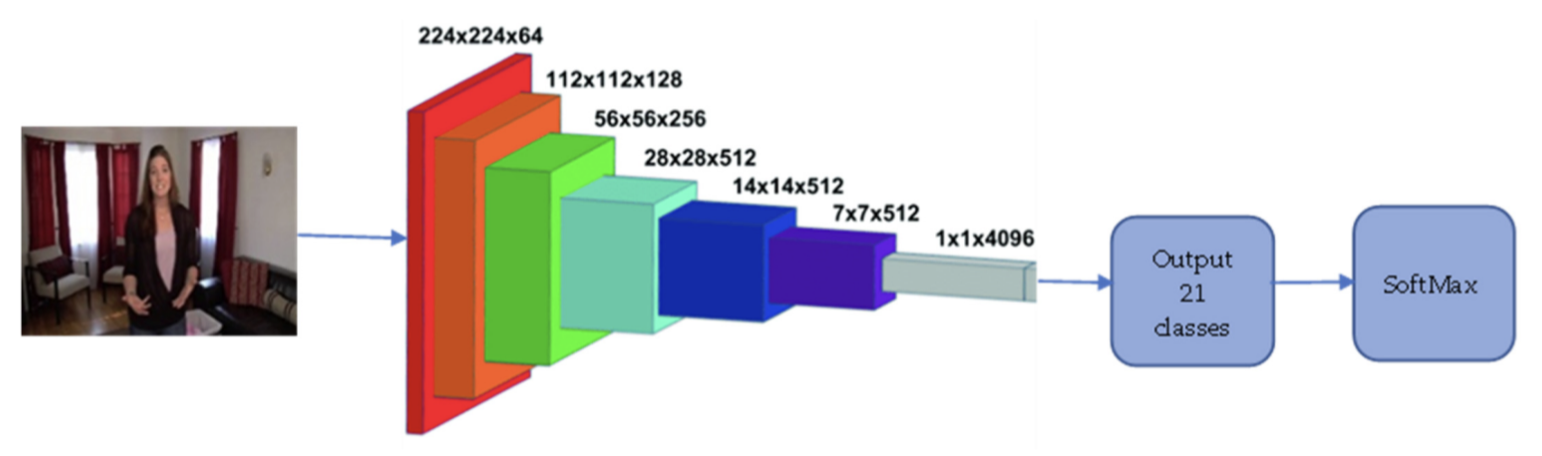

VGG16

- 16 layers (13 convolutional layers + 3 fully connected layers)
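The layer count can be checked against the commonly published VGG16 configuration. The channel widths and the `"M"` max-pooling markers below come from that standard configuration, not from these notes, and the fully connected sizes assume the original 1000-class ImageNet head.

```python
# Standard VGG16 layout: numbers are conv output channels, "M" is max pooling.
VGG16_CONV_PLAN = [
    64, 64, "M",
    128, 128, "M",
    256, 256, 256, "M",
    512, 512, 512, "M",
    512, 512, 512, "M",
]
# Assumption: original ImageNet classifier head.
VGG16_FC_PLAN = [4096, 4096, 1000]

n_conv = sum(1 for item in VGG16_CONV_PLAN if item != "M")
n_pool = VGG16_CONV_PLAN.count("M")
n_fc = len(VGG16_FC_PLAN)

# Only layers with weights are counted in the "16".
n_weight_layers = n_conv + n_fc
print(n_conv, n_fc, n_weight_layers)  # 13 3 16
```

The pooling layers carry no weights, which is why they do not appear in the count of 16.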

System Architecture

1) RandomSearch hyper-parameter optimization

2) Data augmentation techniques

- Rotation

- Translation

- Zoom

- Flips

- Shear

- Mirror

- Color Perturbation
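A few of the listed transforms can be sketched on a tiny grayscale image stored as a list of rows. This is a simplified illustration, not the paper's pipeline: rotation is restricted to 90-degree steps, color perturbation is reduced to a brightness shift, and zoom and shear are left out because they need interpolation. All function names are hypothetical.

```python
def mirror(img):
    """Horizontal flip (mirror): reverse each row."""
    return [row[::-1] for row in img]

def vertical_flip(img):
    """Vertical flip: reverse the order of the rows."""
    return [row[:] for row in img[::-1]]

def rotate90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]

def translate(img, dx, fill=0):
    """Shift the image dx pixels to the right (left if dx < 0), padding with fill."""
    width = len(img[0])
    dx = max(-width, min(width, dx))
    if dx >= 0:
        return [[fill] * dx + row[:width - dx] for row in img]
    return [row[-dx:] + [fill] * (-dx) for row in img]

def perturb_brightness(img, delta):
    """Add delta to every pixel, clamped to the 0..255 range."""
    return [[max(0, min(255, p + delta)) for p in row] for row in img]

image = [[10, 20, 30],
         [40, 50, 60]]

augmented = [
    mirror(image),
    vertical_flip(image),
    rotate90(image),
    translate(image, 1),
    perturb_brightness(image, 200),
]
```

Each transform returns a new image and leaves the input untouched, so one source image can produce several training samples.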

The paper explains that hyper-parameter tuning itself has a large impact on training and performance.

HPO (Hyper-parameter optimization)

Critical distinction

The key impact features in network

initial learning rate, learning rate decay factor, number of hidden neurons, regularization strength.
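A minimal sketch of a random search over these four hyper-parameters. The search ranges, the log-scale sampling, and `toy_score` are all assumptions made for illustration; in a real run `toy_score` would be replaced by training a model and returning its validation accuracy.

```python
import math
import random

# Hypothetical search ranges; the notes do not give the paper's actual ranges.
SEARCH_SPACE = {
    "initial_lr": ("log", 1e-4, 1e-1),
    "lr_decay": ("uniform", 0.5, 0.99),
    "hidden_neurons": ("int", 32, 512),
    "reg_strength": ("log", 1e-6, 1e-2),
}

def sample_config(rng):
    """Draw one random configuration from SEARCH_SPACE."""
    config = {}
    for name, (kind, low, high) in SEARCH_SPACE.items():
        if kind == "log":
            config[name] = 10 ** rng.uniform(math.log10(low), math.log10(high))
        elif kind == "uniform":
            config[name] = rng.uniform(low, high)
        else:
            config[name] = rng.randint(low, high)
    return config

def toy_score(config):
    """Stand-in for validation accuracy: peaks at lr=1e-2, reg=1e-4."""
    return -(abs(math.log10(config["initial_lr"]) + 2)
             + abs(math.log10(config["reg_strength"]) + 4))

def random_search(score_fn, n_trials=50, seed=0):
    """Evaluate n_trials random configurations and keep the best one."""
    rng = random.Random(seed)
    best_config, best_score = None, -math.inf
    for _ in range(n_trials):
        config = sample_config(rng)
        score = score_fn(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score

best_config, best_score = random_search(toy_score)
print(best_config, best_score)
```

Sampling the learning rate and regularization strength on a log scale is a common choice because their useful values span several orders of magnitude.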

Model (MLP + HPO)

reduce the execution time

(AlexNet model & VGG16 model): HPO increases training accuracy and testing accuracy far more effectively than IDA.