AWS Solutions Architect Associate

To prepare for the AWS SAA-C03 exam I'm taking on February 23, I took the course

Ultimate AWS Certified Solutions Architect Associate SAA-C03

and this post summarizes the practice questions I worked through.

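I'm already familiar with the basic AWS services and have used them in projects, so I gave myself a tight prep window of about 7 days. Unexpectedly, concepts like IAM, VPC, and Kinesis turned out to be very new and complex, so passing looks like it will be quite a challenge.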

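My bootcamp ended on February 20 and I wanted to rest a bit, but to avoid slacking off I boldly registered for the exam. Now I'm studying with tears of joy at the fact that I get to study all day long.😢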

Questions and Explanations

Summarized briefly around keywords so they're easy to understand.

1. Big data, performance first, distributed processing

Use a cluster placement group

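EC2 placement group options are

- cluster : instances packed close together in one AZ for low-latency, high-throughput networking, for HPC

- spread : each instance on distinct hardware (racks), resilient to failure

- partition : instances spread across logical partitions that don't share racks, for Hadoop, Kafka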
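The sketch below shows how the three strategies map onto an actual request. It only builds keyword arguments shaped like boto3's `ec2.create_placement_group()` and makes no call to AWS. The workload labels and the group names are hypothetical. `Strategy` and `PartitionCount` are the real request parameters.

```python
# Map a (hypothetical) workload label to an EC2 placement group strategy
STRATEGY_BY_WORKLOAD = {
    "hpc": "cluster",       # low latency, high throughput, single AZ
    "critical": "spread",   # each instance on distinct hardware
    "hadoop": "partition",  # instances grouped into rack-isolated partitions
    "kafka": "partition",
}

def placement_group_request(name: str, workload: str, partitions: int = 3) -> dict:
    """Return kwargs for create_placement_group(); no AWS call is made."""
    strategy = STRATEGY_BY_WORKLOAD[workload]
    request = {"GroupName": name, "Strategy": strategy}
    if strategy == "partition":
        # PartitionCount only applies to the partition strategy (up to 7 per AZ)
        request["PartitionCount"] = partitions
    return request

print(placement_group_request("bigdata-pg", "hpc"))
# {'GroupName': 'bigdata-pg', 'Strategy': 'cluster'}
```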

2. EC2, ASG, Aurora, need to handle periodic spikes of requests

Use CloudFront in front of the ALB, and use Aurora Read Replicas

Wrong answers

- Global Accelerator : suited to non-HTTP use cases such as UDP, MQTT, VoIP. It doesn't cache content, so it doesn't absorb request spikes

- AWS Direct Connect : dedicated connection between on-premises and a VPC. It sets up a private network connection that bypasses the internet and doesn't help with request spikes

3. Complex linked queries for social media: which DB?

Neptune, a graph DB

Amazon Neptune details

- fully managed, high performance, HA with read replicas

- continuous backup to S3, HTTPS (encryption in transit), millisecond latency

Wrong answers

- Elasticsearch (Amazon OpenSearch Service) : managed search and log analytics engine, not built for graph traversals

- Redshift : petabyte-scale data warehouse

4. Financial company: monitor ACM certificate expiration with alerts

Use an AWS Config managed rule to flag certificates nearing expiration, and trigger an SNS notification

Wrong answers

- Using a CloudWatch metric (ACM's DaysToExpiry) is possible, but not the most effortless option
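Here is a minimal sketch of the Config side, assuming boto3's `config.put_config_rule()`. The managed rule identifier is `ACM_CERTIFICATE_EXPIRATION_CHECK`, and its `daysToExpiration` parameter defaults to 14. An EventBridge rule that matches non-compliant results can then target the SNS topic. The exact event pattern below is my assumption of the usual shape, so double-check it against the Config event docs.

```python
import json

def acm_expiration_rule(days_to_expiration: int = 30) -> dict:
    """ConfigRule argument for put_config_rule(); builds the request only."""
    return {
        "ConfigRuleName": "acm-certificate-expiration-check",
        "Source": {
            "Owner": "AWS",  # AWS managed rule, no custom Lambda needed
            "SourceIdentifier": "ACM_CERTIFICATE_EXPIRATION_CHECK",
        },
        "Scope": {"ComplianceResourceTypes": ["AWS::ACM::Certificate"]},
        # InputParameters is passed as a JSON string
        "InputParameters": json.dumps({"daysToExpiration": str(days_to_expiration)}),
    }

# EventBridge pattern (assumed shape) whose target would be the SNS topic
NONCOMPLIANT_PATTERN = {
    "source": ["aws.config"],
    "detail-type": ["Config Rules Compliance Change"],
    "detail": {
        "configRuleName": ["acm-certificate-expiration-check"],
        "newEvaluationResult": {"complianceType": ["NON_COMPLIANT"]},
    },
}
```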

5. Protect sensitive data stored on S3

GuardDuty monitors for malicious activity on the data by analyzing the streams of metadata (logs) generated by related activity

Macie uses ML to discover and protect sensitive data in S3

6. Migrate an on-premises DB with a complex configuration to AWS

AWS Database Migration Service (DMS) supports migrations between heterogeneous DBs

AWS Schema Conversion Tool (SCT) converts the complex parts of the schema, such as secondary indexes, foreign keys, and stored procedures, which DMS alone doesn't migrate

Wrong answers

- Basic Schema Copy : doesn't migrate secondary indexes, foreign keys, or stored procedures.

- Snowball Edge : for transferring dozens of terabytes to petabytes of data into AWS. The Storage Optimized device provides up to 80 TB of usable HDD storage, with a maximum of 10 devices.

7. Best security practice as an IAM admin

Use a permissions boundary to control the maximum permissions employees can grant to IAM principals

Wrong Answers

- Remove access for all IAM users in the organization : Not practical
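A toy model helps show why a boundary caps permissions. An action is allowed only if both the identity policy and the permissions boundary allow it, so the effective permissions are the intersection of the two. This is a deliberate simplification: it uses exact action names only and ignores wildcards, conditions, and explicit Deny statements, all of which real IAM evaluates. The action lists are hypothetical.

```python
# Simplified model (assumption): exact action names, Allow statements only
def effective_actions(identity_policy: set[str], boundary: set[str]) -> set[str]:
    """An action is allowed only if BOTH the identity policy and the boundary allow it."""
    return identity_policy & boundary

boundary = {"s3:GetObject", "s3:PutObject", "dynamodb:Query"}
granted = {"s3:GetObject", "iam:CreateUser", "ec2:TerminateInstances"}

print(sorted(effective_actions(granted, boundary)))  # ['s3:GetObject']
```

Even if an employee attaches an overly broad policy to a new role, anything outside the boundary stays blocked.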

8. HA in-memory database

ElastiCache for Redis (supports replication and Multi-AZ failover; Memcached has no replication)

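9. Connecting multiple sites to each other over Site-to-Site VPN, with the HQ on an AWS VPC

VPN CloudHub : hub-and-spoke model in which the VPC's virtual private gateway relays traffic between the Site-to-Site VPN connections

Wrong Answers

- VPC Peering : networking connection between two VPCs within AWS, not for on-premises sites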

10. Cost Optimization for EC2, RDS, S3

Use AWS Cost Explorer Resource Optimization to get a report of EC2 instances that are either idle or have low utilization and use AWS Compute Optimizer to look at instance type recommendations

11. EC2 user data spec

- By default, user data runs only during the boot cycle when you first launch an instance

- By default, scripts entered as user data are executed with root user privileges

Wrong answers

- User data is executed every time the instance restarts : false, by default it runs only on the first boot

12. Amazon GuardDuty targets

VPC Flow Logs, DNS logs, CloudTrail events

13. How to set up an IPsec VPN with on-premises

Create a Virtual Private Gateway on the AWS side of the VPN and a Customer Gateway on the on-premises side of the VPN

You must set up the appropriate gateway on both the on-premises side and the AWS side

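14. EBS volume encryption scope

When an EBS volume is encrypted, the following are all encrypted:

- data at rest inside the volume

- data in flight between the volume and the instance

- snapshots created from the volume

- volumes created from those snapshots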

15. Migrating 5 PB of on-premises data to AWS

Transfer the on-premises data into multiple Snowball Edge Storage Optimized devices. Copy the Snowball Edge data into Amazon S3 and create a lifecycle policy to transition the data into Amazon S3 Glacier

Wrong Answers

- You can't copy Snowball data directly into S3 Glacier.
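The lifecycle policy from the answer could look like the sketch below. It is the `LifecycleConfiguration` argument of boto3's `s3.put_bucket_lifecycle_configuration()`, and it only builds the dict. The rule ID and the 1-day delay are my own assumptions. `GLACIER` is the storage class name for S3 Glacier Flexible Retrieval.

```python
def glacier_lifecycle(days_after_upload: int = 1) -> dict:
    """LifecycleConfiguration for put_bucket_lifecycle_configuration()."""
    return {
        "Rules": [
            {
                "ID": "archive-imported-data",  # hypothetical rule name
                "Filter": {"Prefix": ""},       # empty prefix: every object in the bucket
                "Status": "Enabled",
                "Transitions": [
                    # move objects to S3 Glacier N days after they land in S3
                    {"Days": days_after_upload, "StorageClass": "GLACIER"},
                ],
            }
        ]
    }

print(glacier_lifecycle()["Rules"][0]["Transitions"])
```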

16. Aurora read replicas

Set up a read replica and modify the application to use the appropriate (reader) endpoint

Wrong Answers

- Configure the application to read from the Multi-AZ standby instance : Aurora has no separate standby instance; its Aurora Replicas serve reads and also act as failover targets.

17. How to distribute static content cost-efficiently

Distribute the static content through Amazon S3

18. Easiest way to get notifications from a fleet of EC2 metrics

SNS, CloudWatch

You can use CloudWatch Alarms to send an email via SNS whenever any of the EC2 instance metrics crosses a threshold

Wrong Answers

- Lambda : Lambda can't monitor metrics on its own, and SNS can send the email itself, so Lambda only adds work
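A sketch of the "one alarm per instance, SNS as the action" setup follows. It builds `put_metric_alarm()` kwargs for boto3's CloudWatch client and makes no call to AWS. The instance IDs, topic ARN, and the 80% CPU threshold are hypothetical.

```python
def cpu_alarm_requests(instance_ids, topic_arn, threshold=80.0):
    """One put_metric_alarm() kwargs dict per instance; the alarm publishes to SNS."""
    return [
        {
            "AlarmName": f"high-cpu-{iid}",
            "Namespace": "AWS/EC2",
            "MetricName": "CPUUtilization",
            "Dimensions": [{"Name": "InstanceId", "Value": iid}],
            "Statistic": "Average",
            "Period": 300,
            "EvaluationPeriods": 1,
            "Threshold": threshold,
            "ComparisonOperator": "GreaterThanThreshold",
            "AlarmActions": [topic_arn],  # SNS sends the email, no Lambda needed
        }
        for iid in instance_ids
    ]

fleet = ["i-0aaa1111", "i-0bbb2222"]  # hypothetical instance IDs
topic = "arn:aws:sns:us-east-1:111122223333:ops-alerts"  # hypothetical topic
for req in cpu_alarm_requests(fleet, topic):
    print(req["AlarmName"])
```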

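19. EC2 option for migrating an on-premises application with server-bound licenses

Use EC2 Dedicated Hosts

Wrong Answers:

- Use EC2 Dedicated Instances : may share hardware with other non-dedicated instances from the same AWS account, and don't give visibility into the physical sockets and cores that server-bound licenses need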

20. Proper NLB routing

When targets are registered by instance ID, the NLB routes traffic to instances using the primary private IP address specified in the primary network interface for the instance

21. Best way to transfer on-premises NFS files to EFS

Configure an AWS DataSync agent on the on-premises server that has access to the NFS file system. Transfer data over the Direct Connect connection to an AWS PrivateLink interface VPC endpoint for Amazon EFS by using a private VIF. Set up a DataSync scheduled task to send the video files to the EFS file system every 24 hours

A private VIF over Direct Connect gives the on-premises side a private path into the VPC, so the data reaches EFS without crossing the public internet.

You can use AWS DataSync to migrate data located on-premises, at the edge, or in other clouds to Amazon S3, Amazon EFS, Amazon FSx for Windows File Server, Amazon FSx for Lustre, Amazon FSx for OpenZFS, and Amazon FSx for NetApp ONTAP.

Wrong Answers

- Use S3 and Lambda in between : possible, but a direct private path is available and more efficient.

22. Migrating an on-premises Oracle DB

Leverage a Multi-AZ configuration of RDS Custom for Oracle, which allows database administrators to access and customize the database environment and the underlying operating system

You must choose RDS Custom for OS-level access to the RDS instance

23. Migrating PBs of data between S3 buckets

- Copy data from the source bucket to the destination bucket using the aws s3 sync command

- Set up S3 Batch Replication to copy existing objects across S3 buckets in different Regions using the S3 console

Wrong Answers:

- Snowball Edge : for on-premises to AWS

- Copying through the S3 console : impractical for PBs of data

- S3 Transfer Acceleration : speeds up client-to-S3 transfers, not bucket-to-bucket copies

24. Big data company wants to throttle or buffer spiky request traffic

Amazon API Gateway, Amazon SQS and Amazon Kinesis

API Gateway - API Gateway sets a limit on a steady-state rate and a burst of request submissions against all APIs in your account.

SQS - SQS offers buffer capabilities to smooth out temporary volume spikes without losing messages or increasing latency.

Kinesis - Kinesis is a fully managed, scalable service that can ingest, buffer, and process streaming data in real-time.

Wrong Answers:

- VPC Gateway Endpoints : cannot help with throttling or buffering requests.

- AWS Lambda : if a function is at maximum concurrency, Lambda throttles further requests with a 429 status code instead of buffering them
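AWS documents that API Gateway throttles with the token bucket algorithm, using a steady-state rate plus a burst. The single-bucket model below is simplified, and the rate and burst numbers are hypothetical. Tokens refill at `rate` per second up to `burst`, each request spends one token, and a request with no token left is rejected (API Gateway would return 429).

```python
class TokenBucket:
    """Steady-state `rate` tokens/second refill, holding at most `burst` tokens."""

    def __init__(self, rate: float, burst: int):
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)  # start full, so an initial burst passes
        self.last = 0.0

    def allow(self, now: float) -> bool:
        # refill for the elapsed time, capped at the burst capacity
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # throttled: API Gateway would answer 429 Too Many Requests

bucket = TokenBucket(rate=2, burst=3)
print([bucket.allow(0.0) for _ in range(5)])  # [True, True, True, False, False]
print(bucket.allow(1.0))  # True, one second refilled 2 tokens
```

SQS and Kinesis take the other approach to the same spike: instead of rejecting the excess, they buffer it for consumers to drain later.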

25. How to interconnect multiple VPCs

Use a transit gateway to interconnect the VPCs

A transit gateway is a network transit hub that you can use to interconnect your virtual private clouds (VPC) and on-premises networks.

Wrong Answers:

- VPC peering connections between all VPCs : possible, but peering isn't transitive, so every pair needs its own connection and it doesn't scale

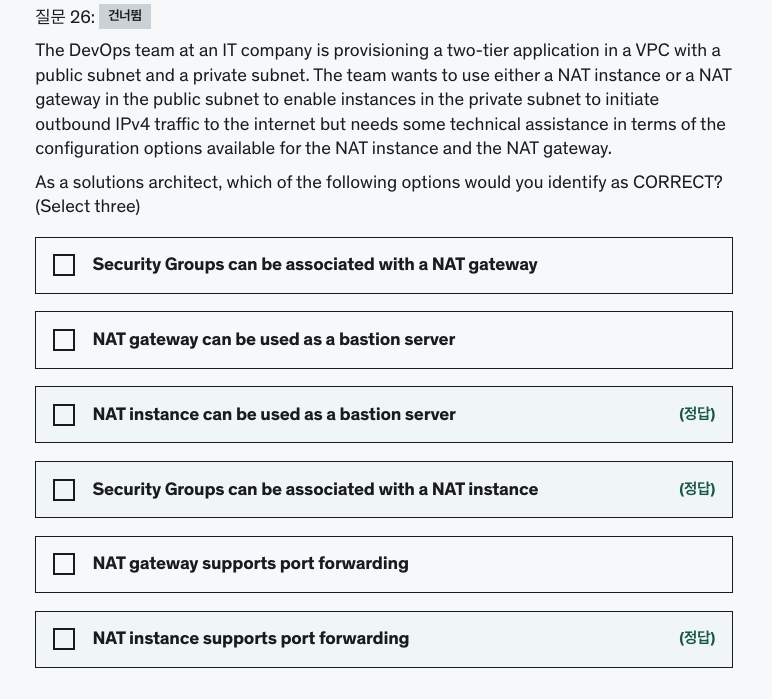
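The scaling problem is simple arithmetic. Because peering isn't transitive, n VPCs need a full mesh of n(n-1)/2 peering connections. A transit gateway needs only one attachment per VPC. This sketch counts only connections and ignores route table entries.

```python
def peering_connections(vpcs: int) -> int:
    # full mesh: every pair of VPCs needs its own peering connection
    return vpcs * (vpcs - 1) // 2

def tgw_attachments(vpcs: int) -> int:
    # hub and spoke: each VPC attaches once to the transit gateway
    return vpcs

for n in (3, 10, 50):
    print(n, peering_connections(n), tgw_attachments(n))
# 3 3 3
# 10 45 10
# 50 1225 50
```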

26. To be revisited after the networking lecture

27. How to enhance DynamoDB and S3 read performance

Enable DynamoDB Accelerator (DAX) for DynamoDB and CloudFront for S3

easy cheesy

28. ECS with EC2 and Fargate Pricings

ECS with EC2 launch type is charged based on EC2 instances and EBS volumes used. ECS with Fargate launch type is charged based on vCPU and memory resources that the containerized application requests

easy cheesy2

29. How to access SQS without going over the public internet

Use a VPC (interface) endpoint to access Amazon SQS

Wrong Answers:

- Use a Network Address Translation (NAT) instance to access Amazon SQS : NAT instances give instances in a private subnet internet access, so the traffic still goes over the public internet.

30. Protection against accidental deletion on S3

- Enable versioning on the bucket

- Enable MFA delete on the bucket

31. Migrating from an SQS standard queue to SQS FIFO

- Delete the existing standard queue and recreate it as a FIFO queue

- Make sure that the name of the FIFO queue ends with the .fifo suffix

- Make sure that the throughput for the target FIFO queue does not exceed 3,000 messages per second (with batching)

You can't convert an existing standard queue into a FIFO queue
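Recreating the queue as FIFO could look like this sketch. It builds kwargs for boto3's `sqs.create_queue()`. SQS queue names allow up to 80 alphanumeric, hyphen, or underscore characters, and the `.fifo` suffix counts toward the 80. `FifoQueue` must be set at creation time. The queue name is hypothetical, and enabling `ContentBasedDeduplication` is an optional choice on my part.

```python
import re

# up to 75 name characters + ".fifo" = 80 characters total
_FIFO_NAME = re.compile(r"[A-Za-z0-9_-]{1,75}\.fifo")

def fifo_queue_request(name: str) -> dict:
    """kwargs for create_queue(); an existing standard queue can't be converted."""
    if not _FIFO_NAME.fullmatch(name):
        raise ValueError(f"invalid FIFO queue name: {name!r}")
    return {
        "QueueName": name,
        "Attributes": {
            "FifoQueue": "true",                  # only settable when creating the queue
            "ContentBasedDeduplication": "true",  # optional: dedupe on a hash of the body
        },
    }

print(fifo_queue_request("orders.fifo")["QueueName"])  # orders.fifo
```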

32. How to reboot EC2 when a CloudWatch alarm is triggered

Set up a CloudWatch alarm to monitor the health status of the instance. In case of an Instance Health Check failure, an EC2 Reboot CloudWatch Alarm Action can be used to reboot the instance

A CloudWatch alarm action can reboot the EC2 instance directly
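The reboot action is a built-in alarm action with an ARN of the form `arn:aws:automate:<region>:ec2:reboot`. The sketch builds `put_metric_alarm()` kwargs on the `StatusCheckFailed_Instance` metric and makes no AWS call. The instance ID, region, and the 3 x 1-minute evaluation window are hypothetical choices.

```python
def reboot_on_failure_alarm(instance_id: str, region: str) -> dict:
    """put_metric_alarm() kwargs that reboot the instance on a failed instance status check."""
    return {
        "AlarmName": f"reboot-{instance_id}",
        "Namespace": "AWS/EC2",
        "MetricName": "StatusCheckFailed_Instance",  # 1 when the instance check fails
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Maximum",
        "Period": 60,
        "EvaluationPeriods": 3,
        "Threshold": 0.0,
        "ComparisonOperator": "GreaterThanThreshold",
        # built-in EC2 reboot action, no Lambda required
        "AlarmActions": [f"arn:aws:automate:{region}:ec2:reboot"],
    }

print(reboot_on_failure_alarm("i-0abc1234", "us-east-1")["AlarmActions"])
```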

33. How to delegate access to a set of users from another AWS account

Create a new IAM role with the required permissions to access the resources in the production environment. The users can then assume this IAM role while accessing the resources from the production environment

Wrong Answers

- Create new IAM user credentials for the production environment and share them : using an IAM role is simpler and avoids sharing long-term credentials
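The key part of the role approach is the trust policy on the production role, which says who may assume it. Here is a minimal sketch that assumes the other account's users are trusted through that account's root principal. The account ID is a placeholder. The users also need an identity policy in their own account allowing `sts:AssumeRole` on the role, and they then call STS `AssumeRole` with the role ARN.

```python
import json

def cross_account_trust_policy(trusted_account_id: str) -> str:
    """Trust policy for the production role, letting another account's principals assume it."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{trusted_account_id}:root"},
                "Action": "sts:AssumeRole",
            }
        ],
    }
    return json.dumps(policy)

print(cross_account_trust_policy("111122223333"))  # placeholder account ID
```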

34. Routing traffic for blue/green test

Use AWS Global Accelerator to distribute a portion of traffic to a particular deployment. It is a network-layer service that uses static anycast IP addresses

With AWS Global Accelerator, you can shift traffic gradually or all at once between the blue and the green environment and vice versa, without being subject to DNS caching on client devices and internet resolvers. Traffic dial and endpoint weight changes take effect within seconds.

DNS-based routing works for ordinary use cases, but DNS caching delays how quickly a routing change takes effect.
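Within an endpoint group, Global Accelerator sends each endpoint a share of traffic proportional to its weight (0 to 255) divided by the sum of the weights. The sketch models only that proportion; the traffic dial, which scales a whole endpoint group's share, is left out. The endpoint names and weights are hypothetical.

```python
def traffic_share(weights: dict[str, int]) -> dict[str, float]:
    """Fraction of an endpoint group's traffic that each endpoint receives."""
    for name, weight in weights.items():
        if not 0 <= weight <= 255:
            raise ValueError(f"{name}: endpoint weight must be between 0 and 255")
    total = sum(weights.values())
    if total == 0:
        raise ValueError("at least one endpoint needs a non-zero weight")
    return {name: weight / total for name, weight in weights.items()}

# canary step: roughly 10% of the group's traffic goes to green
print(traffic_share({"blue": 225, "green": 25}))
# full cutover: all traffic goes to green
print(traffic_share({"blue": 0, "green": 255}))
```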

35. How to improve S3 upload performance

- S3 Transfer Acceleration

- multipart uploads

Wrong Answers

- AWS Direct Connect : can take months to provision, overkill
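For multipart uploads, the part size has to respect S3's limits. Every part except the last must be at least 5 MiB, a part can be at most 5 GiB, an upload can have at most 10,000 parts, and an object can be at most 5 TiB. This sketch picks a part size under those limits. The 100 MiB preferred size is an arbitrary assumption.

```python
MiB = 1024 ** 2
MIN_PART = 5 * MiB            # minimum size for every part except the last
MAX_PART = 5 * 1024 * MiB     # 5 GiB per part
MAX_PARTS = 10_000
MAX_OBJECT = 5 * 1024 ** 4    # 5 TiB per object

def plan_parts(object_size: int, preferred_part: int = 100 * MiB) -> tuple[int, int]:
    """Return (part_size, part_count) that fits S3 multipart upload limits."""
    if object_size > MAX_OBJECT:
        raise ValueError("object exceeds the 5 TiB S3 object limit")
    # grow the part size if the preferred size would need more than 10,000 parts
    part = max(preferred_part, MIN_PART, -(-object_size // MAX_PARTS))
    if part > MAX_PART:
        raise ValueError("part size exceeds 5 GiB")
    return part, -(-object_size // part)  # ceiling division for the part count

print(plan_parts(1024 * MiB))  # 1 GiB object: (104857600, 11)
```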

36. S3 read/write consistency

A process replaces an existing object and immediately tries to read it. Amazon S3 always returns the latest version of the object

Amazon S3 delivers strong read-after-write consistency automatically

37. Services that support Gateway Endpoint

- DynamoDB

- S3

There are two types of VPC endpoints: Interface Endpoints and Gateway Endpoints. An Interface Endpoint is an Elastic Network Interface with a private IP address from the IP address range of your subnet that serves as an entry point for traffic destined to a supported service.

A Gateway Endpoint is a gateway that you specify as a target for a route in your route table for traffic destined to a supported AWS service. The following AWS services are supported: Amazon S3 and DynamoDB.
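Creating a gateway endpoint means attaching it to route tables rather than to subnets. The sketch builds kwargs for boto3's `ec2.create_vpc_endpoint()` and makes no AWS call. The VPC ID, route table ID, and region are hypothetical.

```python
def gateway_endpoint_request(vpc_id, route_table_ids, region, service="s3"):
    """kwargs for create_vpc_endpoint() that create a Gateway endpoint."""
    if service not in {"s3", "dynamodb"}:
        raise ValueError("Gateway endpoints exist only for S3 and DynamoDB")
    return {
        "VpcEndpointType": "Gateway",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.{service}",
        # the endpoint becomes a route target in these route tables
        "RouteTableIds": list(route_table_ids),
    }

print(gateway_endpoint_request("vpc-0abc", ["rtb-0123"], "ap-northeast-2", "dynamodb"))
```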

38. Built-in user management service for API Gateway

Amazon Cognito User Pools

Cognito User Pools provide built-in user management or integrate with external identity providers, such as Facebook, Twitter, Google

Wrong Answer

- Cognito Identity Pools : authorize user access to AWS resources

39. Weather data, key-value pairs, scalability and HA

- DynamoDB : key-value, managed

- Lambda : serverless, managed, auto scalable

Wrong Answer

- Redshift : PB-scale data warehouse product designed for large-scale data set storage and analysis. You cannot use Redshift to capture data in key-value pairs

40. Organization-level VPC sharing

Use VPC sharing to share one or more subnets with other AWS accounts belonging to the same parent organization from AWS Organizations

The unit of VPC sharing is a subnet

Wrong Answer:

- VPC peering : peering only connects two VPCs

41. Cross-account access to AWS resources

Create an IAM role for the Lambda function that grants access to the S3 bucket. Set the IAM role as the Lambda function's execution role. Make sure that the bucket policy also grants access to the Lambda function's execution role

Make sure to set up permissions on both ends (the execution role and the bucket policy)

42. Choosing the right FSx for HPC

FSx for Lustre

Wrong Answers:

- Glue : Extract, Transform, Load (ETL) service for data analytics

- EMR : managed MapReduce (Hadoop/Spark), for big data processing

- FSx for Windows File Server : Windows-native file system

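43. Improving Kinesis Data Streams consumer performance

Use the Enhanced Fan-Out feature of Kinesis Data Streams

With enhanced fan-out, each consumer gets its own dedicated read throughput of 2 MB/s per shard, so multiple consumers can process data in parallel without competing

Kinesis Data Streams retains data for 24 hours by default, and retention can be extended up to 365 days

Wrong Answers:

- Kinesis Data Firehose : fully managed and auto-scaling, but it delivers to fixed destinations (S3, Redshift, Elasticsearch/OpenSearch, Splunk, HTTP endpoints) and doesn't speed up existing consumers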
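The fan-out benefit comes down to how the 2 MB/s per-shard read limit is split among consumers. Classic consumers share it. Each enhanced fan-out consumer gets its own 2 MB/s. This model is simplified and ignores the separate GetRecords call-rate limit on shared consumers.

```python
SHARD_READ_MB_PER_S = 2.0  # read throughput per shard

def read_mb_per_s_per_consumer(consumers: int, enhanced_fan_out: bool) -> float:
    """Per-shard read throughput available to each consumer application."""
    if enhanced_fan_out:
        # every registered consumer gets a dedicated 2 MB/s pipe per shard
        return SHARD_READ_MB_PER_S
    # classic (shared) consumers split the same 2 MB/s per shard
    return SHARD_READ_MB_PER_S / consumers

for n in (1, 5):
    print(n, read_mb_per_s_per_consumer(n, False), read_mb_per_s_per_consumer(n, True))
# 1 2.0 2.0
# 5 0.4 2.0
```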

44. A VPC concept I don't know well yet; to be revisited after studying

45. ElastiCache for Redis authentication

Use Redis AUTH so that clients must present a token (password) before running commands

46. S3 storage classes and choice

S3 One Zone-IA is for data that is accessed less frequently but requires rapid access when needed

For re-creatable data, One Zone-IA is a cost-efficient choice, since it stores data in a single AZ

47. Best practice for granting access rights to EC2

Attach the appropriate IAM role to the EC2 instance profile so that the instance can access S3 and DynamoDB

Storing access keys on the EC2 instance itself is not recommended, even if they are encrypted

48. How to improve Aurora read performance across Regions when read replicas are already set up

Use Amazon Aurora Global Database to enable fast local reads with low latency in each region

For DynamoDB, Global Tables do the same job.

49. AWS Shield Advanced and consolidated billing

Consolidated billing has not been enabled. All the AWS accounts should fall under a single consolidated billing family so that the monthly fee is charged only once

Wrong Answer:

- Savings Plans : a flexible pricing model for EC2, Lambda, and Fargate usage, unrelated to the Shield Advanced fee

50. Migrating on-premises DBs to Redshift

Use AWS Database Migration Service to replicate the data from the databases into Amazon Redshift

Wrong Answer

- Glue : batch ETL processing job. Possible, but it requires significant development effort to write custom migration scripts that copy the database data into Redshift.

- EMR : possible, but very hard to configure; overkill