scheduler cosine annealing

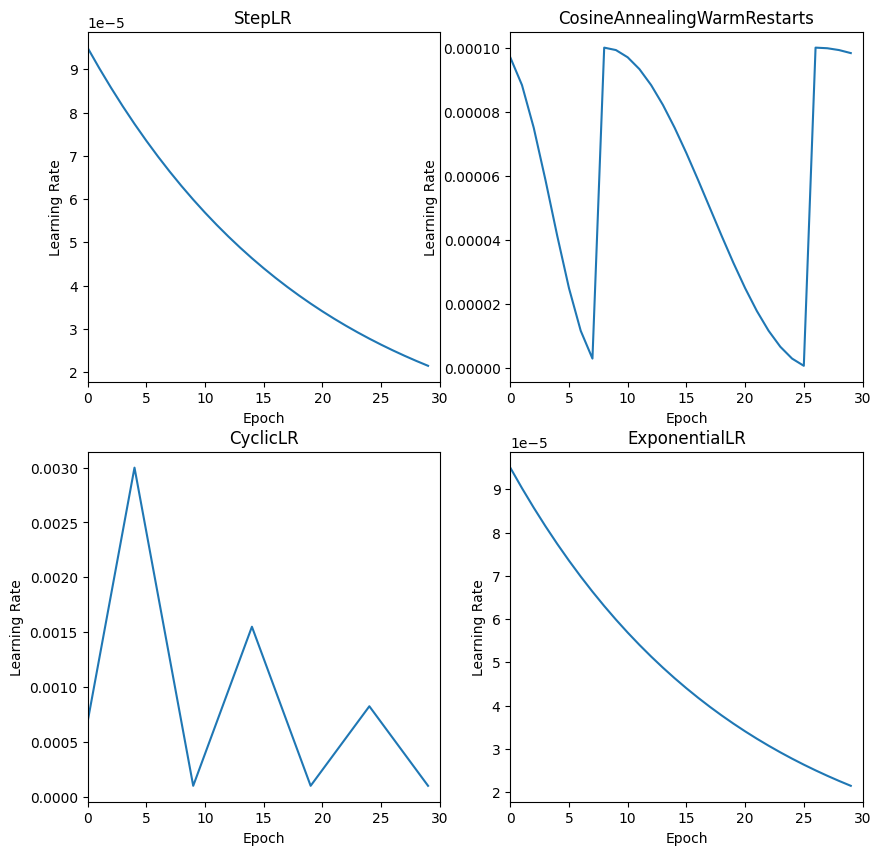

Training progresses very differently depending on which scheduler you use.

Let's look at the well-known cosine annealing warmup restarts scheduler.

https://gaussian37.github.io/dl-pytorch-lr_scheduler/

    import math

    from torch.optim.lr_scheduler import _LRScheduler


    class CosineAnnealingWarmUpRestarts(_LRScheduler):
        def __init__(self, optimizer, T_0, T_mult=1, eta_max=0.1, T_up=0, gamma=1., last_epoch=-1):
            if T_0 <= 0 or not isinstance(T_0, int):
                raise ValueError("Expected positive integer T_0, but got {}".format(T_0))
            if T_mult < 1 or not isinstance(T_mult, int):
                raise ValueError("Expected integer T_mult >= 1, but got {}".format(T_mult))
            if T_up < 0 or not isinstance(T_up, int):
                raise ValueError("Expected non-negative integer T_up, but got {}".format(T_up))
            self.T_0 = T_0                # length of the first cycle, in epochs
            self.T_mult = T_mult          # cycle-length multiplier applied at each restart
            self.base_eta_max = eta_max   # peak LR of the first cycle
            self.eta_max = eta_max        # peak LR of the current cycle
            self.T_up = T_up              # linear warmup epochs at the start of each cycle
            self.T_i = T_0                # length of the current cycle
            self.gamma = gamma            # decay applied to the peak LR after each restart
            self.cycle = 0
            self.T_cur = last_epoch       # epochs elapsed within the current cycle
            super(CosineAnnealingWarmUpRestarts, self).__init__(optimizer, last_epoch)

        def get_lr(self):
            if self.T_cur == -1:
                return self.base_lrs
            elif self.T_cur < self.T_up:
                # Linear warmup from base_lr up to eta_max
                return [(self.eta_max - base_lr) * self.T_cur / self.T_up + base_lr for base_lr in self.base_lrs]
            else:
                # Cosine decay from eta_max back down to base_lr
                return [base_lr + (self.eta_max - base_lr) * (1 + math.cos(math.pi * (self.T_cur - self.T_up) / (self.T_i - self.T_up))) / 2
                        for base_lr in self.base_lrs]

        def step(self, epoch=None):
            if epoch is None:
                epoch = self.last_epoch + 1
                self.T_cur = self.T_cur + 1
                if self.T_cur >= self.T_i:
                    # The current cycle is over: restart
                    self.cycle += 1
                    self.T_cur = self.T_cur - self.T_i
                    self.T_i = (self.T_i - self.T_up) * self.T_mult + self.T_up
            else:
                if epoch >= self.T_0:
                    if self.T_mult == 1:
                        self.T_cur = epoch % self.T_0
                        self.cycle = epoch // self.T_0
                    else:
                        n = int(math.log((epoch / self.T_0 * (self.T_mult - 1) + 1), self.T_mult))
                        self.cycle = n
                        self.T_cur = epoch - self.T_0 * (self.T_mult ** n - 1) / (self.T_mult - 1)
                        self.T_i = self.T_0 * self.T_mult ** (n)
                else:
                    self.T_i = self.T_0
                    self.T_cur = epoch

            # The peak LR shrinks by a factor of gamma with every completed cycle
            self.eta_max = self.base_eta_max * (self.gamma**self.cycle)
            self.last_epoch = math.floor(epoch)
            for param_group, lr in zip(self.optimizer.param_groups, self.get_lr()):
                param_group['lr'] = lr
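Written as a formula, `get_lr` in the class above appears to compute the piecewise schedule below. This is a reading of the code rather than a formula taken from the referenced post; the symbols $\eta_{\text{base}}$ (the optimizer's initial `lr`), $\eta_{\max}^{(c)}$ (the peak in cycle $c$) and $c$ (`self.cycle`) are notation introduced here for illustration.

```latex
\eta =
\begin{cases}
\eta_{\text{base}} + \left(\eta_{\max}^{(c)} - \eta_{\text{base}}\right)\dfrac{T_{\text{cur}}}{T_{\text{up}}},
  & T_{\text{cur}} < T_{\text{up}} \\[2ex]
\eta_{\text{base}} + \dfrac{\eta_{\max}^{(c)} - \eta_{\text{base}}}{2}
  \left(1 + \cos\left(\pi\,\dfrac{T_{\text{cur}} - T_{\text{up}}}{T_i - T_{\text{up}}}\right)\right),
  & T_{\text{cur}} \ge T_{\text{up}}
\end{cases}
\qquad
\eta_{\max}^{(c)} = \eta_{\max}\,\gamma^{c}
```

When `step()` is called without an epoch argument, each restart updates the cycle length as $T_i \leftarrow (T_i - T_{\text{up}})\,T_{\text{mult}} + T_{\text{up}}$, so the warmup length stays fixed and only the cosine part is stretched.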

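    import matplotlib.pyplot as plt
    import torch
    import torch.nn as nn

    model = nn.Linear(1, 1)
    # lr=0 is the base LR: each cycle warms up from 0 to eta_max
    optimizer = torch.optim.Adam(model.parameters(), lr=0)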

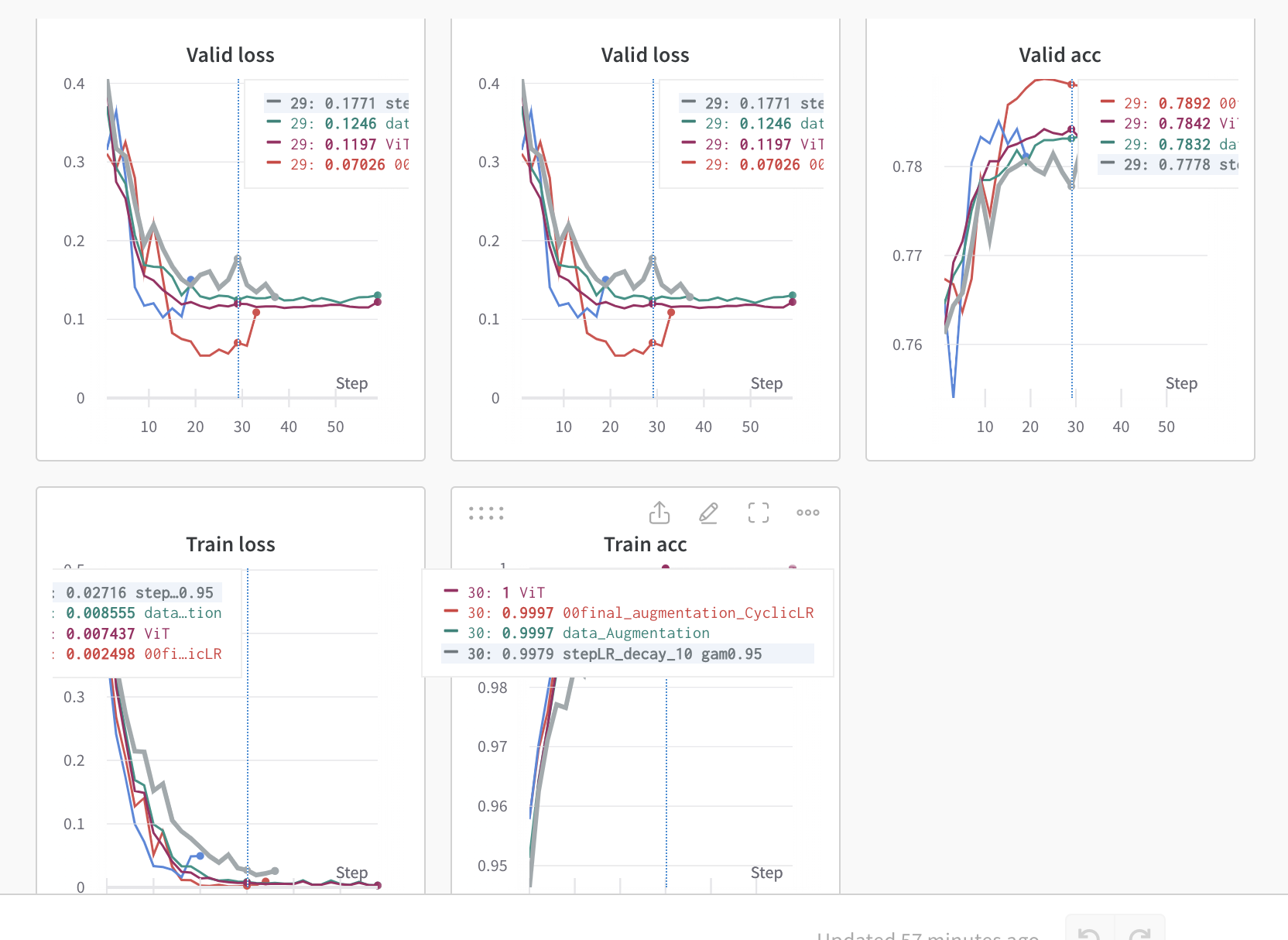

    scheduler = CosineAnnealingWarmUpRestarts(optimizer, T_0=70, T_mult=1, eta_max=3e-5, T_up=17, gamma=0.9)
    num_epochs = 20 * 15
    lr_values = []

    # Loop over epochs
    for epoch in range(num_epochs):
        # Step the scheduler
        scheduler.step()
        # Get the current learning rate
        lr = optimizer.param_groups[0]['lr']
        # Append the learning rate to the list
        lr_values.append(lr)

    plt.plot(lr_values)
    plt.show()

Result: the cosine annealing warmup restarts plot.
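To sanity-check the shape without PyTorch, the schedule can be written as a plain function. This is a minimal sketch, assuming `T_mult == 1` and that `step()` is always called without an epoch argument; `warmup_cosine_lr` is a hypothetical helper, not part of the class, and its defaults simply mirror the settings used in the plot.

```python
import math


def warmup_cosine_lr(epoch, base_lr=0.0, eta_max=3e-5, T_0=70, T_up=17, gamma=0.9):
    """Closed-form LR for the T_mult == 1 case (hypothetical helper for illustration)."""
    # With a fixed cycle length, the cycle index and the position in the cycle
    # follow directly from the epoch number.
    cycle, t_cur = divmod(epoch, T_0)
    # The peak shrinks by a factor of gamma with every completed cycle.
    peak = eta_max * gamma ** cycle
    if t_cur < T_up:
        # Linear warmup from base_lr to the current peak
        return base_lr + (peak - base_lr) * t_cur / T_up
    # Cosine decay from the current peak back toward base_lr
    progress = (t_cur - T_up) / (T_0 - T_up)
    return base_lr + (peak - base_lr) * (1 + math.cos(math.pi * progress)) / 2


# Each cycle peaks right after its warmup, i.e. at epochs 17, 87 and 157.
peaks = [warmup_cosine_lr(cycle * 70 + 17) for cycle in range(3)]
print(peaks)
```

Under these assumptions the three peaks come out at roughly 3e-5, 2.7e-5 and 2.43e-5, which is the 0.9 decay per restart that `gamma` asks for.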

    import matplotlib.pyplot as plt
    import numpy as np
    import torch
    import torch.nn as nn

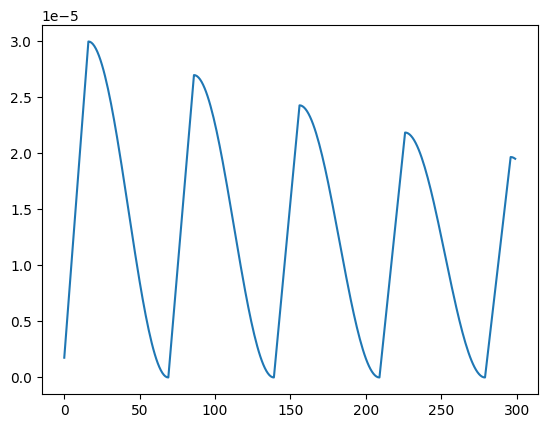

    from torch.optim.lr_scheduler import CosineAnnealingWarmRestarts, CyclicLR, ExponentialLR, StepLR, CosineAnnealingLR

    # Define the model
    model = nn.Linear(1, 1)
    num_epochs = 30
    lr_decay_step = 1

    def make_optimizer():
        # A fresh optimizer per scheduler, so the schedulers do not overwrite each other's LR
        return torch.optim.SGD(model.parameters(), lr=0.1, momentum=0.9, weight_decay=5e-4)

    schedulers = [
        StepLR(make_optimizer(), lr_decay_step, gamma=0.95),
        CosineAnnealingWarmRestarts(make_optimizer(), T_0=9, T_mult=2, eta_min=0, last_epoch=-1),
        CyclicLR(make_optimizer(), base_lr=0.0001, max_lr=0.003, step_size_up=5, step_size_down=5, mode='triangular2', gamma=1.0, scale_fn=None, scale_mode='cycle', cycle_momentum=True, base_momentum=0.8, max_momentum=0.95, last_epoch=-1),
        ExponentialLR(make_optimizer(), gamma=0.95),
    ]

    n, m = 2, 2
    fig, ax = plt.subplots(n, m, figsize=(10, 10))

    # One subplot per scheduler, filled row by row
    for scheduler, axis in zip(schedulers, ax.flat):
        # Define list to store learning rate values
        lr_values = []
        # Loop over epochs
        for epoch in range(num_epochs):
            # Step the optimizer first, then the scheduler
            scheduler.optimizer.step()
            scheduler.step()
            # Append the current learning rate to the list
            lr_values.append(scheduler.optimizer.param_groups[0]['lr'])
        axis.plot(lr_values)
        axis.set_title(type(scheduler).__name__)
        axis.set_xlabel('Epoch')
        axis.set_xlim(0, num_epochs)
        axis.set_ylabel('Learning Rate')

    plt.show()

The LR shapes of the schedulers I am considering applying.
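One thing to keep in mind when comparing these shapes: with `lr_decay_step = 1`, `StepLR` and `ExponentialLR` with the same `gamma` should trace the same curve, because both multiply the LR by `gamma` once per epoch. The sketch below uses the closed forms of the two schedules rather than PyTorch itself; `step_lr` and `exponential_lr` are hypothetical helper names introduced for illustration.

```python
def step_lr(epoch, base_lr=0.1, step_size=1, gamma=0.95):
    # StepLR: multiply by gamma once every step_size epochs
    return base_lr * gamma ** (epoch // step_size)


def exponential_lr(epoch, base_lr=0.1, gamma=0.95):
    # ExponentialLR: multiply by gamma every epoch
    return base_lr * gamma ** epoch


# With step_size=1 the two schedules coincide at every epoch.
same = all(step_lr(e) == exponential_lr(e) for e in range(30))
print(same)

# With a larger step size, StepLR becomes a staircase instead.
print(step_lr(25, step_size=10))
```

To see a visibly different staircase in the `StepLR` panel, a larger `lr_decay_step` (for example 10) would be needed.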

It will vary with the situation, but this is the result of applying the schedulers above.