Cilium Routing Techniques

We set up the lab environment right away, then identify the problem and look for ways to solve it.

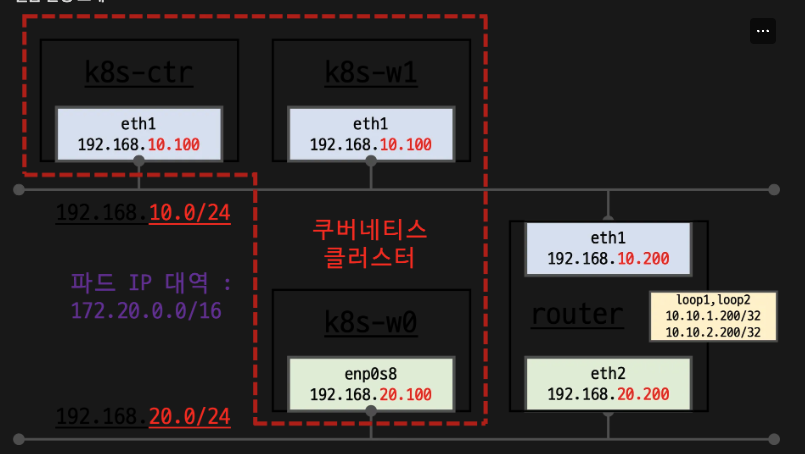

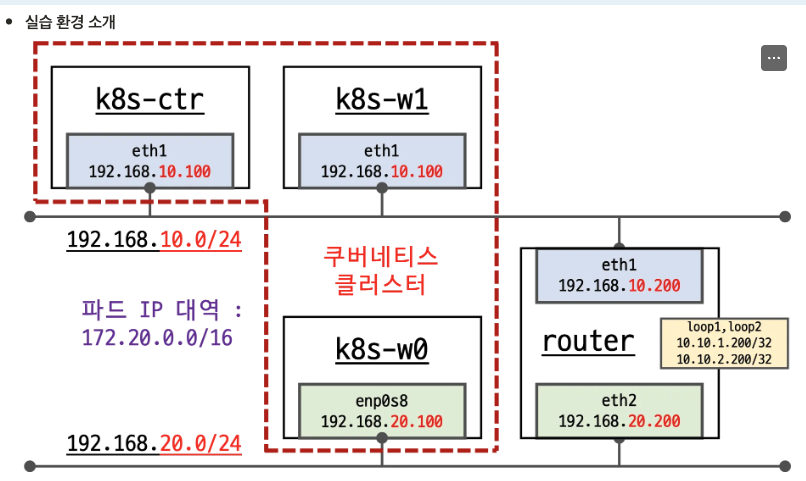

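Kubernetes cluster network layout:

Nodes:

k8s-ctr: control plane (master)

k8s-w1, k8s-w0: worker nodes

router: network router

Networks:

- 192.168.10.0/24: connects k8s-ctr, k8s-w1, and router (eth1)

- 192.168.20.0/24: connects k8s-w0 and router (eth2)

- Pod IP range: 172.20.0.0/16

Router functions:

- Routes between the two networks

- Provides additional connectivity through loopback interfaces

Characteristics:

📌 The Kubernetes cluster spans a multi-network environment

Worker nodes on different networks communicate through the router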

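Environment setup

📌 Cilium was installed with --set routingMode=native --set autoDirectNodeRoutes=true.

- routingMode=native

  - Configures Cilium to use native routing mode

  - Packets are routed directly, without encapsulation

  - Performance improves, but the network infrastructure must be able to route the Pod CIDR

- autoDirectNodeRoutes=true

  - Automatically creates direct routes between nodes

  - Simplifies routing between nodes on the same L2 network

  - Lets nodes communicate without BGP or any other routing protocol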
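As a rough sketch of what such an installation could look like on the command line, the function below only prints a Helm command rather than running it. The chart version 1.18.0 and the 172.20.0.0/16 pod range are borrowed from elsewhere in this post; the ipv4NativeRoutingCIDR value and the function name native_routing_cmd are assumptions added for illustration, not something this lab is confirmed to use.

```shell
#!/bin/sh
# Sketch only: prints a Helm command for native routing instead of executing it.
# Assumption: ipv4NativeRoutingCIDR is set to the pod range; the install line
# quoted in this post shows only routingMode and autoDirectNodeRoutes.
native_routing_cmd() {
  pod_cidr="$1"
  printf '%s\n' "helm install cilium cilium/cilium --namespace kube-system --version 1.18.0 --set routingMode=native --set autoDirectNodeRoutes=true --set ipv4NativeRoutingCIDR=${pod_cidr}"
}

native_routing_cmd 172.20.0.0/16
```

Printing the command first makes it easy to review the flags before running anything against a live cluster.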

Installation

mkdir cilium-lab && cd cilium-lab

curl -O https://raw.githubusercontent.com/gasida/vagrant-lab/refs/heads/main/cilium-study/4w/Vagrantfile

vagrant up

(⎈|HomeLab:N/A) root@k8s-ctr:~# k get nodes

    NAME      STATUS   ROLES           AGE   VERSION
    k8s-ctr   Ready    control-plane   38m   v1.33.2
    k8s-w0    Ready    <none>          60s   v1.33.2
    k8s-w1    Ready    <none>          36m   v1.33.2

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium status

        /¯¯\
     /¯¯\__/¯¯\    Cilium:             OK
     \__/¯¯\__/    Operator:           OK
     /¯¯\__/¯¯\    Envoy DaemonSet:    OK
     \__/¯¯\__/    Hubble Relay:       OK
        \__/       ClusterMesh:        disabled

k8s-ctr node information: check the status and INTERNAL-IP

(⎈|HomeLab:N/A) root@k8s-ctr:~# ifconfig | grep -iEA1 'eth[0-9]:'

    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 10.0.2.15  netmask 255.255.255.0  broadcast 10.0.2.255
    --
    eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.10.100  netmask 255.255.255.0  broadcast 192.168.10.255

The nodes can reach each other by node IP.

(⎈|HomeLab:N/A) root@k8s-ctr:~# tracepath -n 192.168.20.100

     1?: [LOCALHOST]                      pmtu 1500
     1:  192.168.10.200                                        0.636ms
     1:  192.168.10.200                                        0.414ms
     2:  192.168.20.100                                        0.987ms reached

Deploy the sample app

deployment.apps/webpod created

service/webpod created

pod/curl-pod created

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get deploy,svc,ep webpod -owide

    Warning: v1 Endpoints is deprecated in v1.33+; use discovery.k8s.io/v1 EndpointSlice
    NAME                     READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS   IMAGES           SELECTOR
    deployment.apps/webpod   3/3     3            3           55s   webpod       traefik/whoami   app=webpod

    NAME             TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE   SELECTOR
    service/webpod   ClusterIP   10.96.17.34   <none>        80/TCP    55s   app=webpod

    NAME               ENDPOINTS                                     AGE
    endpoints/webpod   172.20.0.37:80,172.20.1.25:80,172.20.2.4:80   54s

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl get ciliumendpoints # check the IPs

    NAME                      SECURITY IDENTITY   ENDPOINT STATE   IPV4           IPV6
    curl-pod                  36705               ready            172.20.0.107
    webpod-697b545f57-7q4gk   17701               ready            172.20.1.25
    webpod-697b545f57-8tcp6   17701               ready            172.20.2.4
    webpod-697b545f57-f9zp6   17701               ready            172.20.0.37

The ClusterIP of the webpod service we just created is 10.96.17.34.

(⎈|HomeLab:N/A) root@k8s-ctr:~# k get svc

    NAME         TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.96.0.1     <none>        443/TCP   65m
    webpod       ClusterIP   10.96.17.34   <none>        80/TCP    11m

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg service list | grep -A 3 10.96.17.34

    ID   Frontend             Service Type   Backend
    20   10.96.17.34:80/TCP   ClusterIP      1 => 172.20.0.37:80/TCP (active)
                                             2 => 172.20.1.25:80/TCP (active)
                                             3 => 172.20.2.4:80/TCP (active)

Use map list to see which maps are actually in use

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg map list | grep -v '0 0'

    Name                     Num entries   Num errors   Cache enabled
    cilium_policy_v2_02312   3             0            true
    cilium_policy_v2_01400   3             0            true
    cilium_lb4_services_v2   45            0            true
    cilium_lb4_backends_v3   16            0            true
    cilium_runtime_config    256           0            true
    cilium_ipcache_v2        22            0            true
    cilium_policy_v2_00426   3             0            true
    cilium_policy_v2_00451   3             0            true
    cilium_policy_v2_00539   3             0            true
    cilium_lb4_reverse_sk    9             0            true
    cilium_lxc               13            0            true
    cilium_policy_v2_00112   3             0            true
    cilium_policy_v2_01545   2             0            true
    cilium_policy_v2_01744   3             0            true
    cilium_policy_v2_01212   3             0            true
    cilium_lb4_reverse_nat   20            0            true
    cilium_policy_v2_02610   3             0            true
    cilium_policy_v2_00152   3             0            true

View the map details

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -n kube-system ds/cilium -- cilium-dbg map get cilium_lb4_services_v2 |grep 10.96.17.34

    10.96.17.34:80/TCP (0)   0 3[0] (20) [0x0 0x0]
    10.96.17.34:80/TCP (2)   14 0[0] (20) [0x0 0x0]
    10.96.17.34:80/TCP (3)   15 0[0] (20) [0x0 0x0]
    10.96.17.34:80/TCP (1)   16 0[0] (20) [0x0 0x0]

Observe the problem -> calls to the pod service keep alternating between succeeding and timing out

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- sh -c 'while true; do curl -s --connect-timeout 1 webpod | grep Hostname; echo "---" ; sleep 1; done'

    Hostname: webpod-697b545f57-f9zp6
    ---
    Hostname: webpod-697b545f57-f9zp6
    ---
    Hostname: webpod-697b545f57-f9zp6
    ---
    Hostname: webpod-697b545f57-7q4gk
    ---

Why? -> One webpod replica runs on each node, and of the three, the pod on the node in the other network range cannot be reached.

(⎈|HomeLab:N/A) root@k8s-ctr:~# k get pod -owide

    NAME                      READY   STATUS    RESTARTS   AGE   IP             NODE      NOMINATED NODE   READINESS GATES
    curl-pod                  1/1     Running   0          21m   172.20.0.107   k8s-ctr   <none>           <none>
    webpod-697b545f57-7q4gk   1/1     Running   0          21m   172.20.1.25    k8s-w1    <none>           <none>
    webpod-697b545f57-8tcp6   1/1     Running   0          21m   172.20.2.4     k8s-w0    <none>           <none>
    webpod-697b545f57-f9zp6   1/1     Running   0          21m   172.20.0.37    k8s-ctr   <none>           <none>

Let's call the webpod on k8s-w0 and watch the traffic with tcpdump on the router.

(⎈|HomeLab:N/A) root@k8s-ctr:~# export WEBPOD=$(kubectl get pod -l app=webpod --field-selector spec.nodeName=k8s-w0 -o jsonpath='{.items[0].status.podIP}')

(⎈|HomeLab:N/A) root@k8s-ctr:~# echo $WEBPOD

    172.20.2.4

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- ping -c 2 -w 1 -W 1 $WEBPOD

    PING 172.20.2.4 (172.20.2.4) 56(84) bytes of data.

tcpdump on the router

root@router:~# tcpdump -i any icmp -nn

    tcpdump: data link type LINUX_SLL2
    tcpdump: verbose output suppressed, use -v[v]... for full protocol decode
    listening on any, link-type LINUX_SLL2 (Linux cooked v2), snapshot length 262144 bytes
    01:16:20.710564 eth1  In  IP 172.20.0.107 > 172.20.2.4: ICMP echo request, id 1119, seq 1, length 64
    01:16:20.710576 eth0  Out IP 172.20.0.107 > 172.20.2.4: ICMP echo request, id 1119, seq 1, length 64

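- 1. The pod IP on w0 belongs to a node on a different subnet, so it is not among the node routes that autoDirectNodeRoutes installs, and the packet follows the default route (to the router).

- 2. The router has no route for it either, so it also forwards the packet along its own default route: out the public eth0 instead of the eth2 interface we set up.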
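The first point comes down to whether two node IPs share a subnet. A minimal sketch of that check in plain shell arithmetic follows. The helper names ip_to_int and same_subnet are made up for illustration, and 192.168.10.101 is an assumed address for k8s-w1, which this post does not show. It models the condition only, not Cilium's actual implementation.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Succeed (exit 0) when both addresses fall in the same network
# for the given prefix length.
same_subnet() (
  a=$(ip_to_int "$1")
  b=$(ip_to_int "$2")
  mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  [ $(( a & mask )) -eq $(( b & mask )) ]
)

# k8s-ctr is 192.168.10.100; 192.168.10.101 for k8s-w1 is an assumed address.
for node in 192.168.10.101 192.168.20.100; do
  if same_subnet 192.168.10.100 "$node" 24; then
    echo "$node: same subnet, a direct node route is possible"
  else
    echo "$node: different subnet, traffic follows the default route"
  fi
done
```

With these inputs, only the 192.168.20.100 node (k8s-w0) lands in the "different subnet" branch, which is the node whose pods were unreachable.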

Check the router

root@router:~# ip -c route

    default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100
    10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100
    10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100
    10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100
    10.10.1.0/24 dev loop1 proto kernel scope link src 10.10.1.200
    10.10.2.0/24 dev loop2 proto kernel scope link src 10.10.2.200
    192.168.10.0/24 dev eth1 proto kernel scope link src 192.168.10.200
    192.168.20.0/24 dev eth2 proto kernel scope link src 192.168.20.200

-> There is no route for the pod IP 172.20.0.107.

Check the route for 172.20.0.107: it falls back to the default route

root@router:~# ip -c route get 172.20.0.107

    172.20.0.107 via 10.0.2.2 dev eth0 src 10.0.2.15 uid 0
        cache

In Hubble, only SYNs go out and no ACK ever comes back.

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- curl $WEBPOD

(⎈|HomeLab:N/A) hubble observe -f --protocol tcp --pod curl-pod

    Aug 9 16:31:03.834: default/curl-pod:32788 (ID:36705) -> default/webpod-697b545f57-8tcp6:80 (ID:17701) to-network FORWARDED (TCP Flags: SYN)
    Aug 9 16:31:11.033: default/curl-pod:32788 (ID:36705) -> default/webpod-697b545f57-8tcp6:80 (ID:17701) to-network FORWARDED (TCP Flags: SYN)
    Aug 9 16:31:23.193: default/curl-pod:32788 (ID:36705) -> default/webpod-697b545f57-8tcp6:80 (ID:17701) to-network FORWARDED (TCP Flags: SYN)
    Aug 9 16:31:39.577: default/curl-pod:32788 (ID:36705) -> default/webpod-697b545f57-8tcp6:80 (ID:17701) to-network FORWARDED (TCP Flags: SYN)
    Aug 9 16:32:11.833: default/curl-pod:32788 (ID:36705) -> default/webpod-697b545f57-8tcp6:80 (ID:17701) to-network FORWARDED (TCP Flags: SYN)

#### Solution 1. Configure static routing -> gets tedious as the node count grows, and operations and maintenance become a problem too
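To make the static-routing option concrete, it would mean adding one route per node's pod CIDR on the router. The sketch below only prints the commands instead of running them. The per-node /24 pod CIDRs are inferred from the pod IPs and node addresses that appear in this post, k8s-w1 is left out because its node IP is not shown, and static_route_cmds is a hypothetical helper name.

```shell
#!/bin/sh
# Read "podCIDR nodeIP" pairs on stdin and print one `ip route add` per pair.
# Nothing is executed; the output is meant to be reviewed first.
static_route_cmds() {
  while read -r cidr node_ip; do
    [ -n "$cidr" ] || continue   # skip blank lines
    echo "ip route add $cidr via $node_ip"
  done
}

# Assumed mapping: k8s-ctr hosts 172.20.0.0/24, k8s-w0 hosts 172.20.2.0/24.
static_route_cmds <<'EOF'
172.20.0.0/24 192.168.10.100
172.20.2.0/24 192.168.20.100
EOF
```

Every new node adds another line to this list on every router in the path, which is the maintenance cost mentioned above.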

#### Solution 2. Use an overlay network

- Wrap each packet in one more header (encapsulation) whose destination IP is one the router already knows how to reach!

Let's switch to VXLAN

(⎈|HomeLab:N/A) root@k8s-ctr:~# grep -E 'CONFIG_VXLAN=y|CONFIG_VXLAN=m|CONFIG_GENEVE=y|CONFIG_GENEVE=m|CONFIG_FIB_RULES=y' /boot/config-$(uname -r)

    CONFIG_FIB_RULES=y
    CONFIG_VXLAN=m
    CONFIG_GENEVE=m

(⎈|HomeLab:N/A) root@k8s-ctr:~# lsmod | grep -E 'vxlan|geneve'

(⎈|HomeLab:N/A) root@k8s-ctr:~# modprobe vxlan # modprobe geneve

(⎈|HomeLab:N/A) root@k8s-ctr:~# lsmod | grep -E 'vxlan|geneve'

    vxlan                 147456  0
    ip6_udp_tunnel         16384  1 vxlan
    udp_tunnel             36864  1 vxlan

(⎈|HomeLab:N/A) root@k8s-ctr:~# for i in w1 w0 ; do echo ">> node : k8s-$i <<"; … k8s-$i sudo modprobe vxlan ; echo; done

    >> node : k8s-w1 <<

    >> node : k8s-w0 <<

(⎈|HomeLab:N/A) root@k8s-ctr:~# for i in w1 w0 ; do echo ">> node : k8s-$i <<"; … k8s-$i sudo lsmod | grep -E 'vxlan|geneve' ; echo; done

    >> node : k8s-w1 <<

    >> node : k8s-w0 <<

Upgrade

(⎈|HomeLab:N/A) root@k8s-ctr:~# helm upgrade cilium cilium/cilium --namespace kube-system --version 1.18.0 --reuse-values \

--set routingMode=tunnel --set tunnelProtocol=vxlan \

--set autoDirectNodeRoutes=false --set installNoConntrackIptablesRules=false

    Release "cilium" has been upgraded. Happy Helming!
    NAME: cilium
    LAST DEPLOYED: Sun Aug 10 02:01:45 2025
    NAMESPACE: kube-system
    STATUS: deployed
    REVISION: 2
    TEST SUITE: None
    NOTES:
    You have successfully installed Cilium with Hubble Relay and Hubble UI.

    Your release version is 1.18.0.

    For any further help, visit https://docs.cilium.io/en/v1.18/gettinghelp

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl rollout restart -n kube-system ds/cilium

    daemonset.apps/cilium restarted

Check the mode

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium features status | grep datapath_network

    Uniform   Name                              Labels               k8s-ctr   k8s-w0   k8s-w1
    Yes       cilium_feature_datapath_network   mode=overlay-vxlan   1         1        1

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it -n kube-system ds/cilium -- cilium status | grep ^Routing

    Routing:                 Network: Tunnel [vxlan]   Host: BPF

(⎈|HomeLab:N/A) root@k8s-ctr:~# cilium config view | grep tunnel

    routing-mode                tunnel
    tunnel-protocol             vxlan
    tunnel-source-port-range    0-0

Check the new virtual device, cilium_vxlan

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c addr show dev cilium_vxlan

    26: cilium_vxlan: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default
        link/ether b6:28:af:4e:39:13 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::b428:afff:fe4e:3913/64 scope link
           valid_lft forever preferred_lft forever

Check the routes on the node: routes via cilium_host have been added!

(⎈|HomeLab:N/A) root@k8s-ctr:~# ip -c route | grep cilium_host

    172.20.0.0/24 via 172.20.0.229 dev cilium_host proto kernel src 172.20.0.229
    172.20.0.229 dev cilium_host proto kernel scope link
    172.20.1.0/24 via 172.20.0.229 dev cilium_host proto kernel src 172.20.0.229 mtu 1450
    172.20.2.0/24 via 172.20.0.229 dev cilium_host proto kernel src 172.20.0.229 mtu 1450

?! Who is 172.20.0.229?

(⎈|HomeLab:N/A) root@k8s-ctr:~# export CILIUMPOD0=$(kubectl get -l k8s-app=cilium pods -n kube-system --field-selector spec.nodeName=k8s-ctr -o jsonpath='{.items[0].metadata.name}')

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it $CILIUMPOD0 -n kube-system -c cilium-agent -- cilium status --all-addresses | grep router

    172.20.0.229 (router)

Now retry the call to the webpod on the w0 node, which was failing before

(⎈|HomeLab:N/A) root@k8s-ctr:~# kubectl exec -it curl-pod -- ping -c 2 -w 1 -W 1 $WEBPOD

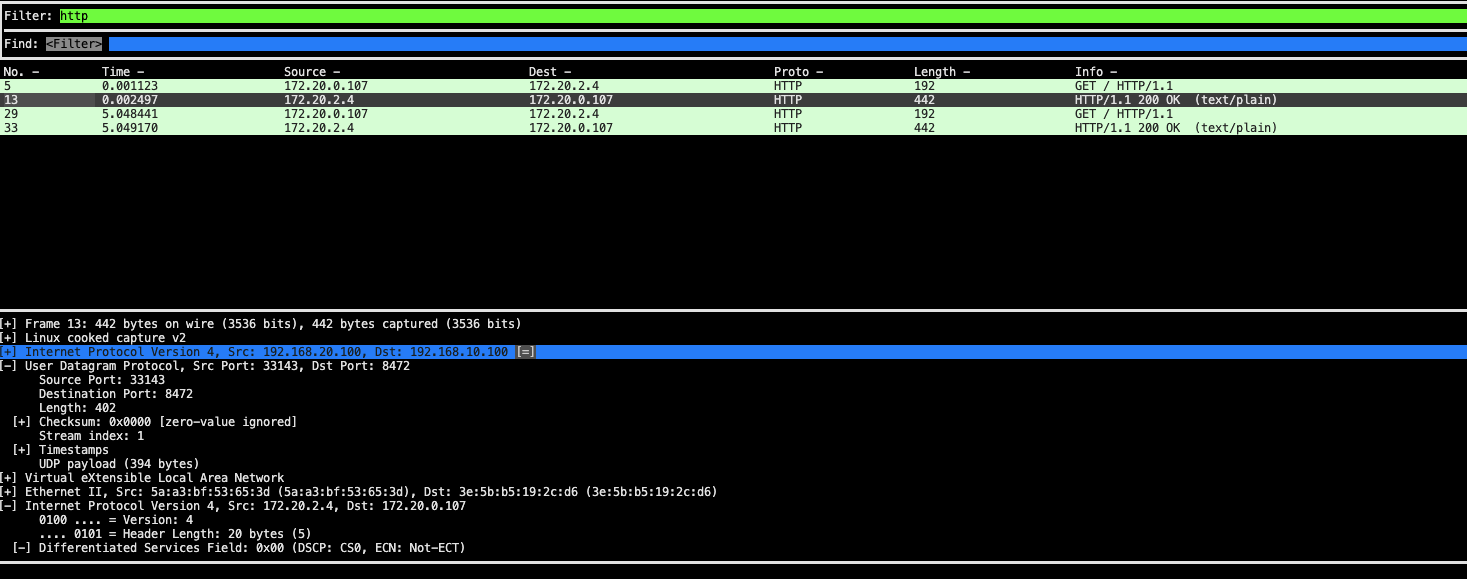

    64 bytes from 172.20.2.4: icmp_seq=1 ttl=63 time=1.04 ms

    --- 172.20.2.4 ping statistics ---
    1 packets transmitted, 1 received, 0% packet loss, time 0ms
    rtt min/avg/max/mdev = 1.039/1.039/1.039/0.000 ms

root@router:~# tcpdump -i any udp port 8472 -nn

    02:27:50.282388 eth1  In  IP 192.168.10.100.51213 > 192.168.20.100.8472: OTV, flags [I] (0x08), overlay 0, instance 36705 IP 172.20.0.107 > 172.20.2.4: ICMP echo request, id 2900, seq 1, length 64
    02:27:50.282404 eth2  Out IP 192.168.10.100.51213 > 192.168.20.100.8472: OTV, flags [I] (0x08), overlay 0, instance 36705 IP 172.20.0.107 > 172.20.2.4: ICMP echo request, id 2900, seq 1, length 64
    02:27:50.282860 eth2  In  IP 192.168.20.100.52674 > 192.168.10.100.8472: OTV, flags [I] (0x08), overlay 0, instance 17701 IP 172.20.2.4 > 172.20.0.107: ICMP echo reply, id 2900, seq 1, length 64
    02:27:50.282863 eth1  Out IP 192.168.20.100.52674 > 192.168.10.100.8472: OTV, flags [I] (0x08), overlay 0, instance 17701

The packet that came in on eth1 now goes out on eth2.

The overlay information is also visible in the middle of each line.
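The instance values in that capture match the SECURITY IDENTITY column from kubectl get ciliumendpoints earlier (36705 for curl-pod, 17701 for the webpod replicas), which suggests the field carries the sender's Cilium identity. A small sketch for pulling that field out of saved tcpdump text follows; vxlan_instances is a hypothetical helper name, and the sample line is a shortened copy of the first captured packet.

```shell
#!/bin/sh
# Print the number that follows "instance" on each input line that has one.
# Lines without an "instance" field produce no output.
vxlan_instances() {
  sed -n 's/.*instance \([0-9][0-9]*\).*/\1/p'
}

# Sample line shortened from the capture in this post.
echo '02:27:50.282388 eth1 In IP 192.168.10.100.51213 > 192.168.20.100.8472: OTV, flags [I] (0x08), overlay 0, instance 36705 IP 172.20.0.107 > 172.20.2.4: ICMP echo request' | vxlan_instances
```

Piping the output through sort -u gives the distinct identities seen on the wire, which can then be compared against the ciliumendpoints list.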

kubectl exec -it curl-pod -- sh -c 'while true; do curl -s --connect-timeout 1 webpod | grep Hostname; echo "---" ; sleep 1; done'

Check with tcpdump & termshark on the router

tcpdump -i any udp port 8472 -w /tmp/vxlan.pcap

termshark -r /tmp/vxlan.pcap -d udp.port==8472,vxlan

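- The router only looks as far as the outer L3 header; it does not inspect the encapsulated inner packet.

- Keep in mind that the extra 50-byte header makes every packet larger, and that encapsulation/decapsulation costs resources on the nodes!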
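The 50 bytes can be accounted for with a quick calculation, assuming an IPv4 underlay with no IP options and no VLAN tag: outer IPv4 (20) + UDP (8) + VXLAN (8) + inner Ethernet (14). Subtracting that from the 1500-byte link MTU gives 1450, which matches the mtu 1450 shown on the cilium_host routes earlier. The function names are made up for this sketch.

```shell
#!/bin/sh
# Bytes added per packet by VXLAN over IPv4
# (assumes no IP options and no VLAN tag on the inner frame).
vxlan_overhead() {
  echo $(( 20 + 8 + 8 + 14 ))   # outer IPv4 + UDP + VXLAN header + inner Ethernet
}

# Largest inner packet that still fits in the given link MTU.
inner_mtu() {
  echo $(( $1 - $(vxlan_overhead) ))
}

inner_mtu 1500
```

Running it prints 1450. On a link with a larger MTU, for example inner_mtu 9000, the same 50-byte cost becomes proportionally much smaller.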

The news below is a reminder of how much high-performance multi-node network performance and setup matter for AI. Go Cilium!