Introduction to DL

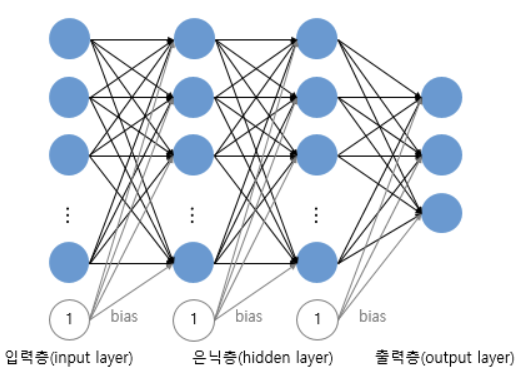

Multilayer Perceptron (MLP)

A neural network with two or more hidden layers is called a deep neural network (DNN).
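
To make the definition concrete, here is a minimal NumPy sketch (not PyTorch, and with untrained random weights) that builds an MLP from a list of layer sizes and counts its hidden layers. The helper names (`init_mlp`, `forward`, `num_hidden_layers`, `is_deep`) are hypothetical. The layer sizes [2, 10, 10, 10, 1] match the PyTorch XOR example in these notes, which has three hidden layers and is therefore a DNN.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_mlp(layer_sizes, seed=0):
    """Create one (weight, bias) pair per pair of consecutive layers (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        W = rng.normal(0.0, 1.0, size=(n_in, n_out))  # random, untrained weights
        b = np.zeros(n_out)
        params.append((W, b))
    return params

def forward(params, x):
    """Apply each affine layer followed by a sigmoid."""
    a = x
    for W, b in params:
        a = sigmoid(a @ W + b)
    return a

def num_hidden_layers(layer_sizes):
    # Every layer except the input and the output layer is a hidden layer.
    return len(layer_sizes) - 2

def is_deep(layer_sizes):
    # DNN: two or more hidden layers.
    return num_hidden_layers(layer_sizes) >= 2

sizes = [2, 10, 10, 10, 1]
params = init_mlp(sizes)
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
out = forward(params, X)
print(out.shape)                                  # (4, 1)
print(num_hidden_layers(sizes), is_deep(sizes))   # 3 True
```

A network such as [2, 10, 1] has only one hidden layer, so under this definition it is an MLP but not a DNN.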

Implementing a multilayer perceptron in PyTorch (XOR problem)

```python
import torch
import torch.nn as nn

# Use the GPU if one is available, otherwise fall back to the CPU
device = 'cuda' if torch.cuda.is_available() else 'cpu'

# XOR inputs and labels
X = torch.FloatTensor([[0, 0], [0, 1], [1, 0], [1, 1]]).to(device)
Y = torch.FloatTensor([[0], [1], [1], [0]]).to(device)

model = nn.Sequential(
    nn.Linear(2, 10, bias=True),   # input_layer = 2, hidden_layer1 = 10
    nn.Sigmoid(),
    nn.Linear(10, 10, bias=True),  # hidden_layer1 = 10, hidden_layer2 = 10
    nn.Sigmoid(),
    nn.Linear(10, 10, bias=True),  # hidden_layer2 = 10, hidden_layer3 = 10
    nn.Sigmoid(),
    nn.Linear(10, 1, bias=True),   # hidden_layer3 = 10, output_layer = 1
    nn.Sigmoid()
).to(device)

criterion = torch.nn.BCELoss().to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=1)  # modified learning rate from 0.1 to 1

for epoch in range(10001):
    optimizer.zero_grad()
    # forward pass
    hypothesis = model(X)
    # cost function (binary cross-entropy)
    cost = criterion(hypothesis, Y)
    cost.backward()
    optimizer.step()
    # print the cost every 100 epochs
    if epoch % 100 == 0:
        print(epoch, cost.item())

with torch.no_grad():
    hypothesis = model(X)
    predicted = (hypothesis > 0.5).float()
    accuracy = (predicted == Y).float().mean()
    print('Model output (Hypothesis): ', hypothesis.detach().cpu().numpy())
    print('Model prediction (Predicted): ', predicted.detach().cpu().numpy())
    print('Actual value (Y): ', Y.cpu().numpy())
    print('Accuracy: ', accuracy.item())
```

Final output after training (the cost printed every 100 epochs is omitted):

```
Model output (Hypothesis): [[1.1169249e-04]
 [9.9982882e-01]
 [9.9984229e-01]
 [1.8529959e-04]]
Model prediction (Predicted): [[0.]
 [1.]
 [1.]
 [0.]]
Actual value (Y): [[0.]
 [1.]
 [1.]
 [0.]]
Accuracy: 1.0
```
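
To see what `criterion` and the accuracy line compute, the following NumPy sketch recomputes them from the final outputs printed above. `bce` is a hypothetical helper that uses the same mean binary cross-entropy formula as `nn.BCELoss` with its default `reduction='mean'`; as an assumption of this sketch it clips `p` to avoid `log(0)`, whereas PyTorch clamps the log values instead, so the two differ only for outputs extremely close to 0 or 1.

```python
import numpy as np

# Final model outputs and labels copied from the printed results above.
hypothesis = np.array([[1.1169249e-04], [9.9982882e-01], [9.9984229e-01], [1.8529959e-04]])
Y = np.array([[0.0], [1.0], [1.0], [0.0]])

def bce(p, y, eps=1e-12):
    """Mean binary cross-entropy: -mean(y*log(p) + (1-y)*log(1-p))."""
    p = np.clip(p, eps, 1.0 - eps)  # this sketch clips p to avoid log(0)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))

predicted = (hypothesis > 0.5).astype(float)  # same 0.5 threshold as the PyTorch code
accuracy = float((predicted == Y).mean())

print(predicted.ravel())  # [0. 1. 1. 0.]
print(accuracy)           # 1.0
print(bce(hypothesis, Y))
```

Because every output is within about 2e-4 of its label, the cost is tiny, and thresholding at 0.5 reproduces all four XOR labels.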

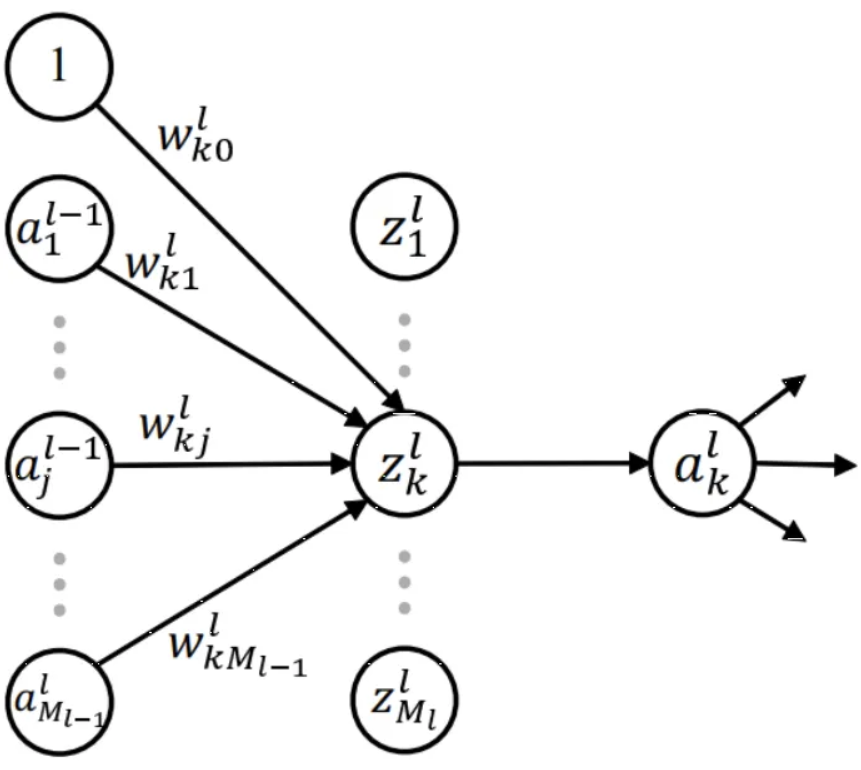

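- Superscript: layer index

- Subscript: neuron index

- The $l$-th layer has $n^{l}$ neurons

- e.g. $a^{l}_{k}$: the $k$-th neuron of the $l$-th layer

- Inside a neuron, the weighted input $z$ is passed through the activation function to give the output $a$ (z → a); $a$ is then multiplied by a weight $w$ and fed into the $z$ of a neuron in the next layer

- ⇒ for a weight $w^{l}_{jk}$: $l$ = destination layer, $j$ = source neuron, $k$ = destination neuron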
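
Writing the weight from neuron $j$ of layer $l-1$ to neuron $k$ of layer $l$ as $w^{l}_{jk}$ (the subscript order is an assumption; the notes only fix which index is the source and which is the destination), and adding a bias $b^{l}_{k}$ as the `bias=True` layers in the code do, the z → a step reads $z^{l}_{k} = \sum_j w^{l}_{jk} a^{l-1}_{j} + b^{l}_{k}$ and $a^{l}_{k} = \sigma(z^{l}_{k})$. The following minimal NumPy sketch, assuming a sigmoid activation, checks that this per-index formula matches the vectorized form `a_prev @ W + b`. The function names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def layer_forward_loops(W, b, a_prev):
    """One layer with explicit indices.
    W[j, k] plays the role of w^l_{jk}: weight from source neuron j (layer l-1)
    to destination neuron k (layer l). b[k] is b^l_k and a_prev[j] is a^{l-1}_j.
    """
    n_prev, n_cur = W.shape
    z = np.zeros(n_cur)
    for k in range(n_cur):        # destination neuron k in layer l
        for j in range(n_prev):   # source neuron j in layer l-1
            z[k] += W[j, k] * a_prev[j]
        z[k] += b[k]
    a = sigmoid(z)                # z^l_k -> a^l_k
    return z, a

def layer_forward_matrix(W, b, a_prev):
    # Same computation in vector form: z^l = a^{l-1} W^l + b^l
    z = a_prev @ W + b
    return z, sigmoid(z)

rng = np.random.default_rng(0)
W = rng.normal(size=(2, 3))       # layer l-1 has 2 neurons, layer l has 3
b = rng.normal(size=3)
a_prev = np.array([1.0, 0.5])

z_loop, a_loop = layer_forward_loops(W, b, a_prev)
z_mat, a_mat = layer_forward_matrix(W, b, a_prev)
print(np.allclose(z_loop, z_mat), np.allclose(a_loop, a_mat))  # True True
```

The double loop makes the role of each index visible, while the matrix form is what `nn.Linear` computes in practice (PyTorch stores its weight transposed, with shape `(out_features, in_features)`).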

Preventing overfitting

- Increase the amount of data

- Reduce the model's complexity

- Apply weight regularization

- Dropout
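
As a minimal NumPy sketch of the last two items (the helper names `l2_penalty` and `dropout` are hypothetical, and the data loss value is made up for illustration): weight regularization adds a penalty proportional to the squared weights to the loss, and dropout randomly zeroes activations during training only. In PyTorch the built-in counterparts are `nn.Dropout(p)`, which is active after `model.train()` and disabled after `model.eval()`, and the `weight_decay` argument of optimizers such as `torch.optim.SGD`, which adds an L2-style decay term to each parameter's gradient.

```python
import numpy as np

def l2_penalty(weights, lam):
    """Weight regularization (L2): lam * sum of squared weights, added to the data loss."""
    return lam * sum(float(np.sum(W ** 2)) for W in weights)

def dropout(a, p, training, rng):
    """Inverted dropout: zero each activation with probability p during training
    and scale the survivors by 1/(1-p) so the expected activation is unchanged.
    At evaluation time the input passes through untouched."""
    if not training or p == 0.0:
        return a
    mask = rng.random(a.shape) >= p   # keep each unit with probability 1 - p
    return a * mask / (1.0 - p)

rng = np.random.default_rng(0)
weights = [np.ones((2, 10)), np.ones((10, 1))]  # 30 weights, each equal to 1
data_loss = 0.25                                # hypothetical data loss value
total_loss = data_loss + l2_penalty(weights, lam=1e-3)
print(round(total_loss, 4))                     # 0.28

h = np.ones(100000)
h_train = dropout(h, p=0.5, training=True, rng=rng)
h_eval = dropout(h, p=0.5, training=False, rng=rng)
print(h_train.mean())              # close to 1.0 on average
print(np.array_equal(h_eval, h))   # True
```

The 1/(1-p) scaling is why no extra rescaling is needed at evaluation time: surviving units are scaled up during training so the expected activation matches the untouched evaluation pass.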