Most of the scripts were written with the help of GPT-4.

Objectives

Identify the limitations of existing encoding methods for automatic symbolic music generation and consider ways to improve them.

Symbolic Music Encoding

Symbolic music (music as a language): Music Transformer, MuseNet, Pop Music Transformer, VirtuosoTune

Encoding: the way music is converted into discrete tokens for language modeling (that is, into a machine-readable format). This is different from tokenizing and parsing, which are sub-processes of encoding.

Tokenizing: This is the process of breaking down the input musical data into smaller units called tokens.

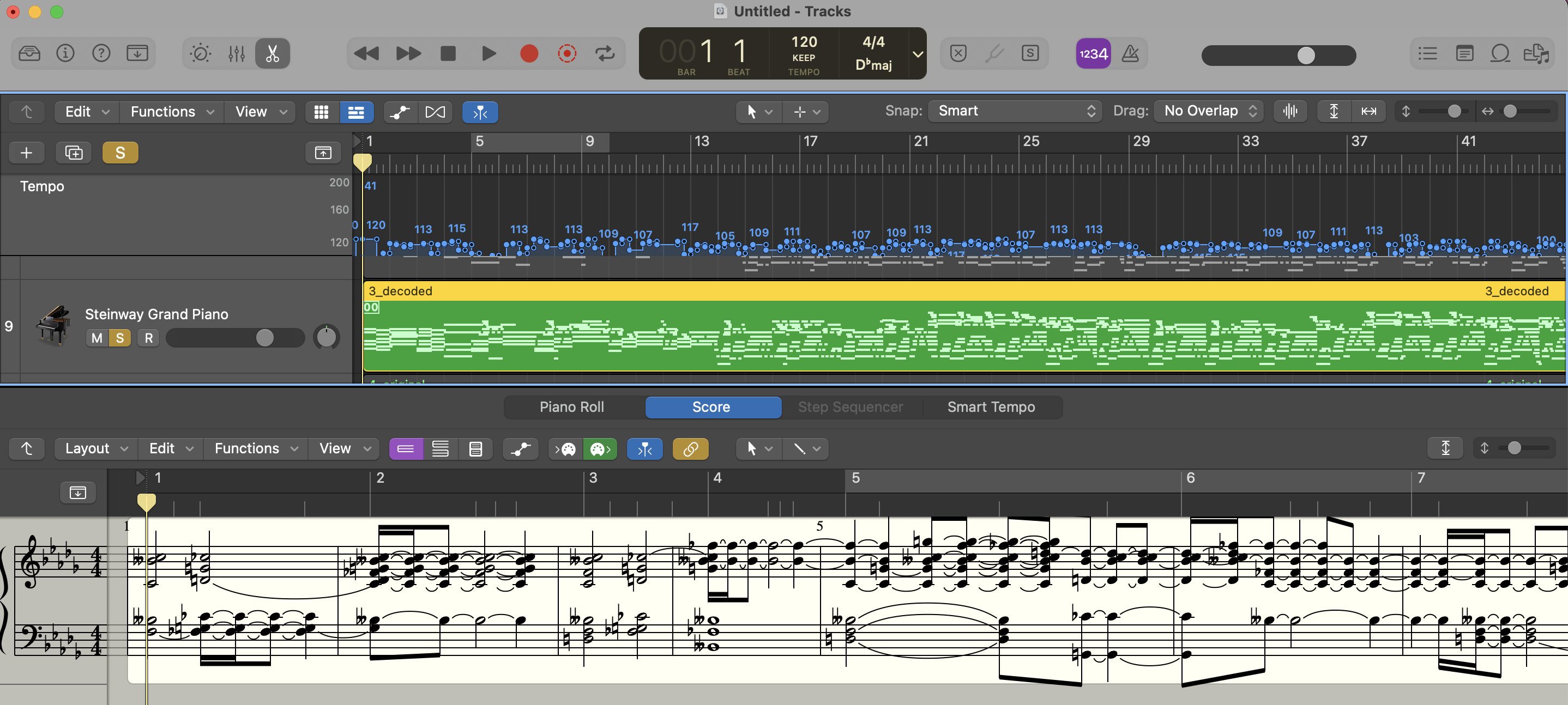

MIDI

MIDI (Musical Instrument Digital Interface) is a communication protocol that enables electronic musical instruments, computers, and other digital devices to interact and share musical information. Introduced in 1983, MIDI has become a standard format for storing, transmitting, and manipulating musical data across various hardware and software platforms.

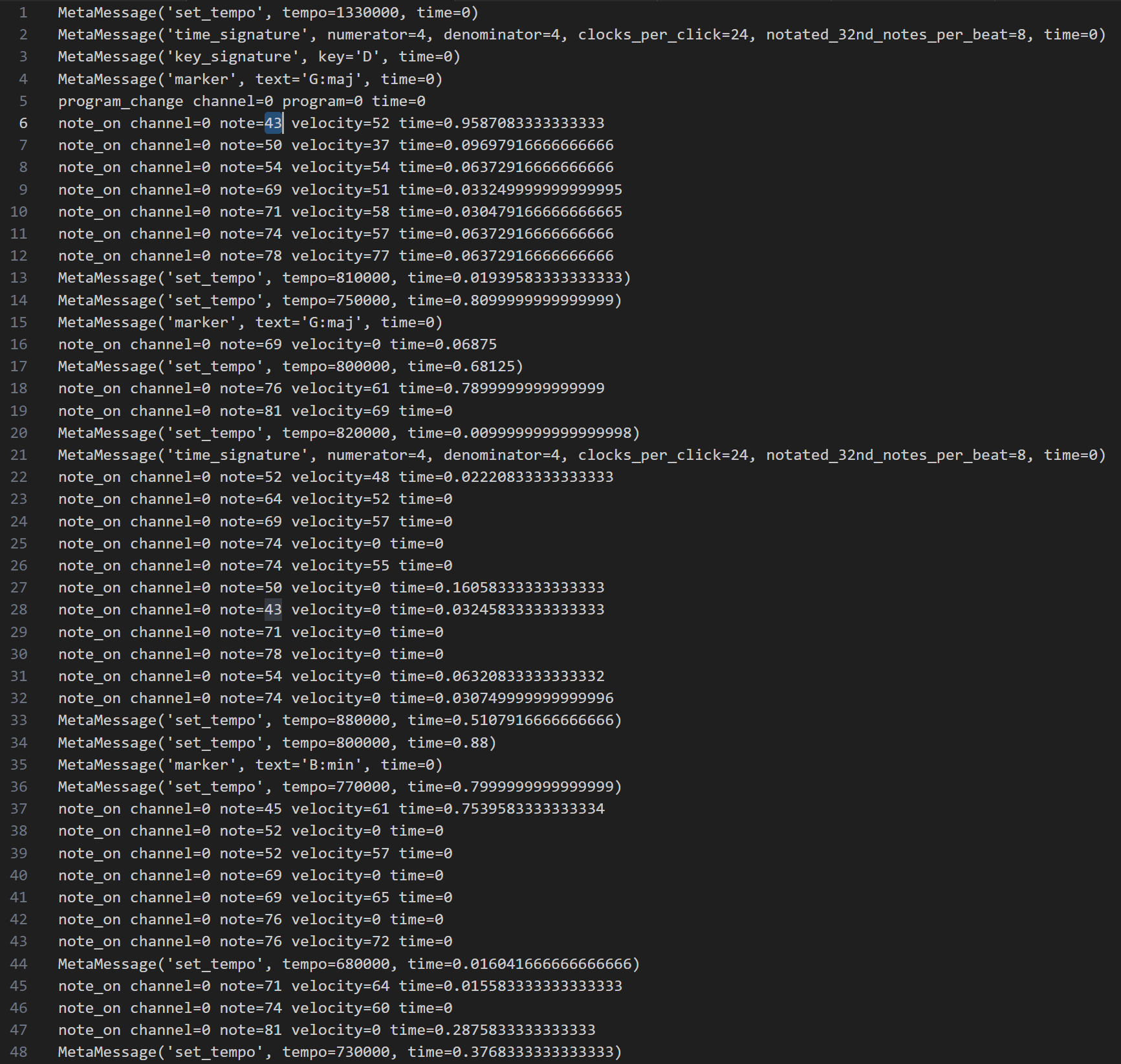

Parsing MIDI

Parsing tools (mido, pretty_midi): pretty_midi and mido are Python libraries for reading and processing MIDI data conveniently and efficiently.

In a MIDI file, tempo is stored in microseconds per beat (quarter note). For example, 500,000 microseconds per beat corresponds to 120 BPM.
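The tempo unit is easy to get wrong, so here is a minimal sketch of the conversions in plain Python. The function names are my own for illustration (mido ships similar helpers, `tempo2bpm` and `bpm2tempo`), and the sketch assumes a single constant tempo for the tick conversion.

```python
MICROSECONDS_PER_MINUTE = 60_000_000


def tempo_to_bpm(tempo_us_per_beat: int) -> float:
    """Convert MIDI tempo (microseconds per quarter note) to beats per minute."""
    return MICROSECONDS_PER_MINUTE / tempo_us_per_beat


def bpm_to_tempo(bpm: float) -> int:
    """Convert beats per minute to MIDI tempo (microseconds per quarter note)."""
    return round(MICROSECONDS_PER_MINUTE / bpm)


def ticks_to_seconds(ticks: int, ticks_per_beat: int, tempo_us_per_beat: int) -> float:
    """Convert a tick count to seconds, assuming the tempo does not change."""
    return ticks * tempo_us_per_beat / (ticks_per_beat * 1_000_000)


if __name__ == "__main__":
    print(tempo_to_bpm(500_000))                 # default MIDI tempo
    print(ticks_to_seconds(480, 480, 500_000))   # one beat at that tempo
```

A real file can contain several tempo changes, in which case the tick-to-second conversion has to be applied piecewise between tempo events.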

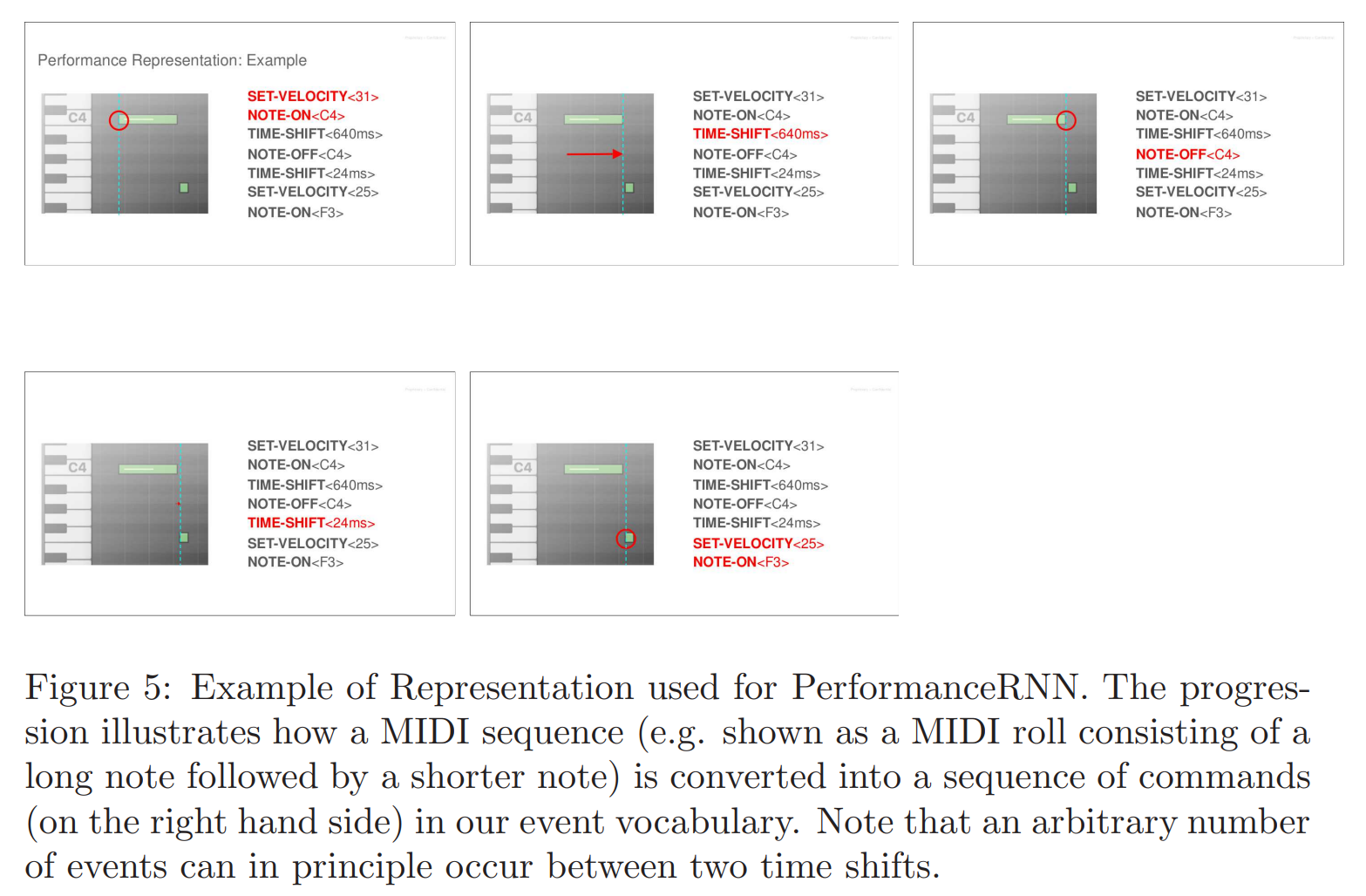

MIDI-like encoding

• 128 NOTE-ON events: one for each of the 128 MIDI pitches. Each one starts a new note.

• 128 NOTE-OFF events: one for each of the 128 MIDI pitches. Each one releases a note.

• 125 TIME-SHIFT events: each one moves the time step forward by increments of 8 ms up to 1 second.

• 32 VELOCITY events: each one changes the velocity applied to all subsequent notes (until the next velocity event).

Time-Shift (∆T) events indicate the relative time gap between events, rather than the absolute time of each event.

From "This Time with Feeling: Learning Expressive Musical Performance" (2018), arXiv
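The four event types above add up to a vocabulary of 128 + 128 + 125 + 32 = 413 tokens. The following is a rough sketch of how a list of notes could be turned into such an event stream. It is not the paper's code: the note tuple layout, the token names, and the way velocities are mapped onto 32 bins are all assumptions made for illustration.

```python
from typing import List, Tuple

TIME_STEP_MS = 8        # resolution of one time-shift step
MAX_SHIFT_STEPS = 125   # 125 steps * 8 ms = 1 second per TIME_SHIFT event
VELOCITY_BINS = 32
VOCAB_SIZE = 128 + 128 + MAX_SHIFT_STEPS + VELOCITY_BINS

# Hypothetical note format: (start_ms, end_ms, pitch, velocity)
Note = Tuple[int, int, int, int]


def encode_midi_like(notes: List[Note]) -> List[str]:
    """Turn notes into NOTE_ON / NOTE_OFF / TIME_SHIFT / VELOCITY tokens."""
    boundaries = []
    for start_ms, end_ms, pitch, velocity in notes:
        # is_on = 1 for note starts, 0 for note ends, so that at the same
        # time step a note-off sorts before a note-on.
        boundaries.append((round(start_ms / TIME_STEP_MS), 1, pitch, velocity))
        boundaries.append((round(end_ms / TIME_STEP_MS), 0, pitch, 0))
    boundaries.sort()

    events: List[str] = []
    current_step = 0
    current_bin = None
    for step, is_on, pitch, velocity in boundaries:
        gap = step - current_step
        while gap > 0:  # gaps longer than 1 second need several shifts
            shift = min(gap, MAX_SHIFT_STEPS)
            events.append(f"TIME_SHIFT_{shift * TIME_STEP_MS}ms")
            gap -= shift
        current_step = step
        if is_on:
            velocity_bin = velocity * VELOCITY_BINS // 128  # assumed binning
            if velocity_bin != current_bin:
                events.append(f"VELOCITY_{velocity_bin}")
                current_bin = velocity_bin
            events.append(f"NOTE_ON_{pitch}")
        else:
            events.append(f"NOTE_OFF_{pitch}")
    return events


if __name__ == "__main__":
    print(encode_midi_like([(0, 400, 60, 80), (400, 1600, 64, 80)]))
```

Note that the second note lasts 1.2 seconds, so its duration is spread over two TIME_SHIFT tokens. The model has to pair each NOTE_ON with the matching NOTE_OFF on its own, which is one reason note durations are hard to learn with this encoding.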

High-level (semantic) information

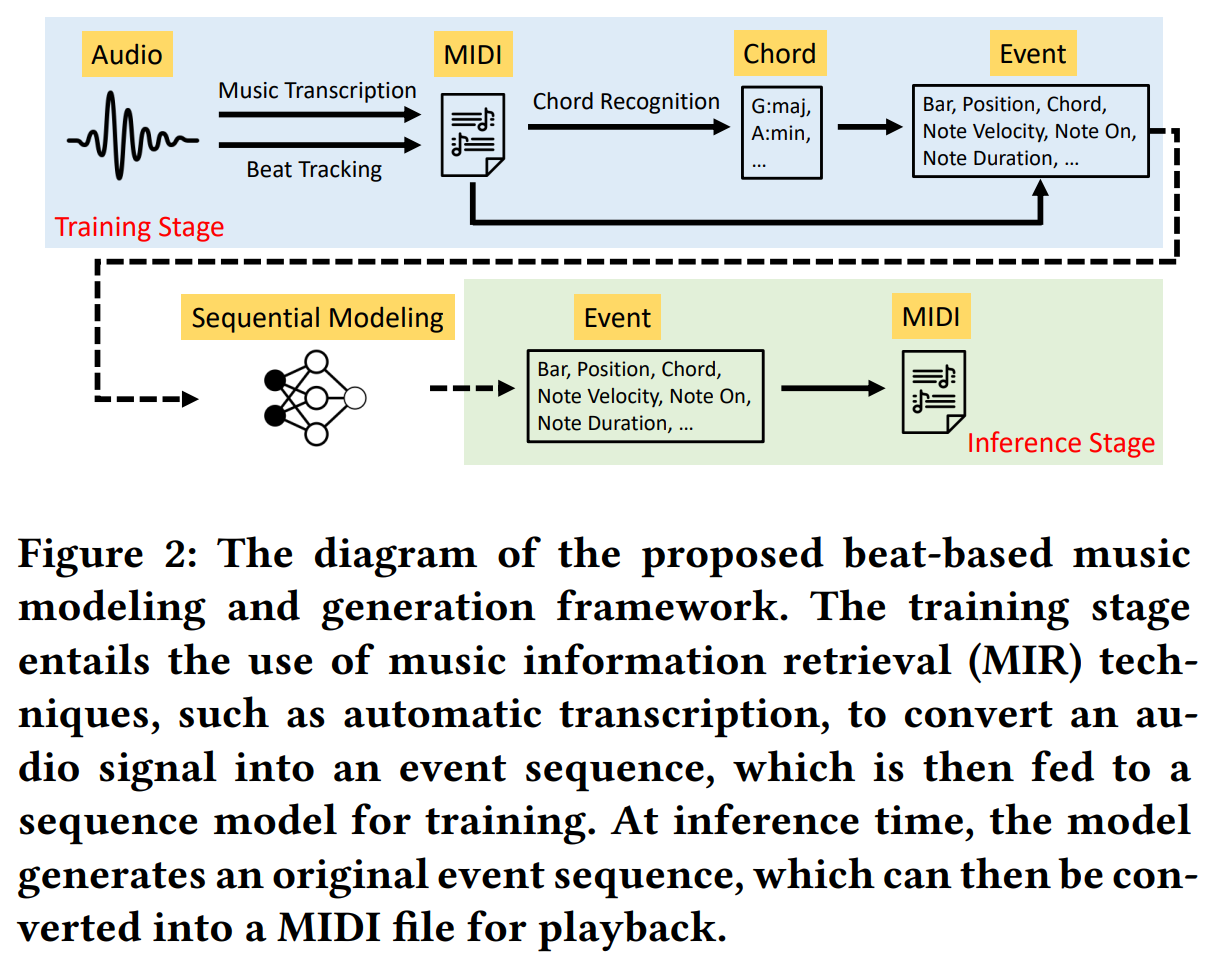

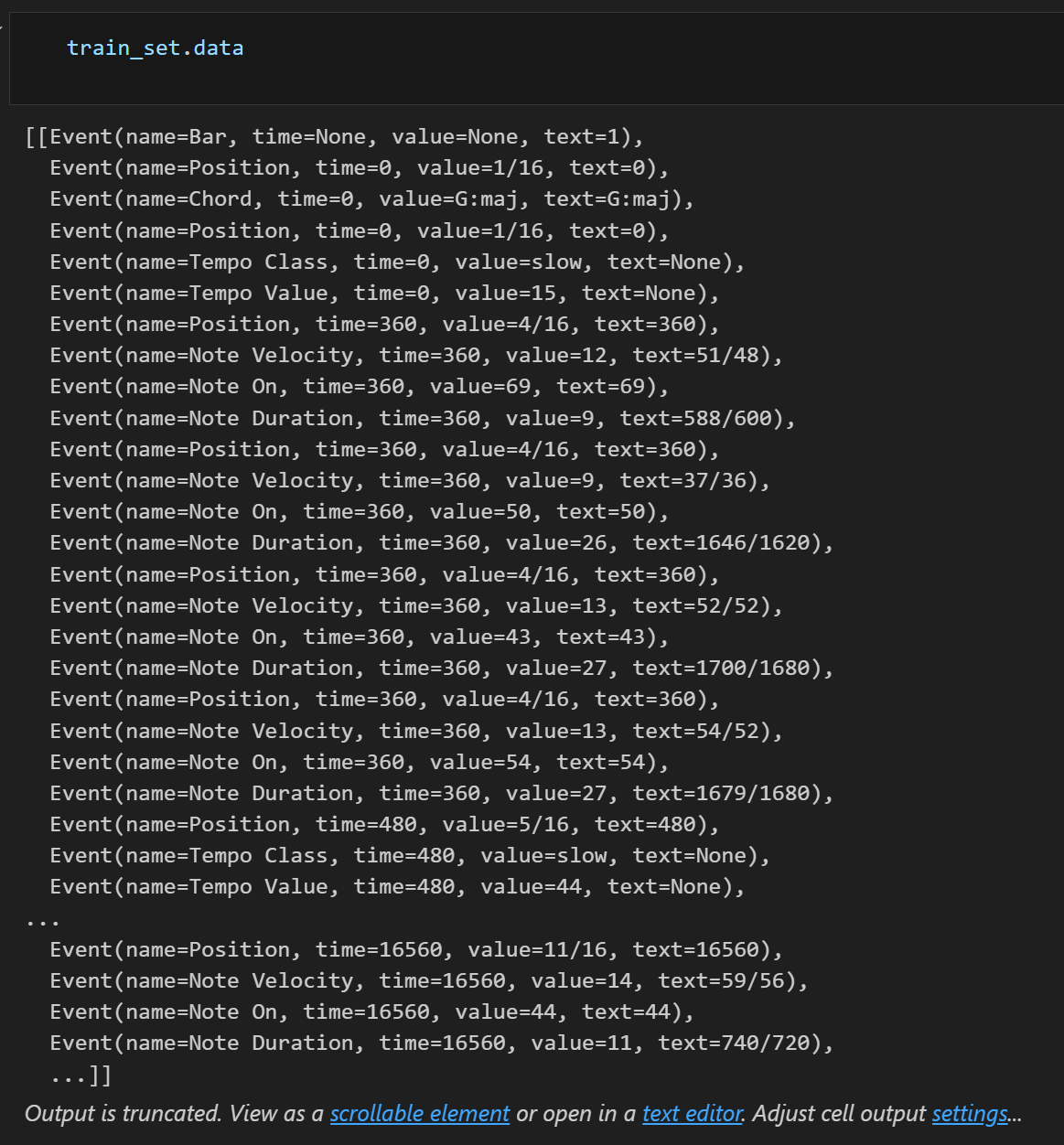

REMI (REvamped MIDI-derived events) style encoding adds downbeat, tempo, and chord information:

1) To focus on recurring patterns and accents: the Position and Bar events in REMI style encoding provide an explicit metrical grid for modeling music in a beat-based manner.

2) To model the expressive rhythmic freedom in music: a set of Tempo events is added to allow local tempo changes per beat.

3) To control the chord progression underlying the music being composed: Chord events are introduced to make the harmonic structure of the music explicit.

REMI style encoding

From "Pop Music Transformer: Beat-based Modeling and Generation of Expressive Pop Piano Compositions"

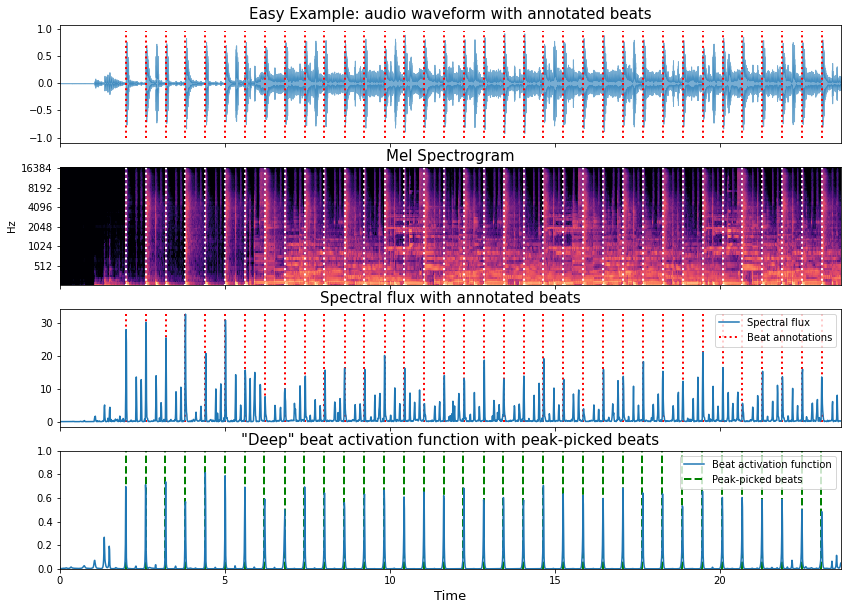
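To make the metrical grid concrete, here is a simplified sketch of a REMI-style encoder. It assumes 4/4 time, a 16-step grid per bar, and notes that are already quantized to that grid; the note tuple layout, the token spellings, and the velocity binning are my own assumptions, and Tempo and Chord events are left out to keep the example short.

```python
from typing import List, Tuple

POSITIONS_PER_BAR = 16  # assumed: 16th-note grid, four beats per bar

# Hypothetical note format: (start_step, duration_steps, pitch, velocity),
# where steps are 16th notes counted from the start of the piece.
Note = Tuple[int, int, int, int]


def encode_remi(notes: List[Note]) -> List[str]:
    """Encode grid-quantized notes as Bar / Position / Note-* tokens."""
    events: List[str] = []
    current_bar = -1
    current_position = None
    for start, duration, pitch, velocity in sorted(notes):
        bar, position = divmod(start, POSITIONS_PER_BAR)
        while current_bar < bar:
            # One Bar token per bar, including empty bars.
            events.append("Bar")
            current_bar += 1
            current_position = None
        if position != current_position:
            # Notes sharing an onset share a single Position token.
            events.append(f"Position_{position + 1}/{POSITIONS_PER_BAR}")
            current_position = position
        events.append(f"Note-Velocity_{velocity // 4}")  # assumed 32 bins
        events.append(f"Note-On_{pitch}")
        events.append(f"Note-Duration_{duration}")
    return events


if __name__ == "__main__":
    for token in encode_remi([(0, 4, 60, 80), (0, 4, 64, 80), (20, 2, 67, 100)]):
        print(token)
```

Unlike the MIDI-like encoding, each note carries its own duration token, and time is expressed relative to the bar rather than as a gap since the previous event. Everything therefore depends on the bar and beat positions being estimated correctly.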

What is a beat?

To create the Bar events, we employ the recurrent neural network model to estimate from the audio files the position of the ‘downbeats,’ which correspond to the first beat in each bar.

Shortcomings of REMI style encoding

1) Beat tracking: in the dataset provided with Pop Music Transformer, some files, such as '008.mid', appear to be tracked at double tempo. My guess is that the beat-tracking model they used does not distinguish between similar meters such as 2/4 and 4/4.

2) Only four-beat songs are considered: a meter detection algorithm is needed to handle three-beat songs.

3) Chord recognition relies on a limited algorithm:

- Why must a dominant chord include 7 (the 5th)? And what if the notes include only 10 (the b7th) without 7 (the 5th)?

    if 7 in sequence and 10 in sequence:
        quality = 'dom'
    else:
        quality = 'maj'

- Tension notes such as added 9ths, 11ths, and 13ths are not considered:

    self.CHORD_OUTSIDERS_1 = {'maj': [2, 5, 9],
                              'min': [2, 5, 8],
                              'dim': [2, 5, 10],
                              'aug': [2, 5, 9],
                              'dom': [2, 5, 9]}
    # define chord outsiders (-2)
    self.CHORD_OUTSIDERS_2 = {'maj': [1, 3, 6, 8, 10],
                              'min': [1, 4, 6, 9, 11],
                              'dim': [1, 4, 7, 8, 11],
                              'aug': [1, 3, 6, 7, 10],
                              'dom': [1, 3, 6, 8, 11]}

- Also, there is no way to choose among equally scored chords. This can produce chords that do not fit the current key of the song.
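One possible way to handle ties, sketched below, is to prefer candidates whose tones all belong to the current key, and then candidates whose root is actually sounded. This is only an idea for improvement, not the REMI implementation: the scoring here is a deliberately simplified stand-in (+1 per chord tone present, -1 per note outside the chord), the key is assumed to be known and major, and all function names are hypothetical.

```python
from typing import List, Set, Tuple

# Chord tones as semitone intervals above the root.
CHORD_TONES = {
    "maj": (0, 4, 7),
    "min": (0, 3, 7),
    "dim": (0, 3, 6),
    "aug": (0, 4, 8),
    "dom": (0, 4, 7, 10),
}
MAJOR_SCALE = (0, 2, 4, 5, 7, 9, 11)


def chord_pitch_classes(root: int, quality: str) -> Set[int]:
    """Pitch classes (0-11) of the chord with the given root and quality."""
    return {(root + interval) % 12 for interval in CHORD_TONES[quality]}


def score(notes: Set[int], root: int, quality: str) -> int:
    """Simplified score: +1 per chord tone present, -1 per outside note."""
    tones = chord_pitch_classes(root, quality)
    return len(notes & tones) - len(notes - tones)


def tied_candidates(notes: Set[int]) -> List[Tuple[int, str]]:
    """All (root, quality) pairs that share the highest score."""
    scored = [(score(notes, r, q), r, q) for r in range(12) for q in CHORD_TONES]
    best = max(s for s, _, _ in scored)
    return [(r, q) for s, r, q in scored if s == best]


def pick_chord(notes: Set[int], key_root: int) -> Tuple[int, str]:
    """Break ties by key membership first, then by whether the root sounds."""
    scale = {(key_root + i) % 12 for i in MAJOR_SCALE}

    def preference(candidate: Tuple[int, str]) -> Tuple[bool, bool]:
        root, quality = candidate
        fits_key = chord_pitch_classes(root, quality) <= scale
        return (fits_key, root in notes)

    return max(tied_candidates(notes), key=preference)


if __name__ == "__main__":
    notes = {0, 4}  # only C and E are sounding
    print(tied_candidates(notes))
    print(pick_chord(notes, key_root=0))  # key of C major
```

With only C and E sounding, several chords tie on score, including C major, A minor, and C augmented. The key-aware preference keeps the choice inside C major, whereas an arbitrary pick among the tied chords could land on a chord that is foreign to the key.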
