[paper review] Pop Music Transformer: Beat-based Modeling and Generation of Expressive Pop Piano Compositions

Paper Review

from : https://arxiv.org/abs/2002.00212

by Yu-Siang Huang, Yi-Hsuan Yang

Abstract

In contrast with this general approach, this paper shows that Transformers can do even better for music modeling, when we improve the way a musical score is converted into the data fed to a Transformer model. In particular, we seek to impose a metrical structure in the input data, so that Transformers can be more easily aware of the beat-bar-phrase hierarchical structure in music. The new data representation maintains the flexibility of local tempo changes, and provides hurdles to control the rhythmic and harmonic structure of music.

KEYWORDS

Automatic music composition, transformer, neural sequence model

1 Introduction

elements in music

melody : the organization of musical notes of different fundamental frequencies (from low to high)

rhythm : the placement of strong and weak beats over time

structure : Repetition and long-term structure

2 things for music generation

- the way music is converted into discrete tokens for language modeling

- the machine learning algorithm used to build the model (sparse attention, Transformer-XL)

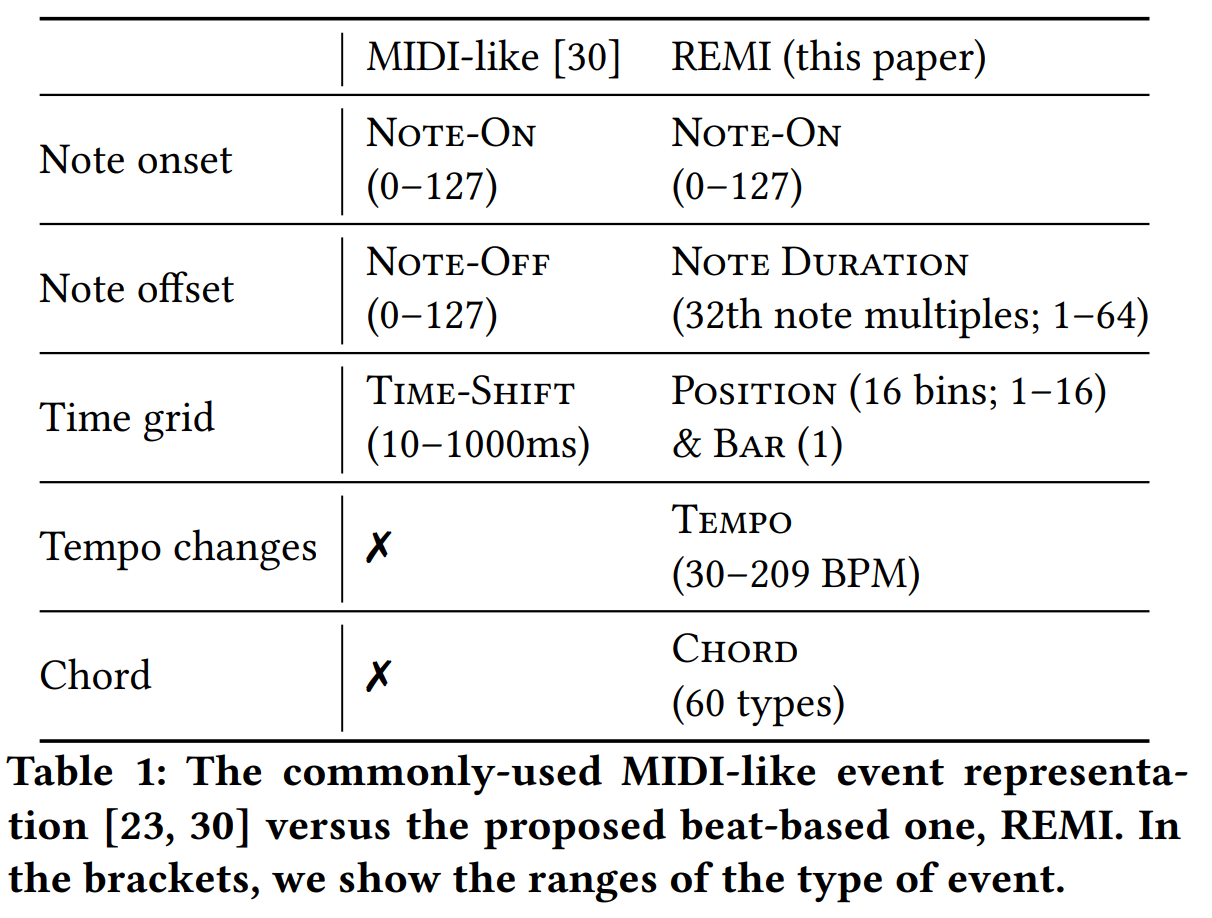

MIDI-like vs. REMI

from the paper

This MIDI-like representation is general and represents keyboard-style music (such as piano music) fairly faithfully, therefore a good choice as the format for the input data fed to Transformers. A general idea here is that a deep network can learn abstractions of the MIDI data that contribute to the generative musical modeling on its own. Hence, high-level (semantic) information of music, such as downbeat, tempo and chord, are not included in this MIDI-like representation.

REMI style encoding

overall process of REMI encoding

added Bar, Position, Tempo, Chord

from the paper

example of REMI style encoding

2 Related Works

Note-off vs. Note duration

In MIDI-like, the duration of a note has to be inferred from the time gap between a Note-On and the corresponding Note-Off, by accumulating the time span of the Time Shift events in between.
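To make the point about inferred durations concrete, here is a minimal sketch of how a note's duration could be recovered from a MIDI-like event stream. The `(event_type, value)` tuple format and the millisecond unit for `time_shift` are assumptions made for illustration, not the paper's exact token format.

```python
from typing import Dict, List, Tuple

# Hypothetical event format: (event_type, value).
# "note_on" / "note_off" carry a MIDI pitch; "time_shift" carries milliseconds.
Event = Tuple[str, int]


def infer_durations(events: List[Event]) -> List[Tuple[int, int, int]]:
    """Return (pitch, onset_ms, duration_ms) for every completed note."""
    now = 0
    open_notes: Dict[int, int] = {}  # pitch -> onset time of the sounding note
    notes = []
    for kind, value in events:
        if kind == "time_shift":
            # Durations are only implicit: they accumulate across time shifts.
            now += value
        elif kind == "note_on":
            open_notes[value] = now
        elif kind == "note_off" and value in open_notes:
            onset = open_notes.pop(value)
            notes.append((value, onset, now - onset))
    return notes


events = [
    ("note_on", 60), ("time_shift", 250),
    ("note_on", 64), ("time_shift", 250),
    ("note_off", 60), ("time_shift", 500),
    ("note_off", 64),
]
print(infer_durations(events))
```

With an explicit Note Duration event, as in REMI, this bookkeeping is unnecessary because each note carries its own length.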

Time shift vs. Position & Bar

We note that there are extra benefits in using Position & Bar, including

1) we can more easily learn the dependency (e.g., repetition) of note events occurring at the same Position (⋆/Q) across bars

2) we can add bar-level conditions to condition the generation process if we want

3) we have time reference to coordinate the generation of different tracks for the case of multi-instrument music.
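The Position & Bar idea can be sketched as follows. This assumes onsets are given in beats, a 4/4 meter, and Q = 16 positions per bar; the token strings (`Bar`, `Position_k/Q`) are illustrative names rather than the authors' exact vocabulary.

```python
def to_bar_position(onset_beats, beats_per_bar=4, q=16):
    """Map an onset in beats to (bar_index, position), with position in 1..q."""
    steps_per_beat = q / beats_per_bar
    step = round(onset_beats * steps_per_beat)  # snap to the nearest grid step
    bar, pos = divmod(step, q)
    return bar, pos + 1


def encode(onsets_beats, beats_per_bar=4, q=16):
    """Emit a Bar token at each bar line and a Position token per onset."""
    tokens = []
    current_bar = -1
    for onset in sorted(onsets_beats):
        bar, pos = to_bar_position(onset, beats_per_bar, q)
        while current_bar < bar:  # also emits Bar tokens for empty bars
            tokens.append("Bar")
            current_bar += 1
        tokens.append(f"Position_{pos}/{q}")
    return tokens


print(encode([0.0, 1.0, 4.0]))
```

Because a note on the first beat of every bar always maps to the same `Position_1/16` token, repetition across bars becomes visible at the token level, which is the first benefit listed above.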

Tempo

However, if you look into the tempo information in the MIDI files provided by the authors, you can easily find some errors. The one I observed is that when a track has many notes in a short span (many notes played quickly), the tempo goes up too much.

Unlike the Position events, information regarding the Tempo events may not be always available in a MIDI score. But, for the case of MIDI performances, one can derive such Tempo events with the use of an audio-domain tempo estimation function.

from Sebastian Böck, Florian Krebs, and Gerhard Widmer. 2016. Joint beat and downbeat tracking with recurrent neural networks. In Proc. Int. Soc. Music Information Retrieval Conf. 255–261.

Chords

However, the chord-finding algorithm has some errors.

1. There is no priority among equally best-scored chords. For example, if the key is A minor, the algorithm may select C major instead of A minor for a certain harmony simply because C major comes first in the candidate list.

2. The algorithm also just extends the previously selected chord when the next chord section is empty (when no suitable chord is found), which adds noise to the selection.
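The first issue can be reproduced with a toy example. The candidate list, scores, and the bass-note tie-break below are all made up for illustration; this is not the authors' chord recognizer, only a sketch of why list order decides ties and one hypothetical way to break them.

```python
# Toy data: (root, quality, score). The scores are invented for this example.
candidates = [("C", "major", 3), ("A", "minor", 3), ("F", "major", 1)]


def pick_first_best(cands):
    # max() returns the first item with the highest score,
    # so the order of the candidate list decides ties.
    return max(cands, key=lambda c: c[2])


def pick_with_bass_tiebreak(cands, bass_root):
    # Hypothetical tie-break: among equal scores, prefer the chord
    # whose root matches the lowest sounding note.
    return max(cands, key=lambda c: (c[2], c[0] == bass_root))


print(pick_first_best(candidates))
print(pick_with_bass_tiebreak(candidates, bass_root="A"))
```

Any secondary criterion (bass note, key estimate, previous chord) would work in the same way; the point is only that some explicit priority is needed when scores tie.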

I may also need to look at how "Commu" from Pozalabs has tried to extend the chords from REMI.

A chord consists of a root note and a chord quality. Here, we consider 12 chord roots (C,C#,D,D#,E,F,F#,G,G#,A,A#,B) and five chord qualities (major, minor, diminished, augmented, dominant), yielding 60 possible Chord events, covering the triad and seventh chords
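As a quick check of the count, the 60 Chord events can be enumerated as the product of roots and qualities. The `Chord_root:quality` token naming is an assumption for illustration.

```python
from itertools import product

ROOTS = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
QUALITIES = ["major", "minor", "diminished", "augmented", "dominant"]

# One token per (root, quality) pair; the naming scheme is hypothetical.
CHORD_EVENTS = [f"Chord_{root}:{quality}" for root, quality in product(ROOTS, QUALITIES)]

print(len(CHORD_EVENTS))
print(CHORD_EVENTS[:5])
```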

4 Proposed framework

5 Evaluation

Dataset

What about a note-level rather than a position-level representation? Then we could significantly reduce the number of tokens while gaining a finer grid to improve the expression of music.

In our preliminary experiments, we have attempted to use a finer time grid (e.g., 32-th or 64-th note) to reduce quantization errors and to improve the expression of music (e.g., to include triplets, swing, micro-timing variations, etc). However, we found that this seems to go beyond the capability of the adopted Transformer-XL architecture.
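The trade-off behind the grid resolution can be illustrated with a small calculation. The sketch below snaps eighth-note triplet onsets to 16th-, 32nd-, and 64th-note grids and reports the worst-case error in beats; the helper names are hypothetical and a quarter-note beat is assumed.

```python
from fractions import Fraction


def quantize(onset_beats, steps_per_beat):
    """Snap an onset (in beats) to the nearest grid step."""
    step = round(onset_beats * steps_per_beat)
    return Fraction(step, steps_per_beat)


def max_error(onsets, steps_per_beat):
    """Largest distance between an onset and its quantized position."""
    return max(abs(quantize(o, steps_per_beat) - o) for o in onsets)


# Eighth-note triplet within one quarter-note beat.
triplet = [Fraction(0), Fraction(1, 3), Fraction(2, 3)]

# 4, 8, 16 steps per beat correspond to 16th-, 32nd-, 64th-note grids.
for steps_per_beat in (4, 8, 16):
    print(steps_per_beat, max_error(triplet, steps_per_beat))
```

Each doubling of the grid halves the worst-case triplet error, but it also doubles the number of distinct Position values per bar, which is one way to read the difficulty reported in the quoted passage.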
