이번에 대용량 csv파일을 조회해야 할 일이 생겼다.

pandas로 하기에 용량이 매우 컸다.

단기 프로젝트로 간단하게 해결해 볼 계획이다.

방식은 spark thrift를 띄워

hive에 external table을 만들고

spark에서 제공하는 ansi sql을 사용는 것이다.

파티셔닝

/data

|-- year=2023

| |-- month=1

| | |-- day=1

| | | |-- data1.csv

| | | |-- data2.csv

| | |-- day=2

| | |-- data3.csv

|-- year=2024

|-- month=3

|-- day=15

|-- data4.csvdocker container 실행

docker pull ubuntu

docker run -ti \

-p 10000:10000 \

-v /data:/data

--name spark \

ubuntu /bin/bashspark 설치

mkdir spark

cd spark

wget https://archive.apache.org/dist/spark/spark-3.2.3/spark-3.2.3-bin-hadoop2.7.tgz

tar xvf spark-3.2.3-bin-hadoop2.7.tgz

rm spark-3.2.3-bin-hadoop2.7.tgzspark 환경변수 세팅

vi ~/.bashrc

export SPARK_HOME=/spark/spark-3.2.3-bin-hadoop2.7

export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin

source ~/.bashrcthrift 서버 실행

start-thriftserver.shdatabase 생성

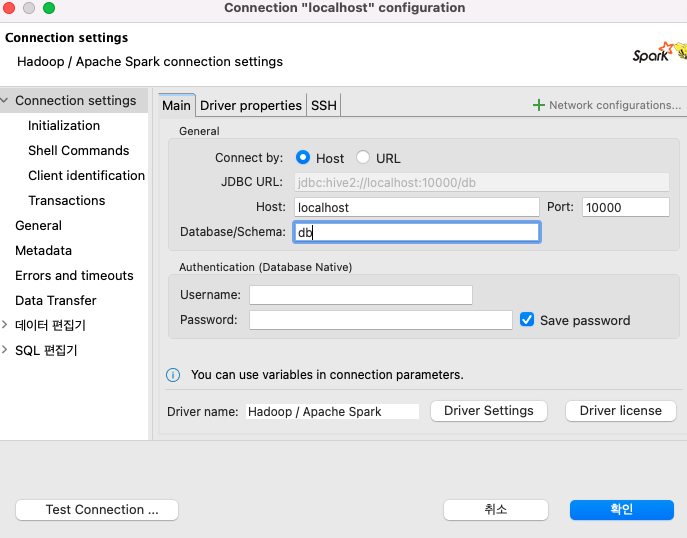

CREATE DATABASE [database name];dbeaver 접속

External Table 생성

CREATE external TABLE user_logs (

id integer,

name string,

email string,

age integer

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY ','

LINES TERMINATED BY '\n'

STORED AS TEXTFILE

LOCATION '/data/rems'

PARTITIONED BY (year INT, month INT, day INT)

TBLPROPERTIES ("skip.header.line.count"="1");데이터 스캔

MSCK REPAIR TABLE user_logs;Partition Pruning

select *

from user_logs

where year=2023

and month=5

and day=15pyhive로 접속하기

관련 패키지 설치

pip install pandas

pip install pyhive

pip install thrift

pip install thrift_sasl코드

from pyhive import hive

import pandas as pd

con = hive.connect(host='localhost', port='10000', database='default')

sql = """

select *

from user_logs

where year=2018

and MONTH=1

and day=1

"""

df = pd.read_sql(con=con, sql=sql)

print(df)