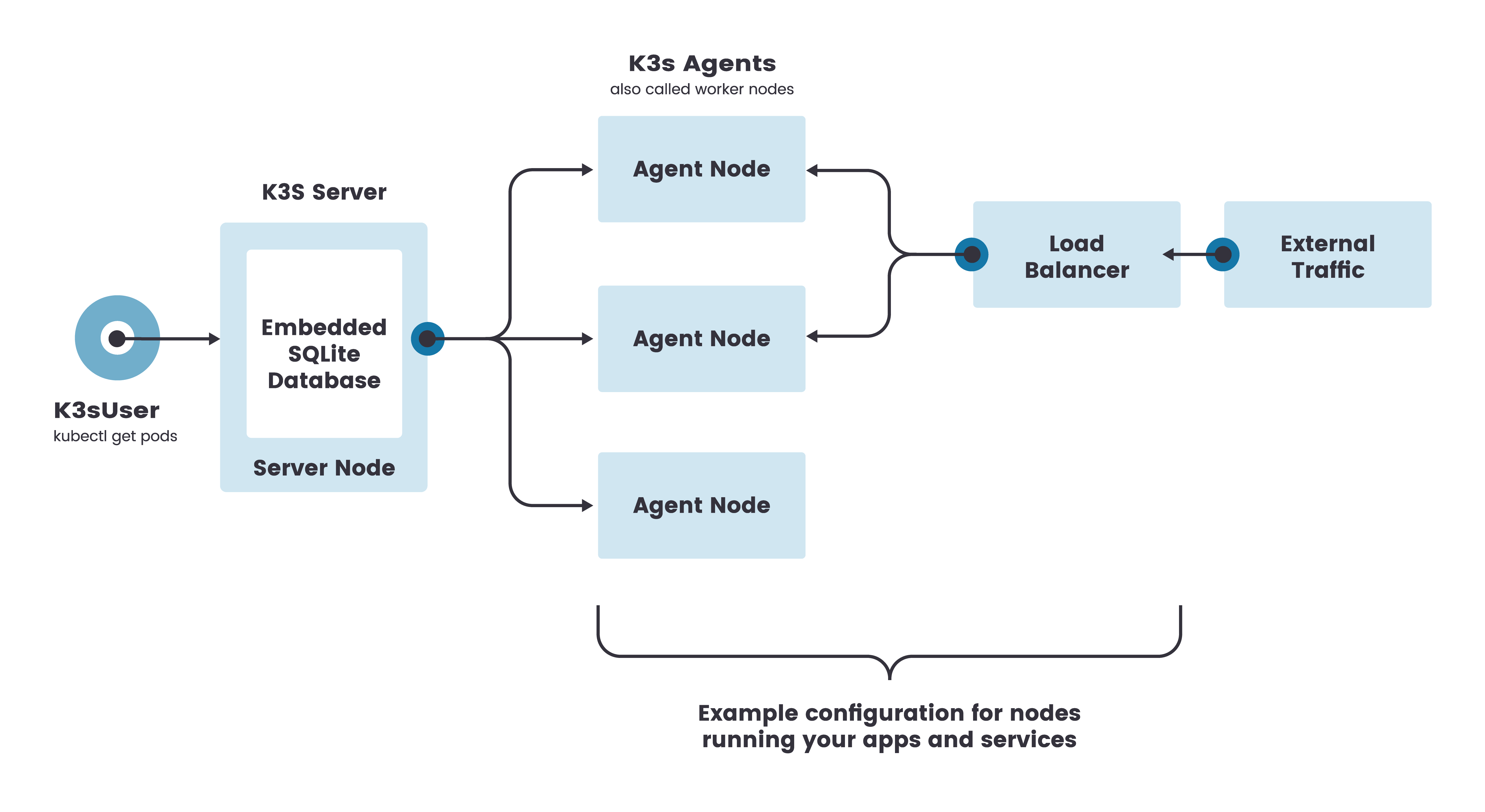

We plan to build the cluster with the structure shown in the figure above.

In this post, we will bring up the master node, labeled K3s Server in the figure.

Prerequisites

- A machine with 2 cores, 2 GB of RAM, and Ubuntu 18.04
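Before installing, it can help to confirm that the machine really meets these specs. The following is a minimal sketch and not part of the original setup: `meets_k3s_prereqs` is a hypothetical helper name, and the 1900 MiB threshold is an assumption that leaves room for the memory a 2 GB machine does not report as available to the OS.

```shell
# Hypothetical helper: succeeds only if the machine has at least 2 cores
# and roughly 2 GB of RAM (threshold of 1900 MiB is an assumption).
meets_k3s_prereqs() {
  cores="$1"    # number of CPU cores
  mem_mb="$2"   # total memory in MiB
  [ "$cores" -ge 2 ] && [ "$mem_mb" -ge 1900 ]
}

# Usage on a Linux host (nproc and /proc/meminfo are available on Ubuntu):
# meets_k3s_prereqs "$(nproc)" \
#   "$(awk '/^MemTotal:/ {print int($2/1024)}' /proc/meminfo)" \
#   && echo "prerequisites met"
```

Taking the values as arguments keeps the check itself independent of how the numbers are collected.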

Installation

root@master:~# curl -sfL https://get.k3s.io | sh -

[INFO] Finding release for channel stable

[INFO] Using v1.22.7+k3s1 as release

[INFO] Downloading hash https://github.com/k3s-io/k3s/releases/download/v1.22.7+k3s1/sha256sum-amd64.txt

[INFO] Downloading binary https://github.com/k3s-io/k3s/releases/download/v1.22.7+k3s1/k3s

[INFO] Verifying binary download

[INFO] Installing k3s to /usr/local/bin/k3s

[INFO] Skipping installation of SELinux RPM

[INFO] Creating /usr/local/bin/kubectl symlink to k3s

[INFO] Creating /usr/local/bin/crictl symlink to k3s

[INFO] Creating /usr/local/bin/ctr symlink to k3s

[INFO] Creating killall script /usr/local/bin/k3s-killall.sh

[INFO] Creating uninstall script /usr/local/bin/k3s-uninstall.sh

[INFO] env: Creating environment file /etc/systemd/system/k3s.service.env

[INFO] systemd: Creating service file /etc/systemd/system/k3s.service

[INFO] systemd: Enabling k3s unit

Created symlink /etc/systemd/system/multi-user.target.wants/k3s.service → /etc/systemd/system/k3s.service.

[INFO] systemd: Starting k3s

Now that the installation is finished, let's check the status.

root@master:~# service k3s status

● k3s.service - Lightweight Kubernetes

Loaded: loaded (/etc/systemd/system/k3s.service; enabled; vendor preset: enabled)

Active: active (running) since Wed 2022-04-20 18:38:22 KST; 1min 39s ago

Docs: https://k3s.io

Process: 25316 ExecStartPre=/sbin/modprobe overlay (code=exited, status=0/SUCCESS)

Process: 25315 ExecStartPre=/sbin/modprobe br_netfilter (code=exited, status=0/SUCCESS)

Process: 25313 ExecStartPre=/bin/sh -xc ! /usr/bin/systemctl is-enabled --quiet nm-cloud-setup.service (code=exited, status=0/SUCCESS)

Main PID: 25321 (k3s-server)

Tasks: 97

CGroup: /system.slice/k3s.service

├─25321 /usr/local/bin/k3s server

├─25367 containerd

├─25994 /var/lib/rancher/k3s/data/31ff0fd447a47323a7c863dbb0a3cd452e12b45f1ec67dc55efa575503c2c3ac/bin/containerd-shim-runc-v2 -namespace k8s.io -id 10dabaf1418ba836fe57c5513e66a8e0a4dd7cc028e8c01e98f2a2061f636b81 -address /run

├─26077 /pause

├─26169 /var/lib/rancher/k3s/data/31ff0fd447a47323a7c863dbb0a3cd452e12b45f1ec67dc55efa575503c2c3ac/bin/containerd-shim-runc-v2 -namespace k8s.io -id ff32f0db350793942da3e522f896a7e4440dea35e54b933fb49072ccac3b8e53 -address /run

├─26253 /pause

├─26271 /var/lib/rancher/k3s/data/31ff0fd447a47323a7c863dbb0a3cd452e12b45f1ec67dc55efa575503c2c3ac/bin/containerd-shim-runc-v2 -namespace k8s.io -id 41f864a1d060cf5d1133a8768391ce5fbecd79f5c1753248d32dc9ca9fa51033 -address /run

├─26297 /pause

├─26440 local-path-provisioner start --config /etc/config/config.json

├─26493 /coredns -conf /etc/coredns/Corefile

├─26549 /metrics-server --cert-dir=/tmp --secure-port=4443 --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --kubelet-use-node-status-port --metric-resolution=15s

├─27563 /var/lib/rancher/k3s/data/31ff0fd447a47323a7c863dbb0a3cd452e12b45f1ec67dc55efa575503c2c3ac/bin/containerd-shim-runc-v2 -namespace k8s.io -id dae1dd7d1dc9ac13131f187f2b8ff3109f6524296260cfe3d472dd62474939d3 -address /run

├─27606 /pause

├─27637 /var/lib/rancher/k3s/data/31ff0fd447a47323a7c863dbb0a3cd452e12b45f1ec67dc55efa575503c2c3ac/bin/containerd-shim-runc-v2 -namespace k8s.io -id d1459f5fac60a94db19f0d42ad41ff51b7d2a66159c78868ff73e8990fdaa12e -address /run

├─27670 /pause

├─27770 /bin/sh /usr/bin/entry

├─27844 /bin/sh /usr/bin/entry

└─27957 traefik traefik --global.checknewversion --global.sendanonymoususage --entrypoints.metrics.address=:9100/tcp --entrypoints.traefik.address=:9000/tcp --entrypoints.web.address=:8000/tcp --entrypoints.websecure.address=:8

Apr 20 18:39:22 master k3s[25321]: I0420 18:39:22.954518 25321 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for ingressroutetcps.traefik.containo.us

Apr 20 18:39:22 master k3s[25321]: I0420 18:39:22.954595 25321 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for serverstransports.traefik.containo.us

Apr 20 18:39:22 master k3s[25321]: I0420 18:39:22.954647 25321 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for ingressrouteudps.traefik.containo.us

Apr 20 18:39:22 master k3s[25321]: I0420 18:39:22.954698 25321 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for tlsoptions.traefik.containo.us

Apr 20 18:39:22 master k3s[25321]: I0420 18:39:22.954747 25321 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for tlsstores.traefik.containo.us

Apr 20 18:39:22 master k3s[25321]: I0420 18:39:22.954803 25321 resource_quota_monitor.go:229] QuotaMonitor created object count evaluator for middlewaretcps.traefik.containo.us

Apr 20 18:39:22 master k3s[25321]: I0420 18:39:22.954936 25321 shared_informer.go:240] Waiting for caches to sync for resource quota

Apr 20 18:39:23 master k3s[25321]: I0420 18:39:23.055234 25321 shared_informer.go:247] Caches are synced for resource quota

Apr 20 18:39:23 master k3s[25321]: I0420 18:39:23.525829 25321 shared_informer.go:240] Waiting for caches to sync for garbage collector

Apr 20 18:39:23 master k3s[25321]: I0420 18:39:23.525896 25321 shared_informer.go:247] Caches are synced for garbage collector

Checking the cluster status

I'm not yet sure exactly what is going on under the hood, but the installation finished with a single command!
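The installer log shows that the stable channel resolved to v1.22.7+k3s1. To reinstall that same release later, the install script accepts the `INSTALL_K3S_VERSION` environment variable. As a small sketch, the hypothetical helper `k3s_release_from_log` below pulls the release tag out of a saved copy of the installer output; the file name `install.log` in the usage comment is an assumption.

```shell
# Hypothetical helper: read installer output on stdin and print the release
# tag from the line "[INFO] Using <tag> as release".
k3s_release_from_log() {
  sed -n 's/^\[INFO] *Using \(.*\) as release$/\1/p'
}

# Usage (install.log is an assumed file holding the installer output):
# version="$(k3s_release_from_log < install.log)"
# curl -sfL https://get.k3s.io | INSTALL_K3S_VERSION="$version" sh -
```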

Let's check the node status with kubectl.

root@master:~# kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master Ready control-plane,master 5m1s v1.22.7+k3s1 192.168.0.7 <none> Ubuntu 18.04.6 LTS 4.15.0-29-generic containerd://1.5.9-k3s1

root@master:~# kubectl get all -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system pod/local-path-provisioner-84bb864455-5qk54 1/1 Running 0 9m18s

kube-system pod/coredns-96cc4f57d-2rsfc 1/1 Running 0 9m18s

kube-system pod/helm-install-traefik-crd--1-x8h7d 0/1 Completed 0 9m18s

kube-system pod/helm-install-traefik--1-zc4gc 0/1 Completed 1 9m18s

kube-system pod/metrics-server-ff9dbcb6c-xfllk 1/1 Running 0 9m18s

kube-system pod/svclb-traefik-qlnkz 2/2 Running 0 8m38s

kube-system pod/traefik-56c4b88c4b-w5km5 1/1 Running 0 8m39s

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 9m33s

kube-system service/kube-dns ClusterIP 10.43.0.10 <none> 53/UDP,53/TCP,9153/TCP 9m30s

kube-system service/metrics-server ClusterIP 10.43.132.121 <none> 443/TCP 9m28s

kube-system service/traefik LoadBalancer 10.43.57.223 192.168.0.7 80:30766/TCP,443:31445/TCP 8m39s

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-system daemonset.apps/svclb-traefik 1 1 1 1 1 <none> 8m38s

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE

kube-system deployment.apps/local-path-provisioner 1/1 1 1 9m30s

kube-system deployment.apps/coredns 1/1 1 1 9m30s

kube-system deployment.apps/metrics-server 1/1 1 1 9m29s

kube-system deployment.apps/traefik 1/1 1 1 8m39s

NAMESPACE NAME DESIRED CURRENT READY AGE

kube-system replicaset.apps/coredns-96cc4f57d 1 1 1 9m18s

kube-system replicaset.apps/local-path-provisioner-84bb864455 1 1 1 9m18s

kube-system replicaset.apps/metrics-server-ff9dbcb6c 1 1 1 9m18s

kube-system replicaset.apps/traefik-56c4b88c4b 1 1 1 8m39s

NAMESPACE NAME COMPLETIONS DURATION AGE

kube-system job.batch/helm-install-traefik-crd 1/1 39s 9m27s

kube-system job.batch/helm-install-traefik 1/1 40s 9m27s

The components that the earlier introductory post said would be installed in one go are indeed there.
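Reading the list by eye works for a handful of pods. To check it in a script, a rough sketch like the following could filter `kubectl get pods -A` output for anything that is neither Running nor Completed. The function name `unhealthy_pods` is made up for this example, and it assumes the default column order (NAMESPACE, NAME, READY, STATUS).

```shell
# Hypothetical helper: read `kubectl get pods -A` output on stdin and print
# namespace/name for every pod whose STATUS is not Running or Completed.
unhealthy_pods() {
  awk 'NF >= 4 && $1 != "NAMESPACE" && $4 != "Running" && $4 != "Completed" {
         print $1 "/" $2
       }'
}

# Usage:
# kubectl get pods -A | unhealthy_pods
```

An empty result means every pod is either running or finished, as in the output from this install.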

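Among them, it is convenient that the metrics server is installed along with everything else.

With the metrics server installed, the monitoring shown below becomes available, and HPA (Horizontal Pod Autoscaler) can be used as well.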

root@master:~# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

master 75m 3% 1156Mi 58%

root@master:~# kubectl top pod -A

NAMESPACE NAME CPU(cores) MEMORY(bytes)

kube-system coredns-96cc4f57d-2rsfc 2m 12Mi

kube-system local-path-provisioner-84bb864455-5qk54 1m 6Mi

kube-system metrics-server-ff9dbcb6c-xfllk 6m 16Mi

kube-system svclb-traefik-qlnkz 0m 1Mi

kube-system traefik-56c4b88c4b-w5km5 1m 18Mi

Verifying operation

The master node appears to be working normally!
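One thing worth noting in the `kubectl top node` output is that memory is already at 58% on this 2 GB machine. As a hedged sketch, the hypothetical `nodes_over_memory` function below reads that output and prints the nodes whose MEMORY% is above a limit we pass in; the 80 in the usage line is only an example value.

```shell
# Hypothetical helper: read `kubectl top node` output on stdin and print the
# names of nodes whose MEMORY% column exceeds the given limit (in percent).
nodes_over_memory() {
  limit="$1"
  awk -v limit="$limit" '
    NF >= 5 && $1 != "NAME" {
      pct = $5
      sub(/%/, "", pct)                  # "58%" -> "58"
      if (pct + 0 > limit + 0) print $1
    }'
}

# Usage:
# kubectl top node | nodes_over_memory 80
```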

Finally, let's deploy a Deployment and wrap up.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
        ports:
        - containerPort: 80

root@master:~# kubectl apply -f deployment.yaml

deployment.apps/nginx-deployment created

root@master:~# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/nginx-deployment-66b6c48dd5-kznkg 0/1 ContainerCreating 0 11s

pod/nginx-deployment-66b6c48dd5-9fqfl 0/1 ContainerCreating 0 11s

pod/nginx-deployment-66b6c48dd5-7g9v7 0/1 ContainerCreating 0 11s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 23h

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-deployment 0/3 3 0 11s

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-deployment-66b6c48dd5 3 3 0 11s

root@master:~# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/nginx-deployment-66b6c48dd5-7g9v7 1/1 Running 0 2m20s

pod/nginx-deployment-66b6c48dd5-9fqfl 1/1 Running 0 2m20s

pod/nginx-deployment-66b6c48dd5-kznkg 1/1 Running 0 2m20s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 23h

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-deployment 3/3 3 3 2m20s

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-deployment-66b6c48dd5 3 3 3 2m20s

The Deployment is running well, with all three pods in the Running state.

Now let's kill a pod and check that the ReplicaSet brings it back.

root@master:~# kubectl delete pod/nginx-deployment-66b6c48dd5-kznkg

pod "nginx-deployment-66b6c48dd5-kznkg" deleted

root@master:~# kubectl get all -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/nginx-deployment-66b6c48dd5-7g9v7 1/1 Running 0 3m46s 10.42.0.12 master <none> <none>

pod/nginx-deployment-66b6c48dd5-9fqfl 1/1 Running 0 3m46s 10.42.0.11 master <none> <none>

pod/nginx-deployment-66b6c48dd5-gprjb 1/1 Running 0 2s 10.42.0.13 master <none> <none>

We can see that the pod nginx-deployment-66b6c48dd5-gprjb was newly created to replace the deleted one.

It works very well!
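To make the same check without reading the table by eye, we could compare the two halves of the READY column. This is only a sketch: `all_replicas_ready` is a hypothetical helper, not a kubectl feature. kubectl itself also provides `kubectl rollout status deployment/nginx-deployment`, which waits until the rollout finishes.

```shell
# Hypothetical helper: succeeds when a READY value such as "3/3" shows that
# every desired replica is ready.
all_replicas_ready() {
  ready="${1%/*}"      # part before the slash
  desired="${1#*/}"    # part after the slash
  [ "$desired" -gt 0 ] && [ "$ready" -eq "$desired" ]
}

# Usage (READY is the second column of `kubectl get deployment`):
# all_replicas_ready "$(kubectl get deployment nginx-deployment --no-headers | awk '{print $2}')" \
#   && echo "all replicas ready"
```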

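Wrapping up

We installed the master node with a single command.

We also deployed a Deployment and confirmed that the basic features work.

In the next post, we will add worker nodes to build a complete Kubernetes cluster.

Thank you!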