I'm going to try out Google ML Kit.

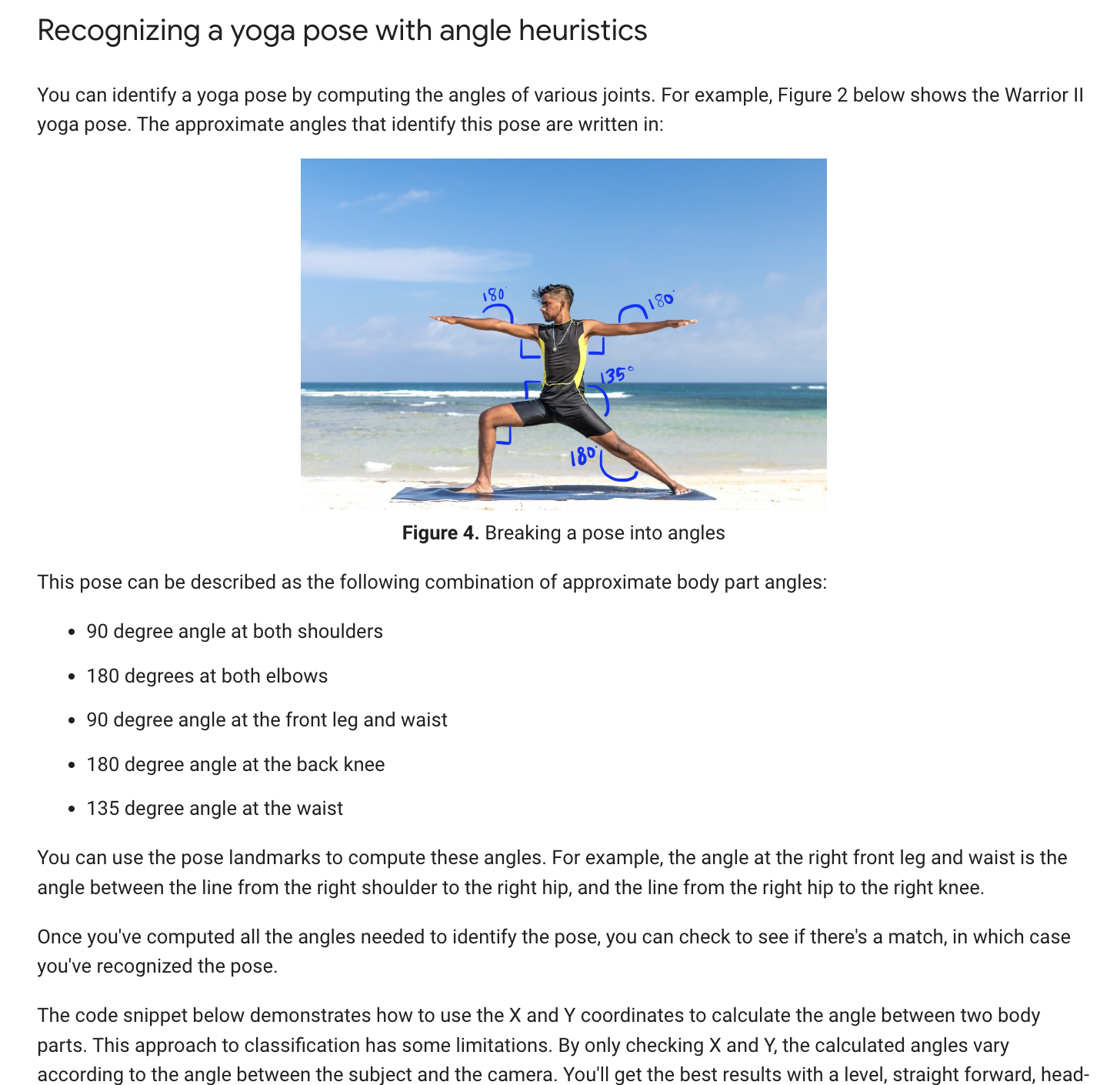

Google provides functions that work on the distances and angles between joints.

https://developers.google.com/ml-kit/vision/pose-detection/classifying-poses?hl=ko

    func angle(
        firstLandmark: PoseLandmark,
        midLandmark: PoseLandmark,
        lastLandmark: PoseLandmark
    ) -> CGFloat {
        // Difference between the directions mid -> last and mid -> first
        let radians: CGFloat =
            atan2(lastLandmark.position.y - midLandmark.position.y,
                  lastLandmark.position.x - midLandmark.position.x) -
            atan2(firstLandmark.position.y - midLandmark.position.y,
                  firstLandmark.position.x - midLandmark.position.x)
        var degrees = radians * 180.0 / .pi
        degrees = abs(degrees) // Angle should never be negative
        if degrees > 180.0 {
            degrees = 360.0 - degrees // Fold the result into the 0...180 range
        }
        return degrees
    }

Apple's pose detection has no functions that work on joint angles or joint distances, so I need to check whether the function above can be built for it.
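As a first check of whether the calculation carries over, here is a minimal sketch of the same angle function written against plain `CGPoint` values instead of ML Kit's `PoseLandmark`. The argument labels are my own choice, and the idea of feeding it the `location` of Vision's recognized points is an assumption I have not yet verified in a running app.

```swift
import Foundation

/// Angle in degrees (0...180) at `mid`, between the segments mid -> first and mid -> last.
/// Sketch only: same math as the ML Kit sample, but on CGPoint.
func angle(first: CGPoint, mid: CGPoint, last: CGPoint) -> CGFloat {
    // Converting to Double keeps atan2 unambiguous on every platform.
    let radians = atan2(Double(last.y - mid.y), Double(last.x - mid.x))
                - atan2(Double(first.y - mid.y), Double(first.x - mid.x))
    var degrees = abs(radians * 180.0 / Double.pi)
    if degrees > 180.0 {
        degrees = 360.0 - degrees // fold into the 0...180 range
    }
    return CGFloat(degrees)
}

// Example: an elbow bent at a right angle (made-up coordinates)
let shoulder = CGPoint(x: 1, y: 0)
let elbow = CGPoint(x: 0, y: 0)
let wrist = CGPoint(x: 0, y: 1)
print(angle(first: shoulder, mid: elbow, last: wrist))
```

One thing to watch: Vision reports point locations in normalized image coordinates, so on a non-square image the x and y axes are scaled differently and the angle would be distorted. Converting the points to pixel coordinates first (for example with `VNImagePointForNormalizedPoint`) should avoid that.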

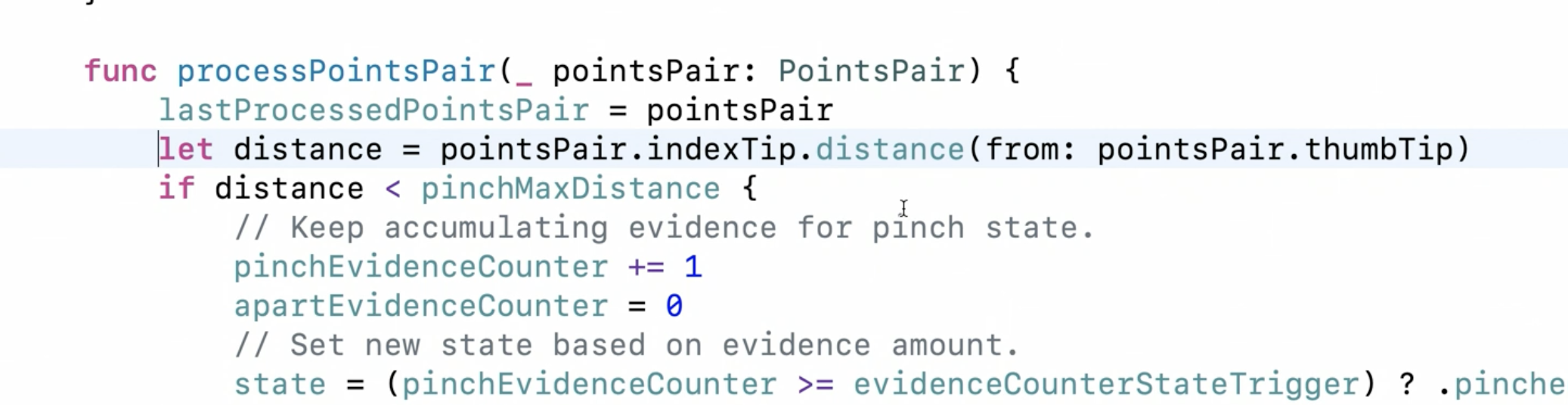

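- Apple's pose detection has no such functions, so they have to be added as an extension. Below is a function that measures the distance between two points, together with a midpoint helper (https://developer.apple.com/videos/play/wwdc2020/10653/)

      extension CGPoint {
          // Point halfway between p1 and p2
          static func midPoint(p1: CGPoint, p2: CGPoint) -> CGPoint {
              return CGPoint(x: (p1.x + p2.x) / 2, y: (p1.y + p2.y) / 2)
          }

          // Euclidean distance from this point to `point`
          func distance(from point: CGPoint) -> CGFloat {
              return hypot(point.x - x, point.y - y)
          }
      }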

- The other WWDC session only covers classifying poses by training an ML model on the shape of the body frame (https://developer.apple.com/videos/play/wwdc2020/10099/)

- I would also like to run this on Android later

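- I ran Google's sample and tried to implement the pose detection feature myself, but the build currently fails because the header files of the framework installed via pod cannot be read. I plan to fix this before the next class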

- https://developer.apple.com/documentation/createml/detecting_human_actions_in_a_live_video_feed This Apple sample tracks the body and uses an ML model to classify the pose from the frames, but it is built with UIKit, and working from a UIKit sample is too much for me right now
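Going back to the `CGPoint` distance helper from the first bullet of this list: a raw distance changes with how far the person stands from the camera, so it is hard to compare against a fixed threshold. Below is a rough sketch of one way around that, expressing a distance in units of shoulder width. The `relativeDistance` function and the choice of shoulder width as the reference are my own assumptions, not something taken from the Apple or Google material.

```swift
import Foundation

extension CGPoint {
    /// Equivalent to the distance helper above, repeated so this snippet compiles on its own.
    func distance(from point: CGPoint) -> CGFloat {
        let dx = Double(point.x - x)
        let dy = Double(point.y - y)
        return CGFloat((dx * dx + dy * dy).squareRoot())
    }
}

/// Hypothetical helper: distance between two joints divided by shoulder width.
/// Returns nil when the shoulders coincide, since the ratio is undefined.
func relativeDistance(_ a: CGPoint, _ b: CGPoint,
                      leftShoulder: CGPoint, rightShoulder: CGPoint) -> CGFloat? {
    let shoulderWidth = leftShoulder.distance(from: rightShoulder)
    guard shoulderWidth > 0 else { return nil }
    return a.distance(from: b) / shoulderWidth
}

// Example with made-up coordinates: are the wrists farther apart than the shoulders?
let leftWrist = CGPoint(x: 0.2, y: 0.5)
let rightWrist = CGPoint(x: 0.8, y: 0.5)
let ratio = relativeDistance(leftWrist, rightWrist,
                             leftShoulder: CGPoint(x: 0.4, y: 0.7),
                             rightShoulder: CGPoint(x: 0.6, y: 0.7))
if let ratio = ratio {
    print(ratio > 1 ? "wrists wider than shoulders" : "wrists within shoulder width")
}
```

Any threshold on this ratio would still have to be tuned against real poses.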