Kaggle Santander Customer Satisfaction Prediction

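- Predict customer satisfaction using XGBoost and LightGBM

- Uses 370 features

- All features are anonymized, so their meaning cannot be inferred

- The class label is TARGET: 1 = dissatisfied customer, 0 = satisfied customer

- Model performance is evaluated with ROC-AUC (area under the ROC curve)

- Data download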

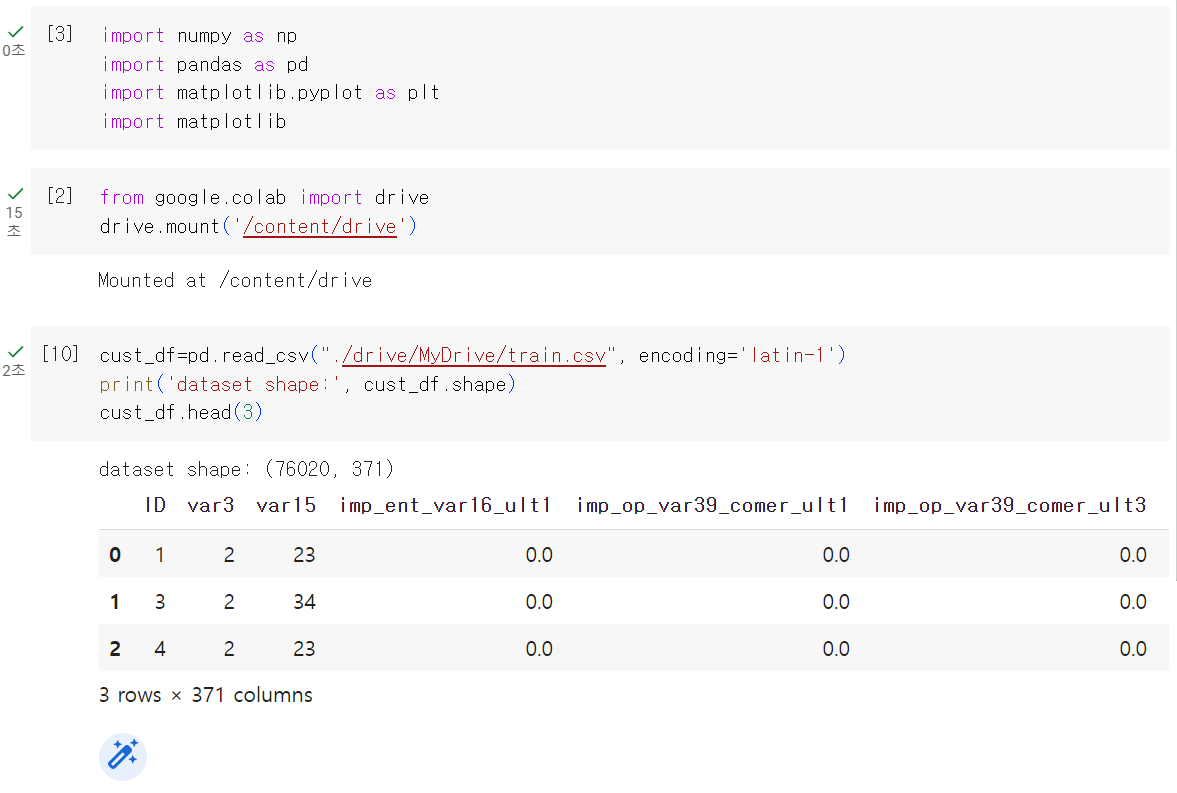

1. Data preprocessing

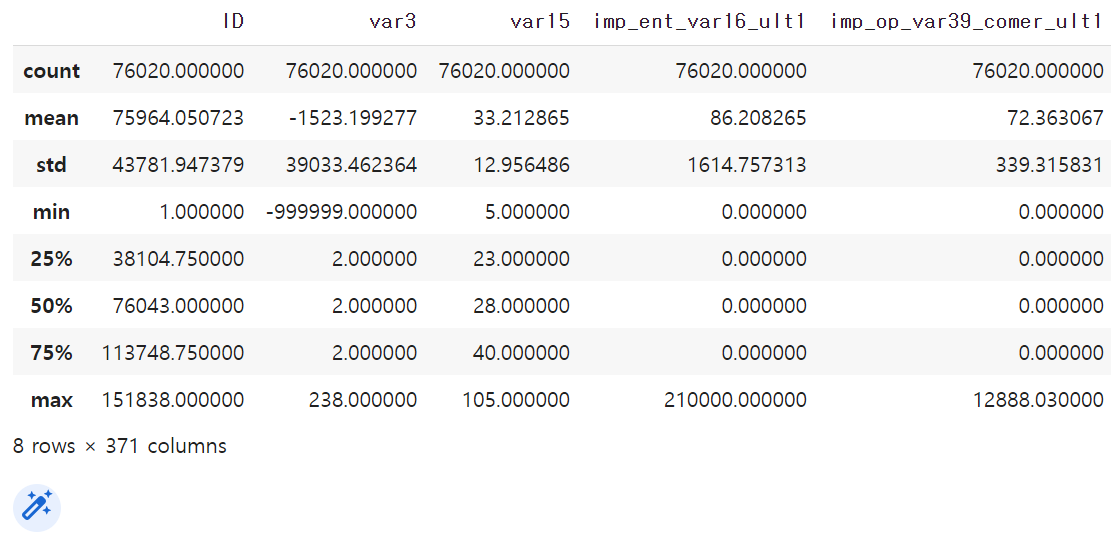

<Output>

| index | ID | var3 | var15 | imp_ent_var16_ult1 | imp_op_var39_comer_ult1 | imp_op_var39_comer_ult3 | imp_op_var40_comer_ult1 | imp_op_var40_comer_ult3 | imp_op_var40_efect_ult1 | imp_op_var40_efect_ult3 | imp_op_var40_ult1 | imp_op_var41_comer_ult1 | imp_op_var41_comer_ult3 | imp_op_var41_efect_ult1 | imp_op_var41_efect_ult3 | imp_op_var41_ult1 | imp_op_var39_efect_ult1 | imp_op_var39_efect_ult3 | imp_op_var39_ult1 | imp_sal_var16_ult1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 2 | 23 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| 1 | 3 | 2 | 34 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| 2 | 4 | 2 | 23 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

▶️ There are 371 columns in total, including the class label column

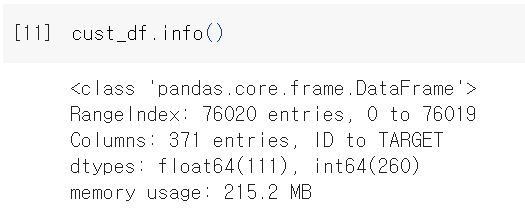

📍 Take a closer look at feature types and null values

cust_df.info()

▶️ 111 features are float and 260 features are int

▶️ All features are numeric, and none contain null values

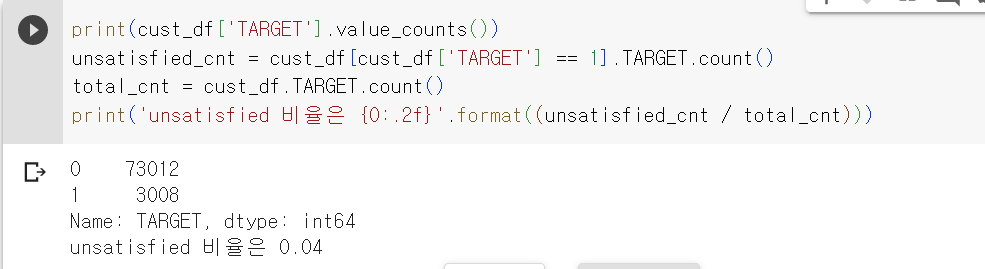
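Because `info()` prints one line per column, a compact summary can be easier to scan when there are 371 columns. The following is a minimal sketch on a small made-up frame; the `toy_df` name and its values are illustrative and not taken from the competition data.

```python
import pandas as pd

# Toy stand-in for cust_df; the values here are invented for illustration.
toy_df = pd.DataFrame({
    "var3": [2, 2, -999999, 8],
    "var15": [23, 34, 23, 37],
    "imp_ent_var16_ult1": [0.0, 0.0, 0.0, 195.0],
    "TARGET": [0, 0, 0, 1],
})

# Number of columns per dtype (the notes report 111 float and 260 int columns).
dtype_counts = toy_df.dtypes.value_counts()
print(dtype_counts)

# Total number of null cells across the whole frame; 0 means no missing values.
total_nulls = int(toy_df.isnull().sum().sum())
print("total nulls:", total_nulls)
```

Running the same two expressions on `cust_df` should give the float/int split and the null count in two short outputs.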

📍 Check the ratio of satisfied to dissatisfied customers in the full data (look at the value distribution of the TARGET label)

print(cust_df['TARGET'].value_counts())

unsatisfied_cnt = cust_df[cust_df['TARGET'] == 1].TARGET.count()

total_cnt = cust_df.TARGET.count()

print('unsatisfied ratio: {0:.2f}'.format((unsatisfied_cnt / total_cnt)))

▶️ Only about 4% of customers are dissatisfied
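With only about 4% of customers dissatisfied, plain accuracy is misleading, which is one reason ROC-AUC suits this task. The sketch below uses made-up labels at a 4% positive rate (not the real data) and a hand-written helper, `pairwise_auc`, which is an illustrative name rather than a library function.

```python
def pairwise_auc(y_true, scores):
    """ROC AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a correct pair.
    """
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Made-up labels: 4 dissatisfied (1) out of 100 customers, i.e. 4%.
y_true = [1] * 4 + [0] * 96

# A "model" that always predicts satisfied (same score for everyone).
constant_scores = [0.0] * 100
constant_preds = [0] * 100

accuracy = sum(p == y for p, y in zip(constant_preds, y_true)) / len(y_true)
auc = pairwise_auc(y_true, constant_scores)

print("accuracy: {0:.2f}".format(accuracy))  # high, despite finding no dissatisfied customer
print("ROC AUC: {0:.2f}".format(auc))        # chance level
```

The constant predictor looks strong on accuracy while its AUC stays at chance level, so AUC is the more informative number for a label this rare.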

📍 Use DataFrame's describe() to check the value distribution of each feature

cust_df.describe()

▶️ The var3 column has a min value of -999999 (NaN or some special exception value was probably converted to this)

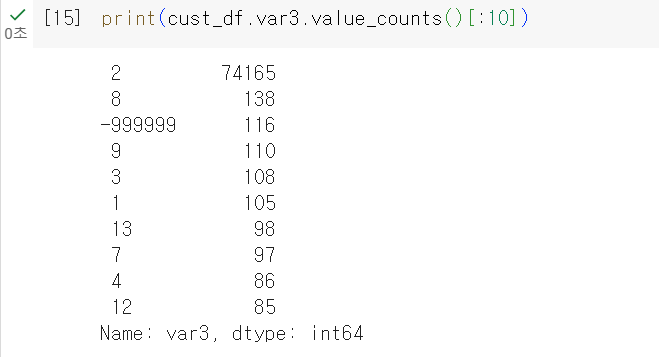

▶️ Printing the var3 values shows 116 occurrences of -999999

▶️ The most frequent value is 2

🔻 var3 is numeric and -999999 is an extreme outlier, so replace it with the most frequent value, 2

🔻 The ID feature is just an identifier, so drop it

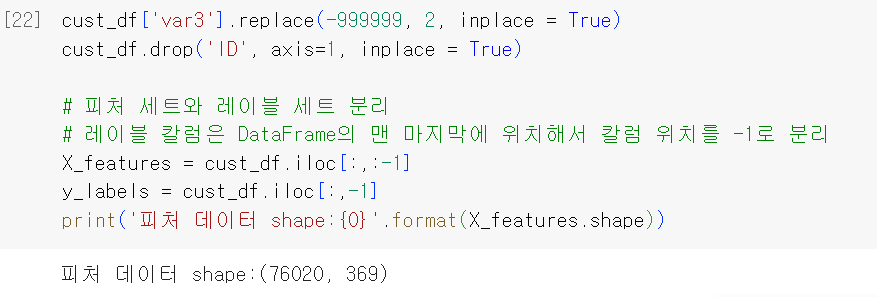
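The inspection described above can be done with `value_counts()`. Here is a minimal sketch on a made-up Series; the counts are illustrative only, whereas on the real data the notes report 116 occurrences of -999999 and 2 as the most frequent value.

```python
import pandas as pd

# Invented values standing in for cust_df['var3'].
var3 = pd.Series([2, 2, 2, 8, -999999, 2, 9, -999999], name="var3")

counts = var3.value_counts()                 # sorted by frequency, most common first
sentinel_cnt = int((var3 == -999999).sum())  # how often the sentinel value appears
most_common = counts.index[0]

print(counts.head(10))
print("-999999 count:", sentinel_cnt)
print("most frequent value:", most_common)

# Replace the sentinel with the most frequent value, returning a new Series.
var3_clean = var3.replace(-999999, most_common)
print("min after replace:", var3_clean.min())
```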

📍 Replace -999999 with 2, drop the ID feature, then split the data into a label set and a feature set and store them separately

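# Assign the result back instead of calling replace(inplace=True) on a column selection

cust_df['var3'] = cust_df['var3'].replace(-999999, 2)

cust_df.drop('ID', axis=1, inplace=True)

# Split into feature set and label set

# The label column is the last column of the DataFrame, so split at column position -1

X_features = cust_df.iloc[:, :-1]

y_labels = cust_df.iloc[:, -1]

print('Feature data shape:{0}'.format(X_features.shape))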

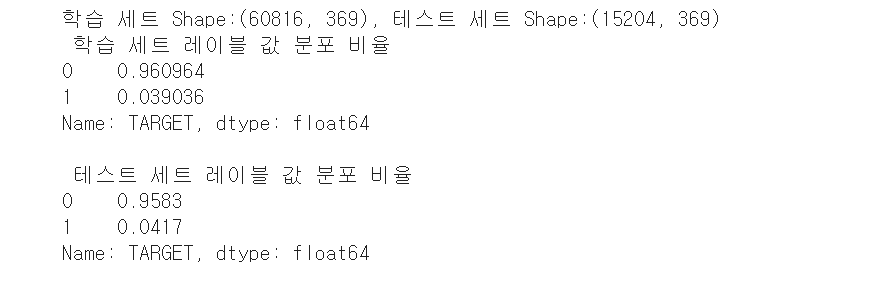

📍 Split into train and test data sets, and check that the TARGET class distribution is similar in both

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X_features, y_labels, test_size=0.2, random_state=0)

train_cnt = y_train.count()

test_cnt = y_test.count()

print('Train set shape:{0}, test set shape:{1}'.format(X_train.shape, X_test.shape))

print(' Label distribution in the train set')

print(y_train.value_counts()/train_cnt)

print('\n Label distribution in the test set')

print(y_test.value_counts()/test_cnt)

▶️ In both the train and test data sets, the TARGET distribution is similar to the original data, with about 4% dissatisfied
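The split above happened to preserve the 4% ratio, but with a rare class that is not guaranteed. One option, not used in the original code, is the `stratify` argument of `train_test_split`. The sketch below uses synthetic labels; the `X_demo` and `y_demo` arrays are made up for illustration.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Synthetic data: 1,000 rows, 4% positive labels, 5 random features.
rng = np.random.RandomState(0)
X_demo = rng.rand(1000, 5)
y_demo = np.array([1] * 40 + [0] * 960)

# stratify=y_demo keeps the class ratio the same in both splits.
X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, test_size=0.2, random_state=0, stratify=y_demo
)

print("train positive ratio: {0:.3f}".format(y_tr.mean()))
print("test positive ratio:  {0:.3f}".format(y_te.mean()))
```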

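2. XGBoost model training and hyperparameter tuning

📍 Build an XGBoost model and evaluate its predictions with ROC AUC

🔻 Train using XGBClassifier, the scikit-learn wrapper

🔻 Since the evaluation criterion is ROC-AUC, eval_metric is 'auc' (logloss would also work)

🔻 Using the test data set as the evaluation set can increase the risk of overfitting, but we will use it here for now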

from xgboost import XGBClassifier

from sklearn.metrics import roc_auc_score

# Set n_estimators to 500; random_state is fixed so that every run of the example gives the same predictions

xgb_clf = XGBClassifier(n_estimators=500, random_state=156)

# Set the evaluation metric to auc and early stopping to 100 rounds, then train

xgb_clf.fit(X_train, y_train, early_stopping_rounds=100, eval_metric="auc", eval_set=[(X_train, y_train), (X_test, y_test)])

xgb_roc_score = roc_auc_score(y_test, xgb_clf.predict_proba(X_test)[:, 1], average='macro')

print('ROC AUC: {0:.4f}'.format(xgb_roc_score))

/usr/local/lib/python3.10/dist-packages/xgboost/sklearn.py:835: UserWarning: 'eval_metric' in 'fit' method is deprecated for better compatibility with scikit-learn, use 'eval_metric' in constructor or'set_params' instead.

warnings.warn(

/usr/local/lib/python3.10/dist-packages/xgboost/sklearn.py:835: UserWarning: 'early_stopping_rounds' in 'fit' method is deprecated for better compatibility with scikit-learn, use 'early_stopping_rounds' in constructor or 'set_params' instead.

warnings.warn(

[0] validation_0-auc:0.82005 validation_1-auc:0.81157

[1] validation_0-auc:0.83400 validation_1-auc:0.82452

[2] validation_0-auc:0.83870 validation_1-auc:0.82745

[3] validation_0-auc:0.84419 validation_1-auc:0.82922

[4] validation_0-auc:0.84783 validation_1-auc:0.83298

[5] validation_0-auc:0.85125 validation_1-auc:0.83500

[6] validation_0-auc:0.85501 validation_1-auc:0.83653

[7] validation_0-auc:0.85831 validation_1-auc:0.83782

[8] validation_0-auc:0.86143 validation_1-auc:0.83802

[9] validation_0-auc:0.86452 validation_1-auc:0.83914

[10] validation_0-auc:0.86717 validation_1-auc:0.83954

[11] validation_0-auc:0.87013 validation_1-auc:0.83983

[12] validation_0-auc:0.87369 validation_1-auc:0.84033

[13] validation_0-auc:0.87620 validation_1-auc:0.84055

[14] validation_0-auc:0.87799 validation_1-auc:0.84135

[15] validation_0-auc:0.88071 validation_1-auc:0.84117

[16] validation_0-auc:0.88237 validation_1-auc:0.84101

[17] validation_0-auc:0.88352 validation_1-auc:0.84071

[18] validation_0-auc:0.88457 validation_1-auc:0.84052

[19] validation_0-auc:0.88592 validation_1-auc:0.84023

[20] validation_0-auc:0.88788 validation_1-auc:0.84012

[21] validation_0-auc:0.88845 validation_1-auc:0.84022

[22] validation_0-auc:0.88980 validation_1-auc:0.84007

[23] validation_0-auc:0.89019 validation_1-auc:0.84009

[24] validation_0-auc:0.89193 validation_1-auc:0.83974

[25] validation_0-auc:0.89253 validation_1-auc:0.84015

[26] validation_0-auc:0.89329 validation_1-auc:0.84101

[27] validation_0-auc:0.89386 validation_1-auc:0.84087

[28] validation_0-auc:0.89416 validation_1-auc:0.84074

[29] validation_0-auc:0.89660 validation_1-auc:0.83999

[30] validation_0-auc:0.89737 validation_1-auc:0.83959

[31] validation_0-auc:0.89911 validation_1-auc:0.83952

[32] validation_0-auc:0.90103 validation_1-auc:0.83901

[33] validation_0-auc:0.90250 validation_1-auc:0.83885

[34] validation_0-auc:0.90275 validation_1-auc:0.83887

[35] validation_0-auc:0.90290 validation_1-auc:0.83864

[36] validation_0-auc:0.90460 validation_1-auc:0.83834

[37] validation_0-auc:0.90497 validation_1-auc:0.83810

[38] validation_0-auc:0.90515 validation_1-auc:0.83811

[39] validation_0-auc:0.90533 validation_1-auc:0.83813

[40] validation_0-auc:0.90574 validation_1-auc:0.83776

[41] validation_0-auc:0.90690 validation_1-auc:0.83720

[42] validation_0-auc:0.90715 validation_1-auc:0.83684

[43] validation_0-auc:0.90736 validation_1-auc:0.83672

[44] validation_0-auc:0.90758 validation_1-auc:0.83674

[45] validation_0-auc:0.90767 validation_1-auc:0.83694

[46] validation_0-auc:0.90778 validation_1-auc:0.83687

[47] validation_0-auc:0.90791 validation_1-auc:0.83678

[48] validation_0-auc:0.90829 validation_1-auc:0.83694

[49] validation_0-auc:0.90869 validation_1-auc:0.83676

[50] validation_0-auc:0.90890 validation_1-auc:0.83655

[51] validation_0-auc:0.91067 validation_1-auc:0.83669

[52] validation_0-auc:0.91238 validation_1-auc:0.83641

[53] validation_0-auc:0.91352 validation_1-auc:0.83690

[54] validation_0-auc:0.91386 validation_1-auc:0.83693

[55] validation_0-auc:0.91406 validation_1-auc:0.83681

[56] validation_0-auc:0.91545 validation_1-auc:0.83680

[57] validation_0-auc:0.91556 validation_1-auc:0.83667

[58] validation_0-auc:0.91628 validation_1-auc:0.83665

[59] validation_0-auc:0.91725 validation_1-auc:0.83591

[60] validation_0-auc:0.91762 validation_1-auc:0.83576

[61] validation_0-auc:0.91784 validation_1-auc:0.83534

[62] validation_0-auc:0.91872 validation_1-auc:0.83513

[63] validation_0-auc:0.91892 validation_1-auc:0.83510

[64] validation_0-auc:0.91896 validation_1-auc:0.83508

[65] validation_0-auc:0.91907 validation_1-auc:0.83519

[66] validation_0-auc:0.91970 validation_1-auc:0.83510

[67] validation_0-auc:0.91982 validation_1-auc:0.83523

[68] validation_0-auc:0.92007 validation_1-auc:0.83457

[69] validation_0-auc:0.92015 validation_1-auc:0.83460

[70] validation_0-auc:0.92024 validation_1-auc:0.83446

[71] validation_0-auc:0.92037 validation_1-auc:0.83462

[72] validation_0-auc:0.92087 validation_1-auc:0.83394

[73] validation_0-auc:0.92094 validation_1-auc:0.83410

[74] validation_0-auc:0.92133 validation_1-auc:0.83394

[75] validation_0-auc:0.92141 validation_1-auc:0.83368

[76] validation_0-auc:0.92321 validation_1-auc:0.83413

[77] validation_0-auc:0.92415 validation_1-auc:0.83359

[78] validation_0-auc:0.92503 validation_1-auc:0.83353

[79] validation_0-auc:0.92539 validation_1-auc:0.83293

[80] validation_0-auc:0.92577 validation_1-auc:0.83253

[81] validation_0-auc:0.92677 validation_1-auc:0.83187

[82] validation_0-auc:0.92706 validation_1-auc:0.83230

[83] validation_0-auc:0.92800 validation_1-auc:0.83216

[84] validation_0-auc:0.92822 validation_1-auc:0.83206

[85] validation_0-auc:0.92870 validation_1-auc:0.83196

[86] validation_0-auc:0.92875 validation_1-auc:0.83200

[87] validation_0-auc:0.92881 validation_1-auc:0.83208

[88] validation_0-auc:0.92919 validation_1-auc:0.83174

[89] validation_0-auc:0.92940 validation_1-auc:0.83160

[90] validation_0-auc:0.92948 validation_1-auc:0.83155

[91] validation_0-auc:0.92959 validation_1-auc:0.83165

[92] validation_0-auc:0.92964 validation_1-auc:0.83172

[93] validation_0-auc:0.93031 validation_1-auc:0.83160

[94] validation_0-auc:0.93032 validation_1-auc:0.83150

[95] validation_0-auc:0.93037 validation_1-auc:0.83132

[96] validation_0-auc:0.93084 validation_1-auc:0.83090

[97] validation_0-auc:0.93091 validation_1-auc:0.83091

[98] validation_0-auc:0.93168 validation_1-auc:0.83066

[99] validation_0-auc:0.93245 validation_1-auc:0.83058

[100] validation_0-auc:0.93286 validation_1-auc:0.83029

[101] validation_0-auc:0.93361 validation_1-auc:0.82955

[102] validation_0-auc:0.93359 validation_1-auc:0.82962

[103] validation_0-auc:0.93435 validation_1-auc:0.82893

[104] validation_0-auc:0.93446 validation_1-auc:0.82837

[105] validation_0-auc:0.93480 validation_1-auc:0.82815

[106] validation_0-auc:0.93579 validation_1-auc:0.82744

[107] validation_0-auc:0.93583 validation_1-auc:0.82728

[108] validation_0-auc:0.93610 validation_1-auc:0.82651

[109] validation_0-auc:0.93617 validation_1-auc:0.82650

[110] validation_0-auc:0.93659 validation_1-auc:0.82621

[111] validation_0-auc:0.93663 validation_1-auc:0.82620

[112] validation_0-auc:0.93710 validation_1-auc:0.82591

[113] validation_0-auc:0.93781 validation_1-auc:0.82498

ROC AUC: 0.8413

▶️ Predicting on the test data set gives a ROC AUC of about 0.8413
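In the log above, `validation_1-auc` peaks at iteration 14 (0.84135) and never improves again, so training halts roughly 100 rounds later; the final score of 0.8413 is consistent with that peak. The stopping rule can be sketched as follows. This is a simplified illustration, not XGBoost's actual implementation, and the `find_stop` helper and the short patience of 5 are made up for the example. The scores are the first 20 `validation_1-auc` values from the log.

```python
def find_stop(scores, patience):
    """Return (best_round, stop_round) for a higher-is-better metric.

    Training stops once `patience` consecutive rounds pass without beating
    the best score. If that never happens, stop_round is the last round.
    """
    best_round = 0
    for i, score in enumerate(scores):
        if score > scores[best_round]:
            best_round = i
        elif i - best_round >= patience:
            return best_round, i
    return best_round, len(scores) - 1


# First 20 validation_1-auc values copied from the training log.
val_auc = [
    0.81157, 0.82452, 0.82745, 0.82922, 0.83298,
    0.83500, 0.83653, 0.83782, 0.83802, 0.83914,
    0.83954, 0.83983, 0.84033, 0.84055, 0.84135,
    0.84117, 0.84101, 0.84071, 0.84052, 0.84023,
]

best_round, stop_round = find_stop(val_auc, patience=5)
print("best round:", best_round, "score:", val_auc[best_round])
print("stopped at round:", stop_round)
```

Meanwhile `validation_0-auc` (the training set) keeps climbing to 0.93781, which is the usual sign of overfitting that early stopping is meant to cut off.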