4.1 What if something goes wrong with a Kubernetes pod?

What if you delete a pod by mistake?

- A pod deployed on its own: it is simply gone.

- A pod deployed through a Deployment: the Deployment keeps the desired number of pods running, so a replacement pod is created and nothing is lost.

Pod vs. Deployment

- When a pod is deleted, the Deployment (through the ReplicaSet it manages) creates the pod again.

How Kubernetes treats pods

- Kubernetes is built on the assumption that a pod can die at any time (when a pod dies, a new one is created).

ex) To move a pod from one node to another, Kubernetes deletes it and creates it again.

- A pod is never moved anywhere. -> Whenever it has to go somewhere else, it is always deleted and recreated.
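To make "moving means delete and recreate" concrete, here is a minimal Python sketch under simplifying assumptions: a pod is modelled as an immutable record whose node cannot be edited in place, so the only way to "move" it is to delete it and create a brand-new pod, with a new name, on the target node. `Pod`, `new_pod_name`, and `move_pod` are hypothetical illustrations, not Kubernetes APIs.

```python
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class Pod:
    """Hypothetical, simplified pod record; frozen so its node cannot be changed in place."""
    name: str
    node: str


def new_pod_name(prefix: str) -> str:
    # Random 5-character suffix, loosely imitating the names Kubernetes generates.
    alphabet = "bcdfghjklmnpqrstvwxz2456789"
    return prefix + "-" + "".join(secrets.choice(alphabet) for _ in range(5))


def move_pod(pods: set, pod: Pod, target_node: str, prefix: str) -> Pod:
    """'Move' a pod: delete the old one and create a new pod on the target node."""
    pods.discard(pod)                                      # the old pod is deleted
    replacement = Pod(new_pod_name(prefix), target_node)   # a new pod, with a new name
    pods.add(replacement)
    return replacement
```

The old pod object is never modified; the caller ends up with a different pod, which matches how pod names change whenever a pod lands on a new node.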

Lab

Create

[root@m-k8s ~]

deployment.apps/del-deploy created

pod/del-pod created

[root@m-k8s ~]

NAME READY STATUS RESTARTS AGE

del-deploy-57f68b56f7-gfc2t 1/1 Running 0 85s

del-deploy-57f68b56f7-t5pnw 1/1 Running 0 85s

del-deploy-57f68b56f7-zx979 1/1 Running 0 85s

del-pod 1/1 Running 0 85s

- When `kubectl apply -f` is given a directory, it creates every YAML manifest in that directory (here, both the Deployment and the standalone pod).
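A rough Python sketch of which files a directory argument picks up, assuming kubectl's behaviour of reading files ending in `.json`, `.yaml`, or `.yml` and not descending into subdirectories unless `-R`/`--recursive` is given. `manifest_files` is a hypothetical helper for illustration, not part of kubectl.

```python
from pathlib import Path

# Extensions kubectl reads when given a directory (assumption based on its documented behaviour).
MANIFEST_EXTENSIONS = {".json", ".yaml", ".yml"}


def manifest_files(directory: str, recursive: bool = False) -> list:
    """Return, sorted, the files `kubectl apply -f <directory>` would roughly consider."""
    root = Path(directory)
    # Without recursion only the top level is scanned, like plain `-f <dir>`.
    candidates = root.rglob("*") if recursive else root.iterdir()
    return sorted(
        str(p.relative_to(root))
        for p in candidates
        if p.is_file() and p.suffix in MANIFEST_EXTENSIONS
    )
```

Files with other extensions (notes, READMEs) are skipped, which is why a lab directory can hold several manifests and still be applied with a single command.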

Delete the standalone pod

[root@m-k8s ~]

pod "del-pod" deleted

[root@m-k8s ~]

NAME READY STATUS RESTARTS AGE

del-deploy-57f68b56f7-gfc2t 1/1 Running 0 2m13s

del-deploy-57f68b56f7-t5pnw 1/1 Running 0 2m13s

del-deploy-57f68b56f7-zx979 1/1 Running 0 2m13s

Delete a pod created by the Deployment

[root@m-k8s ~]

pod "del-deploy-57f68b56f7-zx979" deleted

[root@m-k8s ~]

NAME READY STATUS RESTARTS AGE

del-deploy-57f68b56f7-gfc2t 1/1 Running 0 2m50s

del-deploy-57f68b56f7-nf7vz 1/1 Running 0 15s

del-deploy-57f68b56f7-t5pnw 1/1 Running 0 2m50s

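- Even though a pod was deleted, the Deployment has replicas set to 3, so it automatically creates a new pod (del-deploy-57f68b56f7-nf7vz, AGE 15s) to keep three running.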
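This self-healing comes from a control loop: the controller behind the Deployment keeps comparing the desired replica count with the pods that actually exist and creates or removes pods to close the gap. Below is a toy Python model of one pass of that loop; `reconcile` and its naming scheme are hypothetical simplifications, not the real ReplicaSet controller.

```python
import itertools

_counter = itertools.count(1)  # source of fresh, never-reused pod name suffixes


def reconcile(pods: list, desired: int, prefix: str) -> list:
    """One pass of a toy ReplicaSet-style loop: return the pod list adjusted to `desired`."""
    pods = list(pods)  # work on a copy; the caller's list is left untouched
    while len(pods) < desired:
        # Too few pods: create replacements with new names (a deleted name is never reused).
        pods.append(f"{prefix}-{next(_counter):05d}")
    while len(pods) > desired:
        # Too many pods: drop the surplus (the real controller ranks pods to pick victims).
        pods.pop()
    return pods
```

A standalone pod such as del-pod has no loop like this watching it, which is why it stayed deleted.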

Delete the Deployment

[root@m-k8s ~]

deployment.apps "del-deploy" deleted

[root@m-k8s ~]

NAME READY STATUS RESTARTS AGE

del-deploy-57f68b56f7-gfc2t 0/1 Terminating 0 5m14s

del-deploy-57f68b56f7-nf7vz 0/1 Terminating 0 2m39s

del-deploy-57f68b56f7-t5pnw 0/1 Terminating 0 5m14s

[root@m-k8s ~]

No resources found in default namespace.

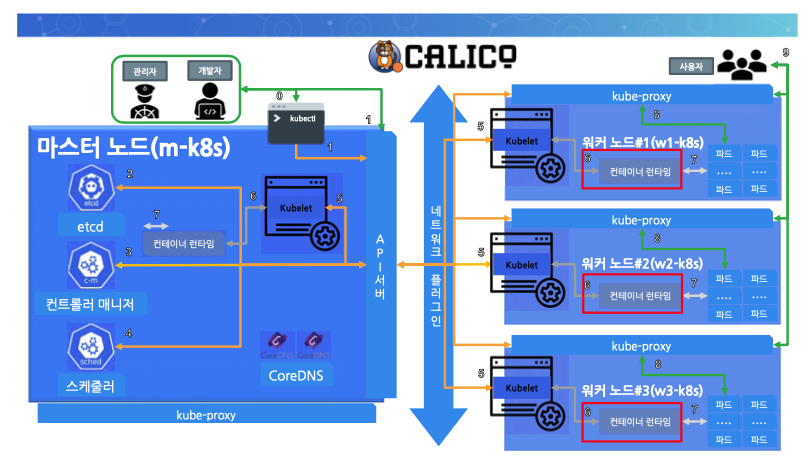

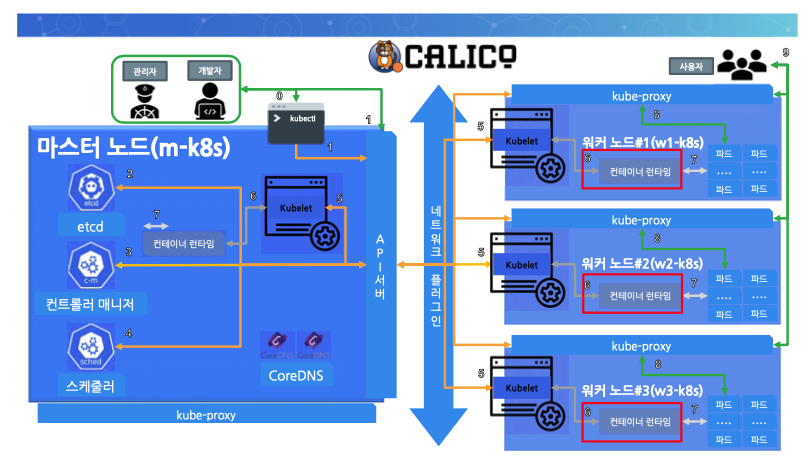

4.2 What if a component of a Kubernetes worker node has a problem?

- Most Kubernetes components recover automatically, and quickly, when something goes wrong.

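- The exception is kubelet: it is not something declared to the API server and restored from that declaration (it runs as a systemd service on each node), so it is not recovered right away.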

Lab: stopping kubelet

Stop kubelet on worker node w1

[root@w1-k8s ~]

[root@w1-k8s ~]

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: inactive (dead) since Sun 2022-09-11 14:12:35 KST; 9s ago

Docs: https://kubernetes.io/docs/

Process: 970 ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS (code=exited, status=0/SUCCESS)

Main PID: 970 (code=exited, status=0/SUCCESS)

Sep 11 14:01:14 w1-k8s kubelet[970]: E0911 14:01:14.17...

Sep 11 14:01:14 w1-k8s kubelet[970]: W0911 14:01:14.17...

Sep 11 14:01:14 w1-k8s kubelet[970]: I0911 14:01:14.23...

Sep 11 14:01:14 w1-k8s kubelet[970]: I0911 14:01:14.24...

Sep 11 14:01:14 w1-k8s kubelet[970]: I0911 14:01:14.33...

Sep 11 14:01:14 w1-k8s kubelet[970]: E0911 14:01:14.93...

Sep 11 14:01:14 w1-k8s kubelet[970]: E0911 14:01:14.93...

Sep 11 14:12:35 w1-k8s systemd[1]: Stopping kubelet: T...

Sep 11 14:12:35 w1-k8s kubelet[970]: I0911 14:12:35.25...

Sep 11 14:12:35 w1-k8s systemd[1]: Stopped kubelet: Th...

Hint: Some lines were ellipsized, use -l to show in full.

Deploy from the master node

[root@m-k8s ~]

deployment.apps/del-deploy created

[root@m-k8s ~]

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

del-deploy-57f68b56f7-hqxb8 0/1 Pending 0 48s <none> w1-k8s <none> <none>

del-deploy-57f68b56f7-jp7gc 1/1 Running 0 48s 172.16.132.9 w3-k8s <none> <none>

del-deploy-57f68b56f7-pxs75 1/1 Running 0 48s 172.16.103.138 w2-k8s <none> <none>

- The pod assigned to w1-k8s is stuck in Pending.

Restart kubelet on worker node w1

[root@w1-k8s ~]# systemctl start kubelet

[root@m-k8s ~]# kubectl get pods -o wide -w

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

del-deploy-57f68b56f7-hqxb8 0/1 Pending 0 114s <none> w1-k8s <none> <none>

del-deploy-57f68b56f7-jp7gc 1/1 Running 0 114s 172.16.132.9 w3-k8s <none> <none>

del-deploy-57f68b56f7-pxs75 1/1 Running 0 114s 172.16.103.138 w2-k8s <none> <none>

del-deploy-57f68b56f7-hqxb8 0/1 Pending 0 117s <none> w1-k8s <none> <none>

del-deploy-57f68b56f7-hqxb8 0/1 ContainerCreating 0 117s <none> w1-k8s <none> <none>

del-deploy-57f68b56f7-hqxb8 1/1 Running 0 2m1s 172.16.221.137 w1-k8s <none> <none>

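- Once kubelet was back, the Pending pod (still hqxb8) had its container created and moved through ContainerCreating to Running on w1-k8s.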

Lab: stopping the container runtime (Docker)

Stop Docker on w1

[root@w1-k8s ~]

Warning: Stopping docker.service, but it can still be activated by:

docker.socket

[root@w1-k8s ~]

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)

Active: inactive (dead) since Sun 2022-09-11 14:24:56 KST; 14s ago

Docs: https://docs.docker.com

Process: 989 ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock (code=exited, status=0/SUCCESS)

Main PID: 989 (code=exited, status=0/SUCCESS)

Sep 11 14:24:55 w1-k8s systemd[1]: Stopping Docker Application Container Engine...

Sep 11 14:24:55 w1-k8s dockerd[989]: time="2022-09-11T14:24:55.941337654+09:00" lev...d'"

Sep 11 14:24:56 w1-k8s dockerd[989]: time="2022-09-11T14:24:56.251665832+09:00" lev...te"

Sep 11 14:24:56 w1-k8s dockerd[989]: time="2022-09-11T14:24:56.281801800+09:00" lev...te"

Sep 11 14:24:56 w1-k8s dockerd[989]: time="2022-09-11T14:24:56.294807428+09:00" lev...te"

Sep 11 14:24:56 w1-k8s dockerd[989]: time="2022-09-11T14:24:56.315198873+09:00" lev...te"

Sep 11 14:24:56 w1-k8s dockerd[989]: time="2022-09-11T14:24:56.315550370+09:00" lev...te"

Sep 11 14:24:56 w1-k8s dockerd[989]: time="2022-09-11T14:24:56.315821626+09:00" lev...te"

Sep 11 14:24:56 w1-k8s dockerd[989]: time="2022-09-11T14:24:56.342025027+09:00" lev...te"

Sep 11 14:24:56 w1-k8s systemd[1]: Stopped Docker Application Container Engine.

Hint: Some lines were ellipsized, use -l to show in full.

Scale the Deployment to 6 replicas

[root@m-k8s ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

del-deploy-57f68b56f7-hqxb8 1/1 Running 0 6m6s

del-deploy-57f68b56f7-jp7gc 1/1 Running 0 6m6s

del-deploy-57f68b56f7-pxs75 1/1 Running 0 6m6s

[root@m-k8s ~]# kubectl scale deployment del-deploy --replicas=6

deployment.apps/del-deploy scaled

[root@m-k8s ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

del-deploy-57f68b56f7-b7pzc 1/1 Running 0 6s 172.16.132.10 w3-k8s <none> <none>

del-deploy-57f68b56f7-hqxb8 1/1 Running 0 6m27s 172.16.221.137 w1-k8s <none> <none>

del-deploy-57f68b56f7-jc4gw 1/1 Running 0 6s 172.16.103.139 w2-k8s <none> <none>

del-deploy-57f68b56f7-jp7gc 1/1 Running 0 6m27s 172.16.132.9 w3-k8s <none> <none>

del-deploy-57f68b56f7-pxs75 1/1 Running 0 6m27s 172.16.103.138 w2-k8s <none> <none>

del-deploy-57f68b56f7-zlnxb 0/1 ContainerCreating 0 6s <none> w2-k8s <none> <none>

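- While a worker node's container runtime is down, the scheduler places new pods on the other worker nodes (all three new pods landed on w2-k8s and w3-k8s).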

What's the difference?

- When the container runtime fails, kubelet detects it and reports it to the API server, so the scheduler stops placing pods on the node with the failed runtime.

- When kubelet itself fails, however, nothing on that node can send updates to the API server, so a pod already assigned to the node simply stays Pending.
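A simplified Python model of why the two cases differ, under stated assumptions: each node carries the Ready value kubelet last reported and the time of that report; a node is treated as not ready either when kubelet says so (runtime down) or when kubelet has been silent longer than a grace period. `Node`, `effective_ready`, `schedulable_nodes`, and the `grace_period_s` values are hypothetical illustrations, not cluster defaults or real scheduler code.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical node view: what kubelet last reported and when."""
    name: str
    reported_ready: bool   # False once kubelet reports that the container runtime is down
    last_report_s: float   # time of kubelet's last status update, in seconds


def effective_ready(node: Node, now_s: float, grace_period_s: float) -> bool:
    """Ready only if kubelet said so and its report is not older than the grace period."""
    if now_s - node.last_report_s > grace_period_s:
        return False       # kubelet has been silent too long
    return node.reported_ready


def schedulable_nodes(nodes: list, now_s: float, grace_period_s: float) -> list:
    """Names of the nodes a toy scheduler would still consider for new pods."""
    return [n.name for n in nodes if effective_ready(n, now_s, grace_period_s)]
```

In this model a node whose kubelet stopped only moments ago still looks ready, so a pod can be assigned to it and then wait in Pending because nothing on that node starts it; a node whose kubelet reports a dead runtime is excluded immediately.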

Confirming the scheduler's role by scaling out further

Current state: pods are spread unevenly across the nodes (w1: 1, w2: 3, w3: 2)

[root@m-k8s ~]

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

del-deploy-57f68b56f7-b7pzc 1/1 Running 0 3m56s 172.16.132.10 w3-k8s <none> <none>

del-deploy-57f68b56f7-hqxb8 1/1 Running 0 10m 172.16.221.137 w1-k8s <none> <none>

del-deploy-57f68b56f7-jc4gw 1/1 Running 0 3m56s 172.16.103.139 w2-k8s <none> <none>

del-deploy-57f68b56f7-jp7gc 1/1 Running 0 10m 172.16.132.9 w3-k8s <none> <none>

del-deploy-57f68b56f7-pxs75 1/1 Running 0 10m 172.16.103.138 w2-k8s <none> <none>

del-deploy-57f68b56f7-zlnxb 1/1 Running 0 3m56s 172.16.103.140 w2-k8s <none> <none>

Restart Docker on w1

[root@w1-k8s ~]

On the master node, set the replicas to 9

[root@m-k8s ~]

Pods are now spread evenly (three per node)

[root@m-k8s ~]

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

del-deploy-57f68b56f7-5wrpq 1/1 Running 0 31s 172.16.221.141 w1-k8s <none> <none>

del-deploy-57f68b56f7-b7pzc 1/1 Running 0 4m56s 172.16.132.10 w3-k8s <none> <none>

del-deploy-57f68b56f7-c9gql 1/1 Running 0 40s 172.16.132.11 w3-k8s <none> <none>

del-deploy-57f68b56f7-jc4gw 1/1 Running 0 4m56s 172.16.103.139 w2-k8s <none> <none>

del-deploy-57f68b56f7-jp7gc 1/1 Running 0 11m 172.16.132.9 w3-k8s <none> <none>

del-deploy-57f68b56f7-pxs75 1/1 Running 0 11m 172.16.103.138 w2-k8s <none> <none>

del-deploy-57f68b56f7-s5mtb 1/1 Running 0 31s 172.16.221.139 w1-k8s <none> <none>

del-deploy-57f68b56f7-vn9kx 1/1 Running 0 31s 172.16.221.140 w1-k8s <none> <none>

del-deploy-57f68b56f7-zlnxb 1/1 Running 0 4m56s 172.16.103.140 w2-k8s <none> <none>
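The uneven and then even spread can be approximated with a toy heuristic: place each new pod on the ready node that currently runs the fewest pods of the same Deployment. The real kube-scheduler filters and scores nodes with several plugins, so `spread` is a hypothetical simplification that only produces the same kind of pattern, not the scheduler's actual algorithm.

```python
def spread(counts: dict, new_pods: int, ready: set) -> dict:
    """Assign `new_pods` one at a time to the ready node with the fewest pods."""
    if new_pods > 0 and not ready:
        raise ValueError("no ready node to place pods on")
    counts = dict(counts)        # do not modify the caller's dict
    candidates = sorted(ready)   # fixed order, so ties go to the alphabetically first node
    for _ in range(new_pods):
        target = min(candidates, key=lambda node: counts.get(node, 0))
        counts[target] = counts.get(target, 0) + 1
    return counts
```

With w1 excluded, three new pods on top of one pod per node give w1: 1, w2: 3, w3: 2; once w1 is ready again, three more pods fill it first and end at three per node.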

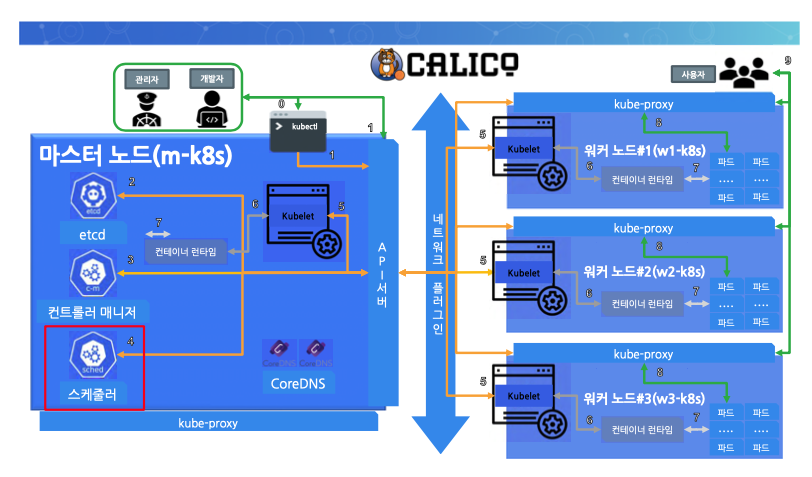

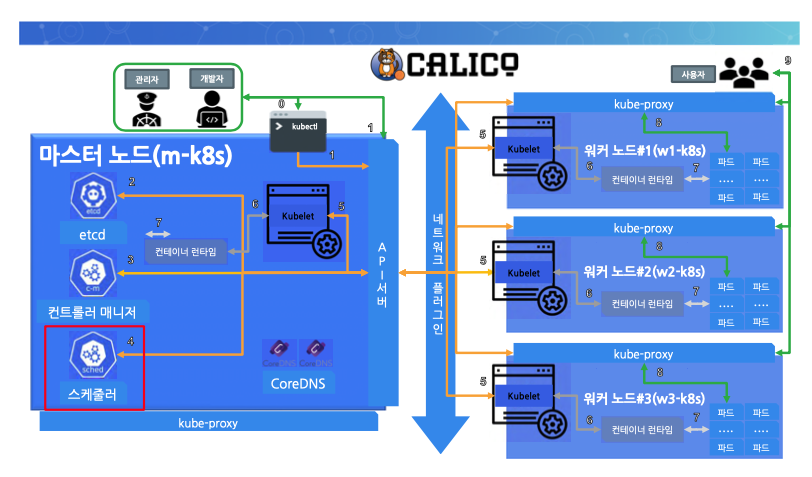

4.3 What if a component of the Kubernetes master node has a problem?

What if the scheduler is deleted?

[root@m-k8s ~]

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-744cfdf676-7xr6t 1/1 Running 5 21d

calico-node-4ckvp 1/1 Running 3 21d

calico-node-bd75c 1/1 Running 5 21d

calico-node-qg8d2 1/1 Running 4 21d

calico-node-ssls5 1/1 Running 3 21d

coredns-74ff55c5b-twzlf 1/1 Running 5 21d

coredns-74ff55c5b-vmjmv 1/1 Running 5 21d

etcd-m-k8s 1/1 Running 5 21d

kube-apiserver-m-k8s 1/1 Running 5 21d

kube-controller-manager-m-k8s 1/1 Running 5 21d

kube-proxy-422sj 1/1 Running 5 21d

kube-proxy-dnq2l 1/1 Running 3 21d

kube-proxy-jc9gr 1/1 Running 3 21d

kube-proxy-p4rpt 1/1 Running 4 21d

kube-scheduler-m-k8s 1/1 Running 5 21d

[root@m-k8s ~]

Error from server (NotFound): pods "kube-scheduler-m-k8s" not found

[root@m-k8s ~]

pod "kube-scheduler-m-k8s" deleted

[root@m-k8s ~]

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-744cfdf676-7xr6t 1/1 Running 5 21d

calico-node-4ckvp 1/1 Running 3 21d

calico-node-bd75c 1/1 Running 5 21d

calico-node-qg8d2 1/1 Running 4 21d

calico-node-ssls5 1/1 Running 3 21d

coredns-74ff55c5b-twzlf 1/1 Running 5 21d

coredns-74ff55c5b-vmjmv 1/1 Running 5 21d

etcd-m-k8s 1/1 Running 5 21d

kube-apiserver-m-k8s 1/1 Running 5 21d

kube-controller-manager-m-k8s 1/1 Running 5 21d

kube-proxy-422sj 1/1 Running 5 21d

kube-proxy-dnq2l 1/1 Running 3 21d

kube-proxy-jc9gr 1/1 Running 3 21d

kube-proxy-p4rpt 1/1 Running 4 21d

kube-scheduler-m-k8s 1/1 Running 5 13s

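- The scheduler is a kube-system component, so specify its namespace with the `-n` option when deleting it; without it, kubectl looks in the default namespace and returns NotFound, as in the first attempt above.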

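- Key control-plane components on the master node are managed specially: kubelet runs them as static pods from manifest files on the node, so even if you delete the pod, it is recreated immediately (note AGE 13s).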
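On kubeadm-built clusters such as this one (note the `10-kubeadm.conf` drop-in in the kubelet status output), control-plane pods are static pods: kubelet reads their manifests from a directory on the node (by default `/etc/kubernetes/manifests`) and publishes a mirror pod to the API server. A toy Python model of that sync shows why deleting the mirror pod does not stick while kubelet is running; `sync_static_pods` and the dict layout are hypothetical simplifications (for instance, it never removes mirror pods whose manifest disappeared).

```python
def sync_static_pods(manifests: dict, mirror_pods: dict, node: str) -> dict:
    """One toy kubelet sync pass: every manifest on disk gets a mirror pod in the API.

    manifests:   file name -> component name, e.g. {"kube-scheduler.yaml": "kube-scheduler"}
    mirror_pods: pod name -> component name, as currently visible through the API server
    """
    synced = dict(mirror_pods)
    for component in manifests.values():
        pod_name = f"{component}-{node}"        # static pod names get the node name appended
        synced.setdefault(pod_name, component)  # recreate the mirror pod if it was deleted
    return synced
```

Deleting `kube-scheduler-m-k8s` removes only the API-side object; the manifest file is still on disk, so the next sync brings the pod back.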

What if kubelet stops?

[root@m-k8s ~]

[root@m-k8s ~]

pod "kube-scheduler-m-k8s" deleted

After a minute, exit with Ctrl+C

[root@m-k8s ~]

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-744cfdf676-7xr6t 1/1 Running 5 21d

calico-node-4ckvp 1/1 Running 3 21d

calico-node-bd75c 1/1 Running 5 21d

calico-node-qg8d2 1/1 Running 4 21d

calico-node-ssls5 1/1 Running 3 21d

coredns-74ff55c5b-twzlf 1/1 Running 5 21d

coredns-74ff55c5b-vmjmv 1/1 Running 5 21d

etcd-m-k8s 1/1 Running 5 21d

kube-apiserver-m-k8s 1/1 Running 5 21d

kube-controller-manager-m-k8s 1/1 Running 5 21d

kube-proxy-422sj 1/1 Running 5 21d

kube-proxy-dnq2l 1/1 Running 3 21d

kube-proxy-jc9gr 1/1 Running 3 21d

kube-proxy-p4rpt 1/1 Running 4 21d

kube-scheduler-m-k8s 1/1 Terminating 5 6m21s

- Because kubelet is stopped, nothing on the node can tell the container runtime to remove the container, so the pod stays in Terminating.
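Deleting a pod is a two-step handshake, sketched here as a toy Python model under simplifying assumptions: the API server first only marks the pod for deletion (shown by `kubectl get` as `Terminating`), and the object disappears only after the node's kubelet stops the containers and confirms. With kubelet stopped, the second step never happens. `FakeApiServer` and `kubelet_sync` are hypothetical names, not a real client library.

```python
class FakeApiServer:
    """Toy stand-in for the API server's view of pods (not a real client)."""

    def __init__(self):
        self.pods = {}  # pod name -> {"node": str, "deletion_requested": bool}

    def create(self, name: str, node: str) -> None:
        self.pods[name] = {"node": node, "deletion_requested": False}

    def delete(self, name: str) -> None:
        # Graceful deletion: only mark the pod; the object stays until kubelet confirms.
        self.pods[name]["deletion_requested"] = True

    def status(self, name: str) -> str:
        if name not in self.pods:
            return "NotFound"
        return "Terminating" if self.pods[name]["deletion_requested"] else "Running"


def kubelet_sync(api: FakeApiServer, node: str, kubelet_running: bool) -> None:
    """If kubelet is up, stop containers of marked pods on its node and remove the objects."""
    if not kubelet_running:
        return  # nobody on the node acts on the deletion request
    for name, pod in list(api.pods.items()):
        if pod["node"] == node and pod["deletion_requested"]:
            del api.pods[name]  # containers stopped, deletion confirmed
```

Note that in this model, as on the real node, the marked pod's containers are never touched while kubelet is down; only the object's status changes.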

Deploy nginx while kubelet is stopped and the scheduler is Terminating

[root@m-k8s ~]# kubectl create deployment ngix --image=nginx

deployment.apps/ngix created

[root@m-k8s ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

del-deploy-57f68b56f7-5wrpq 1/1 Running 0 20m

del-deploy-57f68b56f7-b7pzc 1/1 Running 0 25m

del-deploy-57f68b56f7-c9gql 1/1 Running 0 21m

del-deploy-57f68b56f7-jc4gw 1/1 Running 0 25m

del-deploy-57f68b56f7-jp7gc 1/1 Running 0 31m

del-deploy-57f68b56f7-pxs75 1/1 Running 0 31m

del-deploy-57f68b56f7-s5mtb 1/1 Running 0 20m

del-deploy-57f68b56f7-vn9kx 1/1 Running 0 20m

del-deploy-57f68b56f7-zlnxb 1/1 Running 0 25m

ngix-5f644fbfb6-5ktc8 1/1 Running 0 11s

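Scale to 3 replicas (the deployment above was created with the typo `ngix`; from here on the lab uses a deployment named `nginx`, as the pod names show)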

[root@m-k8s ~]# kubectl scale deployment nginx --replicas=3

[root@m-k8s ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

del-deploy-57f68b56f7-5wrpq 1/1 Running 0 25m

del-deploy-57f68b56f7-b7pzc 1/1 Running 0 29m

del-deploy-57f68b56f7-c9gql 1/1 Running 0 25m

del-deploy-57f68b56f7-jc4gw 1/1 Running 0 29m

del-deploy-57f68b56f7-jp7gc 1/1 Running 0 35m

del-deploy-57f68b56f7-pxs75 1/1 Running 0 35m

del-deploy-57f68b56f7-s5mtb 1/1 Running 0 25m

del-deploy-57f68b56f7-vn9kx 1/1 Running 0 25m

del-deploy-57f68b56f7-zlnxb 1/1 Running 0 29m

nginx-6799fc88d8-7w6cm 1/1 Running 0 29s

nginx-6799fc88d8-flscm 1/1 Running 0 8s

nginx-6799fc88d8-zpht7 1/1 Running 0 8s

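- Even with kubelet stopped and the scheduler pod shown as Terminating, scheduling still works: stopping kubelet does not stop containers that are already running, so the scheduler process itself is still alive.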

Send a request with curl

[root@m-k8s ~]

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

del-deploy-57f68b56f7-5wrpq 1/1 Running 0 27m 172.16.221.141 w1-k8s <none> <none>

del-deploy-57f68b56f7-b7pzc 1/1 Running 0 31m 172.16.132.10 w3-k8s <none> <none>

del-deploy-57f68b56f7-c9gql 1/1 Running 0 27m 172.16.132.11 w3-k8s <none> <none>

del-deploy-57f68b56f7-jc4gw 1/1 Running 0 31m 172.16.103.139 w2-k8s <none> <none>

del-deploy-57f68b56f7-jp7gc 1/1 Running 0 37m 172.16.132.9 w3-k8s <none> <none>

del-deploy-57f68b56f7-pxs75 1/1 Running 0 37m 172.16.103.138 w2-k8s <none> <none>

del-deploy-57f68b56f7-s5mtb 1/1 Running 0 27m 172.16.221.139 w1-k8s <none> <none>

del-deploy-57f68b56f7-vn9kx 1/1 Running 0 27m 172.16.221.140 w1-k8s <none> <none>

del-deploy-57f68b56f7-zlnxb 1/1 Running 0 31m 172.16.103.140 w2-k8s <none> <none>

nginx-6799fc88d8-7w6cm 1/1 Running 0 2m29s 172.16.132.13 w3-k8s <none> <none>

nginx-6799fc88d8-flscm 1/1 Running 0 2m8s 172.16.221.144 w1-k8s <none> <none>

nginx-6799fc88d8-zpht7 1/1 Running 0 2m8s 172.16.103.142 w2-k8s <none> <none>

[root@m-k8s ~]

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

What if the container runtime stops?

[root@m-k8s ~]

[root@m-k8s ~]

The connection to the server 192.168.1.10:6443 was refused - did you specify the right host or port?

[root@m-k8s ~]

The connection to the server 192.168.1.10:6443 was refused - did you specify the right host or port?

[root@m-k8s ~]

[root@m-k8s ~]

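- 192.168.1.10:6443 is the API server's address. The API server itself runs as a container on the master node, so when the master's container runtime stops, the API server goes down with it and communication with the other containers is no longer possible.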

- Communication with the deployed nginx also failed.

- In other words, the master node's container runtime is a critical component.

- In production, a multi-master setup (several master nodes) is generally recommended.
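`connection refused` on port 6443 means nothing is accepting connections where the API server should be. A small Python sketch for checking that from any machine, assuming only TCP reachability matters (it does not authenticate or call any Kubernetes API); `api_server_reachable` is a hypothetical helper, not part of kubectl.

```python
import socket


def api_server_reachable(host: str, port: int = 6443, timeout_s: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:
        # ConnectionRefusedError (nothing listening) and timeouts are both OSError subclasses.
        return False
```

In this lab, `api_server_reachable("192.168.1.10")` would be expected to return False while the master's container runtime is stopped, and True again once Docker and the API server container are back.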