One Pixel Attack for Fooling Deep Neural Networks

1. Introduction

Artificial perturbations on natural images can easily make a DNN misclassify them; accordingly, effective algorithms have been proposed for generating such samples, called "adversarial images".

Adversarial image

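An image crafted to fool a machine learning model (especially a deep-learning-based image classification model)

- Adding subtle noise (a perturbation) to the original image makes almost no visible difference to the human eye, but the AI model classifies it as a completely different class.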

Issues with previous methods

- Modification might be excessive

It may be perceptible to human eyes.

- Studying adversarial images under such extremely limited scenarios might give new insights about the geometrical characteristics and overall behavior of DNN models in high-dimensional space

e.g., the characteristics of adversarial images close to the decision boundaries can help describe the boundaries' shape.

This paper perturbs only one pixel, and in doing so proposes a black-box DNN attack.

Black-box DNN attack

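: An attack carried out when the attacker does not know the internal details of the deep learning model (parameters, architecture, weights)

→ The attacker sends queries and crafts adversarial samples based on the model's responses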

Advantages of the One-pixel Attack

- Effectiveness

- Able to launch non-targeted attacks by only modifying one pixel on common deep neural network structures

- Semi-Black-Box Attack

- Requires only probability labels but no inner information of target DNNs such as gradient and network structures

- Does not abstract the problem of searching perturbation to any explicit target functions

- Directly focuses on increasing the probability label values of the target classes

- Flexibility

- Can attack more types of DNNs

Reasons for considering the one-pixel scenario

- Analyze the Vicinity of Natural Images

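- By limiting the length of the perturbation vector

- It can give geometrical insight into the CNN input space, in contrast to the other extreme case, universal adversarial perturbation, where a single perturbation is applied to all natural images

The CNN input space has geometrically structured characteristics; understanding them gives a deeper picture of how CNNs behave.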

- A Measure of Perceptiveness

- Effective for hiding adversarial modification in practice

- Limits the modification to as few pixels as possible

2. Related Works

Hmm.. this section just summarizes the contents of various other papers related to this one, so below I only noted down a few sentences.

- The security problem of DNN has become a critical topic

- A number of detection and defense methods have been also proposed to mitigate the vulnerability induced by adversarial perturbation

- Several black-box attacks that require no internal knowledge about the target systems such as gradients, have also been proposed

- There have been many efforts to understand DNNs by visualizing the activation of network nodes, while the geometrical characteristics of DNN decision boundaries have received less attention due to the difficulty of understanding high-dimensional space

3. Methodology

A. Problem Description

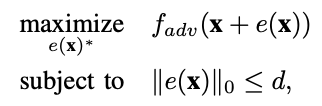

Generating adversarial images can be formalized as an optimization problem with constraints.

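The goal of adversaries in the case of targeted attacks is to find the optimized solution $e(x)^*$, the additive perturbation that maximizes the classifier's probability for the target class.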
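For reference, the problem can be written out as below, following our reading of the paper's notation: $f$ is the target classifier and $f_{adv}$ its probability for the target class $adv$, $x$ is the natural image, $e(x)$ the additive perturbation, $L$ the maximum allowed modification, and $d$ a small budget on the number of modified pixels for the few-pixel variant.

$$
\begin{aligned}
\text{general:}\quad & \max_{e(x)^*} \; f_{adv}\big(x + e(x)\big) \quad \text{subject to} \quad \lVert e(x) \rVert \le L \\
\text{few-pixel:}\quad & \max_{e(x)^*} \; f_{adv}\big(x + e(x)\big) \quad \text{subject to} \quad \lVert e(x) \rVert_0 \le d
\end{aligned}
$$

The $L_0$ constraint only counts how many entries of $e(x)$ are non-zero; it places no bound on how large each non-zero entry is.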

Two things have to be found:

(a) which dimensions should be modified

(b) the corresponding strength of the modification for each of those dimensions

In the one-pixel attack, $d = 1$ (at most one pixel may be modified).

The one-pixel modification can be seen as perturbing the data point along a direction parallel to the axis of one of the $n$ dimensions, where $n$ is the input dimensionality.

One-pixel perturbation allows the modification of an image towards a chosen direction out of $n$ possible directions, with arbitrary strength.

The attack focuses on only a few pixels but does not limit the strength of the modification.
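The "few pixels, unbounded strength" idea can be sketched in plain Python. This assumes an image stored as nested `[R, G, B]` lists and a perturbation encoded as an `(x, y, R, G, B)` tuple; the helper names `apply_one_pixel` and `changed_pixels` are hypothetical. Overwriting the pixel is equivalent to an additive $e(x)$ that is non-zero at a single location.

```python
from copy import deepcopy
from typing import List, Tuple

Pixel = List[int]                              # [R, G, B], each in 0..255
Image = List[List[Pixel]]                      # image[y][x] -> Pixel
Perturbation = Tuple[int, int, int, int, int]  # (x, y, R, G, B): assumed encoding


def apply_one_pixel(image: Image, p: Perturbation) -> Image:
    """Return a copy of `image` with the pixel at (x, y) set to (R, G, B).

    Only one location changes (a budget of d = 1 pixels), but the new
    value can be anything in 0..255, so the strength is not limited.
    """
    x, y, r, g, b = p
    out = deepcopy(image)
    out[y][x] = [r, g, b]
    return out


def changed_pixels(a: Image, b: Image) -> int:
    """Count pixel locations whose RGB value differs (a pixel-wise L0 count)."""
    return sum(
        1
        for row_a, row_b in zip(a, b)
        for pa, pb in zip(row_a, row_b)
        if pa != pb
    )


if __name__ == "__main__":
    img = [[[128, 128, 128] for _ in range(4)] for _ in range(4)]  # 4x4 grey image
    adv = apply_one_pixel(img, (2, 1, 255, 0, 0))                   # one red pixel
    print(changed_pixels(img, adv))  # 1
    print(adv[1][2])                 # [255, 0, 0]
```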

B. Differential Evolution

Differential evolution (DE) is a population-based optimization algorithm for solving complex multi-modal optimization problems.

Evolutionary algorithm (EA)

: A family of algorithms that solve optimization problems by mimicking the process of biological evolution

DE keeps the population diverse, so in practice it is expected to find higher-quality solutions more efficiently than gradient-based methods or even other kinds of EAs.

DE does not require the objective function to be differentiable or previously known. → It can be applied to a wider range of optimization problems than gradient-based methods.

meta-heuristic

: An approach that uses empirical (heuristic) rules to find good solutions quickly

Advantages of using DE

- Higher Probability of Finding Global Optima

- DE is meta-heuristic, which is relatively less subject to local minima than gradient descent or greedy search algorithms

- Require Less Information from Target System

- DE does not require the optimization problem to be differentiable, as classical optimization methods do

- Simplicity

- Independent of the classifier used
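A minimal DE sketch that only ever evaluates the objective, never its gradient. It uses the textbook DE/rand/1/bin variant with binomial crossover and the common defaults F = 0.5 and CR = 0.9; that choice is an assumption for illustration and not the paper's exact configuration (the paper omits crossover). The test objective is a staircase function whose gradient is zero almost everywhere, so gradient descent would have nothing to follow.

```python
import random
from typing import Callable, List, Sequence, Tuple


def differential_evolution(
    objective: Callable[[Sequence[float]], float],
    bounds: Sequence[Tuple[float, float]],
    pop_size: int = 20,
    f: float = 0.5,     # mutation scale factor F
    cr: float = 0.9,    # crossover rate CR
    generations: int = 100,
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Minimize `objective` with classic DE/rand/1/bin, using only function values."""
    rng = random.Random(seed)
    dim = len(bounds)

    def clip(v: float, k: int) -> float:
        lo, hi = bounds[k]
        return min(max(v, lo), hi)

    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    scores = [objective(ind) for ind in pop]
    for _ in range(generations):
        for i in range(pop_size):
            # Mutation: combine three distinct members other than the parent i.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            mutant = [clip(pop[a][k] + f * (pop[b][k] - pop[c][k]), k) for k in range(dim)]
            # Binomial crossover, forcing at least one gene from the mutant.
            j_rand = rng.randrange(dim)
            trial = [
                mutant[k] if (k == j_rand or rng.random() < cr) else pop[i][k]
                for k in range(dim)
            ]
            # Selection: the trial replaces its parent only if it is no worse.
            trial_score = objective(trial)
            if trial_score <= scores[i]:
                pop[i], scores[i] = trial, trial_score
    best = min(range(pop_size), key=scores.__getitem__)
    return pop[best], scores[best]


def step_objective(v: Sequence[float]) -> float:
    """Piecewise-constant objective: its gradient is zero almost everywhere."""
    return float(sum(abs(round(x) - 3) for x in v))


if __name__ == "__main__":
    best, score = differential_evolution(step_objective, [(-10.0, 10.0)] * 2)
    print([round(x) for x in best], score)
```

Because selection compares each trial only with its own parent, the population keeps many different candidates alive at once, which is the diversity property mentioned above.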

C. Method and Settings

We encode the perturbation into an array which is optimized by differential evolution.

Once generated, each candidate solution competes with its corresponding parent according to the index in the population, and the winner survives to the next iteration.

The fitness function is simply the probability label of the target class in the case of CIFAR-10, and the probability label of the true class (which the attack tries to lower) in the case of ImageNet. Crossover is not included in the scheme.
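Putting the pieces together, below is a hedged sketch of a targeted one-pixel attack driven by DE without crossover, where each candidate is an `(x, y, R, G, B)` vector and its fitness is the model's probability for the target class. The 8x8 image, `toy_classifier`, the population size of 40, the 50 iterations, F = 0.5, and the 0.5 early-stopping threshold are all illustrative assumptions, not the paper's settings.

```python
import math
import random
from copy import deepcopy
from typing import Callable, List, Sequence, Tuple

Image = List[List[List[int]]]                # image[y][x] = [R, G, B]
SIZE = 8                                     # toy 8x8 image (assumption)
UPPER = [SIZE - 1, SIZE - 1, 255, 255, 255]  # upper bounds for (x, y, R, G, B)


def toy_classifier(image: Image) -> List[float]:
    """Hypothetical black-box model returning [P(class 0), P(class 1)].
    P(class 1) grows with the 'redness' of any pixel in the bottom-right quadrant."""
    redness = max(
        image[y][x][0] - (image[y][x][1] + image[y][x][2]) / 2
        for y in range(SIZE // 2, SIZE)
        for x in range(SIZE // 2, SIZE)
    )
    p1 = 1.0 / (1.0 + math.exp(-(redness - 100.0) / 20.0))
    return [1.0 - p1, p1]


def clip(candidate: Sequence[float]) -> List[float]:
    """Keep every gene of a candidate inside its valid range."""
    return [min(max(v, 0.0), hi) for v, hi in zip(candidate, UPPER)]


def decode(candidate: Sequence[float]) -> List[int]:
    """Round a real-valued candidate to an integer (x, y, R, G, B) tuple."""
    return [int(round(v)) for v in clip(candidate)]


def perturb(image: Image, candidate: Sequence[float]) -> Image:
    """Overwrite the single pixel encoded by `candidate` (one-pixel budget)."""
    x, y, r, g, b = decode(candidate)
    out = deepcopy(image)
    out[y][x] = [r, g, b]
    return out


def one_pixel_attack(
    image: Image,
    predict: Callable[[Image], List[float]],
    target: int,
    pop_size: int = 40,
    iters: int = 50,
    f: float = 0.5,
    seed: int = 0,
) -> Tuple[List[int], float]:
    """Targeted one-pixel attack via DE without crossover.
    Fitness = predicted probability of `target` (maximized); only outputs are used."""
    rng = random.Random(seed)
    pop = [[rng.uniform(0.0, hi) for hi in UPPER] for _ in range(pop_size)]
    fit = [predict(perturb(image, c))[target] for c in pop]
    for _ in range(iters):
        if max(fit) > 0.5:  # early stop once the target class wins (assumed threshold)
            break
        for i in range(pop_size):
            r1, r2, r3 = rng.sample([j for j in range(pop_size) if j != i], 3)
            # No crossover: the trial vector is the mutant itself.
            trial = clip([pop[r1][k] + f * (pop[r2][k] - pop[r3][k]) for k in range(5)])
            trial_fit = predict(perturb(image, trial))[target]
            if trial_fit > fit[i]:  # child competes only with its parent at index i
                pop[i], fit[i] = trial, trial_fit
    best = max(range(pop_size), key=fit.__getitem__)
    return decode(pop[best]), fit[best]


if __name__ == "__main__":
    grey = [[[100, 100, 100] for _ in range(SIZE)] for _ in range(SIZE)]
    print(round(toy_classifier(grey)[1], 4))  # clean image: class 1 is unlikely
    pixel, prob = one_pixel_attack(grey, toy_classifier, target=1)
    print(pixel, round(prob, 3))              # one (x, y, R, G, B) tuple
```

For a non-targeted attack like the ImageNet setting, one would instead try to lower the true-class probability, for example by maximizing `1 - predict(...)[true_class]` as the fitness.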

4. Evaluation and Results

We introduce several metrics to measure the effectiveness of the attacks:

- Success Rate

- Non-targeted attack

- percentage of adversarial images that were successfully classified by the target system as an arbitrary target class

- Targeted attack

- probability of perturbing a natural image to a specific target class


- Adversarial Probability Labels (Confidence)

- Accumulates the probability label values of the target class over all successful perturbations, then divides by the total number of successful perturbations

- Number of Target Classes

- Counts the number of natural images that can be successfully perturbed into a certain number of target classes

- Number of Original-Target Class Pairs

- Counts the number of times each original-destination class pair was attacked
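The four metrics can be computed from a per-attempt log. The `AttackRecord` format and the function names below are hypothetical, and counting only successful attacks in `class_pairs` is our interpretation of "was attacked".

```python
from collections import Counter
from typing import Dict, List, NamedTuple


class AttackRecord(NamedTuple):
    """One attack attempt (hypothetical log format)."""
    image_id: int
    original: str       # true class of the natural image
    target: str         # class the perturbed image was pushed toward
    success: bool       # classifier output == target after perturbation
    target_prob: float  # probability label of `target` on the perturbed image


def success_rate(records: List[AttackRecord]) -> float:
    return sum(r.success for r in records) / len(records)


def confidence(records: List[AttackRecord]) -> float:
    """Sum of target-class probabilities over successes, divided by #successes."""
    hits = [r.target_prob for r in records if r.success]
    return sum(hits) / len(hits) if hits else 0.0


def num_target_classes(records: List[AttackRecord]) -> Counter:
    """k -> number of natural images successfully perturbed into exactly k classes."""
    reached: Dict[int, set] = {}
    for r in records:
        classes = reached.setdefault(r.image_id, set())
        if r.success:
            classes.add(r.target)
    return Counter(len(c) for c in reached.values())


def class_pairs(records: List[AttackRecord]) -> Counter:
    """(original, target) -> number of successful attacks."""
    return Counter((r.original, r.target) for r in records if r.success)


if __name__ == "__main__":
    log = [
        AttackRecord(0, "cat", "dog", True, 0.6),
        AttackRecord(0, "cat", "frog", True, 0.4),
        AttackRecord(0, "cat", "ship", False, 0.1),
        AttackRecord(1, "ship", "airplane", False, 0.2),
    ]
    print(success_rate(log))  # 0.5
    print(confidence(log))    # 0.5
    print(num_target_classes(log))
    print(class_pairs(log))
```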

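Kaggle CIFAR-10 dataset results:

- Setup: Attacks with 1-, 3-, and 5-pixel modifications were run against three different deep learning models.

- Results: Modifying just one pixel already made the models misclassify a considerable proportion of the images, and the attack success rate rose as more pixels were modified.

ImageNet dataset results:

- Setup: A non-targeted one-pixel attack was run against the BVLC AlexNet model.

- Results: 16.04% of the 105 test images were misclassified after a one-pixel modification, with an average confidence of 22.91%. This success rate is lower than on CIFAR-10, but it is still notable given the larger size and complexity of ImageNet images.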