group_dag.py

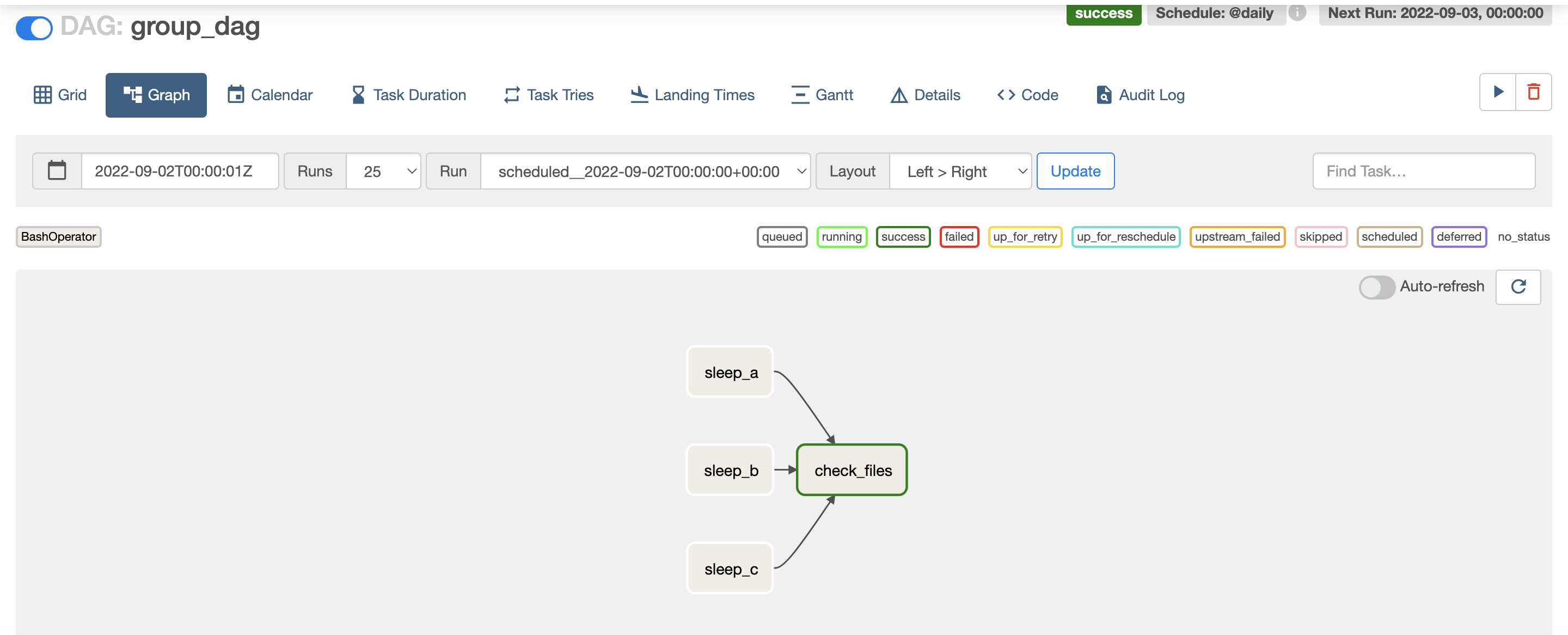

```python
from airflow import DAG
from airflow.operators.bash import BashOperator
from datetime import datetime

with DAG('group_dag', start_date=datetime(2022, 9, 1),
         schedule_interval='@daily', catchup=False) as dag:

    sleep_a = BashOperator(
        task_id='sleep_a',
        bash_command='sleep 10'
    )

    sleep_b = BashOperator(
        task_id='sleep_b',
        bash_command='sleep 10'
    )

    sleep_c = BashOperator(
        task_id='sleep_c',
        bash_command='sleep 10'
    )

    check_files = BashOperator(
        task_id='check_files',
        bash_command='sleep 10'
    )

    # The three sleep tasks do not depend on each other;
    # check_files runs only after all three have finished
    [sleep_a, sleep_b, sleep_c] >> check_files
```

When clicked:

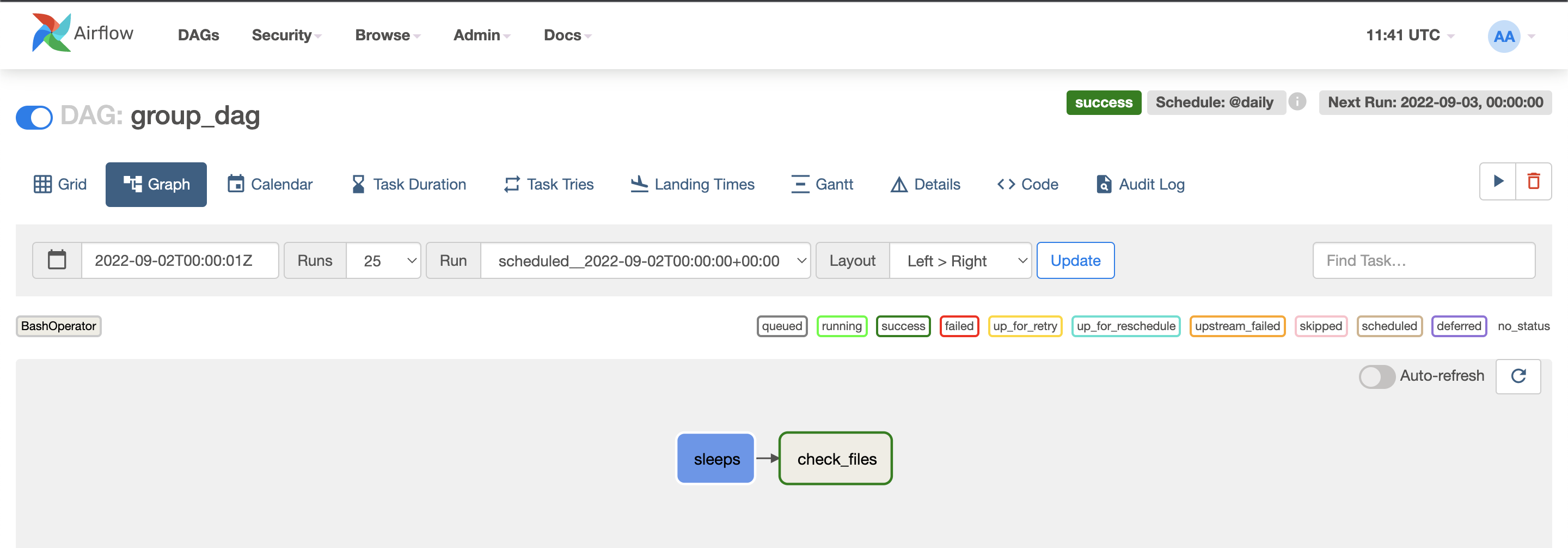
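The line `[sleep_a, sleep_b, sleep_c] >> check_files` works because a Python `list` has no `>>` for tasks, so Python falls back to the right operand's `__rrshift__`, which Airflow's operators define. The toy model below (a hypothetical `Task` class, not Airflow's implementation) sketches that fallback and the resulting fan-in.

```python
# Toy model (hypothetical, not Airflow's code) of how `[a, b, c] >> d` wires dependencies.
class Task:
    def __init__(self, task_id):
        self.task_id = task_id
        self.upstream = set()
        self.downstream = set()

    def set_downstream(self, other):
        self.downstream.add(other.task_id)
        other.upstream.add(self.task_id)

    def __rshift__(self, other):
        # task >> other, or task >> [other1, other2]
        targets = other if isinstance(other, list) else [other]
        for target in targets:
            self.set_downstream(target)
        return other

    def __rrshift__(self, other):
        # Called for `[a, b] >> task`: list does not handle `>>` with a Task,
        # so Python tries task.__rrshift__(list) instead.
        for upstream_task in other:
            upstream_task.set_downstream(self)
        return self


sleep_a, sleep_b, sleep_c = Task("sleep_a"), Task("sleep_b"), Task("sleep_c")
check_files = Task("check_files")

[sleep_a, sleep_b, sleep_c] >> check_files

print(sorted(check_files.upstream))  # → ['sleep_a', 'sleep_b', 'sleep_c']
```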

group_dag.py

from airflow import DAG

from airflow.operators.bash import BashOperator

#groups 디렉토리에 group_sleeps.py에서 sleep_tasks 를 import 한다

from groups.group_sleeps import sleep_tasks

from datetime import datetime

with DAG('group_dag', start_date=datetime(2022, 9, 1),

schedule_interval='@daily', catchup=False) as dag:

args = {'start_date': dag.start_date, 'schedule_interval': dag.schedule_interval, 'catchup': dag.catchup}

#task 정의가 되어있는 sleep_tasks() 를 호출한다.

sleeps = sleep_tasks()

check_files = BashOperator(

task_id='check_files',

bash_command='sleep 10'

)

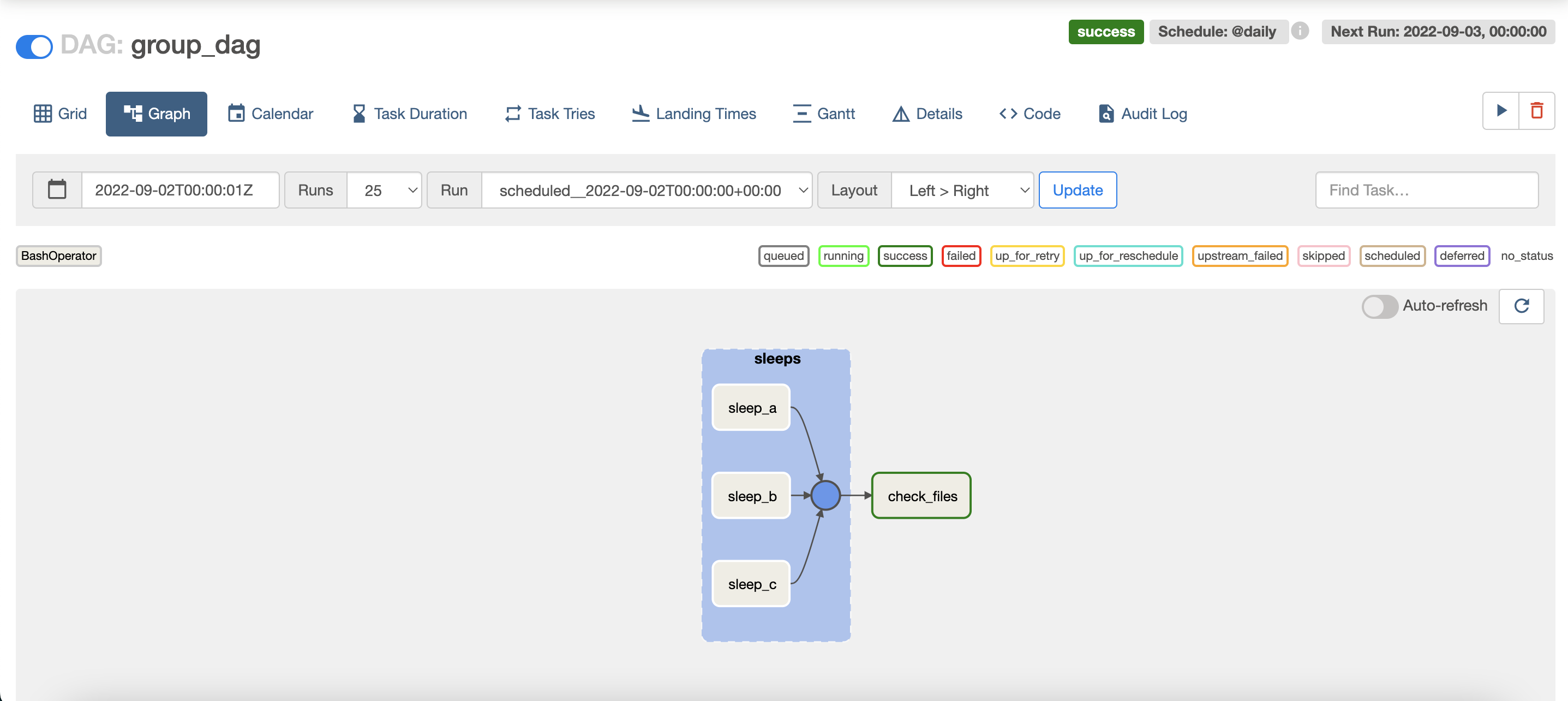

sleeps >> check_filesgroups/group_sleeps.py

```python
from airflow import DAG
from airflow.operators.bash import BashOperator
from airflow.utils.task_group import TaskGroup

def sleep_tasks():
    # Called inside the `with DAG(...)` block of group_dag.py,
    # so the group and its tasks are attached to that DAG
    with TaskGroup("sleeps", tooltip="Sleep tasks") as group:

        sleep_a = BashOperator(
            task_id='sleep_a',
            bash_command='sleep 10'
        )

        sleep_b = BashOperator(
            task_id='sleep_b',
            bash_command='sleep 10'
        )

        sleep_c = BashOperator(
            task_id='sleep_c',
            bash_command='sleep 10'
        )

    return group
```
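Two things change once the sleeps live in a TaskGroup: with the default `prefix_group_id=True`, each task ID is prefixed with the group ID (`sleeps.sleep_a`), and `sleeps >> check_files` makes every leaf of the group (a task with no downstream task inside the group) upstream of `check_files`. The toy model below sketches both behaviours with hypothetical `Task` and `Group` classes; it is not Airflow's API.

```python
# Toy model (hypothetical, not Airflow's code) of TaskGroup ID prefixing and `group >> task`.
class Task:
    def __init__(self, task_id, group=None):
        # Mimics prefix_group_id=True: "sleeps" + "sleep_a" -> "sleeps.sleep_a"
        self.task_id = f"{group.group_id}.{task_id}" if group else task_id
        self.upstream = set()
        self.downstream = set()
        if group is not None:
            group.children.append(self)

    def set_downstream(self, other):
        self.downstream.add(other.task_id)
        other.upstream.add(self.task_id)


class Group:
    def __init__(self, group_id):
        self.group_id = group_id
        self.children = []

    def leaves(self):
        # Leaves: children with no downstream task inside the same group
        ids = {child.task_id for child in self.children}
        return [child for child in self.children if not (child.downstream & ids)]

    def __rshift__(self, other):
        # group >> task: every leaf of the group becomes upstream of `other`
        for leaf in self.leaves():
            leaf.set_downstream(other)
        return other


sleeps = Group("sleeps")
for name in ("sleep_a", "sleep_b", "sleep_c"):
    Task(name, group=sleeps)
check_files = Task("check_files")

sleeps >> check_files

print(sorted(check_files.upstream))  # → ['sleeps.sleep_a', 'sleeps.sleep_b', 'sleeps.sleep_c']
```

Because the three sleep tasks have no dependencies among themselves, all of them are leaves, so the graph matches the one built with the explicit list in the first version.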