This post configures the runc, cni-plugins, containerd, kubelet, and kube-proxy services on the Kubernetes worker nodes.

As in the earlier posts of this series, the commands used here are available on GitHub at the link below.

- Prerequisites: connect to each worker node server (worker-0~2) to do the work.

Use the commands below to install the required binaries on the worker node and turn swap off.

$ sudo apt-get update

# Install the packages required on the worker nodes

$ sudo apt-get -y install socat conntrack ipset

$ sudo swapoff -a

$ wget -q --show-progress --https-only --timestamping \
  https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.21.0/crictl-v1.21.0-linux-amd64.tar.gz \
  https://github.com/opencontainers/runc/releases/download/v1.0.0-rc93/runc.amd64 \
  https://github.com/containernetworking/plugins/releases/download/v0.9.1/cni-plugins-linux-amd64-v0.9.1.tgz \
  https://github.com/containerd/containerd/releases/download/v1.4.4/containerd-1.4.4-linux-amd64.tar.gz \
  https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/amd64/kubectl \
  https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/amd64/kube-proxy \
  https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/amd64/kubelet

$ sudo mkdir -p \
  /etc/cni/net.d \
  /opt/cni/bin \
  /var/lib/kubelet \
  /var/lib/kube-proxy \
  /var/lib/kubernetes \
  /var/run/kubernetes

$ mkdir containerd

$ tar -xvf crictl-v1.21.0-linux-amd64.tar.gz

$ tar -xvf containerd-1.4.4-linux-amd64.tar.gz -C containerd

$ sudo tar -xvf cni-plugins-linux-amd64-v0.9.1.tgz -C /opt/cni/bin/

$ sudo mv runc.amd64 runc

$ chmod +x crictl kubectl kube-proxy kubelet runc

$ sudo mv crictl kubectl kube-proxy kubelet runc /usr/local/bin/

$ sudo mv containerd/bin/* /bin/

The commands above install three packages (socat, conntrack, ipset) on the worker node servers (worker-0~2). Their purposes are as follows.

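- socat: relays data in both directions between two independent data channels; used to support kubectl port-forward.

- conntrack: lets user space inspect the network connection flows tracked inside the kernel; used by kube-proxy when connecting to backends.

- ipset: an extension to iptables that improves its performance and manageability (for example, by managing addresses as hash sets); used by kube-proxy to make applications reachable as network services.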

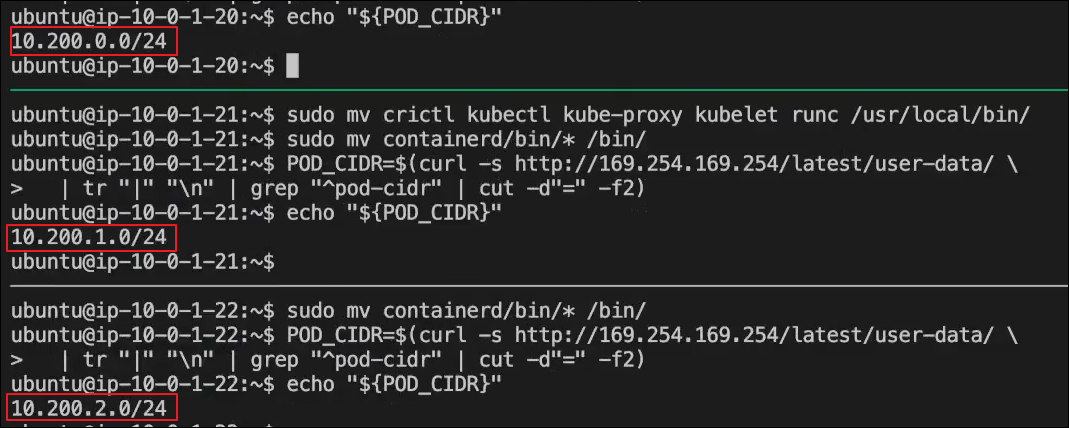
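Before moving on, it can help to confirm that the three packages are actually on the `PATH`. The following is a minimal sketch; the helper name `missing_tools` is hypothetical and not part of the original commands.

```shell
# Hypothetical helper: print every command name that is NOT found on PATH.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Prints nothing when all three packages are installed.
missing_tools socat conntrack ipset
```

In the same spirit, `swapon --show` should print nothing once `sudo swapoff -a` has taken effect.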

- Configure CNI Networking: use the AWS instance metadata to assign the worker node's pod network (CIDR).

- Look up pod-cidr from the AWS metadata (instance user-data)

$ POD_CIDR=$(curl -s http://169.254.169.254/latest/user-data/ \
  | tr "|" "\n" | grep "^pod-cidr" | cut -d"=" -f2)

$ echo "${POD_CIDR}"
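The pipeline above implies that the instance user-data is a single string of `key=value` pairs separated by `|`. As a minimal sketch of how the parsing works, the snippet below runs the same `tr | grep | cut` chain against a sample string. The sample value and the helper name `get_user_data_field` are assumptions for illustration, not values read from a real instance.

```shell
# Hypothetical helper: extract one field from a "key=value|key=value" string.
get_user_data_field() {
  local data="$1" key="$2"
  # Split on "|" into lines, keep the line for the key, print the value.
  printf '%s\n' "$data" | tr "|" "\n" | grep "^${key}=" | cut -d"=" -f2
}

# Sample user-data (assumed format); the real value comes from the metadata endpoint.
sample="name=worker-1|pod-cidr=10.200.1.0/24"

get_user_data_field "$sample" pod-cidr   # prints 10.200.1.0/24
get_user_data_field "$sample" name       # prints worker-1
```

If `echo "${POD_CIDR}"` prints an empty line on a worker, the user-data most likely does not follow this format, and the CNI configuration written next would contain an empty subnet.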

- Create the bridge and loopback network configurations

$ cat <<EOF | sudo tee /etc/cni/net.d/10-bridge.conf
{
    "cniVersion": "0.4.0",
    "name": "bridge",
    "type": "bridge",
    "bridge": "cnio0",
    "isGateway": true,
    "ipMasq": true,
    "ipam": {
        "type": "host-local",
        "ranges": [
          [{"subnet": "${POD_CIDR}"}]
        ],
        "routes": [{"dst": "0.0.0.0/0"}]
    }
}
EOF

$ cat <<EOF | sudo tee /etc/cni/net.d/99-loopback.conf
{
    "cniVersion": "0.4.0",
    "name": "lo",
    "type": "loopback"
}
EOF

- Configure containerd: create the containerd configuration file and its systemd unit.

# Create the containerd configuration file (the directory must exist first)

$ sudo mkdir -p /etc/containerd/

$ cat << EOF | sudo tee /etc/containerd/config.toml
[plugins]
  [plugins.cri.containerd]
    snapshotter = "overlayfs"
    [plugins.cri.containerd.default_runtime]
      runtime_type = "io.containerd.runtime.v1.linux"
      runtime_engine = "/usr/local/bin/runc"
      runtime_root = ""
EOF

# Create the containerd.service systemd unit

$ cat <<EOF | sudo tee /etc/systemd/system/containerd.service
[Unit]
Description=containerd container runtime
Documentation=https://containerd.io
After=network.target

[Service]
ExecStartPre=/sbin/modprobe overlay
ExecStart=/bin/containerd
Restart=always
RestartSec=5
Delegate=yes
KillMode=process
OOMScoreAdjust=-999
LimitNOFILE=1048576
LimitNPROC=infinity
LimitCORE=infinity

[Install]
WantedBy=multi-user.target
EOF

- Configure the Kubelet: create the kubelet-config.yaml file to configure the kubelet, then create its systemd unit.

$ WORKER_NAME=$(curl -s http://169.254.169.254/latest/user-data/ \
  | tr "|" "\n" | grep "^name" | cut -d"=" -f2)

$ echo "${WORKER_NAME}"

$ sudo mv ${WORKER_NAME}-key.pem ${WORKER_NAME}.pem /var/lib/kubelet/

$ sudo mv ${WORKER_NAME}.kubeconfig /var/lib/kubelet/kubeconfig

$ sudo mv ca.pem /var/lib/kubernetes/

# Create kubelet-config.yaml (the kubelet configuration file)

$ cat <<EOF | sudo tee /var/lib/kubelet/kubelet-config.yaml
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    enabled: true
  x509:
    clientCAFile: "/var/lib/kubernetes/ca.pem"
authorization:
  mode: Webhook
clusterDomain: "cluster.local"
clusterDNS:
  - "10.32.0.10"
podCIDR: "${POD_CIDR}"
resolvConf: "/run/systemd/resolve/resolv.conf"
runtimeRequestTimeout: "15m"
tlsCertFile: "/var/lib/kubelet/${WORKER_NAME}.pem"
tlsPrivateKeyFile: "/var/lib/kubelet/${WORKER_NAME}-key.pem"
EOF

# Create the kubelet.service systemd unit

$ cat <<EOF | sudo tee /etc/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
After=containerd.service
Requires=containerd.service

[Service]
ExecStart=/usr/local/bin/kubelet \\
  --config=/var/lib/kubelet/kubelet-config.yaml \\
  --container-runtime=remote \\
  --container-runtime-endpoint=unix:///var/run/containerd/containerd.sock \\
  --image-pull-progress-deadline=2m \\
  --kubeconfig=/var/lib/kubelet/kubeconfig \\
  --network-plugin=cni \\
  --register-node=true \\
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

- Configure the Kubernetes Proxy: create the kube-proxy-config.yaml file to configure kube-proxy, then create its systemd unit.

$ sudo mv kube-proxy.kubeconfig /var/lib/kube-proxy/kubeconfig

# Create the kube-proxy-config.yaml configuration file and add its settings

$ cat <<EOF | sudo tee /var/lib/kube-proxy/kube-proxy-config.yaml
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
clientConnection:
  kubeconfig: "/var/lib/kube-proxy/kubeconfig"
mode: "iptables"
clusterCIDR: "10.200.0.0/16"
EOF
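The `clusterCIDR` above is the range for the whole cluster, while each worker's `POD_CIDR` is expected to be a smaller range inside it. A minimal bash sketch of that containment check follows. It handles IPv4 only, and the helper names `ip_to_int` and `cidr_contains` are hypothetical.

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Return 0 when CIDR $2 lies entirely inside CIDR $1 (IPv4 only).
cidr_contains() {
  local outer_ip="${1%/*}" outer_len="${1#*/}"
  local inner_ip="${2%/*}" inner_len="${2#*/}"
  # The inner range cannot be larger than the outer one.
  [ "$inner_len" -ge "$outer_len" ] || return 1
  # Compare both network addresses under the outer prefix mask.
  local mask=$(( (0xFFFFFFFF << (32 - outer_len)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$outer_ip") & mask )) -eq $(( $(ip_to_int "$inner_ip") & mask )) ]
}
```

On a worker, `cidr_contains "10.200.0.0/16" "${POD_CIDR}" || echo "POD_CIDR is outside clusterCIDR"` would flag a mismatch before kube-proxy is started.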

# Create the kube-proxy.service systemd unit

$ cat <<EOF | sudo tee /etc/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Kube Proxy
Documentation=https://github.com/kubernetes/kubernetes

[Service]
ExecStart=/usr/local/bin/kube-proxy \\
  --config=/var/lib/kube-proxy/kube-proxy-config.yaml
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
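The unit files written above do nothing until systemd loads them and the services are enabled and started. A minimal sketch of that step is shown here, assuming the three unit names created in this post. The wrapper function and the `SYSTEMCTL` override are hypothetical conveniences that allow a dry run.

```shell
# Hypothetical wrapper: reload systemd, then enable and start the worker services.
# Set SYSTEMCTL=echo to print the arguments instead of running systemctl.
start_worker_services() {
  local systemctl="${SYSTEMCTL:-sudo systemctl}"
  $systemctl daemon-reload &&
  $systemctl enable containerd kubelet kube-proxy &&
  $systemctl start containerd kubelet kube-proxy
}
```

Running `start_worker_services` on each worker (worker-0~2) brings up all three services; `SYSTEMCTL=echo start_worker_services` only prints what would be run.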

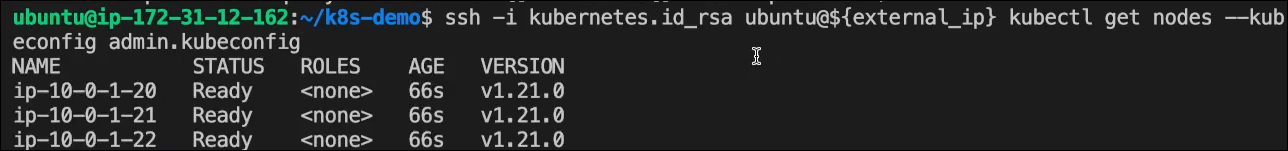

Connect to the master node (controller-0) server and run kubectl get nodes to check the status of the worker nodes created in this post.

$ external_ip=$(aws ec2 describe-instances --filters \
    "Name=tag:Name,Values=controller-0" \
    "Name=instance-state-name,Values=running" \
    --output text --query 'Reservations[].Instances[].PublicIpAddress')

$ ssh -i kubernetes.id_rsa ubuntu@${external_ip} kubectl get nodes --kubeconfig admin.kubeconfig
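To turn the check above into something scriptable, the output of `kubectl get nodes` can be filtered on its STATUS column. The sketch below assumes the default column layout (NAME, STATUS, ROLES, AGE, VERSION); the helper name `count_ready_nodes` is hypothetical.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and
# print how many nodes have a STATUS of exactly "Ready".
count_ready_nodes() {
  # NR > 1 skips the header row; n + 0 prints 0 when nothing matched.
  awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}
```

Piping the `ssh ... kubectl get nodes --kubeconfig admin.kubeconfig` command into `count_ready_nodes` should print 3 once worker-0~2 have all registered and become Ready.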