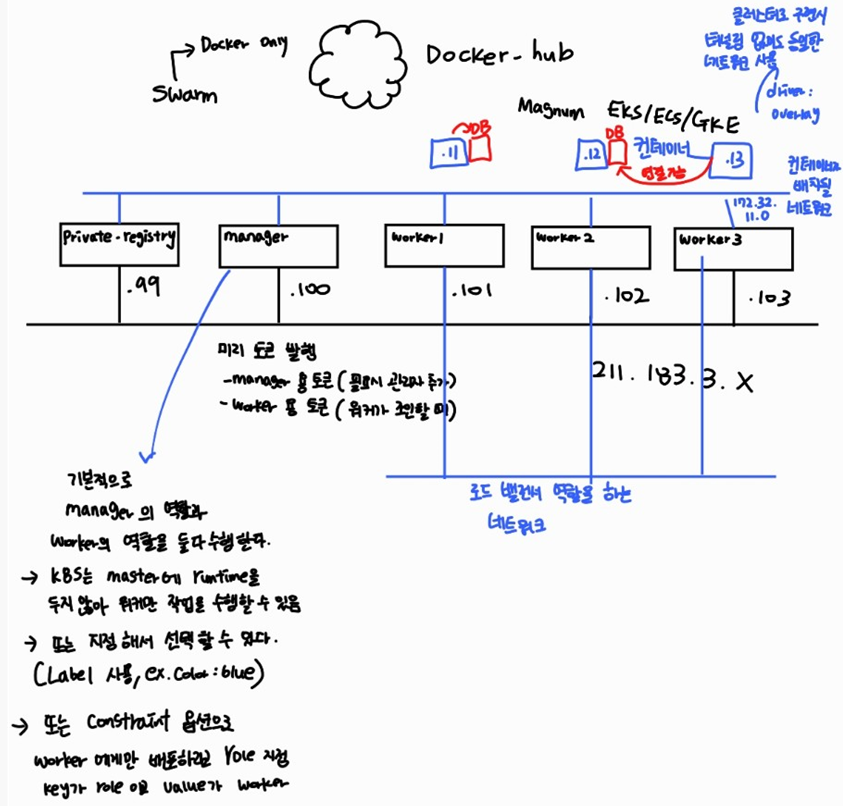

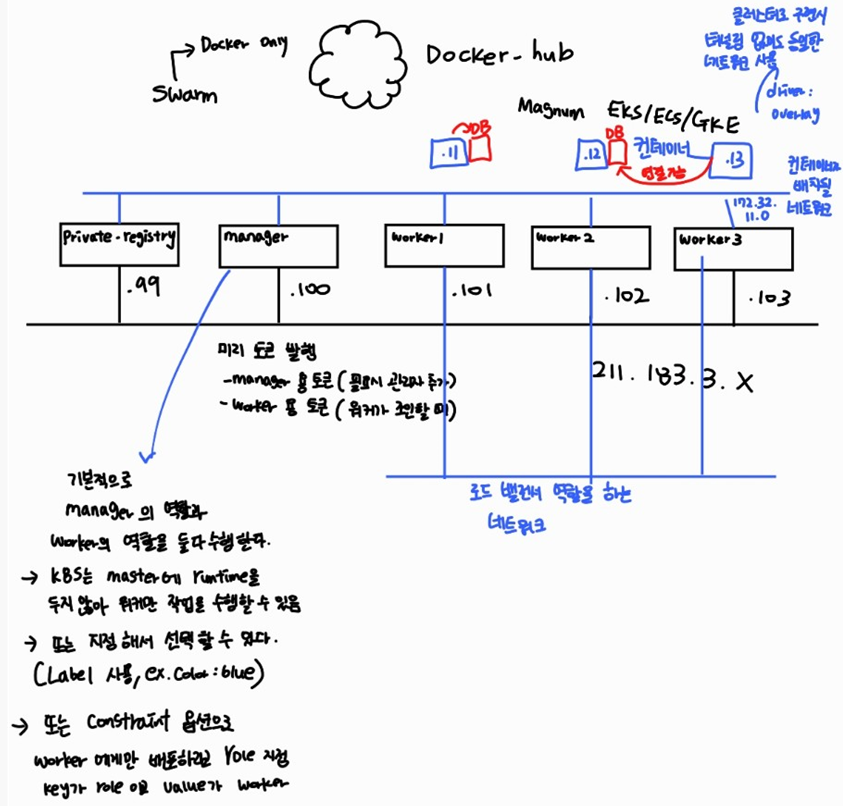

Docker Swarm Mode cluster

- Docker's native platform for scaling containers across a cluster.

- In a cluster, the resources of the member hosts are placed in a pool and can be used as a single resource (computing, network, storage).

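- Each host is given one of two roles.

  - manager (the counterpart of the master in k8s)

    - Acts as the controller.

    - A manager also includes the worker functionality.

    - By contrast, a Kubernetes master does not (by default) run workloads the way a worker does.

    - In general, managers should be excluded from container deployment and should focus on control, management and monitoring.

    - To keep containers off the manager nodes, pass --constraint node.role!=manager (or node.role==worker) when creating the service with docker service create.

  - worker (the counterpart of a node in k8s): receives commands from the controller and carries them out.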

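- The manager and worker roles are assigned to each node automatically as node.role when the cluster is first created.

- In addition, labels can be attached to each node and used when deploying containers (e.g. zone=busan).

- For example, the two kinds of constraint (role and label) can be combined, as in node.role==worker together with node.labels.zone==busan.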

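- AWS and GCP offer managed container clustering services (Kubernetes-based): EKS and GKE. They do not hand out a separate VM for the manager; instead, Docker is installed on a general management VM (console VM) and used from there. The nodes (workers) are provided as a fully managed service.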
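The role and label constraints described above can be pictured as a filter over the node list. The following is only a minimal sketch of that idea in Python, not how the Swarm scheduler is implemented. The `matches` and `eligible` helpers, the dictionary layout, and the `seoul` label value are all hypothetical and exist purely for illustration.

```python
# Illustrative model only: the real evaluation happens inside the Swarm scheduler.
def matches(node, constraints):
    """Return True when the node satisfies every 'key==value' or 'key!=value' constraint."""
    for expr in constraints:
        if "!=" in expr:
            key, value = expr.split("!=", 1)
            want_equal = False
        else:
            key, value = expr.split("==", 1)
            want_equal = True
        actual = node.get(key.strip())
        if (actual == value.strip()) != want_equal:
            return False
    return True


def eligible(nodes, constraints):
    """Names of the nodes on which a task could be placed."""
    return [n["name"] for n in nodes if matches(n, constraints)]


# Hypothetical inventory: the zone labels are made up for this example.
nodes = [
    {"name": "manager", "node.role": "manager"},
    {"name": "worker1", "node.role": "worker", "node.labels.zone": "busan"},
    {"name": "worker2", "node.role": "worker", "node.labels.zone": "seoul"},
    {"name": "worker3", "node.role": "worker"},
]

print(eligible(nodes, ["node.role!=manager"]))
print(eligible(nodes, ["node.role==worker", "node.labels.zone==busan"]))
```

With this inventory the first call keeps the three workers and the second keeps only worker1, because every listed constraint has to hold at the same time.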

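Swarm comes in two forms.

Docker Swarm (Swarm classic) vs. Swarm mode

- When people say "swarm", they usually mean Swarm mode.

- In Swarm mode, the manager talks directly to the container daemon on each worker, so it can check state information right away.

- The older classic Swarm needed a separate agent on each node for this communication.

- Information such as a newly connected node can be managed by a separate distributed coordinator (ZooKeeper, etcd). Classic Swarm relied on such an external coordinator, and container and cluster state was looked up in the coordinator's separate database.

- Swarm mode, by contrast, has this built in and manages the state itself.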

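Docker Swarm (Swarm classic)

- Uses a separate distributed coordinator such as ZooKeeper or etcd to manage information about nodes and containers.

- To check container information, it also places an agent on each node and communicates through it.

Swarm mode

- The distributed coordinator is built into the manager itself, so no extra packages need to be installed.

- The "swarm cluster" people usually talk about refers to Swarm mode.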

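Rolling update

- Running containers can be updated to new containers.

- The contents of the existing container are not changed; the existing container is stopped and replaced by a new one. (Because a rollback may be needed, the old container stays in a stopped state, waiting while dead like a cold standby.)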

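MSA

- To evolve an application, development can be spread across multiple containers or VMs.

- Development gets faster, and because features are separated into modules, a problem only requires checking the affected container, code or VM, which makes management easier.

- It also helps with horizontal scaling.

- No language is prescribed for an individual feature, so each one can be written in whatever language suits it.

- Data belonging to each feature is exchanged through APIs.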

Two ways to deploy a service

- replicas : runs the specified number of containers. Combined with scale, it is easy to grow or shrink.

- global : deploys exactly one container on each of the eligible nodes.
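The difference between the two modes comes down to where the task count comes from. The sketch below is a simplified model in Python, assuming a hypothetical `planned_tasks` helper and a hypothetical extra node `worker4`. It only shows the arithmetic and does not reflect how Swarm is implemented.

```python
def planned_tasks(mode, eligible_nodes, replicas=None):
    """Return how many tasks a service wants under each deployment mode."""
    if mode == "replicated":
        # The count is whatever the operator asked for, independent of node count.
        return replicas
    if mode == "global":
        # The count follows the number of eligible nodes: one task per node.
        return len(eligible_nodes)
    raise ValueError(f"unknown mode: {mode}")


workers = ["worker1", "worker2", "worker3"]
print(planned_tasks("replicated", workers, replicas=4))
print(planned_tasks("global", workers))

# Adding a (hypothetical) node changes the global count but not the replicated one.
workers.append("worker4")
print(planned_tasks("replicated", workers, replicas=4))
print(planned_tasks("global", workers))
```

In the replicated case several tasks may share a node when the count exceeds the number of nodes, while in the global case the count grows or shrinks with the cluster.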

Docker Swarm cluster hands-on

Lab environment layout

| Node | CPU | RAM | NIC (VMnet10) |
|---|---|---|---|
| manager(private-registry) | 2 | 4 | 211.183.3.100 |
| worker1 | 2 | 2 | 211.183.3.101 |
| worker2 | 2 | 2 | 211.183.3.102 |
| worker3 | 2 | 2 | 211.183.3.103 |

Right-click the manager VM > Manage > Clone > Next > Next > Full clone to create worker1, 2 and 3

Lab environment setup

Node IP configuration

rapa@manager:/root$ sudo vi /etc/netplan/01-network-manager-all.yaml

    # Let NetworkManager manage all devices on this system
    network:
      ethernets:
        ens32:
          addresses: [211.183.3.100/24] # manager:100, worker1:101, worker2:102, worker3:103
          gateway4: 211.183.3.2
          nameservers:
            addresses: [8.8.8.8, 168.126.63.1]
          dhcp4: no
      version: 2
    # renderer: NetworkManager

rapa@manager:/root$ sudo netplan apply

Change the host name

sudo hostnamectl set-hostname [name]

sudo su

Add entries to /etc/hosts

sudo vi /etc/hosts

211.183.3.100 manager

211.183.3.101 worker1

211.183.3.102 worker2

211.183.3.103 worker3

Add the four lines above to the file.

Check connectivity with ping

ping manager -c 3 ; ping worker1 -c 3 ; ping worker2 -c 3 ; ping worker3 -c 3

Building the cluster

- The node that will act as the manager issues a token, and each worker uses that token to join the manager.

manager

- --advertise-addr sets the address that the other swarm nodes use to reach the manager

rapa@manager:~/0824$ docker swarm init --advertise-addr ens32

Swarm initialized: current node (mmq3j418myp0x5pktz3o0k1jt) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-0klwpp8pig0mbpcn51656xxi34zswkbpztob9lvxyq6o9fnybs-6dvipms8zzjar4idajry66hyb 211.183.3.100:2377

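To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

An interface name such as ens32 is given instead of an IP address, because an address obtained through DHCP can keep changing (setting the DHCP lease time to infinite is another option).

Copy the line that starts with docker swarm join and run it on each of worker1, 2 and 3.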

worker

- Paste the command above into worker1, 2 and 3 and run it on each

rapa@worker1:~$ docker swarm join --token SWMTKN-1-0klwpp8pig0mbpcn51656xxi34zswkbpztob9lvxyq6o9fnybs-6dvipms8zzjar4idajry66hyb 211.183.3.100:2377

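Error response from daemon: This node is already part of a swarm. Use "docker swarm leave" to leave this swarm and join another one.

(The error above was captured on a node that had already joined this swarm. On a first join the command reports that the node joined the swarm as a worker.)

manager

- Check the nodes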

rapa@manager:~/0824$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

mmq3j418myp0x5pktz3o0k1jt * manager Ready Active Leader 20.10.17

ef41qj130k6k3glpy2sjiayd5 worker1 Ready Active 20.10.17

luwd64ouh37h3vagnt5eifxj8 worker2 Ready Active 20.10.17

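osj6glwuhgm5psjai379s5mqj worker3 Ready Active 20.10.17

AVAILABILITY

- Active : containers can be created on the node.

- Drain : every running container on the node is stopped, and no new container can be created there.

- Pause : no new container can be created, but unlike Drain the existing containers are not stopped.

- For maintenance, a node's availability is (forcibly) switched to Drain or Pause.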

- The manager that issued the tokens is the leader manager.

- Token that workers use to join

rapa@manager:~/0824$ docker swarm join-token worker

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-0klwpp8pig0mbpcn51656xxi34zswkbpztob9lvxyq6o9fnybs-6dvipms8zzjar4idajry66hyb 211.183.3.100:2377

- Token that managers use to join

rapa@manager:~/0824$ docker swarm join-token manager

To add a manager to this swarm, run the following command:

docker swarm join --token SWMTKN-1-0klwpp8pig0mbpcn51656xxi34zswkbpztob9lvxyq6o9fnybs-2icqn40a9ikpyl73e2bqi5se3 211.183.3.100:2377

When the first manager creates the swarm, one token for managers and one for workers are issued. To run an additional manager besides the workers, the extra node joins the swarm cluster with the manager token.

manager1(leader), manager2, worker1, worker2, worker3

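If there is only one manager and it goes down, the whole cluster can no longer be managed, so a real environment needs more than one manager. Because the managers agree on cluster state through Raft and need a majority of them to be available, an odd number of at least three is the usual recommendation; with only two managers, losing either one already costs the majority.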
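The majority requirement is plain arithmetic. As a small sketch (the function names `quorum` and `tolerated_failures` are made up for this example), the number of managers that must stay reachable and the number that may fail can be computed like this:

```python
def quorum(managers):
    """Smallest number of managers that forms a majority."""
    return managers // 2 + 1


def tolerated_failures(managers):
    """How many managers can be lost while a majority still remains."""
    return (managers - 1) // 2


for n in (1, 2, 3, 5, 7):
    print(f"managers={n} quorum={quorum(n)} tolerated_failures={tolerated_failures(n)}")
```

The loop shows that going from one manager to two does not add any failure tolerance, which is why odd counts such as three or five are commonly chosen.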

worker → manager

To change worker1 (currently a worker) into a manager:

- Raise its role (promote)

- Check the role

rapa@manager:~/0824$ docker node inspect manager --format "{{.Spec.Role}}"

manager

rapa@manager:~/0824$ docker node inspect worker1 --format "{{.Spec.Role}}"

worker

- Change from the worker role to the manager role

rapa@manager:~/0824$ docker node promote worker1

Node worker1 promoted to a manager in the swarm.

rapa@manager:~/0824$ docker node inspect worker1 --format "{{.Spec.Role}}"

manager

- Check the node status (worker1 is now a manager, so it can run docker node ls; a worker cannot)

rapa@worker1:~/0824$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

mmq3j418myp0x5pktz3o0k1jt * manager Ready Active Leader 20.10.17

ef41qj130k6k3glpy2sjiayd5 worker1 Ready Active Reachable 20.10.17

luwd64ouh37h3vagnt5eifxj8 worker2 Ready Active 20.10.17

osj6glwuhgm5psjai379s5mqj worker3 Ready Active 20.10.17

manager → worker

- Demote, then check

rapa@manager:~/0824$ docker node demote worker1

Manager worker1 demoted in the swarm.

rapa@manager:~/0824$ docker node inspect worker1 --format "{{.Spec.Role}}"

worker

Leaving the cluster

Run leave on the worker (docker swarm leave) → remove it on the manager (docker node rm [worker name])

- Leave the cluster from the node

rapa@worker1:~$ docker swarm leave

Node left the swarm.

- Check on the manager

rapa@manager:~/0824$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

mmq3j418myp0x5pktz3o0k1jt * manager Ready Active Leader 20.10.17

ef41qj130k6k3glpy2sjiayd5 worker1 Down Active 20.10.17

luwd64ouh37h3vagnt5eifxj8 worker2 Ready Active 20.10.17

osj6glwuhgm5psjai379s5mqj worker3 Ready Active 20.10.17

The node is not deleted; it is only marked Down.

- Check after running leave on every worker node

rapa@manager:~/0824$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

mmq3j418myp0x5pktz3o0k1jt * manager Ready Active Leader 20.10.17

ef41qj130k6k3glpy2sjiayd5 worker1 Down Active 20.10.17

luwd64ouh37h3vagnt5eifxj8 worker2 Down Active 20.10.17

osj6glwuhgm5psjai379s5mqj worker3 Down Active 20.10.17

- Remove the worker nodes on the manager

rapa@manager:~/0824$ docker node rm worker1

worker1

rapa@manager:~/0824$ docker node rm worker2

worker2

rapa@manager:~/0824$ docker node rm worker3

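worker3

Running leave on the manager is refused. This guards against the manager leaving by mistake while worker nodes are still registered. It can still be forced (docker swarm leave --force).

(For the exercises that follow, issue the token again and paste the join command on worker1, 2 and 3 as before.)

Docker Swarm mode workshop

On a single node, deployment is done per container.

With compose and swarm, deployment is done per service (a group of containers).

- Deploy nginx as a service with 3 replicas on the cluster nodes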

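rapa@manager:~/0824$ docker service create --name web --constraint node.role==worker --replicas 3 -p 80:80 nginx

--replicas 3 : how many containers to deploy. This is the desired count, and Swarm keeps 3 running at all times.

Each worker pulls the image on its own.

The service is deployed only to nodes that are not managers.

- List the services running in the cluster; REPLICAS shows running containers / requested containers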

rapa@manager:~/0824$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

lla5yziqwd8u web replicated 3/3 nginx:latest *:80->80/tcp

- Check which node each container is running on

rapa@manager:~/0824$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

t38vjftdu3o1 web.1 nginx:latest worker1 Running Running 9 minutes ago

fbq9q2pxpklu web.2 nginx:latest worker2 Running Running 9 minutes ago

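cbzmtsckptvn web.3 nginx:latest worker3 Running Running 11 minutes ago

The three containers run one each on worker1, 2 and 3.

If an entry such as \_ web.2 shows Rejected, the container was not created. Possible reasons:

- The node tried to create the container while the image had not been downloaded.

- The node has no credentials for downloading the image.

→ Resource usage can then become unbalanced: one node runs several containers while on some nodes no container is created at all.

If a node has no registry credentials in its config.json, there are two ways to fix it:

- Log in on the manager and paste the config.json contents into the config.json of each node by hand.

- Log in on the manager and pass the config.json credentials along when running docker service create.

  - --with-registry-auth

At this point nginx answers on 211.183.3.101~103, and it answers on 100 (the manager) as well.

- Adjust the scale (number of containers)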

rapa@manager:~/0824$ docker service scale web=1

web scaled to 1

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

- Check

rapa@manager:~/0824$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

lla5yziqwd8u web replicated 1/1 nginx:latest *:80->80/tcp

Only one container is running now.

- Check which node it is running on

rapa@manager:~/0824$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

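t38vjftdu3o1 web.1 nginx:latest worker1 Running Running 20 minutes ago

Only web.1 on worker1 is still running.

Even so, nginx is reachable through all of 100, 101, 102 and 103, because the ingress overlay network forwards each request to a node that runs the container.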
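The behavior seen here (every node answers on port 80 even though only one task exists) can be pictured with a toy model. The class below is a hypothetical sketch in Python, not Docker code. It assumes requests are handed to the running tasks in simple round-robin order, which is an assumption of this sketch, and the `RoutingMesh` name and the `task@node` strings are made up.

```python
from itertools import cycle


class RoutingMesh:
    """Toy model: any node accepts the request, some running task answers it."""

    def __init__(self, nodes, tasks):
        self.nodes = set(nodes)
        # Assumption of this sketch: tasks are picked in round-robin order.
        self._next_task = cycle(tasks)

    def request(self, entry_node):
        if entry_node not in self.nodes:
            raise ValueError(f"{entry_node} is not part of the swarm")
        # The entry node does not have to run a task itself.
        return next(self._next_task)


all_nodes = ["manager", "worker1", "worker2", "worker3"]

# After scaling to 1, only web.1 on worker1 exists.
mesh = RoutingMesh(all_nodes, ["web.1@worker1"])
for node in all_nodes:
    print(node, "->", mesh.request(node))
```

Whichever node receives the request, the single task on worker1 ends up serving it, which matches what the browser shows for 100 through 103.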

- Scale to 4

rapa@manager:~/0824$ docker service scale web=4

web scaled to 4

overall progress: 4 out of 4 tasks

1/4: running [==================================================>]

2/4: running [==================================================>]

3/4: running [==================================================>]

4/4: running [==================================================>]

verify: Service converged

- Check

rapa@manager:~/0824$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

lla5yziqwd8u web replicated 4/4 nginx:latest *:80->80/tcp

- Check where they are running

rapa@manager:~/0824$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

t38vjftdu3o1 web.1 nginx:latest worker1 Running Running 22 minutes ago

qw27c0mtlv3c web.2 nginx:latest worker2 Running Running 44 seconds ago

o0botdf29tw9 web.3 nginx:latest worker3 Running Running 43 seconds ago

fklpgc4zg2oe web.4 nginx:latest worker3 Running Running 43 seconds ago

web.3 and web.4 are both running on worker3.

- Check the containers running on worker3 (both are running)

rapa@worker3:~$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8814e84febf5 nginx:latest "/docker-entrypoint.…" About a minute ago Up About a minute 80/tcp web.3.o0botdf29tw9ezhcc92ntpigh

810390ebf608 nginx:latest "/docker-entrypoint.…" About a minute ago Up About a minute 80/tcp web.4.fklpgc4zg2oeg04npsj4qk85v

web.3 and web.4 run as two containers that share one published port.

Inspecting these containers shows that the ingress network was attached to them automatically.

- Remove the running service

rapa@manager:~/0824$ docker service rm web

web

- Deploy the service (3 replicas)

docker service create --name web --constraint node.role!=manager --replicas 3 -p 80:80 nginx

- Delete the container on worker3, then check from the manager node

rapa@manager:~/0824$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

8vpa8eo0cse0 web.1 nginx:latest worker2 Running Running 13 minutes ago

zqndk7cjfjls web.2 nginx:latest worker3 Running Running 48 seconds ago

u3vz8l8t8oht \_ web.2 nginx:latest worker3 Shutdown Failed 55 seconds ago "task: non-zero exit (137)"

8zwbng412a83 web.3 nginx:latest worker1 Running Running 13 minutes ago

The container was deleted, but to keep 3 running (--replicas 3) Swarm started a replacement task for web.2, which the output shows running on worker3.
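The replacement of the deleted container follows a desired-state idea: compare what should exist with what does exist and fill the gap. The snippet below is a minimal Python sketch of that comparison only. The `reconcile` helper and the use of slot numbers are hypothetical simplifications, not Swarm's actual orchestrator.

```python
def reconcile(desired, running_slots):
    """Return the slot numbers (1..desired) that need a replacement task."""
    return [slot for slot in range(1, desired + 1) if slot not in running_slots]


running = {1, 2, 3}          # web.1, web.2, web.3 are up
running.discard(2)           # the container behind web.2 is deleted
to_start = reconcile(3, running)
print(to_start)

# Starting the replacements brings the service back to the desired state.
running.update(to_start)
print(reconcile(3, running))
```

After the missing slot is refilled, the second call finds nothing left to start, which corresponds to the service showing 3/3 again.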

- Remove the service again and redeploy it

- This time use --mode global instead of --replicas (one container on each node)

rapa@manager:~/0824$ docker service create --name web --constraint node.role!=manager --mode global -p 80:80 nginx

image nginx:latest could not be accessed on a registry to record

its digest. Each node will access nginx:latest independently,

possibly leading to different nodes running different

versions of the image.

zldorxuyixcf357npek7ry3tv

overall progress: 3 out of 3 tasks

o9obccagttlf: running [==================================================>]

qk27035jk5cd: running [==================================================>]

hripanq8twel: running [==================================================>]

verify: Service converged

- Check

rapa@manager:~/0824$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

zldorxuyixcf web global 3/3 nginx:latest *:80->80/tcp

Rolling update hands-on

- Prepare the directories

rapa@manager:~/0824$ mkdir blue green

rapa@manager:~/0824$ ls

blue ctn1.py ctn2.py ctn.py green

rapa@manager:~/0824$ touch blue/Dockerfile green/Dockerfile

blue

FROM httpd

ADD index.html /usr/local/apache2/htdocs/index.html

CMD httpd -D FOREGROUND

Go to Docker Hub and create a repository

repository name : myweb

tag : blue, green

myweb:blue

myweb:green

rapa@manager:~/0824$ touch blue/index.html green/index.html

rapa@manager:~/0824$ echo "<h2>BLUE PAGE</h2>" > blue/index.html

rapa@manager:~/0824$ echo "<h2>GREEN PAGE</h2>" > green/index.html

- blue, green Dockerfile

FROM httpd

ADD index.html /usr/local/apache2/htdocs/index.html

CMD httpd -D FOREGROUND

- Build the images myweb:blue and myweb:green from the blue and green Dockerfiles

rapa@manager:~/0824$ cd blue

rapa@manager:~/0824/blue$ ls

Dockerfile index.html

rapa@manager:~/0824/blue$ docker build -t myweb:blue .

Sending build context to Docker daemon 3.072kB

Step 1/3 : FROM httpd

---> f2a976f932ec

Step 2/3 : ADD index.html /usr/local/apache2/htdocs/index.html

---> b281c3ebdf83

Step 3/3 : CMD httpd -D FOREGROUND

---> Running in 5341aa158bf3

Removing intermediate container 5341aa158bf3

---> 0defb8bb2f6e

Successfully built 0defb8bb2f6e

Successfully tagged myweb:blue

rapa@manager:~/0824/blue$ cd ..

rapa@manager:~/0824$ cd green

rapa@manager:~/0824/green$ docker build -t myweb:green .

Sending build context to Docker daemon 3.072kB

Step 1/3 : FROM httpd

---> f2a976f932ec

Step 2/3 : ADD index.html /usr/local/apache2/htdocs/index.html

---> ba9bae1aede3

Step 3/3 : CMD httpd -D FOREGROUND

---> Running in 115f4c162d9a

Removing intermediate container 115f4c162d9a

---> 06dd0d9e041d

Successfully built 06dd0d9e041d

Successfully tagged myweb:green

- Tag and push the images

rapa@manager:~/0824$ docker image tag myweb:blue dustndus8/myweb:blue

rapa@manager:~/0824$ docker image tag myweb:green dustndus8/myweb:green

rapa@manager:~/0824$ docker push dustndus8/myweb:blue

The push refers to repository [docker.io/dustndus8/myweb]

6bf5cd1d8560: Pushed

0c2dead5c030: Mounted from library/httpd

54fa52c69e00: Mounted from library/httpd

28a53545632f: Mounted from library/httpd

eea65516ea3b: Mounted from library/httpd

92a4e8a3140f: Mounted from dustndus8/mynginx

blue: digest: sha256:5a15a885ef41744201e438e9ca3e5ba1f365d89c54ce96f57573e43fb2c3c9c6 size: 1573

rapa@manager:~/0824$ docker push dustndus8/myweb:green

The push refers to repository [docker.io/dustndus8/myweb]

f2a2284890e1: Pushed

0c2dead5c030: Layer already exists

54fa52c69e00: Layer already exists

28a53545632f: Layer already exists

eea65516ea3b: Layer already exists

92a4e8a3140f: Layer already exists

green: digest: sha256:0e2632256f1f43bb2387faf6f5c6dff6936cdaa5f0ba2e428f453627492b9206 size: 1573

- Deploy the service

rapa@manager:~/0824$ docker service create --name web --replicas 3 --with-registry-auth --constraint node.role==worker -p 80:80 dustndus8/myweb:blue

r1hrndbgxyazab645q4g6pil2

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

--with-registry-auth : passes the registry credentials on to the workers

This deploys the image dustndus8/myweb:blue that was pushed to Docker Hub.

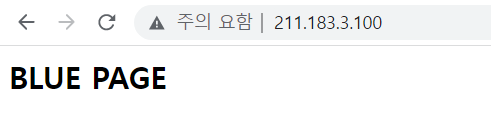

Opening 211.183.3.100:80, 101:80, 102:80 or 103:80 shows BLUE PAGE (refresh the page if needed).

- Scale up to 6

rapa@manager:~/0824$ docker service scale web=6

web scaled to 6

overall progress: 6 out of 6 tasks

1/6: running [==================================================>]

2/6: running [==================================================>]

3/6: running [==================================================>]

4/6: running [==================================================>]

5/6: running [==================================================>]

6/6: running [==================================================>]

verify: Service converged

rapa@manager:~/0824$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

pc6bzua9uj7d web.1 dustndus8/myweb:blue worker2 Running Running 45 minutes ago

pvw7qhltei7u web.2 dustndus8/myweb:blue worker3 Running Running 45 minutes ago

ys5prl7l67qo web.3 dustndus8/myweb:blue worker1 Running Running 45 minutes ago

3xo9oohqybc3 web.4 dustndus8/myweb:blue worker2 Running Running 23 seconds ago

41msdi7gyh4z web.5 dustndus8/myweb:blue worker3 Running Running 22 seconds ago

whqgprhjfqc8 web.6 dustndus8/myweb:blue worker1 Running Running 23 seconds ago

- update

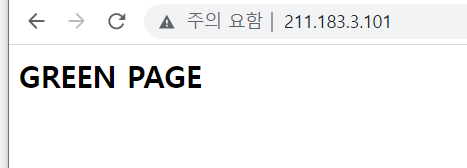

rapa@manager:~/0824$ docker service update --image dustndus8/myweb:green web

image dustndus8/myweb:green could not be accessed on a registry to record

its digest. Each node will access dustndus8/myweb:green independently,

possibly leading to different nodes running different

versions of the image.

web

overall progress: 6 out of 6 tasks

1/6: running [==================================================>]

2/6: running [==================================================>]

3/6: running [==================================================>]

4/6: running [==================================================>]

5/6: running [==================================================>]

6/6: running [==================================================>]

verify: Service converged

- Check the service

rapa@manager:~/0824$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

61bv9hk1dfgs web.1 dustndus8/myweb:green worker2 Running Running 4 minutes ago

pc6bzua9uj7d \_ web.1 dustndus8/myweb:blue worker2 Shutdown Shutdown 4 minutes ago

mewxvqpqx55k web.2 dustndus8/myweb:green worker3 Running Running 6 minutes ago

pvw7qhltei7u \_ web.2 dustndus8/myweb:blue worker3 Shutdown Shutdown 6 minutes ago

t9mmk6fcqcts web.3 dustndus8/myweb:green worker1 Running Running 5 minutes ago

ys5prl7l67qo \_ web.3 dustndus8/myweb:blue worker1 Shutdown Shutdown 5 minutes ago

dqb3phx7nsg7 web.4 dustndus8/myweb:green worker2 Running Running 5 minutes ago

3xo9oohqybc3 \_ web.4 dustndus8/myweb:blue worker2 Shutdown Shutdown 5 minutes ago

p3m48ceyjdnu web.5 dustndus8/myweb:green worker3 Running Running 5 minutes ago

41msdi7gyh4z \_ web.5 dustndus8/myweb:blue worker3 Shutdown Shutdown 5 minutes ago

hh497i2gv276 web.6 dustndus8/myweb:green worker1 Running Running 5 minutes ago

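whqgprhjfqc8 \_ web.6 dustndus8/myweb:blue worker1 Shutdown Shutdown 5 minutes ago

After an update, the containers that were running go into the Shutdown state and containers created from the new image serve external requests. It may look as if the contents of the existing containers were updated, but that is not the case: each old container is replaced by a new one. Whether the new (green) container starts before or after the old (blue) one stops depends on the update order. Swarm's default is stop-first, and --update-order start-first creates green first and only then brings blue down.

Opening the page confirms that the update was applied.

- Switch back to blue (ROLLBACK)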

rapa@manager:~/0824$ docker service rollback web

web

rollback: manually requested rollback

overall progress: rolling back update: 6 out of 6 tasks

1/6: running [> ]

2/6: running [> ]

3/6: running [> ]

4/6: running [> ]

5/6: running [> ]

6/6: running [> ]

verify: Service converged

Checking again shows that the service was rolled back to BLUE.
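The update and rollback just performed can be thought of as a service remembering the image it ran before the last update. The class below is a deliberately small Python sketch of that idea. `Service`, `update` and `rollback` here are hypothetical names for this model, not Docker's API, and the choice to refuse a second rollback is a simplification of the sketch rather than a statement about Docker.

```python
class Service:
    """Toy model of a service that remembers its previous image."""

    def __init__(self, image):
        self.image = image
        self.previous_image = None

    def update(self, image):
        # Remember what was running so that it can be restored later.
        self.previous_image = self.image
        self.image = image

    def rollback(self):
        if self.previous_image is None:
            raise RuntimeError("nothing to roll back to")
        self.image = self.previous_image
        # Simplification of this sketch: only one step of history is kept.
        self.previous_image = None


web = Service("dustndus8/myweb:blue")
web.update("dustndus8/myweb:green")
print(web.image)
web.rollback()
print(web.image)
```

The two printed values follow the sequence seen in the browser: green after the update, blue again after the rollback.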

- Remove the service and create it again

- Create 6 containers, and configure later updates to proceed 3 at a time with a 3-second pause between rounds

rapa@manager:~/0824$ docker service create --name web --replicas 6 --update-delay 3s --update-parallelism 3 --constraint node.role==worker -p 80:80 dustndus8/myweb:blue

image dustndus8/myweb:blue could not be accessed on a registry to record

its digest. Each node will access dustndus8/myweb:blue independently,

possibly leading to different nodes running different

versions of the image.

nf8bqwmpgnliz3fp7u77q7nkc

overall progress: 6 out of 6 tasks

1/6: running [==================================================>]

2/6: running [==================================================>]

3/6: running [==================================================>]

4/6: running [==================================================>]

5/6: running [==================================================>]

6/6: running [==================================================>]

verify: Service converged

- Update to green

rapa@manager:~/0824$ docker service update --image dustndus8/myweb:green web

image dustndus8/myweb:green could not be accessed on a registry to record

its digest. Each node will access dustndus8/myweb:green independently,

possibly leading to different nodes running different

versions of the image.

web

overall progress: 6 out of 6 tasks

1/6: running [==================================================>]

2/6: running [==================================================>]

3/6: running [==================================================>]

4/6: running [==================================================>]

5/6: running [==================================================>]

6/6: running [==================================================>]

verify: Service converged

Each round updates 3 containers (tasks) at the same time, pauses for 3 seconds, and then moves on to the next round.
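The effect of --update-parallelism 3 together with --update-delay 3s can be sketched as splitting the tasks into rounds. The Python below is an illustration under stated assumptions: `update_rounds` is a hypothetical helper, and the tasks are grouped in name order, whereas the order Swarm actually picks is not modeled here.

```python
def update_rounds(tasks, parallelism, delay_seconds):
    """Split tasks into rounds of `parallelism`; pause `delay_seconds` between rounds."""
    rounds = []
    for start in range(0, len(tasks), parallelism):
        batch = tasks[start:start + parallelism]
        is_last = start + parallelism >= len(tasks)
        # No pause is needed once the final round has been updated.
        rounds.append((batch, 0 if is_last else delay_seconds))
    return rounds


tasks = [f"web.{i}" for i in range(1, 7)]
for batch, pause in update_rounds(tasks, parallelism=3, delay_seconds=3):
    print(batch, "then wait", pause, "s")
```

For 6 tasks this gives two rounds of three, with a single 3-second pause between them.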

- Roll back

rapa@manager:~/0824$ docker service rollback web

web

rollback: manually requested rollback

overall progress: rolling back update: 6 out of 6 tasks

1/6: running [> ]

2/6: running [> ]

3/6: running [> ]

4/6: running [> ]

5/6: running [> ]

6/6: running [> ]

verify: Service converged

- Check

rapa@manager:~/0824$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

ov3793sb0jqz web.1 dustndus8/myweb:blue worker1 Running Running about a minute ago

rvjh8i3nocu1 \_ web.1 dustndus8/myweb:green worker1 Shutdown Shutdown about a minute ago

u0uupacqnsqw \_ web.1 dustndus8/myweb:blue worker1 Shutdown Shutdown 5 minutes ago

jwb0tm9x1s64 web.2 dustndus8/myweb:blue worker2 Running Running about a minute ago

vg1mkldxj1aw \_ web.2 dustndus8/myweb:green worker2 Shutdown Shutdown about a minute ago

m1uyhctausof \_ web.2 dustndus8/myweb:blue worker2 Shutdown Shutdown 5 minutes ago

4wvpxt72uz4c web.3 dustndus8/myweb:blue worker1 Running Running 2 minutes ago

hazmv2lv9q0l \_ web.3 dustndus8/myweb:green worker1 Shutdown Shutdown 2 minutes ago

go12itdebviv \_ web.3 dustndus8/myweb:blue worker3 Shutdown Shutdown 5 minutes ago

i1y4y8jalj5h web.4 dustndus8/myweb:blue worker3 Running Running 45 seconds ago

ker84cvy3f0n \_ web.4 dustndus8/myweb:green worker3 Shutdown Shutdown 46 seconds ago

ervot1v0pz3e \_ web.4 dustndus8/myweb:blue worker1 Shutdown Shutdown 5 minutes ago

4phpcorv5xzc web.5 dustndus8/myweb:blue worker2 Running Running about a minute ago

9s65sp59lx4u \_ web.5 dustndus8/myweb:green worker2 Shutdown Shutdown about a minute ago

jtgxe9fhza8z \_ web.5 dustndus8/myweb:blue worker2 Shutdown Shutdown 5 minutes ago

on42li09bm4r web.6 dustndus8/myweb:blue worker3 Running Running about a minute ago

m83rnf7rprjs \_ web.6 dustndus8/myweb:green worker3 Shutdown Shutdown about a minute ago

i9xrctrldc5y \_ web.6 dustndus8/myweb:blue worker3 Shutdown Shutdown 5 minutes ago