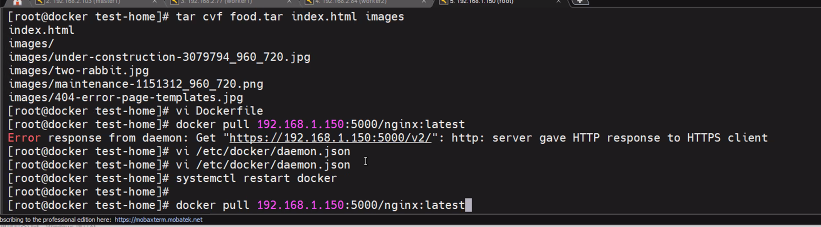

tar cvf food.tar index.html images

vi Dockerfile

docker pull 192.168.1.156:5000/nginx:latest

vi /etc/docker/daemon.json

systemctl restart docker
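The notes edit /etc/docker/daemon.json without showing its contents. One plausible reason, and this is an assumption, is that the registry at 192.168.1.156:5000 is served over plain HTTP, in which case Docker has to be told to trust it through the insecure-registries key. The sketch below writes an example file into the current directory (the name daemon.json.example is made up for illustration) instead of touching /etc/docker.

```shell
# Assumption: the private registry from these notes is served over plain HTTP.
REGISTRY="192.168.1.156:5000"

# Write an example file locally; the real file is /etc/docker/daemon.json (root only).
cat > daemon.json.example <<EOF
{
  "insecure-registries": ["${REGISTRY}"]
}
EOF

# Show the result so it can be reviewed before copying it into place.
cat daemon.json.example
```

If the real daemon.json already has other keys, this entry would need to be merged into it rather than replacing the file; the restart above then picks up the change.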

vi Dockerfile

FROM 192.168.1.156:5000/nginx:latest

ADD food.tar /usr/share/nginx/html

CMD ["nginx", "-g", "daemon off;"]

docker build -t 192.168.1.156:5000/test-home:v1.0 .

docker push 192.168.1.156:5000/test-home:v1.0
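The Dockerfiles in these notes rely on ADD unpacking a local tar archive into the target directory, so the paths stored inside the archive decide where the files end up under /usr/share/nginx/html. A minimal sketch for checking those paths before building; site-demo and food-demo.tar are hypothetical names used only here.

```shell
# Hypothetical demo content standing in for index.html and images/.
mkdir -p site-demo/images
echo '<h1>foods</h1>' > site-demo/index.html
: > site-demo/images/placeholder.png

# -C switches into the directory first, so the archive paths stay relative.
tar -C site-demo -cf food-demo.tar index.html images

# List the archive: these are the paths ADD would create under the target directory.
tar -tf food-demo.tar
```

If the listing shows index.html at the top level, nginx should find it directly in its document root after the build.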

docker ps -a

docker run -d -p 80:80 --name test-home 192.168.1.156:5000/test-home:v1.0

- vi index.html (this time, let's change the color)

tar cvf sale.tar images index.html

ls

vi Dockerfile

FROM 192.168.1.156:5000/nginx:latest

ADD sale.tar /usr/share/nginx/html

CMD ["nginx", "-g", "daemon off;"]

docker build -t 192.168.1.156:5000/test-home:v2.0 .

docker push 192.168.1.156:5000/test-home:v2.0
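The Dockerfile is edited by hand for every archive, changing only the ADD line. One possible alternative is a build argument. The sketch below writes such a Dockerfile under a made-up name (Dockerfile.args) and only prints the build commands as a dry run; SITE_TAR is a hypothetical argument name, and the pairing of food.tar with v1.0 is inferred from the order of the notes.

```shell
# Write a parameterised Dockerfile under a made-up name so the real one is untouched.
cat > Dockerfile.args <<'EOF'
FROM 192.168.1.156:5000/nginx:latest
# SITE_TAR is a hypothetical build argument naming the archive to unpack.
ARG SITE_TAR
ADD ${SITE_TAR} /usr/share/nginx/html
CMD ["nginx", "-g", "daemon off;"]
EOF

# Print the build command for each archive/tag pair (dry run, nothing is built).
REGISTRY="192.168.1.156:5000"
for pair in food.tar:v1.0 sale.tar:v2.0 home.tar:v0.0; do
  tarball=${pair%%:*}   # part before the colon
  tag=${pair##*:}       # part after the colon
  echo "docker build -f Dockerfile.args --build-arg SITE_TAR=${tarball} -t ${REGISTRY}/test-home:${tag} ."
done
```

Running the printed commands by hand would keep a single Dockerfile for all three images.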

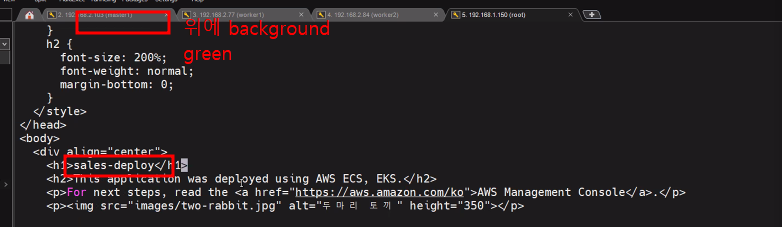

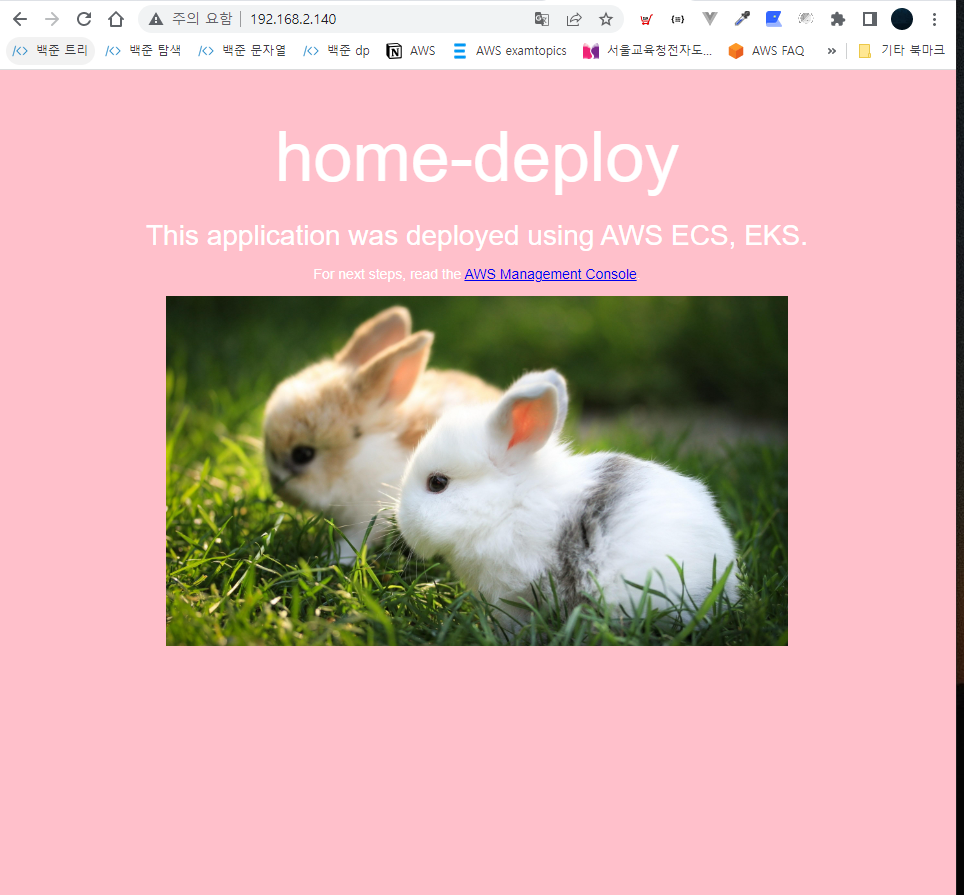

vi index.html

background-> pink

body > h1 -> home-deploy

ls

vi Dockerfile

FROM 192.168.1.156:5000/nginx:latest

ADD home.tar /usr/share/nginx/html

CMD ["nginx", "-g", "daemon off;"]

tar cvf home.tar images index.html

docker build -t 192.168.1.156:5000/test-home:v0.0 .

docker push 192.168.1.156:5000/test-home:v0.0

master1

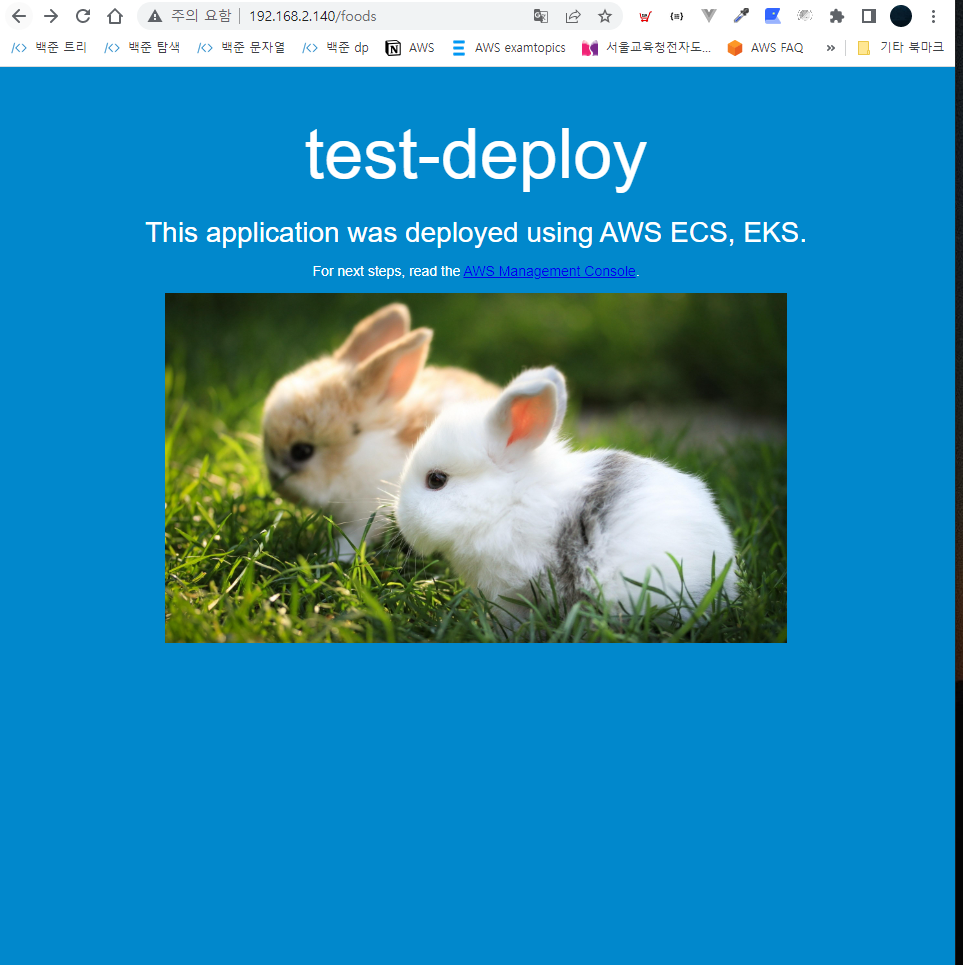

vi ingress-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: foods-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: foods-deploy
  template:
    metadata:
      labels:
        app: foods-deploy
    spec:
      containers:
      - name: foods-deploy
        image: 192.168.1.156:5000/test-home:v1.0
---
apiVersion: v1
kind: Service
metadata:
  name: foods-svc
spec:
  type: ClusterIP
  selector:
    app: foods-deploy
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sales-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sales-deploy
  template:
    metadata:
      labels:
        app: sales-deploy
    spec:
      containers:
      - name: sales-deploy
        image: 192.168.1.156:5000/test-home:v2.0
---
apiVersion: v1
kind: Service
metadata:
  name: sales-svc
spec:
  type: ClusterIP
  selector:
    app: sales-deploy
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: home-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: home-deploy
  template:
    metadata:
      labels:
        app: home-deploy
    spec:
      containers:
      - name: home-deploy
        image: 192.168.1.156:5000/test-home:v0.0
---
apiVersion: v1
kind: Service
metadata:
  name: home-svc
spec:
  type: ClusterIP
  selector:
    app: home-deploy
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

# kubectl apply -f ingress-deploy.yaml

# vi ingress-config.yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-nginx
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path: /foods
        backend:
          serviceName: foods-svc
          servicePort: 80
      - path: /sales
        backend:
          serviceName: sales-svc
          servicePort: 80
      - path:
        backend:
          serviceName: home-svc
          servicePort: 80

# kubectl apply -f ingress-config.yaml

# k get ingress ingress-nginx

# k describe ingress ingress-nginx
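The Ingress manifest above uses networking.k8s.io/v1beta1, which was removed in Kubernetes 1.22. On a newer cluster the same routing would look roughly like the sketch below. The file name ingress-config-v1.yaml is made up, the empty catch-all path is written as / with pathType: Prefix, and whether an ingressClassName is also needed depends on how the controller is installed, so treat this as a starting point rather than a drop-in replacement.

```shell
# Write a networking.k8s.io/v1 version of the Ingress to a separate, hypothetical file.
cat > ingress-config-v1.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nginx
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path: /foods
        pathType: Prefix        # required field in v1
        backend:
          service:
            name: foods-svc
            port:
              number: 80
      - path: /sales
        pathType: Prefix
        backend:
          service:
            name: sales-svc
            port:
              number: 80
      - path: /                 # catch-all, replaces the empty path
        pathType: Prefix
        backend:
          service:
            name: home-svc
            port:
              number: 80
EOF

# Quick sanity check: every path entry should carry a pathType.
grep -c 'pathType: Prefix' ingress-config-v1.yaml
```

The file could then be applied with kubectl apply -f ingress-config-v1.yaml in place of the v1beta1 manifest.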

# k get pod -n ingress-nginx --show-labels

# vi ingress-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress-controller-svc
  namespace: ingress-nginx
spec:
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
  - name: https
    protocol: TCP
    port: 443
    targetPort: 443
  selector:
    app.kubernetes.io/name: ingress-nginx
  type: LoadBalancer
  externalIPs:
  - 192.168.2.140

# kubectl apply -f ingress-service.yaml

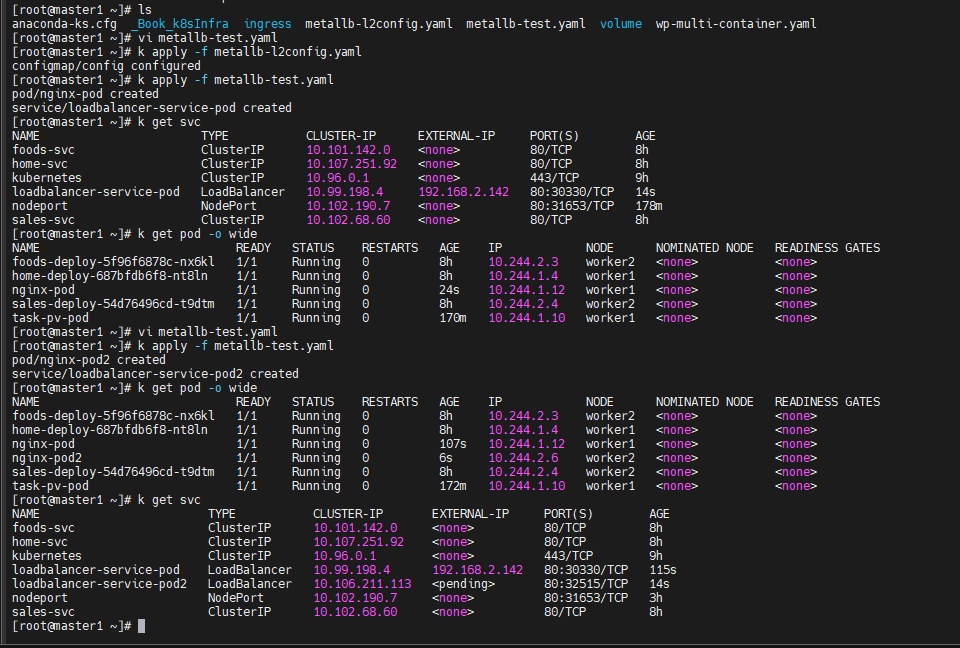

metallb

# kubectl apply -f /root/_Book_k8sInfra/ch3/3.3.4/metallb.yaml

# kubectl get pods -n metallb-system -o wide

# vi metallb-l2config.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: nginx-ip-range
      protocol: layer2
      addresses:
      - 192.168.2.141-192.168.2.142 # worker1, worker2

# kubectl apply -f metallb-l2config.yaml
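By default MetalLB gives each LoadBalancer Service its own address from the pool, so the size of the range limits how many Services can receive an external IP. A small sketch for counting the addresses in a range written the way the ConfigMap writes it; pool_size is a hypothetical helper and it assumes both ends of the range share the first three octets.

```shell
# Count the addresses in a "start-end" range.
# Assumption: both ends share the first three octets (same /24).
pool_size() {
  range=$1
  start=${range%-*}     # text before the dash
  end=${range#*-}       # text after the dash
  first=${start##*.}    # last octet of the start address
  last=${end##*.}       # last octet of the end address
  echo $(( last - first + 1 ))
}

pool_size "192.168.2.141-192.168.2.142"
```

With only two addresses in the range, a third LoadBalancer Service would be expected to stay without an external IP until the range is widened.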

# kubectl describe configmaps -n metallb-system

# vi metallb-test.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx-pod
spec:
  containers:
  - name: nginx-pod-container
    image: nginx
---
apiVersion: v1
kind: Service
metadata:
  name: loadbalancer-service-pod
spec:
  type: LoadBalancer
  # externalIPs:
  # -
  selector:
    app: nginx-pod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

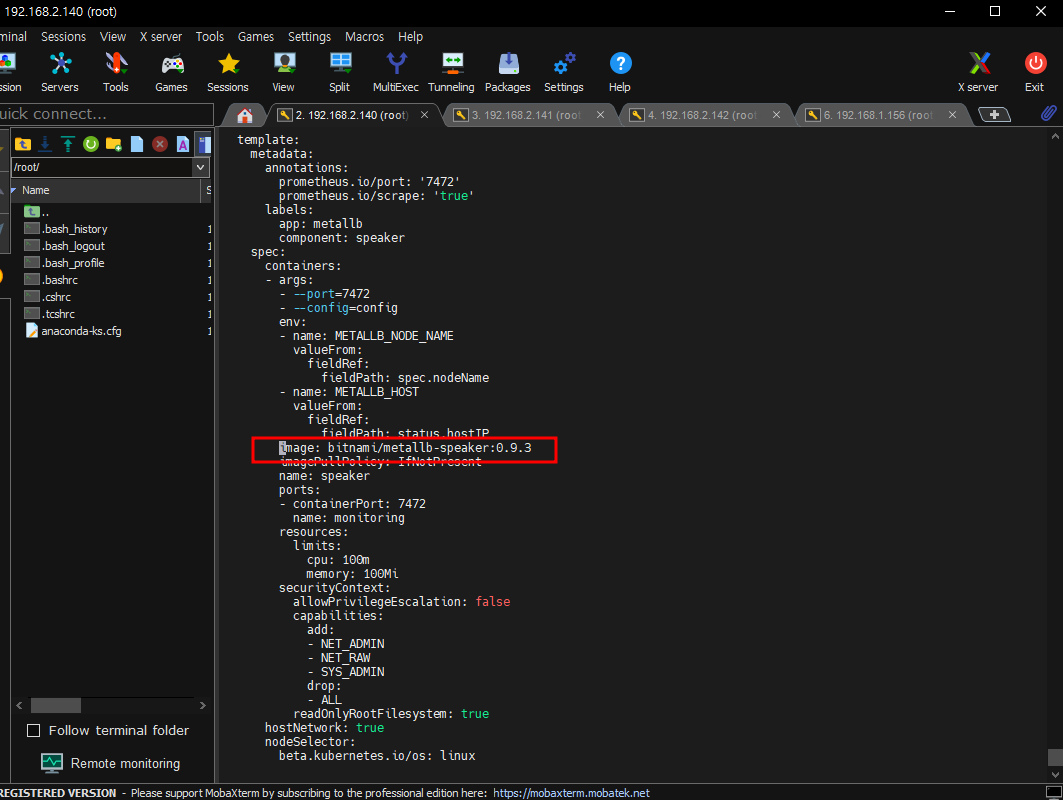

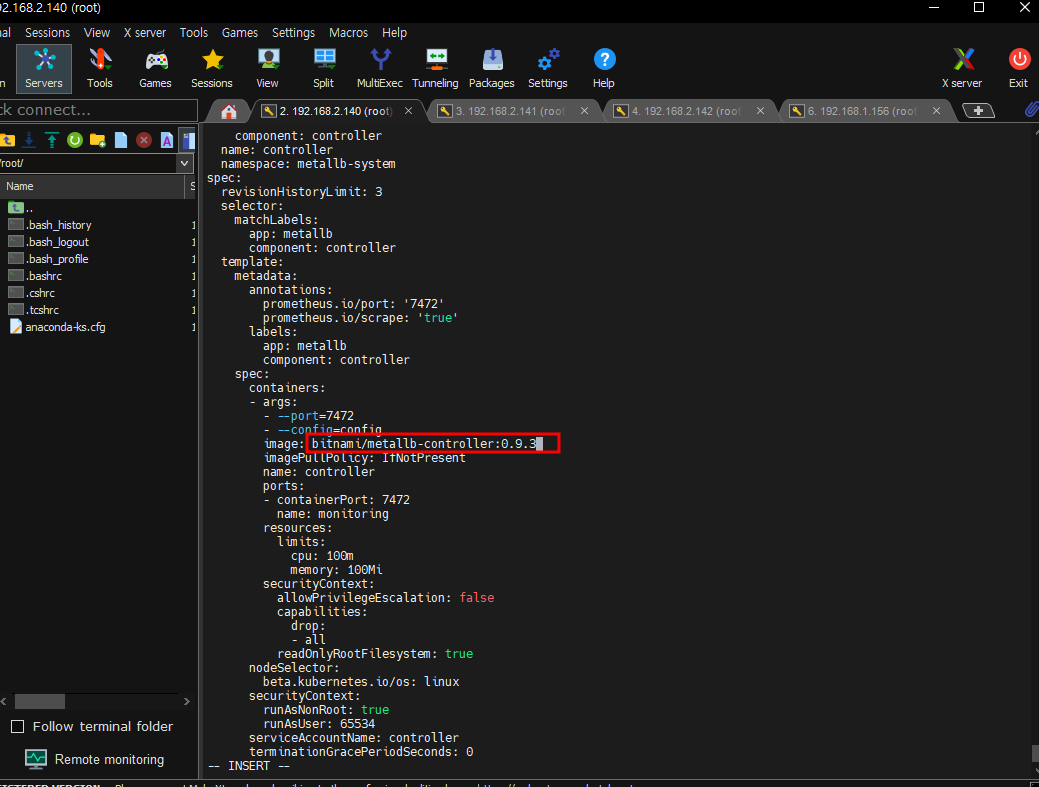

# kubectl apply -f metallb-test.yaml

vi /root/_Book_k8sInfra/ch3/3.3.4/metallb.yaml

Searched for "image" and changed it in two places

docker tag bitnami/metallb-speaker:0.9.3 192.168.1.156:5000/metallb-speaker:0.9.3

docker push 192.168.1.156:5000/metallb-speaker:0.9.3

docker tag bitnami/metallb-controller:0.9.3 192.168.1.156:5000/metallb-controller:0.9.3

docker push 192.168.1.156:5000/metallb-controller:0.9.3

kubectl apply -f /root/_Book_k8sInfra/ch3/3.3.4/metallb.yaml

kubectl get pods -n metallb-system -o wide

kubectl apply -f metallb-l2config.yaml

kubectl describe configmaps -n metallb-system

kubectl apply -f metallb-test.yaml

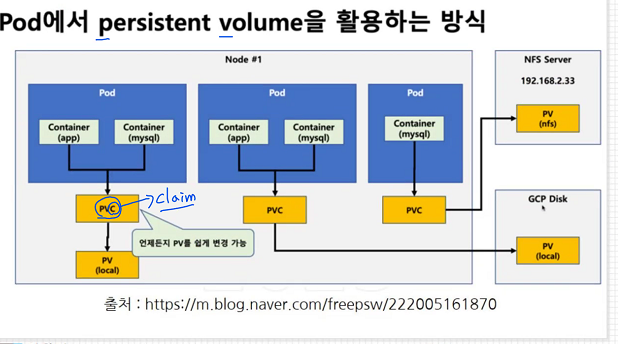

Volume

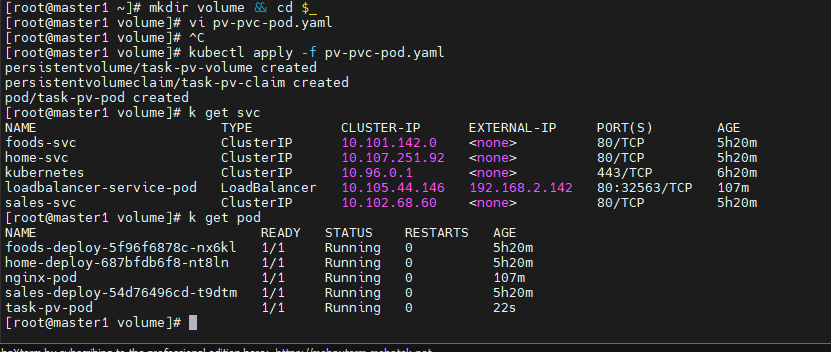

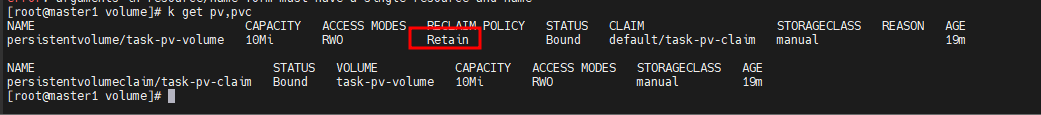

vi pv-pvc-pod.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: task-pv-volume
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 10Mi
  accessModes:
  - ReadWriteOnce
  hostPath:
    path: "/mnt/data"
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: task-pv-claim
spec:
  storageClassName: manual
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Mi
  selector:
    matchLabels:
      type: local
---
apiVersion: v1
kind: Pod
metadata:
  name: task-pv-pod
  labels:
    app: task-pv-pod
spec:
  volumes:
  - name: task-pv-storage
    persistentVolumeClaim:
      claimName: task-pv-claim
  containers:
  - name: task-pv-container
    image: 192.168.1.156:5000/nginx:latest
    ports:
    - containerPort: 80
      name: "http-server"
    volumeMounts:
    - mountPath: "/usr/share/nginx/html"
      name: task-pv-storage

# kubectl apply -f pv-pvc-pod.yaml

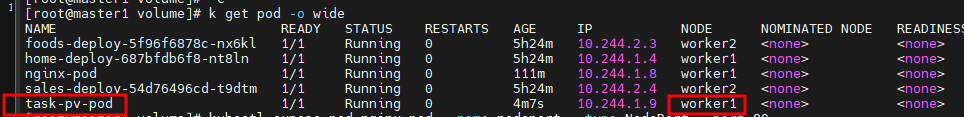

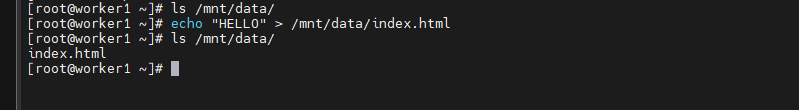

- The pod is on worker1, so go to worker1 and check whether /mnt/data was created

worker1

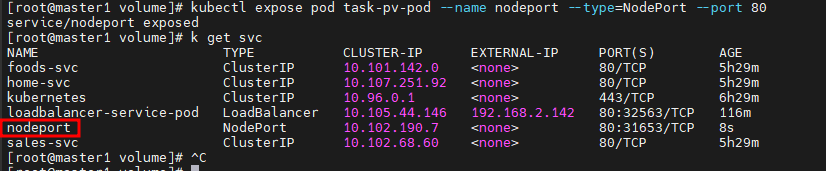

master1

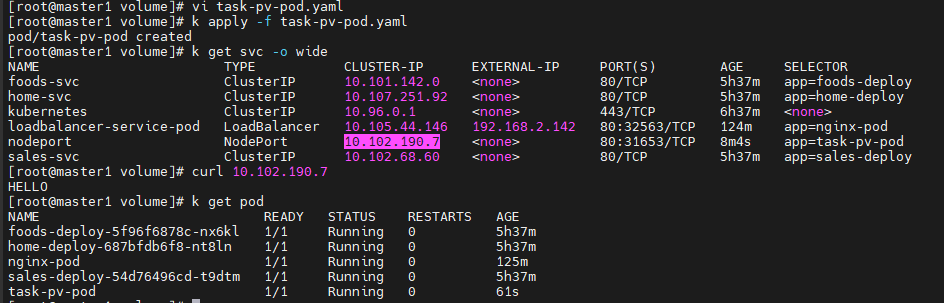

k expose pod task-pv-pod --name nodeport --type=NodePort --port 80

k delete pod task-pv-pod

- Deleted the pod and went to worker1, but nothing had been deleted there

vi task-pv-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: task-pv-pod
  labels:
    app: task-pv-pod
spec:
  volumes:
  - name: task-pv-storage
    persistentVolumeClaim:
      claimName: task-pv-claim
  containers:
  - name: task-pv-container
    image: 192.168.1.156:5000/nginx:latest
    ports:
    - containerPort: 80
      name: "http-server"
    volumeMounts:
    - mountPath: "/usr/share/nginx/html"
      name: task-pv-storage

k apply -f task-pv-pod.yaml

- Try deleting only the PVC

kubectl delete pvc task-pv-claim

- One of them shows Pending..