Week 7

This page is part of the AWS EKS Workshop hands-on study group, where I record each week's lab results and thoughts.

Deploying the lab environment

$curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/K8S/eks-oneclick6.yaml

# Deploy the stack

$aws cloudformation deploy \
  --template-file eks-oneclick6.yaml \
  --stack-name myeks \
  --region ap-northeast-2 \
  --parameter-overrides KeyName=test-key \
    SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32 \
    MyIamUserAccessKeyID=AKIA... \
    MyIamUserSecretAccessKey='R1oQ6y83A...' \
    ClusterBaseName=myeks

# Switch to the default namespace

$kubectl ns default

# Rename the context

NICK=eljoe

$kubectl ctx

eljoe@myeks.ap-northeast-2.eksctl.io

$kubectl config rename-context eljoe@myeks.ap-northeast-2.eksctl.io $NICK

Context "eljoe@myeks.ap-northeast-2.eksctl.io" renamed to "eljoe".

# ExternalDNS

$MyDomain=eljoe-test.link

$echo "export MyDomain=eljoe-test.link" >> /etc/profile

$MyDnzHostedZoneId=$(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text)

$curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/aews/externaldns.yaml

$MyDomain=$MyDomain MyDnzHostedZoneId=$MyDnzHostedZoneId envsubst < externaldns.yaml | kubectl apply -f -

# LB Controller

$helm repo add eks https://aws.github.io/eks-charts

$helm repo update

$helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=$CLUSTER_NAME \
  --set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller

# Node IP & Private IP

$N1=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2a -o jsonpath='{.items[0].status.addresses[0].address}')

$N2=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2b -o jsonpath='{.items[0].status.addresses[0].address}')

$N3=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2c -o jsonpath='{.items[0].status.addresses[0].address}')

$echo "export N1=$N1" >> /etc/profile

$echo "export N2=$N2" >> /etc/profile

$echo "export N3=$N3" >> /etc/profile

# Node security group ID

$NGSGID=$(aws ec2 describe-security-groups --filters Name=group-name,Values='*ng1*' --query "SecurityGroups[*].[GroupId]" --output text)

$aws ec2 authorize-security-group-ingress --group-id $NGSGID --protocol '-1' --cidr 192.168.1.0/24

AWS Controllers for Kubernetes (ACK)

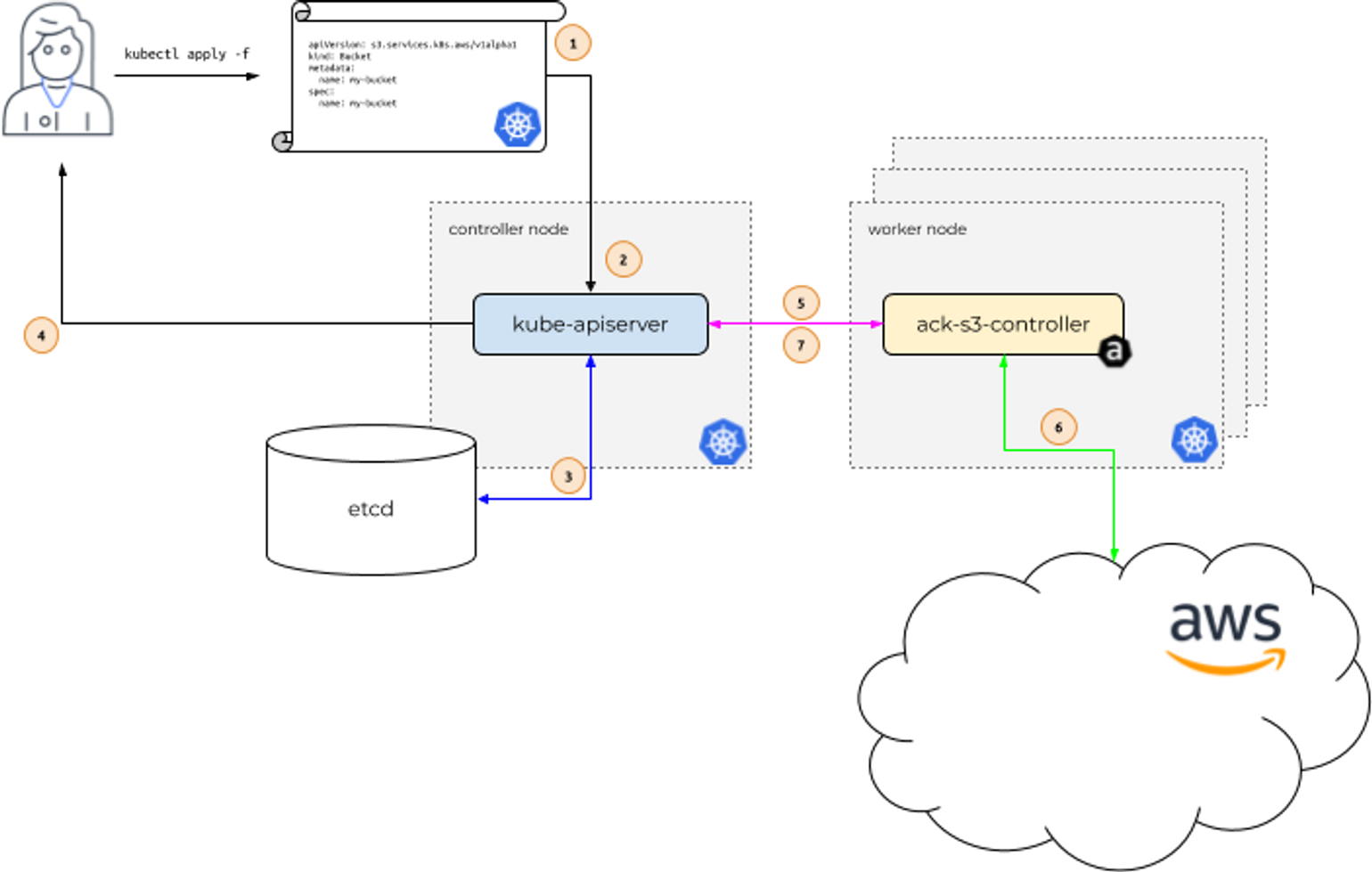

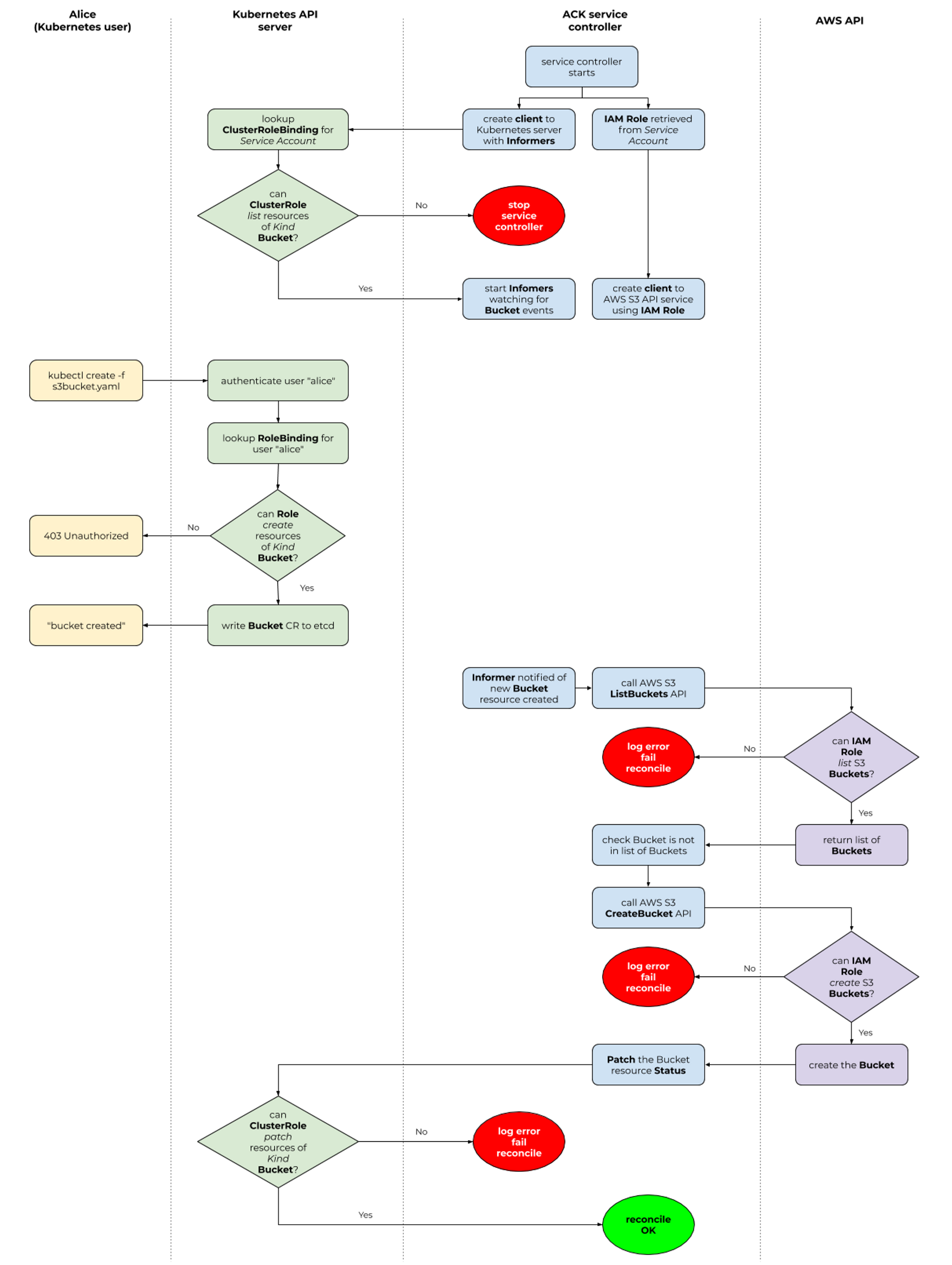

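ACK lets you define and use AWS service resources directly from Kubernetes through YAML manifests.

- The user writes a YAML manifest for an AWS S3 bucket and submits the create request with kubectl.

- The ack-s3-controller pod running on a worker node picks up that request through the api-server.

- The pod, using its IRSA role, creates the bucket by calling the AWS S3 API.

As of 2023-05-29, 17 services have been released as GA.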

| AWS service | Latest version |
|---|---|
| Amazon Prometheus Service | 1.2.3 |
| Amazon ApiGatewayV2 | 1.0.3 |
| Amazon Application Auto Scaling | 1.0.5 |
| Amazon CloudTrail | 1.0.3 |
| Amazon dynamodb | 1.1.1 |
| Amazon EC2 | 1.0.3 |
| Amazon ECR | 1.0.4 |
| Amazon EKS | 1.0.2 |
| Amazon EMR Containers | 1.0.1 |
| Amazon IAM | 1.2.1 |
| Amazon KMS | 1.0.5 |
| AWS Lambda | 1.0.1 |
| Amazon MemoryDB | 1.0.0 |
| Amazon RDS | 1.1.4 |
| Amazon S3 | 1.0.4 |
| Amazon Sagemaker | 1.2.2 |
| AWS SFN | 1.0.2 |

Required permissions: Kubernetes RBAC, AWS API permissions, and an IRSA role attached to each service controller pod.

S3 hands-on

- Install the ACK S3 controller

# Set the service name
$export SERVICE=s3

# Set the release version
$export RELEASE_VERSION=$(curl -sL https://api.github.com/repos/aws-controllers-k8s/$SERVICE-controller/releases/latest | grep '"tag_name":' | cut -d'"' -f4 | cut -c 2-)
$echo $SERVICE $RELEASE_VERSION
s3 1.0.4

# Pull the Helm chart
$helm pull oci://public.ecr.aws/aws-controllers-k8s/$SERVICE-chart --version=$RELEASE_VERSION
$tar xzvf $SERVICE-chart-$RELEASE_VERSION.tgz

# Check the files
$tree ~/$SERVICE-chart
/root/s3-chart
├── Chart.yaml
├── crds
│   ├── s3.services.k8s.aws_buckets.yaml
│   ├── services.k8s.aws_adoptedresources.yaml
│   └── services.k8s.aws_fieldexports.yaml
├── templates
│   ├── cluster-role-binding.yaml
│   ├── cluster-role-controller.yaml
│   ├── deployment.yaml
│   ├── _helpers.tpl
│   ├── metrics-service.yaml
│   ├── NOTES.txt
│   ├── role-reader.yaml
│   ├── role-writer.yaml
│   └── service-account.yaml
├── values.schema.json
└── values.yaml

# Install the S3 controller
$export ACK_SYSTEM_NAMESPACE=ack-system
$export AWS_REGION=ap-northeast-2
$helm install --create-namespace -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller --set aws.region="$AWS_REGION" ~/$SERVICE-chart

# Verify the installation
$helm list --namespace $ACK_SYSTEM_NAMESPACE
NAME               NAMESPACE   REVISION  UPDATED                                  STATUS    CHART           APP VERSION
ack-s3-controller  ack-system  1         2023-06-08 10:08:13.328049434 +0900 KST  deployed  s3-chart-1.0.4  1.0.4

$kubectl -n ack-system get pods
NAME                                         READY  STATUS   RESTARTS  AGE
ack-s3-controller-s3-chart-7c55c6657d-pk764  1/1    Running  0         55s

$kubectl get crd | grep $SERVICE
buckets.s3.services.k8s.aws  2023-06-08T01:08:11Z

$kubectl get all -n ack-system
NAME                                             READY  STATUS   RESTARTS  AGE
pod/ack-s3-controller-s3-chart-7c55c6657d-pk764  1/1    Running  0         91s

NAME                                        READY  UP-TO-DATE  AVAILABLE  AGE
deployment.apps/ack-s3-controller-s3-chart  1/1    1           1          91s

NAME                                                   DESIRED  CURRENT  READY  AGE
replicaset.apps/ack-s3-controller-s3-chart-7c55c6657d  1        1        1      91s

$kubectl describe sa -n ack-system ack-$SERVICE-controller
Name:         ack-s3-controller
Namespace:    ack-system
Labels:       app.kubernetes.io/instance=ack-s3-controller
              app.kubernetes.io/managed-by=Helm
              app.kubernetes.io/name=s3-chart
              app.kubernetes.io/version=1.0.4
              helm.sh/chart=s3-chart-1.0.4
              k8s-app=s3-chart
Annotations:  meta.helm.sh/release-name: ack-s3-controller
              meta.helm.sh/release-namespace: ack-system
...

- Create and attach IRSA (AmazonS3FullAccess)

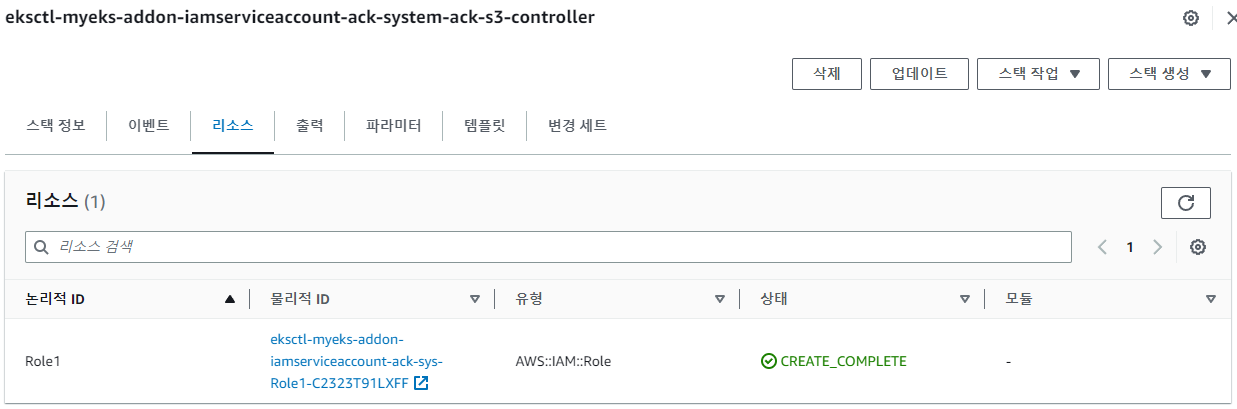

# Apply IRSA with the AmazonS3FullAccess policy attached
$eksctl create iamserviceaccount \
  --name ack-$SERVICE-controller \
  --namespace ack-system \
  --cluster $CLUSTER_NAME \
  --attach-policy-arn $(aws iam list-policies --query 'Policies[?PolicyName==`AmazonS3FullAccess`].Arn' --output text) \
  --override-existing-serviceaccounts --approve

$eksctl get iamserviceaccount --cluster $CLUSTER_NAME
NAMESPACE   NAME               ROLE ARN
ack-system  ack-s3-controller  arn:aws:iam::131421611146:role/eksctl-myeks-addon-iamserviceaccount-ack-sys-Role1-C2323T91LXFF

# Restart the ACK S3 controller Deployment
$kubectl -n ack-system rollout restart deploy ack-$SERVICE-controller-$SERVICE-chart
deployment.apps/ack-s3-controller-s3-chart restarted

# Confirm that the IRSA-related ENV and Volume were added to the pod
$kubectl describe pod -n ack-system -l k8s-app=$SERVICE-chart
...
    Environment:
...
      AWS_ROLE_ARN:  arn:aws:iam::131421611146:role/eksctl-myeks-addon-iamserviceaccount-ack-sys-Role1-C2323T91LXFF
...
    Mounts:
      /var/run/secrets/eks.amazonaws.com/serviceaccount from aws-iam-token (ro)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-hlxlh (ro)
..
Volumes:
  aws-iam-token:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  86400
  kube-api-access-hlxlh:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
...

- Create, update, and delete an S3 bucket

# Set the account ID and bucket name used to create the bucket
$export AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query "Account" --output text)
$export BUCKET_NAME=my-ack-s3-bucket-$AWS_ACCOUNT_ID

# Create the bucket
$cat <<EOF | kubectl create -f -
apiVersion: s3.services.k8s.aws/v1alpha1
kind: Bucket
metadata:
  name: $BUCKET_NAME
spec:
  name: $BUCKET_NAME
EOF
bucket.s3.services.k8s.aws/my-ack-s3-bucket-131421611146 created

$aws s3 ls
2023-06-08 10:30:39 my-ack-s3-bucket-131421611146

$kubectl describe bucket/$BUCKET_NAME | head -6
Name:         my-ack-s3-bucket-131421611146
Namespace:    default
Labels:       <none>
Annotations:  <none>
API Version:  s3.services.k8s.aws/v1alpha1
Kind:         Bucket

# Update the bucket: add a tag
$cat <<EOF | kubectl apply -f -
apiVersion: s3.services.k8s.aws/v1alpha1
kind: Bucket
metadata:
  name: $BUCKET_NAME
spec:
  name: $BUCKET_NAME
  tagging:
    tagSet:
    - key: myTagKey
      value: myTagValue
EOF
bucket.s3.services.k8s.aws/my-ack-s3-bucket-131421611146 configured

$kubectl describe bucket/$BUCKET_NAME | grep Spec: -A5
Spec:
  Name:  my-ack-s3-bucket-131421611146
  Tagging:
    Tag Set:
      Key:    myTagKey
      Value:  myTagValue

# Delete the bucket
$kubectl delete bucket $BUCKET_NAME
bucket.s3.services.k8s.aws "my-ack-s3-bucket-123..." deleted
$aws s3 ls

# Remove the controller
$helm uninstall -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller
$kubectl delete -f ~/$SERVICE-chart/crds
$eksctl delete iamserviceaccount --cluster myeks --name ack-$SERVICE-controller --namespace ack-system
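The `RELEASE_VERSION` pipeline used in the install step can be tried offline. The sketch below runs the same `grep`/`cut` chain against a hand-written sample of the GitHub releases payload to show how the leading `v` is dropped from the tag. The sample JSON and the function name `parse_release_version` are assumptions for illustration, not captured output.

```shell
#!/usr/bin/env bash
# Extract the version from a GitHub "latest release" JSON payload on stdin.
# Same pipeline as the install step: find the tag_name line, take the 4th
# double-quote-delimited field (e.g. v1.0.4), then drop the leading "v".
parse_release_version() {
  grep '"tag_name":' | cut -d'"' -f4 | cut -c 2-
}

# Hypothetical, trimmed-down sample of the API response (not real output).
sample_json='{
  "url": "https://api.github.com/repos/example/releases/1",
  "tag_name": "v1.0.4",
  "name": "v1.0.4"
}'

printf '%s\n' "$sample_json" | parse_release_version
```

Note that this approach relies on the response being pretty-printed with one key per line; on a single-line JSON document the `cut` fields would no longer line up, so a JSON-aware tool would likely be safer there.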

EC2 hands-on

- Install the ACK EC2 controller

# Set the service name
$export SERVICE=ec2

# Set the release version
$export RELEASE_VERSION=$(curl -sL https://api.github.com/repos/aws-controllers-k8s/$SERVICE-controller/releases/latest | grep '"tag_name":' | cut -d'"' -f4 | cut -c 2-)
$echo $SERVICE $RELEASE_VERSION
ec2 1.0.3

# Pull the Helm chart
$helm pull oci://public.ecr.aws/aws-controllers-k8s/$SERVICE-chart --version=$RELEASE_VERSION
$tar xzvf $SERVICE-chart-$RELEASE_VERSION.tgz

# Check the files
$tree ~/$SERVICE-chart
/root/ec2-chart
├── Chart.yaml
├── crds
│   ├── ec2.services.k8s.aws_dhcpoptions.yaml
│   ├── ec2.services.k8s.aws_elasticipaddresses.yaml
│   ├── ec2.services.k8s.aws_instances.yaml
│   ├── ec2.services.k8s.aws_internetgateways.yaml
│   ├── ec2.services.k8s.aws_natgateways.yaml
│   ├── ec2.services.k8s.aws_routetables.yaml
│   ├── ec2.services.k8s.aws_securitygroups.yaml
│   ├── ec2.services.k8s.aws_subnets.yaml
│   ├── ec2.services.k8s.aws_transitgateways.yaml
│   ├── ec2.services.k8s.aws_vpcendpoints.yaml
│   ├── ec2.services.k8s.aws_vpcs.yaml
│   ├── services.k8s.aws_adoptedresources.yaml
│   └── services.k8s.aws_fieldexports.yaml
├── templates
│   ├── cluster-role-binding.yaml
│   ├── cluster-role-controller.yaml
│   ├── deployment.yaml
│   ├── _helpers.tpl
│   ├── metrics-service.yaml
│   ├── NOTES.txt
│   ├── role-reader.yaml
│   ├── role-writer.yaml
│   └── service-account.yaml
├── values.schema.json
└── values.yaml

# Install the EC2 controller
$export ACK_SYSTEM_NAMESPACE=ack-system
$export AWS_REGION=ap-northeast-2
$helm install --create-namespace -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller --set aws.region="$AWS_REGION" ~/$SERVICE-chart

# Verify the installation
$helm list --namespace $ACK_SYSTEM_NAMESPACE
NAME                NAMESPACE   REVISION  UPDATED                                  STATUS    CHART            APP VERSION
ack-ec2-controller  ack-system  1         2023-06-08 11:05:50.910353973 +0900 KST  deployed  ec2-chart-1.0.3  1.0.3

$kubectl -n ack-system get pods
NAME                                           READY  STATUS   RESTARTS  AGE
ack-ec2-controller-ec2-chart-777567ff4c-wglwc  1/1    Running  0         32s

$kubectl get crd | grep $SERVICE
dhcpoptions.ec2.services.k8s.aws         2023-06-08T02:05:50Z
elasticipaddresses.ec2.services.k8s.aws  2023-06-08T02:05:50Z
instances.ec2.services.k8s.aws           2023-06-08T02:05:50Z
internetgateways.ec2.services.k8s.aws    2023-06-08T02:05:50Z
natgateways.ec2.services.k8s.aws         2023-06-08T02:05:50Z
routetables.ec2.services.k8s.aws         2023-06-08T02:05:50Z
securitygroups.ec2.services.k8s.aws      2023-06-08T02:05:50Z
subnets.ec2.services.k8s.aws             2023-06-08T02:05:50Z
transitgateways.ec2.services.k8s.aws     2023-06-08T02:05:50Z
vpcendpoints.ec2.services.k8s.aws        2023-06-08T02:05:50Z
vpcs.ec2.services.k8s.aws                2023-06-08T02:05:50Z

$kubectl get all -n ack-system
NAME                                               READY  STATUS   RESTARTS  AGE
pod/ack-ec2-controller-ec2-chart-777567ff4c-wglwc  1/1    Running  0         62s

NAME                                          READY  UP-TO-DATE  AVAILABLE  AGE
deployment.apps/ack-ec2-controller-ec2-chart  1/1    1           1          62s

NAME                                                     DESIRED  CURRENT  READY  AGE
replicaset.apps/ack-ec2-controller-ec2-chart-777567ff4c  1        1        1      62s

$kubectl describe sa -n ack-system ack-$SERVICE-controller
Name:         ack-ec2-controller
Namespace:    ack-system
Labels:       app.kubernetes.io/instance=ack-ec2-controller
              app.kubernetes.io/managed-by=Helm
              app.kubernetes.io/name=ec2-chart
              app.kubernetes.io/version=1.0.3
              helm.sh/chart=ec2-chart-1.0.3
              k8s-app=ec2-chart
Annotations:  meta.helm.sh/release-name: ack-ec2-controller
              meta.helm.sh/release-namespace: ack-system
...

- Create and attach IRSA (AmazonEC2FullAccess)

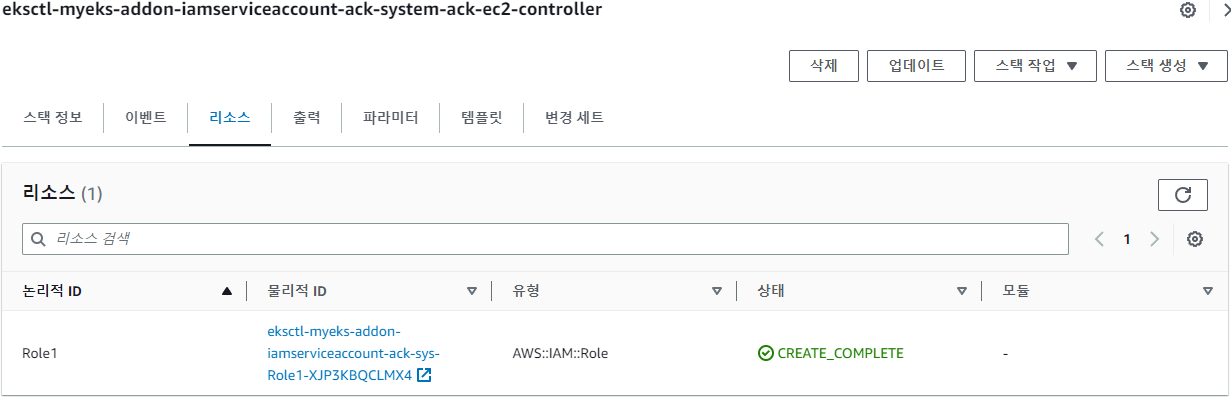

# Apply IRSA with the AmazonEC2FullAccess policy attached
$eksctl create iamserviceaccount \
  --name ack-$SERVICE-controller \
  --namespace ack-system \
  --cluster $CLUSTER_NAME \
  --attach-policy-arn $(aws iam list-policies --query 'Policies[?PolicyName==`AmazonEC2FullAccess`].Arn' --output text) \
  --override-existing-serviceaccounts --approve

$eksctl get iamserviceaccount --cluster $CLUSTER_NAME
NAMESPACE   NAME                ROLE ARN
ack-system  ack-ec2-controller  arn:aws:iam::131421611146:role/eksctl-myeks-addon-iamserviceaccount-ack-sys-Role1-XJP3KBQCLMX4

# Restart the ACK EC2 controller Deployment
$kubectl -n ack-system rollout restart deploy ack-$SERVICE-controller-$SERVICE-chart
deployment.apps/ack-ec2-controller-ec2-chart restarted

# Confirm that the IRSA-related ENV and Volume were added to the pod
$kubectl describe pod -n ack-system -l k8s-app=$SERVICE-chart
...
    Environment:
...
      AWS_ROLE_ARN:  arn:aws:iam::131421611146:role/eksctl-myeks-addon-iamserviceaccount-ack-sys-Role1-XJP3KBQCLMX4
...
    Mounts:
      /var/run/secrets/eks.amazonaws.com/serviceaccount from aws-iam-token (ro)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-b9gh6 (ro)
..
Volumes:
  aws-iam-token:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  86400
  kube-api-access-b9gh6:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
...

- Create and delete a VPC and subnet

# Create the VPC
$cat <<EOF | kubectl apply -f -
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: VPC
metadata:
  name: vpc-tutorial-test
spec:
  cidrBlocks:
  - 10.0.0.0/16
  enableDNSSupport: true
  enableDNSHostnames: true
EOF
vpc.ec2.services.k8s.aws/vpc-tutorial-test created

$aws ec2 describe-vpcs --query 'Vpcs[*].{VPCId:VpcId, CidrBlock:CidrBlock}' --output text
10.0.0.0/16  vpc-0d73c99a5e3b2b710

$kubectl get vpcs
NAME               ID                     STATE
vpc-tutorial-test  vpc-0d73c99a5e3b2b710  available

# Set the VPC ID used to create the subnet
$VPCID=$(kubectl get vpcs vpc-tutorial-test -o jsonpath={.status.vpcID})

# Create the subnet
$cat <<EOF | kubectl apply -f -
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: Subnet
metadata:
  name: subnet-tutorial-test
spec:
  cidrBlock: 10.0.0.0/20
  vpcID: $VPCID
EOF
subnet.ec2.services.k8s.aws/subnet-tutorial-test created

$aws ec2 describe-subnets --filters "Name=vpc-id,Values=$VPCID" --query 'Subnets[*].{SubnetId:SubnetId, CidrBlock:CidrBlock}' --output text
10.0.0.0/20  subnet-007ea7d9b307aef71

$kubectl get subnets
NAME                  ID                        STATE
subnet-tutorial-test  subnet-007ea7d9b307aef71  available

# Delete the VPC and subnet
$kubectl delete vpc vpc-tutorial-test & kubectl delete subnet subnet-tutorial-test
subnet.ec2.services.k8s.aws "subnet-tutorial-test" deleted
vpc.ec2.services.k8s.aws "vpc-tutorial-test" deleted

# Remove the controller
$helm uninstall -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller
$kubectl delete -f ~/$SERVICE-chart/crds
$eksctl delete iamserviceaccount --cluster myeks --name ack-$SERVICE-controller --namespace ack-system
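The delete step in this walkthrough starts the VPC deletion in the background (`&`) while the subnet deletion runs in the foreground, so both requests are in flight at once. AWS will not remove a VPC that still contains a subnet, so the VPC deletion can only finish after the subnet is gone. A dependency-ordered variant might look like the sketch below. The `KUBECTL` variable and the function name are assumptions added for illustration; setting `KUBECTL=echo` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Command used to talk to the cluster; override with KUBECTL=echo for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

# Delete the subnet first, then the VPC that contains it.
# "&&" stops the sequence if the subnet deletion fails.
delete_subnet_then_vpc() {
  local subnet="$1" vpc="$2"
  "$KUBECTL" delete subnet "$subnet" && "$KUBECTL" delete vpc "$vpc"
}

# Example (dry run, prints the two commands in order):
#   KUBECTL=echo delete_subnet_then_vpc subnet-tutorial-test vpc-tutorial-test
```

Running the two deletes sequentially is a little slower than backgrounding one of them, but the order of operations is explicit and a failure on the subnet does not leave a pending VPC delete behind.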


Flux

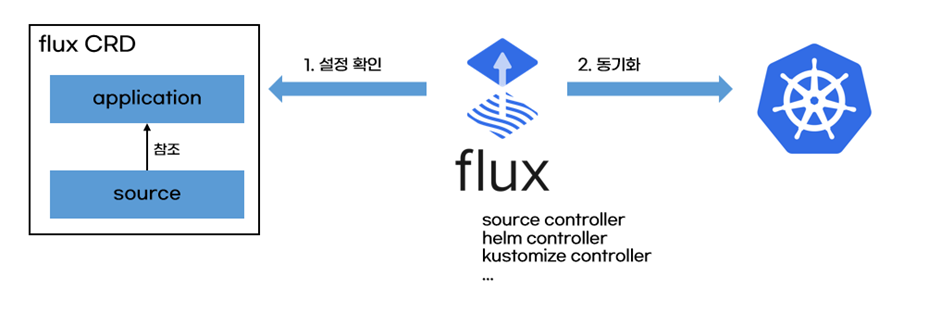

- A GitOps tool for Kubernetes: it reads the Kubernetes manifests stored in a Git repo, deploys them, and keeps the cluster in sync with Git.

- Source: 악분's blog

- Installing Flux

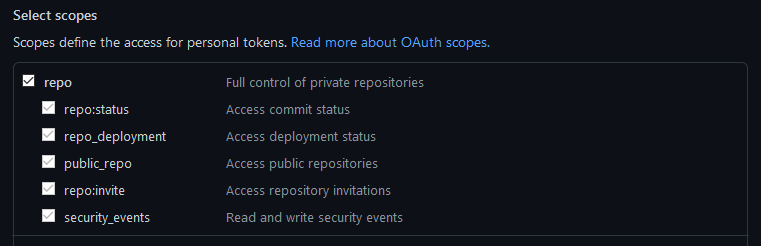

- Create a GitHub Personal Access Token

- Install the CLI and bootstrap

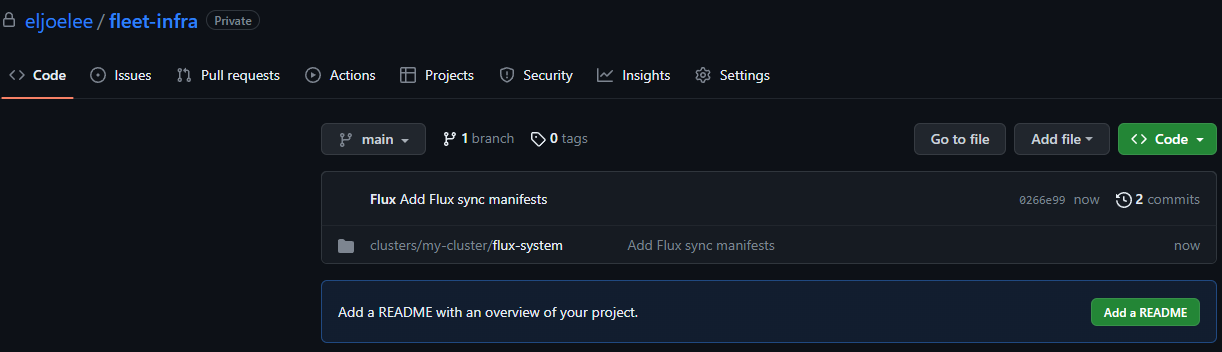

# Install the CLI
$curl -s https://fluxcd.io/install.sh | sudo bash
$. <(flux completion bash)
$flux --version
flux version 2.0.0-rc.5

# Set the GitHub token and username
$export GITHUB_TOKEN=ghp_...
$export GITHUB_USER=eljoelee

# Bootstrap
$flux bootstrap github \
  --owner=$GITHUB_USER \
  --repository=fleet-infra \
  --branch=main \
  --path=./clusters/my-cluster \
  --personal

# Verify the installation
$kubectl get pods -n flux-system
NAME                                      READY  STATUS   RESTARTS  AGE
helm-controller-fbdd59577-q4vtf           1/1    Running  0         105s
kustomize-controller-6b67b54cf8-xftpt     1/1    Running  0         105s
notification-controller-78f4869c94-6rnsv  1/1    Running  0         105s
source-controller-75db64d9f7-4ldq4        1/1    Running  0         105s

$kubectl get crd | grep fluxc
alerts.notification.toolkit.fluxcd.io       2023-06-08T05:49:00Z
buckets.source.toolkit.fluxcd.io            2023-06-08T05:49:00Z
gitrepositories.source.toolkit.fluxcd.io    2023-06-08T05:49:00Z
helmcharts.source.toolkit.fluxcd.io         2023-06-08T05:49:00Z
helmreleases.helm.toolkit.fluxcd.io         2023-06-08T05:49:00Z
helmrepositories.source.toolkit.fluxcd.io   2023-06-08T05:49:00Z
kustomizations.kustomize.toolkit.fluxcd.io  2023-06-08T05:49:00Z
ocirepositories.source.toolkit.fluxcd.io    2023-06-08T05:49:00Z
providers.notification.toolkit.fluxcd.io    2023-06-08T05:49:00Z
receivers.notification.toolkit.fluxcd.io    2023-06-08T05:49:00Z

$kubectl get gitrepository -n flux-system
NAME         URL                                        AGE    READY  STATUS
flux-system  ssh://git@github.com/eljoelee/fleet-infra  2m47s  True   stored artifact for revision 'main@sha1:0266e99c89584d55d27e680df481dc54e4e33237'

- Install the GitOps tooling

- Install the Flux dashboard

$curl --silent --location "https://github.com/weaveworks/weave-gitops/releases/download/v0.24.0/gitops-$(uname)-$(uname -m).tar.gz" | tar xz -C /tmp
$sudo mv /tmp/gitops /usr/local/bin
$gitops version
To improve our product, we would like to collect analytics data. You can read more about what data we collect here: https://docs.gitops.weave.works/docs/feedback-and-telemetry/
? Would you like to turn on analytics to help us improve our product? [Y/n] Y
Current Version: 0.24.0
GitCommit: cc1d0e680c55e0aaf5bfa0592a0a454fb2064bc1
BuildTime: 2023-05-24T16:29:14Z
Branch: releases/v0.24.0

# Install the dashboard
$PASSWORD="password"
$gitops create dashboard ww-gitops --password=$PASSWORD
...
✔ Installed GitOps Dashboard

# Verify the installation
$flux -n flux-system get helmrelease
NAME       REVISION  SUSPENDED  READY  MESSAGE
ww-gitops  4.0.23    False      True   Release reconciliation succeeded

$kubectl -n flux-system get pod,svc
NAME                                          READY  STATUS   RESTARTS  AGE
pod/helm-controller-fbdd59577-q4vtf           1/1    Running  0         6m25s
pod/kustomize-controller-6b67b54cf8-xftpt     1/1    Running  0         6m25s
pod/notification-controller-78f4869c94-6rnsv  1/1    Running  0         6m25s
pod/source-controller-75db64d9f7-4ldq4        1/1    Running  0         6m25s
pod/ww-gitops-weave-gitops-89bf585f4-vsfdb    1/1    Running  0         53s

NAME                             TYPE       CLUSTER-IP      EXTERNAL-IP  PORT(S)   AGE
service/notification-controller  ClusterIP  10.100.229.164  <none>       80/TCP    6m25s
service/source-controller        ClusterIP  10.100.121.42   <none>       80/TCP    6m25s
service/webhook-receiver         ClusterIP  10.100.66.27    <none>       80/TCP    6m25s
service/ww-gitops-weave-gitops   ClusterIP  10.100.251.227  <none>       9001/TCP  53s

- Configure the Ingress

$CERT_ARN=$(aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text)
$echo $CERT_ARN

$cat <<EOF | kubectl apply -n flux-system -f -
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: gitops-ingress
  annotations:
    alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
    alb.ingress.kubernetes.io/group.name: study
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
    alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/ssl-redirect: "443"
    alb.ingress.kubernetes.io/success-codes: 200-399
    alb.ingress.kubernetes.io/target-type: ip
spec:
  ingressClassName: alb
  rules:
  - host: gitops.$MyDomain
    http:
      paths:
      - backend:
          service:
            name: ww-gitops-weave-gitops
            port:
              number: 9001
        path: /
        pathType: Prefix
EOF
ingress.networking.k8s.io/gitops-ingress created

$kubectl get ingress -n flux-system
NAME            CLASS  HOSTS                   ADDRESS                                                       PORTS  AGE
gitops-ingress  alb    gitops.eljoe-test.link  myeks-ingress-alb-762217735.ap-northeast-2.elb.amazonaws.com  80     15s

- Sign in (ID: admin / PW: password)

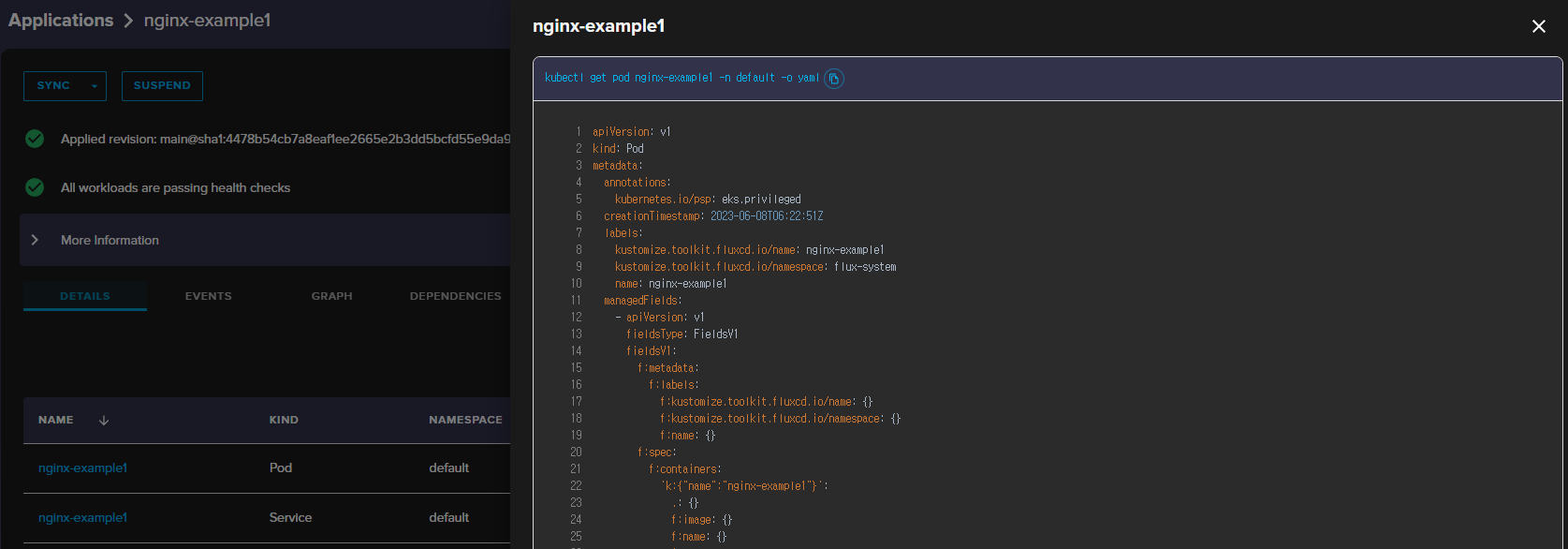

- hello-world(kustomize)

# Create a source from 악분's GitHub repo
$GITURL="https://github.com/sungwook-practice/fluxcd-test.git"
$flux create source git nginx-example1 --url=$GITURL --branch=main --interval=30s
✚ generating GitRepository source
► applying GitRepository source
✔ GitRepository source created
◎ waiting for GitRepository source reconciliation
✔ GitRepository source reconciliation completed
✔ fetched revision: main@sha1:4478b54cb7a8eaf1ee2665e2b3dd5bcfd55e9da9

# Check the source
$flux get sources git
NAME            REVISION            SUSPENDED  READY  MESSAGE
flux-system     main@sha1:0266e99c  False      True   stored artifact for revision 'main@sha1:0266e99c'
nginx-example1  main@sha1:4478b54c  False      True   stored artifact for revision 'main@sha1:4478b54c'

$kubectl -n flux-system get gitrepositories
NAME            URL                                                   AGE  READY  STATUS
flux-system     ssh://git@github.com/eljoelee/fleet-infra             21m  True   stored artifact for revision 'main@sha1:0266e99c89584d55d27e680df481dc54e4e33237'
nginx-example1  https://github.com/sungwook-practice/fluxcd-test.git  49s  True   stored artifact for revision 'main@sha1:4478b54cb7a8eaf1ee2665e2b3dd5bcfd55e9da9'

# Create the application (nginx-example1) through a kustomization
$flux create kustomization nginx-example1 \
  --target-namespace=default \
  --interval=1m \
  --source=nginx-example1 \
  --path="./nginx" \
  --health-check-timeout=2m
✚ generating Kustomization
► applying Kustomization
✔ Kustomization created
◎ waiting for Kustomization reconciliation
✔ Kustomization nginx-example1 is ready
✔ applied revision main@sha1:4478b54cb7a8eaf1ee2665e2b3dd5bcfd55e9da9

# Verify the deployment
$kubectl get pod,svc nginx-example1
NAME                READY  STATUS   RESTARTS  AGE
pod/nginx-example1  1/1    Running  0         18s

NAME                    TYPE       CLUSTER-IP     EXTERNAL-IP  PORT(S)  AGE
service/nginx-example1  ClusterIP  10.100.99.234  <none>       80/TCP   18s

$flux get kustomizations
NAME            REVISION            SUSPENDED  READY  MESSAGE
flux-system     main@sha1:0266e99c  False      True   Applied revision: main@sha1:0266e99c
nginx-example1  main@sha1:4478b54c  False      True   Applied revision: main@sha1:4478b54c

# Delete the application (nginx-example1)
$flux delete kustomization nginx-example1
Are you sure you want to delete this kustomization: y
► deleting kustomization nginx-example1 in flux-system namespace
✔ kustomization deleted

# Verify the deletion
$flux get kustomizations
NAME         REVISION            SUSPENDED  READY  MESSAGE
flux-system  main@sha1:0266e99c  False      True   Applied revision: main@sha1:0266e99c

# Unless the prune option (default: false) was enabled when the application was created, the pod and service are not deleted
$kubectl get pod,svc nginx-example1
NAME                READY  STATUS   RESTARTS  AGE
pod/nginx-example1  1/1    Running  0         2m50s

NAME                    TYPE       CLUSTER-IP     EXTERNAL-IP  PORT(S)  AGE
service/nginx-example1  ClusterIP  10.100.99.234  <none>       80/TCP   2m50s

$kubectl delete pod,svc nginx-example1
pod "nginx-example1" deleted
service "nginx-example1" deleted

# Create it again with the prune option enabled (true)
$flux create kustomization nginx-example1 \
  --target-namespace=default \
  --prune=true \
  --interval=1m \
  --source=nginx-example1 \
  --path="./nginx" \
  --health-check-timeout=2m
✚ generating Kustomization
► applying Kustomization
✔ Kustomization created
◎ waiting for Kustomization reconciliation
✔ Kustomization nginx-example1 is ready
✔ applied revision main@sha1:4478b54cb7a8eaf1ee2665e2b3dd5bcfd55e9da9

# Verify the deployment
$flux get kustomizations
NAME            REVISION            SUSPENDED  READY  MESSAGE
flux-system     main@sha1:0266e99c  False      True   Applied revision: main@sha1:0266e99c
nginx-example1  main@sha1:4478b54c  False      True   Applied revision: main@sha1:4478b54c

$kubectl get pod,svc nginx-example1
NAME                READY  STATUS   RESTARTS  AGE
pod/nginx-example1  1/1    Running  0         104s

NAME                    TYPE       CLUSTER-IP     EXTERNAL-IP  PORT(S)  AGE
service/nginx-example1  ClusterIP  10.100.27.241  <none>       80/TCP   104s

# Delete the application (nginx-example1)
$flux delete kustomization nginx-example1
Are you sure you want to delete this kustomization: y
► deleting kustomization nginx-example1 in flux-system namespace
✔ kustomization deleted

# Verify the deletion
$flux get kustomizations
NAME         REVISION            SUSPENDED  READY  MESSAGE
flux-system  main@sha1:0266e99c  False      True   Applied revision: main@sha1:0266e99c

$kubectl get pod,svc nginx-example1
Error from server (NotFound): pods "nginx-example1" not found
Error from server (NotFound): services "nginx-example1" not found

# Delete the source
$flux delete source git nginx-example1
Are you sure you want to delete this source git: y
► deleting source git nginx-example1 in flux-system namespace
✔ source git deleted

# Verify the deletion
$flux get sources git
NAME         REVISION            SUSPENDED  READY  MESSAGE
flux-system  main@sha1:0266e99c  False      True   stored artifact for revision 'main@sha1:0266e99c'
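The two runs in this hello-world exercise differ only in the `--prune` flag, which corresponds to `spec.prune` on the Kustomization object. As a rough sketch, the function below prints a hand-written minimal manifest for the same application so the field is visible. The function name is hypothetical, and the manifest is my approximation rather than exported CLI output, so a real `flux create kustomization ... --export` may include extra or differently formatted fields.

```shell
#!/usr/bin/env bash
# Print a minimal Flux Kustomization manifest for the nginx example.
# $1 = value for spec.prune ("true" or "false"); defaults to "false",
# matching the CLI default mentioned in the walkthrough.
render_nginx_kustomization() {
  local prune="${1:-false}"
  cat <<EOF
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: nginx-example1
  namespace: flux-system
spec:
  interval: 1m
  path: ./nginx
  prune: ${prune}
  sourceRef:
    kind: GitRepository
    name: nginx-example1
  targetNamespace: default
EOF
}

# With prune enabled, deleting the Kustomization also garbage-collects
# the pod and service it created.
render_nginx_kustomization true
```

The output could be piped to `kubectl apply -f -` or committed to the fleet repo instead of being created imperatively with the CLI.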

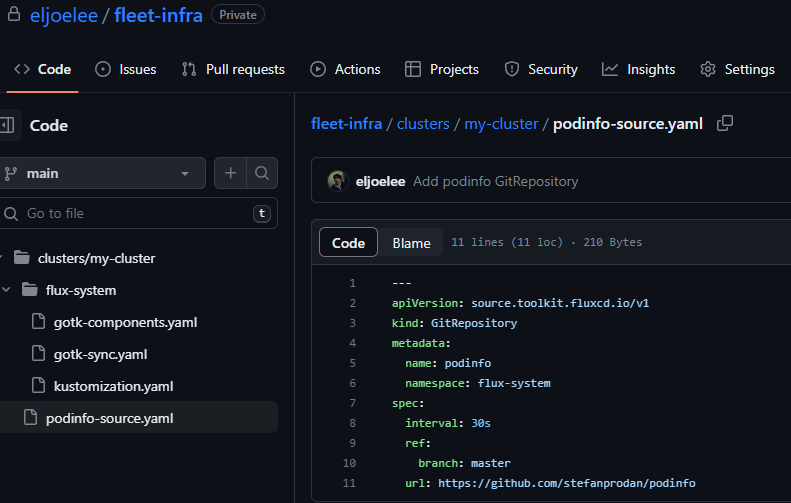

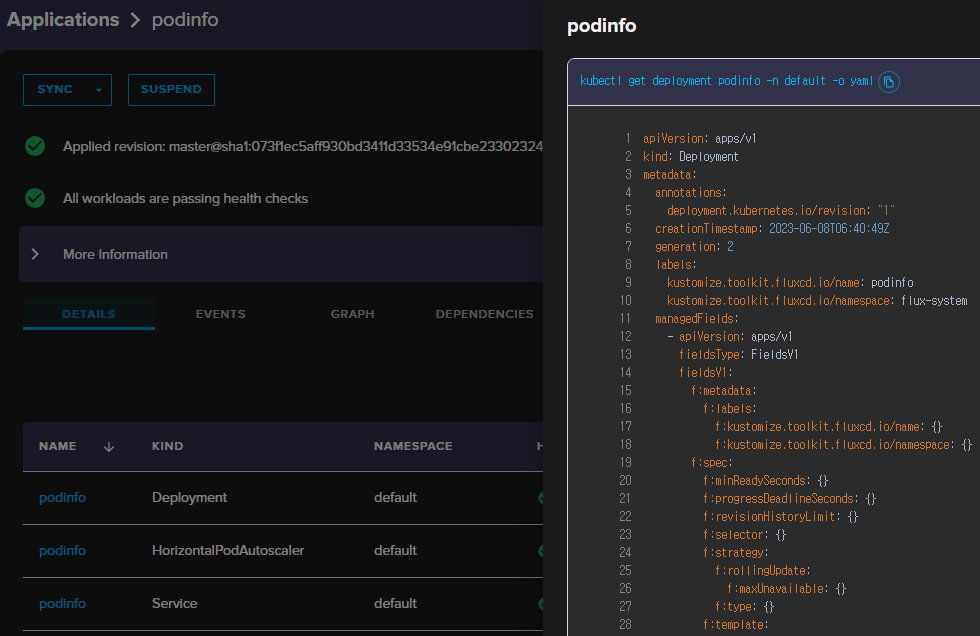

- Official docs sample

# clone
$git clone https://github.com/$GITHUB_USER/fleet-infra
Cloning into 'fleet-infra'...
Username for 'https://github.com': <username>
Password for 'https://eljoelee@github.com': <token>
remote: Enumerating objects: 13, done.
remote: Counting objects: 100% (13/13), done.
remote: Compressing objects: 100% (6/6), done.
remote: Total 13 (delta 0), reused 13 (delta 0), pack-reused 0
Receiving objects: 100% (13/13), 34.98 KiB | 5.83 MiB/s, done.

$cd fleet-infra && tree
.
└── clusters
    └── my-cluster
        └── flux-system
            ├── gotk-components.yaml
            ├── gotk-sync.yaml
            └── kustomization.yaml

3 directories, 3 files

# Generate the GitRepository yaml file
$flux create source git podinfo \
  --url=https://github.com/stefanprodan/podinfo \
  --branch=master \
  --interval=30s \
  --export > ./clusters/my-cluster/podinfo-source.yaml

$cat ./clusters/my-cluster/podinfo-source.yaml | yh
---
apiVersion: source.toolkit.fluxcd.io/v1
kind: GitRepository
metadata:
  name: podinfo
  namespace: flux-system
spec:
  interval: 30s
  ref:
    branch: master
  url: https://github.com/stefanprodan/podinfo

# git config
$git config --global user.name "*Your Name*"
$git config --global user.email "*you@example.com*"

$git add -A && git commit -m "Add podinfo GitRepository"
[main 61291a4] Add podinfo GitRepository
 1 file changed, 11 insertions(+)
 create mode 100644 clusters/my-cluster/podinfo-source.yaml

$git push
Username for 'https://github.com': <username>
Password for 'https://eljoelee@github.com': <token>
Enumerating objects: 8, done.
Counting objects: 100% (8/8), done.
Delta compression using up to 2 threads
Compressing objects: 100% (3/3), done.
Writing objects: 100% (5/5), 543 bytes | 77.00 KiB/s, done.
Total 5 (delta 0), reused 0 (delta 0), pack-reused 0
To https://github.com/eljoelee/fleet-infra
   0266e99..61291a4  main -> main

# Check the source
$flux get sources git
NAME         REVISION              SUSPENDED  READY  MESSAGE
flux-system  main@sha1:61291a43    False      True   stored artifact for revision 'main@sha1:61291a43'
podinfo      master@sha1:073f1ec5  False      True   stored artifact for revision 'master@sha1:073f1ec5'

$kubectl -n flux-system get gitrepositories
NAME         URL                                        AGE  READY  STATUS
flux-system  ssh://git@github.com/eljoelee/fleet-infra  45m  True   stored artifact for revision 'main@sha1:61291a43a14e1f09a38b93fe32531f2ec8309ff6'
podinfo      https://github.com/stefanprodan/podinfo    54s  True   stored artifact for revision 'master@sha1:073f1ec5aff930bd3411d33534e91cbe23302324'

# Create the application (podinfo) through a kustomization
$flux create kustomization podinfo \
  --target-namespace=default \
  --source=podinfo \
  --path="./kustomize" \
  --prune=true \
  --interval=5m \
  --export > ./clusters/my-cluster/podinfo-kustomization.yaml

# Commit and Push
$git add -A && git commit -m "Add podinfo Kustomization"
[main 3aefc04] Add podinfo Kustomization
 1 file changed, 14 insertions(+)
 create mode 100644 clusters/my-cluster/podinfo-kustomization.yaml

$git push
Username for 'https://github.com': <username>
Password for 'https://eljoelee@github.com': <token>
Enumerating objects: 8, done.
Counting objects: 100% (8/8), done.
Delta compression using up to 2 threads
Compressing objects: 100% (3/3), done.
Writing objects: 100% (5/5), 597 bytes | 597.00 KiB/s, done.
Total 5 (delta 0), reused 0 (delta 0), pack-reused 0
To https://github.com/eljoelee/fleet-infra
   61291a4..3aefc04  main -> main

# Verify the deployment
$flux get kustomizations
NAME         REVISION              SUSPENDED  READY  MESSAGE
flux-system  main@sha1:3aefc040    False      True   Applied revision: main@sha1:3aefc040
podinfo      master@sha1:073f1ec5  False      True   Applied revision: master@sha1:073f1ec5

$kubectl get pod,svc
NAME                          READY  STATUS   RESTARTS  AGE
pod/podinfo-59988cbf67-2bcmc  1/1    Running  0         11s

NAME                TYPE       CLUSTER-IP      EXTERNAL-IP  PORT(S)            AGE
service/kubernetes  ClusterIP  10.100.0.1      <none>       443/TCP            6h16m
service/podinfo     ClusterIP  10.100.139.229  <none>       9898/TCP,9999/TCP  11s

# Delete the source
$flux delete source git podinfo
Are you sure you want to delete this source git: y
? Are you sure you want to delete this source git? [y/N] y
✔ source git deleted

# Verify the deletion
$flux get sources git
NAME         REVISION            SUSPENDED  READY  MESSAGE
flux-system  main@sha1:3aefc040  False      True   stored artifact for revision 'main@sha1:3aefc040'


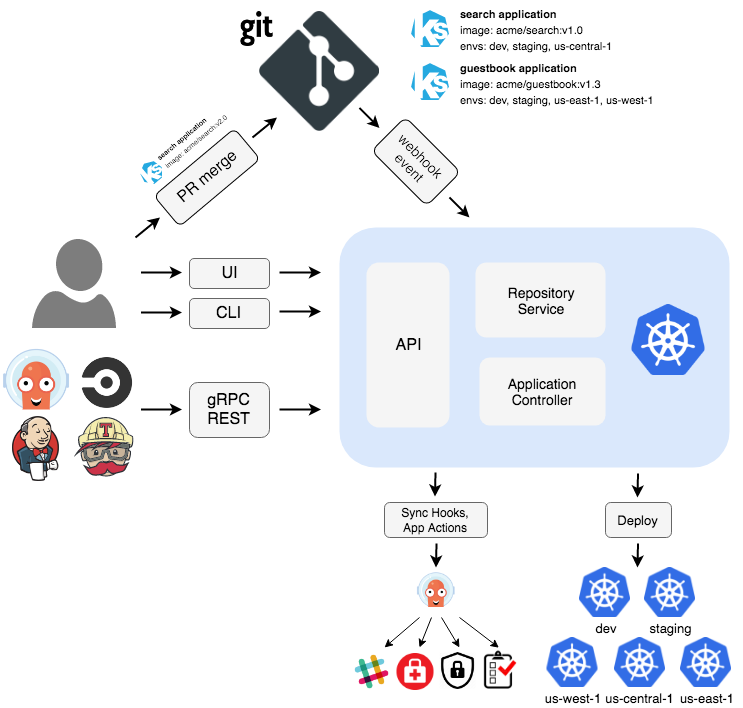

ArgoCD

- Like Flux CD, ArgoCD is a GitOps tool for Kubernetes.

- Installing ArgoCD

# Create the namespace
$kubectl create ns argocd

# Install argocd
$kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

# Install the CLI
$curl -sSL -o argocd-linux-amd64 https://github.com/argoproj/argo-cd/releases/latest/download/argocd-linux-amd64
$sudo install -m 555 argocd-linux-amd64 /usr/local/bin/argocd
$rm argocd-linux-amd64
$argocd version
argocd: v2.7.4+a33baa3
  BuildDate: 2023-06-05T19:16:50Z
  GitCommit: a33baa301fe61b899dc8bbad9e554efbc77e0991
  GitTreeState: clean
  GoVersion: go1.19.9
  Compiler: gc
  Platform: linux/amd64

# Verify the installation
$kubectl get all -n argocd
NAME                                                   READY  STATUS   RESTARTS  AGE
pod/argocd-application-controller-0                    1/1    Running  0         45s
pod/argocd-applicationset-controller-d7cbbcdc8-nphgz   1/1    Running  0         46s
pod/argocd-dex-server-6d7b7b6b4d-kzjm7                 1/1    Running  0         46s
pod/argocd-notifications-controller-77546bbb87-bllj9   1/1    Running  0         46s
pod/argocd-redis-98fbb98fc-4fcld                       1/1    Running  0         45s
pod/argocd-repo-server-c45579b5d-t4jzt                 1/1    Running  0         45s
pod/argocd-server-85cf95fd9b-8zhmp                     1/1    Running  0         45s

NAME                                             TYPE       CLUSTER-IP      EXTERNAL-IP  PORT(S)                     AGE
service/argocd-applicationset-controller         ClusterIP  10.100.182.77   <none>       7000/TCP,8080/TCP           46s
service/argocd-dex-server                        ClusterIP  10.100.253.200  <none>       5556/TCP,5557/TCP,5558/TCP  46s
service/argocd-metrics                           ClusterIP  10.100.61.226   <none>       8082/TCP                    46s
service/argocd-notifications-controller-metrics  ClusterIP  10.100.110.187  <none>       9001/TCP                    46s
service/argocd-redis                             ClusterIP  10.100.206.235  <none>       6379/TCP                    46s
service/argocd-repo-server                       ClusterIP  10.100.250.9    <none>       8081/TCP,8084/TCP           46s
service/argocd-server                            ClusterIP  10.100.89.41    <none>       80/TCP,443/TCP              46s
service/argocd-server-metrics                    ClusterIP  10.100.155.156  <none>       8083/TCP                    46s

NAME                                              READY  UP-TO-DATE  AVAILABLE  AGE
deployment.apps/argocd-applicationset-controller  1/1    1           1          46s
deployment.apps/argocd-dex-server                 1/1    1           1          46s
deployment.apps/argocd-notifications-controller   1/1    1           1          46s
deployment.apps/argocd-redis                      1/1    1           1          46s
deployment.apps/argocd-repo-server                1/1    1           1          45s
deployment.apps/argocd-server                     1/1    1           1          45s

NAME                                                        DESIRED  CURRENT  READY  AGE
replicaset.apps/argocd-applicationset-controller-d7cbbcdc8  1        1        1      46s
replicaset.apps/argocd-dex-server-6d7b7b6b4d                1        1        1      46s
replicaset.apps/argocd-notifications-controller-77546bbb87  1        1        1      46s
replicaset.apps/argocd-redis-98fbb98fc                      1        1        1      46s
replicaset.apps/argocd-repo-server-c45579b5d                1        1        1      45s
replicaset.apps/argocd-server-85cf95fd9b                    1        1        1      45s

NAME                                             READY  AGE
statefulset.apps/argocd-application-controller  1/1    45s

# Expose the Service
$kubectl patch svc argocd-server -n argocd -p '{"spec": {"type": "LoadBalancer"}}'

# Check the Service address
$kubectl get svc -n argocd
NAME           TYPE          CLUSTER-IP      EXTERNAL-IP                                                                   PORT(S)                     AGE
...
argocd-server  LoadBalancer  10.100.231.187  a24237612c2a941b094ca876461b508e-579925311.ap-northeast-2.elb.amazonaws.com  80:31020/TCP,443:30384/TCP  14m

# Check the initial password
$kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d; echo
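Kubernetes stores Secret values base64-encoded under `.data`, which is why the initial-password command pipes its output through `base64 -d`. The decoding step can be tried offline with the small sketch below; the sample value and the function name are made up for illustration and are not a real ArgoCD password.

```shell
#!/usr/bin/env bash
# Decode a base64-encoded Secret value, as the "| base64 -d" step does.
decode_secret_value() {
  printf '%s' "$1" | base64 -d
}

# Hypothetical sample: the base64 encoding of the string "password".
encoded='cGFzc3dvcmQ='

# The trailing echo adds the newline that the decoded value itself lacks.
decode_secret_value "$encoded"; echo
```

Base64 is an encoding, not encryption, so anyone who can read the Secret can recover the password; changing it after the first login is probably a good idea.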

- Sign in (ID: admin / PW: the initial password)

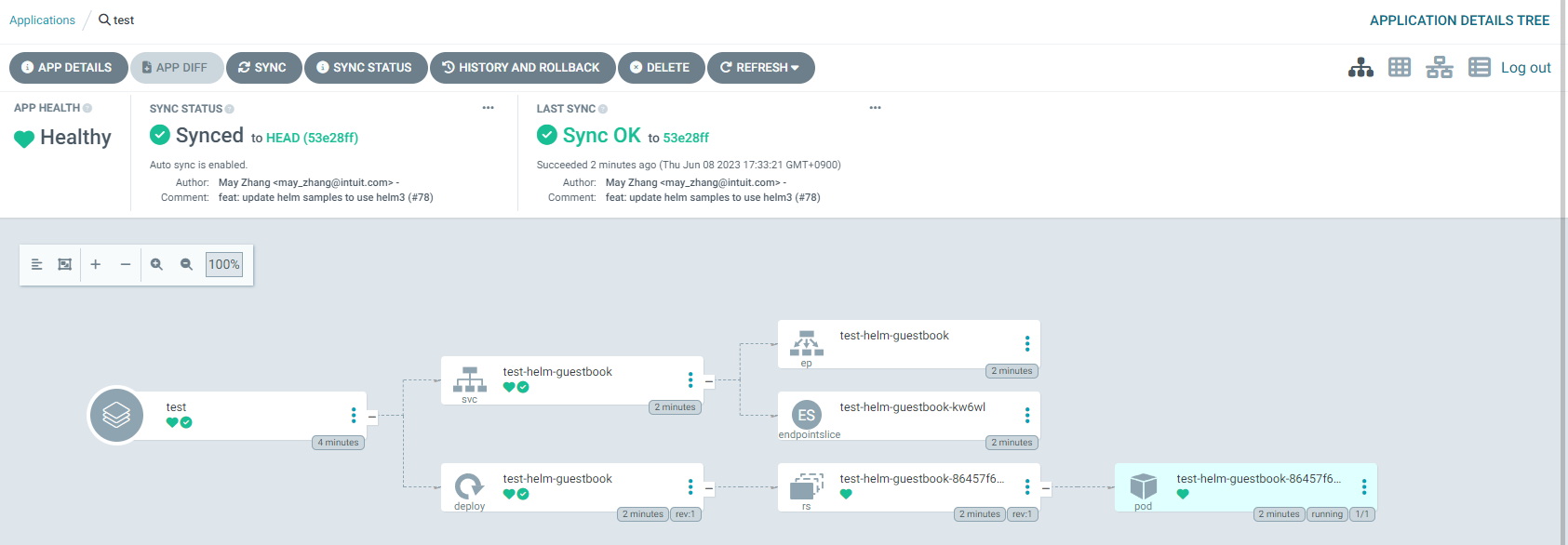

Official demo walkthrough (reference)

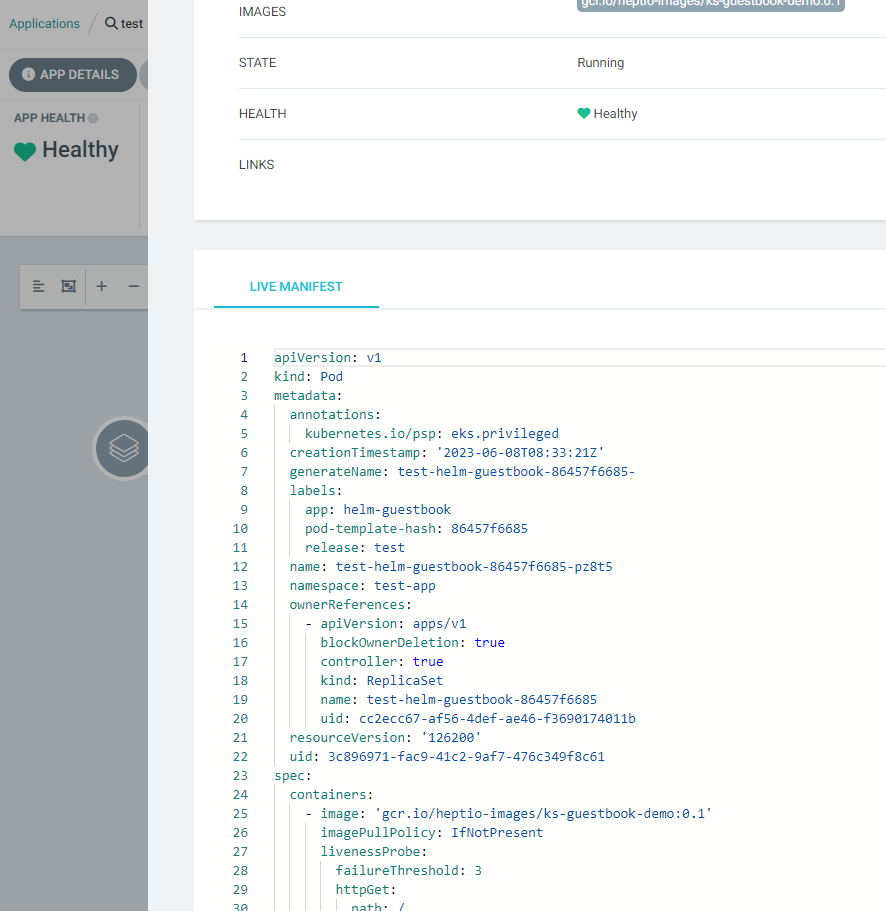

$argocd login <service address> --username admin
WARNING: server certificate had error: x509: certificate is valid for localhost, argocd-server, argocd-server.argocd, argocd-server.argocd.svc, argocd-server.argocd.svc.cluster.local, not a24237612c2a941b094ca876461b508e-579925311.ap-northeast-2.elb.amazonaws.com. Proceed insecurely (y/n)? y
Password: <initial password>
'admin:login' logged in successfully
Context '123456....ap-northeast-2.elb.amazonaws.com' updated

# Add the argocd demo git repo
$argocd repo add https://github.com/argoproj/argocd-example-apps --username eljoelee --insecure-skip-server-verification
Password: ghp_...
Repository 'https://github.com/argoproj/argocd-example-apps' added

# Confirm the repo was added
$argocd repo list
TYPE  NAME  REPO                                             INSECURE  OCI    LFS    CREDS  STATUS      MESSAGE  PROJECT
git         https://github.com/argoproj/argocd-example-apps  true      false  false  true   Successful

# Create the namespace
$kubectl create ns test-app
namespace/test-app created

# Deploy
$cat <<EOF | kubectl apply -n argocd -f -
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: test
spec:
  destination:
    name: ''
    namespace: test-app
    server: 'https://kubernetes.default.svc'
  source:
    path: helm-guestbook
    repoURL: 'https://github.com/argoproj/argocd-example-apps'
    targetRevision: HEAD
  sources: []
  project: default
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
EOF
application.argoproj.io/test created

# Verify the deployment
$kubectl get all -n test-app
NAME                                      READY  STATUS   RESTARTS  AGE
pod/test-helm-guestbook-86457f6685-pz8t5  1/1    Running  0         2m49s

NAME                         TYPE       CLUSTER-IP     EXTERNAL-IP  PORT(S)  AGE
service/test-helm-guestbook  ClusterIP  10.100.74.252  <none>       80/TCP   2m49s

NAME                                 READY  UP-TO-DATE  AVAILABLE  AGE
deployment.apps/test-helm-guestbook  1/1    1           1          2m49s

NAME                                            DESIRED  CURRENT  READY  AGE
replicaset.apps/test-helm-guestbook-86457f6685  1        1        1      2m49s

Flux vs ArgoCD

| Item | Flux | ArgoCD |
|---|---|---|
| UI | O (free and paid versions exist) | O |
| CLI | O | O |
| Notifications | O | O |
| kustomize integration | O | O (kustomize options need customizing) |
| helm integration | O (in development) | O (not integrated with the Helm CLI) |
| Deployment strategies (blue/green, canary) | O (using the open-source Flagger) | O (using the open-source Argo Rollouts) |
| Prune | O | O |
| Manual Sync | O | O |
| Auto Sync | O | O |
| SSO integration | X | O |
| Account/permission management | X | O |
| Metrics | O | O |
| Running Terraform code | O | X |
| Image Updater | O | O |

- Source: 악분's blog