-

목적

- clickhouse db에 대한 특징 및 기능 공유

-

공식 홈페이지

Query billions of rows in milliseconds

ClickHouse is the fastest and most resource efficient open-source database for real-time apps and analytics.

-

Fast Open-Source OLAP DBMS

-

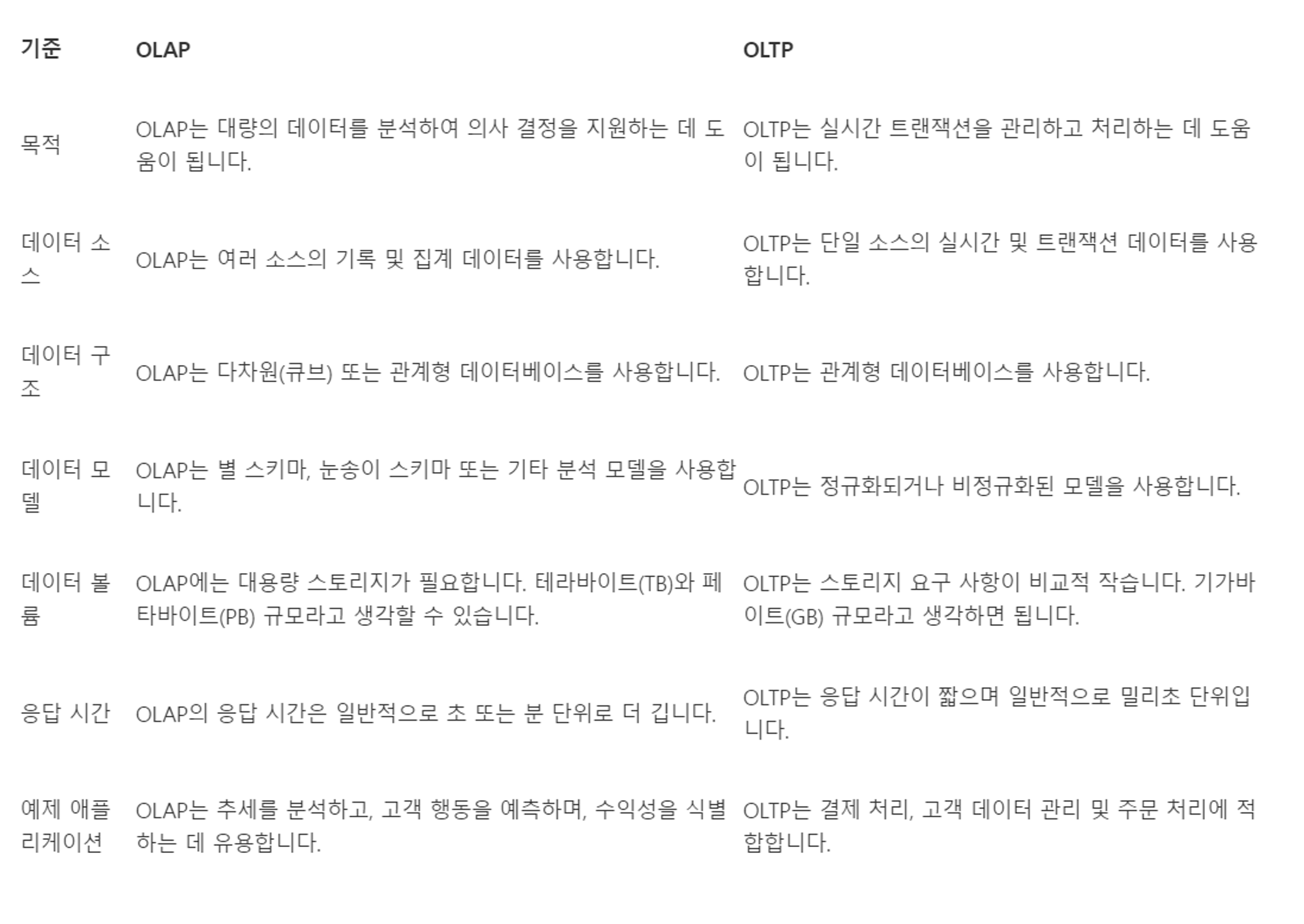

OLAP, OLTP 차이점

- https://aws.amazon.com/ko/compare/the-difference-between-olap-and-oltp/

- 일반적으로(?) 응답시간 OLAP가 더 길다

-

언제 써야 좋을까?

- 트랜잭션(Transaction)이 중요한 application에는 부적합

- 대량의 데이터를 분석

- https://clickhouse.com/use-cases

- Real-time Analytics

- Logs, Events, & Traces

-

clickhouse intro

- https://clickhouse.com/docs/en/intro

- ClickHouse® is a high-performance, column-oriented SQL database management system (DBMS) for online analytical processing (OLAP). It is available as both an open-source software and a cloud offering.

- https://clickhouse.com/docs/en/concepts/why-clickhouse-is-so-fast

- Column-oriented storage

- Indexes

- Data compression

- Vectorized query execution

- Scalability

-

Distinctive Features of ClickHouse

- https://clickhouse.com/docs/en/about-us/distinctive-features

- True Column-Oriented Database Management System

- Data Compression

- Disk Storage of Data

- Parallel Processing on Multiple Cores

- Distributed Processing on Multiple Servers

- SQL Support

- OTLP의 결과와 같지는 않다

- Vector Computation Engine

- Real-Time Data Inserts

- Primary Indexes

- Secondary Indexes

- Suitable for Online Queries

- Support for Approximated Calculations

- Adaptive Join Algorithm

- Data Replication and Data Integrity Support

- Role-Based Access Control

-

tutorial

- https://clickhouse.com/docs/en/tutorial

- sql 문법, 데이터 타입, 테이블 엔진

guides

- https://clickhouse.com/docs/en/guides/creating-tables

CREATE DATABASE IF NOT EXISTS helloworld

CREATE TABLE helloworld.my_first_table

(

user_id UInt32,

message String,

timestamp DateTime,

metric Float32

)

ENGINE = MergeTree()

PRIMARY KEY (user_id, timestamp)INSERT INTO helloworld.my_first_table (user_id, message, timestamp, metric) VALUES

(101, 'Hello, ClickHouse!', now(), -1.0 ),

(102, 'Insert a lot of rows per batch', yesterday(), 1.41421 ),

(102, 'Sort your data based on your commonly-used queries', today(), 2.718 ),

(101, 'Granules are the smallest chunks of data read', now() + 5, 3.14159 )SELECT *

FROM helloworld.my_first_table

ORDER BY timestampSELECT *

FROM helloworld.my_first_table

ORDER BY timestamp

FORMAT TabSeparated- Updating and Deleting ClickHouse Data

-

alter table update

-

https://clickhouse.com/docs/en/sql-reference/statements/alter/update

note

The

ALTER TABLEprefix makes this syntax different from most other systems supporting SQL. It is intended to signify that unlike similar queries in OLTP databases this is a heavy operation not designed for frequent use. -

It is not possible to update columns that are part of the primary or sorting key.

-

alter table delete

-

https://clickhouse.com/docs/en/sql-reference/statements/alter/delete

The

ALTER TABLEprefix makes this syntax different from most other systems supporting SQL. It is intended to signify that unlike similar queries in OLTP databases this is a heavy operation not designed for frequent use.ALTER TABLEis considered a heavyweight operation that requires the underlying data to be merged before it is deleted. For MergeTree tables, consider using theDELETE FROMquery, which performs a lightweight delete and can be considerably faster. -

Lightweight delete

-

- joining tables

advanced guides

- Materialized views

CREATE MATERIALIZED VIEW analytics.monthly_aggregated_data_mv

TO analytics.monthly_aggregated_data

AS

SELECT

toDate(toStartOfMonth(event_time)) AS month,

domain_name,

sumState(count_views) AS sumCountViews

FROM analytics.hourly_data

GROUP BY

domain_name,

month- TTL (time-to-live)

- TTL (time-to-live) refers to the capability of having rows or columns moved, deleted, or rolled up after a certain interval of time has passed

CREATE TABLE example1 (

timestamp DateTime,

x UInt32 TTL now() + INTERVAL 1 MONTH,

y String TTL timestamp + INTERVAL 1 DAY,

z String

)

ENGINE = MergeTree

ORDER BY tuple()- Deduplication Strategies

ClickHouse is built for speed when it comes to data insertion. The storage files are immutable and ClickHouse does not check for an existing primary key before inserting a row-so deduplication involves a bit more effort. This also means that deduplication is not immediate-it is eventual, which has a few side effects:

-

At any moment in time your table can still have duplicates (rows with the same sorting key)

-

The actual removal of duplicate rows occurs during the merging of parts

-

Your queries need to allow for the possibility of duplicates

-

table engine

- https://clickhouse.com/docs/en/engines/table-engines

ENGINE = MergeTree- primary key, partitiong key ..

ReplacingMergeTree- 중복 항목을 제거(실시간 아님)

CollapsingMergeTree- ENGINE = CollapsingMergeTree(sign)

- which can have

1and-1values,1is a “state” row,-1is a “cancel” row.

VersionedCollapsingMergeTree- VersionedCollapsingMergeTree(sign, version)

-

Dictionaries

-

Lightweight Update

note

Lightweight update is only available on ClickHouse Cloud. -

Lightweight Delete

- Lightweight

DELETEdoes not delete data from storage immediately

- Lightweight

-

Transactional (ACID) support

- https://clickhouse.com/docs/en/guides/developer/transactional

- 제약 조건이 많다, 이 부분이 중요하면 OLTP

-

영환ES

-

RDBMS → b-tree

-

ClickHosue → spares index

-

인덱스 추가는 중간에 할 수 없다

- 이를 극복 할 수 있는 3가지 옵션

- 테이블 2개 만든 후, read 목적에 맞게 사용

- insert 2 테이블, 양쪽으로 2개

- 뷰

- 옵션이 있다

- 기존 거 포함 또는 신규 만등

- order 다르게 가능, 칼럼에 통계 함수 사용 가능

- insert 1 테이블

- 옵션이 있다

- 인덱스와 비슷한 걸 만들어서 사용할 수 있다

- 프로젝션(?)

- insert, select를 동일 테이블에 사용 가능

- 테이블 2개 만든 후, read 목적에 맞게 사용

- 이를 극복 할 수 있는 3가지 옵션

-

기존 생성 테이블에, 칼럼이 늘어나는 경우(?)

-

메모리가 부족하면, 에러 발생

- primary key

-

skip index

- rdb의 인덱스와는 다르다

- type

-

Best Practices

- async insert

- [async, wait]

- 1초 추천

- async insert

-

자 그러면 rdb

- rdb 의 range index를 사용하면 어떨까?

- 그래서 rdb도 로그 데이터를 저장할 때는 인덱스를 안잡는다

- 마이그할때도 안잡는다

-

experimental 옵션은 프러덕션에 사용하지 말아라

- use with caution