Prerequisites

- First, deploy the one-click template that provisions the EKS cluster, then set the variables.

# Download the YAML file

curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/K8S/eks-oneclick.yaml

# Deploy the CloudFormation stack

# aws cloudformation deploy --template-file eks-oneclick.yaml --stack-name myeks --parameter-overrides KeyName=<My SSH Keyname> SgIngressSshCidr=<My Home Public IP Address>/32 MyIamUserAccessKeyID=<IAM user access key ID> MyIamUserSecretAccessKey=<IAM user secret access key> ClusterBaseName='<EKS cluster name>' --region ap-northeast-2

Example: aws cloudformation deploy --template-file eks-oneclick.yaml --stack-name myeks --parameter-overrides KeyName=kp-gasida SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32 MyIamUserAccessKeyID=AKIA5... MyIamUserSecretAccessKey='CVNa2...' ClusterBaseName=myeks --region ap-northeast-2

<SSH access>

ssh -i ~/.ssh/Your.pem ec2-user@$(aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text)

- Set variables

# Check the node IPs and store each node's private IP in a variable

N1=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2a -o jsonpath={.items[0].status.addresses[0].address})

N2=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2b -o jsonpath={.items[0].status.addresses[0].address})

N3=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2c -o jsonpath={.items[0].status.addresses[0].address})

echo "export N1=$N1" >> /etc/profile

echo "export N2=$N2" >> /etc/profile

echo "export N3=$N3" >> /etc/profile

echo $N1, $N2, $N3

# Add a rule to the node security group so the eksctl host (bastion) can reach the nodes (pods)

NGSGID=$(aws ec2 describe-security-groups --filters Name=group-name,Values=*ng1* --query "SecurityGroups[*].[GroupId]" --output text)

echo "export NGSGID=$NGSGID" >> /etc/profile

aws ec2 authorize-security-group-ingress --group-id $NGSGID --protocol '-1' --cidr 192.168.1.100/32

# SSH into the worker nodes ('-i ~/.ssh/id_rsa' can be omitted)

for i in $N1 $N2 $N3; do echo ">> node $i <<"; ssh ec2-user@$i hostname; echo; done

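# On the first connection, answer "yes" to each node's host-key prompt

yes

yes

yes

# Run the loop again to confirm it now connects without prompting

for i in $N1 $N2 $N3; do echo ">> node $i <<"; ssh ec2-user@$i hostname; echo; done

1. AWS VPC CNI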

- A Kubernetes CNI (Container Network Interface) plugin is what configures the network environment for Kubernetes.

- Among the many CNIs, AWS EKS uses the AWS VPC CNI.

- The biggest advantage of the AWS VPC CNI is that pods get IPs from the same range as the VPC, so they can communicate directly.

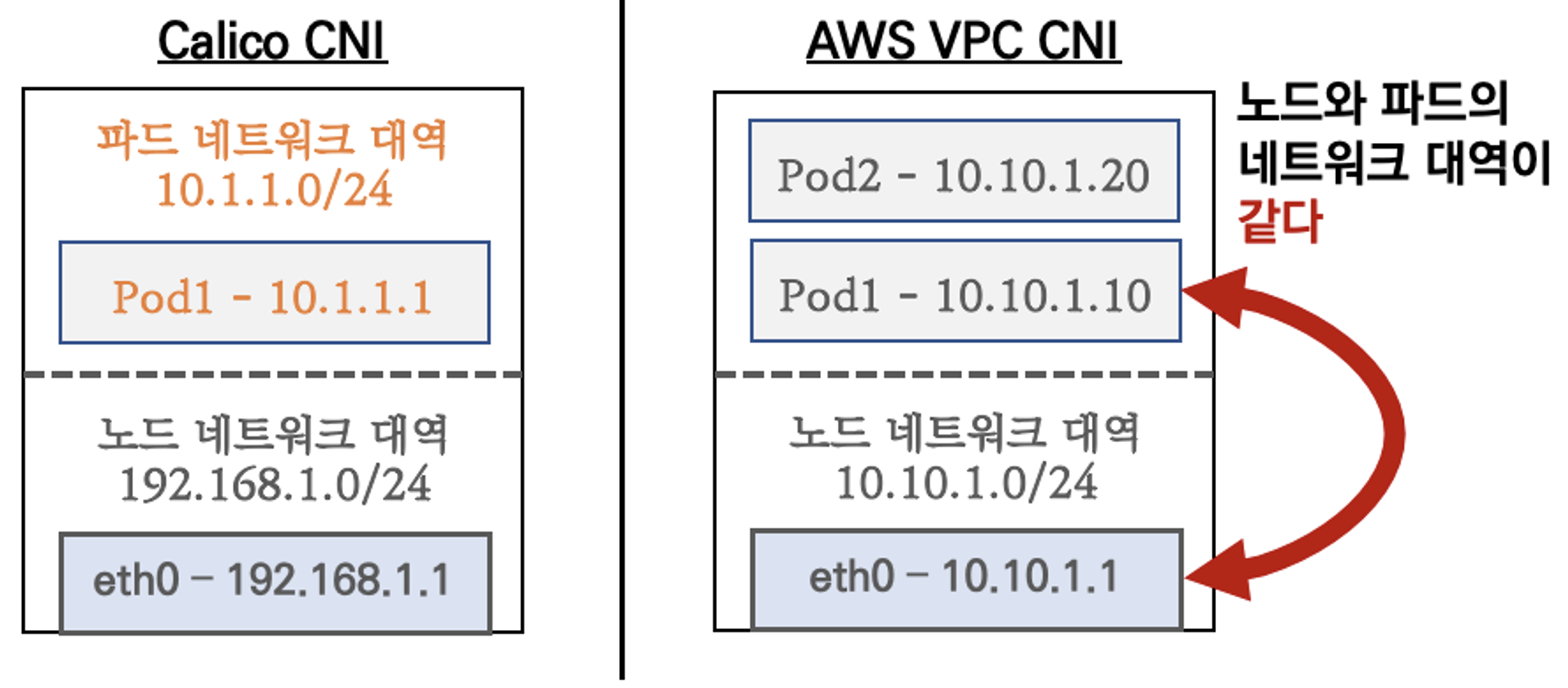

대표적인 calico CNI 를 예로 들자면 Calico CNI 는 Host Network 와 Pod 의 IP 대역이 다릅니다.

- 아래는 Calico CNI 와 AWS VPC CNI 비교 입니다.

- AWS VPC CNI는 네트워크 통신의 최적화 성능과 레이턴시를 위해서 노드와 파드의 네트워크 대역을 동일하게 설정합니다.

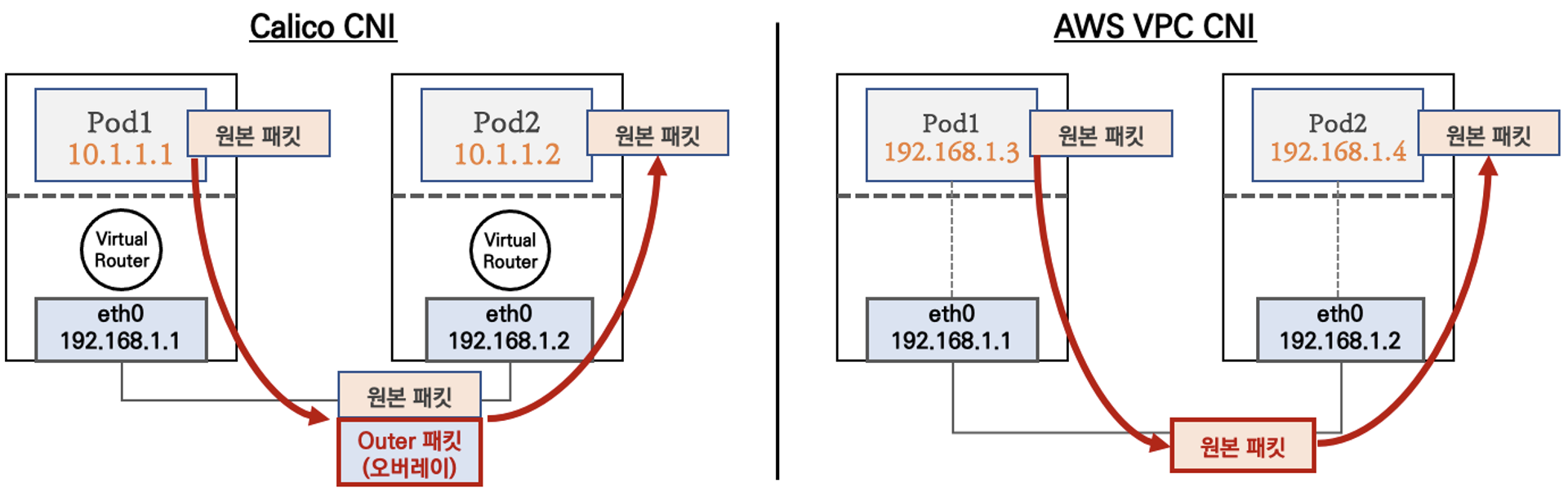

- 파드간 통신 시 일반적으로 K8S CNI는 오버레이(VXLAN, IP-IP 등) 통신을 하고, AWS VPC CNI는 동일 대역으로 직접 통신을 합니다.

- 즉, Calico CNI 는 IP 변환 작업이 있으니 당연히 통신 포인트가 하나 늘어난 것입니다.

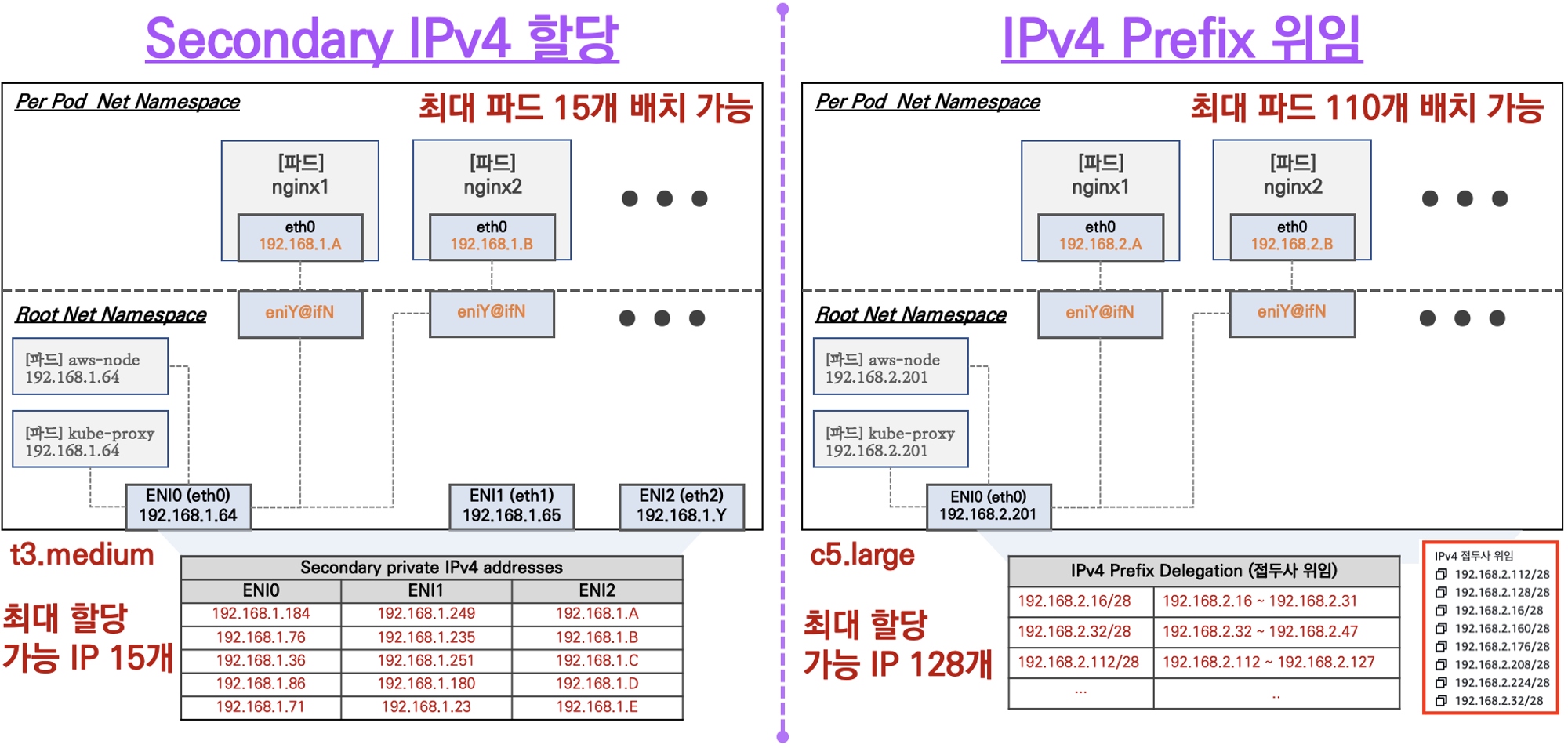

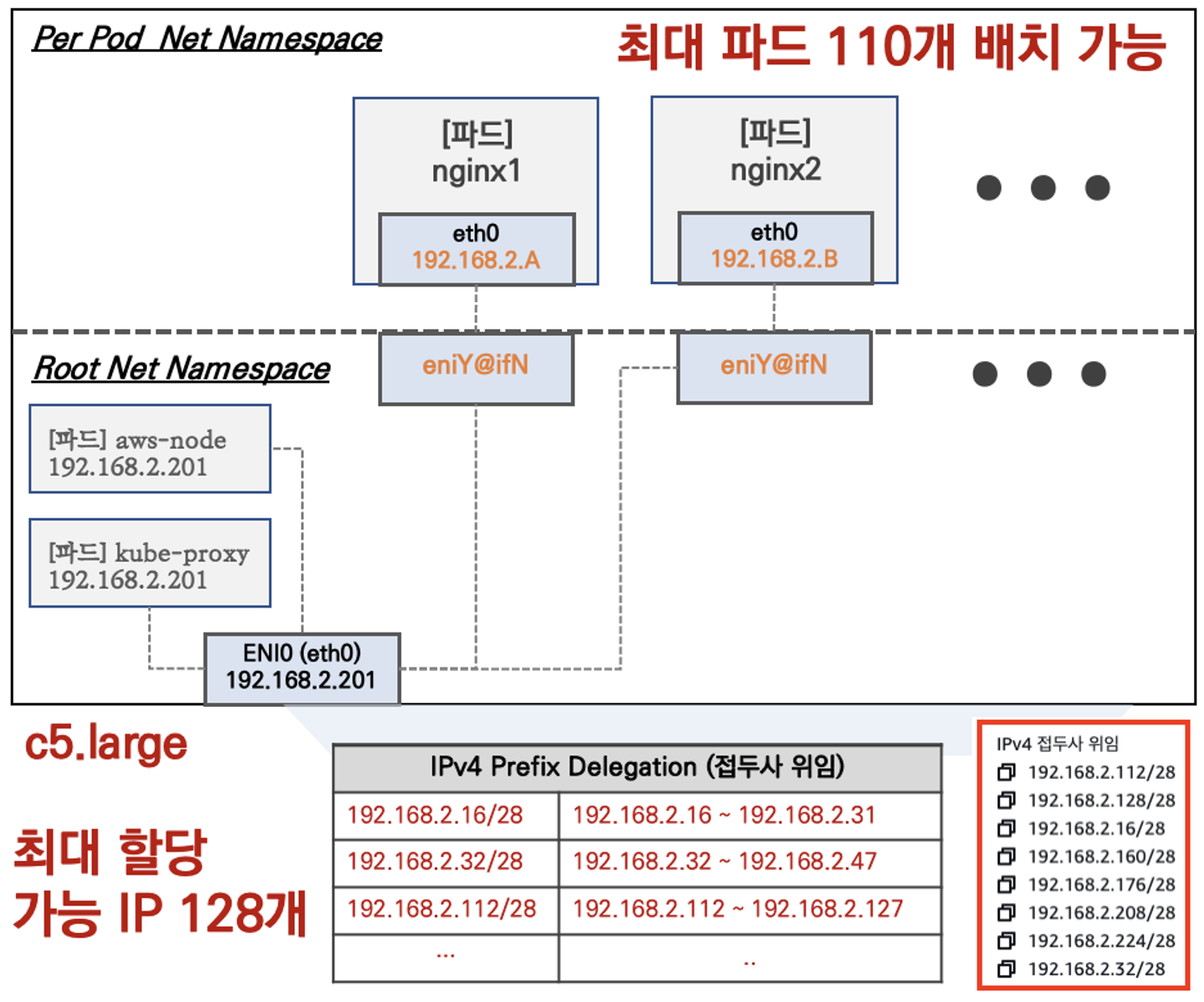

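- EKS also limits the maximum number of pods per node based on the node's instance type.

- A t3.medium can have up to 3 ENIs attached, and each ENI can hold 5 secondary IPs (kube-proxy and aws-node on each node use the node's IP and are not counted).

- With the AWS VPC CNI's IPv4 prefix delegation feature, however, IP prefixes (subnets) rather than individual IPs are assigned to the ENIs, and the maximum becomes the smaller of the number of assignable IPs and the maximum recommended for the instance type.

- Now let's check the basic network information.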

# Check the CNI information

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# kubectl describe daemonset aws-node --namespace kube-system | grep Image | cut -d "/" -f 2

amazon-k8s-cni-init:v1.16.4-eksbuild.2

amazon-k8s-cni:v1.16.4-eksbuild.2

amazon

# Check the node IPs

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table

--------------------------------------------------------------------

| DescribeInstances |

+-------------------+-----------------+-----------------+----------+

| InstanceName | PrivateIPAdd | PublicIPAdd | Status |

+-------------------+-----------------+-----------------+----------+

| myeks-ng1-Node | 192.168.3.66 | 13.124.234.76 | running |

| myeks-bastion-EC2| 192.168.1.100 | 3.34.96.93 | running |

| myeks-ng1-Node | 192.168.1.127 | 52.79.190.19 | running |

| myeks-ng1-Node | 192.168.2.136 | 43.202.158.34 | running |

+-------------------+-----------------+-----------------+----------+

# Check the pod IPs

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# kubectl get pod -n kube-system -o=custom-columns=NAME:.metadata.name,IP:.status.podIP,STATUS:.status.phase

NAME IP STATUS

aws-node-kwln5 192.168.1.127 Running

aws-node-lc8ck 192.168.2.136 Running

aws-node-lmdnj 192.168.3.66 Running

coredns-55474bf7b9-4vh8h 192.168.3.183 Running

coredns-55474bf7b9-7nmkq 192.168.1.48 Running

kube-proxy-2w694 192.168.2.136 Running

kube-proxy-5v5wj 192.168.3.66 Running

kube-proxy-qj87g 192.168.1.127 Running

The outputs show that the nodes' IPs (192.168.x.x) and the pods' IPs come from the same range.
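To make the "same range" observation concrete, here is a small bash sketch (an illustration, not part of the original setup) that checks whether a pod IP sits in the same /24 subnet as its node. It assumes /24 node subnets, as `ip -br addr` reports further down (e.g. 192.168.1.127/24), and that custom networking is off, so the VPC CNI attaches extra ENIs in the node's own subnet. The pod/node pairs are the two coredns pods from the `kubectl get pod -owide` output later in this post; `subnet24` and `same_subnet24` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: does each pod IP share the /24 subnet of the node it runs on?
# Assumption: node subnets are /24 and custom networking is not enabled.

# subnet24 IP -> the /24 network the address belongs to
subnet24() {
  echo "${1%.*}.0/24"
}

# same_subnet24 IP_A IP_B -> exit status 0 if both addresses are in the same /24
same_subnet24() {
  [ "$(subnet24 "$1")" = "$(subnet24 "$2")" ]
}

# pod IP, node IP (coredns pods from the -owide output)
while read -r pod node; do
  if same_subnet24 "$pod" "$node"; then
    echo "$pod shares $(subnet24 "$node") with node $node"
  else
    echo "$pod is NOT in the subnet of node $node"
  fi
done <<'EOF'
192.168.1.48 192.168.1.127
192.168.3.183 192.168.3.66
EOF
```

The string trim only works because the prefix is octet-aligned; for arbitrary prefix lengths a bitmask comparison is needed instead.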

- Next, let's look at the nodes' network information.

- eniY is the host-side veth interface paired with a pod's network namespace.

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# for i in $N1 $N2 $N3; do echo ">> node $i <<"; ssh ec2-user@$i tree /var/log/aws-routed-eni; echo; done

>> node 192.168.1.127 <<

/var/log/aws-routed-eni

├── ebpf-sdk.log

├── egress-v6-plugin.log

├── ipamd.log

├── network-policy-agent.log

└── plugin.log

0 directories, 5 files

>> node 192.168.2.136 <<

/var/log/aws-routed-eni

├── ebpf-sdk.log

├── egress-v6-plugin.log

├── ipamd.log

├── network-policy-agent.log

└── plugin.log

0 directories, 5 files

>> node 192.168.3.66 <<

/var/log/aws-routed-eni

├── ebpf-sdk.log

├── egress-v6-plugin.log

├── ipamd.log

├── network-policy-agent.log

└── plugin.log

0 directories, 5 files

-------------------------------

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# for i in $N1 $N2 $N3; do echo ">> node $i <<"; ssh ec2-user@$i sudo ip -br -c addr; echo; done

>> node 192.168.1.127 <<

lo UNKNOWN 127.0.0.1/8 ::1/128

eth0 UP 192.168.1.127/24 fe80::a0:76ff:fe13:2c21/64

enibee2fe18d14@if3 UP fe80::84e7:bff:fec4:a744/64

eth1 UP 192.168.1.88/24 fe80::a4:12ff:fe9b:cfe5/64

>> node 192.168.2.136 <<

lo UNKNOWN 127.0.0.1/8 ::1/128

eth0 UP 192.168.2.136/24 fe80::40c:afff:fe72:b705/64

>> node 192.168.3.66 <<

lo UNKNOWN 127.0.0.1/8 ::1/128

eth0 UP 192.168.3.66/24 fe80::8de:bfff:fe8c:97c5/64

eni95b4950f11c@if3 UP fe80::f8ca:3fff:fe57:c9fa/64

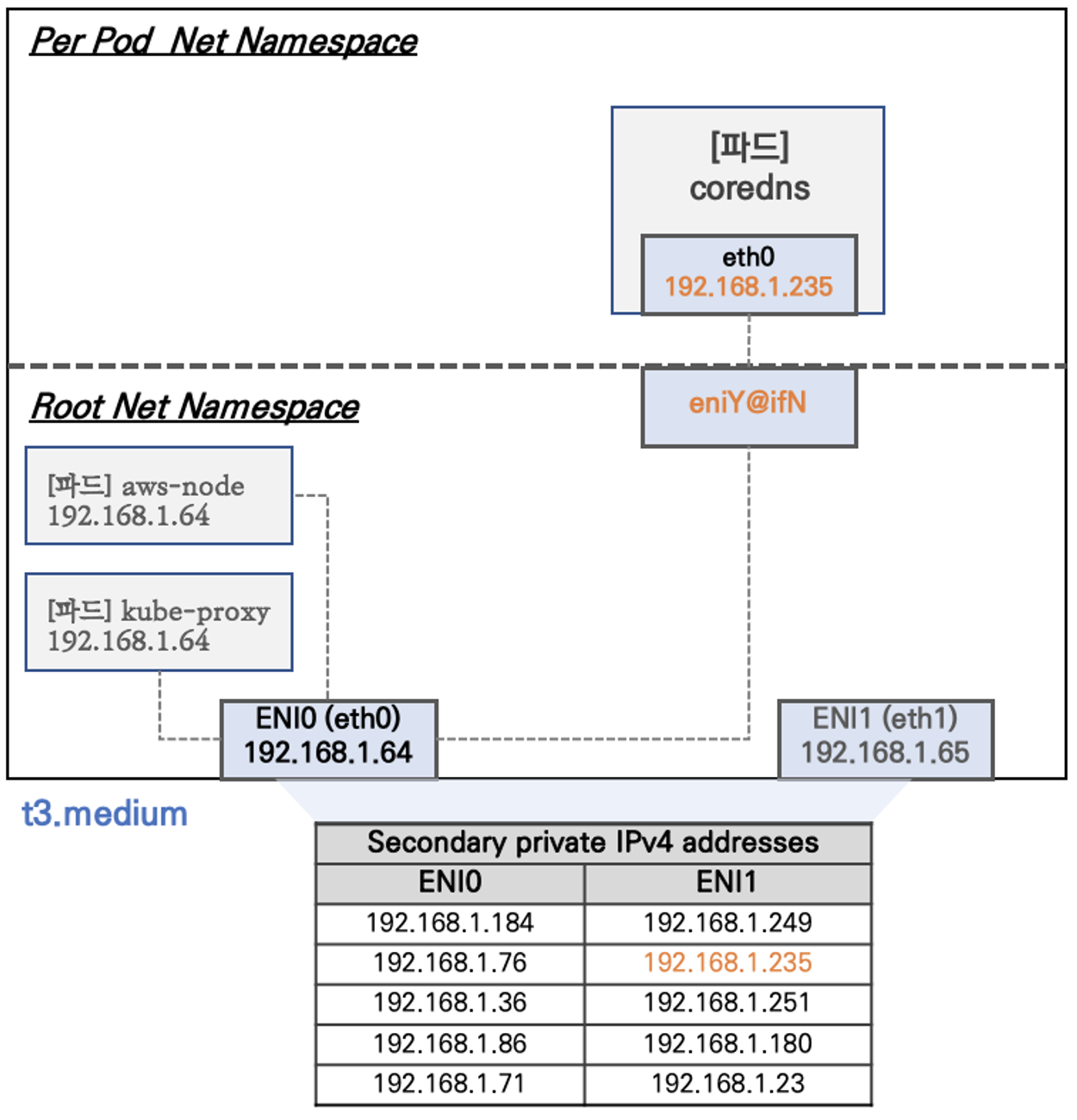

eth1 UP 192.168.3.75/24 fe80::8b4:57ff:fe92:4087/642. Node 의 기본 네트워크 정보

- Network namespaces are separated between the host and each pod.

- However, some pods use the host's (root namespace's) IP, i.e. the ENI's IP, as-is:

- kube-proxy

- aws-node

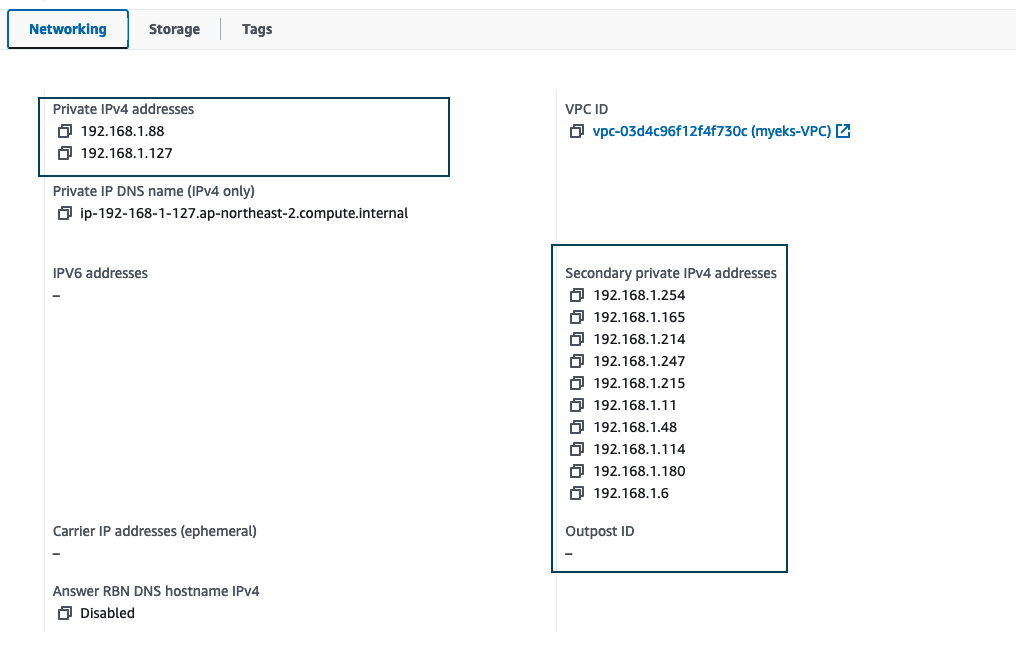

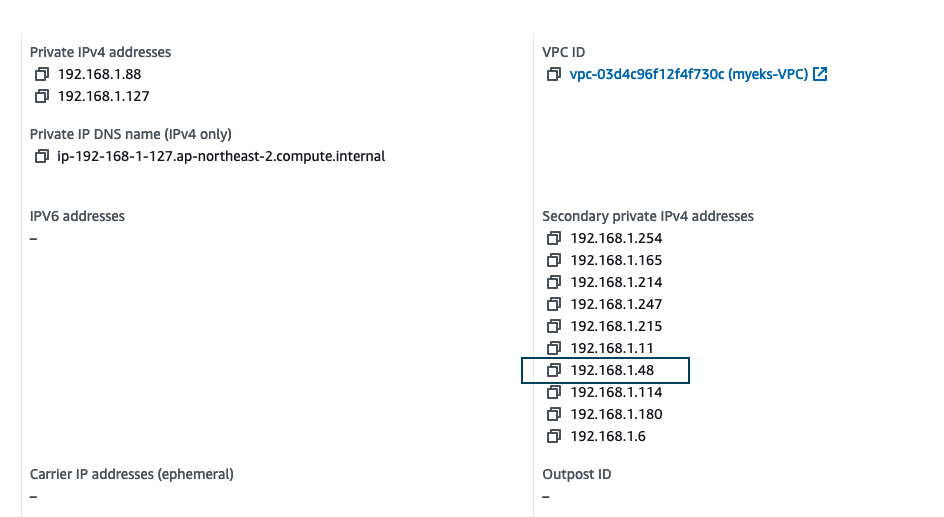

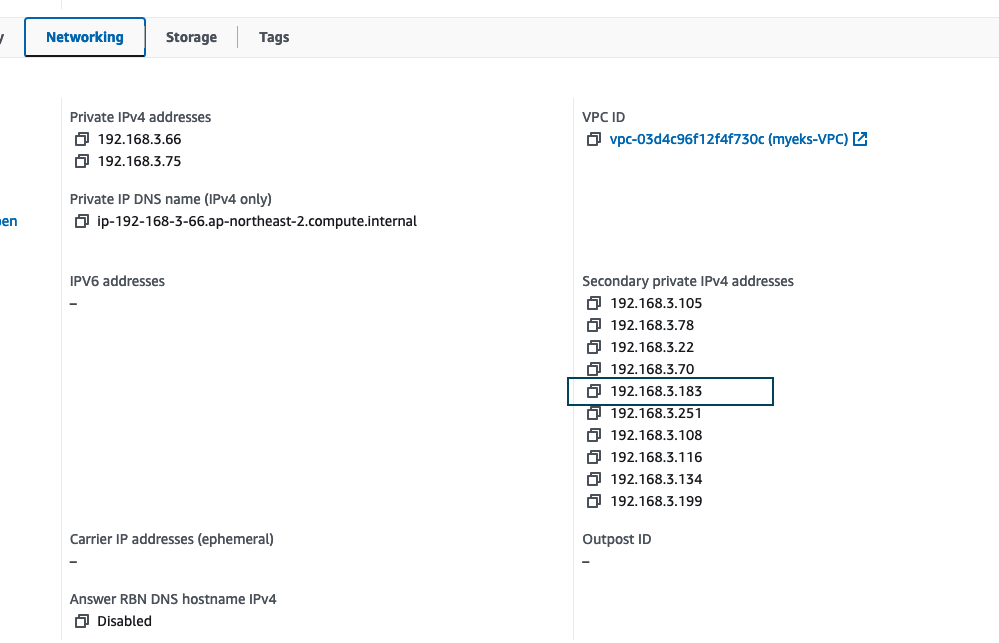

Private IPv4 and secondary private IPv4 addresses

- coredns pods: 192.168.3.183 and 192.168.1.48

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# kubectl get pod -n kube-system -l k8s-app=kube-dns -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-55474bf7b9-4vh8h 1/1 Running 0 72m 192.168.3.183 ip-192-168-3-66.ap-northeast-2.compute.internal <none> <none>

coredns-55474bf7b9-7nmkq 1/1 Running 0 72m 192.168.1.48 ip-192-168-1-127.ap-northeast-2.compute.internal <none> <none>

- Node 1 (192.168.1.127): coredns 192.168.1.48

- Node 3 (192.168.3.66): coredns 192.168.3.183

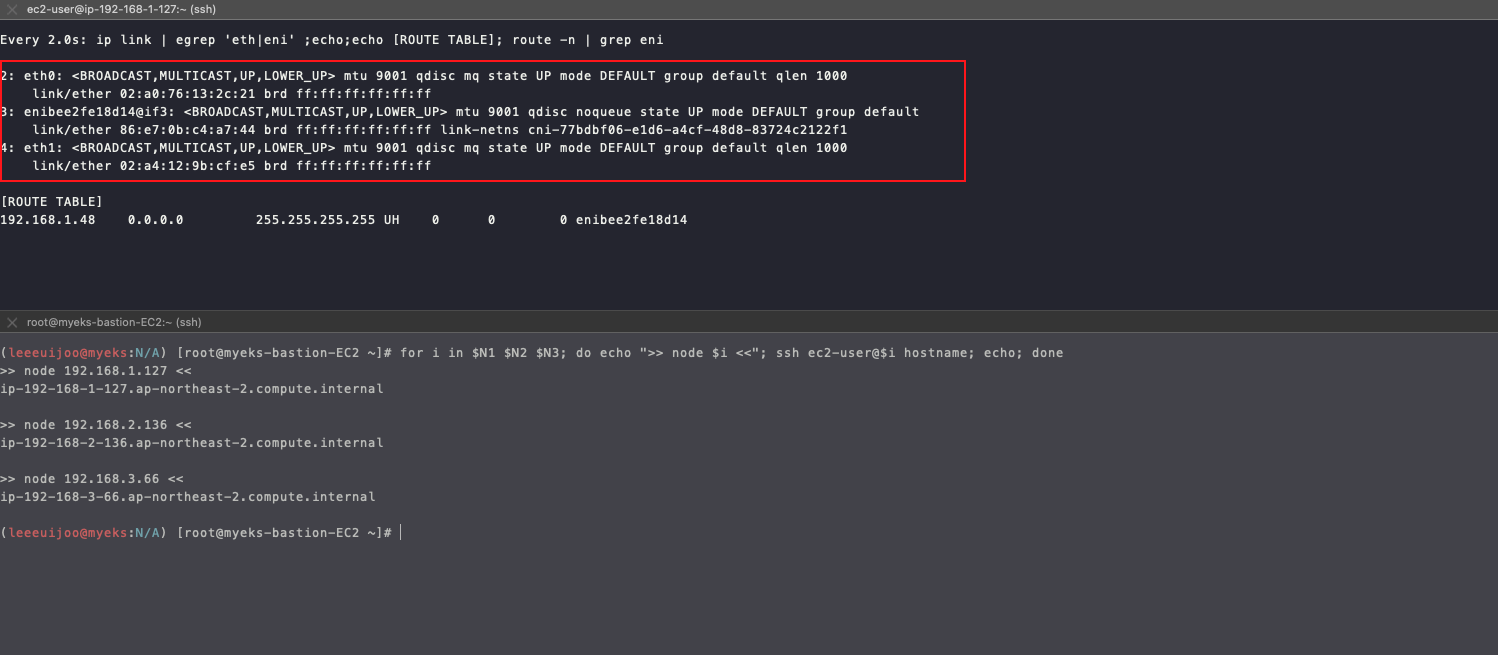

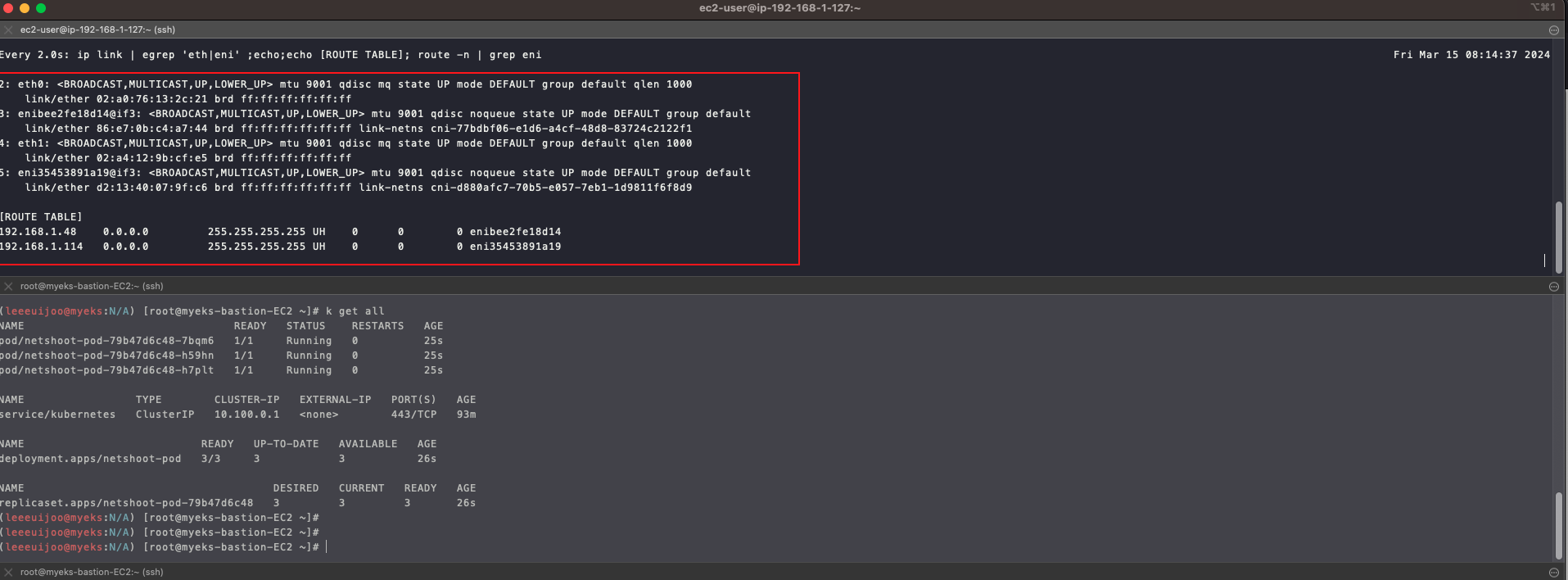

- Now let's create new pods while connected to a node and monitor its interface information.

- We will monitor Node 1 only.

ssh ec2-user@$N1

watch -d "ip link | egrep 'eth|eni' ;echo;echo '[ROUTE TABLE]'; route -n | grep eni"

# Create the test pods (netshoot-pod)

cat <<EOF | kubectl create -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: netshoot-pod
spec:
  replicas: 3
  selector:
    matchLabels:
      app: netshoot-pod
  template:
    metadata:
      labels:
        app: netshoot-pod
    spec:
      containers:
      - name: netshoot-pod
        image: nicolaka/netshoot
        command: ["tail"]
        args: ["-f", "/dev/null"]
      terminationGracePeriodSeconds: 0
EOF

- You can see that one new route has been added to the route table.

- IP: 192.168.1.114

# Look up the pod IPs

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# kubectl get pod -o=custom-columns=NAME:.metadata.name,IP:.status.podIP

NAME IP

netshoot-pod-79b47d6c48-7bqm6 192.168.2.8

netshoot-pod-79b47d6c48-h59hn 192.168.3.105

netshoot-pod-79b47d6c48-h7plt 192.168.1.114

- When a pod is created, an eniY@ifN interface is added on the worker node and a matching entry is added to the routing table.

- Let's check the eniY information for the test pod.
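The eniY names look random, but they appear to be derived from the pod's identity. The sketch below assumes, based on my reading of the amazon-vpc-cni-k8s source, that the host-side name is the default veth prefix "eni" plus the first 11 hex characters of SHA-1 over "<namespace>.<pod name>". Treat this as an assumption that may differ between CNI versions; `host_veth_name` is a hypothetical helper, and it relies on `sha1sum` from coreutils.

```shell
#!/usr/bin/env bash
# Sketch: derive the host-side veth name for a pod.
# Assumption: name = "eni" + first 11 hex chars of sha1("<namespace>.<pod>").

host_veth_name() {
  local namespace=$1 pod=$2
  local digest
  # Hash "<namespace>.<pod>" (no trailing newline) and keep 11 hex characters
  digest=$(printf '%s.%s' "$namespace" "$pod" | sha1sum | cut -c1-11)
  echo "eni${digest}"
}

# Test pod scheduled on Node 1, in the default namespace
host_veth_name default netshoot-pod-79b47d6c48-h7plt
```

If the assumption holds, the printed name should match one of the eni interfaces listed by `ip -br -c addr show` on Node 1. The 14-character result also stays within Linux's 15-character interface-name limit.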

# Check the network interfaces on Node 1

[ec2-user@ip-192-168-1-127 ~]$ ip -br -c addr show

lo UNKNOWN 127.0.0.1/8 ::1/128

eth0 UP 192.168.1.127/24 fe80::a0:76ff:fe13:2c21/64

enibee2fe18d14@if3 UP fe80::84e7:bff:fec4:a744/64

eth1 UP 192.168.1.88/24 fe80::a4:12ff:fe9b:cfe5/64

eni35453891a19@if3 UP fe80::d013:40ff:fe07:9fc6/64

# Print the PID of the most recently created network namespace

[ec2-user@ip-192-168-1-127 ~]$ sudo lsns -o PID,COMMAND -t net | awk 'NR>2 {print $1}' | tail -n 1

38315

# Store that PID (network-type namespaces only, -t net) in a variable

[ec2-user@ip-192-168-1-127 ~]$ MyPID=$(sudo lsns -o PID,COMMAND -t net | awk 'NR>2 {print $1}' | tail -n 1)

# Use the PID to inspect the pod's network namespace

[ec2-user@ip-192-168-1-127 ~]$ sudo nsenter -t $MyPID -n ip -c route

default via 169.254.1.1 dev eth0

169.254.1.1 dev eth0 scope link

[ec2-user@ip-192-168-1-127 ~]$ sudo nsenter -t $MyPID -n ip -c addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

3: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc noqueue state UP group default

link/ether 56:34:8e:ae:ed:24 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.1.114/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::5434:8eff:feae:ed24/64 scope link

valid_lft forever preferred_lft forever

- Let's exec into a test pod and look at its network information.

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# kubectl exec -it $PODNAME1 -- zsh

dP dP dP

88 88 88

88d888b. .d8888b. d8888P .d8888b. 88d888b. .d8888b. .d8888b. d8888P

88' `88 88ooood8 88 Y8ooooo. 88' `88 88' `88 88' `88 88

88 88 88. ... 88 88 88 88 88. .88 88. .88 88

dP dP `88888P' dP `88888P' dP dP `88888P' `88888P' dP

Welcome to Netshoot! (github.com/nicolaka/netshoot)

Version: 0.12

netshoot-pod-79b47d6c48-7bqm6 ~

netshoot-pod-79b47d6c48-7bqm6 ~ ip -c addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

3: eth0@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc noqueue state UP group default

link/ether 66:93:b7:ac:af:a8 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.2.8/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::6493:b7ff:feac:afa8/64 scope link

valid_lft forever preferred_lft forever

- The pod has the IP 192.168.2.8/32.

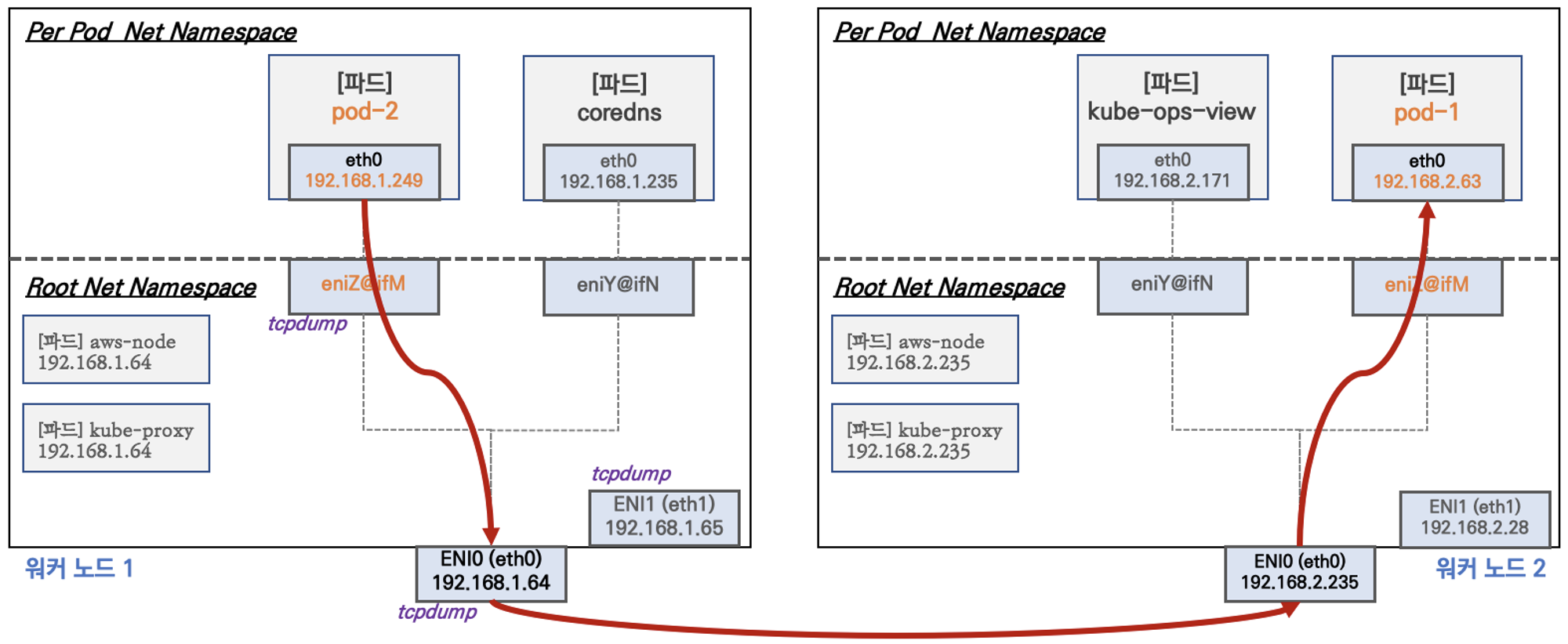

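3. Pod-to-Pod Communication Across Nodes

- Let's check the tcpdump output during pod-to-pod communication to understand the traffic flow.

- Pod-to-pod traffic flow: with the AWS VPC CNI, pods communicate directly over the AWS VPC without any overlay technology.

- To test pod-to-pod communication, store the pod information in variables.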

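- Pod IPs

# Set variables (PODNAME1-3 are used by the kubectl exec commands)

PODNAME1=$(kubectl get pod -l app=netshoot-pod -o jsonpath={.items[0].metadata.name})

PODNAME2=$(kubectl get pod -l app=netshoot-pod -o jsonpath={.items[1].metadata.name})

PODNAME3=$(kubectl get pod -l app=netshoot-pod -o jsonpath={.items[2].metadata.name})

PODIP1=$(kubectl get pod -l app=netshoot-pod -o jsonpath={.items[0].status.podIP})

PODIP2=$(kubectl get pod -l app=netshoot-pod -o jsonpath={.items[1].status.podIP})

PODIP3=$(kubectl get pod -l app=netshoot-pod -o jsonpath={.items[2].status.podIP})

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# echo $PODIP1

192.168.2.8

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# echo $PODIP2

192.168.3.105

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# echo $PODIP3

192.168.1.114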

# Ping TEST

# ICMP test between pods

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# kubectl exec -it $PODNAME1 -- ping -c 2 $PODIP2

PING 192.168.3.105 (192.168.3.105) 56(84) bytes of data.

64 bytes from 192.168.3.105: icmp_seq=1 ttl=125 time=1.94 ms

64 bytes from 192.168.3.105: icmp_seq=2 ttl=125 time=1.24 ms

--- 192.168.3.105 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 1.235/1.589/1.944/0.354 ms

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# k exec -it $PODNAME2 -- ping -c 2 $PODIP3

PING 192.168.1.114 (192.168.1.114) 56(84) bytes of data.

64 bytes from 192.168.1.114: icmp_seq=1 ttl=125 time=1.73 ms

64 bytes from 192.168.1.114: icmp_seq=2 ttl=125 time=1.28 ms

--- 192.168.1.114 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 1.284/1.505/1.726/0.221 ms

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# k exec -it $PODNAME3 -- ping -c 2 $PODIP1

PING 192.168.2.8 (192.168.2.8) 56(84) bytes of data.

64 bytes from 192.168.2.8: icmp_seq=1 ttl=125 time=1.43 ms

64 bytes from 192.168.2.8: icmp_seq=2 ttl=125 time=0.764 ms

--- 192.168.2.8 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 0.764/1.098/1.433/0.334 ms

- Check TCPDUMP on the worker node

- Bastion host terminal

# In another terminal (bastion host)

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# kubectl exec -it $PODNAME3 -- ping -c 2 $PODIP1

PING 192.168.2.8 (192.168.2.8) 56(84) bytes of data.

64 bytes from 192.168.2.8: icmp_seq=1 ttl=125 time=0.972 ms

64 bytes from 192.168.2.8: icmp_seq=2 ttl=125 time=0.927 ms

--- 192.168.2.8 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 0.927/0.949/0.972/0.022 ms

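- This sends ICMP packets.

- Monitor from a terminal on the worker node

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# ssh ec2-user@$N1

[ec2-user@ip-192-168-1-127 ~]$ sudo tcpdump -i any -nn icmp

- ICMP packet contents

- The source and destination addresses are the pod IPs themselves: the packets travel directly from pod to pod with no address translation or overlay encapsulation in between (each packet appears twice because -i any captures it on more than one interface of the node).

- Pod1's IP is 192.168.2.8 and Pod3's IP is 192.168.1.114.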

[ec2-user@ip-192-168-1-127 ~]$ sudo tcpdump -i any -nn icmp

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on any, link-type LINUX_SLL (Linux cooked), capture size 262144 bytes

11:03:41.555696 IP 192.168.1.114 > 192.168.2.8: ICMP echo request, id 18, seq 1, length 64

11:03:41.555867 IP 192.168.1.114 > 192.168.2.8: ICMP echo request, id 18, seq 1, length 64

11:03:41.556634 IP 192.168.2.8 > 192.168.1.114: ICMP echo reply, id 18, seq 1, length 64

11:03:41.556654 IP 192.168.2.8 > 192.168.1.114: ICMP echo reply, id 18, seq 1, length 64

11:03:42.556761 IP 192.168.1.114 > 192.168.2.8: ICMP echo request, id 18, seq 2, length 64

11:03:42.556803 IP 192.168.1.114 > 192.168.2.8: ICMP echo request, id 18, seq 2, length 64

11:03:42.557645 IP 192.168.2.8 > 192.168.1.114: ICMP echo reply, id 18, seq 2, length 64

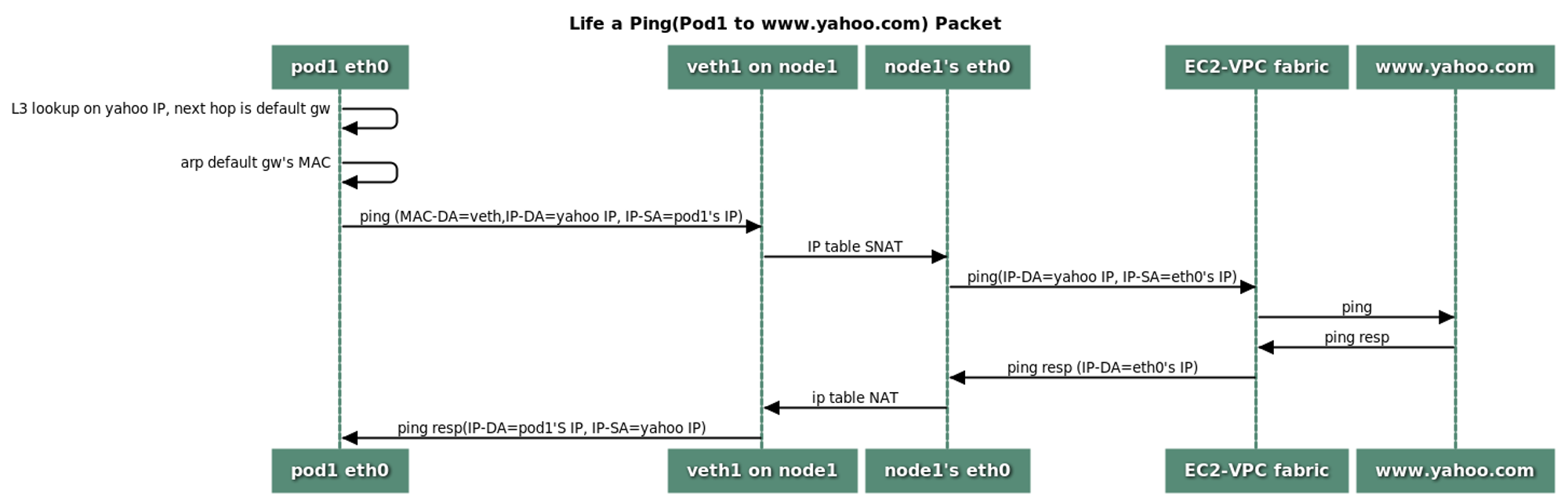

11:03:42.557667 IP 192.168.2.8 > 192.168.1.114: ICMP echo reply, id 18, seq 2, length 644. Pod 에서의 외부통신

- Pods reach the outside world because an iptables SNAT rule rewrites their source address to the node's eth0 IP.

- Use TCPDUMP on the worker node to see how the packets move.
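The two AWS-SNAT-CHAIN-0 rules printed at the end of this section boil down to a simple decision: traffic to the VPC CIDR keeps the pod IP, everything else is SNATed to the node IP. The bash sketch below models that decision. It is a simplification (it ignores the `! -o vlan+` and `! --dst-type LOCAL` matches) and assumes the VPC CIDR is 192.168.0.0/16, as in the first rule; `ip_to_int`, `in_cidr`, and `snat_decision` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Simplified model of AWS-SNAT-CHAIN-0:
#   1) destination inside 192.168.0.0/16 (VPC CIDR) -> RETURN, no SNAT
#   2) anything else                                -> SNAT to the node's IP

# ip_to_int A.B.C.D -> 32-bit integer
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_cidr IP NET/BITS -> exit status 0 if IP is inside the block
in_cidr() {
  local net=${2%/*} bits=${2#*/}
  local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "$net") & mask) ))
}

# snat_decision DST_IP NODE_IP
snat_decision() {
  if in_cidr "$1" 192.168.0.0/16; then
    echo "RETURN"
  else
    echo "SNAT --to-source $2"
  fi
}

snat_decision 192.168.1.114 192.168.2.136    # pod-to-pod: prints RETURN
snat_decision 142.250.206.196 192.168.2.136  # ping www.google.com: prints SNAT --to-source 192.168.2.136
```

This is why the tcpdump on the node shows pod IPs for pod-to-pod traffic, while the external ping leaves with the node's address (and ultimately its public IP).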

# Bastion EC2: ping an external host from pod-1's shell

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# kubectl exec -it $PODNAME1 -- ping -c 1 www.google.com

PING www.google.com (142.250.206.196) 56(84) bytes of data.

64 bytes from kix07s07-in-f4.1e100.net (142.250.206.196): icmp_seq=1 ttl=104 time=28.2 ms

--- www.google.com ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 28.234/28.234/28.234/0.000 ms

# Worker node EC2: check with TCPDUMP

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# ssh ec2-user@$N2

Last login: Fri Mar 15 11:21:51 2024 from ip-192-168-1-100.ap-northeast-2.compute.internal

# Check the nodes' public IP addresses

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# for i in $N1 $N2 $N3; do echo ">> node $i <<"; ssh ec2-user@$i curl -s ipinfo.io/ip; echo; echo; done

>> node 192.168.1.127 <<

52.79.190.19

>> node 192.168.2.136 <<

43.202.158.34

>> node 192.168.3.66 <<

13.124.234.76

# When a pod talks to the outside, its traffic is SNATed by the 'AWS-SNAT-CHAIN-0' rules below

[ec2-user@ip-192-168-2-136 ~]$ sudo iptables -t nat -S | grep 'A AWS-SNAT-CHAIN'

-A AWS-SNAT-CHAIN-0 -d 192.168.0.0/16 -m comment --comment "AWS SNAT CHAIN" -j RETURN

-A AWS-SNAT-CHAIN-0 ! -o vlan+ -m comment --comment "AWS, SNAT" -m addrtype ! --dst-type LOCAL -j SNAT --to-source 192.168.2.136 --random-fully

5. Limit on the Number of Pods per Node

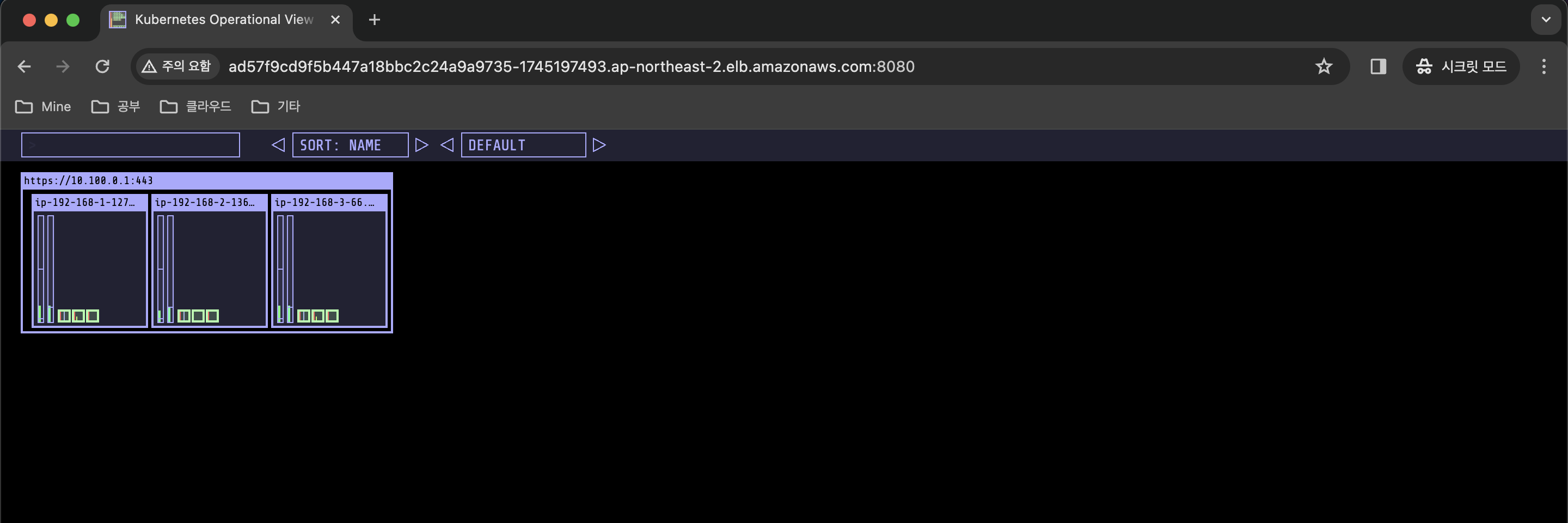

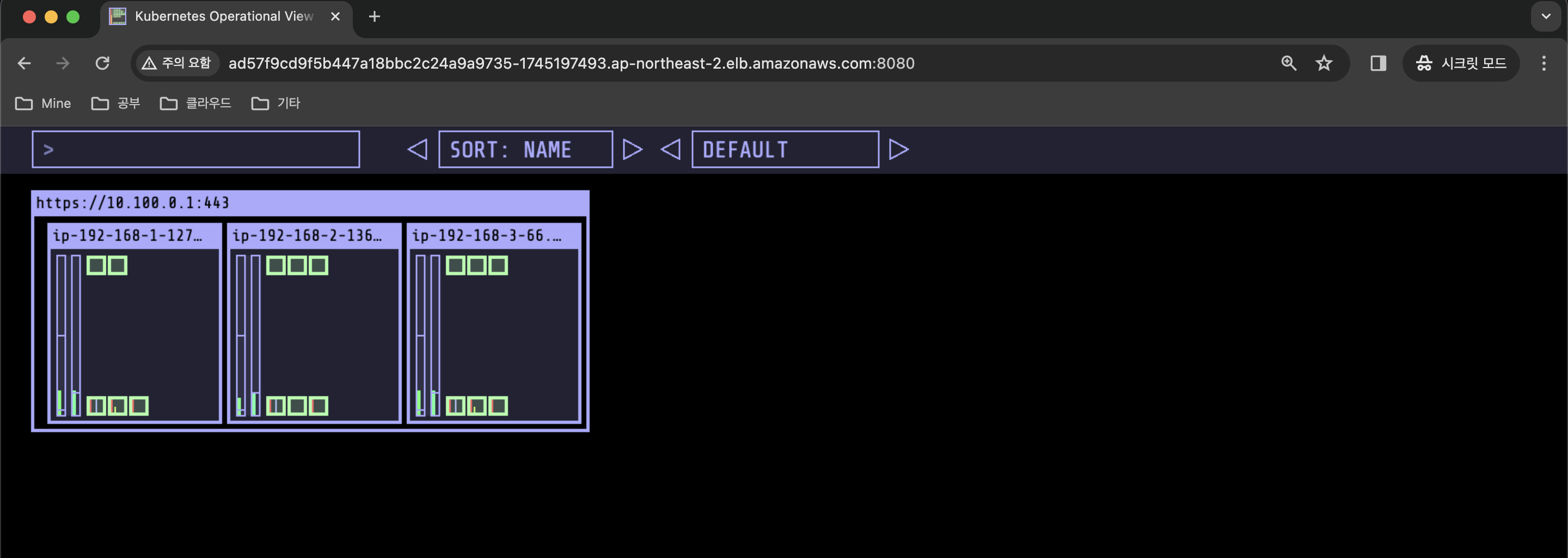

- We use the kube-ops-view application, which shows pod creation in a UI.

# kube-ops-view

helm repo add geek-cookbook https://geek-cookbook.github.io/charts/

helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set env.TZ="Asia/Seoul" --namespace kube-system

kubectl patch svc -n kube-system kube-ops-view -p '{"spec":{"type":"LoadBalancer"}}'

# Get the kube-ops-view URL (1.5x scale)

kubectl get svc -n kube-system kube-ops-view -o jsonpath={.status.loadBalancer.ingress[0].hostname} | awk '{ print "KUBE-OPS-VIEW URL = http://"$1":8080/#scale=1.5"}'

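- Pod limit per worker node, by instance type

- The number of pods a node can host is determined by the instance type's maximum number of ENIs and the maximum number of IP addresses each ENI can hold.

- However, the aws-node and kube-proxy pods use the host's IP, so they are not counted against the IP-based limit (the +2 in the formula).

- Max pods = (Number of network interfaces for the instance type × (the number of IP addresses per network interface - 1)) + 2

- We currently have 3 worker nodes, and their instance type is t3.medium.

- Check the worker node instance information (t3.medium)

# Check the t3 instance type information (filtered)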

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# aws ec2 describe-instance-types --filters Name=instance-type,Values=t3.* \

> --query "InstanceTypes[].{Type: InstanceType, MaxENI: NetworkInfo.MaximumNetworkInterfaces, IPv4addr: NetworkInfo.Ipv4AddressesPerInterface}" \

> --output table

--------------------------------------

| DescribeInstanceTypes |

+----------+----------+--------------+

| IPv4addr | MaxENI | Type |

+----------+----------+--------------+

| 12 | 3 | t3.large |

| 6 | 3 | t3.medium |

| 15 | 4 | t3.xlarge |

| 15 | 4 | t3.2xlarge |

| 2 | 2 | t3.micro |

| 2 | 2 | t3.nano |

| 4 | 3 | t3.small |

+----------+----------+--------------+
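Plugging the table values into the max-pods formula above reproduces the pods: 17 that Allocatable reports for t3.medium in the next step. The sketch below applies the formula to every t3 size (secondary-IP mode only, values copied from the table); `max_pods` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: max pods = ENIs * (IPs per ENI - 1) + 2, in secondary-IP mode.

max_pods() {
  local enis=$1 ips_per_eni=$2
  # -1: each ENI's primary IP is not handed to pods
  # +2: host-network pods (aws-node, kube-proxy) need no pod IP
  echo $(( enis * (ips_per_eni - 1) + 2 ))
}

# type  MaxENI  IPv4addr  (from the describe-instance-types table)
while read -r type enis ips; do
  printf '%-11s %s\n' "$type" "$(max_pods "$enis" "$ips")"
done <<'EOF'
t3.nano 2 2
t3.micro 2 2
t3.small 3 4
t3.medium 3 6
t3.large 3 12
t3.xlarge 4 15
t3.2xlarge 4 15
EOF
```

For t3.medium this gives 3 × (6 - 1) + 2 = 17, matching the node's Allocatable value.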

# Check the worker node details: Allocatable shows pods: 17

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# kubectl describe node | grep Allocatable: -A6

Allocatable:

cpu: 1930m

ephemeral-storage: 27905944324

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 3388352Ki

pods: 17

--

Allocatable:

cpu: 1930m

ephemeral-storage: 27905944324

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 3388344Ki

pods: 17

--

Allocatable:

cpu: 1930m

ephemeral-storage: 27905944324

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 3388344Ki

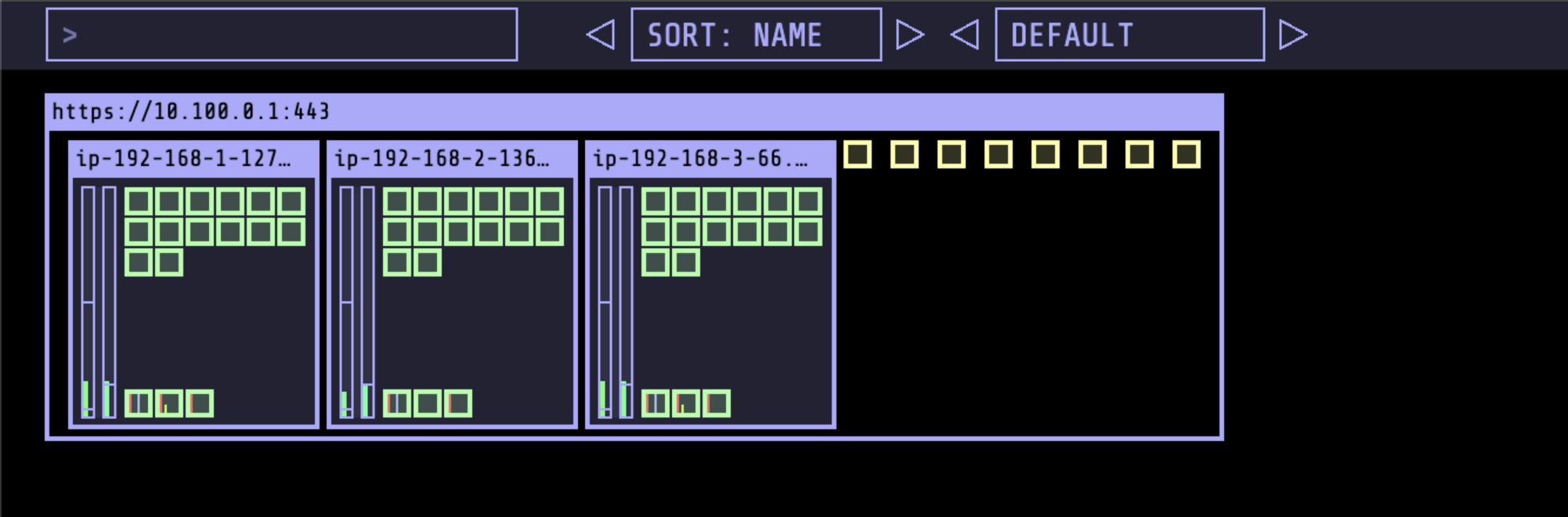

pods: 17- 최대 파드 생성 및 테스트

- deployment 의 replica 를 점진적으로 높여가보겠습니다.

# 워커 노드 접속하여 Monitoring 설정

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# ssh ec2-user@$N1

Last login: Fri Mar 15 10:57:35 2024 from ip-192-168-1-100.ap-northeast-2.compute.internal

[ec2-user@ip-192-168-1-127 ~]$ while true; do ip -br -c addr show && echo "--------------" ; date "+%Y-%m-%d %H:%M:%S" ; sleep 1; done

lo UNKNOWN 127.0.0.1/8 ::1/128

eth0 UP 192.168.1.127/24 fe80::a0:76ff:fe13:2c21/64

enibee2fe18d14@if3 UP fe80::84e7:bff:fec4:a744/64

eth1 UP 192.168.1.88/24 fe80::a4:12ff:fe9b:cfe5/64

--------------

.

.

.

# Monitor from the bastion host (default namespace)

watch -d 'kubectl get pods -o wide'

# Create the deployment

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/2/nginx-dp.yaml

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# kubectl apply -f nginx-dp.yaml

deployment.apps/nginx-deployment created

# Node monitoring (on the node)

--------------

2024-03-15 11:52:26

lo UNKNOWN 127.0.0.1/8 ::1/128

eth0 UP 192.168.1.127/24 fe80::a0:76ff:fe13:2c21/64

enibee2fe18d14@if3 UP fe80::84e7:bff:fec4:a744/64

eth1 UP 192.168.1.88/24 fe80::a4:12ff:fe9b:cfe5/64

# Pod Monitoring (Bastion)

Every 2.0s: kubectl get pods -o wide Fri Mar 15 20:55:54 2024

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-f7f5c78c5-5p9tw 1/1 Running 0 2m22s 192.168.1.114 ip-192-168-1-127.ap-northeast-2.compute.internal <none> <none>

nginx-deployment-f7f5c78c5-lt4r9 1/1 Running 0 2m22s 192.168.2.11 ip-192-168-2-136.ap-northeast-2.compute.internal <none> <none>

- Two pods have been created; now let's gradually increase the replica count.

- Replica 2 -> 8

kubectl scale deployment nginx-deployment --replicas=8

# Monitoring on the node: one more eni interface has been created

--------------

2024-03-15 12:03:58

lo UNKNOWN 127.0.0.1/8 ::1/128

eth0 UP 192.168.1.127/24 fe80::a0:76ff:fe13:2c21/64

enibee2fe18d14@if3 UP fe80::84e7:bff:fec4:a744/64

eth1 UP 192.168.1.88/24 fe80::a4:12ff:fe9b:cfe5/64

eniad55c49a24a@if3 UP fe80::f4ef:e5ff:fe54:a919/64

# Pod monitoring

Every 2.0s: kubectl get pods -o wide Fri Mar 15 21:07:24 2024

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-f7f5c78c5-2nvxx 1/1 Running 0 84s 192.168.3.78 ip-192-168-3-66.ap-northeast-2.compute.internal <none> <none>

nginx-deployment-f7f5c78c5-5p9tw 1/1 Running 0 13m 192.168.1.114 ip-192-168-1-127.ap-northeast-2.compute.internal <none> <none>

nginx-deployment-f7f5c78c5-5w7kq 1/1 Running 0 84s 192.168.2.93 ip-192-168-2-136.ap-northeast-2.compute.internal <none> <none>

nginx-deployment-f7f5c78c5-dg24h 1/1 Running 0 84s 192.168.3.105 ip-192-168-3-66.ap-northeast-2.compute.internal <none> <none>

nginx-deployment-f7f5c78c5-h7kbm 1/1 Running 0 84s 192.168.1.11 ip-192-168-1-127.ap-northeast-2.compute.internal <none> <none>

nginx-deployment-f7f5c78c5-lt4r9 1/1 Running 0 13m 192.168.2.11 ip-192-168-2-136.ap-northeast-2.compute.internal <none> <none>

nginx-deployment-f7f5c78c5-ptzc9 1/1 Running 0 84s 192.168.2.20 ip-192-168-2-136.ap-northeast-2.compute.internal <none> <none>

nginx-deployment-f7f5c78c5-t4dht 1/1 Running 0 84s 192.168.3.70 ip-192-168-3-66.ap-northeast-2.compute.internal <none> <none>

- Replica 8 -> 50

kubectl scale deployment nginx-deployment --replicas=50

# Pod Monitoring

Every 2.0s: kubectl get pods -o wide Fri Mar 15 21:13:27 2024

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-f7f5c78c5-245fn 0/1 Pending 0 56s <none> <none> <none> <none>

nginx-deployment-f7f5c78c5-2nvxx 1/1 Running 0 7m27s 192.168.3.78 ip-192-168-3-66.ap-northeast-2.compute.internal <none> <none>

nginx-deployment-f7f5c78c5-4j545 1/1 Running 0 116s 192.168.2.64 ip-192-168-2-136.ap-northeast-2.compute.internal <none> <none>

nginx-deployment-f7f5c78c5-59vbk 1/1 Running 0 116s 192.168.2.68 ip-192-168-2-136.ap-northeast-2.compute.internal <none> <none>

nginx-deployment-f7f5c78c5-5p9tw 1/1 Running 0 19m 192.168.1.114 ip-192-168-1-127.ap-northeast-2.compute.internal <none> <none>

nginx-deployment-f7f5c78c5-5sbg7 1/1 Running 0 116s 192.168.3.108 ip-192-168-3-66.ap-northeast-2.compute.internal <none> <none>

nginx-deployment-f7f5c78c5-5v2nk 1/1 Running 0 2m39s 192.168.2.179 ip-192-168-2-136.ap-northeast-2.compute.internal <none> <none>

.

.

.

kubectl delete deploy nginx-deployment

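- Each node can host up to 17 pods.

- With 3 nodes, 3 × 15 = 45 pods can receive a pod IP (kube-proxy and aws-node are excluded because they use the host IP; kube-ops-view and other pods that are already running also occupy some of these IPs, which is why some of the 50 replicas stay Pending).

- There are several ways to work around this limit, listed in the references below; the most common one is prefix delegation.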
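To get a feel for how much prefix delegation changes the limit, the sketch below estimates per-node max pods with it enabled. The assumptions are mine, not measured in this post: each delegated prefix is a /28 (16 addresses), one slot per ENI stays the ENI's primary IP, and the result is capped at the AWS-recommended 110 pods for instances with fewer than 30 vCPUs (250 otherwise). `max_pods_prefix` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: per-node max pods with IPv4 prefix delegation (assumed /28 prefixes
# and the 110 / 250 recommended caps).

max_pods_prefix() {
  local enis=$1 ips_per_eni=$2 vcpus=$3
  # Each non-primary slot holds a /28 prefix = 16 addresses; +2 for aws-node, kube-proxy
  local raw=$(( enis * (ips_per_eni - 1) * 16 + 2 ))
  local cap=250
  if (( vcpus < 30 )); then
    cap=110
  fi
  if (( raw < cap )); then
    echo "$raw"
  else
    echo "$cap"
  fi
}

# t3.medium: 3 ENIs, 6 IPv4 addresses per ENI, 2 vCPUs
# (secondary-IP mode gives 3 * (6 - 1) + 2 = 17)
max_pods_prefix 3 6 2
```

For t3.medium the raw address count (242) exceeds the cap, so the recommended limit of 110 applies; either way it is far above the 17 seen above.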

EKS Workshop Reference

- Prefix Delegation : https://www.eksworkshop.com/docs/networking/vpc-cni/prefix/

- Custom Networking : https://www.eksworkshop.com/docs/networking/vpc-cni/custom-networking/

- Security Groups for Pods : https://www.eksworkshop.com/docs/networking/vpc-cni/security-groups-for-pods/

- Network Policies : https://www.eksworkshop.com/docs/networking/vpc-cni/network-policies/

- Amazon VPC Lattice : https://www.eksworkshop.com/docs/networking/vpc-lattice/

6. Service & AWS LoadBalancer Controller

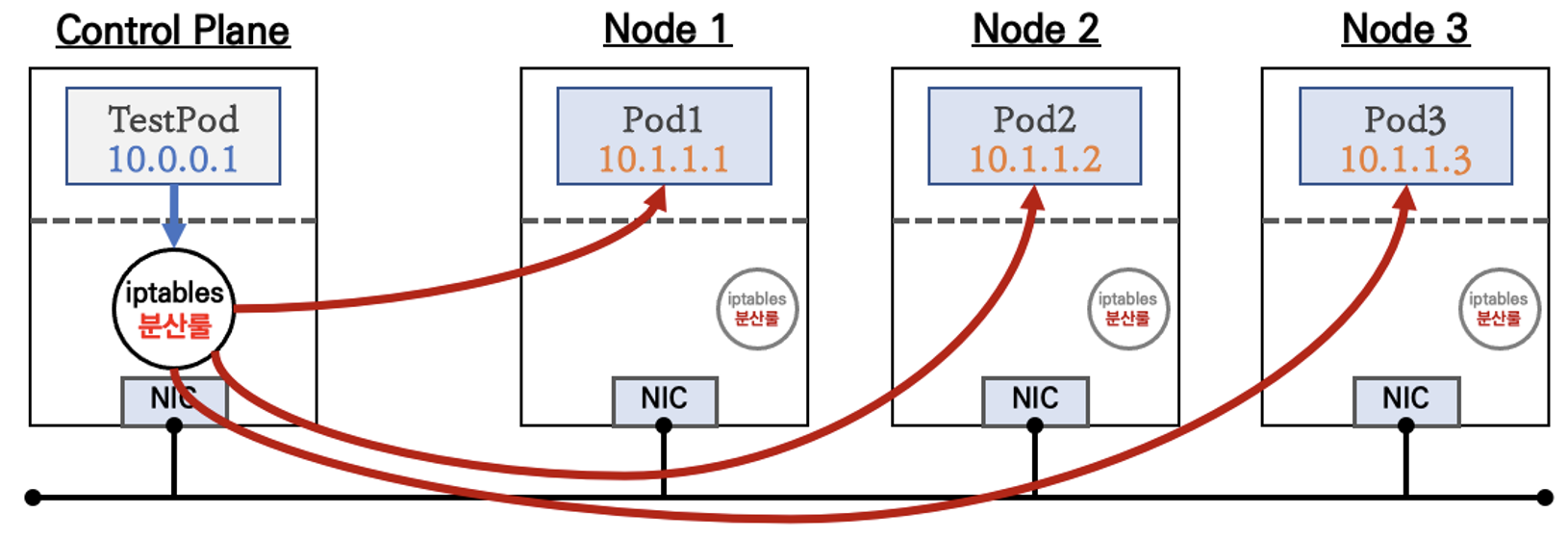

- Kubernetes has three service types (plus the AWS LoadBalancer Controller):

- ClusterIP

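- In short, when a client (TestPod) connects to the 'CLUSTER-IP', the iptables rules on that node (random distribution) DNAT the traffic so it reaches a destination (backend) pod.

- A ClusterIP service cannot be reached from outside the cluster ⇒ the NodePort type allows external access.

- iptables has no health-check capability for pods, so traffic may be sent to a faulty pod; configure a readiness probe on the pods so that a failing pod is removed from the service's endpoints.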

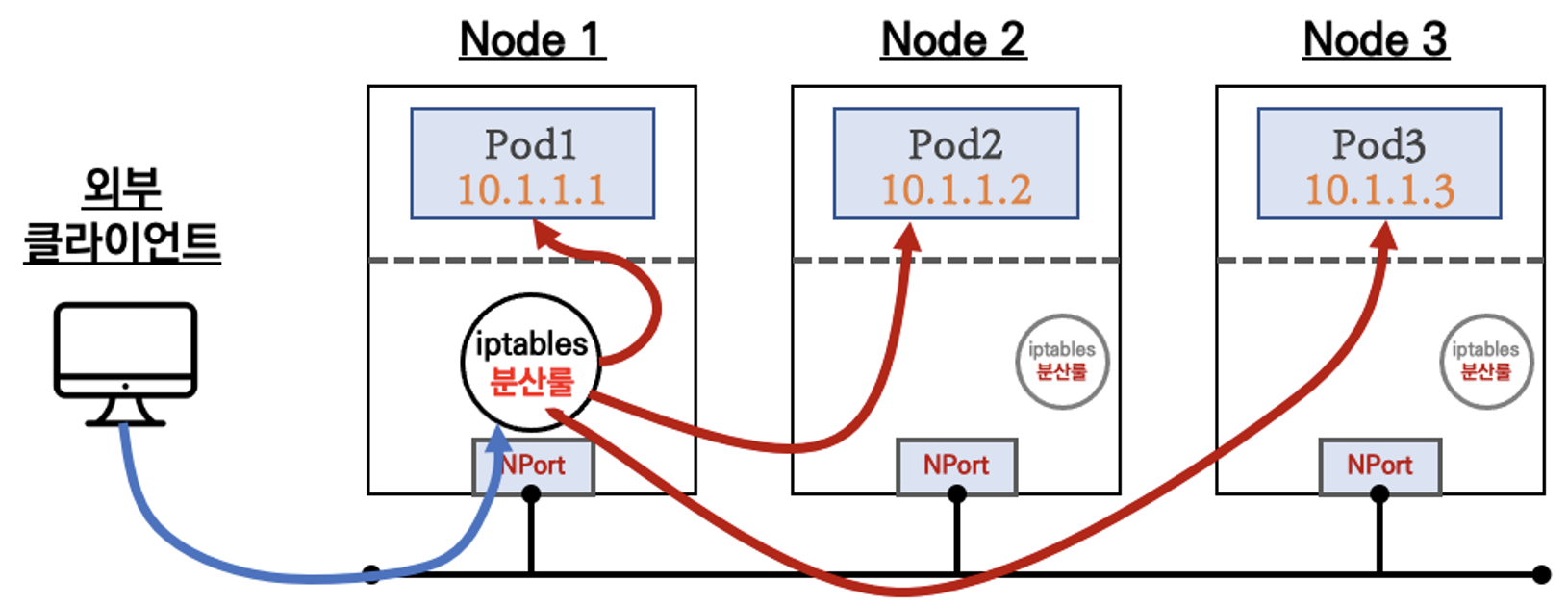

- NodePort

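- In short, when an external client connects to 'NodeIP:NodePort', the node's iptables rules SNAT/DNAT the traffic to the destination pod, and the return traffic goes back out through the node it originally entered.

- Clients must reach a node's IP and port directly → the internal network is exposed (routable) to the outside, which is a security weakness ⇒ the LoadBalancer service type minimizes external exposure.

- To preserve the client IP, you can use externalTrafficPolicy: Local, but then a NodePort connection to a node with no matching pod fails ⇒ the LoadBalancer service's health checks guard against this.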

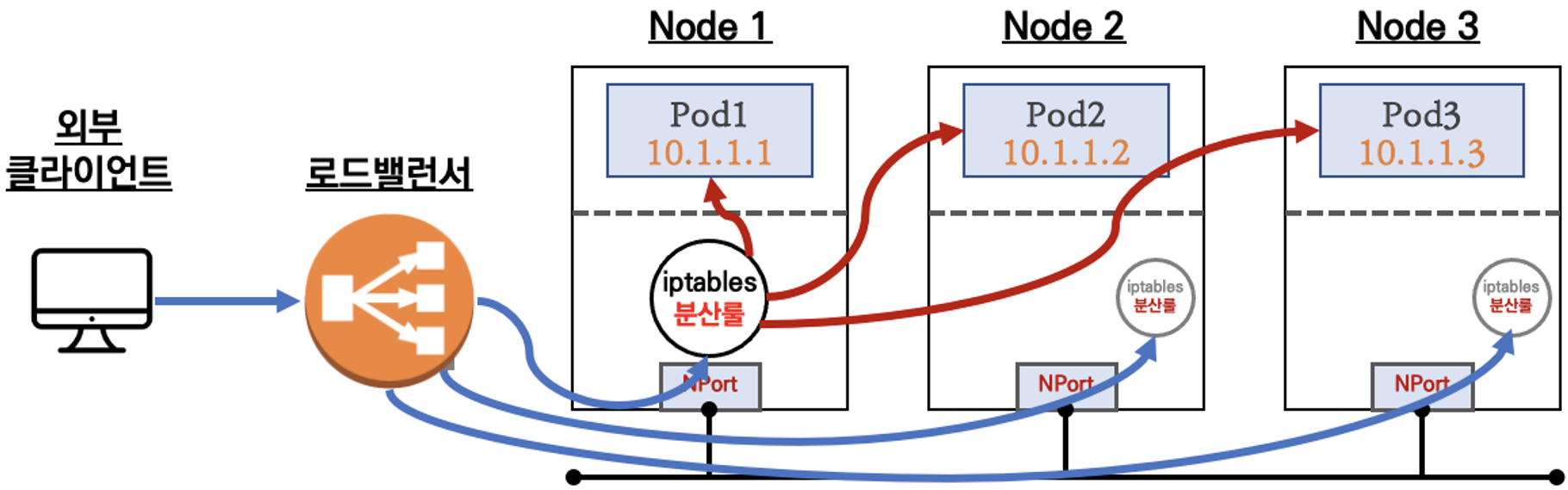

- LoadBalancer

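- In short, an external client connects to the 'load balancer', the traffic is distributed to the nodes, and the node's iptables rules deliver it to the destination pod.

- Load-balancing optimization: if a node has no pods, the load balancer's health check against that node fails, so no external traffic is sent to it.

- It looks like a do-everything option, but it has limitations:

- A load balancer (e.g. an AWS NLB) is created for every LoadBalancer service, which makes resource use inefficient ⇒ for HTTP, an Ingress can consolidate resources.

- A LoadBalancer service lacks some HTTP/HTTPS features (TLS termination, domain-based routing, etc.) ⇒ an Ingress provides them.

- (Note) In on-premises environments, MetalLB makes the LoadBalancer service type work.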

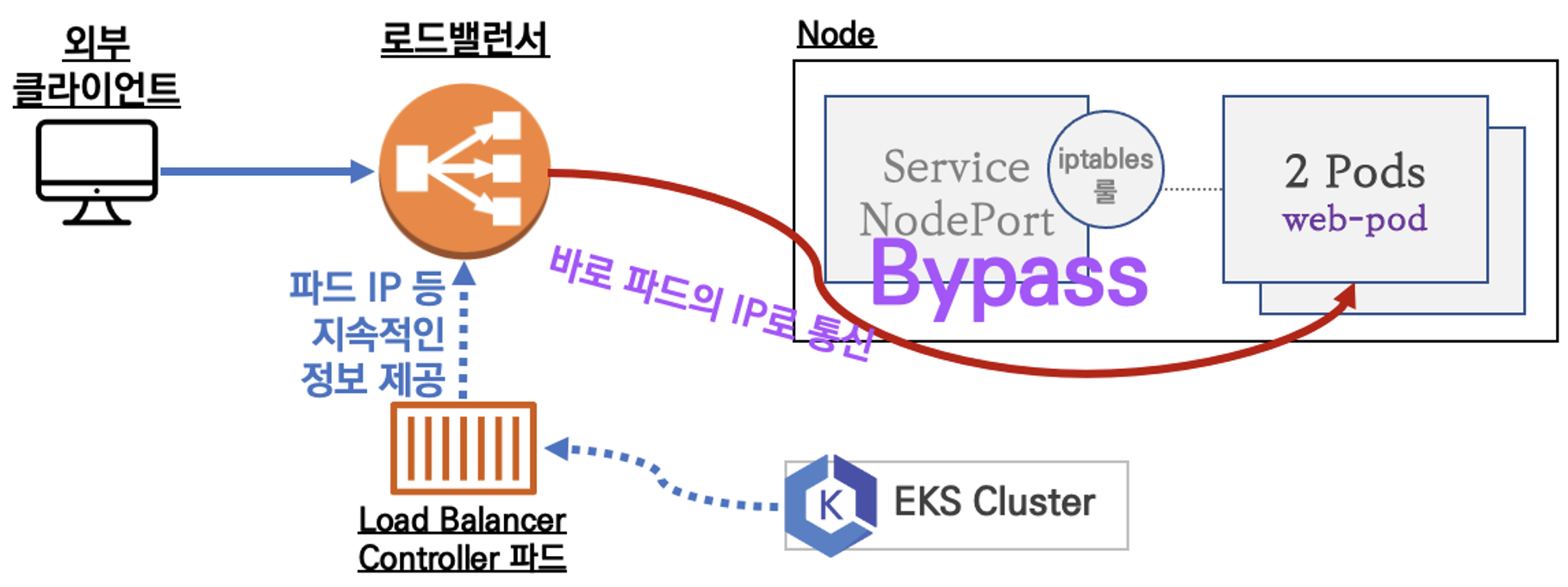

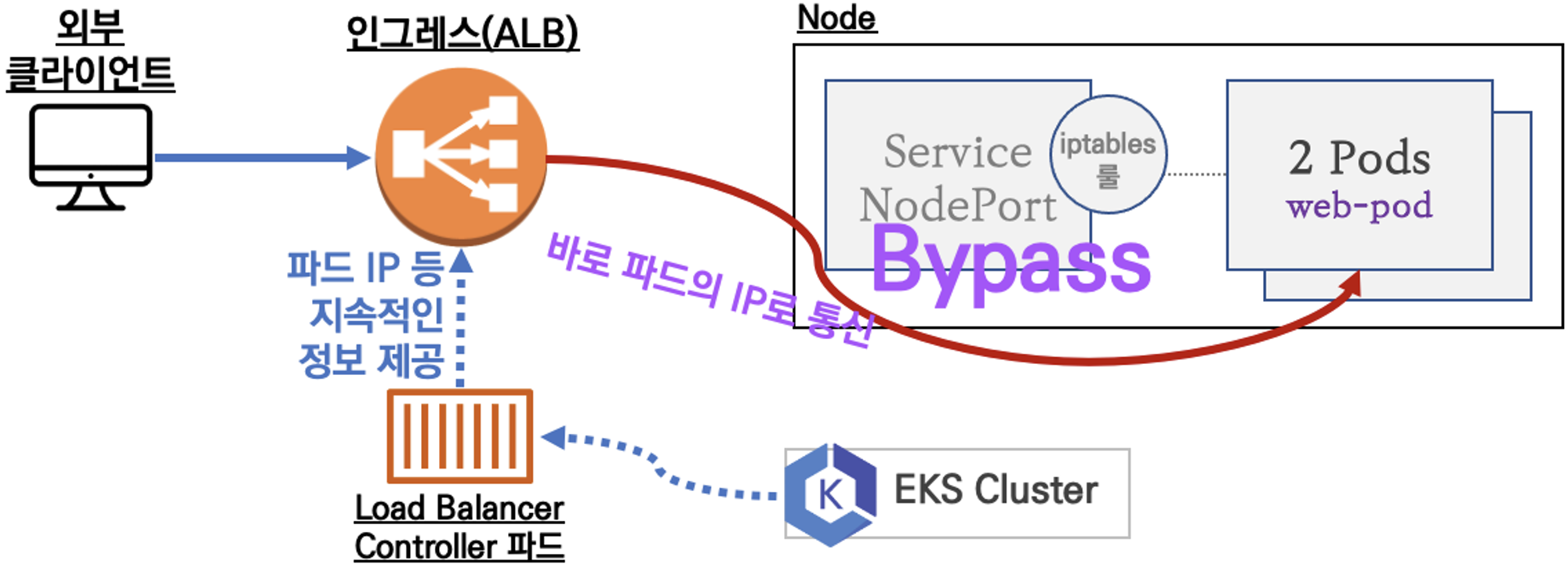
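The "random distribution" in the ClusterIP description comes from kube-proxy's iptables mode. As I understand it, the service chain holds one rule per endpoint, rule i (0-based) matches with probability 1/(n - i) via the iptables statistic module, and the last rule matches unconditionally, so every endpoint ends up with an overall share of 1/n. The awk-based sketch below only does that arithmetic and does not read real iptables rules; `rule_probabilities` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: per-rule match probability vs. overall traffic share for n endpoints.

rule_probabilities() {
  awk -v n="$1" 'BEGIN {
    remaining = 1                     # probability that no earlier rule matched
    for (i = 0; i < n; i++) {
      p = 1 / (n - i)                 # probability this rule matches, given it is reached
      printf "endpoint %d: rule probability %.5f, overall share %.5f\n", i + 1, p, remaining * p
      remaining *= (1 - p)
    }
  }'
}

rule_probabilities 3
```

With 3 endpoints the rules match with 0.33333, 0.50000 and 1.00000, yet each endpoint receives 0.33333 of new connections. The sampling is per connection and blind to pod health, which is why the readiness probe mentioned above matters.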

- AWS LoadBalancer Controller

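- The LoadBalancer Controller runs as a pod and continuously syncs EKS cluster information (such as pod IPs) to the load balancer, so the load balancer can send traffic directly to the pods' IPs.

- Let's deploy the LoadBalancer Controller using IRSA (IAM Roles for Service Accounts).

# Check the OIDC issuer and the IAM OIDC providers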

aws eks describe-cluster --name $CLUSTER_NAME --query "cluster.identity.oidc.issuer" --output text

aws iam list-open-id-connect-providers | jq
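The two commands above are usually compared by eye: the ID at the end of the cluster's issuer URL should appear in one of the IAM OIDC provider ARNs. The sketch below automates that comparison on plain strings. The issuer and ARN values are hypothetical placeholders (in practice they come from the two commands above), and `oidc_id_from_issuer` and `provider_exists` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: extract the OIDC ID from the issuer URL and look for a matching provider ARN.

oidc_id_from_issuer() {
  # https://oidc.eks.<region>.amazonaws.com/id/<ID> -> field 5 when split on "/"
  echo "$1" | cut -d '/' -f 5
}

# provider_exists ISSUER ARN... -> exit status 0 if any ARN ends with /id/<ID>
provider_exists() {
  local id arn
  id=$(oidc_id_from_issuer "$1")
  shift
  for arn in "$@"; do
    [[ $arn == */id/"$id" ]] && return 0
  done
  return 1
}

issuer="https://oidc.eks.ap-northeast-2.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE"
arn="arn:aws:iam::111122223333:oidc-provider/oidc.eks.ap-northeast-2.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE"

oidc_id_from_issuer "$issuer"
provider_exists "$issuer" "$arn" && echo "OIDC provider already registered"
```

If no ARN matches, the cluster has no IAM OIDC provider yet, and IRSA (the eksctl create iamserviceaccount step below) will not work until one is associated.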

# Create the IAM policy (AWSLoadBalancerControllerIAMPolicy)

curl -O https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.5.4/docs/install/iam_policy.json

aws iam create-policy --policy-name AWSLoadBalancerControllerIAMPolicy --policy-document file://iam_policy.json

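# If the IAM policy already exists but is an older version, update it to the latest one

# (the AWS CLI has no update-policy command; publish a new default policy version instead)

# aws iam create-policy-version --policy-arn arn:aws:iam::$ACCOUNT_ID:policy/AWSLoadBalancerControllerIAMPolicy --policy-document file://iam_policy.json --set-as-default

# Check the ARN of the created IAM policy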

aws iam list-policies --scope Local | jq

aws iam get-policy --policy-arn arn:aws:iam::$ACCOUNT_ID:policy/AWSLoadBalancerControllerIAMPolicy | jq

aws iam get-policy --policy-arn arn:aws:iam::$ACCOUNT_ID:policy/AWSLoadBalancerControllerIAMPolicy --query 'Policy.Arn'

# Create the ServiceAccount for the AWS Load Balancer Controller >> the matching IAM role is created automatically through CloudFormation

# Create the IAM role: this creates a Kubernetes service account named aws-load-balancer-controller in the kube-system namespace and annotates it with the IAM role

eksctl create iamserviceaccount --cluster=$CLUSTER_NAME --namespace=kube-system --name=aws-load-balancer-controller --role-name AmazonEKSLoadBalancerControllerRole \

--attach-policy-arn=arn:aws:iam::$ACCOUNT_ID:policy/AWSLoadBalancerControllerIAMPolicy --override-existing-serviceaccounts --approve

## Check the IRSA information

eksctl get iamserviceaccount --cluster $CLUSTER_NAME

## Check the service account

kubectl get serviceaccounts -n kube-system aws-load-balancer-controller -o yaml | yh

# Install the Helm chart

helm repo add eks https://aws.github.io/eks-charts

helm repo update

helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=$CLUSTER_NAME \

--set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller

## Verify the installation: aws-load-balancer-controller:v2.7.1

kubectl get crd

kubectl get deployment -n kube-system aws-load-balancer-controller

kubectl describe deploy -n kube-system aws-load-balancer-controller

kubectl describe deploy -n kube-system aws-load-balancer-controller | grep 'Service Account'

Service Account: aws-load-balancer-controller

# Check the cluster role and cluster role binding

kubectl describe clusterrolebindings.rbac.authorization.k8s.io aws-load-balancer-controller-rolebinding

kubectl describe clusterroles.rbac.authorization.k8s.io aws-load-balancer-controller-role

...

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

--------- ----------------- -------------- -----

targetgroupbindings.elbv2.k8s.aws [] [] [create delete get list patch update watch]

events [] [] [create patch]

ingresses [] [] [get list patch update watch]

services [] [] [get list patch update watch]

ingresses.extensions [] [] [get list patch update watch]

services.extensions [] [] [get list patch update watch]

ingresses.networking.k8s.io [] [] [get list patch update watch]

services.networking.k8s.io [] [] [get list patch update watch]

endpoints [] [] [get list watch]

namespaces [] [] [get list watch]

nodes [] [] [get list watch]

pods [] [] [get list watch]

endpointslices.discovery.k8s.io [] [] [get list watch]

ingressclassparams.elbv2.k8s.aws [] [] [get list watch]

ingressclasses.networking.k8s.io [] [] [get list watch]

ingresses/status [] [] [update patch]

pods/status [] [] [update patch]

services/status [] [] [update patch]

targetgroupbindings/status [] [] [update patch]

ingresses.elbv2.k8s.aws/status [] [] [update patch]

pods.elbv2.k8s.aws/status [] [] [update patch]

services.elbv2.k8s.aws/status [] [] [update patch]

targetgroupbindings.elbv2.k8s.aws/status [] [] [update patch]

ingresses.extensions/status [] [] [update patch]

pods.extensions/status [] [] [update patch]

services.extensions/status [] [] [update patch]

targetgroupbindings.extensions/status [] [] [update patch]

ingresses.networking.k8s.io/status [] [] [update patch]

pods.networking.k8s.io/status [] [] [update patch]

services.networking.k8s.io/status [] [] [update patch]

targetgroupbindings.networking.k8s.io/status [] [] [update patch]

- Finally, you can see that the load balancer controller pods have been created in the kube-system namespace.

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# k get pod -n kube-system | grep load

aws-load-balancer-controller-75c55f7bd-69kn2 1/1 Running 0 73s

aws-load-balancer-controller-75c55f7bd-npdnl 1/1 Running 0 74s

- Now that the LoadBalancer Controller is running, let's create a service.

# Monitoring terminal

watch -d kubectl get pod,svc,ep

Every 2.0s: kubectl get pod,svc,ep Fri Mar 15 21:47:38 2024

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.100.0.1 <none> 443/TCP 6h6m

NAME ENDPOINTS AGE

endpoints/kubernetes 192.168.1.61:443,192.168.2.46:443 6h6m

----------

# Create the deployment & service

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/2/echo-service-nlb.yaml

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# kubectl apply -f echo-service-nlb.yaml

deployment.apps/deploy-echo created

service/svc-nlb-ip-type created


# Change the deregistration delay (draining interval): default 300 seconds -> 60

vi echo-service-nlb.yaml

..

apiVersion: v1
kind: Service
metadata:
  name: svc-nlb-ip-type
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
    service.beta.kubernetes.io/aws-load-balancer-healthcheck-port: "8080"
    service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: "true"
    service.beta.kubernetes.io/aws-load-balancer-target-group-attributes: deregistration_delay.timeout_seconds=60 ## added

kubectl apply -f echo-service-nlb.yaml

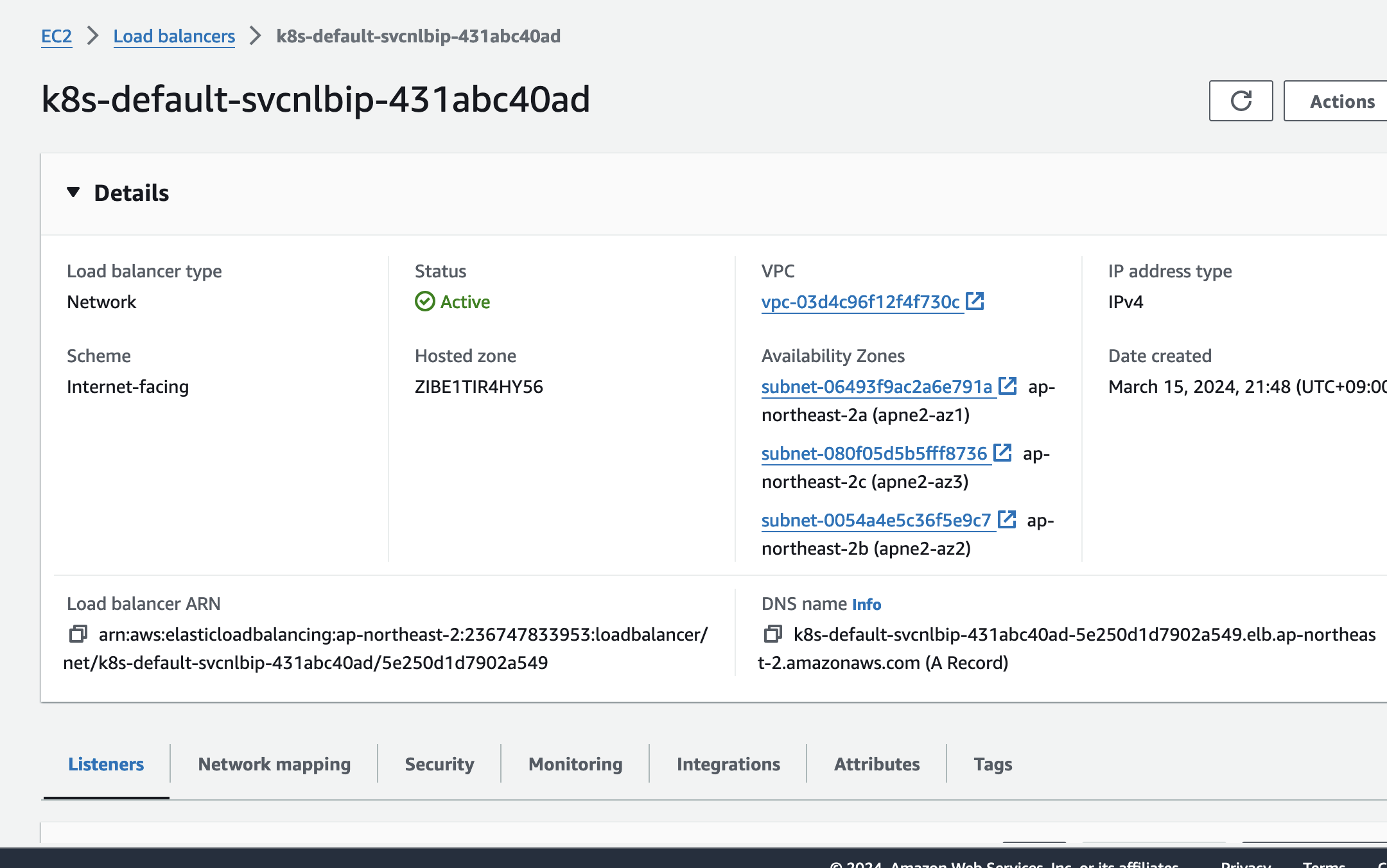

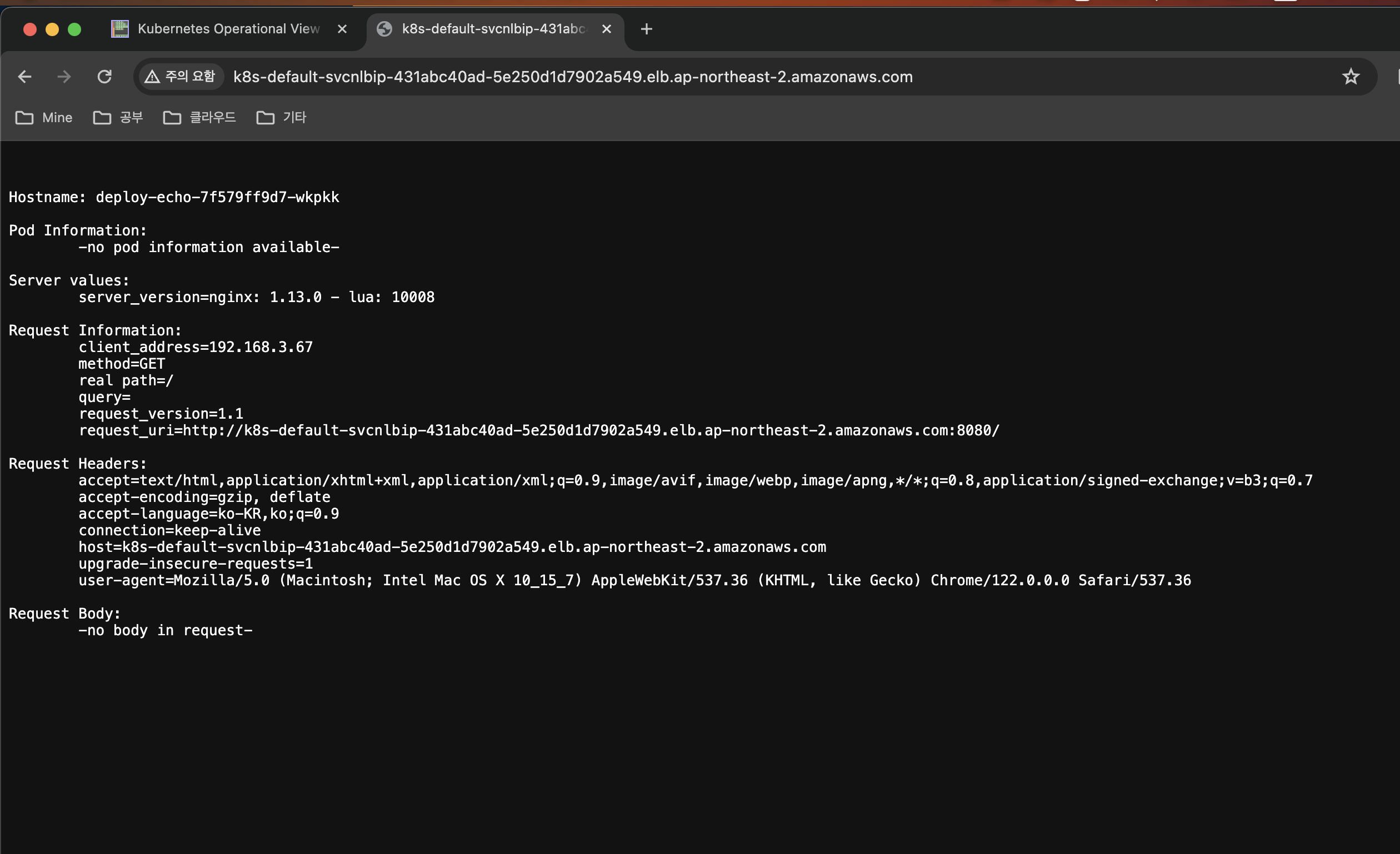

- After the load balancer is created, access it from a web browser.

- Check that the traffic is being load-balanced.

- Out of 100 requests, 53 went to pod deploy-echo-7f579ff9d7-zx746 and 47 went to pod deploy-echo-7f579ff9d7-wkpkk.

NLB=$(kubectl get svc svc-nlb-ip-type -o jsonpath={.status.loadBalancer.ingress[0].hostname})

for i in {1..100}; do curl -s $NLB | grep Hostname ; done | sort | uniq -c | sort -nr

# Run it

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# for i in {1..100}; do curl -s $NLB | grep Hostname ; done | sort | uniq -c | sort -nr

53 Hostname: deploy-echo-7f579ff9d7-zx746

47 Hostname: deploy-echo-7f579ff9d7-wkpkk
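The counts are easier to compare as percentages. `lb_share` below is a hypothetical helper that reads uniq -c style lines ("<count> Hostname: <pod>") and prints each pod's share of the total; the sample input is the 53/47 result above.

```shell
#!/usr/bin/env bash
# Sketch: convert "uniq -c" counts of Hostname lines into per-pod percentages.

lb_share() {
  # $1 = count, $3 = pod name ("Hostname:" is field 2)
  awk '{ count[$3] += $1; total += $1 }
       END { for (pod in count) printf "%s %.1f%%\n", pod, 100 * count[pod] / total }' |
    sort -k2 -nr
}

printf '%s\n' "53 Hostname: deploy-echo-7f579ff9d7-zx746" \
              "47 Hostname: deploy-echo-7f579ff9d7-wkpkk" | lb_share
```

In practice the curl loop can feed it directly: for i in {1..100}; do curl -s $NLB | grep Hostname; done | sort | uniq -c | lb_share. A roughly even split across pods is what you would expect from the NLB with cross-zone load balancing enabled.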

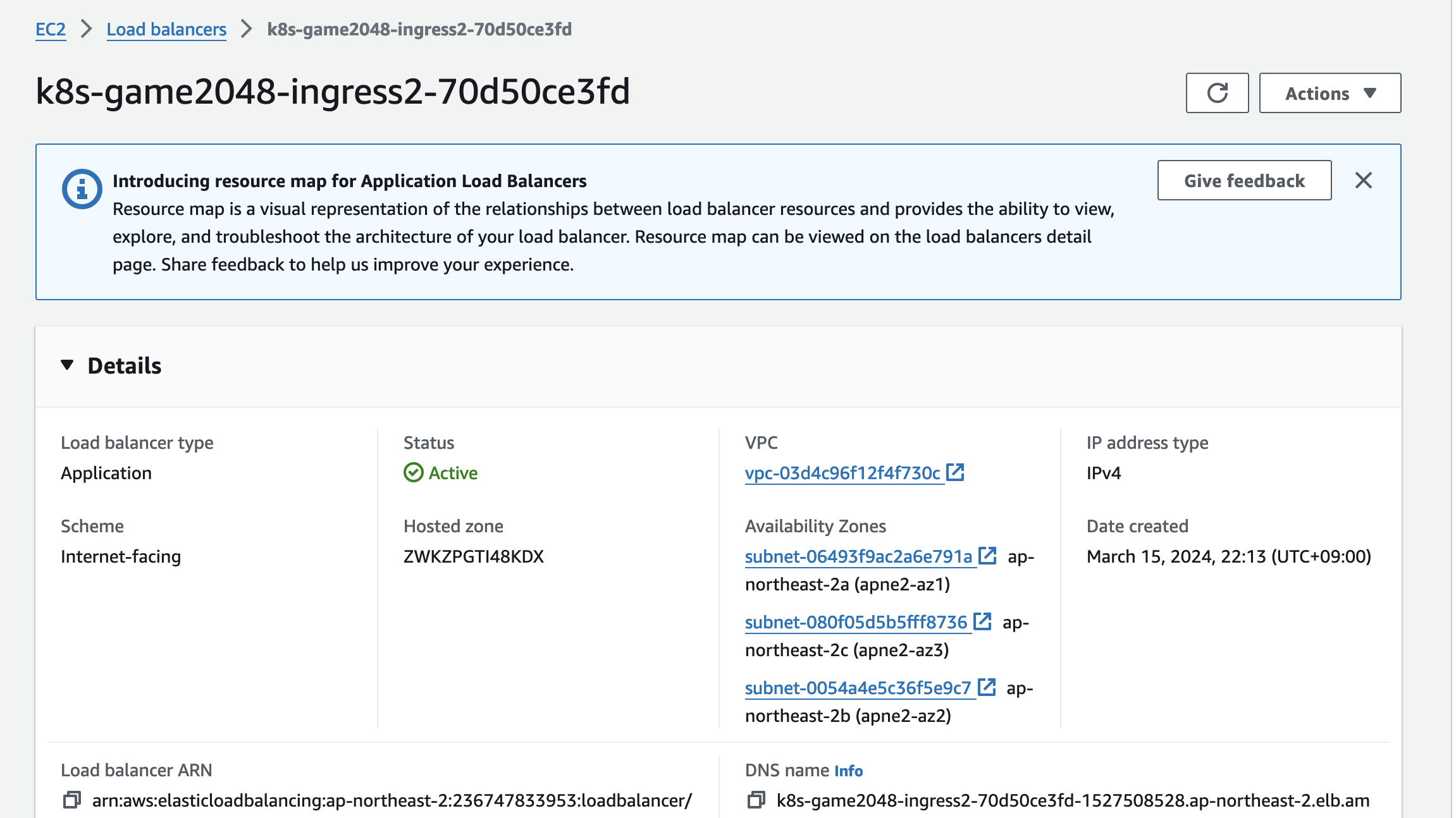

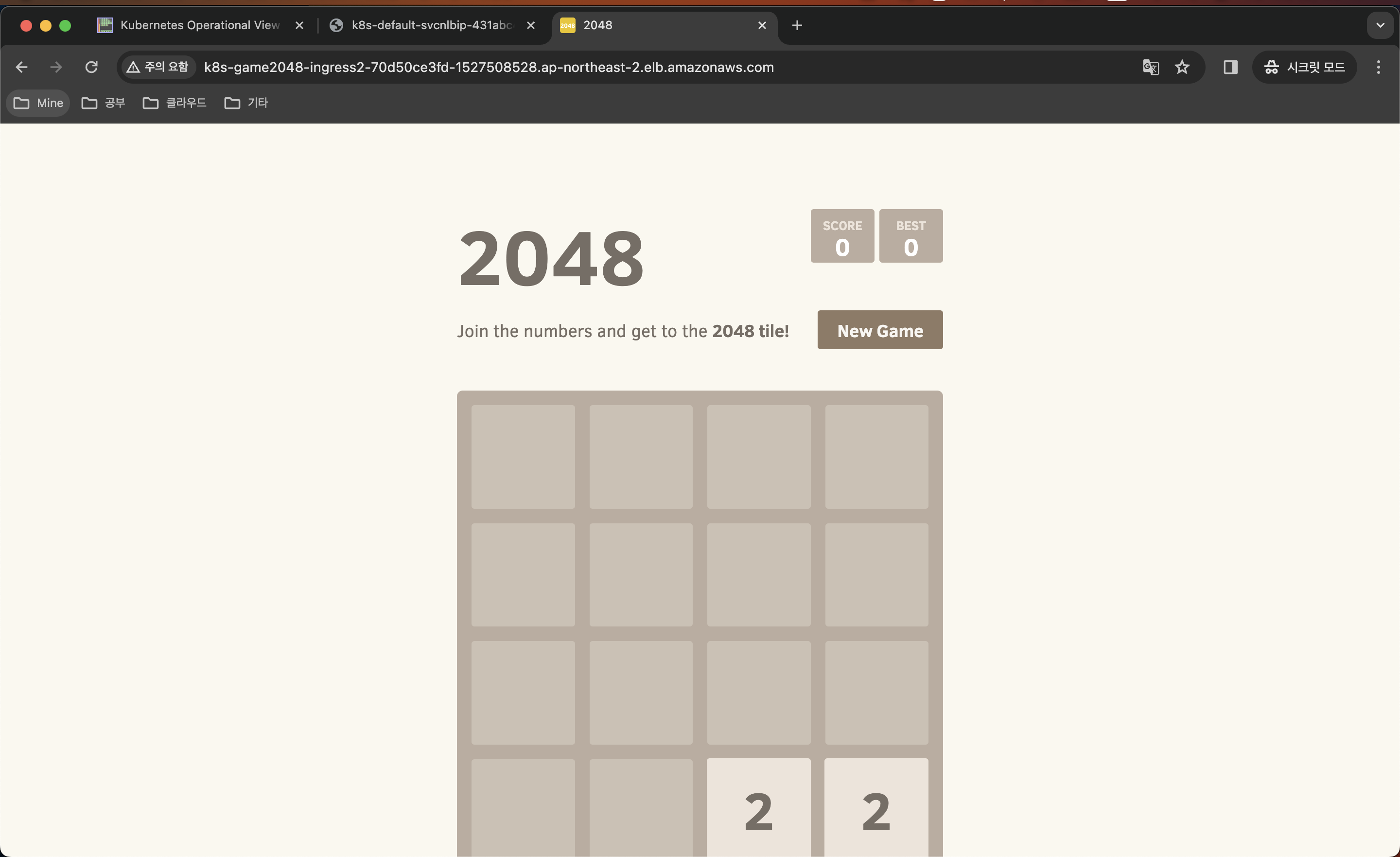

7. k8s Ingress

- Ingress exposes services inside the cluster (ClusterIP, NodePort, LoadBalancer) to the outside and acts as an HTTP/HTTPS web proxy.

- Service/pod deployment test with Ingress (ALB)

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/ingress1.yaml

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# kubectl apply -f ingress1.yaml

namespace/game-2048 created

deployment.apps/deployment-2048 created

service/service-2048 created

ingress.networking.k8s.io/ingress-2048 created

# Monitoring

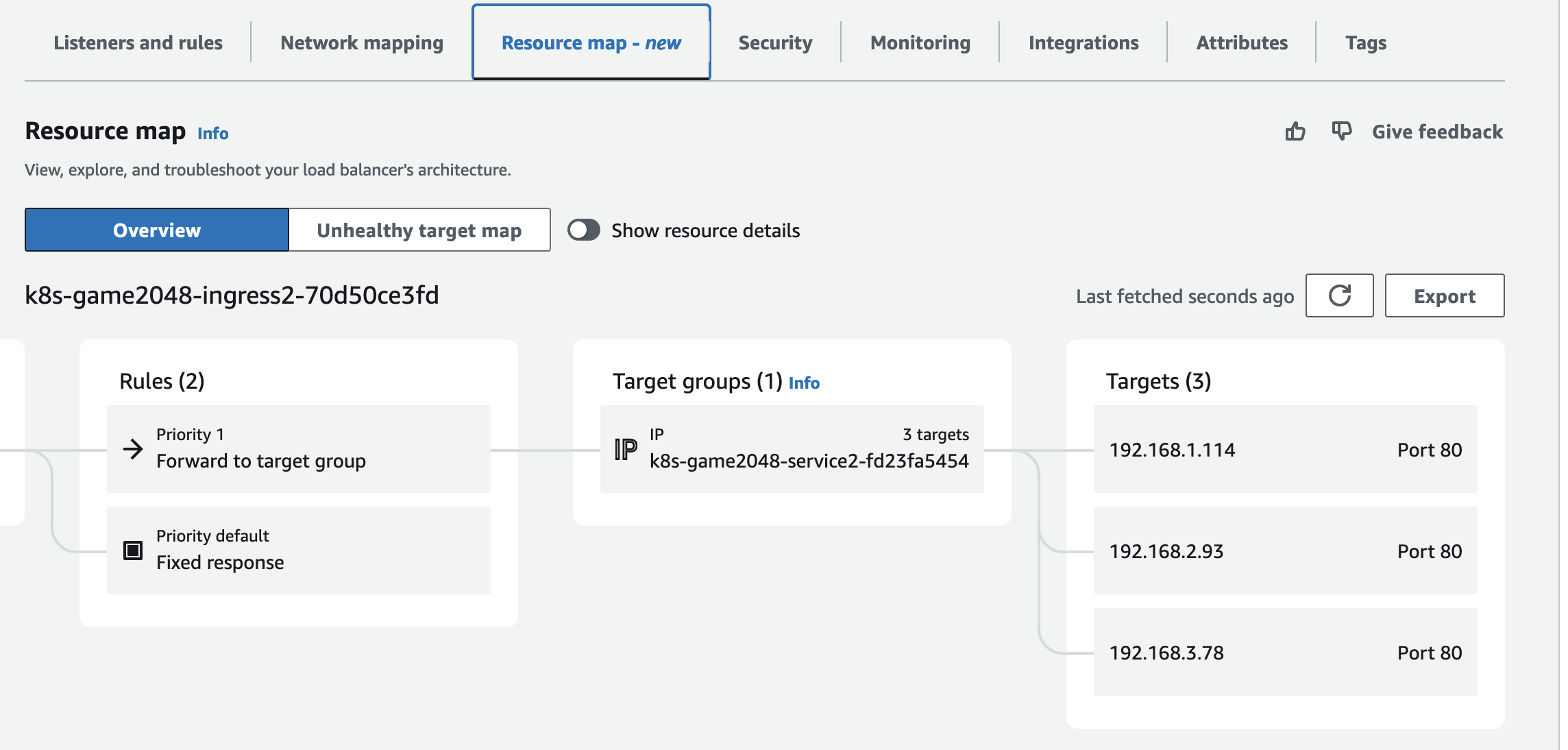

Every 2.0s: kubectl get pod,ingress,svc,ep -n game-2048 Fri Mar 15 22:13:58 2024

NAME READY STATUS RESTARTS AGE

pod/deployment-2048-75db5866dd-h5rkq 1/1 Running 0 58s

pod/deployment-2048-75db5866dd-sb7qc 1/1 Running 0 58s

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress.networking.k8s.io/ingress-2048 alb * k8s-game2048-ingress2-70d50ce3fd-1527508528.ap-northeast-2.elb.amazonaws.com 80 58s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/service-2048 NodePort 10.100.57.48 <none> 80:31007/TCP 58s

NAME ENDPOINTS AGE

endpoints/service-2048 192.168.1.114:80,192.168.3.78:80 58s

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# k get ingress -n game-2048

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-2048 alb * k8s-game2048-ingress2-70d50ce3fd-1527508528.ap-northeast-2.elb.amazonaws.com 80 113s

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# kubectl get pod -n game-2048 -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deployment-2048-75db5866dd-h5rkq 1/1 Running 0 2m32s 192.168.1.114 ip-192-168-1-127.ap-northeast-2.compute.internal <none> <none>

deployment-2048-75db5866dd-sb7qc 1/1 Running 0 2m32s 192.168.3.78 ip-192-168-3-66.ap-northeast-2.compute.internal <none> <none>
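The endpoints of service-2048 are the pod IPs themselves (192.168.1.114 and 192.168.3.78 above), so a quick consistency check is to compare the ENDPOINTS column with the pod IPs. A minimal bash sketch; endpoint_ips and endpoints_match_pods are hypothetical helpers. kubectl abbreviates long endpoint lists ("+ N more..."), so this only works while the column is printed in full.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for comparing service endpoints with pod IPs.

# Split an ENDPOINTS column such as "192.168.1.114:80,192.168.3.78:80"
# into bare IPs, one per line, sorted.
endpoint_ips() {
  printf '%s\n' "$1" | tr ',' '\n' | sed 's/:[0-9]*$//' | sort
}

# Succeed only if the endpoint IPs (first argument) and the pod IPs
# (remaining arguments) are the same set.
endpoints_match_pods() {
  local endpoints="$1"
  shift
  [ "$(endpoint_ips "$endpoints")" = "$(printf '%s\n' "$@" | sort)" ]
}

# Example (needs the cluster, not executed here):
#   endpoints_match_pods \
#     "$(kubectl get ep service-2048 -n game-2048 --no-headers | awk '{print $2}')" \
#     $(kubectl get pod -n game-2048 -o jsonpath='{.items[*].status.podIP}')
```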

- Access the app through the Ingress
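The ALB behind the Ingress can take a few minutes to become reachable after its ADDRESS appears. Instead of retrying by hand, a generic polling helper can wait for it. A minimal bash sketch; wait_until is a hypothetical name, and the curl usage in the comment assumes the app answers plain HTTP on port 80, as the PORTS column above shows.

```shell
#!/usr/bin/env bash
# Hypothetical helper: re-run a command until it succeeds or a timeout expires.
#   wait_until <timeout_seconds> <interval_seconds> <command> [args...]
# Returns 0 as soon as the command exits 0, or 1 once the timeout has passed.
wait_until() {
  local timeout="$1" interval="$2"
  shift 2
  local start=$SECONDS
  until "$@"; do
    if (( SECONDS - start >= timeout )); then
      echo "timed out after ${timeout}s: $*" >&2
      return 1
    fi
    sleep "$interval"
  done
  return 0
}

# Example (needs the cluster, not executed here):
#   ALB=$(kubectl get ingress ingress-2048 -n game-2048 \
#     -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
#   wait_until 300 10 curl -sf -o /dev/null "http://$ALB"
```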

- Scale up (increase the replica count)

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# kubectl scale deployment -n game-2048 deployment-2048 --replicas 3

deployment.apps/deployment-2048 scaled

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# kubectl get pod -n game-2048 -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deployment-2048-75db5866dd-h5rkq 1/1 Running 0 6m7s 192.168.1.114 ip-192-168-1-127.ap-northeast-2.compute.internal <none> <none>

deployment-2048-75db5866dd-n9wxg 1/1 Running 0 4s 192.168.2.93 ip-192-168-2-136.ap-northeast-2.compute.internal <none> <none>

deployment-2048-75db5866dd-sb7qc 1/1 Running 0 6m7s 192.168.3.78 ip-192-168-3-66.ap-northeast-2.compute.internal <none> <none>
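After scaling to three replicas, the new pod landed on a third node (ip-192-168-2-136). To count pods per node from the -owide output without reading it line by line, the sketch below (bash and awk; pods_per_node is a hypothetical name) takes the node name as the third-from-last column, assuming the default wide layout where NOMINATED NODE and READINESS GATES are the last two columns. That choice keeps working when RESTARTS contains extra words such as "1 (2m ago)".

```shell
#!/usr/bin/env bash
# Hypothetical helper: count pods per node from `kubectl get pod -o wide` output on stdin.
# The header line is skipped. NODE is taken as the third-from-last column (the one
# before NOMINATED NODE and READINESS GATES), so a RESTARTS value like "1 (2m ago)"
# does not shift it.
pods_per_node() {
  awk 'NR > 1 { n[$(NF - 2)]++ } END { for (node in n) print n[node], node }' | sort -k2
}

# Example (needs the cluster, not executed here):
#   kubectl get pod -n game-2048 -o wide | pods_per_node
```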

# Delete the resources

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# kubectl delete ingress ingress-2048 -n game-2048

ingress.networking.k8s.io "ingress-2048" deleted

(leeeuijoo@myeks:N/A) [root@myeks-bastion-EC2 ~]# kubectl delete svc service-2048 -n game-2048 && kubectl delete deploy deployment-2048 -n game-2048 && kubectl delete ns game-2048

service "service-2048" deleted

deployment.apps "deployment-2048" deleted

namespace "game-2048" deleted

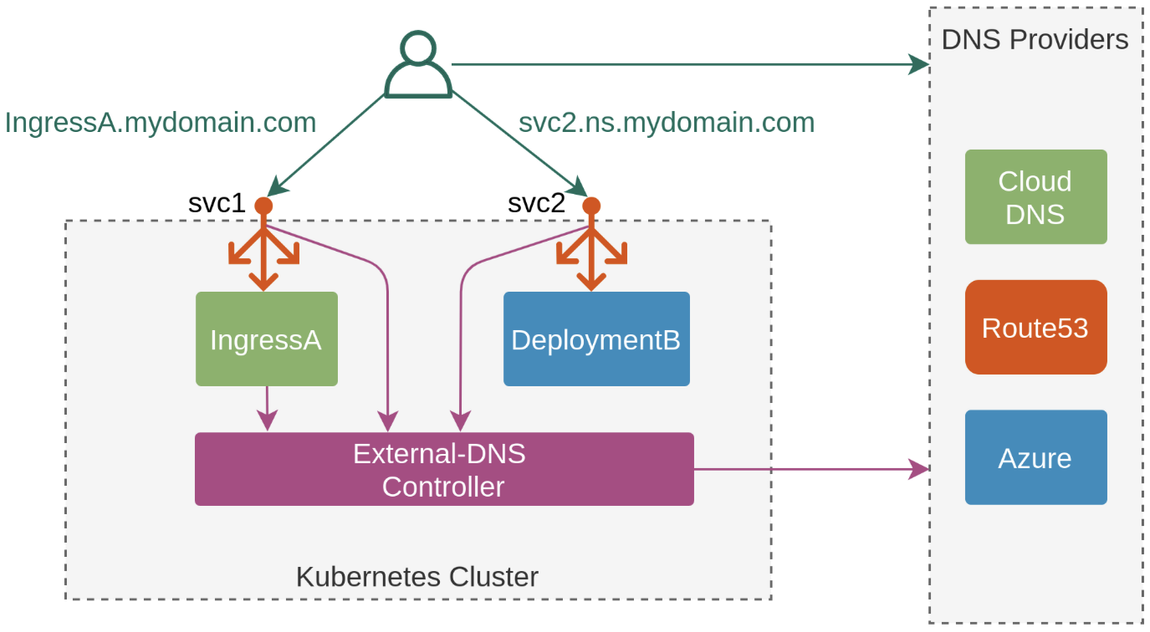

8. External DNS

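- When a domain is set while creating a K8s Service/Ingress, ExternalDNS can automatically create and delete the matching A records (along with TXT records) in AWS (Route 53), Azure (DNS), or GCP (Cloud DNS).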

- There are three ways to grant the ExternalDNS controller its permissions:

  - Node IAM Role

  - Static credentials

  - IRSA (IAM Roles for Service Accounts)
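With static credentials or IRSA, the identity ExternalDNS uses needs a Route 53 policy of its own. The sketch below prints one, modeled on the policy in the ExternalDNS AWS tutorial but narrowed to a single hosted zone; treat the exact action list as an assumption to check against the ExternalDNS version you deploy. externaldns_policy and the policy name in the comment are hypothetical, and this lab itself relies on the Node IAM Role instead.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an IAM policy document that lets ExternalDNS
# manage records in a single Route 53 hosted zone.
#   externaldns_policy <hosted zone ID, with or without the "/hostedzone/" prefix>
externaldns_policy() {
  local zone_id="${1#/hostedzone/}"   # the ARN needs the bare ID
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["route53:ChangeResourceRecordSets"],
      "Resource": ["arn:aws:route53:::hostedzone/${zone_id}"]
    },
    {
      "Effect": "Allow",
      "Action": [
        "route53:ListHostedZones",
        "route53:ListResourceRecordSets",
        "route53:ListTagsForResource"
      ],
      "Resource": ["*"]
    }
  ]
}
EOF
}

# Example (needs IAM permissions, not executed here; policy name is hypothetical):
#   aws iam create-policy --policy-name AllowExternalDNSUpdates \
#     --policy-document "$(externaldns_policy "$MyDnzHostedZoneId")"
```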

- Check the AWS Route 53 information and set variables (if you own a public domain)

# Set a variable for your own domain

MyDomain=euijoo.store

echo "export MyDomain=euijoo.store" >> /etc/profile

# Look up your Route 53 hosted zone ID and store it in a variable

aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." | jq

aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Name"

aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text

MyDnzHostedZoneId=`aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text`

echo $MyDnzHostedZoneId
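Two details of this lookup are easy to miss: the Id comes back with a /hostedzone/ prefix, and list-hosted-zones-by-name returns zones in name order starting at --dns-name, so if no zone exists for your domain, HostedZones[0] quietly refers to a different zone. The bash sketch below (zone_id_for_domain is a hypothetical helper) checks the name before trusting the ID and prints the bare form that IAM ARNs need. It is only a check: MyDnzHostedZoneId can stay as the original commands set it, since externaldns.yaml is rendered from that value later.

```shell
#!/usr/bin/env bash
# Hypothetical helper: validate the first hosted zone returned for a domain and
# print its bare ID (without the "/hostedzone/" prefix).
#   zone_id_for_domain <domain> <HostedZones[0].Name> <HostedZones[0].Id>
zone_id_for_domain() {
  local domain="${1%.}" zone_name="$2" zone_id="$3"
  if [ "$zone_name" != "$domain." ]; then
    echo "first hosted zone is '$zone_name', not '$domain.'" >&2
    return 1
  fi
  printf '%s\n' "${zone_id#/hostedzone/}"
}

# Example (needs AWS credentials, not executed here):
#   read -r name id < <(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." \
#     --query "HostedZones[0].[Name,Id]" --output text)
#   zone_id_for_domain "$MyDomain" "$name" "$id"
```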

# (Optional) Show the values of the first NS record

aws route53 list-resource-record-sets --output json --hosted-zone-id "${MyDnzHostedZoneId}" --query "ResourceRecordSets[?Type == 'NS']" | jq -r '.[0].ResourceRecords[].Value'

# (Optional) List all A records

aws route53 list-resource-record-sets --output json --hosted-zone-id "${MyDnzHostedZoneId}" --query "ResourceRecordSets[?Type == 'A']"

# Query the A records

aws route53 list-resource-record-sets --hosted-zone-id "${MyDnzHostedZoneId}" --query "ResourceRecordSets[?Type == 'A']" | jq

aws route53 list-resource-record-sets --hosted-zone-id "${MyDnzHostedZoneId}" --query "ResourceRecordSets[?Type == 'A'].Name" | jq

aws route53 list-resource-record-sets --hosted-zone-id "${MyDnzHostedZoneId}" --query "ResourceRecordSets[?Type == 'A'].Name" --output text

# Poll the A record values repeatedly (once per second)

while true; do aws route53 list-resource-record-sets --hosted-zone-id "${MyDnzHostedZoneId}" --query "ResourceRecordSets[?Type == 'A']" | jq ; date ; echo ; sleep 1; done

- Configure ExternalDNS

- The permissions were already set on the Node IAM Role during the earlier EKS one-click deployment:

eksctl create cluster --name $CLUSTER_NAME --... --with-oidc --external-dns-access --full-ecr-access --dry-run > myeks.yaml

- For a zone that already contains A/TXT records created through ExternalDNS, it is recommended to set the policy to upsert-only before using it.

  - This prevents existing records from being deleted.
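ExternalDNS selects this behavior with its --policy flag: sync (the default) also deletes records it owns, while upsert-only only creates and updates them. The sketch below (bash; ensure_upsert_only is a hypothetical helper) rewrites an active "- --policy=..." argument in a downloaded manifest such as externaldns.yaml. It deliberately refuses to act when the argument is missing or commented out, since adding a new line at the right YAML indentation is safer to do by hand.

```shell
#!/usr/bin/env bash
# Hypothetical helper: switch an active "- --policy=<value>" argument in an
# ExternalDNS manifest to upsert-only (create/update records, never delete them).
# A backup is written to <manifest>.bak. Missing or commented-out arguments are only
# reported, not added, so the YAML indentation is left to a human.
ensure_upsert_only() {
  local manifest="$1"
  if ! grep -Eq '^[[:space:]]*- --policy=' "$manifest"; then
    echo "no active --policy argument in $manifest (ExternalDNS defaults to sync)" >&2
    return 1
  fi
  sed -i.bak -E 's/^([[:space:]]*- --policy=)[a-z-]+/\1upsert-only/' "$manifest"
  grep -E '^[[:space:]]*- --policy=' "$manifest"   # show the resulting line
}

# Example, before the envsubst | kubectl apply step (not executed here):
#   ensure_upsert_only externaldns.yaml
```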


MyDomain=euijoo.store

# Look up your Route 53 hosted zone ID and store it in a variable

MyDnzHostedZoneId=$(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text)

# Check the variables

echo $MyDomain, $MyDnzHostedZoneId

# Deploy ExternalDNS

curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/aews/externaldns.yaml

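# Bump the ExternalDNS image version from 0.13.4 to 0.14.0 (dots escaped so they match literally)

sed -i 's/0\.13\.4/0.14.0/g' externaldns.yaml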

cat externaldns.yaml | yh

MyDomain=$MyDomain MyDnzHostedZoneId=$MyDnzHostedZoneId envsubst < externaldns.yaml | kubectl apply -f -
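envsubst replaces an unset variable with an empty string rather than failing, so a forgotten export silently produces a manifest with, for example, an empty domain. A minimal bash sketch of a guard (require_values_in and the rendered file name are hypothetical) that checks each expected value is non-empty and actually present in the rendered output before it is applied; the usage lines assume externaldns.yaml references both variables, as the envsubst command above implies.

```shell
#!/usr/bin/env bash
# Hypothetical guard: read a rendered manifest on stdin and fail if any expected
# value is empty or does not appear in it.
#   require_values_in <value> [value...] < rendered.yaml
require_values_in() {
  local rendered value
  rendered=$(cat)
  for value in "$@"; do
    if [ -z "$value" ]; then
      echo "empty value passed; was the variable exported?" >&2
      return 1
    fi
    case "$rendered" in
      *"$value"*) ;;   # value found, keep checking
      *) echo "value not found in rendered manifest: $value" >&2; return 1 ;;
    esac
  done
}

# Example (not executed here):
#   MyDomain=$MyDomain MyDnzHostedZoneId=$MyDnzHostedZoneId envsubst < externaldns.yaml > externaldns.rendered.yaml
#   require_values_in "$MyDomain" "$MyDnzHostedZoneId" < externaldns.rendered.yaml \
#     && kubectl apply -f externaldns.rendered.yaml
```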

# Verify the pod and follow its logs

kubectl get pod -l app.kubernetes.io/name=external-dns -n kube-system

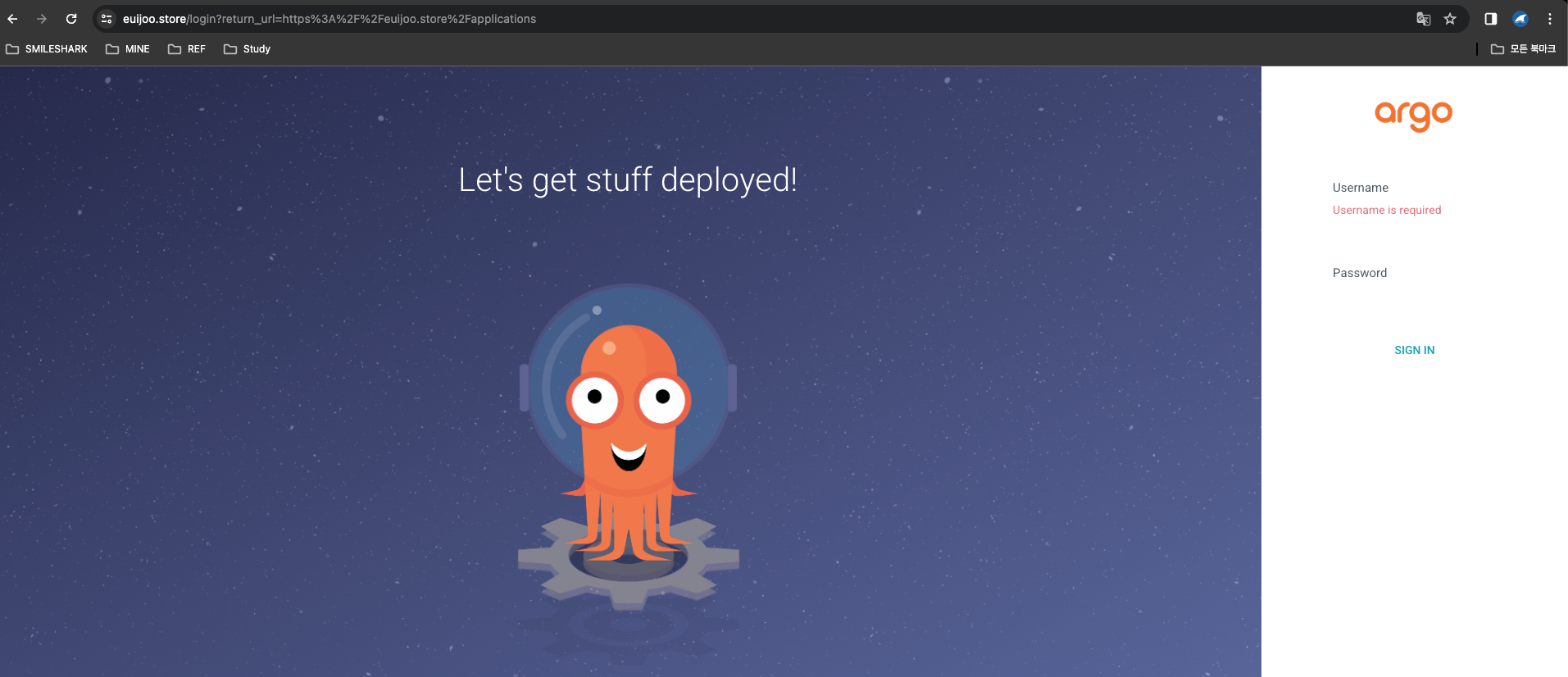

kubectl logs deploy/external-dns -n kube-system -f

- Example with ExternalDNS configured

  - Applied to ArgoCD
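ExternalDNS picks up names from the external-dns.alpha.kubernetes.io/hostname annotation on a Service (or the host rules of an Ingress), but when it runs with --domain-filter it ignores names outside that domain. A small bash sketch (in_domain is a hypothetical helper) to check a hostname against the domain before annotating; the argocd-server service in the argocd namespace in the usage comment is an assumption based on a default Argo CD install.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a hostname equals a domain or lies inside it,
# mirroring how a --domain-filter restricts which names ExternalDNS manages.
# Trailing dots are ignored; "evilexample.com" is NOT inside "example.com".
in_domain() {
  local host="${1%.}" domain="${2%.}"
  [ "$host" = "$domain" ] || [ "${host%."$domain"}" != "$host" ]
}

# Example (needs the cluster, not executed here):
#   in_domain "argocd.$MyDomain" "$MyDomain" && \
#     kubectl annotate service argocd-server -n argocd \
#       "external-dns.alpha.kubernetes.io/hostname=argocd.$MyDomain"
```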

Delete the lab resources

eksctl delete cluster --name $CLUSTER_NAME && aws cloudformation delete-stack --stack-name $CLUSTER_NAME