0. Deploying the lab environment

- Unlike the previous labs, this environment has two bastion hosts.

- The scenario assumes there are two DevOps engineers.

# Download the YAML file

curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/K8S/eks-oneclick5.yaml

# Deploy the CloudFormation stack (example)

aws cloudformation deploy --template-file eks-oneclick5.yaml --stack-name myeks --parameter-overrides KeyName=kp-gasida SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32 MyIamUserAccessKeyID=AKIA5... MyIamUserSecretAccessKey='CVNa2...' ClusterBaseName=myeks --region ap-northeast-2

# After the stack deployment completes, print the IP of the working EC2 instance

aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text

# SSH into the working EC2 instance

ssh -i ./ejl-eks.pem ec2-user@$(aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text --profile ejl-personal)

# PC for DevOps engineer 2

ssh -i ./ejl-eks.pem root@<Public IP>

- Basic setup

# Switch to the default namespace (kubectl ns is the krew "ns" plugin)

kubectl ns default

# Check node information: t3.medium

kubectl get node --label-columns=node.kubernetes.io/instance-type,eks.amazonaws.com/capacityType,topology.kubernetes.io/zone

# ExternalDNS

MyDomain=22joo.shop

echo "export MyDomain=22joo.shop" >> /etc/profile

MyDnzHostedZoneId=$(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text)

echo $MyDomain, $MyDnzHostedZoneId

curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/aews/externaldns.yaml

MyDomain=$MyDomain MyDnzHostedZoneId=$MyDnzHostedZoneId envsubst < externaldns.yaml | kubectl apply -f -

# kube-ops-view

helm repo add geek-cookbook https://geek-cookbook.github.io/charts/

helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set env.TZ="Asia/Seoul" --namespace kube-system

kubectl patch svc -n kube-system kube-ops-view -p '{"spec":{"type":"LoadBalancer"}}'

kubectl annotate service kube-ops-view -n kube-system "external-dns.alpha.kubernetes.io/hostname=kubeopsview.$MyDomain"

echo -e "Kube Ops View URL = http://kubeopsview.$MyDomain:8080/#scale=1.5"

# AWS LB Controller

helm repo add eks https://aws.github.io/eks-charts

helm repo update

helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=$CLUSTER_NAME \
  --set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller

# Create the gp3 StorageClass

kubectl apply -f https://raw.githubusercontent.com/gasida/PKOS/main/aews/gp3-sc.yaml

# Look up the node IPs and store the private IPs in variables

N1=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2a -o jsonpath='{.items[0].status.addresses[0].address}')

N2=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2b -o jsonpath='{.items[0].status.addresses[0].address}')

N3=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2c -o jsonpath='{.items[0].status.addresses[0].address}')

echo "export N1=$N1" >> /etc/profile

echo "export N2=$N2" >> /etc/profile

echo "export N3=$N3" >> /etc/profile

echo $N1, $N2, $N3

# Look up the node security group ID, then allow traffic from the two bastion hosts

NGSGID=$(aws ec2 describe-security-groups --filters "Name=group-name,Values=*ng1*" --query "SecurityGroups[*].[GroupId]" --output text)

aws ec2 authorize-security-group-ingress --group-id $NGSGID --protocol '-1' --cidr 192.168.1.100/32

aws ec2 authorize-security-group-ingress --group-id $NGSGID --protocol '-1' --cidr 192.168.1.200/32

# SSH into the worker nodes

for node in $N1 $N2 $N3; do ssh -o StrictHostKeyChecking=no ec2-user@$node hostname; done

for node in $N1 $N2 $N3; do ssh ec2-user@$node hostname; done

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# for node in $N1 $N2 $N3; do ssh ec2-user@$node hostname; done

ip-192-168-1-231.ap-northeast-2.compute.internal

ip-192-168-2-179.ap-northeast-2.compute.internal

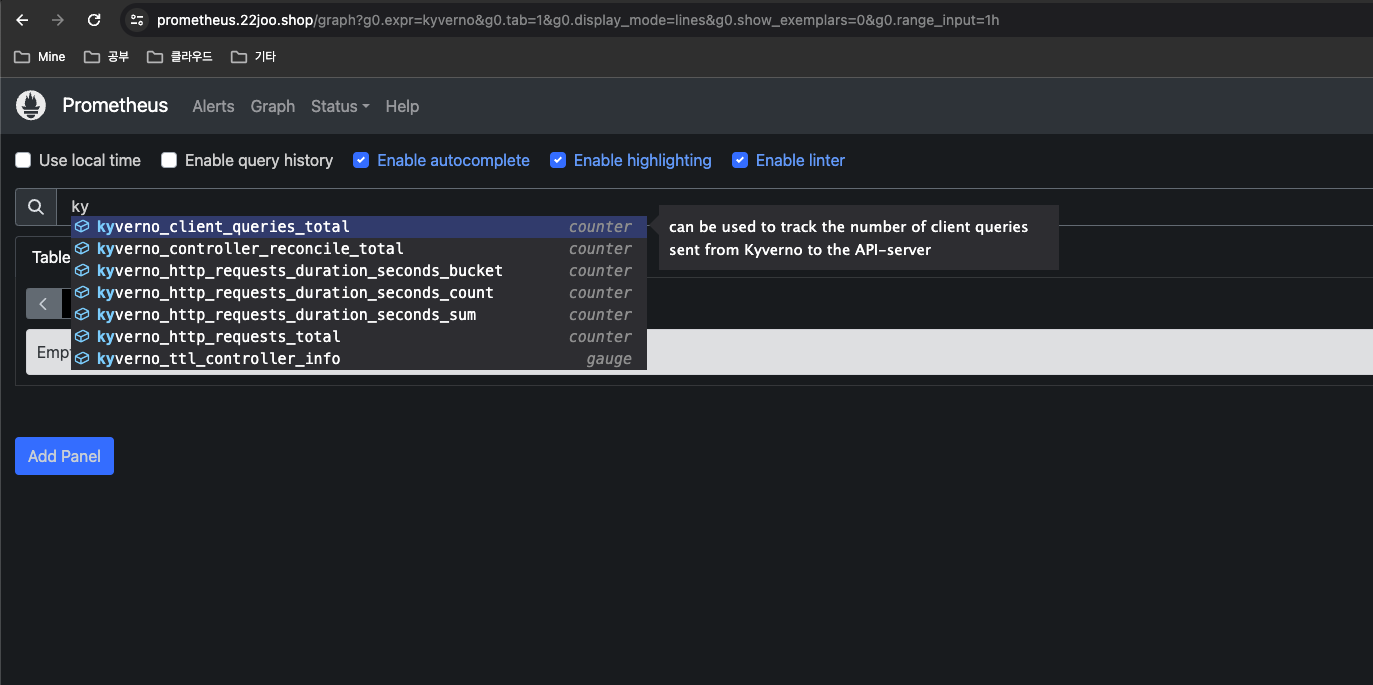

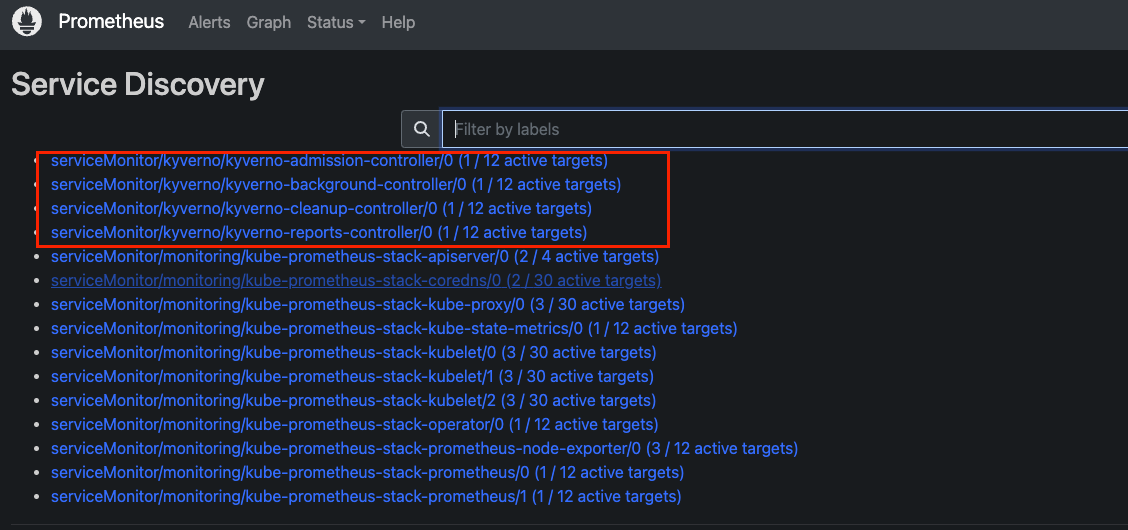

ip-192-168-3-12.ap-northeast-2.compute.internal- 프로메테우스 & 그라파나(admin / prom-operator) 설치

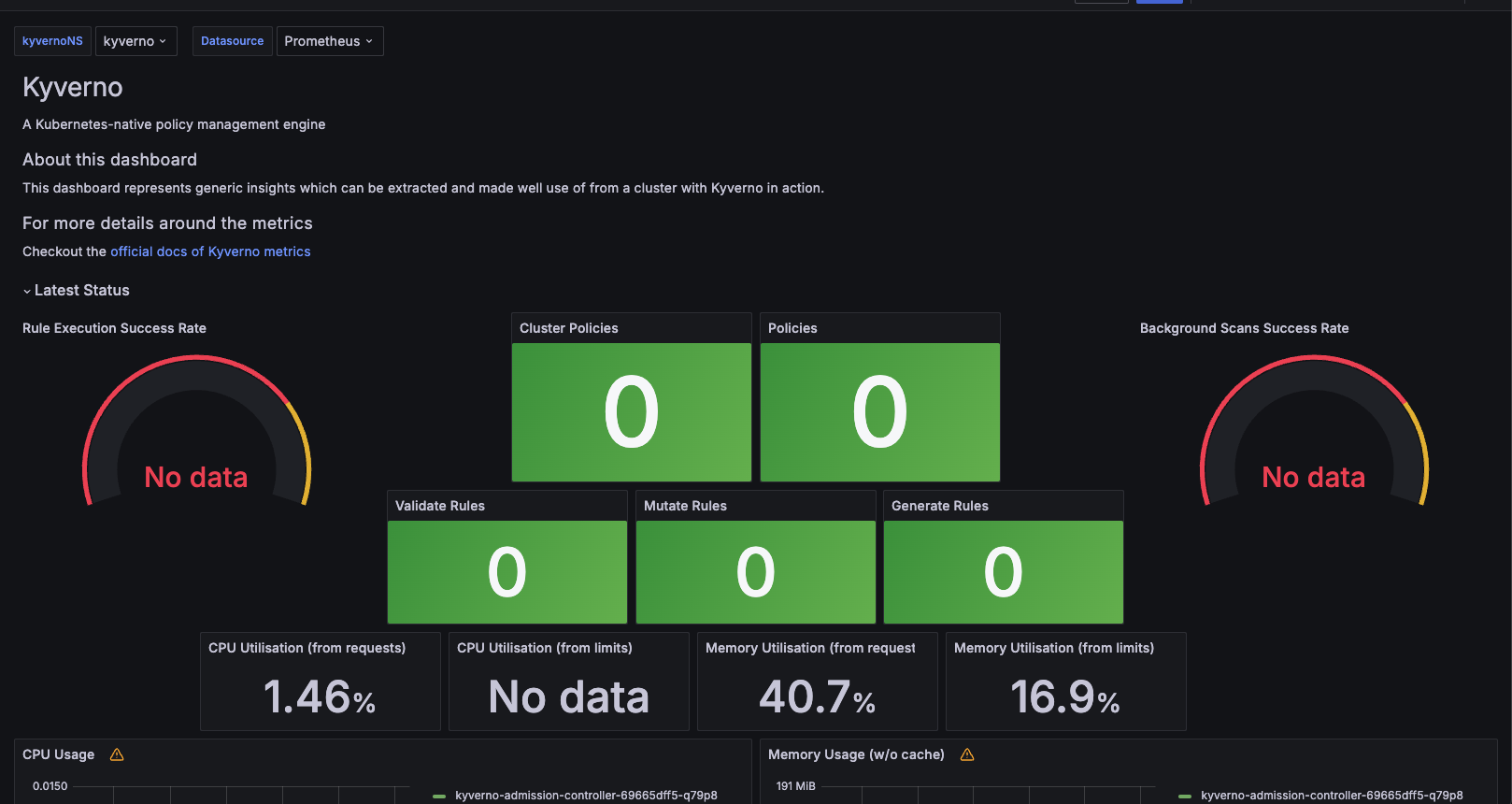

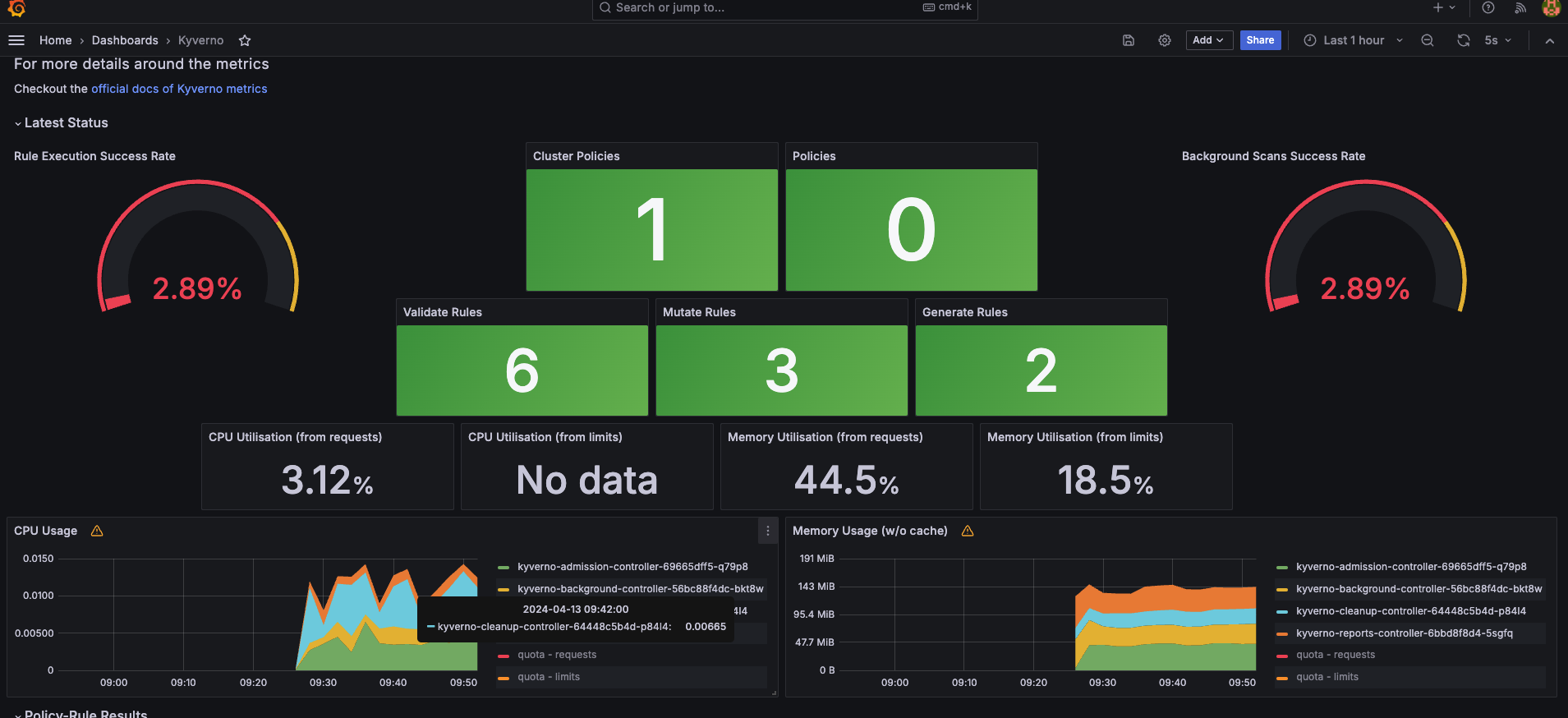

- Recommended Grafana dashboard IDs: 15757 17900 15172

# Look up the certificate ARN in the region in use

CERT_ARN=$(aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text)

echo $CERT_ARN

# Add the repo

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

# Create the values file: PV/PVC (AWS EBS) volumes are a hassle to delete, so unlike the week 4 lab they are not used

cat <<EOT > monitor-values.yaml
prometheus:
  prometheusSpec:
    podMonitorSelectorNilUsesHelmValues: false
    serviceMonitorSelectorNilUsesHelmValues: false
    retention: 5d
    retentionSize: "10GiB"

  ingress:
    enabled: true
    ingressClassName: alb
    hosts:
      - prometheus.$MyDomain
    paths:
      - /*
    annotations:
      alb.ingress.kubernetes.io/scheme: internet-facing
      alb.ingress.kubernetes.io/target-type: ip
      alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
      alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
      alb.ingress.kubernetes.io/success-codes: 200-399
      alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
      alb.ingress.kubernetes.io/group.name: study
      alb.ingress.kubernetes.io/ssl-redirect: '443'

grafana:
  defaultDashboardsTimezone: Asia/Seoul
  adminPassword: prom-operator
  defaultDashboardsEnabled: false

  ingress:
    enabled: true
    ingressClassName: alb
    hosts:
      - grafana.$MyDomain
    paths:
      - /*
    annotations:
      alb.ingress.kubernetes.io/scheme: internet-facing
      alb.ingress.kubernetes.io/target-type: ip
      alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
      alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
      alb.ingress.kubernetes.io/success-codes: 200-399
      alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
      alb.ingress.kubernetes.io/group.name: study
      alb.ingress.kubernetes.io/ssl-redirect: '443'

alertmanager:
  enabled: false
EOT

cat monitor-values.yaml | yh

# Deploy

kubectl create ns monitoring

helm install kube-prometheus-stack prometheus-community/kube-prometheus-stack --version 57.2.0 \
  --set prometheus.prometheusSpec.scrapeInterval='15s' --set prometheus.prometheusSpec.evaluationInterval='15s' \
  -f monitor-values.yaml --namespace monitoring

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# k get pods -n monitoring

NAME READY STATUS RESTARTS AGE

kube-prometheus-stack-grafana-64854dfdc4-nbfrz 3/3 Running 0 38s

kube-prometheus-stack-kube-state-metrics-5c6549bfd5-q5klr 1/1 Running 0 38s

kube-prometheus-stack-operator-76bf64f57d-rjb5p 1/1 Running 0 38s

kube-prometheus-stack-prometheus-node-exporter-6rfwm 1/1 Running 0 38s

kube-prometheus-stack-prometheus-node-exporter-m74dv 1/1 Running 0 38s

kube-prometheus-stack-prometheus-node-exporter-qhrlm 1/1 Running 0 38s

prometheus-kube-prometheus-stack-prometheus-0 2/2 Running 0 32s

# Deploy metrics-server

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

# Open Prometheus in a browser through its ingress domain

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# echo -e "Prometheus Web URL = https://prometheus.$MyDomain"

Prometheus Web URL = https://prometheus.22joo.shop

# Open Grafana in a browser: default account - admin / prom-operator

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# echo -e "Grafana Web URL = https://grafana.$MyDomain"

Grafana Web URL = https://grafana.22joo.shop

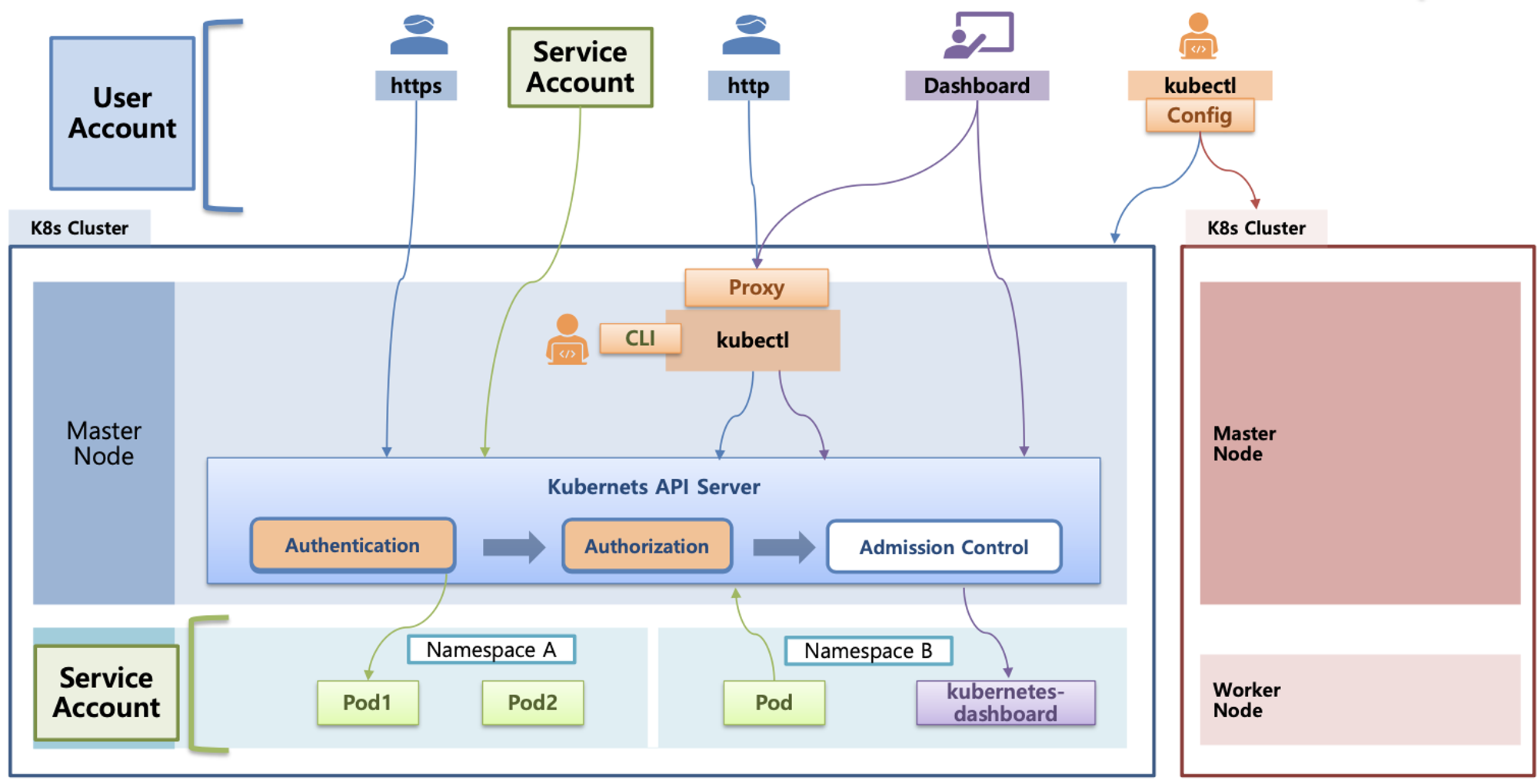

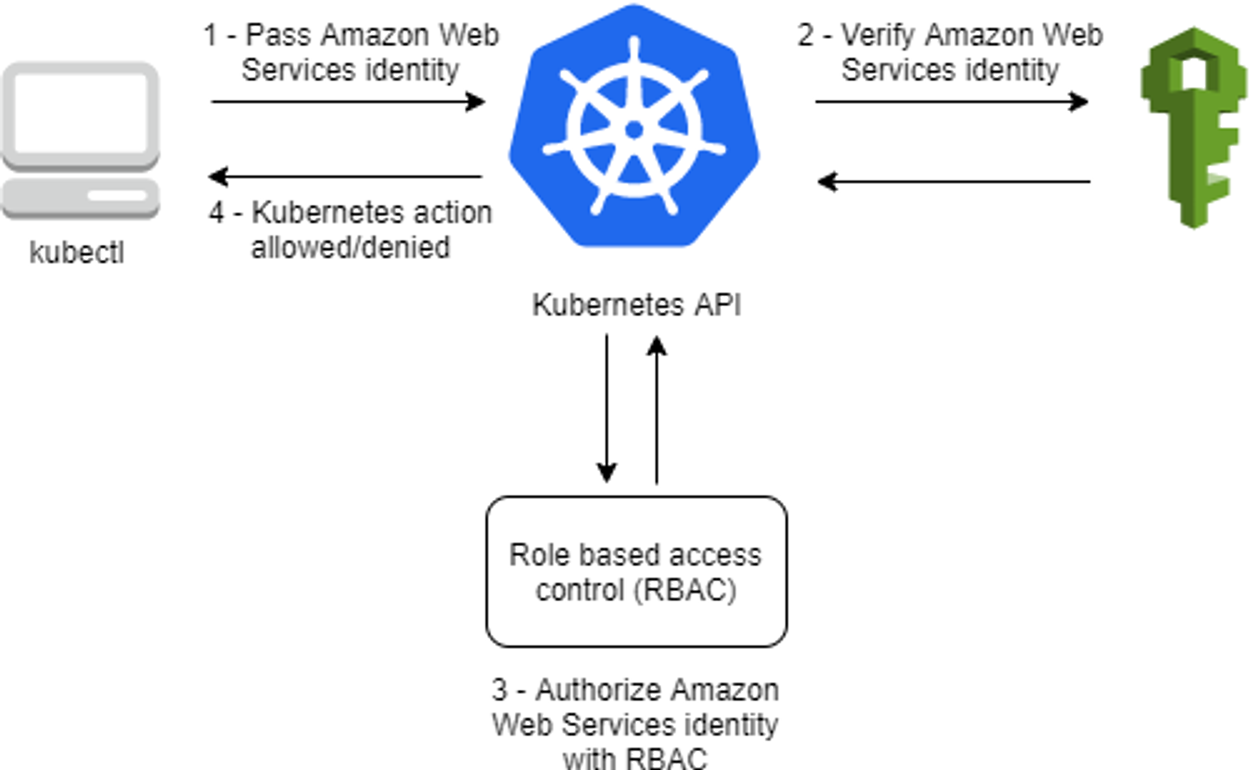

1. Authentication & authorization in k8s

- Service Account

- Ways to use the API server: kubectl (config, can manage multiple clusters), service accounts, https (X.509 client certs)

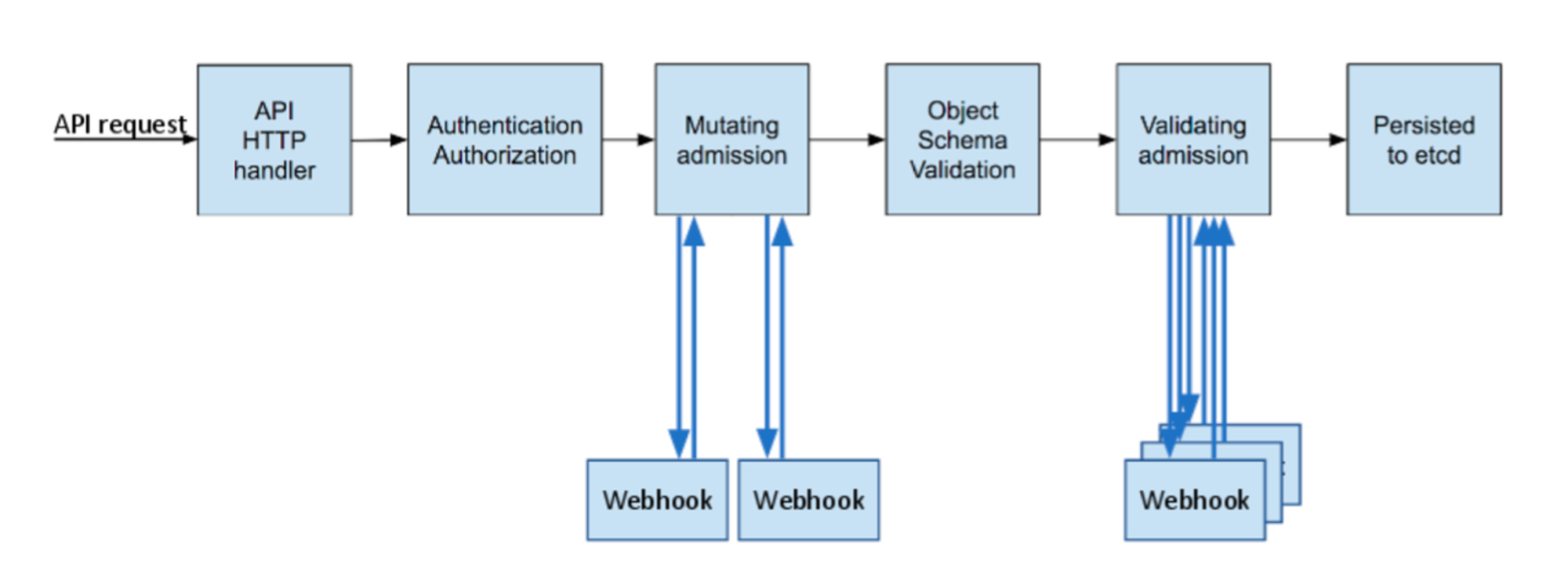

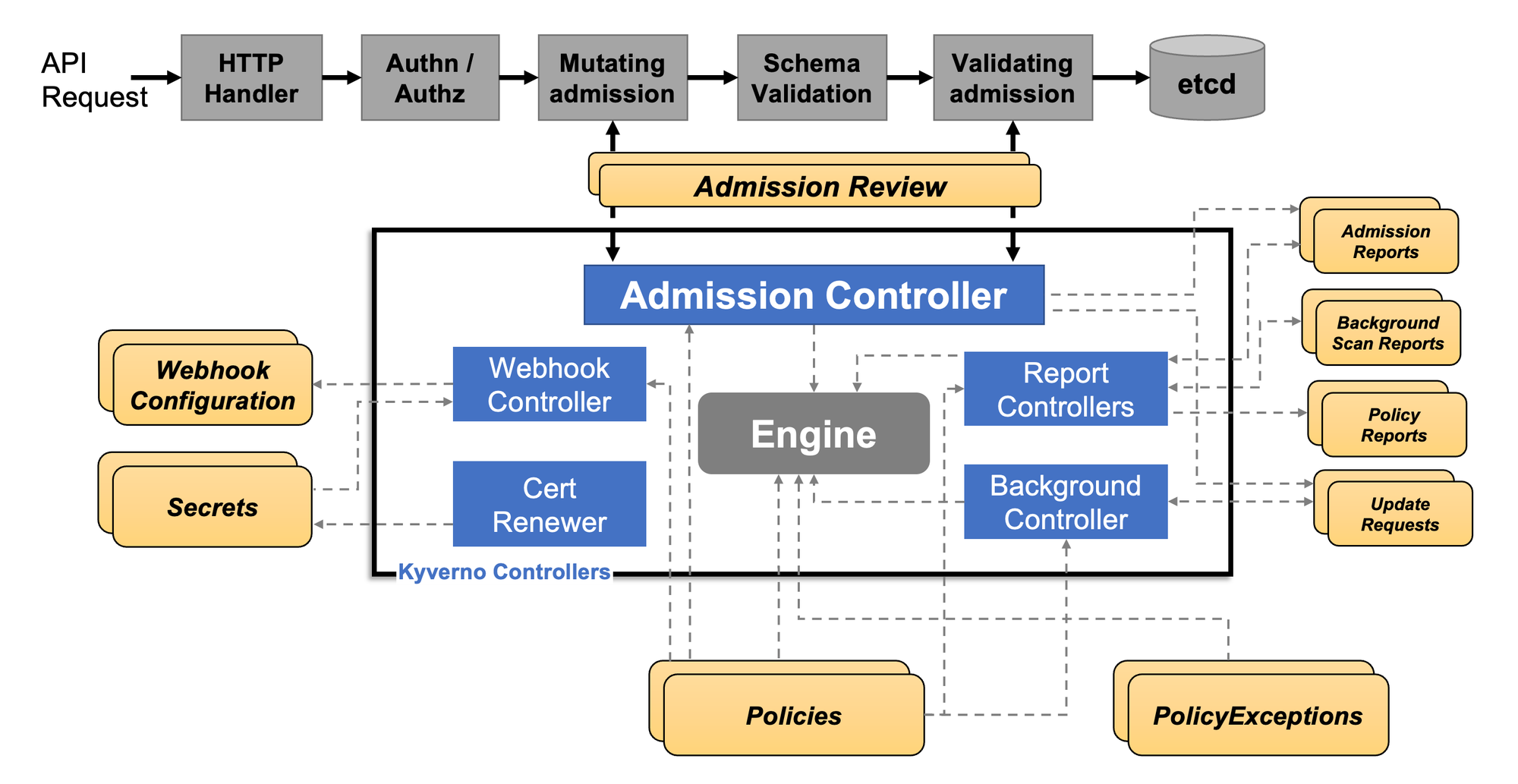

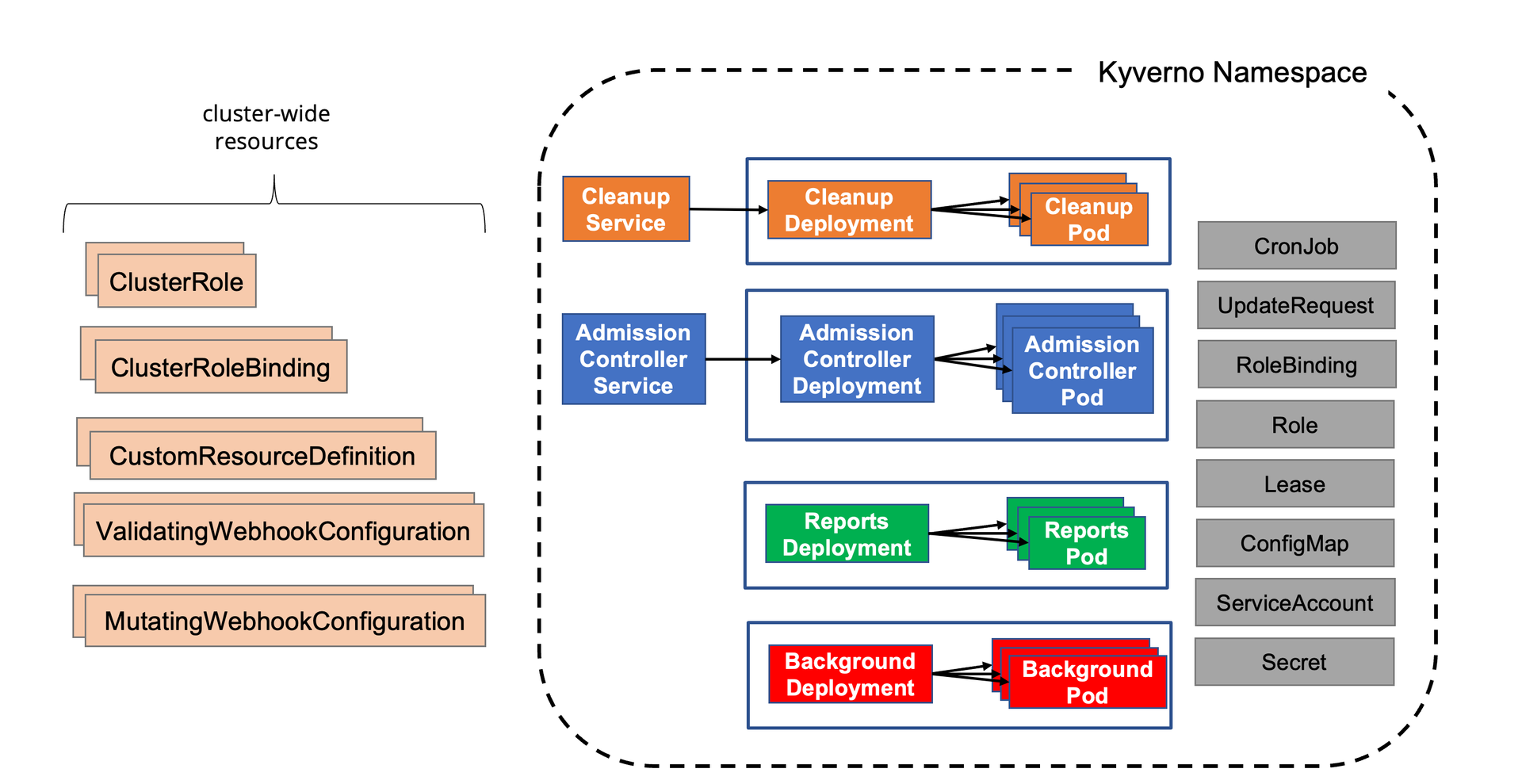

- API server access flow: authentication → authorization → admission control (validates API requests and mutates them when needed, e.g. ResourceQuota, LimitRange)

Authentication

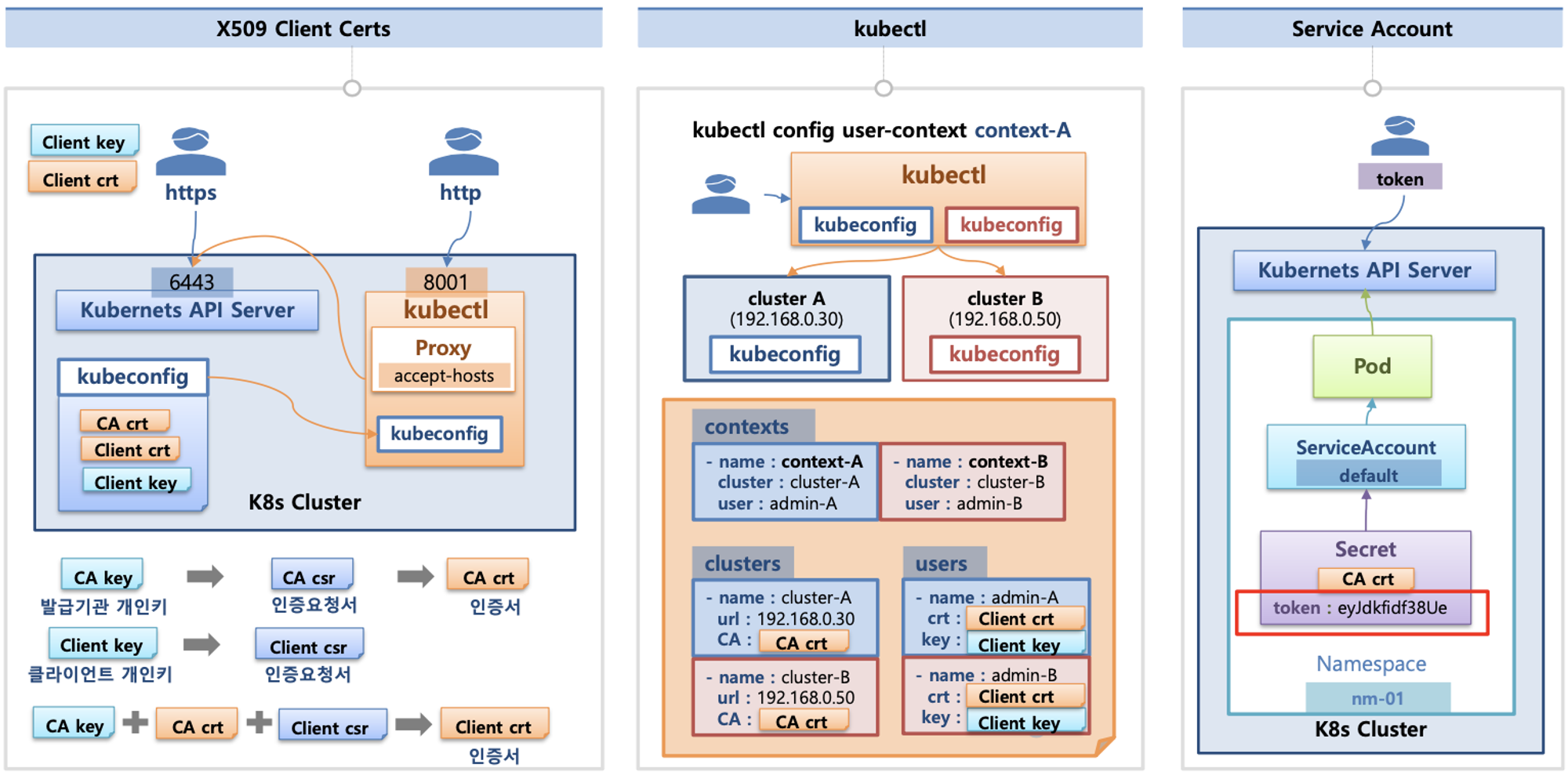

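- X.509 client certs: authentication uses the CA crt (certificate of the issuing authority), client crt (client certificate), and client key (client private key) stored in kubeconfig

- kubectl: can manage multiple clusters through kubeconfig; each entry under contexts references a cluster, a user, and that user's certificate/key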

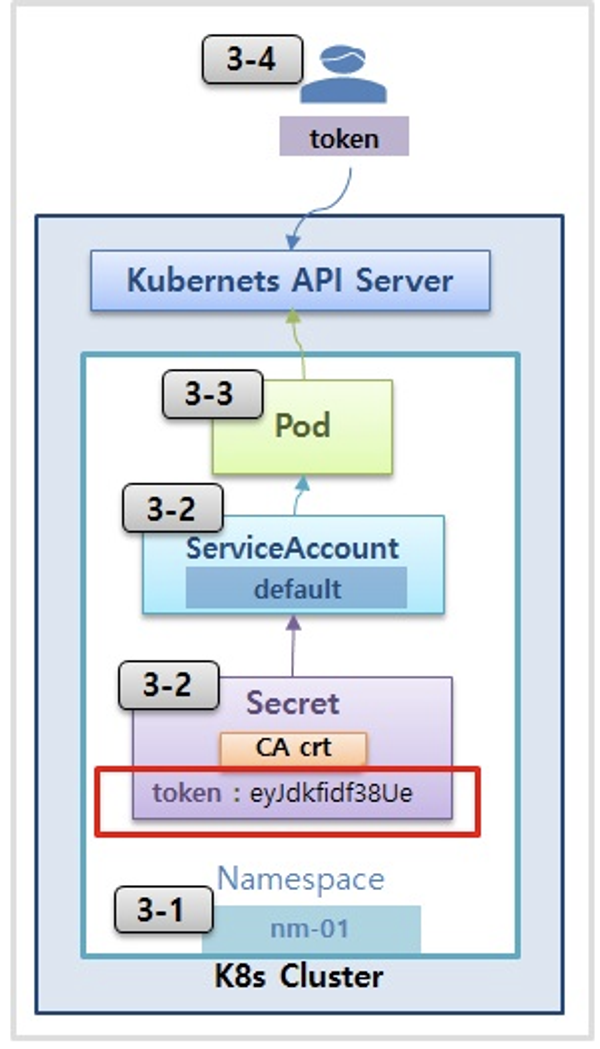

- Service Account: the default service account (default) and its secret (CA crt and token)

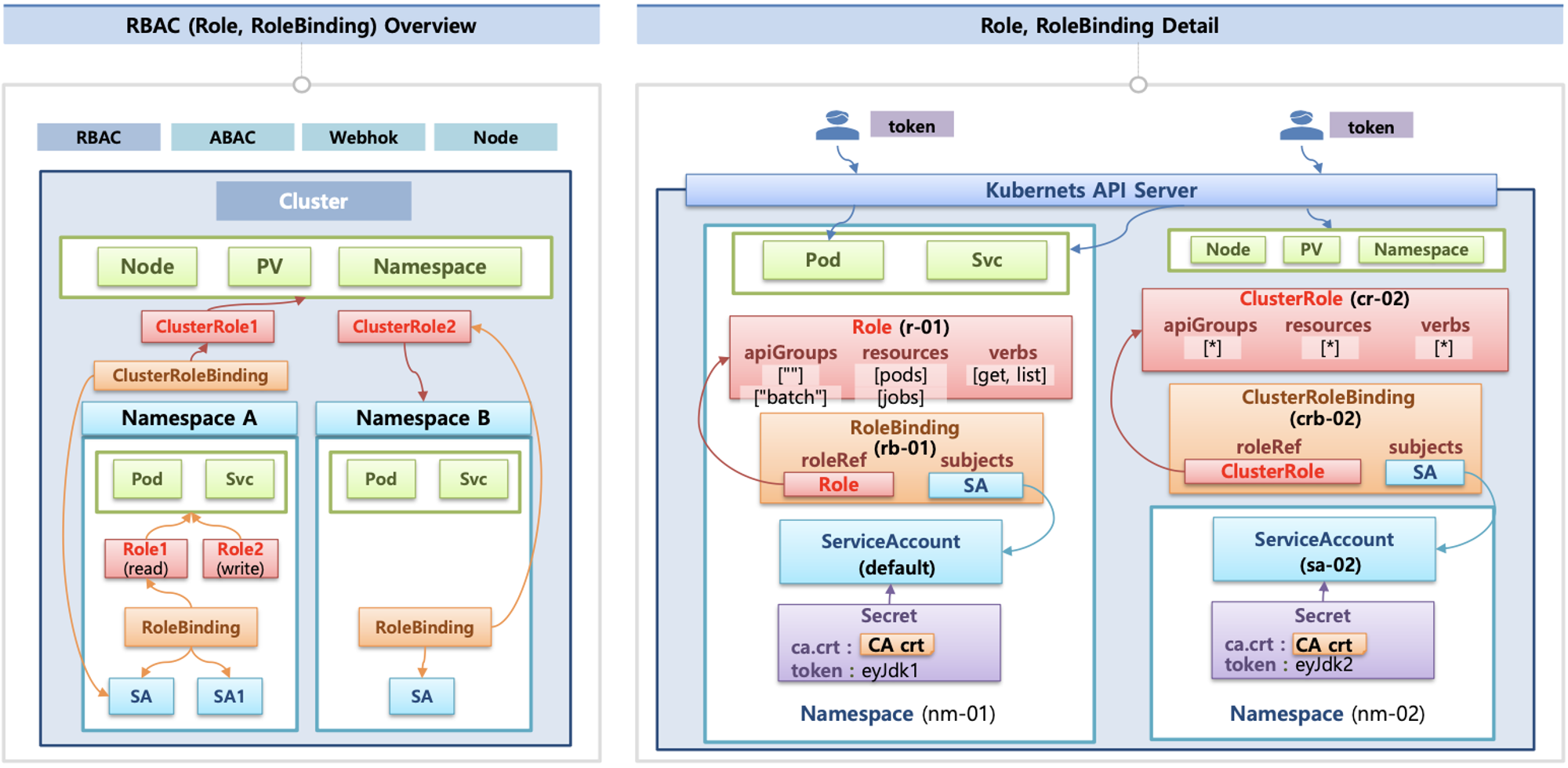

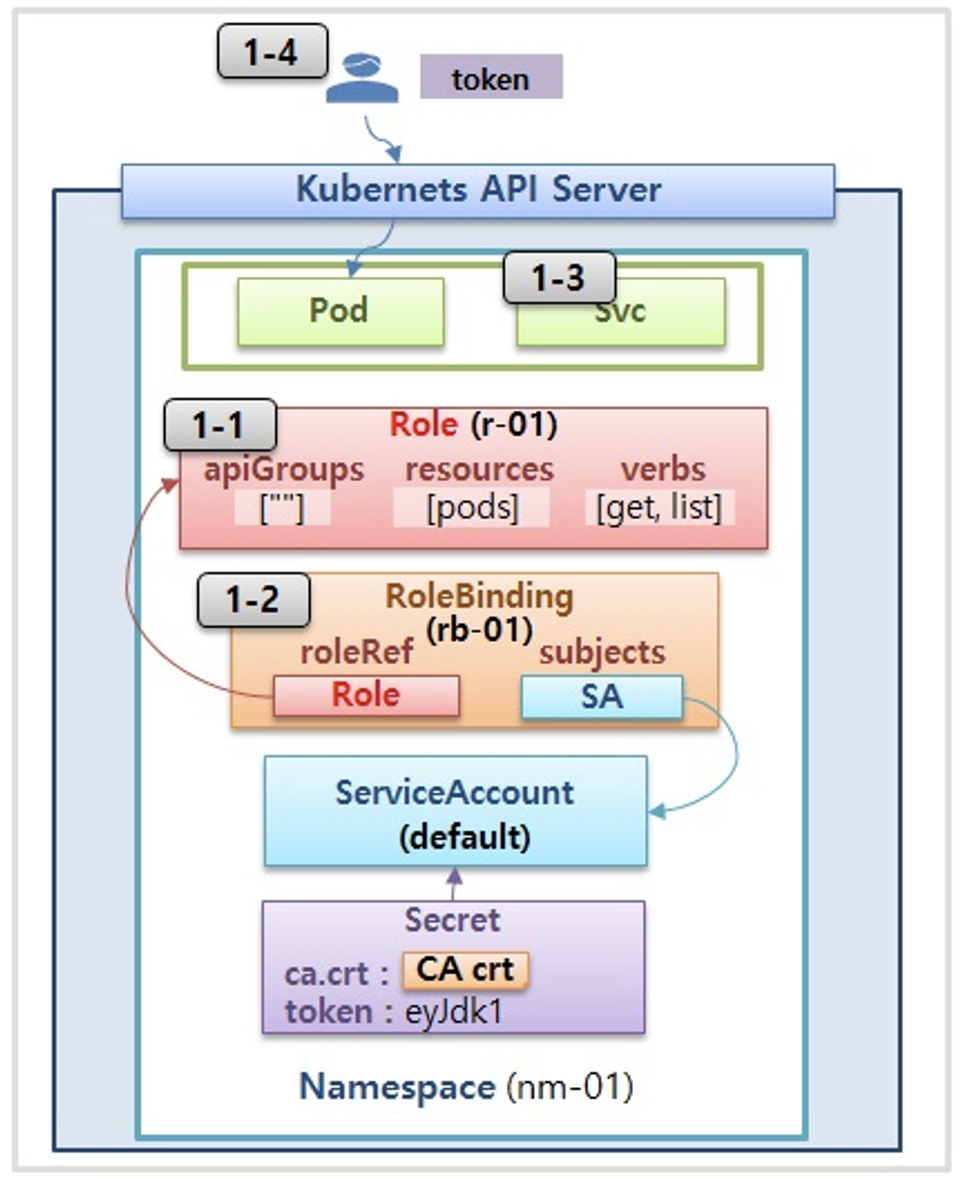

Authorization

- Authorization modes: RBAC (Role, RoleBinding), ABAC, Webhook, Node Authorization

- RBAC (how do you pronounce it?): role-based access control. Users and roles are declared separately and then combined (bound) to grant permissions to the user; it can be managed with kubectl or the API

- Namespace/Cluster - Role/ClusterRole, RoleBinding/ClusterRoleBinding, Service Account

- Role - (RoleBinding) - Service Account: a RoleBinding connects a Role to a Service Account

- Role (permissions on resources within a namespace) vs ClusterRole (permissions on cluster-scoped resources)

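Contents of the .kube/config file

- clusters: the list of connection details for the Kubernetes API servers that kubectl can use. You can add the address of a remote Kubernetes API server here.

- users: the list of credentials used to authenticate to the Kubernetes API server (a service account token, certificate data, and so on).

- contexts: combines entries defined under clusters and users to set which Kubernetes cluster is used and as which user (a context).

- For example, if clusters defines clusters A and B and users defines users a and b, you can combine cluster A + user a into a new context meaning "use Kubernetes on cluster A, authenticated as user a".

- To use kubectl, you select one of the available contexts.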
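The relationship between clusters, users, and contexts can be sketched as a minimal kubeconfig. Every name, server URL, and token below is a hypothetical placeholder, not a value from this lab; the function only prints the file so its structure can be inspected.

```shell
# Print a minimal kubeconfig with two clusters, two users, and one context.
# All names, URLs, and tokens are hypothetical placeholders.
render_kubeconfig_sketch() {
  cat <<'EOF'
apiVersion: v1
kind: Config
clusters:
- name: cluster-a
  cluster:
    server: https://cluster-a.example.com
- name: cluster-b
  cluster:
    server: https://cluster-b.example.com
users:
- name: user-a
  user:
    token: REDACTED
- name: user-b
  user:
    token: REDACTED
contexts:
- name: user-a@cluster-a
  context:
    cluster: cluster-a
    user: user-a
current-context: user-a@cluster-a
EOF
}

# Example (requires kubectl):
#   render_kubeconfig_sketch > /tmp/kubeconfig-sketch
#   kubectl --kubeconfig /tmp/kubeconfig-sketch config get-contexts
```

Adding a second context such as user-b@cluster-b and switching with `kubectl config use-context` is how one kubeconfig serves several clusters.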

실습

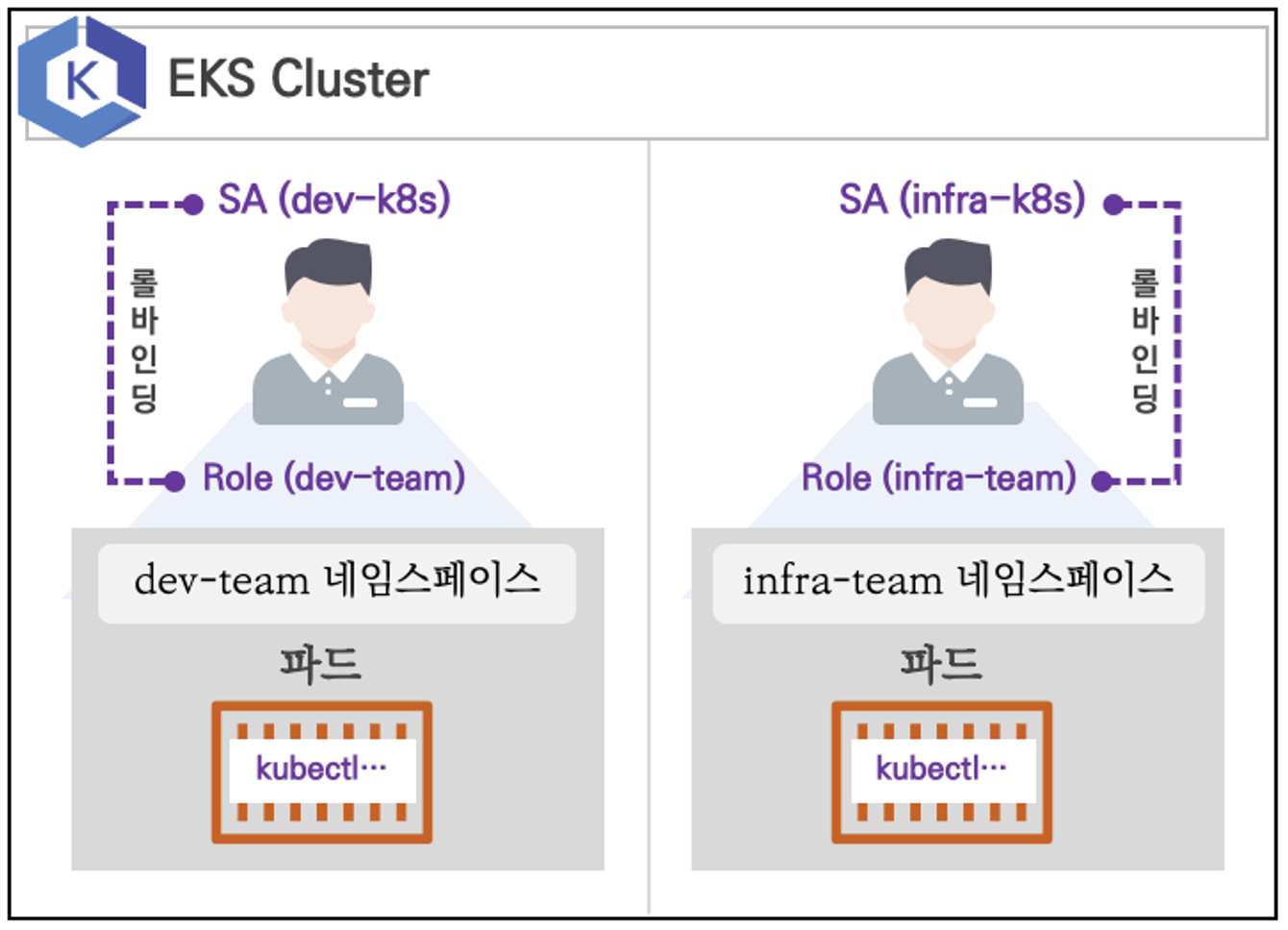

- 쿠버네티스에 사용자를 위한 서비스 어카운트(Service Account, SA)를 생성 : dev-k8s, infra-k8s

- 사용자는 각기 다른 권한(Role, 인가)을 가짐 : dev-k8s(dev-team 네임스페이스 내 모든 동작) , infra-k8s(dev-team 네임스페이스 내 모든 동작)

- 각각 별도의 kubectl 파드를 생성하고, 해당 파드에 SA 를 지정하여 권한에 대한 테스트를 진행

네임스페이스와 서비스 어카운트 생성 후 확인

- 파드 기동 시 서비스 어카운트 한 개가 할당되며, 서비스 어카운트 기반 인증/인가를 함, 미지정 시 기본 서비스 어카운트가 할당

- 서비스 어카운트에 자동 생성된 시크릿에 저장된 토큰으로 쿠버네티스 API에 대한 인증 정보로 사용 할 수 있다 ← 1.23 이전 버전의 경우에만 해당

# Create the namespaces (NS)

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl create namespace dev-team

namespace/dev-team created

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl create ns infra-team

namespace/infra-team created

# Verify the namespaces

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl get ns

NAME STATUS AGE

default Active 40m

dev-team Active 21s

infra-team Active 17s

kube-node-lease Active 40m

kube-public Active 40m

kube-system Active 40m

monitoring Active 18m

# Create a service account in each namespace: serviceaccounts is abbreviated as sa

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl create sa dev-k8s -n dev-team

serviceaccount/dev-k8s created

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl create sa infra-k8s -n infra-team

serviceaccount/infra-k8s created

# Check the service account details

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl get sa -n dev-team

NAME SECRETS AGE

default 0 79s

dev-k8s 0 24s

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl get sa dev-k8s -n dev-team -o yaml | yh

apiVersion: v1

kind: ServiceAccount

metadata:

creationTimestamp: "2024-04-12T12:35:57Z"

name: dev-k8s

namespace: dev-team

resourceVersion: "11311"

uid: 223a186a-05f2-43e8-aa9b-3f5ae38b7208

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]#

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl get sa -n infra-team

NAME SECRETS AGE

default 0 77s

infra-k8s 0 23s

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl get sa infra-k8s -n infra-team -o yaml | yh

apiVersion: v1

kind: ServiceAccount

metadata:

creationTimestamp: "2024-04-12T12:36:00Z"

name: infra-k8s

namespace: infra-team

resourceVersion: "11322"

uid: 791eb437-4f51-4d32-9d92-4cdb4362f9c3

Create pods with the service accounts assigned, then test permissions

# Create a kubectl pod in each namespace - container image

cat <<EOF | kubectl create -f -
apiVersion: v1
kind: Pod
metadata:
  name: dev-kubectl
  namespace: dev-team
spec:
  serviceAccountName: dev-k8s
  containers:
  - name: kubectl-pod
    image: bitnami/kubectl:1.28.5
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
EOF

------------------------------------

cat <<EOF | kubectl create -f -
apiVersion: v1
kind: Pod
metadata:
  name: infra-kubectl
  namespace: infra-team
spec:
  serviceAccountName: infra-k8s
  containers:
  - name: kubectl-pod
    image: bitnami/kubectl:1.28.5
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
EOF

# Verify

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

dev-team dev-kubectl 1/1 Running 0 37s

infra-team infra-kubectl 1/1 Running 0 29s

# Check the service account (token) files mounted into the pod by default

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl exec -it dev-kubectl -n dev-team -- ls /run/secrets/kubernetes.io/serviceaccount

ca.crt namespace token

# View the details

kubectl exec -it dev-kubectl -n dev-team -- cat /run/secrets/kubernetes.io/serviceaccount/token

kubectl exec -it dev-kubectl -n dev-team -- cat /run/secrets/kubernetes.io/serviceaccount/namespace

kubectl exec -it dev-kubectl -n dev-team -- cat /run/secrets/kubernetes.io/serviceaccount/ca.crt
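The mounted token is a JWT, so its claims (service account name, namespace, expiry) can be read without any extra tooling. The helper below is a minimal sketch, assuming GNU coreutils `base64`; the function name `jwt_payload` is made up for illustration.

```shell
# Print the decoded payload (claims) of a JWT passed as $1.
# Assumes GNU coreutils base64; jwt_payload is a hypothetical helper name.
jwt_payload() {
  local payload
  # A JWT is header.payload.signature; take the second dot-separated field.
  payload=$(printf '%s' "$1" | cut -d. -f2)
  # base64url -> base64: map the URL-safe characters back.
  payload=$(printf '%s' "$payload" | tr '_-' '/+')
  # Restore the '=' padding that JWTs strip.
  while [ $(( ${#payload} % 4 )) -ne 0 ]; do payload="${payload}="; done
  printf '%s' "$payload" | base64 -d
}

# Example (requires the dev-kubectl pod; no -t flag, to avoid TTY line endings):
#   jwt_payload "$(kubectl exec dev-kubectl -n dev-team -- cat /run/secrets/kubernetes.io/serviceaccount/token)"
```

The signature is not verified here; the function only makes the claims readable.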

# Run kubectl inside each pod: define shortcut commands (aliases)

alias k1='kubectl exec -it dev-kubectl -n dev-team -- kubectl'

alias k2='kubectl exec -it infra-kubectl -n infra-team -- kubectl'

# Permission test - listing is denied

## Equivalent to running: kubectl exec -it dev-kubectl -n dev-team -- kubectl get pods

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# k1 get pods

Error from server (Forbidden): pods is forbidden: User "system:serviceaccount:dev-team:dev-k8s" cannot list resource "pods" in API group "" in the namespace "dev-team"

command terminated with exit code 1

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# k1 run nginx --image nginx:1.20-alpine

Error from server (Forbidden): pods is forbidden: User "system:serviceaccount:dev-team:dev-k8s" cannot create resource "pods" in API group "" in the namespace "dev-team"

command terminated with exit code 1

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# k1 get pods -n kube-system

Error from server (Forbidden): pods is forbidden: User "system:serviceaccount:dev-team:dev-k8s" cannot list resource "pods" in API group "" in the namespace "kube-system"

command terminated with exit code 1

# (Optional) Use kubectl auth can-i to check whether the user running kubectl has a given permission

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# k1 auth can-i get pods

no
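The same check can be repeated for several verbs at once from the bastion by impersonating the service account. This is a sketch, assuming the caller is allowed to impersonate (for example, a cluster admin); `can_i_matrix` is a hypothetical helper name.

```shell
# Print whether a ServiceAccount may perform each verb on a resource.
# Usage: can_i_matrix <namespace> <serviceaccount> <resource>
# can_i_matrix is a hypothetical helper; it needs impersonation rights.
can_i_matrix() {
  local ns=$1 sa=$2 resource=$3 verb answer
  for verb in get list create delete; do
    # kubectl exits non-zero when the answer is "no", so ignore the status.
    answer=$(kubectl auth can-i "$verb" "$resource" -n "$ns" \
      --as "system:serviceaccount:${ns}:${sa}" 2>/dev/null) || true
    printf '%-7s %s: %s\n' "$verb" "$resource" "${answer:-unknown}"
  done
}

# Example: can_i_matrix dev-team dev-k8s pods
```

Running it before and after the RoleBinding is created shows the answers change from no to yes.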

Create a Role in each namespace, then bind it to the service account

- Role: grants the operations listed under verbs on the resources selected by apiGroups and resources

- Available verbs: * (all), create, delete, get, list, patch (partial update), update, watch (watch for changes)

- Create the Roles

# Create a Role granting all permissions within each namespace

cat <<EOF | kubectl create -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: role-dev-team
  namespace: dev-team
rules:
- apiGroups: ["*"]
  resources: ["*"]
  verbs: ["*"]
EOF

-------------------------

cat <<EOF | kubectl create -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: role-infra-team
  namespace: infra-team
rules:
- apiGroups: ["*"]
  resources: ["*"]
  verbs: ["*"]
EOF
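The two Roles above allow every verb on every resource in their namespace. For comparison, a narrower Role could list only the verbs that are needed. The sketch below prints a read-only Role for pods; the role name `role-readonly-pods` and the helper name are hypothetical and not part of the lab.

```shell
# Print a read-only Role manifest for the namespace given as $1.
# The role name and this helper are hypothetical examples.
render_readonly_role() {
  cat <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: role-readonly-pods
  namespace: $1
rules:
- apiGroups: [""]
  resources: ["pods", "pods/log"]
  verbs: ["get", "list", "watch"]
EOF
}

# Example (requires kubectl):
#   render_readonly_role dev-team | kubectl apply -f -
```

With such a Role bound instead, `k1 get pods` would succeed while `k1 run nginx ...` would still be forbidden.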

# Verify the Roles

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl get roles -n dev-team

NAME CREATED AT

role-dev-team 2024-04-12T12:45:58Z

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl get roles -n infra-team

NAME CREATED AT

role-infra-team 2024-04-12T12:46:05Z

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl describe roles role-dev-team -n dev-team

Name: role-dev-team

Labels: <none>

Annotations: <none>

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

--------- ----------------- -------------- -----

*.* [] [] [*]

- Create the RoleBindings

cat <<EOF | kubectl create -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: roleB-dev-team
  namespace: dev-team
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: role-dev-team
subjects:
- kind: ServiceAccount
  name: dev-k8s
  namespace: dev-team
EOF

----------------------

cat <<EOF | kubectl create -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: roleB-infra-team
  namespace: infra-team
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: role-infra-team
subjects:
- kind: ServiceAccount
  name: infra-k8s
  namespace: infra-team
EOF

# Verify the RoleBindings

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl get rolebindings -n dev-team

NAME ROLE AGE

roleB-dev-team Role/role-dev-team 20s

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl get rolebindings -n infra-team

NAME ROLE AGE

roleB-infra-team Role/role-infra-team 16s

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl describe rolebindings roleB-dev-team -n dev-team

Name: roleB-dev-team

Labels: <none>

Annotations: <none>

Role:

Kind: Role

Name: role-dev-team

Subjects:

Kind Name Namespace

---- ---- ---------

ServiceAccount dev-k8s dev-team

Test permissions again from the pods created with the service accounts

# Run kubectl inside each pod: define shortcut commands (aliases)

alias k1='kubectl exec -it dev-kubectl -n dev-team -- kubectl'

alias k2='kubectl exec -it infra-kubectl -n infra-team -- kubectl'

# Permission test

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# k1 get pods

NAME READY STATUS RESTARTS AGE

dev-kubectl 1/1 Running 0 11m

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# k1 run nginx --image nginx:1.20-alpine

pod/nginx created

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# k1 get pods

NAME READY STATUS RESTARTS AGE

dev-kubectl 1/1 Running 0 11m

nginx 1/1 Running 0 16s

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# k1 delete pods nginx

pod "nginx" deleted

# Examples of requests that are still denied

## A different namespace

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# k1 get pods -n kube-system

Error from server (Forbidden): pods is forbidden: User "system:serviceaccount:dev-team:dev-k8s" cannot list resource "pods" in API group "" in the namespace "kube-system"

command terminated with exit code 1

## Cluster-scoped resources require a ClusterRole

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# k1 get nodes

Error from server (Forbidden): nodes is forbidden: User "system:serviceaccount:dev-team:dev-k8s" cannot list resource "nodes" in API group "" at the cluster scope

command terminated with exit code 1

# (Optional) Use kubectl auth can-i to check whether the user running kubectl has a given permission

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# k1 auth can-i get pods

yes

- Delete the resources:

kubectl delete ns dev-team infra-team

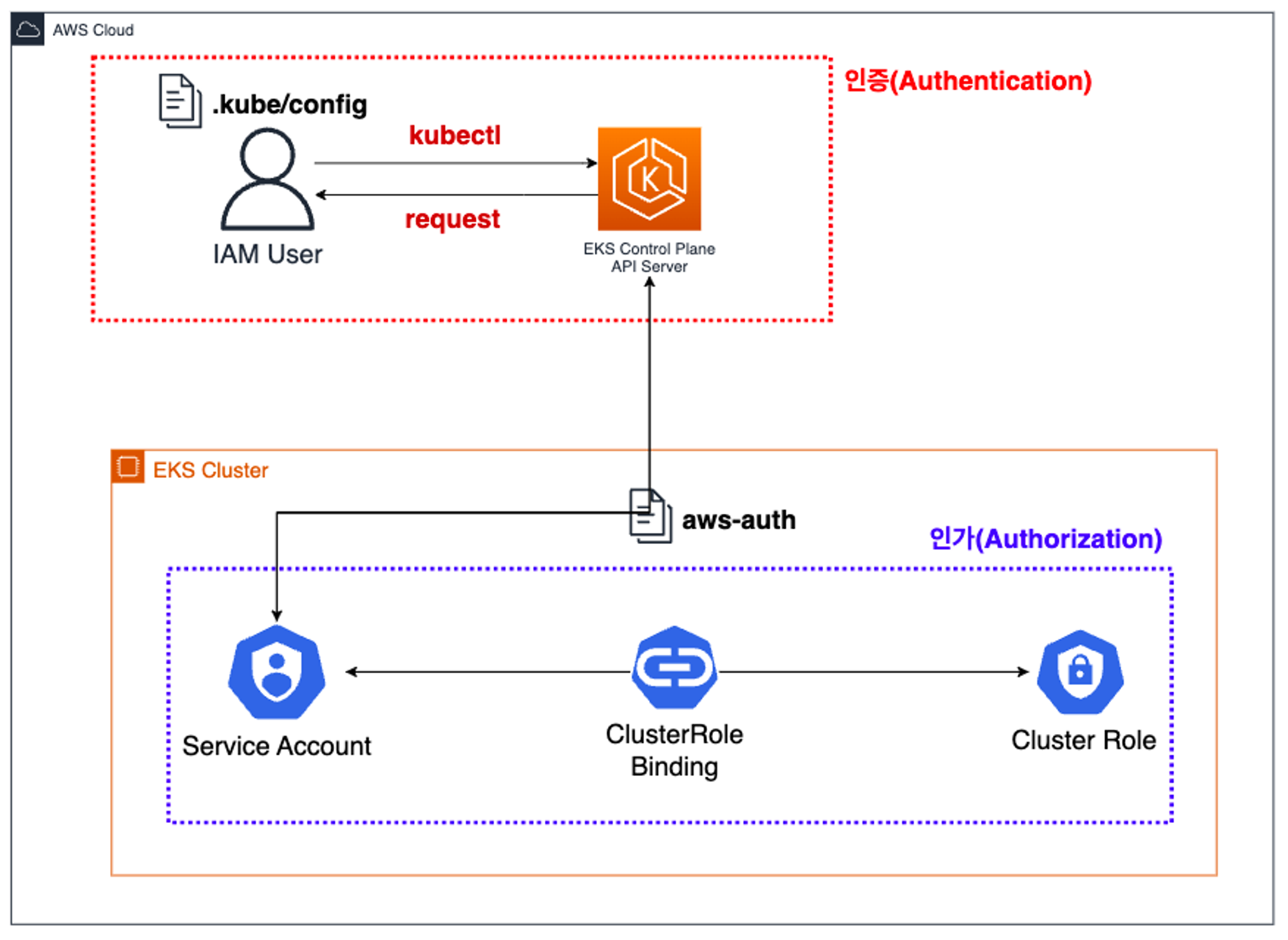

2. EKS의 인증&인가

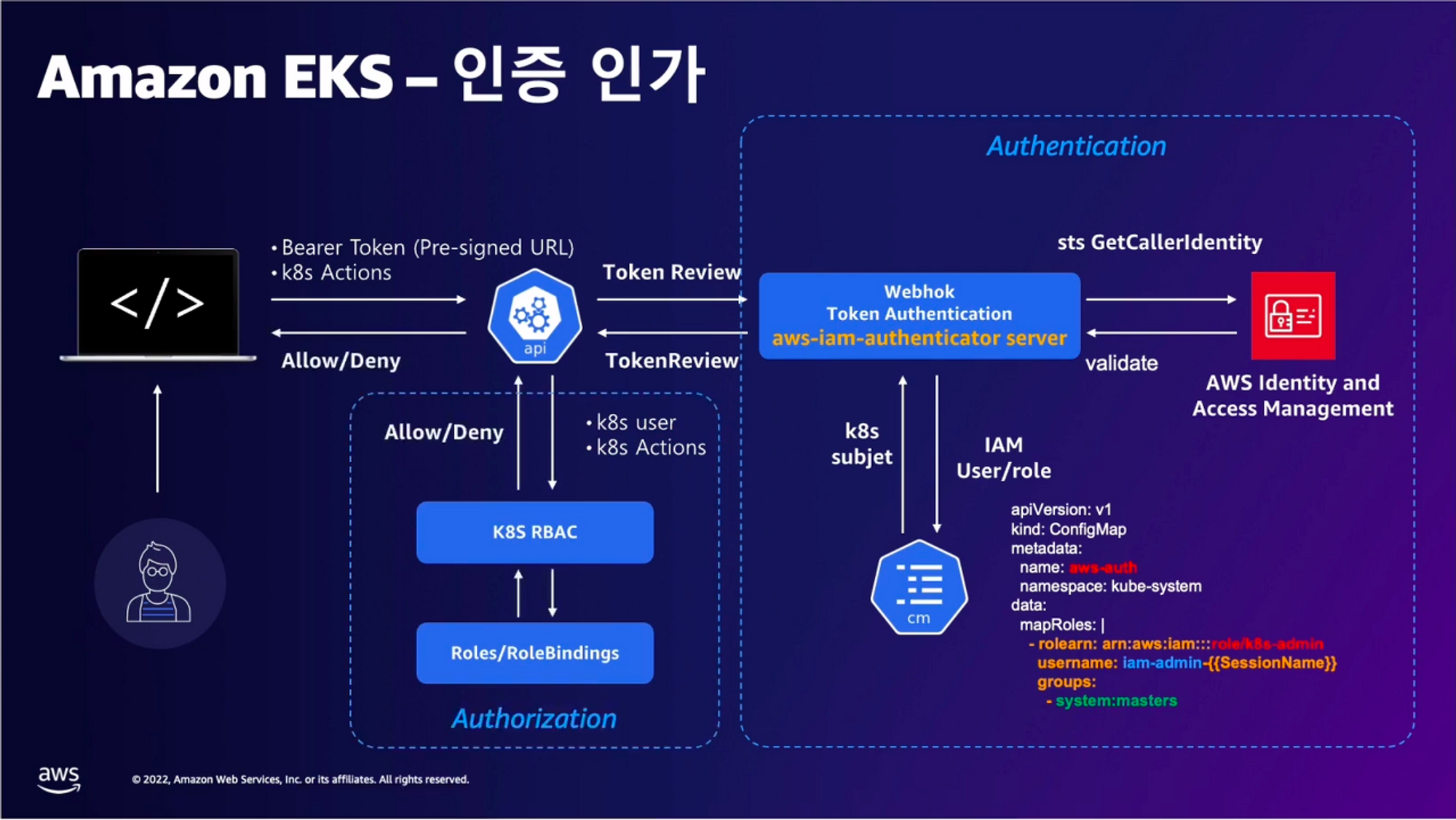

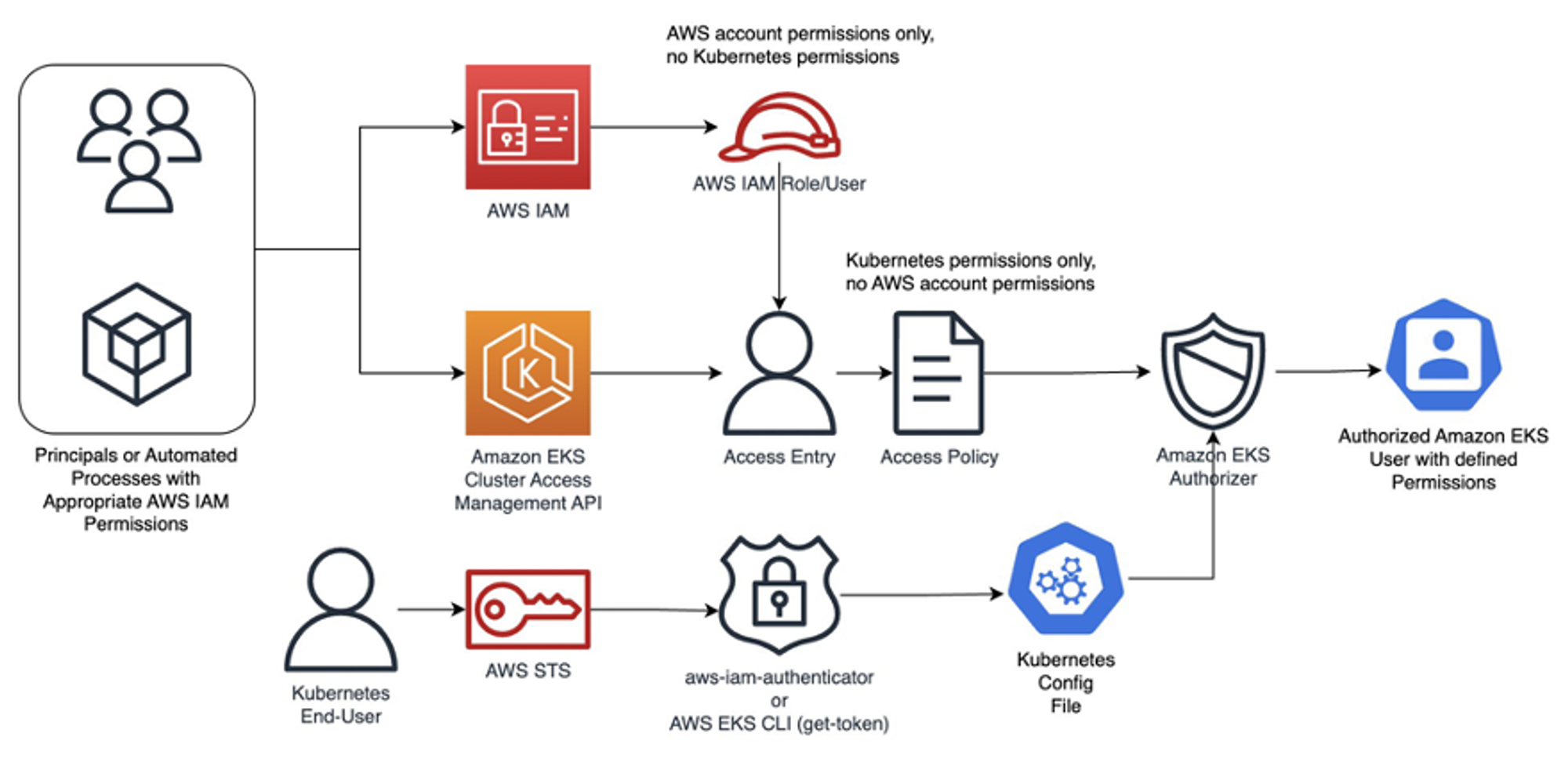

동작 : 사용자/애플리케이션 → k8s 사용 시 ⇒ 인증은 AWS IAM, 인가는 K8S RBAC 기억!!

- 인가는 RBAC 으로 이루어지는데, 이것을 보거나 분석하기 위해선 유용한 플러그인들이 있습니다.

- RBAC 관련 k8s Krew 플러그인

# Install

kubectl krew install access-matrix rbac-tool rbac-view rolesum whoami

# k8s 인증된 주체 확인

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl whoami

arn:aws:iam::236747833953:user/leeeuijoo

# Show an RBAC access matrix for server resources

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl access-matrix --namespace default

NAME LIST CREATE UPDATE DELETE

alertmanagerconfigs.monitoring.coreos.com ✔ ✔ ✔ ✔

alertmanagers.monitoring.coreos.com ✔ ✔ ✔ ✔

bindings ✔

configmaps ✔ ✔ ✔ ✔

controllerrevisions.apps ✔ ✔ ✔ ✔

cronjobs.batch ✔ ✔ ✔ ✔

csistoragecapacities.storage.k8s.io ✔ ✔ ✔ ✔

daemonsets.apps ✔ ✔ ✔ ✔

deployments.apps ✔ ✔ ✔ ✔

endpoints ✔ ✔ ✔ ✔

endpointslices.discovery.k8s.io ✔ ✔ ✔ ✔

events ✔ ✔ ✔ ✔

events.events.k8s.io ✔ ✔ ✔ ✔

horizontalpodautoscalers.autoscaling ✔ ✔ ✔ ✔

ingresses.networking.k8s.io ✔ ✔ ✔ ✔

jobs.batch ✔ ✔ ✔ ✔

leases.coordination.k8s.io ✔ ✔ ✔ ✔

limitranges ✔ ✔ ✔ ✔

localsubjectaccessreviews.authorization.k8s.io ✔

networkpolicies.networking.k8s.io ✔ ✔ ✔ ✔

persistentvolumeclaims ✔ ✔ ✔ ✔

poddisruptionbudgets.policy ✔ ✔ ✔ ✔

podmonitors.monitoring.coreos.com ✔ ✔ ✔ ✔

pods ✔ ✔ ✔ ✔

pods.metrics.k8s.io ✔

podtemplates ✔ ✔ ✔ ✔

policyendpoints.networking.k8s.aws ✔ ✔ ✔ ✔

probes.monitoring.coreos.com ✔ ✔ ✔ ✔

prometheusagents.monitoring.coreos.com ✔ ✔ ✔ ✔

prometheuses.monitoring.coreos.com ✔ ✔ ✔ ✔

prometheusrules.monitoring.coreos.com ✔ ✔ ✔ ✔

replicasets.apps ✔ ✔ ✔ ✔

replicationcontrollers ✔ ✔ ✔ ✔

resourcequotas ✔ ✔ ✔ ✔

rolebindings.rbac.authorization.k8s.io ✔ ✔ ✔ ✔

roles.rbac.authorization.k8s.io ✔ ✔ ✔ ✔

scrapeconfigs.monitoring.coreos.com ✔ ✔ ✔ ✔

secrets ✔ ✔ ✔ ✔

securitygrouppolicies.vpcresources.k8s.aws ✔ ✔ ✔ ✔

serviceaccounts ✔ ✔ ✔ ✔

servicemonitors.monitoring.coreos.com ✔ ✔ ✔ ✔

services ✔ ✔ ✔ ✔

statefulsets.apps ✔ ✔ ✔ ✔

targetgroupbindings.elbv2.k8s.aws ✔ ✔ ✔ ✔

thanosrulers.monitoring.coreos.com ✔ ✔ ✔ ✔

# RBAC Lookup by subject (user/group/serviceaccount) name

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl rbac-tool lookup

SUBJECT | SUBJECT TYPE | SCOPE | NAMESPACE | ROLE | BINDING

+------------------------------------------+----------------+-------------+-------------+------------------------------------------------------+--------------------------------------------------------------+

attachdetach-controller | ServiceAccount | ClusterRole | | system:controller:attachdetach-controller | system:controller:attachdetach-controller

aws-load-balancer-controller | ServiceAccount | ClusterRole | | aws-load-balancer-controller-role | aws-load-balancer-controller-rolebinding

aws-load-balancer-controller | ServiceAccount | Role | kube-system | aws-load-balancer-controller-leader-election-role | aws-load-balancer-controller-leader-election-rolebinding

aws-node | ServiceAccount | ClusterRole | | aws-node | aws-node

bootstrap-signer | ServiceAccount | Role | kube-public | system:controller:bootstrap-signer | system:controller:bootstrap-signer

bootstrap-signer | ServiceAccount | Role | kube-system | system:controller:bootstrap-signer | system:controller:bootstrap-signer

certificate-controller | ServiceAccount | ClusterRole | | system:controller:certificate-controller | system:controller:certificate-controller

cloud-provider | ServiceAccount | Role | kube-system | system:controller:cloud-provider | system:controller:cloud-provider

...

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl rbac-tool lookup system:masters

SUBJECT | SUBJECT TYPE | SCOPE | NAMESPACE | ROLE | BINDING

+----------------+--------------+-------------+-----------+---------------+---------------+

system:masters | Group | ClusterRole | | cluster-admin | cluster-admin

# RBAC List Policy Rules For subject (user/group/serviceaccount) name

$ kubectl rbac-tool policy-rules

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl rbac-tool policy-rules -e '^system:authenticated'

TYPE | SUBJECT | VERBS | NAMESPACE | API GROUP | KIND | NAMES | NONRESOURCEURI | ORIGINATED FROM

+-------+----------------------+--------+-----------+-----------------------+--------------------------+-------+------------------------------------------------------------------------------------------+-----------------------------------------+

Group | system:authenticated | create | * | authentication.k8s.io | selfsubjectreviews | | | ClusterRoles>>system:basic-user

Group | system:authenticated | create | * | authorization.k8s.io | selfsubjectaccessreviews | | | ClusterRoles>>system:basic-user

Group | system:authenticated | create | * | authorization.k8s.io | selfsubjectrulesreviews | | | ClusterRoles>>system:basic-user

Group | system:authenticated | get | * | | | | /healthz,/livez,/readyz,/version,/version/ | ClusterRoles>>system:public-info-viewer

Group | system:authenticated | get | * | | | | /api,/api/*,/apis,/apis/*,/healthz,/livez,/openapi,/openapi/*,/readyz,/version,/version/ | ClusterRoles>>system:discovery

# Generate ClusterRole with all available permissions from the target cluster

kubectl rbac-tool show

# Shows the subject for the current context with which one authenticates with the cluster

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl rbac-tool whoami

{Username: "arn:aws:iam::236747833953:user/leeeuijoo",

UID: "aws-iam-authenticator:236747833953:AIDATOH2FIJQS6NUPMSPJ",

Groups: ["system:authenticated"],

Extra: {accessKeyId: ["AKIATOH2FIJQYBEPSIM7"],

arn: ["arn:aws:iam::236747833953:user/leeeuijoo"],

canonicalArn: ["arn:aws:iam::236747833953:user/leeeuijoo"],

principalId: ["AIDATOH2FIJQS6NUPMSPJ"],

sessionName: [""]}}

# Summarize RBAC roles for subjects : ServiceAccount(default), User, Group

$ kubectl rolesum -h

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl rolesum aws-node -n kube-system

ServiceAccount: kube-system/aws-node

Secrets:

Policies:

• [CRB] */aws-node ⟶ [CR] */aws-node

Resource Name Exclude Verbs G L W C U P D DC

cninodes.vpcresources.k8s.aws [*] [-] [-] ✔ ✔ ✔ ✖ ✖ ✔ ✖ ✖

eniconfigs.crd.k8s.amazonaws.com [*] [-] [-] ✔ ✔ ✔ ✖ ✖ ✖ ✖ ✖

events.[,events.k8s.io] [*] [-] [-] ✖ ✔ ✖ ✔ ✖ ✔ ✖ ✖

namespaces [*] [-] [-] ✔ ✔ ✔ ✖ ✖ ✖ ✖ ✖

nodes [*] [-] [-] ✔ ✔ ✔ ✖ ✖ ✖ ✖ ✖

pods [*] [-] [-] ✔ ✔ ✔ ✖ ✖ ✖ ✖ ✖

policyendpoints.networking.k8s.aws [*] [-] [-] ✔ ✔ ✔ ✖ ✖ ✖ ✖ ✖

policyendpoints.networking.k8s.aws/status [*] [-] [-] ✔ ✖ ✖ ✖ ✖ ✖ ✖ ✖

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl rolesum -k Group system:authenticated

Group: system:authenticated

Policies:

• [CRB] */system:basic-user ⟶ [CR] */system:basic-user

Resource Name Exclude Verbs G L W C U P D DC

selfsubjectaccessreviews.authorization.k8s.io [*] [-] [-] ✖ ✖ ✖ ✔ ✖ ✖ ✖ ✖

selfsubjectreviews.authentication.k8s.io [*] [-] [-] ✖ ✖ ✖ ✔ ✖ ✖ ✖ ✖

selfsubjectrulesreviews.authorization.k8s.io [*] [-] [-] ✖ ✖ ✖ ✔ ✖ ✖ ✖ ✖

• [CRB] */system:discovery ⟶ [CR] */system:discovery

• [CRB] */system:public-info-viewer ⟶ [CR] */system:public-info-viewer

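# In another terminal [A tool to visualize your RBAC permissions]

$ kubectl rbac-view

## Then open <public IP of the working PC>:8800 in a browser: after the first connection it takes a while to load the data (the page appears after about 2-3 minutes)

echo -e "RBAC View Web http://$(curl -s ipinfo.io/ip):8800"

- rbac-view: exposes the result as a web UI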

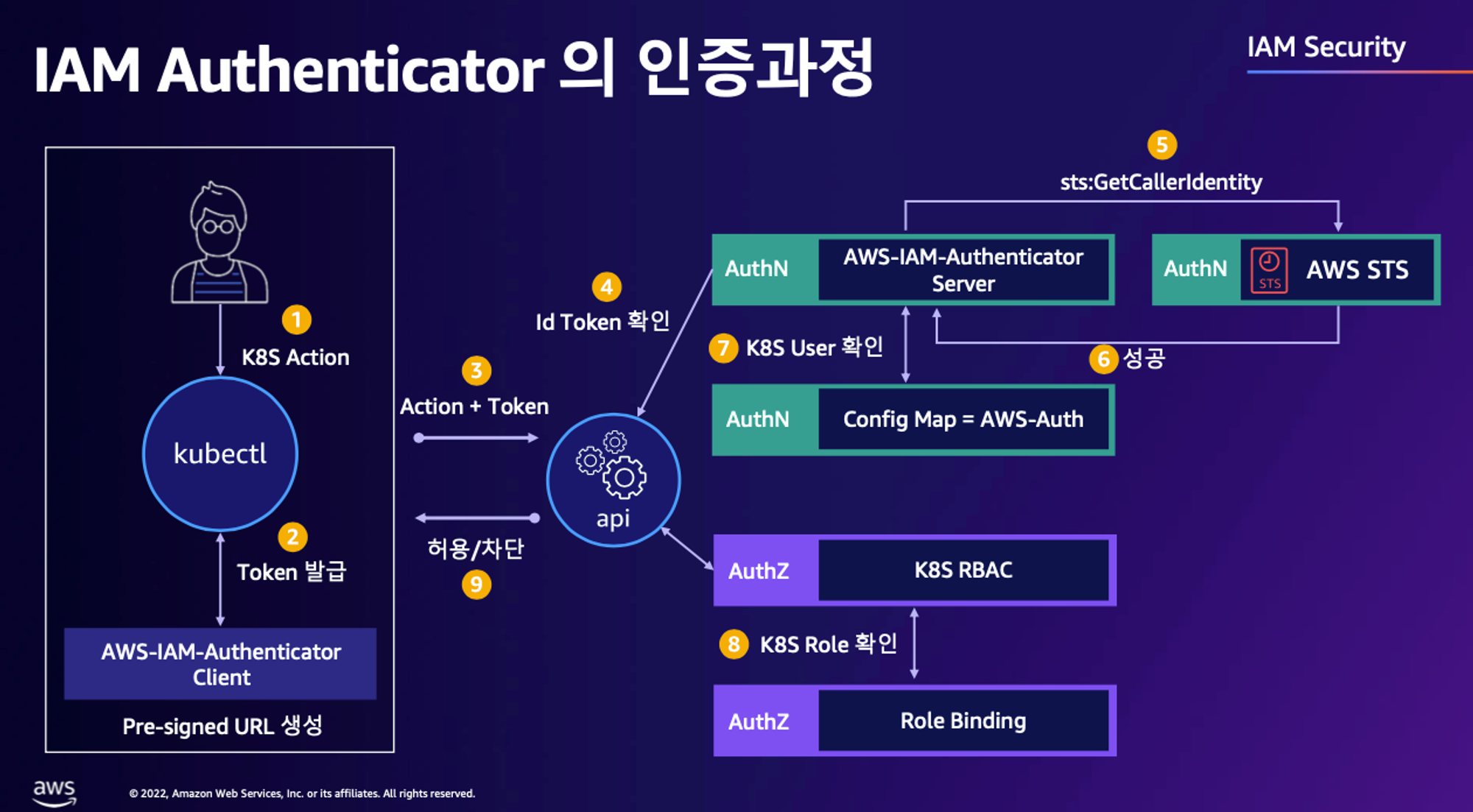

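A complete walkthrough of authentication / authorization

The key point: authentication is handled by AWS IAM, authorization by K8S RBAC!

- The user runs a kubectl command

- The Bearer token (pre-signed URL) is not checked by kube-api itself; it is handed to the Webhook Token Authentication plugin for a Token Review, and the plugin asks IAM to verify it by calling sts GetCallerIdentity

- Once IAM has validated the request, the Webhook Token Authentication plugin looks up the IAM user/role in the ConfigMap (for example, user or group information is returned)

- Authentication is complete

- RBAC checks whether the action is allowed

- The result of the action is returned to the user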

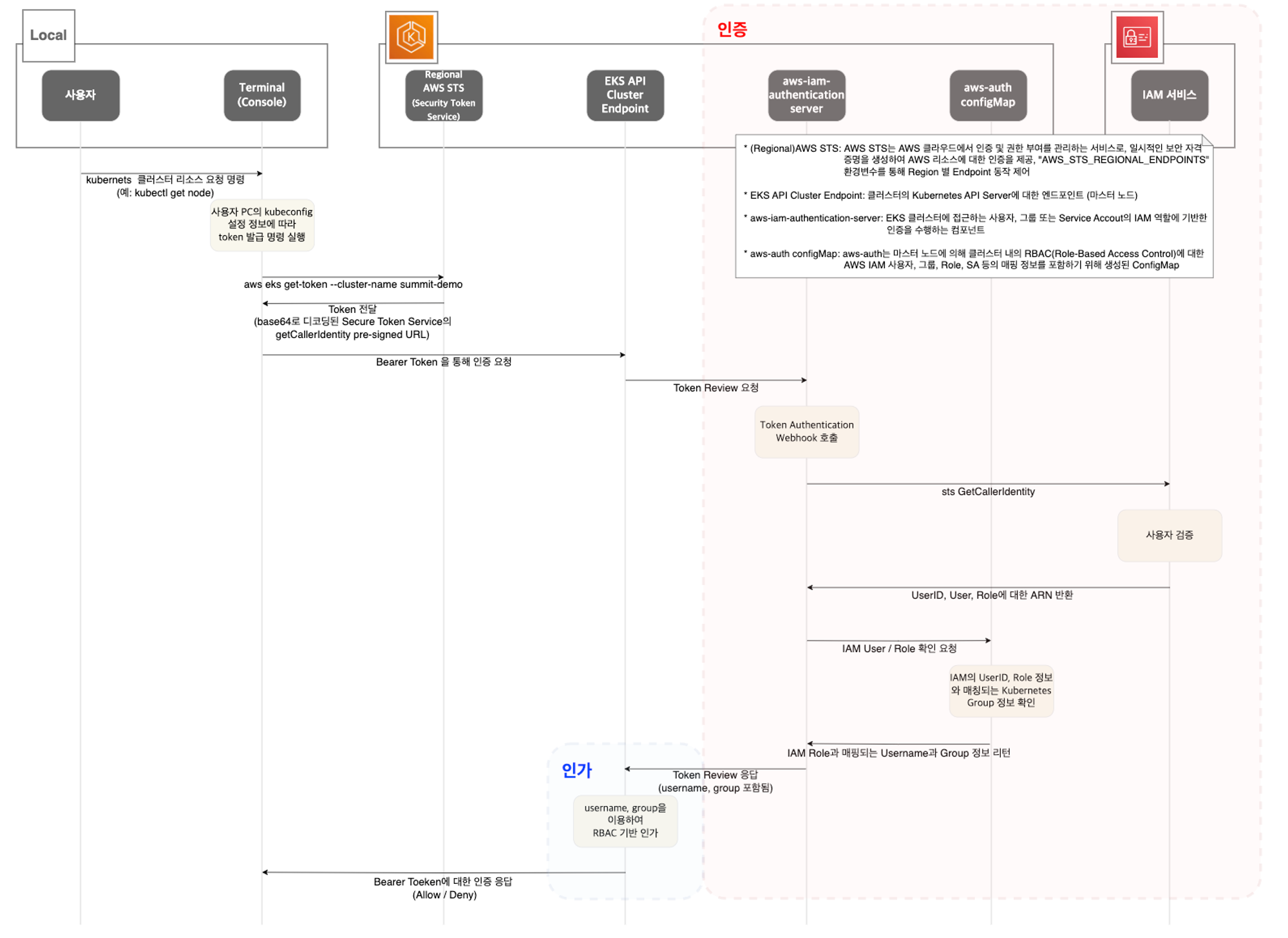

스터디 전 멤버분의 완벽 인증 & 인가 정리 Map

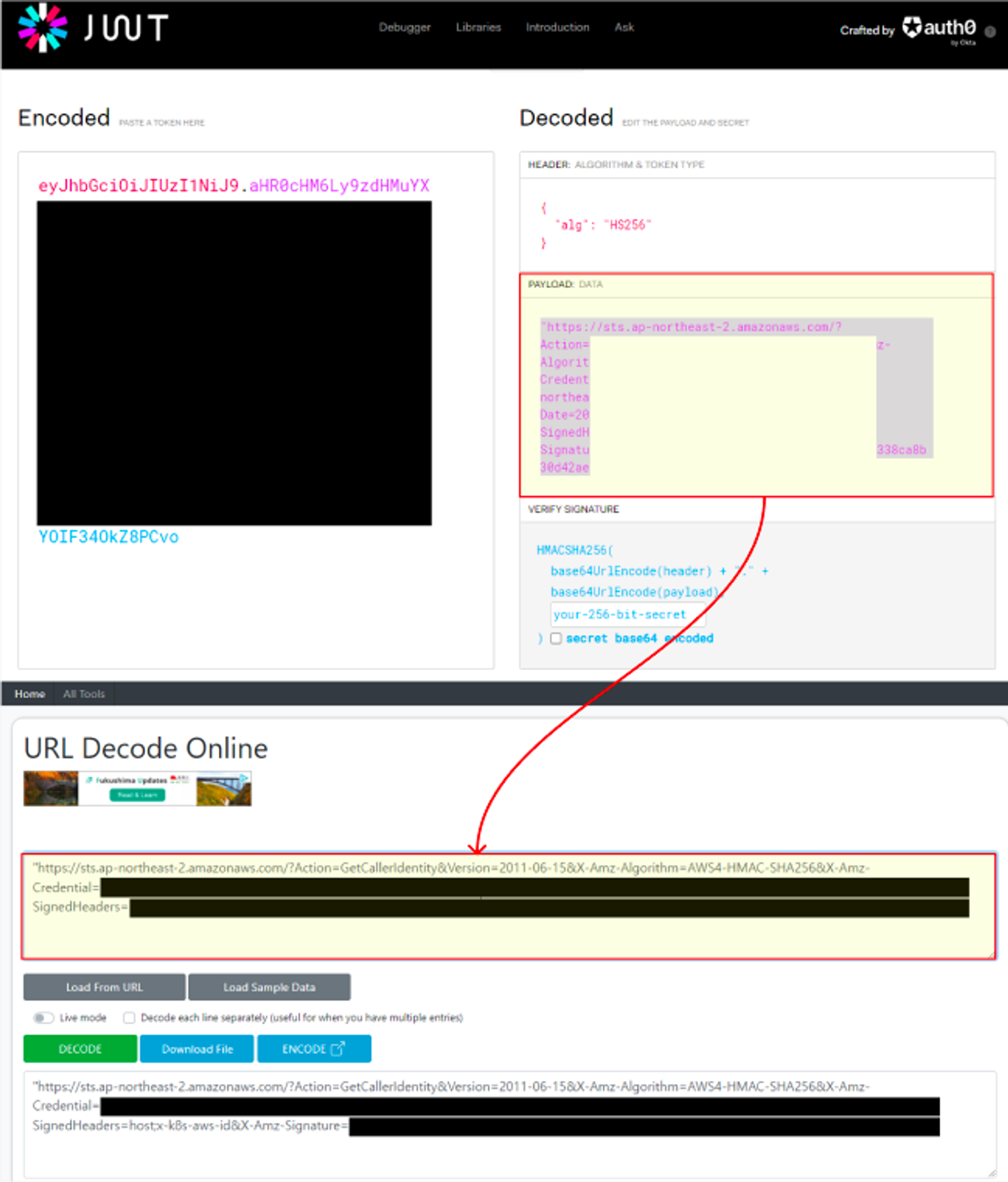

1. kubectl 명령 → aws eks get-token → EKS Service endpoint(STS)에 토큰 요청 ⇒ 응답값 디코드(Pre-Signed URL 이며 GetCallerIdentity..)

-

STS Security Token Service : AWS 리소스에 대한 액세스를 제어할 수 있는 임시 보안 자격 증명(STS)을 생성하여 신뢰받는 사용자에게 제공할 수 있음

-

AWS CLI 버전 1.16.156 이상에서는 별도 aws-iam-authenticator 설치 없이 aws eks get-token으로 사용 가능

# sts caller id의 ARN 확인

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws sts get-caller-identity --query Arn

"arn:aws:iam::236747833953:user/leeeuijoo"

# Check the kubeconfig

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# cat ~/.kube/config | yh

...

users:

- name: leeeuijoo@myeks2.ap-northeast-2.eksctl.io

user:

exec:

apiVersion: client.authentication.k8s.io/v1beta1

args:

- eks

- get-token

- --output

- json

- --cluster-name

- myeks2

- --region

- ap-northeast-2

command: aws

env:

- name: AWS_STS_REGIONAL_ENDPOINTS

value: regional

interactiveMode: IfAvailable

provideClusterInfo: false

# Request temporary security credentials (a token): a new token is issued once expirationTimestamp has passed

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws eks get-token --cluster-name $CLUSTER_NAME | jq -r '.status.token'

k8s-aws-v1.aHR0cHM6Ly9zdHMuYXAtbm9ydGhlYXN0LTIuYW1hem9uYXdzLmNvbS8_QWN0aW9uPUdldENhbGxlcklkZW50aXR5JlZlcnNpb249MjAxMS0wNi0xNSZYLUFtei1BbGdvcml0aG09QVdTNC1ITUFDLVNIQTI1NiZYLUFtei1DcmVkZW50aWFsPUFLSUFUT0gyRklKUVlCRVBTSU03JTJGMjAyNDA0MTIlMkZhcC1ub3J0aGVhc3QtMiUyRnN0cyUyRmF3czRfcmVxdWVzdCZYLUFtei1EYXRlPTIwMjQwNDEyVDEzMzQ1NFomWC1BbXotRXhwaXJlcz02MCZYLUFtei1TaWduZWRIZWFkZXJzPWhvc3QlM0J4LWs4cy1hd3MtaWQmWC1BbXotU2lnbmF0dXJlPTVlMzlmNGM4M2RkODgzMTYzZWIwYmZlN2MyZGNkZDUzZmE4NTE4ZGQxZjk5ZDI1ZjAwZGQ1M2I0YjI5MzhmNTQ

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws eks get-token --cluster-name $CLUSTER_NAME | jq -r '.status.token'

k8s-aws-v1.aHR0cHM6Ly9zdHMuYXAtbm9ydGhlYXN0LTIuYW1hem9uYXdzLmNvbS8_QWN0aW9uPUdldENhbGxlcklkZW50aXR5JlZlcnNpb249MjAxMS0wNi0xNSZYLUFtei1BbGdvcml0aG09QVdTNC1ITUFDLVNIQTI1NiZYLUFtei1DcmVkZW50aWFsPUFLSUFUT0gyRklKUVlCRVBTSU03JTJGMjAyNDA0MTIlMkZhcC1ub3J0aGVhc3QtMiUyRnN0cyUyRmF3czRfcmVxdWVzdCZYLUFtei1EYXRlPTIwMjQwNDEyVDEzMzQ1NlomWC1BbXotRXhwaXJlcz02MCZYLUFtei1TaWduZWRIZWFkZXJzPWhvc3QlM0J4LWs4cy1hd3MtaWQmWC1BbXotU2lnbmF0dXJlPWI4MjhiNzJjMWRmMDAzNzdmNzlkNjRjNzMxMDU0MTdkYmE0OWYzY2VhMmIzNGE3NmQ4YzYwZjdkNDczZDQzZTk

2. kubectl's client-go library sends the pre-signed URL as a Bearer token in its request to the EKS API cluster endpoint

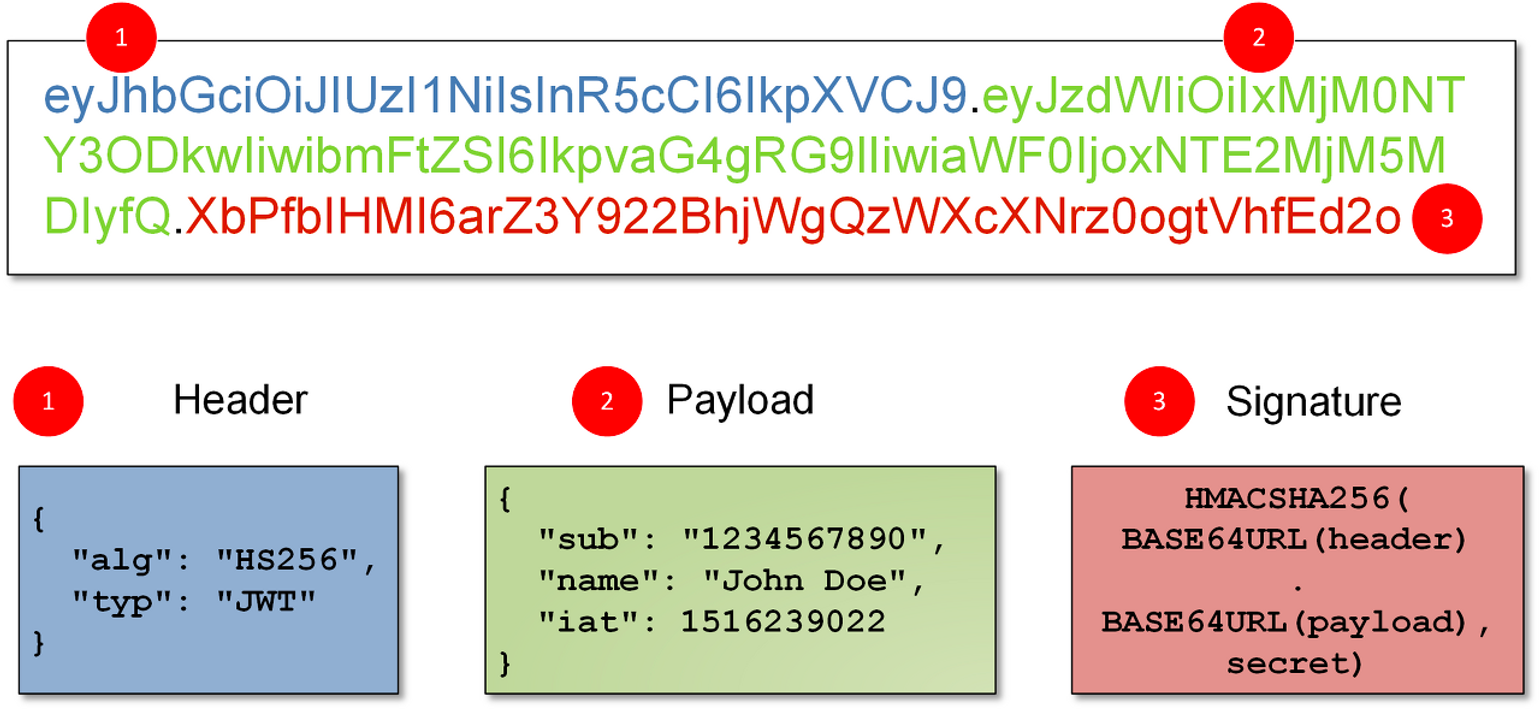

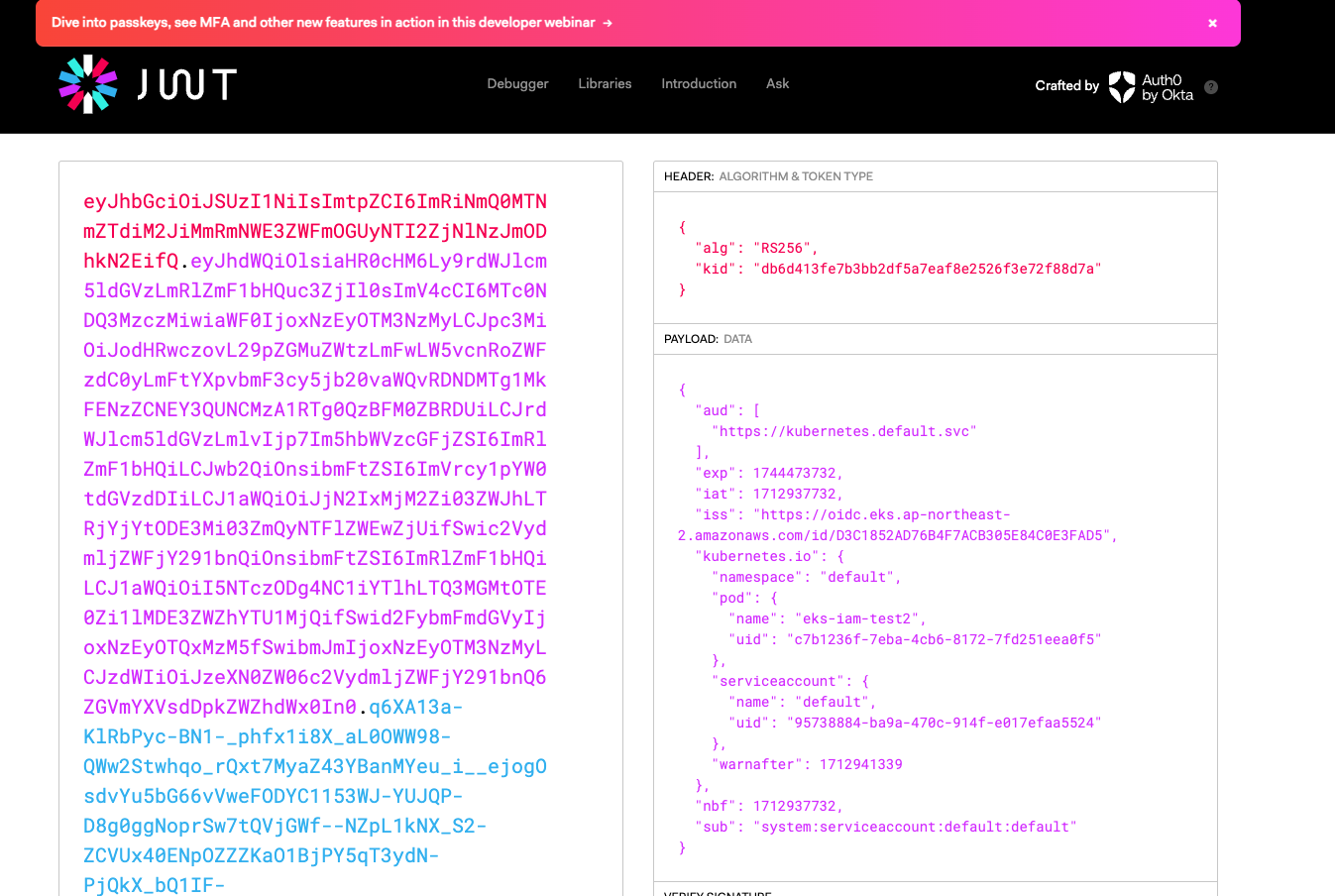

- Decoding the token in a JWT decoder shows the following

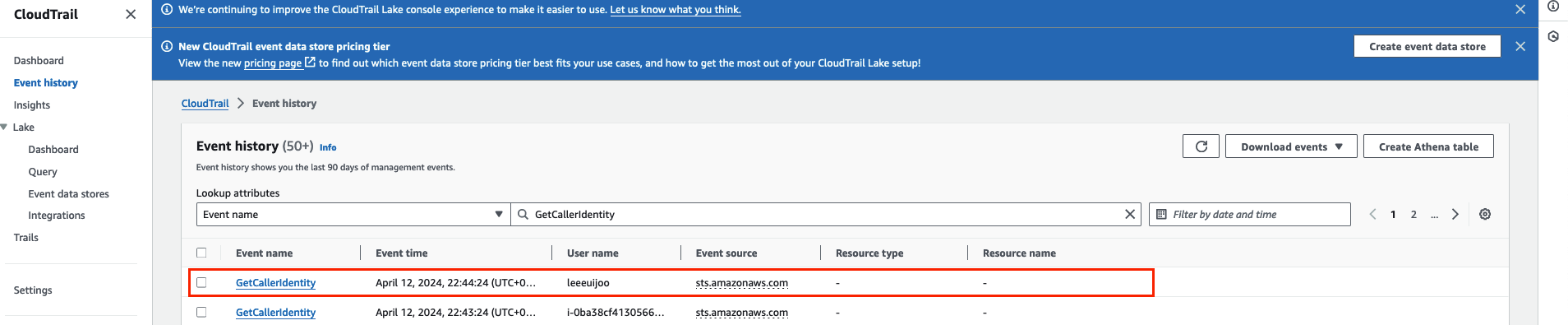

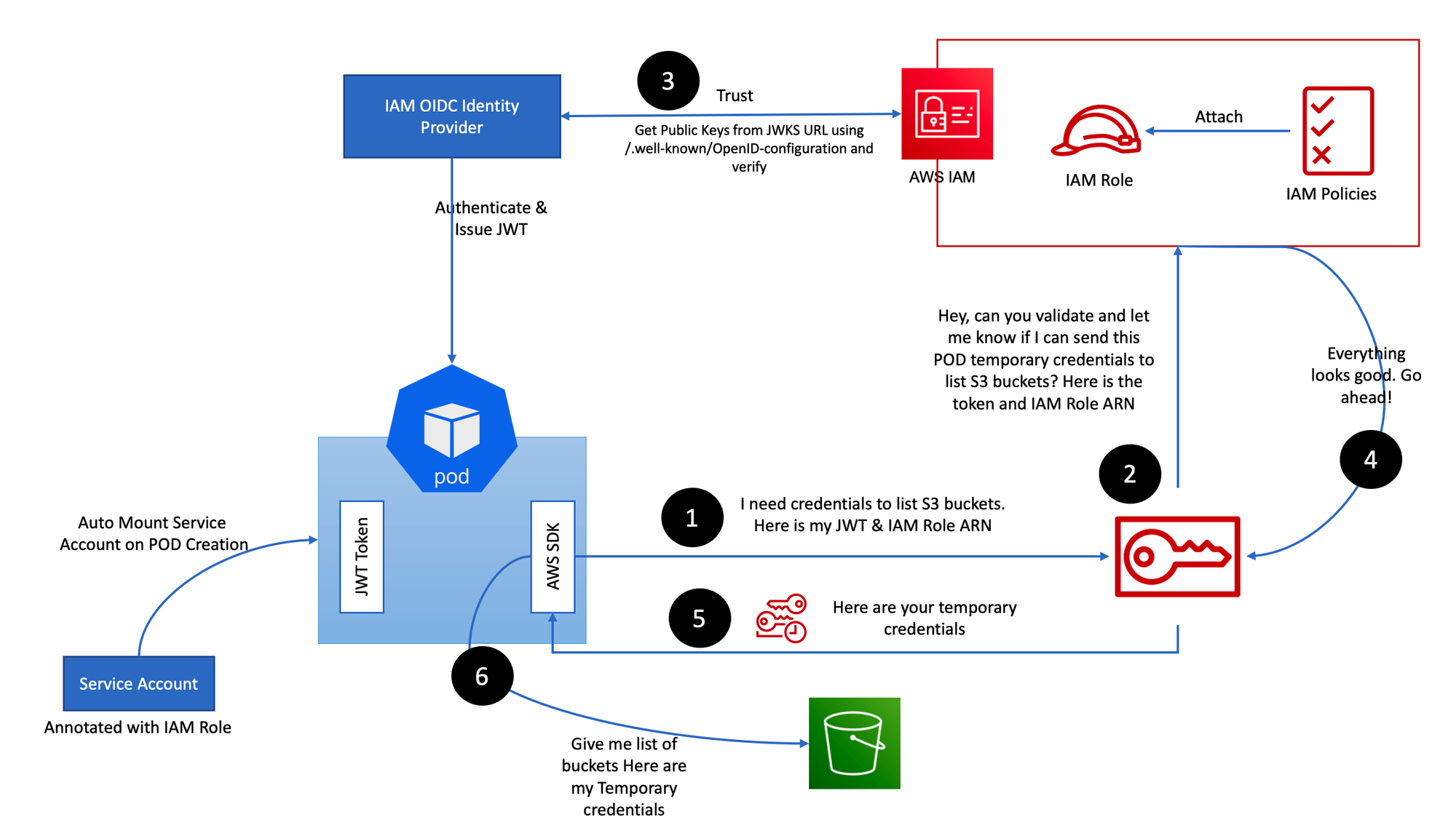
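The same decoding can be done in the shell: the part of the token after the `k8s-aws-v1.` prefix is the base64url-encoded pre-signed STS URL. The helpers below are a minimal sketch, assuming GNU coreutils `base64`; the function names are made up for illustration.

```shell
# Decode an EKS bearer token ("k8s-aws-v1." + base64url of the pre-signed URL).
# decode_eks_token and url_param are hypothetical helper names.
decode_eks_token() {
  local body
  body=${1#k8s-aws-v1.}                       # drop the fixed prefix
  body=$(printf '%s' "$body" | tr '_-' '/+')  # base64url -> base64
  # Restore the stripped '=' padding.
  while [ $(( ${#body} % 4 )) -ne 0 ]; do body="${body}="; done
  printf '%s' "$body" | base64 -d
}

# Print the value of one query parameter ($2) from a URL ($1).
url_param() {
  printf '%s\n' "${1#*\?}" | tr '&' '\n' | sed -n "s/^$2=//p"
}

# Example (requires the AWS CLI and jq):
#   url=$(decode_eks_token "$(aws eks get-token --cluster-name $CLUSTER_NAME | jq -r '.status.token')")
#   url_param "$url" Action
#   url_param "$url" X-Amz-Expires
```

Reading `Action` and `X-Amz-Expires` from the decoded URL shows which STS call is pre-signed and for how many seconds the signature is valid.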

3. The EKS API asks the webhook token authenticator for a Token Review ⇒ (STS GetCallerIdentity is called) once AWS IAM has authenticated the call, the ARN of the user/role is returned

- For reference, the webhook token authenticator is aws-iam-authenticator

# Check the tokenreviews API resource

## TokenReview performs this step

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl api-resources | grep authentication

selfsubjectreviews authentication.k8s.io/v1 false SelfSubjectReview

tokenreviews authentication.k8s.io/v1 false TokenReview

# List the fields for supported resources.

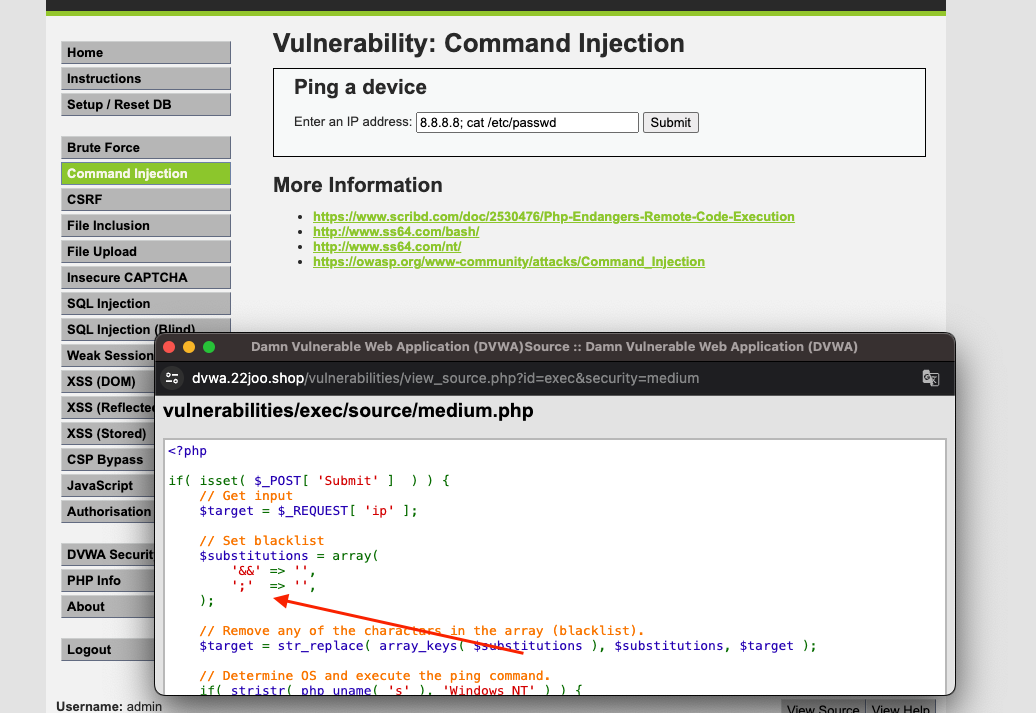

$ kubectl explain tokenreviews4. 이제 쿠버네티스 RBAC 인가를 처리

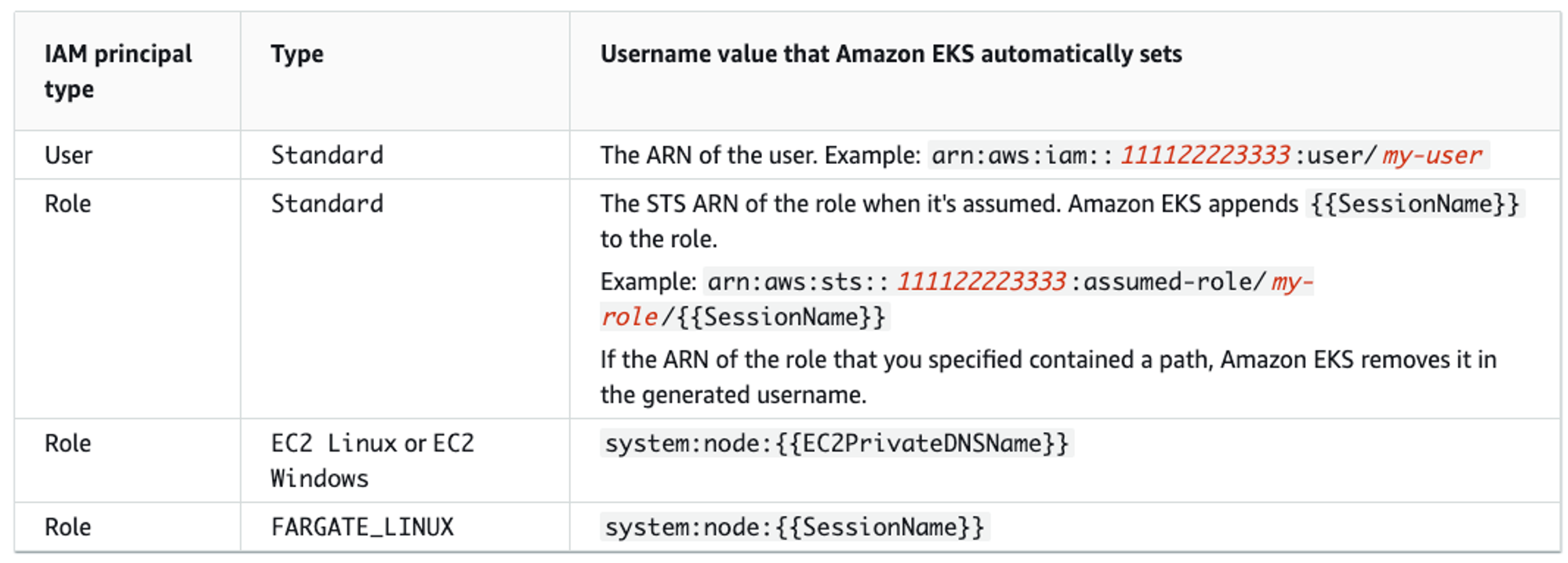

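- Once the IAM user/role has been verified, its mapping is looked up in the k8s aws-auth ConfigMap.

- Permissions are checked against the aws-auth ConfigMap entries (IAM user or role ARN, K8S object); if k8s authorization succeeds, the action is finally executed.

- For reference, the IAM principal that created the EKS cluster has system:masters group permissions under the username kubernetes-admin, regardless of aws-auth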
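To make the mapping concrete, the sketch below prints one mapUsers entry in the same layout as the aws-auth ConfigMap shown in this post. The helper name and the example ARN are hypothetical; in practice a tool such as `eksctl create iamidentitymapping` is typically used to edit the ConfigMap rather than writing entries by hand.

```shell
# Print one aws-auth mapUsers entry.
# Usage: render_map_user <IAM user ARN> <k8s username> <k8s group>
# render_map_user is a hypothetical helper for illustration only.
render_map_user() {
  cat <<EOF
- groups:
  - $3
  userarn: $1
  username: $2
EOF
}

# Example with a placeholder account ID:
#   render_map_user arn:aws:iam::111122223333:user/testuser testuser system:masters
```

The group named in the entry is what RBAC bindings (for example the cluster-admin ClusterRoleBinding for system:masters) are evaluated against.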

# Check the Webhook API resources

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl api-resources | grep Webhook

mutatingwebhookconfigurations admissionregistration.k8s.io/v1 false MutatingWebhookConfiguration

validatingwebhookconfigurations admissionregistration.k8s.io/v1 false ValidatingWebhookConfiguration

# Check the validatingwebhookconfigurations resources

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl get validatingwebhookconfigurations

NAME WEBHOOKS AGE

aws-load-balancer-webhook 3 87m

eks-aws-auth-configmap-validation-webhook 1 107m ## the relevant resource

kube-prometheus-stack-admission 1 85m

vpc-resource-validating-webhook 2 107m

# Check the aws-auth ConfigMap

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl get cm -n kube-system aws-auth -o yaml | kubectl neat | yh

apiVersion: v1

data:

mapRoles: |

- groups:

- system:bootstrappers

- system:nodes

rolearn: arn:aws:iam::236747833953:role/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G

username: system:node:{{EC2PrivateDNSName}}

kind: ConfigMap

metadata:

name: aws-auth

namespace: kube-system

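#--- <the part below is omitted from the output (presumably); the ARN is the IAM user that installed EKS. If this entry lived here and was deleted by mistake, could it be recovered? It used to be present but is no longer shown; previously the principal could be identified here.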

mapUsers: |

- groups:

- system:masters

userarn: arn:aws:iam::111122223333:user/admin

username: kubernetes-admin

# Information about the IAM user that installed EKS >> how did system:authenticated get added?

$ kubectl rbac-tool whoami

# Check the system:masters and system:authenticated groups

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl rbac-tool lookup system:masters

SUBJECT | SUBJECT TYPE | SCOPE | NAMESPACE | ROLE | BINDING

+----------------+--------------+-------------+-----------+---------------+---------------+

system:masters | Group | ClusterRole | | cluster-admin | cluster-admin

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl rbac-tool lookup system:authenticated

SUBJECT | SUBJECT TYPE | SCOPE | NAMESPACE | ROLE | BINDING

+----------------------+--------------+-------------+-----------+---------------------------+---------------------------+

system:authenticated | Group | ClusterRole | | system:basic-user | system:basic-user

system:authenticated | Group | ClusterRole | | system:discovery | system:discovery

system:authenticated | Group | ClusterRole | | system:public-info-viewer | system:public-info-viewer

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl rolesum -k Group system:masters

Group: system:masters

Policies:

• [CRB] */cluster-admin ⟶ [CR] */cluster-admin

Resource Name Exclude Verbs G L W C U P D DC

*.* [*] [-] [-] ✔ ✔ ✔ ✔ ✔ ✔ ✔ ✔

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl rolesum -k Group system:authenticated

Group: system:authenticated

Policies:

• [CRB] */system:basic-user ⟶ [CR] */system:basic-user

Resource Name Exclude Verbs G L W C U P D DC

selfsubjectaccessreviews.authorization.k8s.io [*] [-] [-] ✖ ✖ ✖ ✔ ✖ ✖ ✖ ✖

selfsubjectreviews.authentication.k8s.io [*] [-] [-] ✖ ✖ ✖ ✔ ✖ ✖ ✖ ✖

selfsubjectrulesreviews.authorization.k8s.io [*] [-] [-] ✖ ✖ ✖ ✔ ✖ ✖ ✖ ✖

• [CRB] */system:discovery ⟶ [CR] */system:discovery

• [CRB] */system:public-info-viewer ⟶ [CR] */system:public-info-viewer

# Check the ClusterRole available to the system:masters group: cluster-admin

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl describe clusterrolebindings.rbac.authorization.k8s.io cluster-admin

Name: cluster-admin

Labels: kubernetes.io/bootstrapping=rbac-defaults

Annotations: rbac.authorization.kubernetes.io/autoupdate: true

Role:

Kind: ClusterRole

Name: cluster-admin

Subjects:

Kind Name Namespace

---- ---- ---------

Group system:masters

# Check the PolicyRule of cluster-admin: every verb is allowed on every resource

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl describe clusterrole cluster-admin

Name: cluster-admin

Labels: kubernetes.io/bootstrapping=rbac-defaults

Annotations: rbac.authorization.kubernetes.io/autoupdate: true

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

--------- ----------------- -------------- -----

*.* [] [] [*]

[*] [] [*]

# Check the ClusterRoles available to the system:authenticated group

kubectl describe ClusterRole system:discovery

kubectl describe ClusterRole system:public-info-viewer

kubectl describe ClusterRole system:basic-user

kubectl describe ClusterRole eks:podsecuritypolicy:privileged

Let's set up a PC for a new DevOps hire so that they can use EKS.

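- The user created here will be deleted after the lab.

- Open another terminal and SSH into the Bastion-2 instance.

- For now, run the commands below on the working EC2 instance.

- [myeks-bastion] Create the testuser user

# Create the testuser IAM user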

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws iam create-user --user-name testuser

{

"User": {

"Path": "/",

"UserName": "testuser",

"UserId": "AIDATOH2FIJQZQUSI5J7W",

"Arn": "arn:aws:iam::236747833953:user/testuser",

"CreateDate": "2024-04-12T14:03:12+00:00"

}

}

# Grant the user programmatic access (create an access key)

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws iam create-access-key --user-name testuser

{

"AccessKey": {

"UserName": "testuser",

"AccessKeyId": "AKIATOH2FIJQSSIHIZGL",

"Status": "Active",

"SecretAccessKey": "mmPrhUBZ/Y7tNsO05m7EGhC4NHHJ16biRTKtCW1B",

"CreateDate": "2024-04-12T14:03:28+00:00"

}

}

# Attach a policy to testuser

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws iam attach-user-policy --policy-arn arn:aws:iam::aws:policy/AdministratorAccess --user-name testuser
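Since testuser is meant to be deleted after the lab, the cleanup could look roughly like the sketch below. The function name `cleanup_iam_user` is hypothetical and not part of the original lab. It assumes the user has only the one managed policy attached above; an IAM user can be deleted only after its access keys and attached policies are removed.

```shell
# Hypothetical helper (not from the original lab): remove an IAM user
# that was created only for this exercise. Call it after the lab is done.
cleanup_iam_user() {
  user="$1"
  # Assumption: the only attached managed policy is the one used above.
  policy_arn="${2:-arn:aws:iam::aws:policy/AdministratorAccess}"

  # 1) Detach the managed policy.
  aws iam detach-user-policy --user-name "$user" --policy-arn "$policy_arn"

  # 2) Delete every access key that belongs to the user.
  for key_id in $(aws iam list-access-keys --user-name "$user" \
      --query 'AccessKeyMetadata[].AccessKeyId' --output text); do
    aws iam delete-access-key --user-name "$user" --access-key-id "$key_id"
  done

  # 3) Delete the user itself.
  aws iam delete-user --user-name "$user"
}
```

At the end of the lab this would be run as `cleanup_iam_user testuser` from the first bastion, not from the testuser session.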

# Check get-caller-identity

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws sts get-caller-identity --query Arn

"arn:aws:iam::236747833953:user/leeeuijoo"

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl whoami

arn:aws:iam::236747833953:user/leeeuijoo

- [myeks-bastion-2] Configure and verify the testuser credentials

# This fails because no credentials are configured yet

[root@myeks2-bastion-2 ~]# aws sts get-caller-identity --query Arn

Unable to locate credentials. You can configure credentials by running "aws configure".

# Configure the testuser credentials

[root@myeks2-bastion-2 ~]# aws configure

AWS Access Key ID [None]:

..

[root@myeks2-bastion-2 ~]# aws sts get-caller-identity --query Arn

"arn:aws:iam::236747833953:user/testuser"

# Try kubectl >> testuser also has AdministratorAccess, but the call fails because there is no kubeconfig file

[root@myeks2-bastion-2 ~]# clear

[root@myeks2-bastion-2 ~]# kubectl get node -v6

I0412 23:09:59.345744 1821 round_trippers.go:553] GET http://localhost:8080/api?timeout=32s in 0 milliseconds

E0412 23:09:59.345863 1821 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

I0412 23:09:59.345904 1821 cached_discovery.go:120] skipped caching discovery info due to Get \

...

[root@myeks2-bastion-2 ~]# ls ~/.kube

ls: cannot access /root/.kube: No such file or directory

- [myeks-bastion] Give testuser EKS administrator-level access by adding it to the system:masters group

# Option 1: use eksctl >> running iamidentitymapping writes the aws-auth ConfigMap for you

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# eksctl get iamidentitymapping --cluster $CLUSTER_NAME

ARN USERNAME GROUPS ACCOUNT

arn:aws:iam::236747833953:role/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G system:node:{{EC2PrivateDNSName}} system:bootstrappers,system:nodes

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# eksctl create iamidentitymapping --cluster $CLUSTER_NAME --username testuser --group system:masters --arn arn:aws:iam::$ACCOUNT_ID:user/testuser

2024-04-12 23:13:26 [ℹ] checking arn arn:aws:iam::236747833953:user/testuser against entries in the auth ConfigMap

2024-04-12 23:13:26 [ℹ] adding identity "arn:aws:iam::236747833953:user/testuser" to auth ConfigMap

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# eksctl get iamidentitymapping --cluster $CLUSTER_NAME

ARN USERNAME GROUPS ACCOUNT

arn:aws:iam::236747833953:role/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G system:node:{{EC2PrivateDNSName}} system:bootstrappers,system:nodes

arn:aws:iam::236747833953:user/testuser testuser system:masters

- mapUsers, which was not visible in the aws-auth ConfigMap before, now exists

- The mapping was added by the eksctl command above; updates to this ConfigMap pass through the aws-eks-auth-configmap-validation-webhook

# reopened with the relevant failures.

#

apiVersion: v1

data:

mapRoles: |

- groups:

- system:bootstrappers

- system:nodes

rolearn: arn:aws:iam::236747833953:role/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G

username: system:node:{{EC2PrivateDNSName}}

mapUsers: |

- groups:

- system:masters

userarn: arn:aws:iam::236747833953:user/testuser

username: testuser

- [myeks-bastion-2] Create the testuser kubeconfig and verify kubectl

# Create the testuser kubeconfig >> aws eks update-kubeconfig can run because testuser was granted permissions when it was created.

[root@myeks2-bastion-2 ~]# aws eks update-kubeconfig --name $CLUSTER_NAME --user-alias testuser

Added new context testuser to /root/.kube/config

# Compare with the config on the first bastion EC2

-> The contents are the same.

# Verify kubectl

(testuser:N/A) [root@myeks2-bastion-2 ~]# kubectl ns default

Context "testuser" modified.

Active namespace is "default".

(testuser:default) [root@myeks2-bastion-2 ~]# kubectl get node -v6

I0412 23:21:36.278032 1988 loader.go:395] Config loaded from file: /root/.kube/config

I0412 23:21:36.966772 1988 round_trippers.go:553] GET https://D3C1852AD76B4F7ACB305E84C0E3FAD5.sk1.ap-northeast-2.eks.amazonaws.com/api/v1/nodes?limit=500 200 OK in 680 milliseconds

NAME STATUS ROLES AGE VERSION

ip-192-168-1-231.ap-northeast-2.compute.internal Ready <none> 136m v1.28.5-eks-5e0fdde

ip-192-168-2-179.ap-northeast-2.compute.internal Ready <none> 136m v1.28.5-eks-5e0fdde

ip-192-168-3-12.ap-northeast-2.compute.internal Ready <none> 136m v1.28.5-eks-5e0fdde

# Check with rbac-tool whoami >> compare with the original account.

{Username: "testuser",

UID: "aws-iam-authenticator:236747833953:AIDATOH2FIJQZQUSI5J7W",

Groups: ["system:masters",

"system:authenticated"],

Extra: {accessKeyId: ["AKIATOH2FIJQSSIHIZGL"],

arn: ["arn:aws:iam::236747833953:user/testuser"],

canonicalArn: ["arn:aws:iam::236747833953:user/testuser"],

principalId: ["AIDATOH2FIJQZQUSI5J7W"],

sessionName: [""]}}

- To see how much the ConfigMap matters, let's modify it.

# Edit mapUsers directly with the command below: change the group to system:authenticated

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl edit cm -n kube-system aws-auth

configmap/aws-auth edited

# Confirm the change

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# eksctl get iamidentitymapping --cluster $CLUSTER_NAME

ARN USERNAME GROUPS ACCOUNT

arn:aws:iam::236747833953:role/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G system:node:{{EC2PrivateDNSName}} system:bootstrappers,system:nodes

arn:aws:iam::236747833953:user/testuser testuser system:authenticated

- [myeks-bastion-2] Verify kubectl as testuser

- It fails:

(testuser:default) [root@myeks2-bastion-2 ~]# kubectl get node -v6

I0412 23:26:01.651031 2163 loader.go:395] Config loaded from file: /root/.kube/config

I0412 23:26:02.599377 2163 round_trippers.go:553] GET https://D3C1852AD76B4F7ACB305E84C0E3FAD5.sk1.ap-northeast-2.eks.amazonaws.com/api/v1/nodes?limit=500 403 Forbidden in 936 milliseconds

I0412 23:26:02.599785 2163 helpers.go:246] server response object: [{

"kind": "Status",

"apiVersion": "v1",

"metadata": {},

"status": "Failure",

"message": "nodes is forbidden: User \"testuser\" cannot list resource \"nodes\" in API group \"\" at the cluster scope",

"reason": "Forbidden",

"details": {

"kind": "nodes"

},

"code": 403

}]

Error from server (Forbidden): nodes is forbidden: User "testuser" cannot list resource "nodes" in API group "" at the cluster scope

$ kubectl api-resources -v5

(testuser:default) [root@myeks2-bastion-2 ~]# kubectl api-resources -v5

NAME SHORTNAMES APIVERSION NAMESPACED KIND

bindings v1 true Binding

componentstatuses cs v1 false ComponentStatus

configmaps cm v1 true ConfigMap

endpoints ep v1 true Endpoints

events ev v1 true Event

limitranges limits v1 true LimitRange

namespaces ns v1 false Namespace

nodes no v1 false Node

persistentvolumeclaims pvc v1 true PersistentVolumeClaim

persistentvolumes pv v1 false PersistentVolume

pods po v1 true Pod

podtemplates v1 true PodTemplate

replicationcontrollers rc v1 true ReplicationController

resourcequotas quota v1 true ResourceQuota

secrets v1 true Secret

serviceaccounts sa v1 true ServiceAccount

services svc v1 true Service

mutatingwebhookconfigurations admissionregistration.k8s.io/v1 false MutatingWebhookConfiguration

validatingwebhookconfigurations admissionregistration.k8s.io/v1 false ValidatingWebhookConfiguration

customresourcedefinitions crd,crds apiextensions.k8s.io/v1 false CustomResourceDefinition

apiservices apiregistration.k8s.io/v1 false APIService

controllerrevisions apps/v1 true ControllerRevision

daemonsets ds apps/v1 true DaemonSet

deployments deploy apps/v1 true Deployment

replicasets rs apps/v1 true ReplicaSet

statefulsets sts apps/v1 true StatefulSet

selfsubjectreviews authentication.k8s.io/v1 false SelfSubjectReview

tokenreviews authentication.k8s.io/v1 false TokenReview

localsubjectaccessreviews authorization.k8s.io/v1 true LocalSubjectAccessReview

selfsubjectaccessreviews authorization.k8s.io/v1 false SelfSubjectAccessReview

selfsubjectrulesreviews authorization.k8s.io/v1 false SelfSubjectRulesReview

subjectaccessreviews authorization.k8s.io/v1 false SubjectAccessReview

horizontalpodautoscalers hpa autoscaling/v2 true HorizontalPodAutoscaler

cronjobs cj batch/v1 true CronJob

jobs batch/v1 true Job

certificatesigningrequests csr certificates.k8s.io/v1 false CertificateSigningRequest

leases coordination.k8s.io/v1 true Lease

eniconfigs crd.k8s.amazonaws.com/v1alpha1 false ENIConfig

endpointslices discovery.k8s.io/v1 true EndpointSlice

ingressclassparams elbv2.k8s.aws/v1beta1 false IngressClassParams

targetgroupbindings elbv2.k8s.aws/v1beta1 true TargetGroupBinding

events ev events.k8s.io/v1 true Event

flowschemas flowcontrol.apiserver.k8s.io/v1beta3 false FlowSchema

prioritylevelconfigurations flowcontrol.apiserver.k8s.io/v1beta3 false PriorityLevelConfiguration

nodes metrics.k8s.io/v1beta1 false NodeMetrics

pods metrics.k8s.io/v1beta1 true PodMetrics

alertmanagerconfigs amcfg monitoring.coreos.com/v1alpha1 true AlertmanagerConfig

alertmanagers am monitoring.coreos.com/v1 true Alertmanager

podmonitors pmon monitoring.coreos.com/v1 true PodMonitor

probes prb monitoring.coreos.com/v1 true Probe

prometheusagents promagent monitoring.coreos.com/v1alpha1 true PrometheusAgent

prometheuses prom monitoring.coreos.com/v1 true Prometheus

prometheusrules promrule monitoring.coreos.com/v1 true PrometheusRule

scrapeconfigs scfg monitoring.coreos.com/v1alpha1 true ScrapeConfig

servicemonitors smon monitoring.coreos.com/v1 true ServiceMonitor

thanosrulers ruler monitoring.coreos.com/v1 true ThanosRuler

policyendpoints networking.k8s.aws/v1alpha1 true PolicyEndpoint

ingressclasses networking.k8s.io/v1 false IngressClass

ingresses ing networking.k8s.io/v1 true Ingress

networkpolicies netpol networking.k8s.io/v1 true NetworkPolicy

runtimeclasses node.k8s.io/v1 false RuntimeClass

poddisruptionbudgets pdb policy/v1 true PodDisruptionBudget

clusterrolebindings rbac.authorization.k8s.io/v1 false ClusterRoleBinding

clusterroles rbac.authorization.k8s.io/v1 false ClusterRole

rolebindings rbac.authorization.k8s.io/v1 true RoleBinding

roles rbac.authorization.k8s.io/v1 true Role

priorityclasses pc scheduling.k8s.io/v1 false PriorityClass

csidrivers storage.k8s.io/v1 false CSIDriver

csinodes storage.k8s.io/v1 false CSINode

csistoragecapacities storage.k8s.io/v1 true CSIStorageCapacity

storageclasses sc storage.k8s.io/v1 false StorageClass

volumeattachments storage.k8s.io/v1 false VolumeAttachment

cninodes cnd vpcresources.k8s.aws/v1alpha1 false CNINode

securitygrouppolicies sgp vpcresources.k8s.aws/v1beta1 true SecurityGroupPolicy

- [myeks-bastion] Delete the testuser IAM mapping

- The entry is removed from mapUsers

# Delete the testuser IAM mapping

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# eksctl delete iamidentitymapping --cluster $CLUSTER_NAME --arn arn:aws:iam::$ACCOUNT_ID:user/testuser

2024-04-12 23:28:35 [ℹ] removing identity "arn:aws:iam::236747833953:user/testuser" from auth ConfigMap (username = "testuser", groups = ["system:authenticated"])

# Get IAM identity mapping(s)

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# eksctl get iamidentitymapping --cluster $CLUSTER_NAME

ARN USERNAME GROUPS ACCOUNT

arn:aws:iam::236747833953:role/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G system:node:{{EC2PrivateDNSName}} system:bootstrappers,system:nodes

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl get cm -n kube-system aws-auth -o yaml | yh

...

mapUsers: |

[]

kind: ConfigMap

metadata:

creationTimestamp: "2024-04-12T12:04:18Z"

name: aws-auth

namespace: kube-system

resourceVersion: "44284"

uid: fad6d576-294c-42e6-882e-e5012465be0a

- [myeks-bastion-2] Verify kubectl as testuser

- Neither authentication nor authorization succeeds anymore (401 Unauthorized)

(testuser:default) [root@myeks2-bastion-2 ~]# kubectl get node -v6

I0412 23:30:23.250487 2378 loader.go:395] Config loaded from file: /root/.kube/config

I0412 23:30:24.646041 2378 round_trippers.go:553] GET https://D3C1852AD76B4F7ACB305E84C0E3FAD5.sk1.ap-northeast-2.eks.amazonaws.com/api/v1/nodes?limit=500 401 Unauthorized in 1386 milliseconds

I0412 23:30:24.646308 2378 helpers.go:246] server response object: [{

"kind": "Status",

"apiVersion": "v1",

"metadata": {},

"status": "Failure",

"message": "Unauthorized",

"reason": "Unauthorized",

"code": 401

}]

error: You must be logged in to the server (Unauthorized)
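The two failures seen so far differ in kind. With the group changed to system:authenticated the API server answered 403 Forbidden: testuser was recognized, but RBAC denied the request. With the mapping deleted it answers 401 Unauthorized: the IAM identity can no longer be mapped to a Kubernetes user at all. The helper below is only an illustrative sketch with a hypothetical function name, and its wording describes the causes seen in this lab (a 401 can have other causes, such as an expired token).

```shell
# Hypothetical helper: describe what an API server status code meant in this lab.
explain_k8s_status() {
  case "$1" in
    200) echo "ok: authenticated and authorized" ;;
    401) echo "authentication failed: the IAM identity is not mapped to a Kubernetes user" ;;
    403) echo "authorization failed: the user is known, but RBAC denies the request" ;;
    *)   echo "other: HTTP $1" ;;
  esac
}

explain_k8s_status 403
explain_k8s_status 401
```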

(testuser:default) [root@myeks2-bastion-2 ~]# kubectl api-resources -v5

E0412 23:30:29.776461 2432 memcache.go:265] couldn't get current server API group list: the server has asked for the client to provide credentials

I0412 23:30:29.776486 2432 cached_discovery.go:120] skipped caching discovery info due to the server has asked for the client to provide credentials

NAME SHORTNAMES APIVERSION NAMESPACED KIND

I0412 23:30:29.776667 2432 helpers.go:246] server response object: [{

"metadata": {},

"status": "Failure",

"message": "the server has asked for the client to provide credentials",

"reason": "Unauthorized",

"details": {

"causes": [

{

"reason": "UnexpectedServerResponse",

"message": "unknown"

}

]

},

"code": 401

}]

error: You must be logged in to the server (the server has asked for the client to provide credentials)

Sample Config File

# Please edit the object below. Lines beginning with a '#' will be ignored,

# and an empty file will abort the edit. If an error occurs while saving this file will be

# reopened with the relevant failures.

#

apiVersion: v1

data:

mapRoles: |

- groups:

- system:bootstrappers

- system:nodes

rolearn: arn:aws:iam::111122223333:role/my-role

username: system:node:{{EC2PrivateDNSName}}

- groups:

- eks-console-dashboard-full-access-group

rolearn: arn:aws:iam::111122223333:role/my-console-viewer-role

username: my-console-viewer-role

mapUsers: |

- groups:

- system:masters

userarn: arn:aws:iam::111122223333:user/admin

username: admin

- groups:

- eks-console-dashboard-restricted-access-group

userarn: arn:aws:iam::444455556666:user/my-user

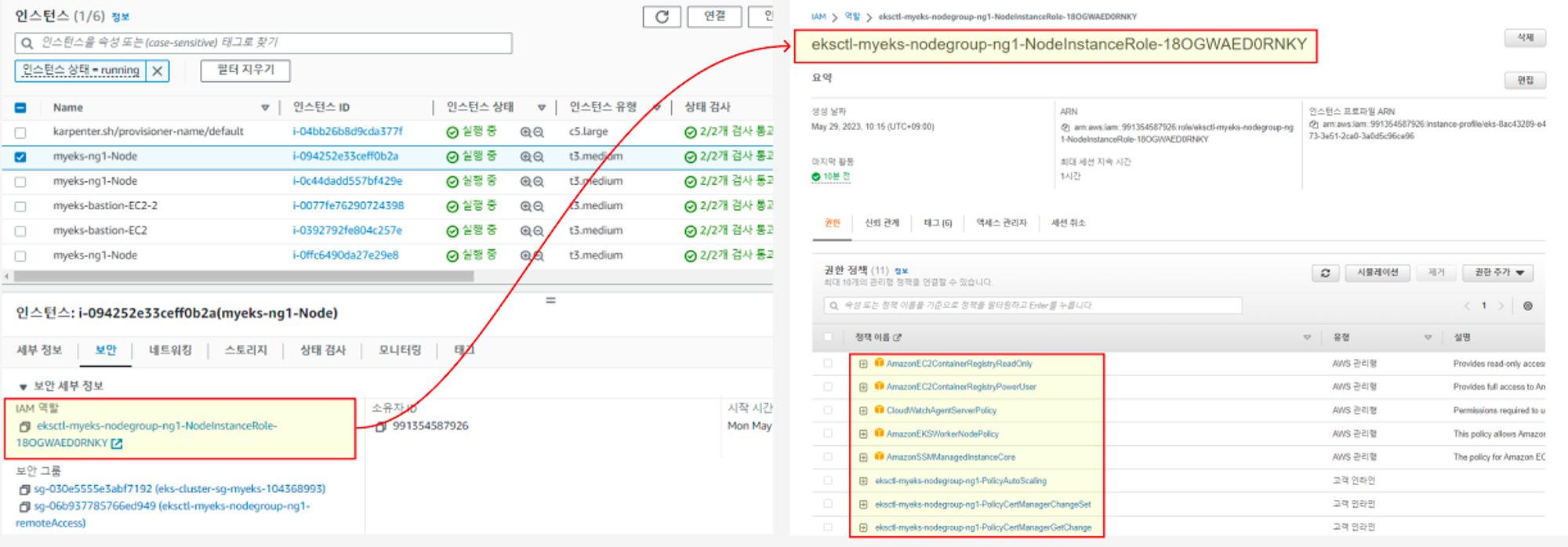

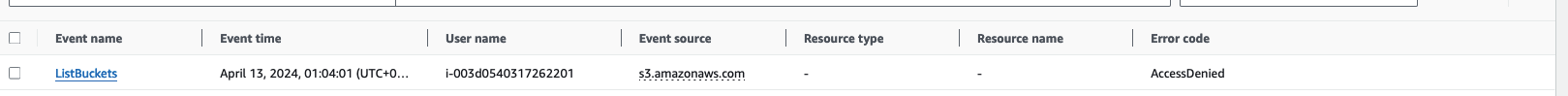

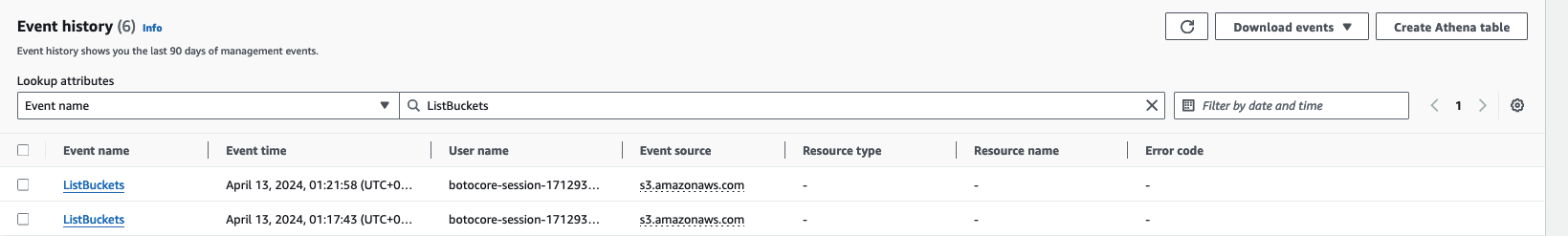

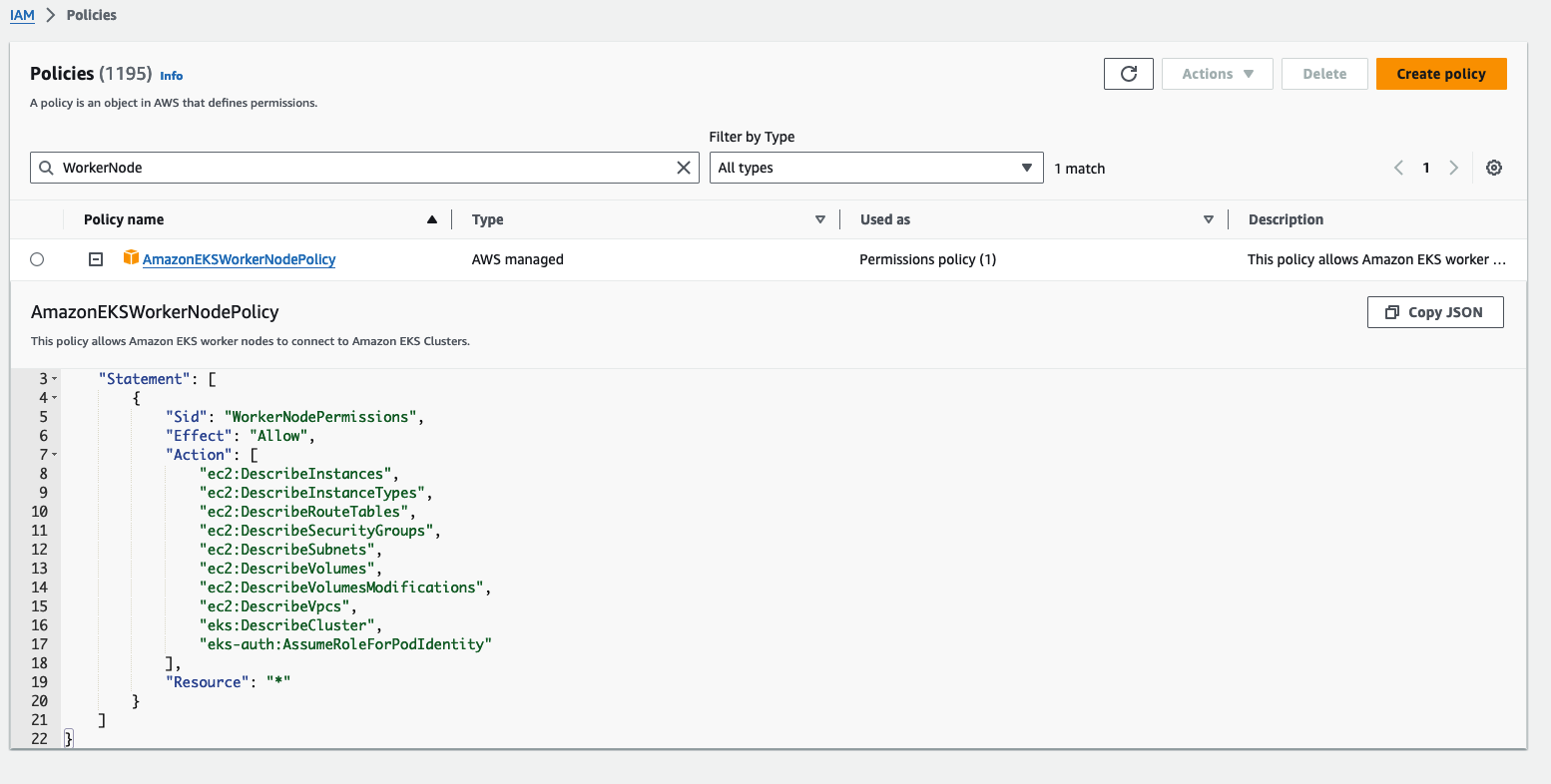

username: my-user- EC2 Instance Profile(IAM Role)에 맵핑된 k8s rbac 확인 해보기

- 노드 mapRoles 확인

# 노드에 STS ARN 정보 확인 : Role 뒤에는 구분자인 인스턴스 ID 입니다.

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# for node in $N1 $N2 $N3; do ssh ec2-user@$node aws sts get-caller-identity --query Arn; done

"arn:aws:sts::236747833953:assumed-role/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G/i-0ba38cf4130566468"

"arn:aws:sts::236747833953:assumed-role/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G/i-0b895572c43f3680b"

"arn:aws:sts::236747833953:assumed-role/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G/i-003d0540317262201"
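To make the structure of these ARNs explicit, here is a small sketch that splits an assumed-role ARN into the role name and the session name (the instance ID in the output above). The function name `parse_assumed_role_arn` and the sample ARN are made up for illustration.

```shell
# Split an STS assumed-role ARN of the form
#   arn:aws:sts::<account>:assumed-role/<role-name>/<session-name>
# Hypothetical helper, not part of the original lab.
parse_assumed_role_arn() {
  arn="$1"
  session="${arn##*/}"   # text after the last "/"
  rest="${arn%/*}"       # ARN without the session name
  role="${rest##*/}"     # text after the remaining last "/"
  echo "role=$role session=$session"
}

# Sample value with a placeholder account ID, role name, and instance ID.
parse_assumed_role_arn "arn:aws:sts::111122223333:assumed-role/my-node-role/i-0123456789abcdef0"
```

When feeding it real output, adding `--output text` to `aws sts get-caller-identity --query Arn` avoids the surrounding double quotes.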

# Check the aws-auth ConfigMap >> see which permissions system:nodes and system:bootstrappers have

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl describe configmap -n kube-system aws-auth

Name: aws-auth

Namespace: kube-system

Labels: <none>

Annotations: <none>

Data

====

mapRoles:

----

- groups:

- system:bootstrappers

- system:nodes

rolearn: arn:aws:iam::236747833953:role/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G

username: system:node:{{EC2PrivateDNSName}}

mapUsers:

----

[]

BinaryData

====

Events: <none>

# Get IAM identity mapping(s)

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# eksctl get iamidentitymapping --cluster $CLUSTER_NAME

ARN USERNAME GROUPS ACCOUNT

arn:aws:iam::236747833953:role/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G system:node:{{EC2PrivateDNSName}} system:bootstrappers,system:nodes

- Add awscli pods and check the IMDS information of their nodes (EC2): create AWS CLI v2 pods

# Create the awscli pods

cat <<EOF | kubectl create -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: awscli-pod
spec:
  replicas: 2
  selector:
    matchLabels:
      app: awscli-pod
  template:
    metadata:
      labels:
        app: awscli-pod
    spec:
      containers:
      - name: awscli-pod
        image: amazon/aws-cli
        command: ["tail"]
        args: ["-f", "/dev/null"]
      terminationGracePeriodSeconds: 0
EOF

# Confirm the pods were created

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

awscli-pod-5bdb44b5bd-9b99k 1/1 Running 0 16s 192.168.1.162 ip-192-168-1-231.ap-northeast-2.compute.internal <none> <none>

awscli-pod-5bdb44b5bd-g6gdk 1/1 Running 0 16s 192.168.3.16 ip-192-168-3-12.ap-northeast-2.compute.internal <none> <none>

# Store the pod names in variables

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# APODNAME1=$(kubectl get pod -l app=awscli-pod -o jsonpath={.items[0].metadata.name})

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# APODNAME2=$(kubectl get pod -l app=awscli-pod -o jsonpath={.items[1].metadata.name})

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# echo $APODNAME1, $APODNAME2

awscli-pod-5bdb44b5bd-9b99k, awscli-pod-5bdb44b5bd-g6gdk

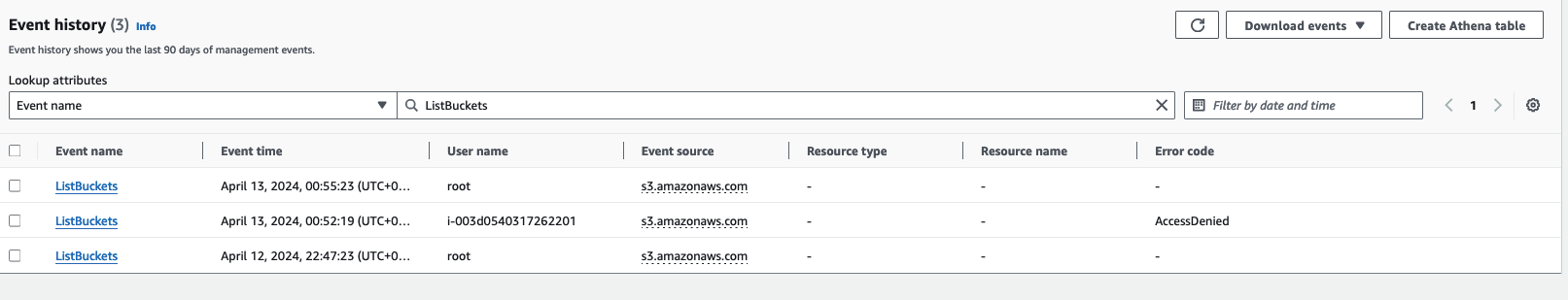

# Check the ARN of the EC2 instance profile (IAM Role) from the awscli pods - wait, why does this work?

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl exec -it $APODNAME1 -- aws sts get-caller-identity --query Arn

"arn:aws:sts::236747833953:assumed-role/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G/i-0ba38cf4130566468"

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl exec -it $APODNAME2 -- aws sts get-caller-identity --query Arn

"arn:aws:sts::236747833953:assumed-role/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G/i-003d0540317262201"

# Query AWS services from the awscli pods using the EC2 instance profile (IAM Role) >> the pods have no IAM credentials of their own, so how can they query anything?

kubectl exec -it $APODNAME1 -- aws ec2 describe-instances --region ap-northeast-2 --output table --no-cli-pager

kubectl exec -it $APODNAME2 -- aws ec2 describe-vpcs --region ap-northeast-2 --output table --no-cli-pager

- The queries work because of the permissions of the IAM Role attached to the EC2 instance.

- Pods running on the node can use those same permissions.

# Check the EC2 metadata: IMDSv1 is disabled, IMDSv2 is enabled, IAM Role

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl exec -it $APODNAME1 -- bash

bash-4.2#

---------------

bash-4.2# curl -s http://169.254.169.254/ -v

* Trying 169.254.169.254:80...

* Connected to 169.254.169.254 (169.254.169.254) port 80

> GET / HTTP/1.1

> Host: 169.254.169.254

> User-Agent: curl/8.3.0

> Accept: */*

>

< HTTP/1.1 401 Unauthorized

< Content-Length: 0

< Date: Fri, 12 Apr 2024 14:45:28 GMT

< Server: EC2ws

< Connection: close

< Content-Type: text/plain

<

* Closing connection

# Request a token

bash-4.2# curl -s -X PUT "http://169.254.169.254/latest/api/token" -H "X-aws-ec2-metadata-token-ttl-seconds: 21600" ; echo

AQAEAGvssGgyhaNFZdQEwAJ4-GhOCOZ5t-w1_mZsfyymWFB0OW4WEw==

# Use IMDSv2 with the token

bash-4.2# TOKEN=$(curl -s -X PUT "http://169.254.169.254/latest/api/token" -H "X-aws-ec2-metadata-token-ttl-seconds: 21600")

bash-4.2# curl -s -H "X-aws-ec2-metadata-token: $TOKEN" http://169.254.169.254/ ; echo

1.0

2007-01-19

2007-03-01

2007-08-29

2007-10-10

2007-12-15

2008-02-01

...

bash-4.2# curl -s -H "X-aws-ec2-metadata-token: $TOKEN" http://169.254.169.254/latest/ ; echo

dynamic

meta-data

user-data

...

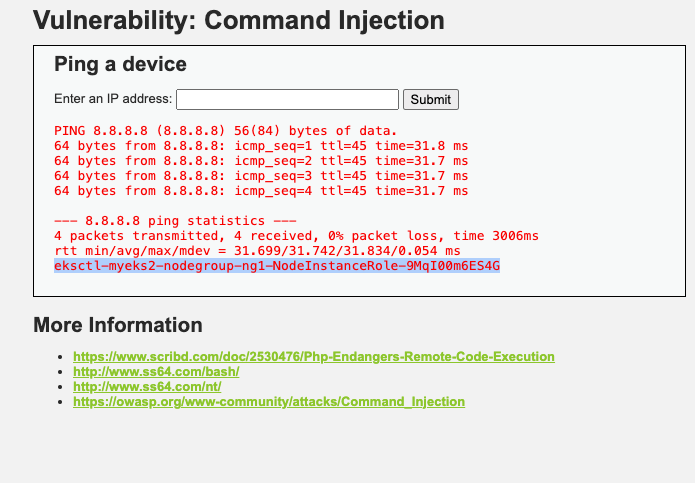

bash-4.2# curl -s -H "X-aws-ec2-metadata-token: $TOKEN" http://169.254.169.254/latest/meta-data/iam/security-credentials/ ; echo

eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G

# Append the IAM Role name printed above to the path and query it

curl -s -H "X-aws-ec2-metadata-token: $TOKEN" http://169.254.169.254/latest/meta-data/iam/security-credentials/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G

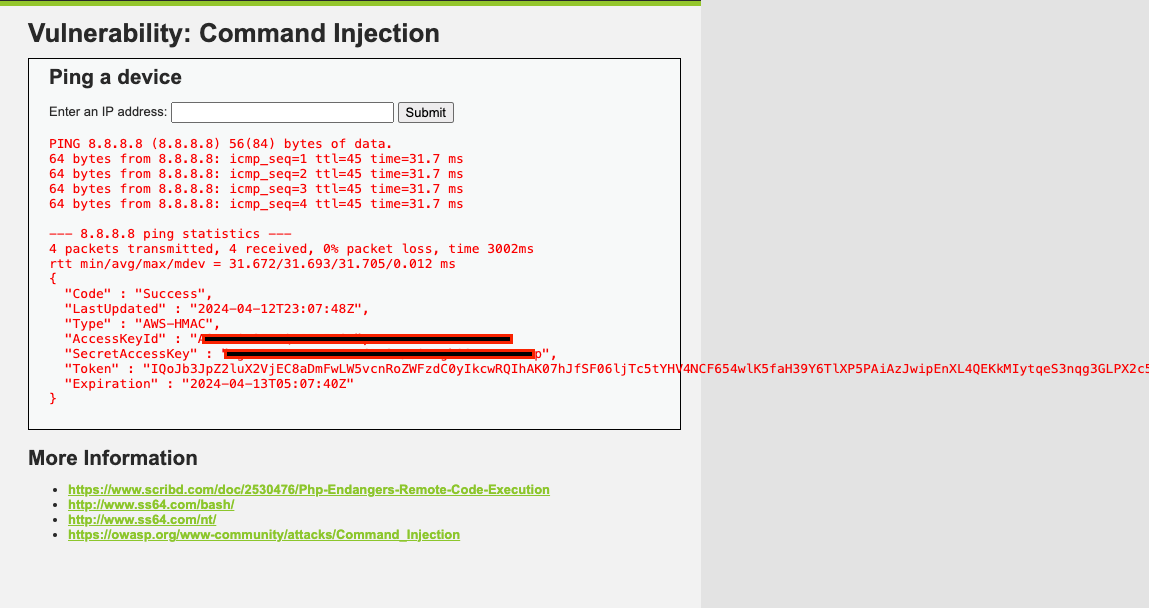

bash-4.2# curl -s -H "X-aws-ec2-metadata-token: $TOKEN" http://169.254.169.254/latest/meta-data/iam/security-credentials/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G

{

"Code" : "Success",

"LastUpdated" : "2024-04-12T14:46:05Z",

"Type" : "AWS-HMAC",

"AccessKeyId" : "ASIATOH2FIJQUA4MUTSA",

"SecretAccessKey" : "rAHJHsvgXfe3lhf7BVsWB66KtTYXxeClkyOuhhhQ",

"Token" : "IQoJb3JpZ2luX2VjECcaDmFwLW5vcnRoZWFzdC0yIkYwRAIgRhe1HYJN3bBxErWe5CGJJGwstnPNDER/ky+bcKx7kgwCIGGGTSecT1cikanId3PssqlUOOrO/BG3DGMYVURDcl3cKswFCGAQARoMMjM2NzQ3ODMzOTUzIgwvypWs2vej2GLe4nIqqQXbyPQrp6vW8M7RwusZIMJkOgTnLWnJ++20NmBa9QNBx1o2kYPonB6uiWtpcG2V1w9/dfAFMVyswqlmenkw8SZPAnACVOBc+ydSKprGdf3eT4N452Y6cY1huGxlMVVch6KrOjrizoX+qtblvfZGDR7hHA3S9/WAbVk78NuAHUT8mD1HjVNEuRu4TmeC8HApwKtymLuo8fX9qqr9pTr+h0aYd82Dp+rOMar+t2AKD8TmvkkuMjiQBNJqAH2nP3TcoGsomlfX+/FYytB13sBLzMs3C2QxiCnan24b6lJNCx9gjsgERSOnfqm76V5AvRPrnFeEadhX0PixmsXnL7D58/eQnrCkeVBTxEVLaDe9LLo9ptCe+67Bs1CbEMbQCMvwR/8wKtipY5uKASrH9yRq+s2FmmCT6XELfpxarBDd2YyHAt5SegBXPZJSjnZFLAuba8a2djGauKeXRLcYu0XLwXIVNpiSPl+L7IjVO3OeYtaPpBi+QIj+naqDWE+xKMTTitwFzME+DFSyM89xmEWvubJEzXbf+Wr8W9SSvdtxovmdKRSy6A8A4Y5/N87Iesb5vil7dllptgDGGc/39xlmTBhTR7mUniF4zz6rhaYQw+wVkLeAYis0nid96Lm4KTYaxBkAd6vrunvQo3/vScBpVv2G/CXOdYpMsCLB4FE8bIhP6CzZoy/EsK7e7lGGL1Ayfrxnt2Pb7aN57w+aN1j3SoN0jY+UYKxSLT2X0AH536SjnPofsUM6irv+Cgo4PsMQ29owUbF5zkI68gSa1sPaC+eyzHPY9YtArAMgmABPrK9AQNGtBG1uQRfxfsLivD3+N5XJ6wn/UIjRGTIsffnvOsRqX5ytL1oVcADEOsOHD0NzajQbo4EnuVCZWKj61NUnxf+qmJJAEgXlBrEw9ZHlsAY6sgGki7JEAgVA8CvpZFWrt2PKY16+PKpkkc0vDLO8NbS8gkApNnY96T50UcI3932HeXd/n26d1pBCYY4XB1up1TW1MTR0NU0RUoi4FJsiu8FVpy1UAv9bG9QMWxLmuSqTU0xXDBmAbvaq9X9QmAFm+jEG9Tpm/SABW4MxnZ/HY5qKDw2IH+88KiGyt/Xj7KMxzRvaTUE/A/NXcWCa8pitnZl/RW4GvDL1lFL5gzNDia6efe4D",

"Expiration" : "2024-04-12T20:52:39Z"

}

## The credentials printed above can be used from anywhere that can reach the AWS API until they expire - dangerous

exit
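The two-step IMDSv2 flow used above (PUT for a session token, then GET with the token header) can be wrapped in one function. This is a minimal sketch: `imds_get` and the `IMDS_ENDPOINT` variable are hypothetical names, and the 60-second TTL is an arbitrary choice for short-lived use.

```shell
# Hypothetical helper: fetch one IMDSv2 path using a session token.
# IMDS_ENDPOINT is an illustrative variable that defaults to the link-local address.
IMDS_ENDPOINT="${IMDS_ENDPOINT:-http://169.254.169.254}"

imds_get() {
  path="$1"
  # Step 1: request a session token (PUT), valid here for 60 seconds.
  token=$(curl -s -X PUT "$IMDS_ENDPOINT/latest/api/token" \
    -H "X-aws-ec2-metadata-token-ttl-seconds: 60") || return 1
  # Step 2: send the token as a header on the actual metadata request (GET).
  curl -s -H "X-aws-ec2-metadata-token: $token" "$IMDS_ENDPOINT/$path"
}
```

Inside the pod it would be called as, for example, `imds_get latest/meta-data/iam/security-credentials/`. It only works where the metadata endpoint is reachable, which is exactly the exposure this section warns about.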

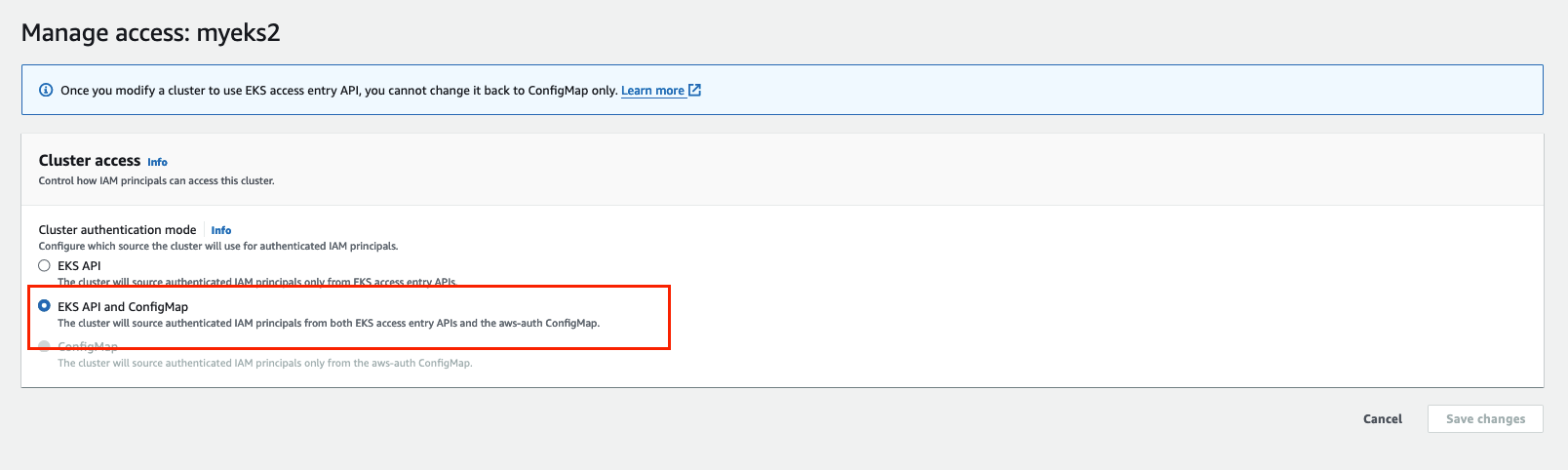

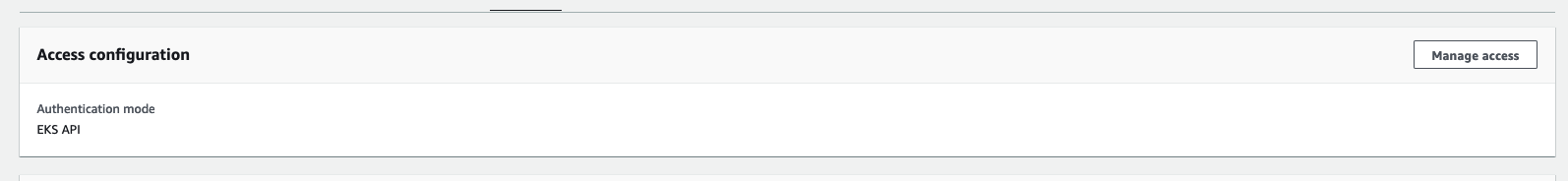

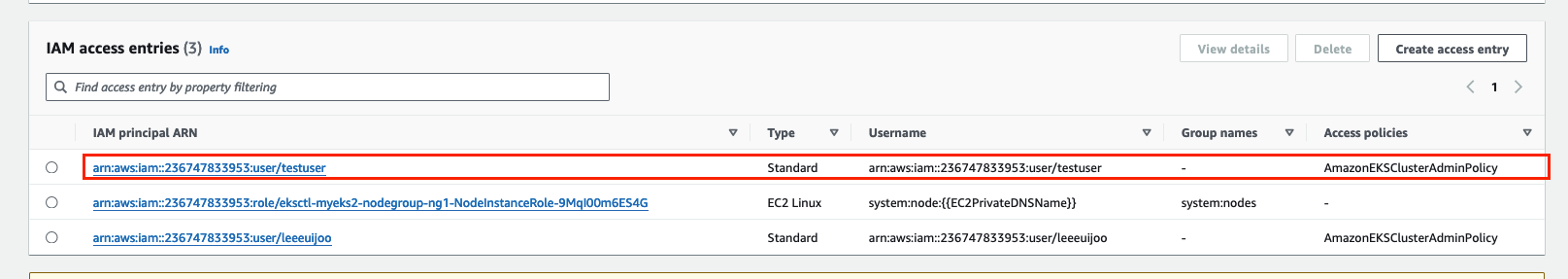

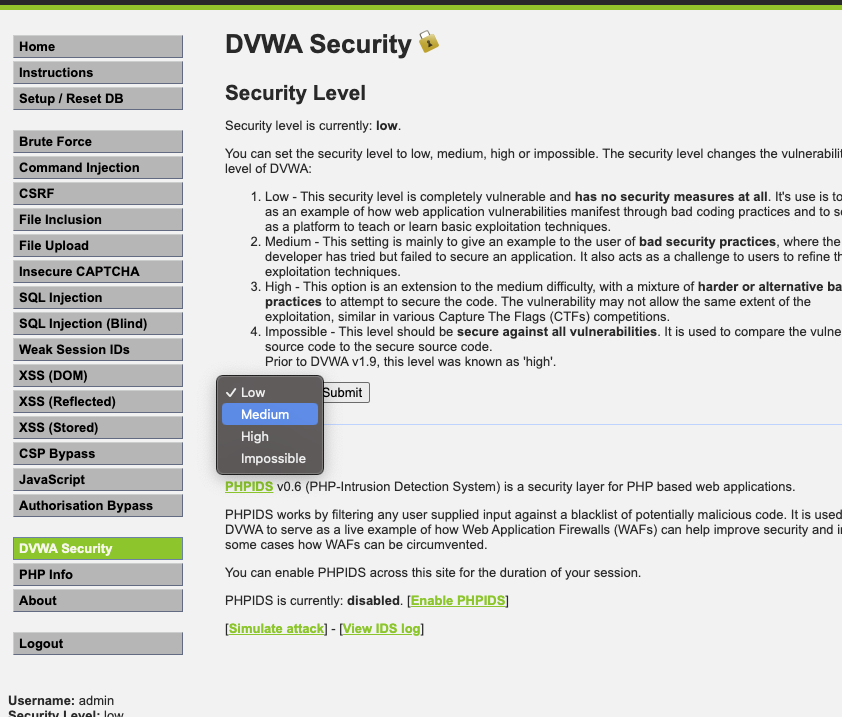

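New feature: A deep dive into simplified Amazon EKS access management controls

- Enabled by default

- EKS → Access: IAM access entries

- EKS → Check the access configuration mode: EKS API and ConfigMap ← when entries overlap, the EKS API takes precedence and the ConfigMap entry is ignored

- In this mode, authentication is handled through both methods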

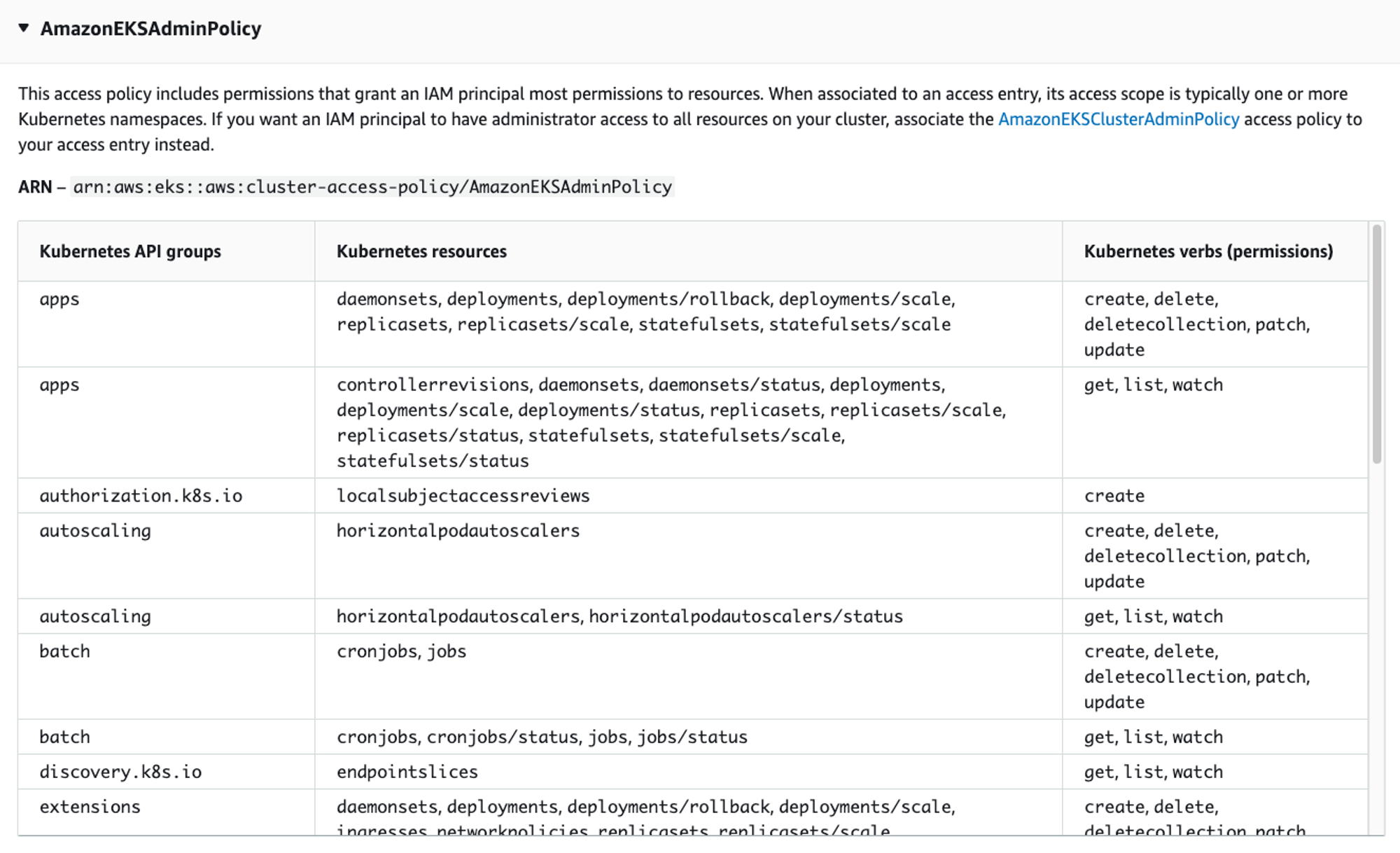

- Basics: access is built by combining three things, access policy, access entry, and associated-access-policy.

# Switch to the EKS API access mode

aws eks update-cluster-config --name $CLUSTER_NAME --access-config authenticationMode=API

# List all access policies: the access policies supported for cluster access management

## AmazonEKSClusterAdminPolicy – cluster administrator

## AmazonEKSAdminPolicy – administrator

## AmazonEKSEditPolicy – edit

## AmazonEKSViewPolicy – view

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws eks list-access-policies | jq

{

"accessPolicies": [

{

"name": "AmazonEKSAdminPolicy",

"arn": "arn:aws:eks::aws:cluster-access-policy/AmazonEKSAdminPolicy"

},

{

"name": "AmazonEKSClusterAdminPolicy",

"arn": "arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy"

},

{

"name": "AmazonEKSEditPolicy",

"arn": "arn:aws:eks::aws:cluster-access-policy/AmazonEKSEditPolicy"

},

{

"name": "AmazonEKSViewPolicy",

"arn": "arn:aws:eks::aws:cluster-access-policy/AmazonEKSViewPolicy"

},

{

"name": "AmazonEMRJobPolicy",

"arn": "arn:aws:eks::aws:cluster-access-policy/AmazonEMRJobPolicy"

}

]

}

# Check the ClusterRoles these policies map to

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl get clusterroles -l 'kubernetes.io/bootstrapping=rbac-defaults' | grep -v 'system:'

NAME CREATED AT

admin 2024-04-12T11:54:42Z

cluster-admin 2024-04-12T11:54:42Z

edit 2024-04-12T11:54:42Z

view 2024-04-12T11:54:42Z

kubectl describe clusterroles admin

kubectl describe clusterroles cluster-admin

kubectl describe clusterroles edit

kubectl describe clusterroles view
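The four general-purpose access policies line up by name with the four default user-facing ClusterRoles listed above. The lookup below sketches that pairing. Treat it as an assumed correspondence for orientation, not a permission-for-permission equivalence; the function name is hypothetical.

```shell
# Hypothetical lookup: EKS access policy name -> similarly named default ClusterRole.
clusterrole_for_access_policy() {
  case "$1" in
    AmazonEKSClusterAdminPolicy) echo "cluster-admin" ;;
    AmazonEKSAdminPolicy)        echo "admin" ;;
    AmazonEKSEditPolicy)         echo "edit" ;;
    AmazonEKSViewPolicy)         echo "view" ;;
    *)                           echo "unknown"; return 1 ;;
  esac
}

clusterrole_for_access_policy AmazonEKSViewPolicy
```

The returned name can be passed to `kubectl describe clusterroles` to compare its rules with what a principal holding that access policy can actually do.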

- Verify the change

From this point on the aws-auth ConfigMap can no longer be used for access. Keep this in mind.

- Set up testuser

# Create an access entry for testuser

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws eks create-access-entry --cluster-name $CLUSTER_NAME --principal-arn arn:aws:iam::$ACCOUNT_ID:user/testuser

{

"accessEntry": {

"clusterName": "myeks2",

"principalArn": "arn:aws:iam::236747833953:user/testuser",

"kubernetesGroups": [],

"accessEntryArn": "arn:aws:eks:ap-northeast-2:236747833953:access-entry/myeks2/user/236747833953/testuser/56c7696d-9b7b-6128-2887-ad677a81b0cc",

"createdAt": "2024-04-13T00:09:25.714000+09:00",

"modifiedAt": "2024-04-13T00:09:25.714000+09:00",

"tags": {},

"username": "arn:aws:iam::236747833953:user/testuser",

"type": "STANDARD"

}

}

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws eks list-access-entries --cluster-name $CLUSTER_NAME | jq -r .accessEntries[]

arn:aws:iam::236747833953:role/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G

arn:aws:iam::236747833953:user/leeeuijoo

arn:aws:iam::236747833953:user/testuser

# Associate AmazonEKSClusterAdminPolicy with testuser

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws eks associate-access-policy --cluster-name $CLUSTER_NAME --principal-arn arn:aws:iam::$ACCOUNT_ID:user/testuser \

> --policy-arn arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy --access-scope type=cluster

{

"clusterName": "myeks2",

"principalArn": "arn:aws:iam::236747833953:user/testuser",

"associatedAccessPolicy": {

"policyArn": "arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy",

"accessScope": {

"type": "cluster",

"namespaces": []

},

"associatedAt": "2024-04-13T00:10:04.648000+09:00",

"modifiedAt": "2024-04-13T00:10:04.648000+09:00"

}

}

# associated-access-policy

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws eks list-associated-access-policies --cluster-name $CLUSTER_NAME --principal-arn arn:aws:iam::$ACCOUNT_ID:user/testuser | jq

{

"associatedAccessPolicies": [

{

"policyArn": "arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy",

"accessScope": {

"type": "cluster",

"namespaces": []

},

"associatedAt": "2024-04-13T00:10:04.648000+09:00",

"modifiedAt": "2024-04-13T00:10:04.648000+09:00"

}

],

"clusterName": "myeks2",

"principalArn": "arn:aws:iam::236747833953:user/testuser"

}

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws eks describe-access-entry --cluster-name $CLUSTER_NAME --principal-arn arn:aws:iam::$ACCOUNT_ID:user/testuser | jq

{

"accessEntry": {

"clusterName": "myeks2",

"principalArn": "arn:aws:iam::236747833953:user/testuser",

"kubernetesGroups": [],

"accessEntryArn": "arn:aws:eks:ap-northeast-2:236747833953:access-entry/myeks2/user/236747833953/testuser/56c7696d-9b7b-6128-2887-ad677a81b0cc",

"createdAt": "2024-04-13T00:09:25.714000+09:00",

"modifiedAt": "2024-04-13T00:09:25.714000+09:00",

"tags": {},

"username": "arn:aws:iam::236747833953:user/testuser",

"type": "STANDARD"

}

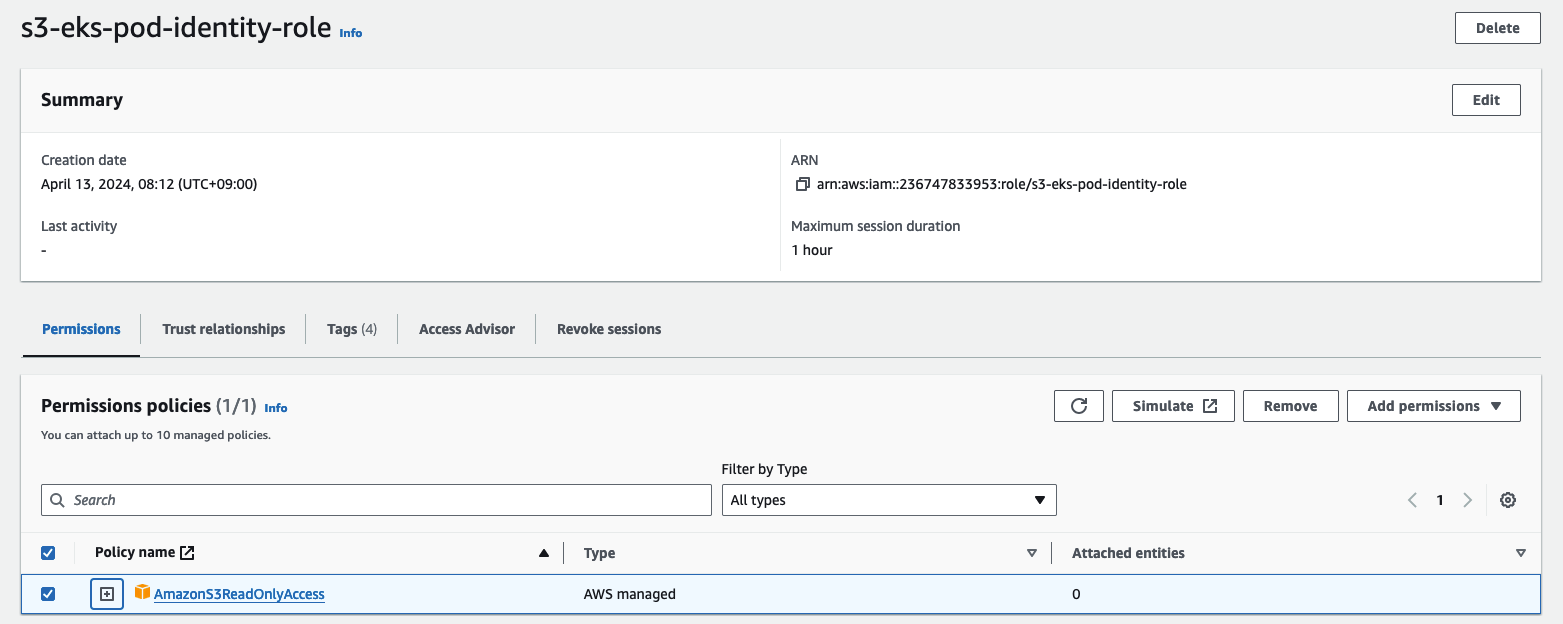

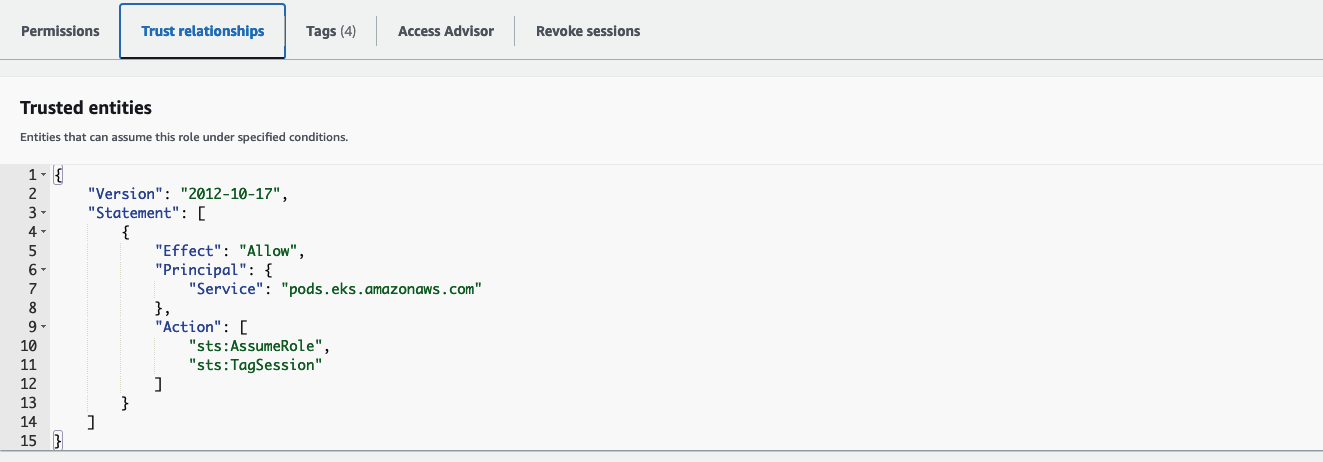

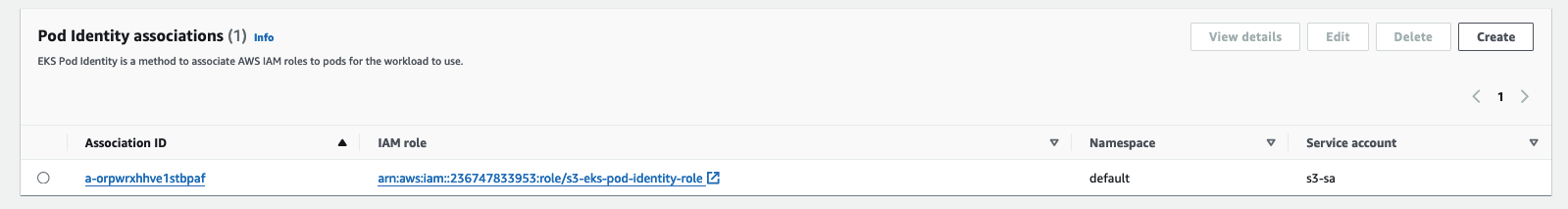

}- 콘솔에서 확인

- [myeks-bastion-2]에서 testuser로 확인

# testuser 정보 확인

(testuser:default) [root@myeks2-bastion-2 ~]# clear

(testuser:default) [root@myeks2-bastion-2 ~]# aws sts get-caller-identity --query Arn

"arn:aws:iam::236747833953:user/testuser"

(testuser:default) [root@myeks2-bastion-2 ~]# kubectl whoami

arn:aws:iam::236747833953:user/testuser

# Try kubectl

(testuser:default) [root@myeks2-bastion-2 ~]# kubectl get pod -v6

I0413 00:15:04.242775 2928 loader.go:395] Config loaded from file: /root/.kube/config

I0413 00:15:05.288504 2928 round_trippers.go:553] GET https://D3C1852AD76B4F7ACB305E84C0E3FAD5.sk1.ap-northeast-2.eks.amazonaws.com/api/v1/namespaces/default/pods?limit=500 200 OK in 1024 milliseconds

NAME READY STATUS RESTARTS AGE

awscli-pod-5bdb44b5bd-9b99k 1/1 Running 0 35m

awscli-pod-5bdb44b5bd-g6gdk 1/1 Running 0 35m

$ kubectl api-resources -v5

(testuser:default) [root@myeks2-bastion-2 ~]# kubectl rbac-tool whoami

{Username: "arn:aws:iam::236747833953:user/testuser",

UID: "aws-iam-authenticator:236747833953:AIDATOH2FIJQZQUSI5J7W",

Groups: ["system:authenticated"],

Extra: {accessKeyId: ["AKIATOH2FIJQSSIHIZGL"],

arn: ["arn:aws:iam::236747833953:user/testuser"],

canonicalArn: ["arn:aws:iam::236747833953:user/testuser"],

principalId: ["AIDATOH2FIJQZQUSI5J7W"],

sessionName: [""]}}

(testuser:default) [root@myeks2-bastion-2 ~]# kubectl auth can-i get pods --all-namespaces

yes

(testuser:default) [root@myeks2-bastion-2 ~]# kubectl auth can-i delete pods --all-namespaces

yes
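The same check can be repeated over several verbs in one go. A minimal sketch, assuming the current kubeconfig context is the principal under test; the function name `check_pod_verbs` and the chosen verb list are illustrative.

```shell
# Hypothetical helper: ask the API server which pod verbs the current user may use.
check_pod_verbs() {
  for verb in get list create delete; do
    # kubectl auth can-i prints "yes" or "no"; it exits non-zero on "no",
    # so "|| true" keeps the loop going in scripts that use "set -e".
    answer=$(kubectl auth can-i "$verb" pods --all-namespaces || true)
    echo "$verb pods: $answer"
  done
}
```

Running `check_pod_verbs` as testuser with AmazonEKSClusterAdminPolicy associated should report "yes" for every verb, in line with the two checks above.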

(testuser:default) [root@myeks2-bastion-2 ~]# kubectl get cm -n kube-system aws-auth -o yaml | kubectl neat | yh

apiVersion: v1

data:

mapRoles: |

- groups:

- system:bootstrappers

- system:nodes

rolearn: arn:aws:iam::236747833953:role/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G

username: system:node:{{EC2PrivateDNSName}}

mapUsers: |

[]

kind: ConfigMap

metadata:

name: aws-auth

namespace: kube-system

(testuser:default) [root@myeks2-bastion-2 ~]# eksctl get iamidentitymapping --cluster $CLUSTER_NAME

ARN USERNAME GROUPS ACCOUNT

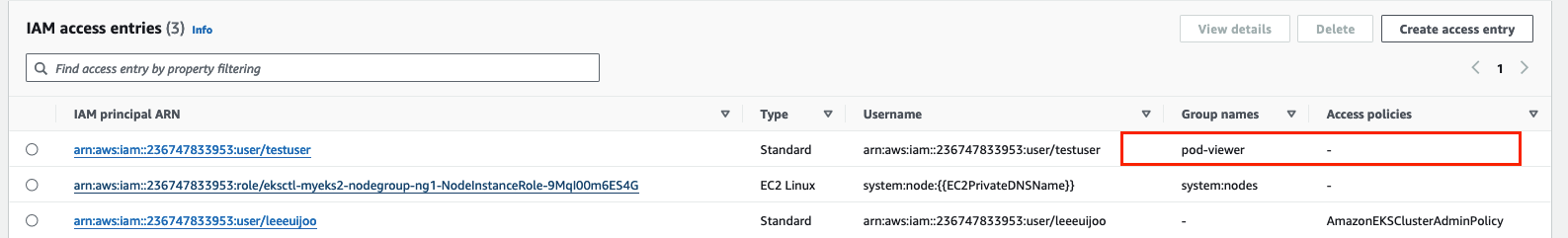

arn:aws:iam::236747833953:role/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G system:node:{{EC2PrivateDNSName}} system:bootstrappers,system:nodes- Access entries and Kubernetes groups

# 기존 testuser access entry 제거

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws eks delete-access-entry --cluster-name $CLUSTER_NAME --principal-arn arn:aws:iam::$ACCOUNT_ID:user/testuser

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws eks list-access-entries --cluster-name $CLUSTER_NAME | jq -r .accessEntries[]

arn:aws:iam::236747833953:role/eksctl-myeks2-nodegroup-ng1-NodeInstanceRole-9MqI00m6ES4G

arn:aws:iam::236747833953:user/leeeuijoo

# Create the ClusterRoles

cat <<EoF> ~/pod-viewer-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: pod-viewer-role
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["list", "get", "watch"]
EoF

cat <<EoF> ~/pod-admin-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: pod-admin-role
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["*"]
EoF

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl apply -f ~/pod-viewer-role.yaml

clusterrole.rbac.authorization.k8s.io/pod-viewer-role created

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl apply -f ~/pod-admin-role.yaml

clusterrole.rbac.authorization.k8s.io/pod-admin-role created

# Rolebinding

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl create clusterrolebinding viewer-role-binding --clusterrole=pod-viewer-role --group=pod-viewer

clusterrolebinding.rbac.authorization.k8s.io/viewer-role-binding created

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl create clusterrolebinding admin-role-binding --clusterrole=pod-admin-role --group=pod-admin

clusterrolebinding.rbac.authorization.k8s.io/admin-role-binding created

# Create the access entry

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws eks create-access-entry --cluster-name $CLUSTER_NAME --principal-arn arn:aws:iam::$ACCOUNT_ID:user/testuser --kubernetes-group pod-viewer

{

"accessEntry": {

"clusterName": "myeks2",

"principalArn": "arn:aws:iam::236747833953:user/testuser",

"kubernetesGroups": [

"pod-viewer"

],

"accessEntryArn": "arn:aws:eks:ap-northeast-2:236747833953:access-entry/myeks2/user/236747833953/testuser/cec76973-bcae-97f3-ed33-ca89066280ce",

"createdAt": "2024-04-13T00:22:49.157000+09:00",

"modifiedAt": "2024-04-13T00:22:49.157000+09:00",

"tags": {},

"username": "arn:aws:iam::236747833953:user/testuser",

"type": "STANDARD"

}

}

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws eks list-associated-access-policies --cluster-name $CLUSTER_NAME --principal-arn arn:aws:iam::$ACCOUNT_ID:user/testuser

{

"associatedAccessPolicies": [],

"clusterName": "myeks2",

"principalArn": "arn:aws:iam::236747833953:user/testuser"

}

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws eks describe-access-entry --cluster-name $CLUSTER_NAME --principal-arn arn:aws:iam::$ACCOUNT_ID:user/testuser | jq

{

"accessEntry": {

"clusterName": "myeks2",

"principalArn": "arn:aws:iam::236747833953:user/testuser",

"kubernetesGroups": [

"pod-viewer"

],

"accessEntryArn": "arn:aws:eks:ap-northeast-2:236747833953:access-entry/myeks2/user/236747833953/testuser/cec76973-bcae-97f3-ed33-ca89066280ce",

"createdAt": "2024-04-13T00:22:49.157000+09:00",

"modifiedAt": "2024-04-13T00:22:49.157000+09:00",

"tags": {},

"username": "arn:aws:iam::236747833953:user/testuser",

"type": "STANDARD"

}

}

- Verify as testuser on [myeks-bastion-2]

# Check the testuser identity

(testuser:default) [root@myeks2-bastion-2 ~]# aws sts get-caller-identity --query Arn

"arn:aws:iam::236747833953:user/testuser"

(testuser:default) [root@myeks2-bastion-2 ~]# kubectl whoami

arn:aws:iam::236747833953:user/testuser

# Try kubectl

(testuser:default) [root@myeks2-bastion-2 ~]# kubectl get pod -v6

I0413 00:25:55.944784 3495 loader.go:395] Config loaded from file: /root/.kube/config

I0413 00:25:57.126403 3495 round_trippers.go:553] GET https://D3C1852AD76B4F7ACB305E84C0E3FAD5.sk1.ap-northeast-2.eks.amazonaws.com/api/v1/namespaces/default/pods?limit=500 200 OK in 1159 milliseconds

NAME READY STATUS RESTARTS AGE

awscli-pod-5bdb44b5bd-9b99k 1/1 Running 0 46m

awscli-pod-5bdb44b5bd-g6gdk 1/1 Running 0 46m

(testuser:default) [root@myeks2-bastion-2 ~]# kubectl auth can-i get pods --all-namespaces

yes

(testuser:default) [root@myeks2-bastion-2 ~]# kubectl auth can-i delete pods --all-namespaces

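no # pod-viewer-role does not grant the delete verb

-------- the relevant rule --------

rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["list", "get", "watch"]

- Switch the access entry to the pod-admin group (update-access-entry replaces the group list, as the output below shows)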

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws eks update-access-entry --cluster-name $CLUSTER_NAME --principal-arn arn:aws:iam::$ACCOUNT_ID:user/testuser --kubernetes-group pod-admin | jq -r .accessEntry

{

"clusterName": "myeks2",

"principalArn": "arn:aws:iam::236747833953:user/testuser",

"kubernetesGroups": [

"pod-admin"

],

"accessEntryArn": "arn:aws:eks:ap-northeast-2:236747833953:access-entry/myeks2/user/236747833953/testuser/cec76973-bcae-97f3-ed33-ca89066280ce",

"createdAt": "2024-04-13T00:22:49.157000+09:00",

"modifiedAt": "2024-04-13T00:28:00.909000+09:00",

"tags": {},

"username": "arn:aws:iam::236747833953:user/testuser",

"type": "STANDARD"

}

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# aws eks describe-access-entry --cluster-name $CLUSTER_NAME --principal-arn arn:aws:iam::$ACCOUNT_ID:user/testuser | jq

{

"accessEntry": {

"clusterName": "myeks2",

"principalArn": "arn:aws:iam::236747833953:user/testuser",

"kubernetesGroups": [

"pod-admin"

],

"accessEntryArn": "arn:aws:eks:ap-northeast-2:236747833953:access-entry/myeks2/user/236747833953/testuser/cec76973-bcae-97f3-ed33-ca89066280ce",

"createdAt": "2024-04-13T00:22:49.157000+09:00",

"modifiedAt": "2024-04-13T00:28:00.909000+09:00",

"tags": {},

"username": "arn:aws:iam::236747833953:user/testuser",

"type": "STANDARD"

}

}

- Verify as testuser on [myeks-bastion-2]

# Check whether testuser can now delete pods

(testuser:default) [root@myeks2-bastion-2 ~]# kubectl auth can-i delete pods --all-namespaces

yes

- Migrate from ConfigMap to access entries - https://catalog.workshops.aws/eks-immersionday/en-US/access-management/4-migrate
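The two `kubectl auth can-i delete pods` results in this section (no under pod-viewer, yes under pod-admin) come down to verb matching: a rule grants a request only if the requested verb is listed or the rule uses the `*` wildcard. Below is a minimal sketch of that check in plain shell. `verb_allowed` is a hypothetical helper for illustration only, not part of kubectl, and it ignores apiGroups, resources, and namespaces.

```shell
# verb_allowed RULE_VERBS REQUESTED_VERB
# RULE_VERBS is a space-separated verb list copied from a ClusterRole rule.
# Returns 0 (allowed) if the verb is listed or the rule contains "*".
verb_allowed() {
  rule_verbs=$1
  requested=$2
  set -f                      # keep "*" literal instead of globbing filenames
  for v in $rule_verbs; do
    if [ "$v" = "*" ] || [ "$v" = "$requested" ]; then
      set +f
      return 0
    fi
  done
  set +f
  return 1
}

verb_allowed "list get watch" delete && echo yes || echo no   # pod-viewer-role: prints no
verb_allowed "*" delete && echo yes || echo no                # pod-admin-role: prints yes
```

On a real cluster, `kubectl auth can-i` remains the authoritative check, since it evaluates every binding that applies to the caller.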

3. EKS IRSA & Pod Identity

- EC2 Instance Profile: convenient, but every pod on the node shares the node role's permissions, which violates the principle of least privilege and is not recommended for security. Use IRSA instead.

# Configuration example 1: using eksctl flags

eksctl create cluster --name $CLUSTER_NAME ... --external-dns-access --full-ecr-access --asg-access

# Configuration example 2: creating node groups from an eksctl YAML file

cat myeks.yaml | yh

...

managedNodeGroups:
- amiFamily: AmazonLinux2
  iam:
    withAddonPolicies:
      albIngress: false
      appMesh: false
      appMeshPreview: false
      autoScaler: true
      awsLoadBalancerController: false
      certManager: true
      cloudWatch: true
      ebs: false
      efs: false
      externalDNS: true
      fsx: false
      imageBuilder: true
      xRay: false

...

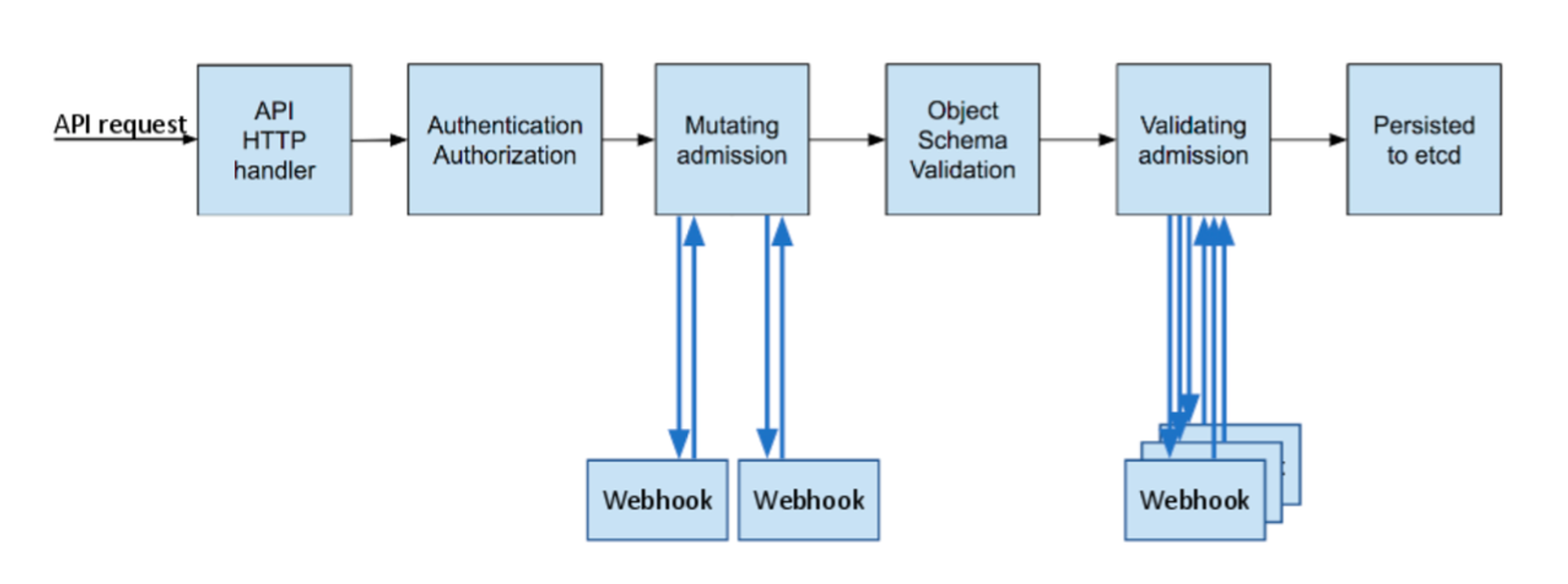

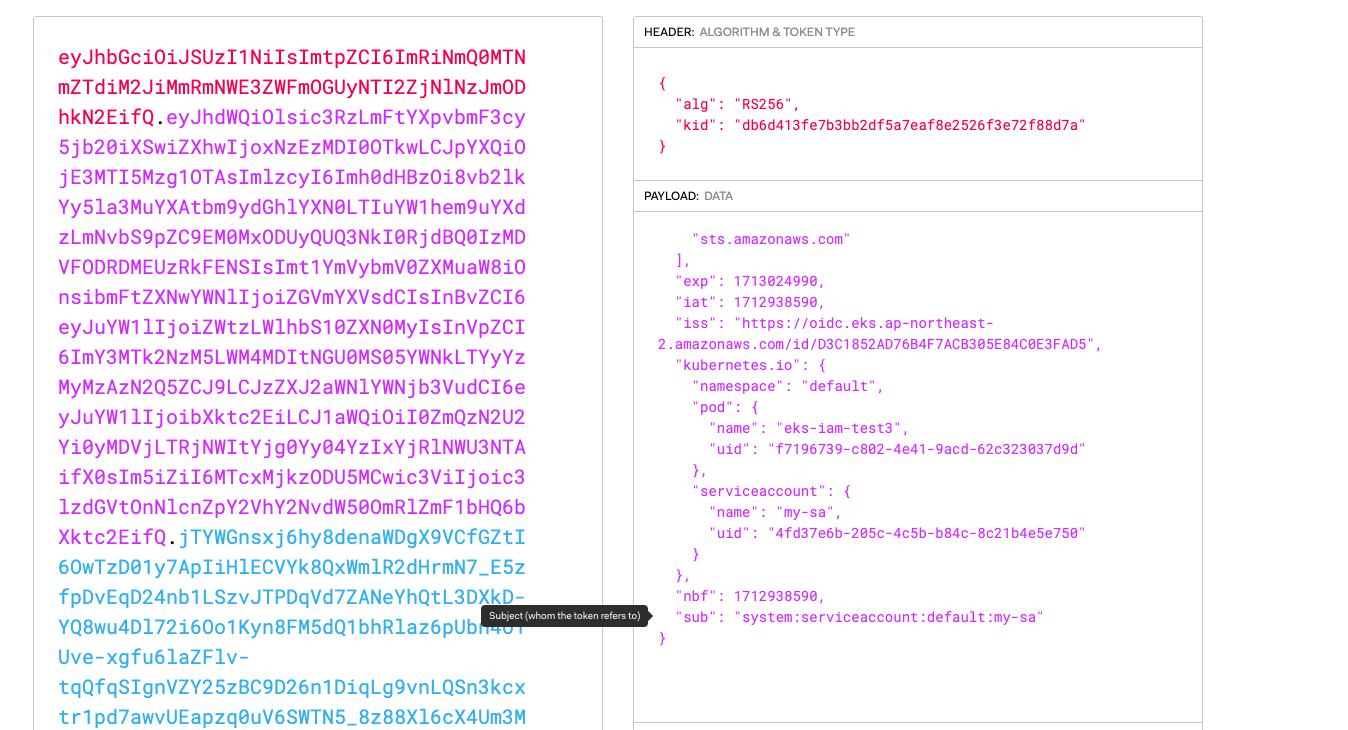

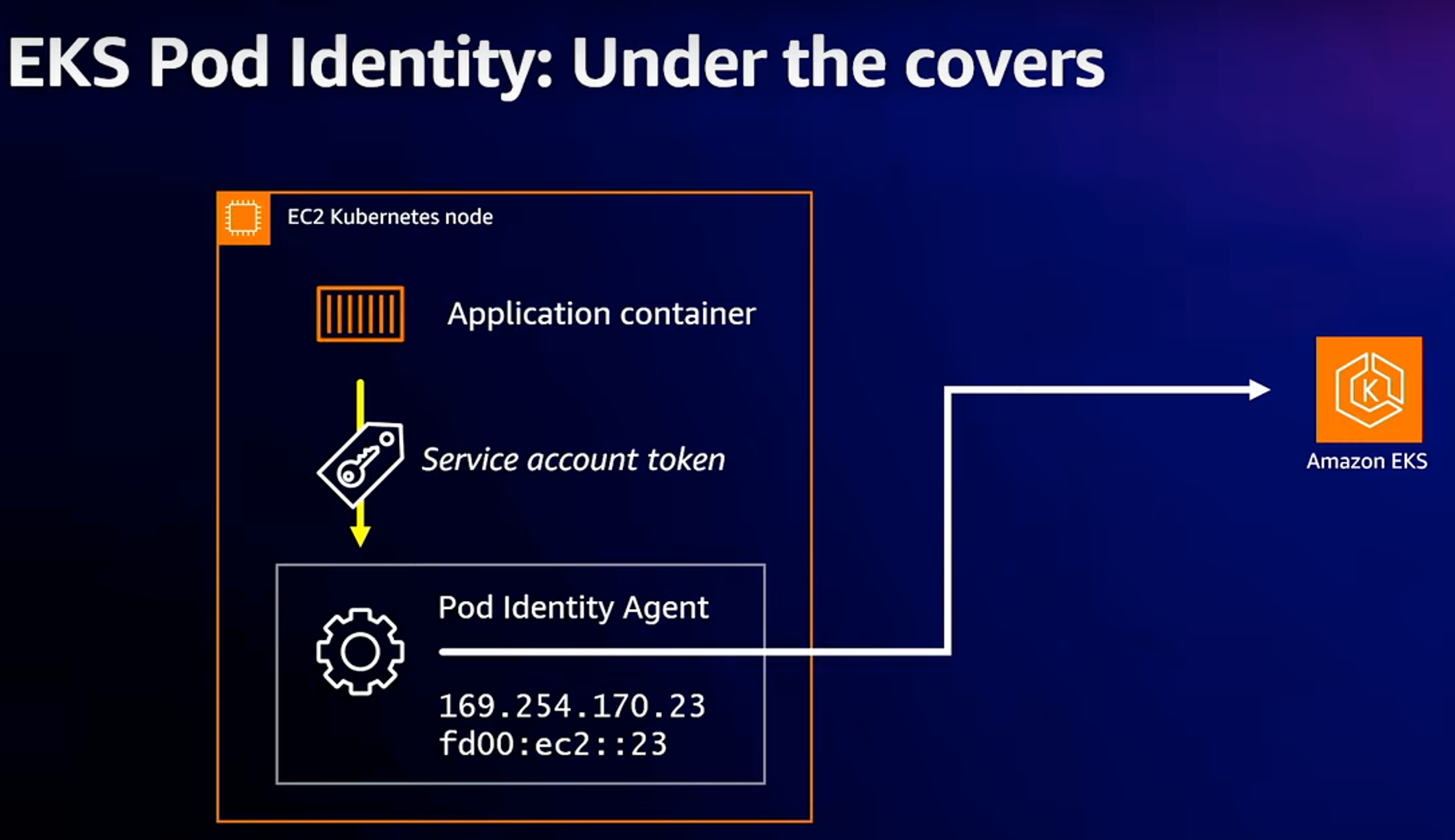

- How it works: when a Kubernetes pod calls an AWS service, AWS STS/IAM authenticates and authorizes the request against the IAM OIDC Identity Provider (EKS IdP)

Background knowledge: Service Account Token Volume Projection, Admission Control, JWT (JSON Web Token), OIDC

- Service Account Token Volume Projection

- Can a service account token be used as a credential for service-to-service calls, that is, calls between pods?

- Unfortunately, the default service account token falls short for this, because you need to specify token properties such as the intended audience and the expiration.

The Service Account Token Volume Projection feature addresses these shortcomings.

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - image: nginx
    name: nginx
    volumeMounts:
    - mountPath: /var/run/secrets/tokens
      name: vault-token
  serviceAccountName: build-robot
  volumes:
  - name: vault-token
    projected:
      sources:
      - serviceAccountToken:
          path: vault-token
          expirationSeconds: 7200
          audience: vault
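A projected token is a JWT, so the `audience` and `expirationSeconds` requested in the manifest above show up as the `aud` and `exp` claims in its payload. Below is a minimal sketch for inspecting those claims, assuming a shell with `base64 -d`, `cut`, and `tr` available. `jwt_payload` is a hypothetical helper name, and it only decodes the payload without verifying the signature.

```shell
# jwt_payload TOKEN
# Print the decoded JSON payload (second dot-separated segment) of a JWT.
# This does NOT verify the signature; it is for inspection only.
jwt_payload() {
  # JWT segments use base64url: swap the URL-safe characters back
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the trailing '=' padding, so restore it
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do
    seg="${seg}="
  done
  printf '%s' "$seg" | base64 -d
}
```

Inside the nginx pod from the manifest above, the token would be mounted at /var/run/secrets/tokens/vault-token, so something like `jwt_payload "$(kubectl exec nginx -- cat /var/run/secrets/tokens/vault-token)"` should print the claims, assuming the pod is running and the build-robot service account exists.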

- Bound Service Account Token Volume

- FEATURE STATE: Kubernetes v1.22 [stable]

- The ServiceAccount admission controller adds the following projected volume, instead of a Secret-based volume, for the non-expiring service account token created by the token controller.

- name: kube-api-access-<random-suffix>
  projected:
    defaultMode: 420 # decimal 420 = octal 0644 (rw-r--r--): the owner can read and write; group and others can only read
    sources:
    - serviceAccountToken:
        expirationSeconds: 3607
        path: token
    - configMap:
        items:
        - key: ca.crt
          path: ca.crt
        name: kube-root-ca.crt
    - downwardAPI:
        items:
        - fieldRef:
            apiVersion: v1
            fieldPath: metadata.namespace
          path: namespace

The projected volume consists of three sources:

- A ServiceAccountToken obtained from kube-apiserver through the TokenRequest API. It expires after 1 hour by default, or when the pod is deleted. It is bound to the pod and has kube-apiserver as its audience.

- A ConfigMap containing the CA bundle used to verify connections to kube-apiserver.

- A DownwardAPI that references the pod's namespace.
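The `defaultMode: 420` value in the manifest above is easy to misread because kubectl prints file modes in decimal, while permissions are usually written in octal. Here is a small sketch of the conversion using the shell's `printf`. `mode_to_octal` is a hypothetical helper name introduced only for this illustration.

```shell
# mode_to_octal DECIMAL_MODE
# Print a decimal file mode as the four-digit octal form used by chmod.
mode_to_octal() {
  printf '%04o\n' "$1"
}

mode_to_octal 420   # prints 0644 (rw-r--r--)
```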

Configure a Pod to Use a Projected Volume for Storage: combines the volume mounts for Secret, ConfigMap, downwardAPI, and serviceAccountToken sources into a single directory

apiVersion: v1
kind: Pod
metadata:
  name: test-projected-volume
spec:
  containers:
  - name: test-projected-volume
    image: busybox:1.28
    args:
    - sleep
    - "86400"
    volumeMounts:
    - name: all-in-one
      mountPath: "/projected-volume"
      readOnly: true
  volumes:
  - name: all-in-one
    projected:
      sources:
      - secret:
          name: user
      - secret:
          name: pass

- TEST

# Create the secrets

## Create files containing the username and password:

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# echo -n "admin" > ./username.txt

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# echo -n "1f2d1e2e67df" > ./password.txt

## Package these files into secrets:

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl create secret generic user --from-file=./username.txt

secret/user created

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl create secret generic pass --from-file=./password.txt

secret/pass created

# Create the pod

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl apply -f https://k8s.io/examples/pods/storage/projected.yaml

pod/test-projected-volume created

# Inspect the pod

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl get pod test-projected-volume -o yaml | ...

volumes:
- name: all-in-one
  projected:
    sources:
    - secret:
        name: user
    - secret:
        name: pass
- name: kube-api-access-z7xpl
  projected:
    sources:
    - serviceAccountToken:
        expirationSeconds: 3607
        path: token
    - configMap:
        items:
        - key: ca.crt
          path: ca.crt
        name: kube-root-ca.crt
    - downwardAPI:
        items:
        - fieldRef:
            fieldPath: metadata.namespace
          path: namespace

# Check the secrets

## Both secrets appear together in the same directory.

(leeeuijoo@myeks2:default) [root@myeks2-bastion ~]# kubectl exec -it test-projected-volume -- ls /projected-volume/