Thumbnail source: https://www.browserstack.com/blog/our-journey-to-managing-jenkins-on-aws-eks/

Prerequisites

- AWS EC2 server
  - t2.small
  - Ubuntu 20.04
- Jenkins installed
  - Plugins
    - Maven Integration
    - Maven Invoker
    - Docker Pipeline
    - Slack Notification
- Maven installed
- GitHub repo for testing

AWS CLI Install

# Download the archive needed to install the CLI

$ curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

$ apt-get -y install unzip

$ unzip awscliv2.zip

$ sudo ./aws/install

$ aws configure

# Enter your AWS access credentials

Install eksctl to create the EKS cluster

# Download the release archive
$ curl --silent --location \
"https://github.com/weaveworks/eksctl/releases/latest/download/eksctl_$(uname -s)_amd64.tar.gz" | tar xz -C /tmp

# Move the binary into a directory on the PATH
$ sudo mv /tmp/eksctl /usr/local/bin

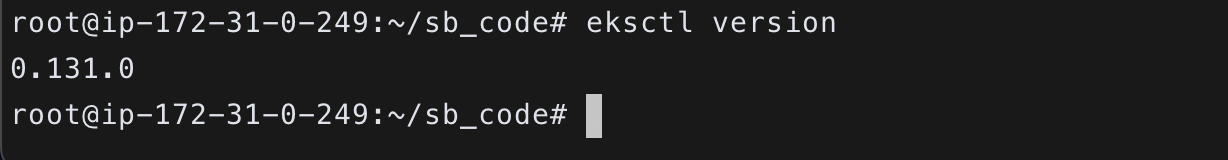

$ eksctl version

# Verify the installation and check the version

Install kubectl

# Download the binary

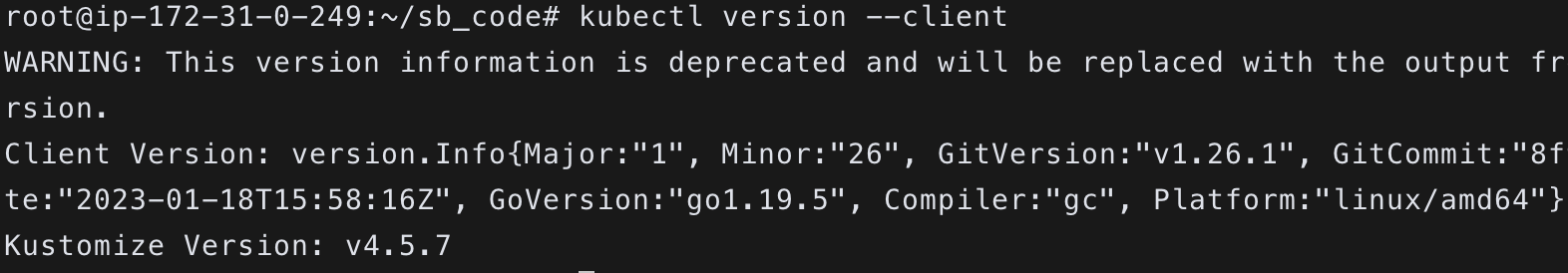

$ curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

# Install

$ sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

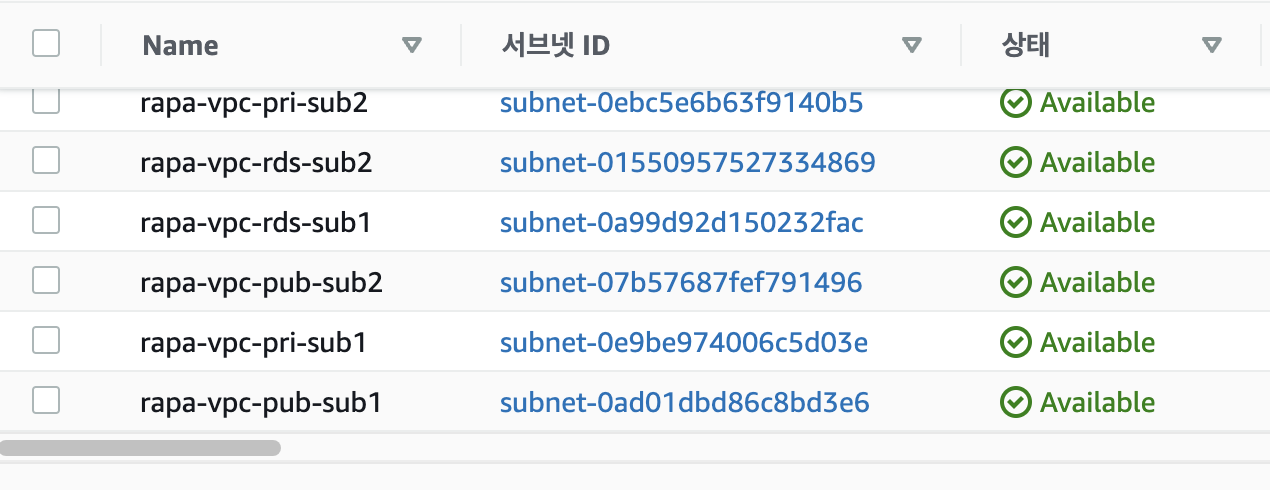

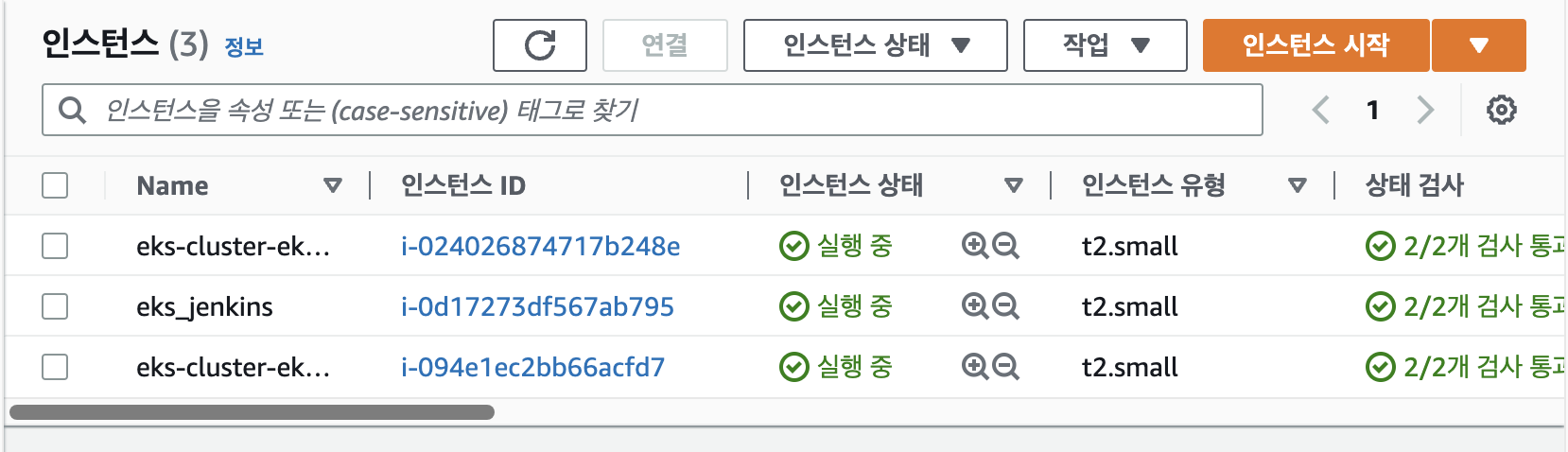

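$ kubectl version --client

- Use the two public subnets of the VPC created earlier

- If you install into the default VPC, choose subnets only in availability zones a and c, never b or d. In b and d the t2.small and t2.micro instance types cannot be selected, so the EKS cluster build is very likely to fail ❌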

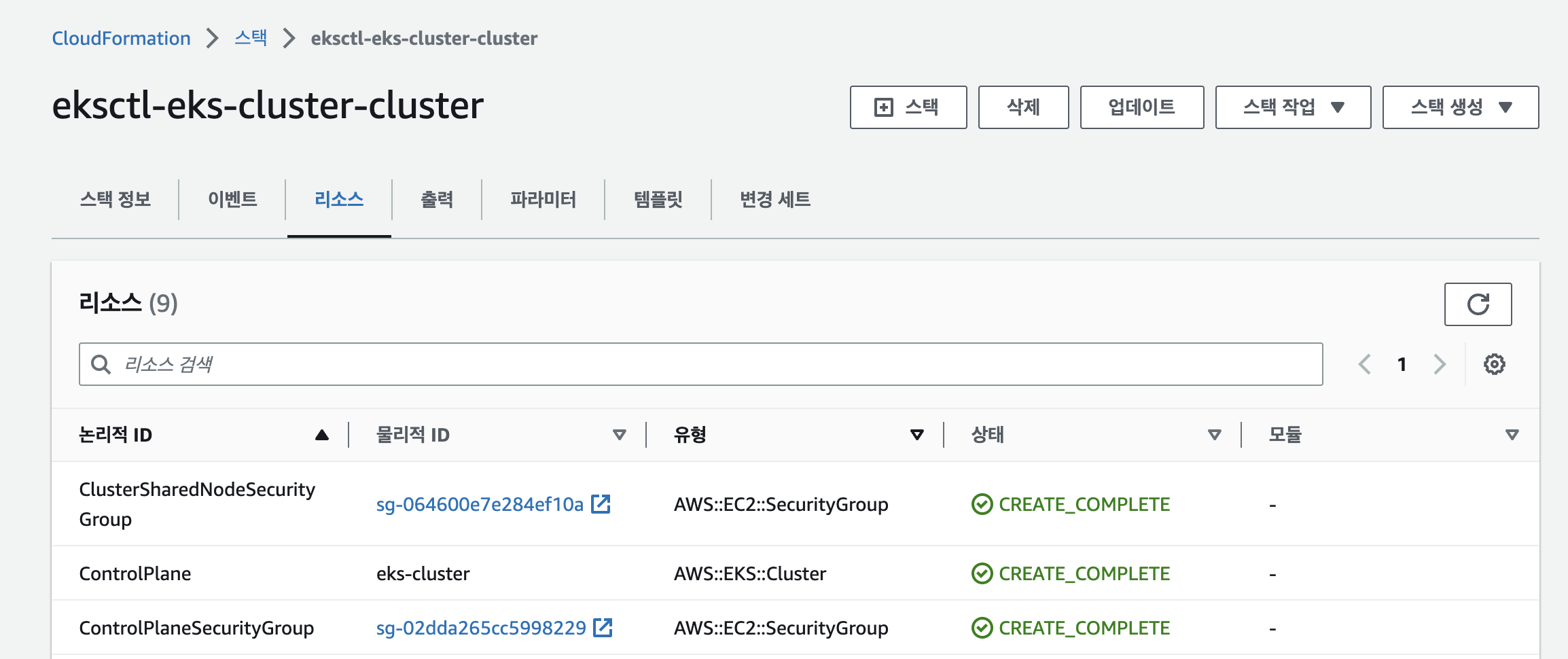

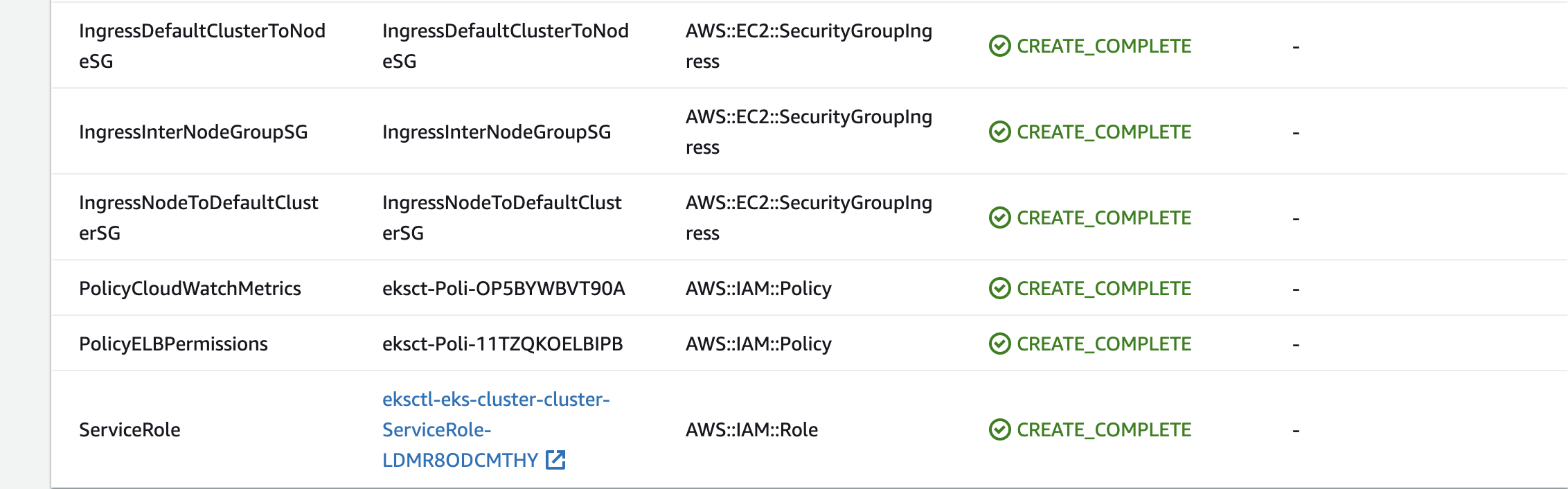
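The availability-zone restriction above can be checked from the CLI before creating the cluster. The following is a minimal sketch, assuming the AWS CLI is already configured; `azs_offering_type` is a hypothetical helper name, and nothing is sent to AWS until the function is actually called.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the availability zones in a region that offer
# a given instance type. It only wraps the AWS CLI call
# "aws ec2 describe-instance-type-offerings".
azs_offering_type() {
  local instance_type="$1"
  local region="$2"
  aws ec2 describe-instance-type-offerings \
    --location-type availability-zone \
    --filters "Name=instance-type,Values=${instance_type}" \
    --region "${region}" \
    --query 'InstanceTypeOfferings[].Location' \
    --output text
}

# Example call (commented out because it needs AWS credentials):
# azs_offering_type t2.small ap-northeast-2
```

If the zone of one of your subnets is missing from the output, picking a different subnet before running eksctl should avoid the failure described above.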

Create the EKS cluster with eksctl

- EKS cluster name: eks-cluster

- EKS node group name: eks-nodegroup

$ eksctl create cluster --vpc-public-subnets <public-subnet-id-1>,<public-subnet-id-2> --name <eks-cluster-name> --region ap-northeast-2 --version 1.24 --nodegroup-name <eks-nodegroup-name> --node-type t2.small --nodes 2 --nodes-min 2 --nodes-max 5

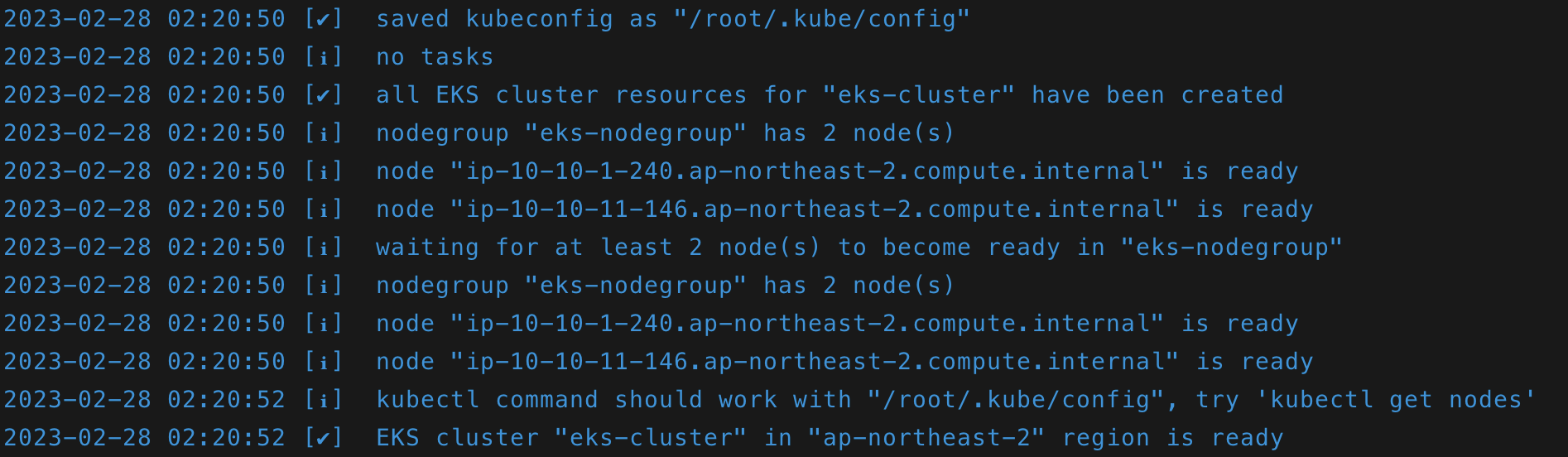

$ eksctl create cluster --vpc-public-subnets subnet-0ad01dbd86c8bd3e6,subnet-07b57687fef791496 --name eks-cluster --region ap-northeast-2 --version 1.24 --nodegroup-name eks-nodegroup --node-type t2.small --nodes 2 --nodes-min 2 --nodes-max 5

- Creating the cluster takes a while (20 to 30 minutes)

- Cluster creation complete

root@ip-172-31-0-249:~/sb_code# kubectl get nodes

NAME STATUS ROLES AGE VERSION

ip-10-10-1-240.ap-northeast-2.compute.internal Ready <none> 2m51s v1.24.10-eks-48e63af

ip-10-10-11-146.ap-northeast-2.compute.internal Ready <none> 3m1s v1.24.10-eks-48e63af

Deploy a test manifest

$ cat h-deploy.yml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ip-deploy
spec:
  replicas: 2
  selector:
    matchLabels:
      app: ipnginx
  template:
    metadata:
      labels:
        app: ipnginx
    spec:
      containers:
      - name: ipnginx
        image: oolralra/ipnginx
---
apiVersion: v1
kind: Service
metadata:
  name: ip-svc
spec:
  selector:
    app: ipnginx
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80

$ kubectl apply -f h-deploy.yml

$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/ip-deploy-69dcb7f45b-7p84n 1/1 Running 0 15s

pod/ip-deploy-69dcb7f45b-9g8kz 1/1 Running 0 15s

Change the ip-svc Service type to LoadBalancer

$ kubectl patch svc ip-svc -p '{"spec": {"type": "LoadBalancer"}}'

$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/ip-deploy-69dcb7f45b-7p84n 1/1 Running 0 5m51s

pod/ip-deploy-69dcb7f45b-9g8kz 1/1 Running 0 5m51s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ip-svc LoadBalancer 172.20.85.1 a14c299f0d3b34a4397f21d5c92ba176-122650646.ap-northeast-2.elb.amazonaws.com 80:30668/TCP 5m51s

service/kubernetes ClusterIP 172.20.0.1 <none> 443/TCP 32m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/ip-deploy 2/2 2 2 5m51s

NAME DESIRED CURRENT READY AGE

replicaset.apps/ip-deploy-69dcb7f45b 2 2 2 5m51s

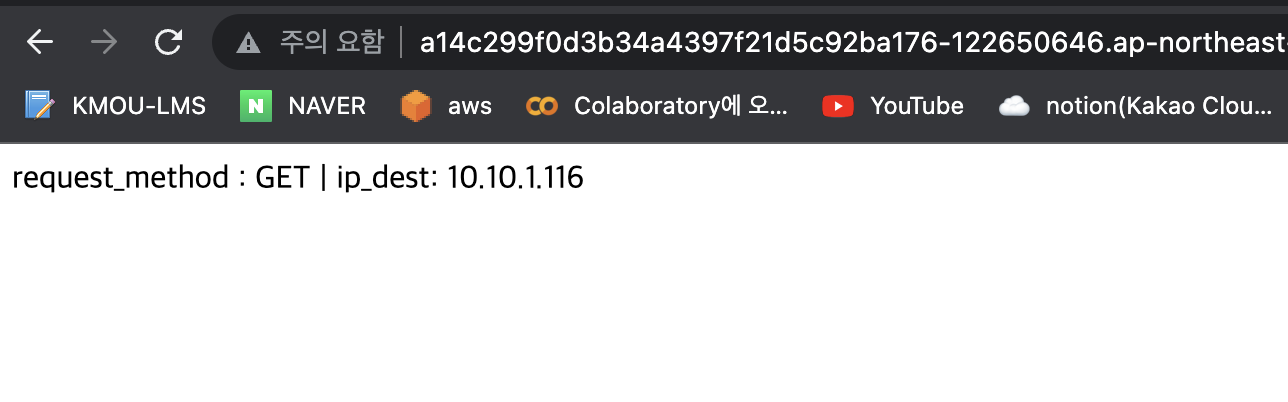

### Send curl requests to the load balancer address

### Check that the requests are load-balanced

$ curl a14c299f0d3b34a4397f21d5c92ba176-122650646.ap-northeast-2.elb.amazonaws.com

request_method : GET | ip_dest: 10.10.11.45

$ curl a14c299f0d3b34a4397f21d5c92ba176-122650646.ap-northeast-2.elb.amazonaws.com

request_method : GET | ip_dest: 10.10.1.116

- Connect through the external IP (the load balancer endpoint)

- Once the test is done, delete the resources

$ kubectl delete -f h-deploy.yml

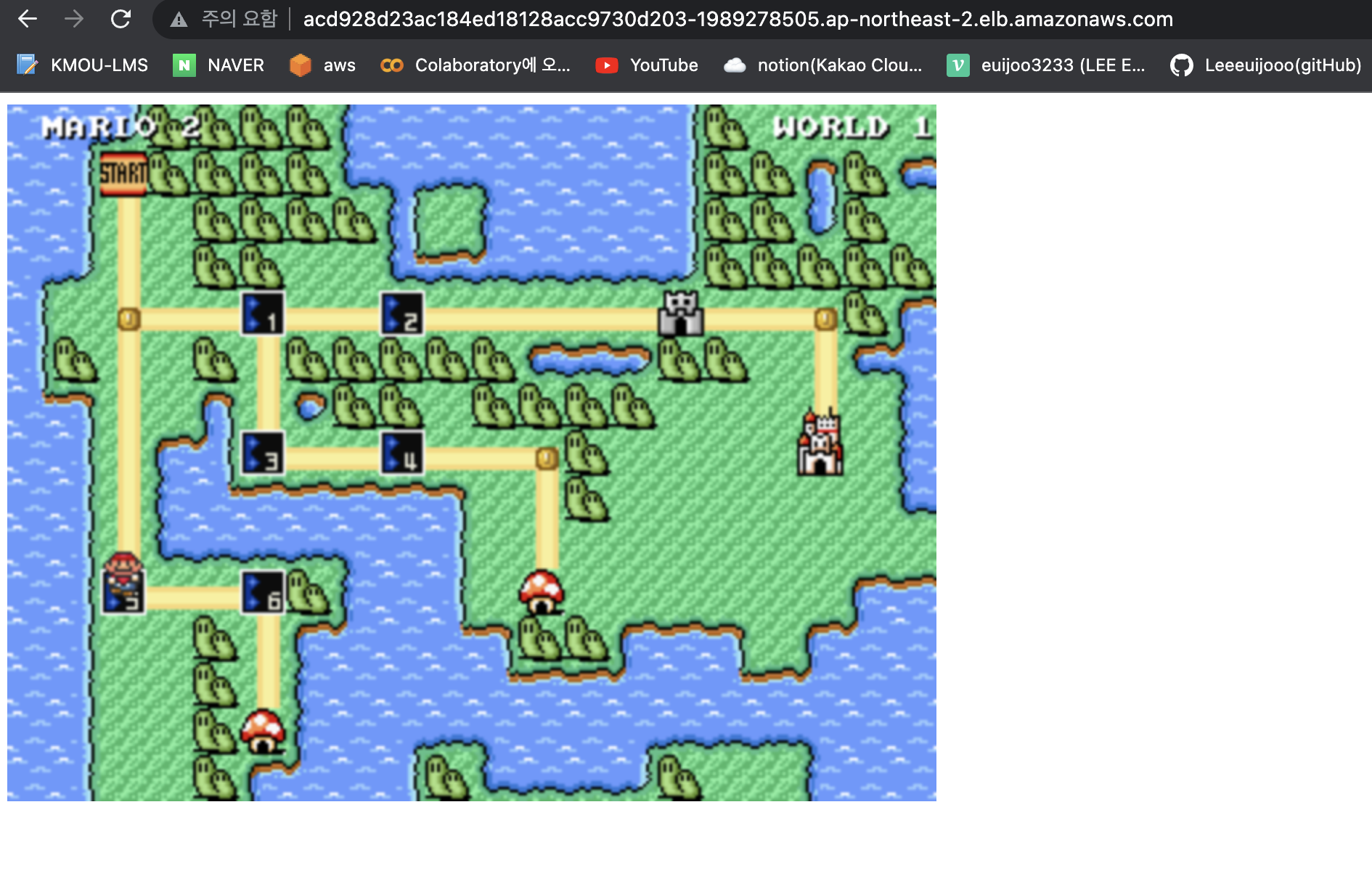
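Checking two curl responses by eye works, but a small tally makes the load balancing easier to see over many requests. This is a minimal sketch, assuming responses keep the `ip_dest: <ip>` shape shown in the output above; `count_backends` is a hypothetical helper name, and the sample lines are copied from that output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read response lines on stdin and print
# "<pod ip> <number of responses>" for each backend pod.
count_backends() {
  sed -n 's/.*ip_dest: *\([0-9.]*\).*/\1/p' \
    | LC_ALL=C sort \
    | uniq -c \
    | awk '{print $2, $1}'
}

# Sample responses copied from the curl output in this post
sample='request_method : GET | ip_dest: 10.10.11.45
request_method : GET | ip_dest: 10.10.1.116'

printf '%s\n' "$sample" | count_backends

# Against a live load balancer (LB is a placeholder for your ELB address):
# for i in $(seq 1 20); do curl -s "$LB"; echo; done | count_backends
```

With two replicas behind the Service, the tally should list two pod IPs with roughly similar counts.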

Deploying the Super Mario image

- Opening the load balancer address should serve pengbai/docker-supermario from Docker Hub (the image uses port 8080)

- supermario.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: supermario
spec:
  replicas: 2
  selector:
    matchLabels:
      app: supermario
  template:
    metadata:
      labels:
        app: supermario
    spec:
      containers:
      - name: supermario
        image: pengbai/docker-supermario
---
apiVersion: v1
kind: Service
metadata:
  name: supermario-service
spec:
  selector:
    app: supermario
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 8080
  type: LoadBalancer

root@ip-172-31-0-249:~/yml# kubectl get all

NAME READY STATUS RESTARTS AGE

pod/supermario-76f974f85c-28dx2 1/1 Running 0 51s

pod/supermario-76f974f85c-fw5ks 1/1 Running 0 51s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 172.20.0.1 <none> 443/TCP 62m

service/supermario-service LoadBalancer 172.20.169.168 acd928d23ac184ed18128acc9730d203-1989278505.ap-northeast-2.elb.amazonaws.com 80:31304/TCP 51s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/supermario 2/2 2 2 51s

NAME DESIRED CURRENT READY AGE

replicaset.apps/supermario-76f974f85c 2 2 2 51s

- Open the load balancer address

- The deployment completed successfully

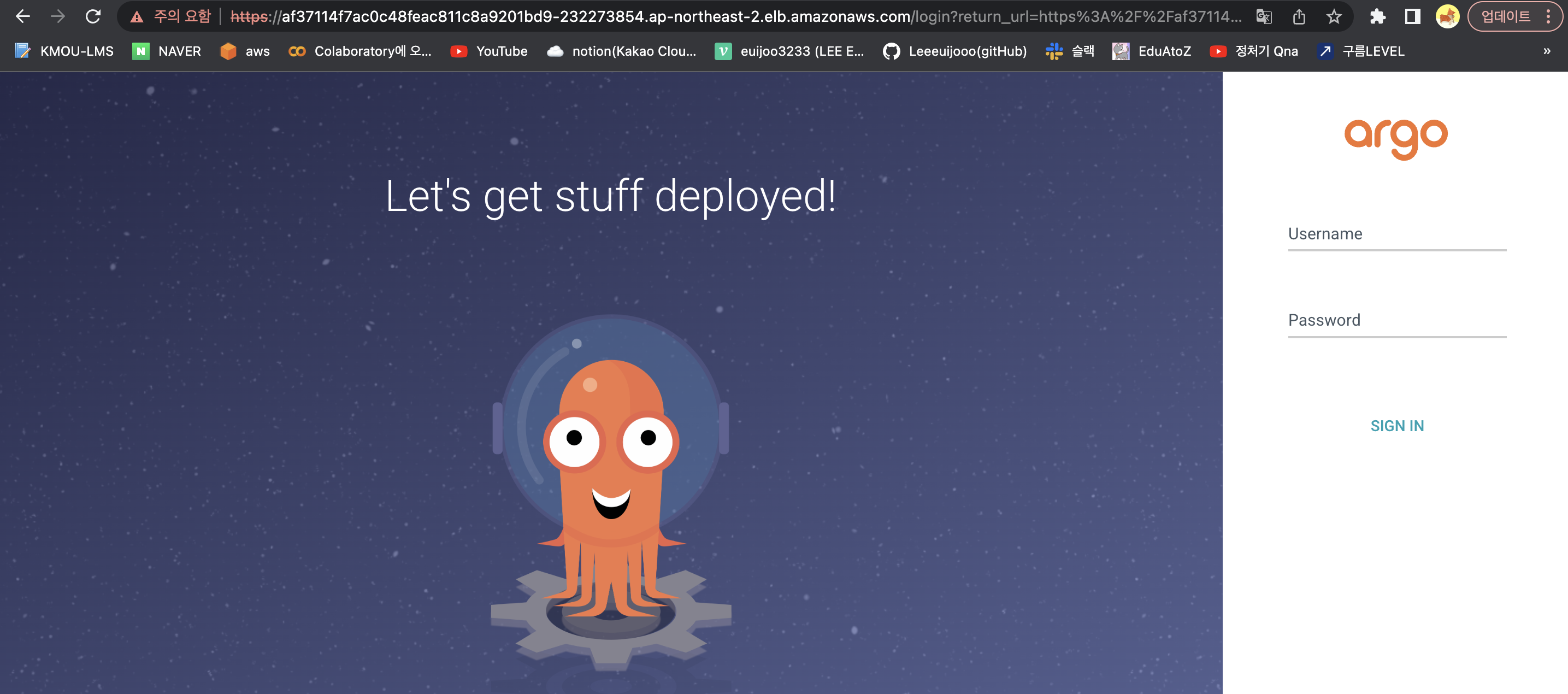

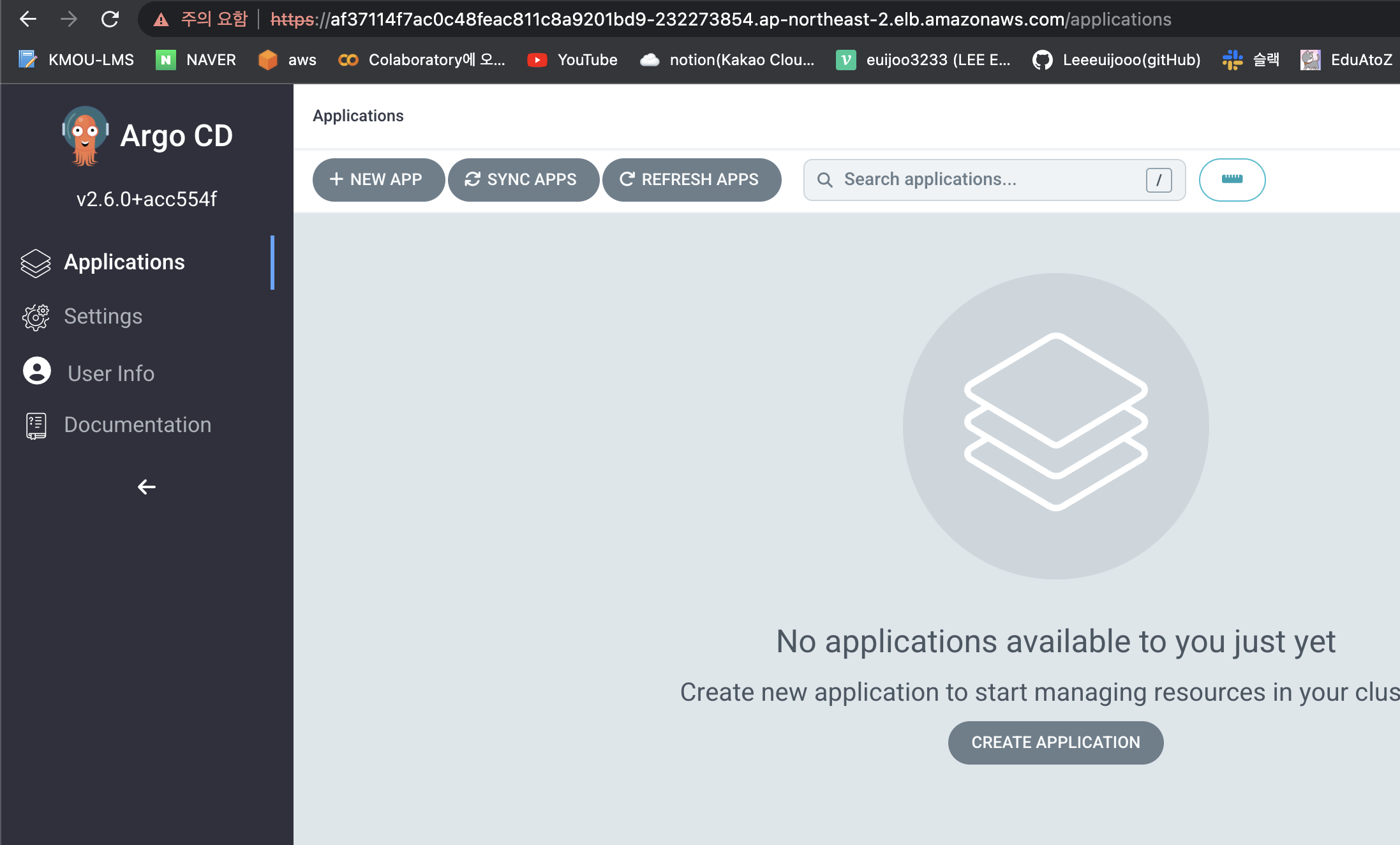

Install Argo CD

- Create a namespace for Argo CD

$ kubectl create namespace argocd

namespace/argocd created

$ kubectl get namespace

NAME STATUS AGE

argocd Active 6s

default Active 81m

kube-node-lease Active 81m

kube-public Active 81m

kube-system Active 81m

- Download the Argo CD install manifest

$ wget https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

$ ls

h-deploy.yml install.yaml supermario.yml

$ kubectl apply -n argocd -f install.yaml

$ kubectl get -n argocd all

NAME READY STATUS RESTARTS AGE

pod/argocd-application-controller-0 1/1 Running 0 3m43s

pod/argocd-applicationset-controller-596ddc6c7d-dd2br 1/1 Running 0 3m43s

pod/argocd-dex-server-78c894df5b-78gmx 1/1 Running 0 3m43s

pod/argocd-notifications-controller-6f65c4ccdb-z5hfh 1/1 Running 0 3m43s

pod/argocd-redis-7dd84c47d6-nv7jg 1/1 Running 0 3m43s

pod/argocd-repo-server-667d685cbc-6vql7 1/1 Running 0 3m43s

pod/argocd-server-6cb4998447-g5clf 1/1 Running 0 3m43s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/argocd-applicationset-controller ClusterIP 172.20.170.149 <none> 7000/TCP,8080/TCP 3m44s

service/argocd-dex-server ClusterIP 172.20.101.38 <none> 5556/TCP,5557/TCP,5558/TCP 3m44s

service/argocd-metrics ClusterIP 172.20.119.132 <none> 8082/TCP 3m44s

service/argocd-notifications-controller-metrics ClusterIP 172.20.47.122 <none> 9001/TCP 3m44s

service/argocd-redis ClusterIP 172.20.190.185 <none> 6379/TCP 3m44s

service/argocd-repo-server ClusterIP 172.20.245.238 <none> 8081/TCP,8084/TCP 3m44s

service/argocd-server ClusterIP 172.20.157.252 <none> 80/TCP,443/TCP 3m44s

service/argocd-server-metrics ClusterIP 172.20.35.85 <none> 8083/TCP 3m44s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/argocd-applicationset-controller 1/1 1 1 3m44s

deployment.apps/argocd-dex-server 1/1 1 1 3m43s

deployment.apps/argocd-notifications-controller 1/1 1 1 3m43s

deployment.apps/argocd-redis 1/1 1 1 3m43s

deployment.apps/argocd-repo-server 1/1 1 1 3m43s

deployment.apps/argocd-server 1/1 1 1 3m43s

NAME DESIRED CURRENT READY AGE

replicaset.apps/argocd-applicationset-controller-596ddc6c7d 1 1 1 3m43s

replicaset.apps/argocd-dex-server-78c894df5b 1 1 1 3m43s

replicaset.apps/argocd-notifications-controller-6f65c4ccdb 1 1 1 3m43s

replicaset.apps/argocd-redis-7dd84c47d6 1 1 1 3m43s

replicaset.apps/argocd-repo-server-667d685cbc 1 1 1 3m43s

replicaset.apps/argocd-server-6cb4998447 1 1 1 3m43s

NAME READY AGE

statefulset.apps/argocd-application-controller 1/1 3m43s

- Change the argocd-server Service type to LoadBalancer

$ kubectl patch svc argocd-server -n argocd -p '{"spec": {"type": "LoadBalancer"}}'

- Look up the initial Argo CD admin password

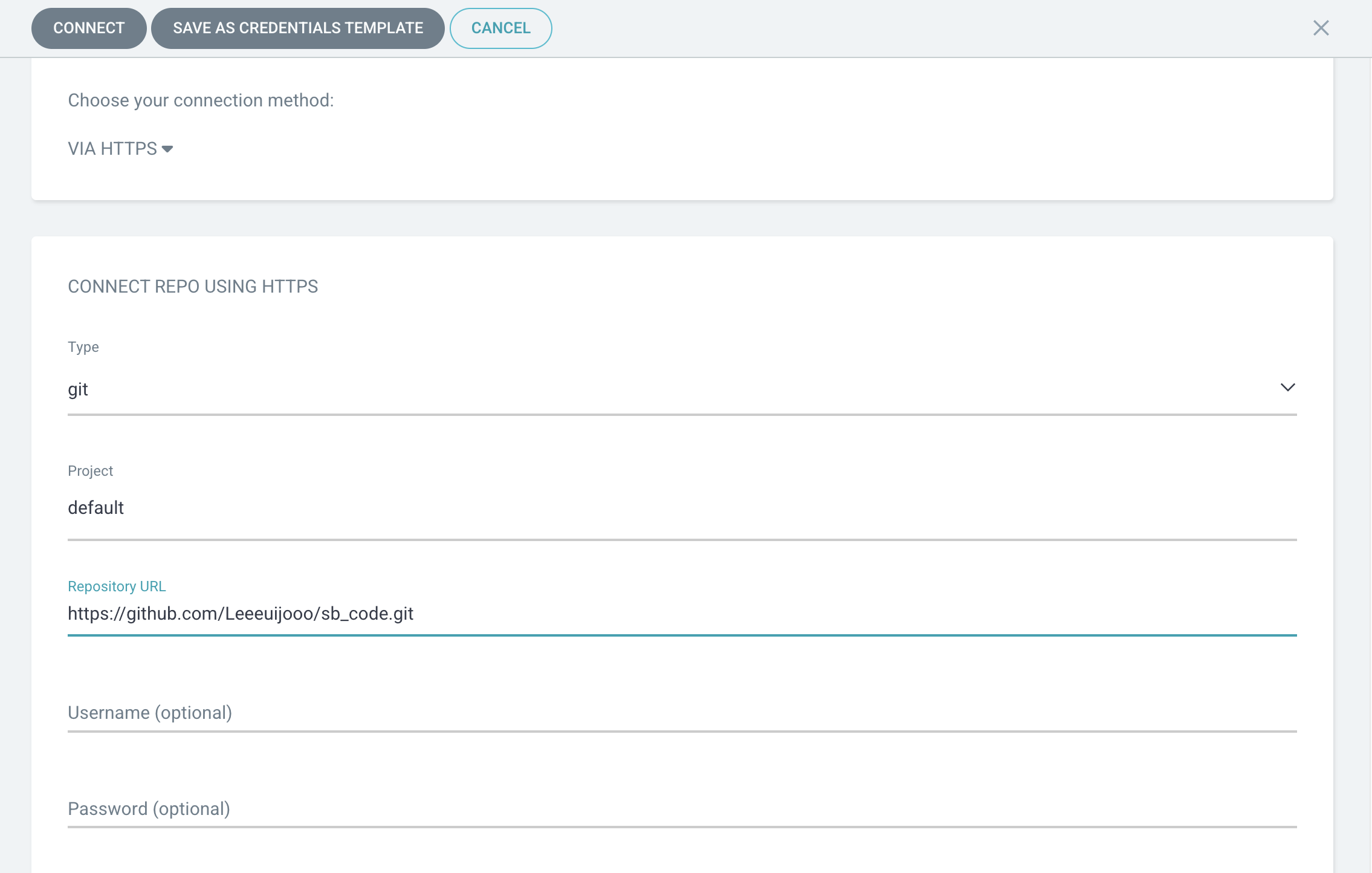

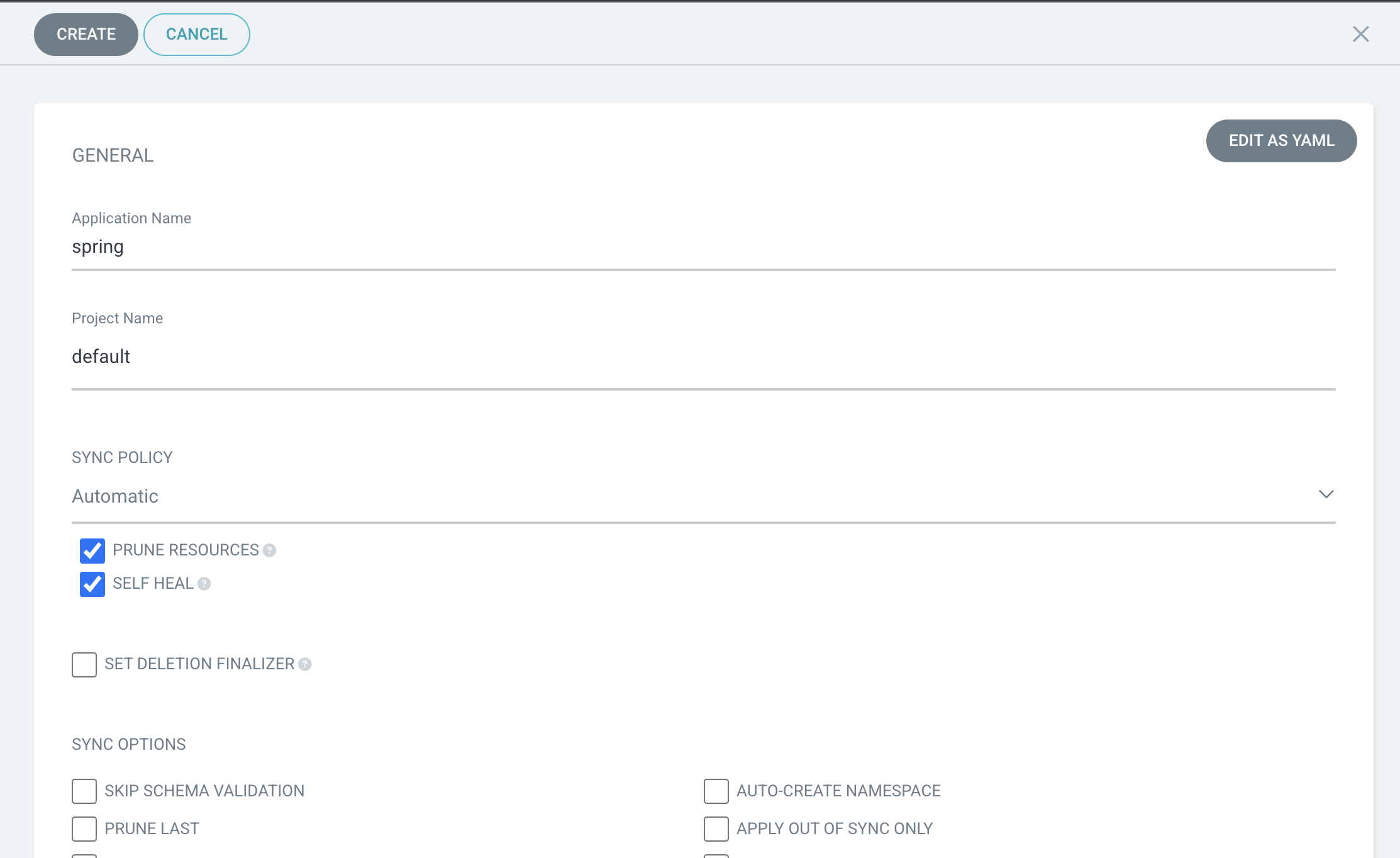

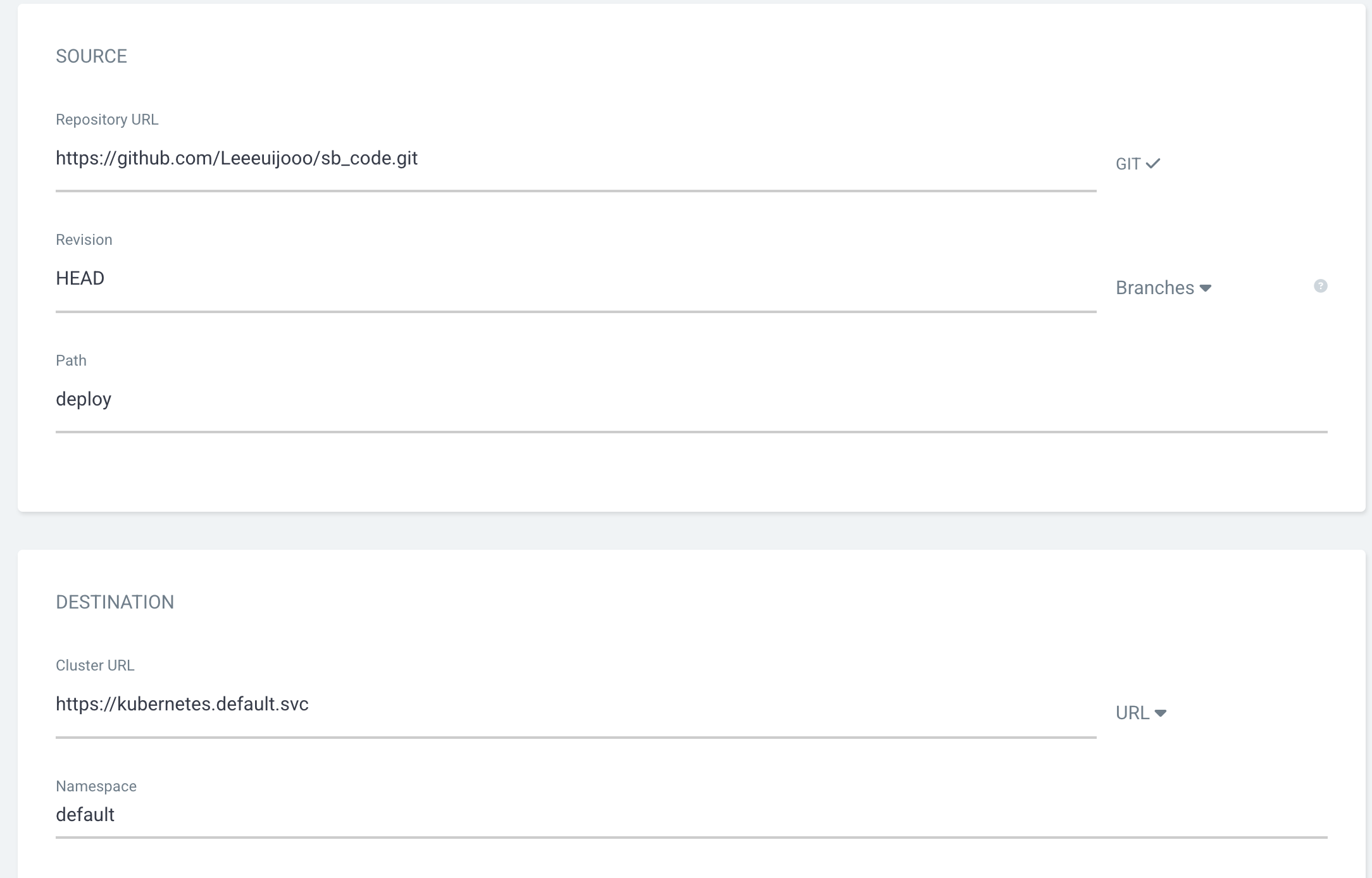

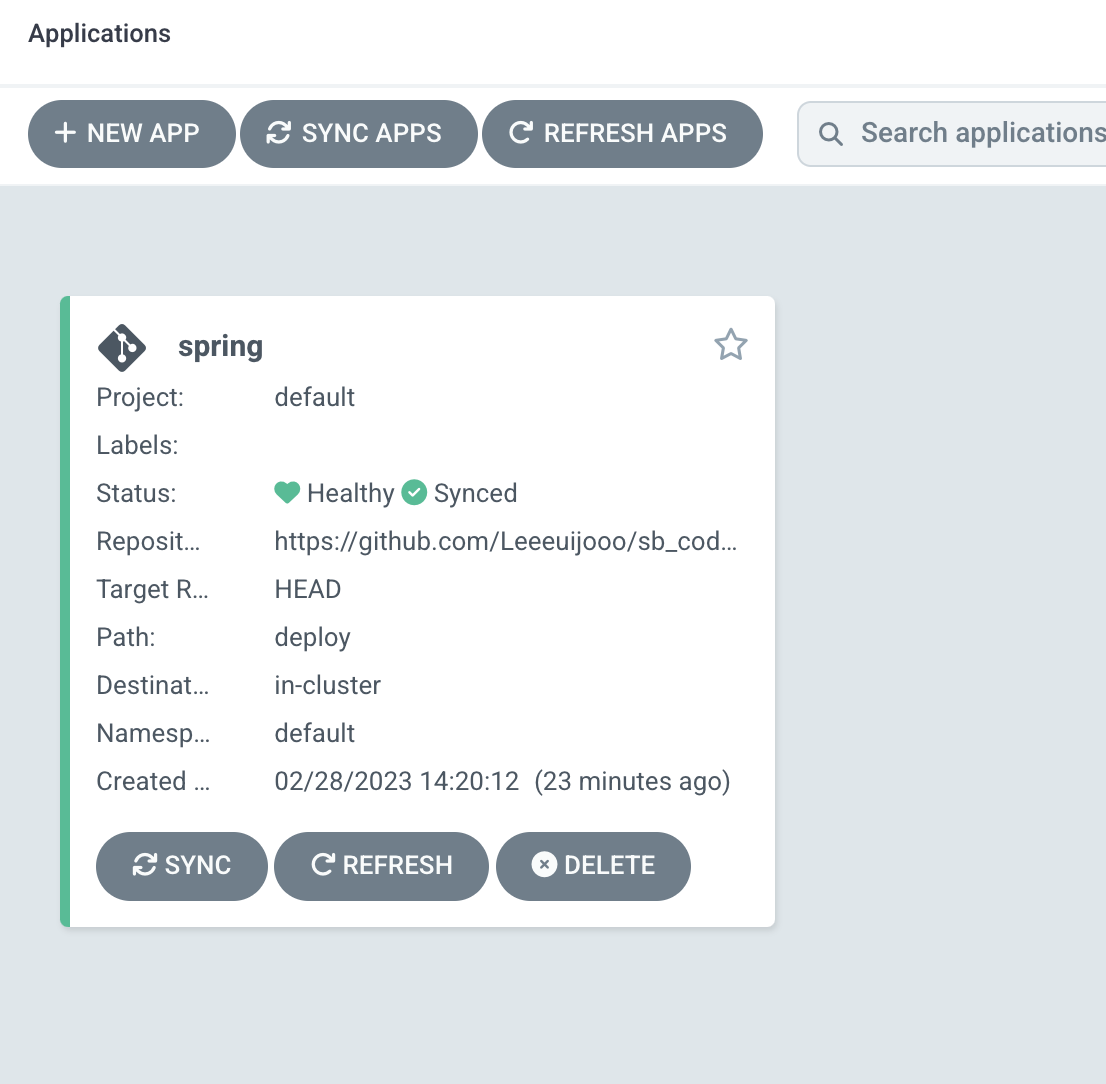

$ kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d; echo

- Connect the git repo

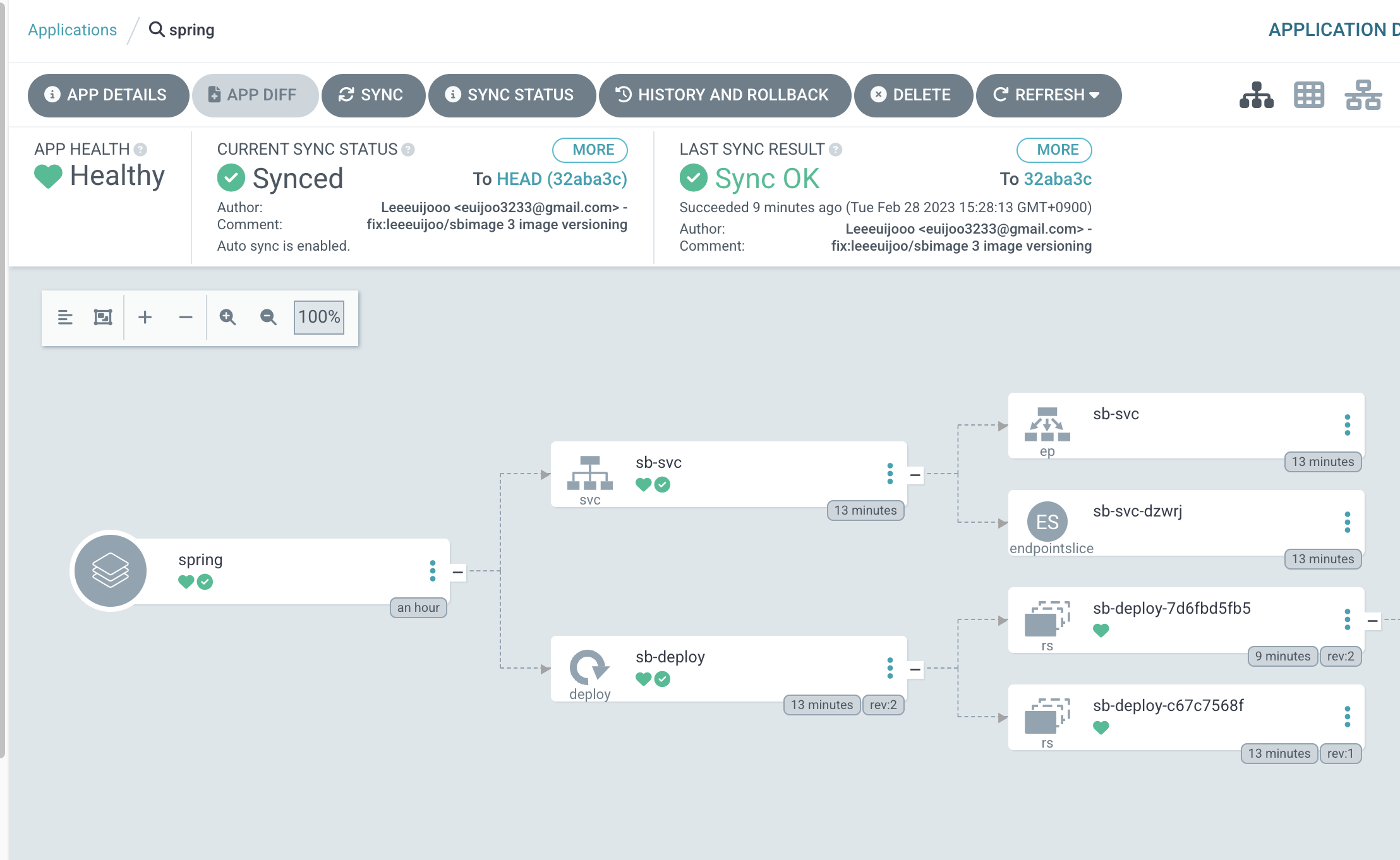

- NEW APP

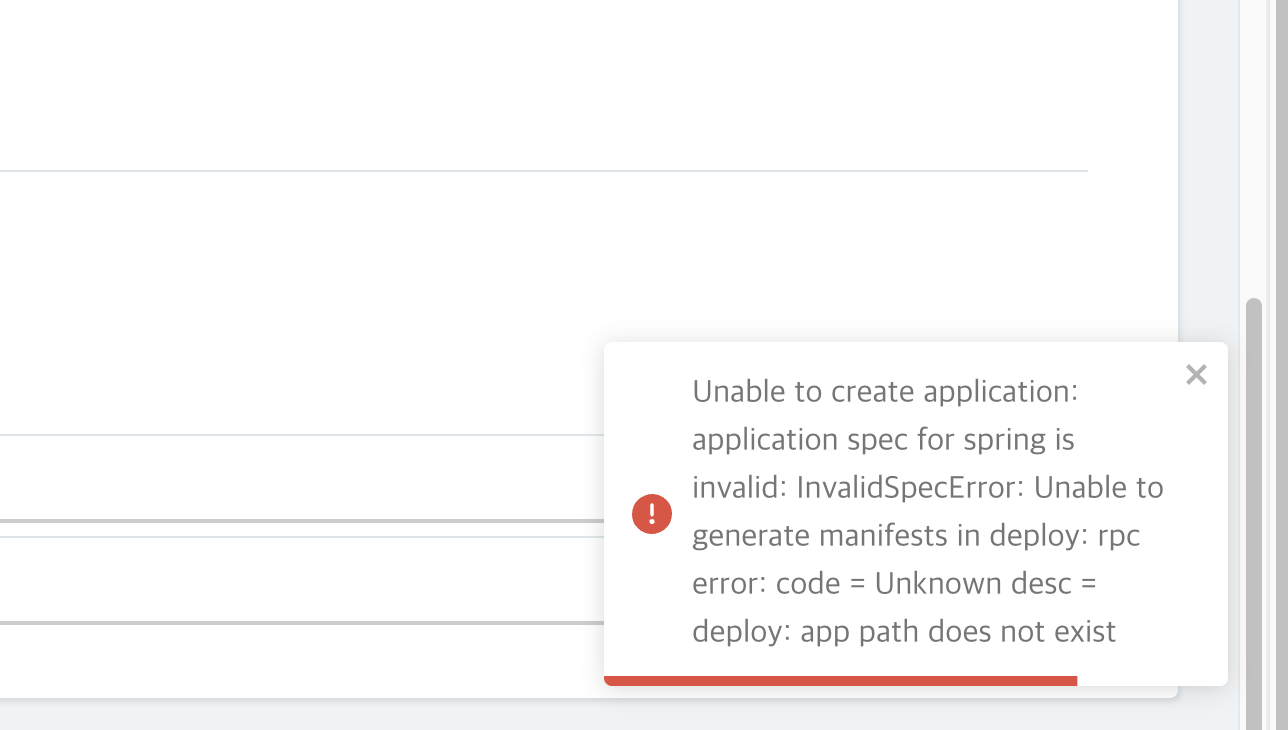

- Error

- The deploy directory does not exist in the git repo

- Fixed by cloning the repo and creating the directory

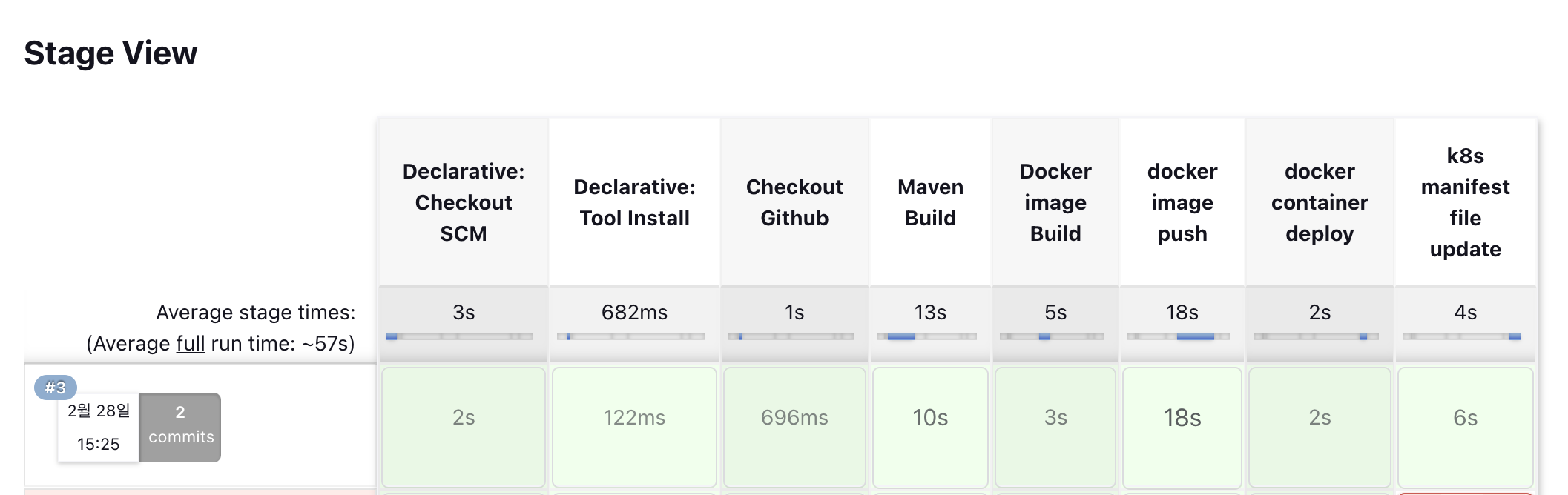
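The NEW APP form in the UI can also be expressed as an Argo CD Application manifest, which is easier to keep in version control. The sketch below is only an illustration: the application name `sb-app`, the repo URL placeholder, the `default` destination namespace, and the automated sync policy are assumptions, while the `deploy` path and the `main` branch come from this post.

```shell
#!/usr/bin/env bash
set -eu

# Written to a temp directory here; in practice keep the file next to your other manifests.
app_file="$(mktemp -d)/argocd-app.yml"

cat > "$app_file" <<'EOF'
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: sb-app                  # hypothetical application name
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/<your-account>/<your-repo>.git   # placeholder, replace with your repo
    targetRevision: main
    path: deploy                # directory that holds deploy.yml
  destination:
    server: https://kubernetes.default.svc
    namespace: default          # assumption: apps run in the default namespace
  syncPolicy:
    automated:                  # assumption: sync automatically on every git change
      prune: true
      selfHeal: true
EOF

cat "$app_file"

# Apply it once the placeholders are filled in:
# kubectl apply -n argocd -f "$app_file"
```

As with the UI, the `deploy` directory must already exist in the repo, otherwise the same error appears.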

Deploy from the YAML file

- Before deploying, add a stage to the Jenkinsfile that updates the k8s manifest file

stage('k8s manifest file update') {
    steps {
        git credentialsId: gitCredential,
            url: gitWebaddress,
            branch: 'main'

        // Change the image tag, then push to the main branch
        sh "git config --global user.email ${gitEmail}"
        sh "git config --global user.name ${gitName}"
        sh "sed -i 's@${dockerHubRegistry}:.*@${dockerHubRegistry}:${currentBuild.number}@g' deploy/deploy.yml"
        sh "git add ."
        sh "git commit -m 'fix:${dockerHubRegistry} ${currentBuild.number} image versioning'"
        sh "git branch -M main"
        sh "git remote remove origin"
        sh "git remote add origin ${gitSshaddress}"
        sh "git push -u origin main"
    }
    post {
        failure {
            echo 'Container Deploy failure'
        }
        success {
            echo 'Container Deploy success'
        }
    }
}

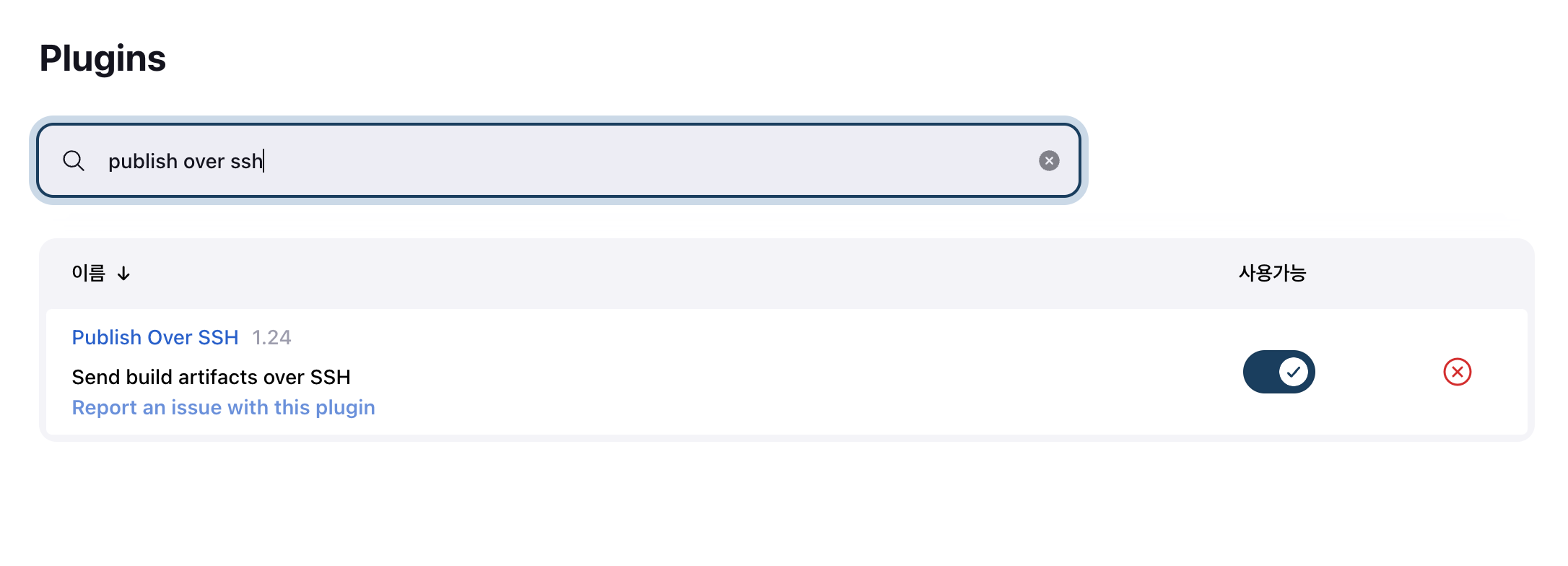

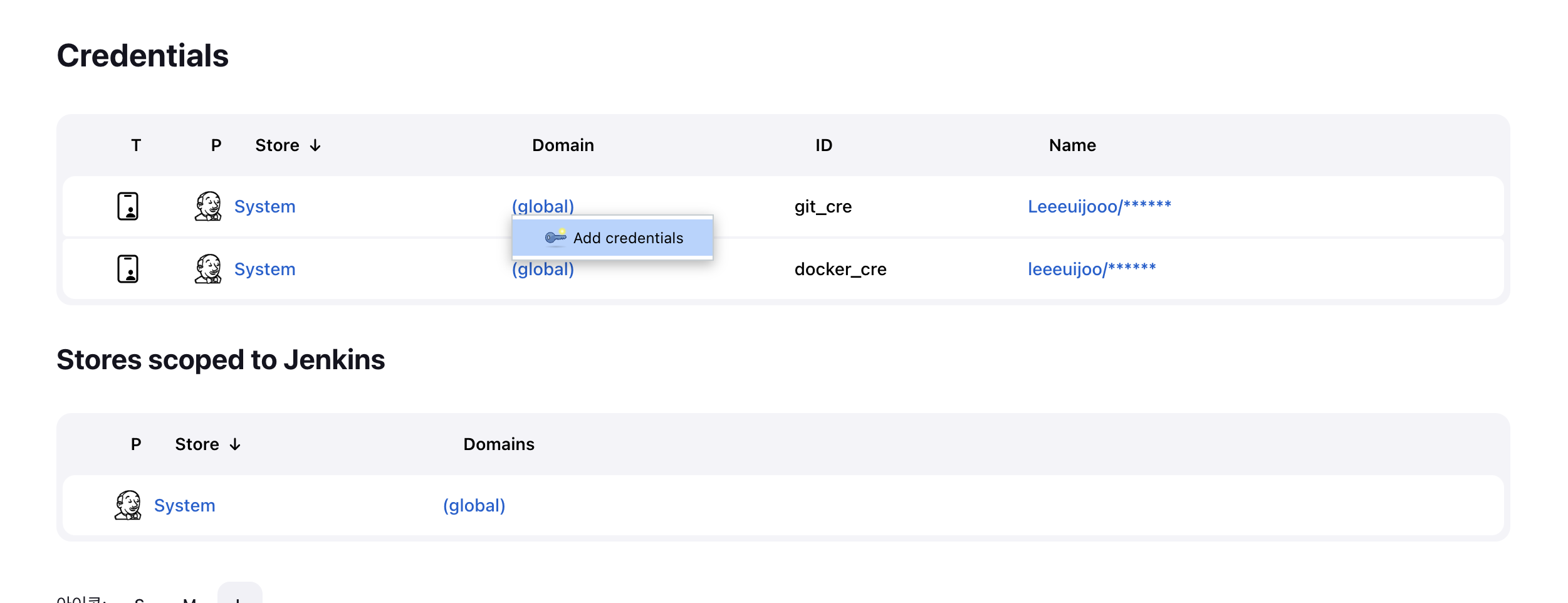
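The key line in the stage above is the sed substitution that rewrites the image tag. The following sketch reproduces it outside Jenkins on a throwaway file so the effect is visible; the build number 42 is a made-up stand-in for `${currentBuild.number}`, and the registry value is taken from the deploy.yml shown later in this post.

```shell
#!/usr/bin/env bash
set -eu

# Stand-ins for the Jenkins variables (the build number is hypothetical)
registry="leeeuijoo/sbimage"   # ${dockerHubRegistry}
build_number=42                # ${currentBuild.number}

# Throwaway copy of the relevant manifest lines
workdir="$(mktemp -d)"
manifest="$workdir/deploy.yml"
printf '      - name: spring\n        image: %s:latest\n' "$registry" > "$manifest"

# Same substitution as the pipeline stage: replace everything after
# "<registry>:" with the current build number (GNU sed, as on Ubuntu)
sed -i "s@${registry}:.*@${registry}:${build_number}@g" "$manifest"

cat "$manifest"
```

Note that the pattern only matches when the image already carries a tag, so the manifest should start out as `image: <registry>:<something>` rather than a bare image name.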

- Install the over ssh plugin from the Jenkins management console

- Generate an SSH key pair for the jenkins user

root@ip-172-31-0-249:~/yml/sb_code# su jenkins

jenkins@ip-172-31-0-249:/root/yml/sb_code$ cd ~

jenkins@ip-172-31-0-249:~$ pwd

/var/lib/jenkins

jenkins@ip-172-31-0-249:~$ ssh-keygen

### Press Enter three times to accept the defaults

jenkins@ip-172-31-0-249:~$ cd .ssh/

jenkins@ip-172-31-0-249:~/.ssh$ ls

id_rsa id_rsa.pub

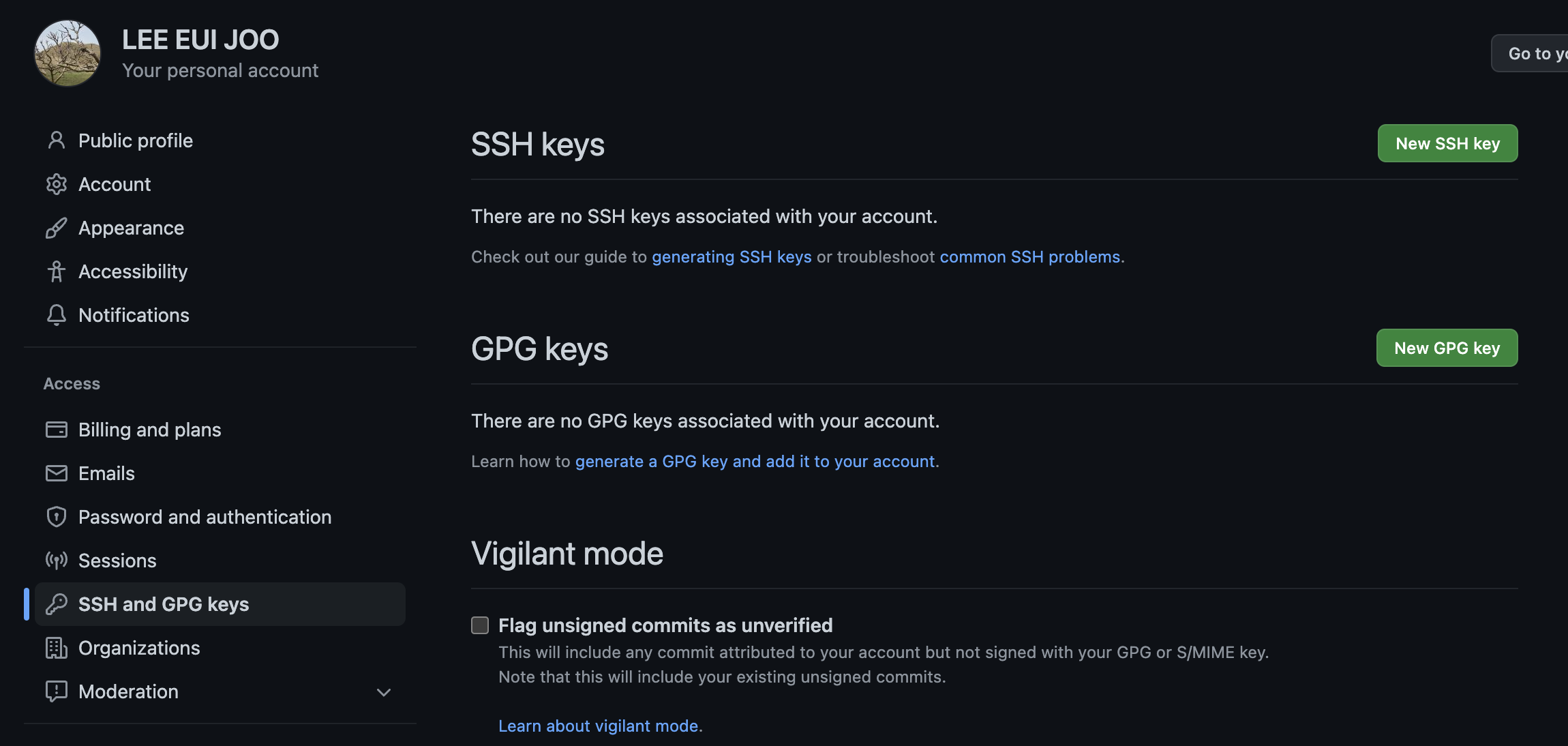

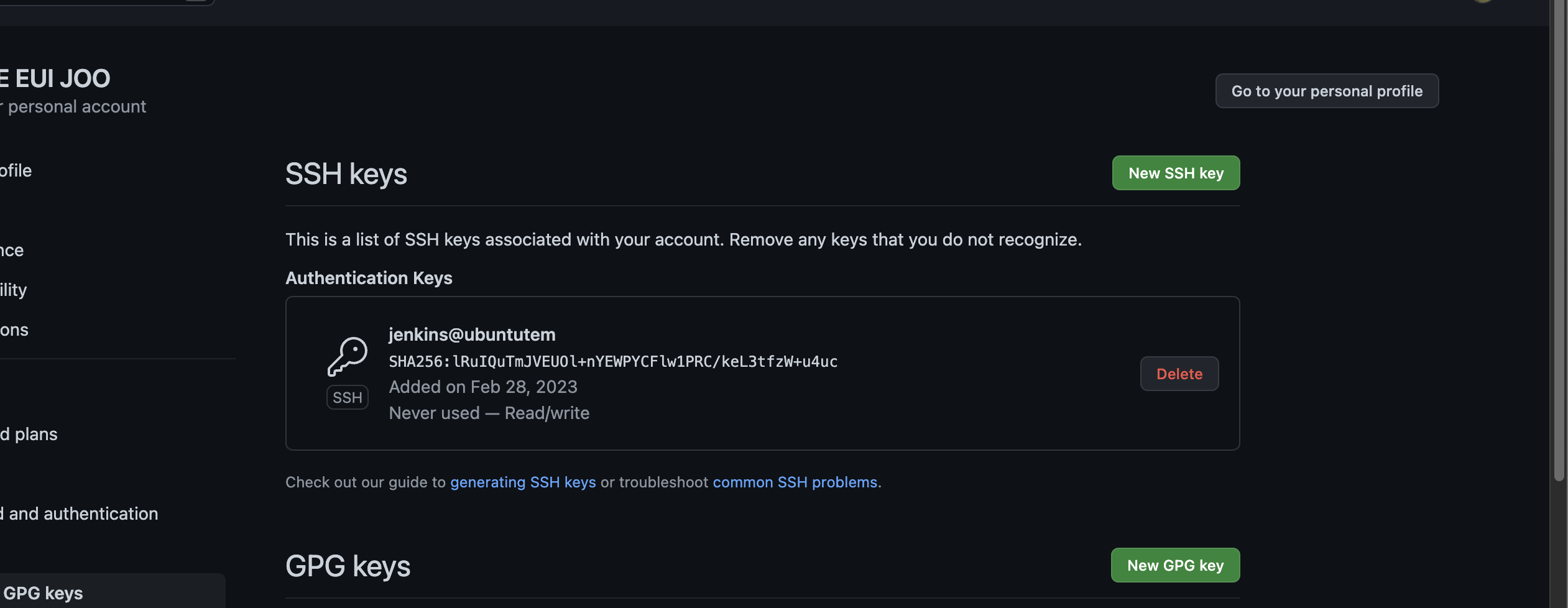

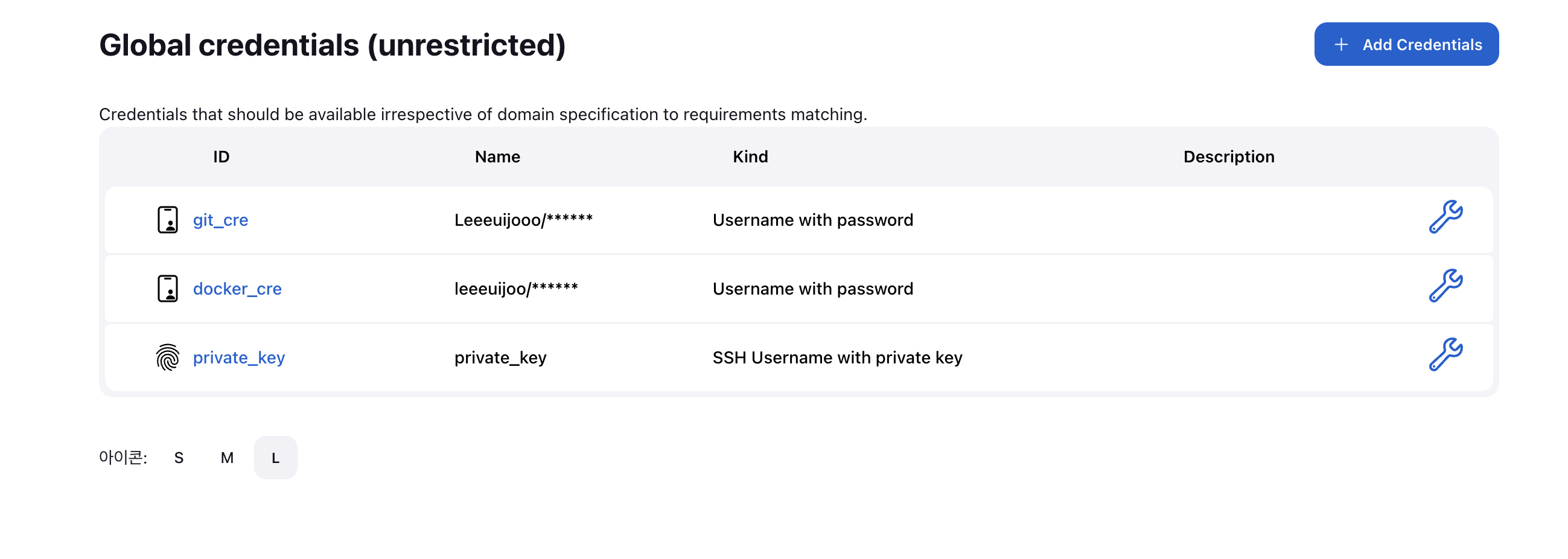

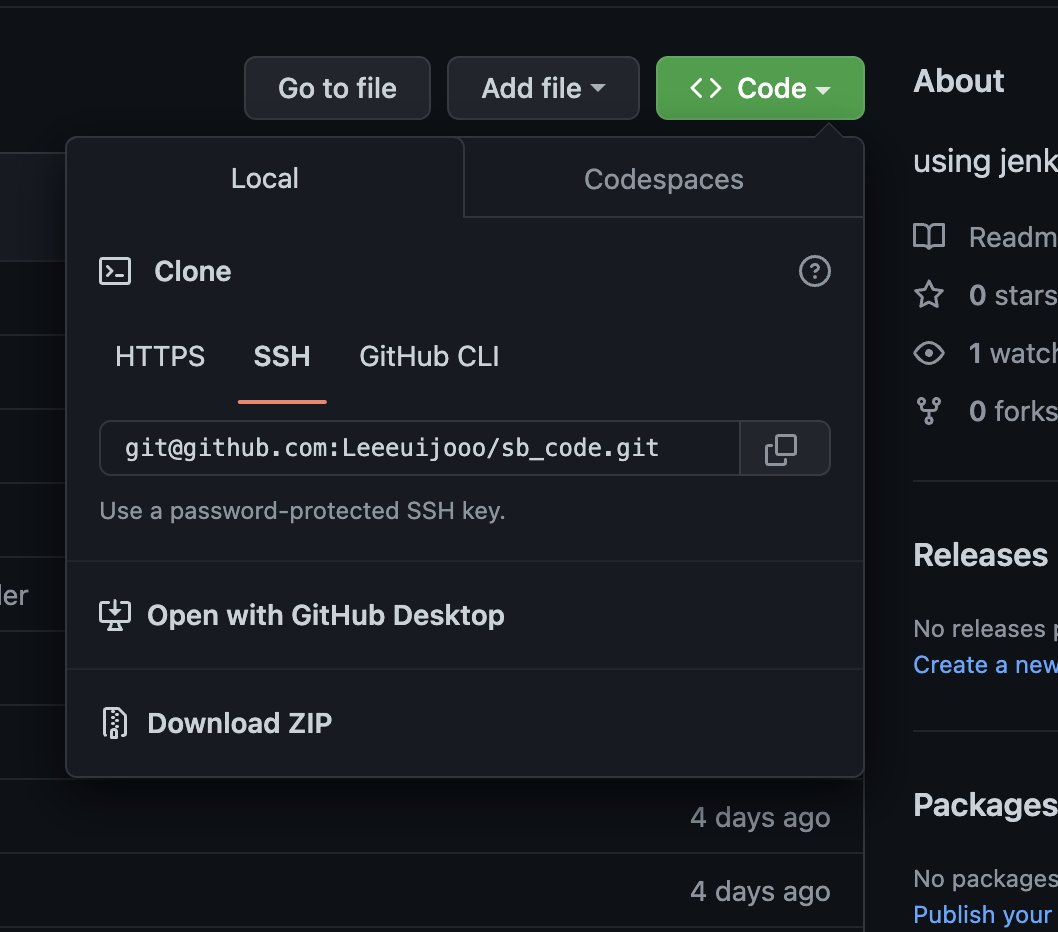

# id_rsa = private key to register in Jenkins, id_rsa.pub = public key to register on GitHub

- With the key pair generated, SSH has to be set up on GitHub

- Public key setting

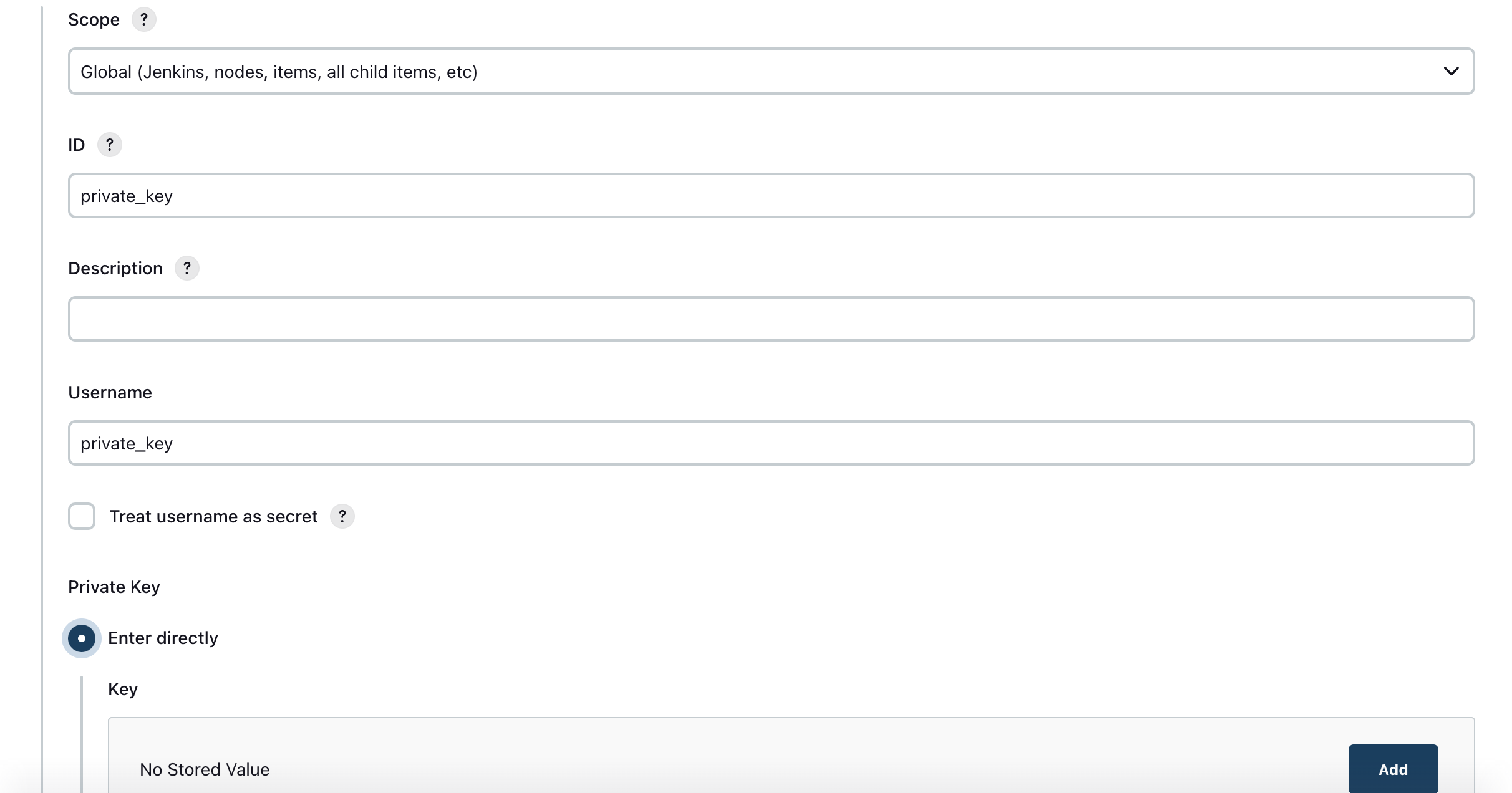

- Register the private key in Jenkins

- Paste it in using the Enter directly option

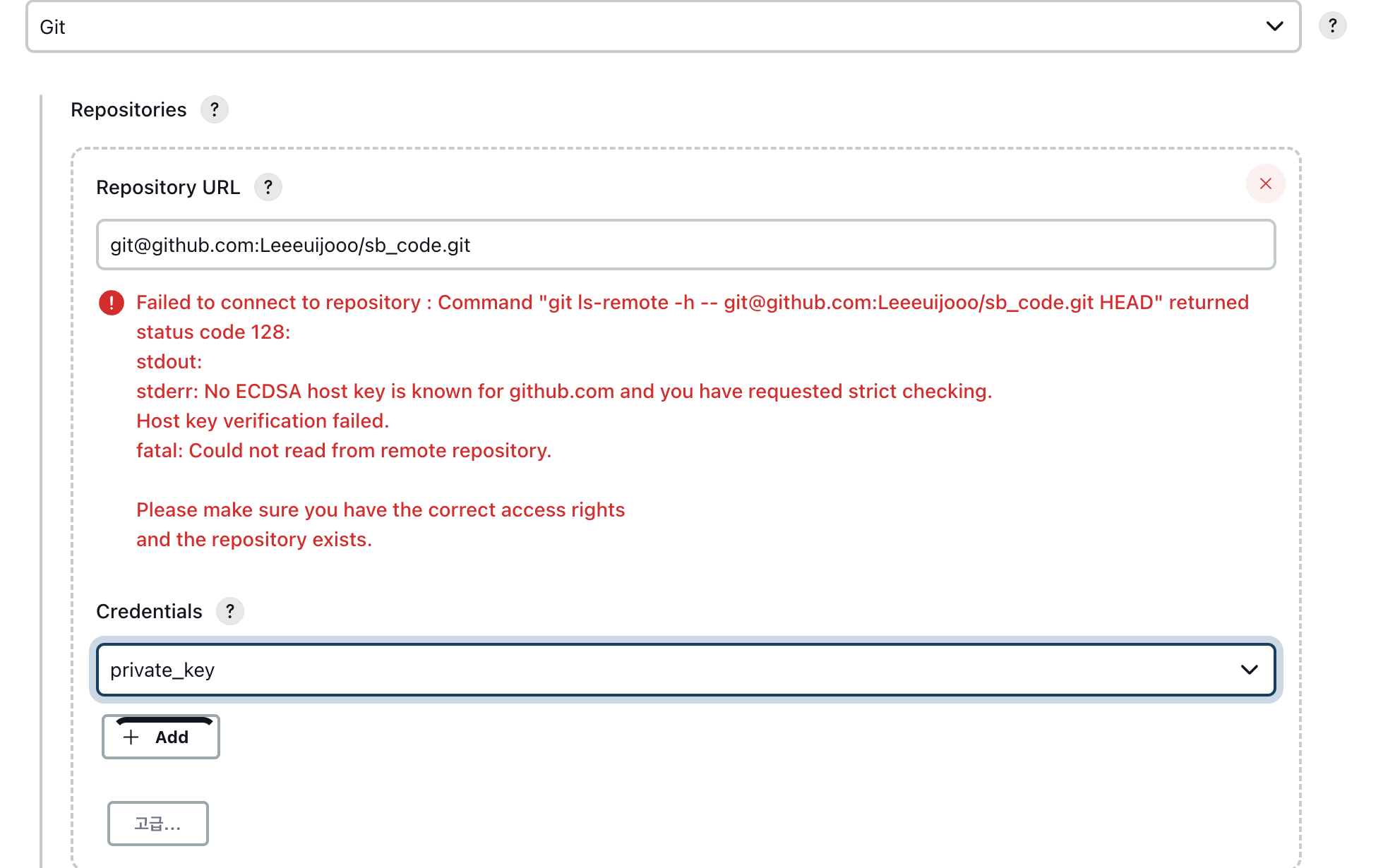

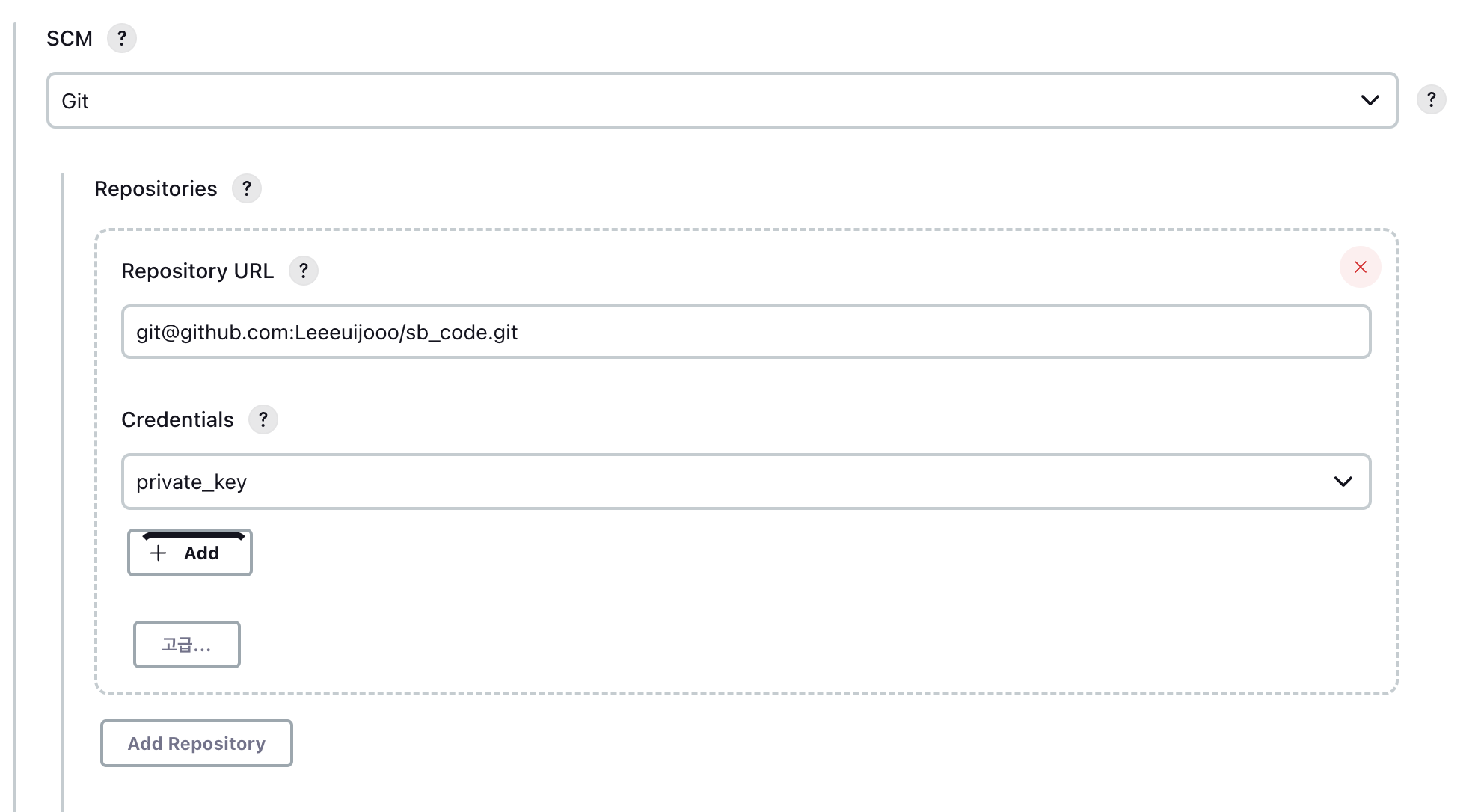

- Configure the repo over SSH in Jenkins

An error occurs because github.com has not been added to known_hosts yet

# Add github.com to known_hosts
$ ssh -vT git@github.com
$ ls
id_rsa id_rsa.pub known_hosts

- Error resolved

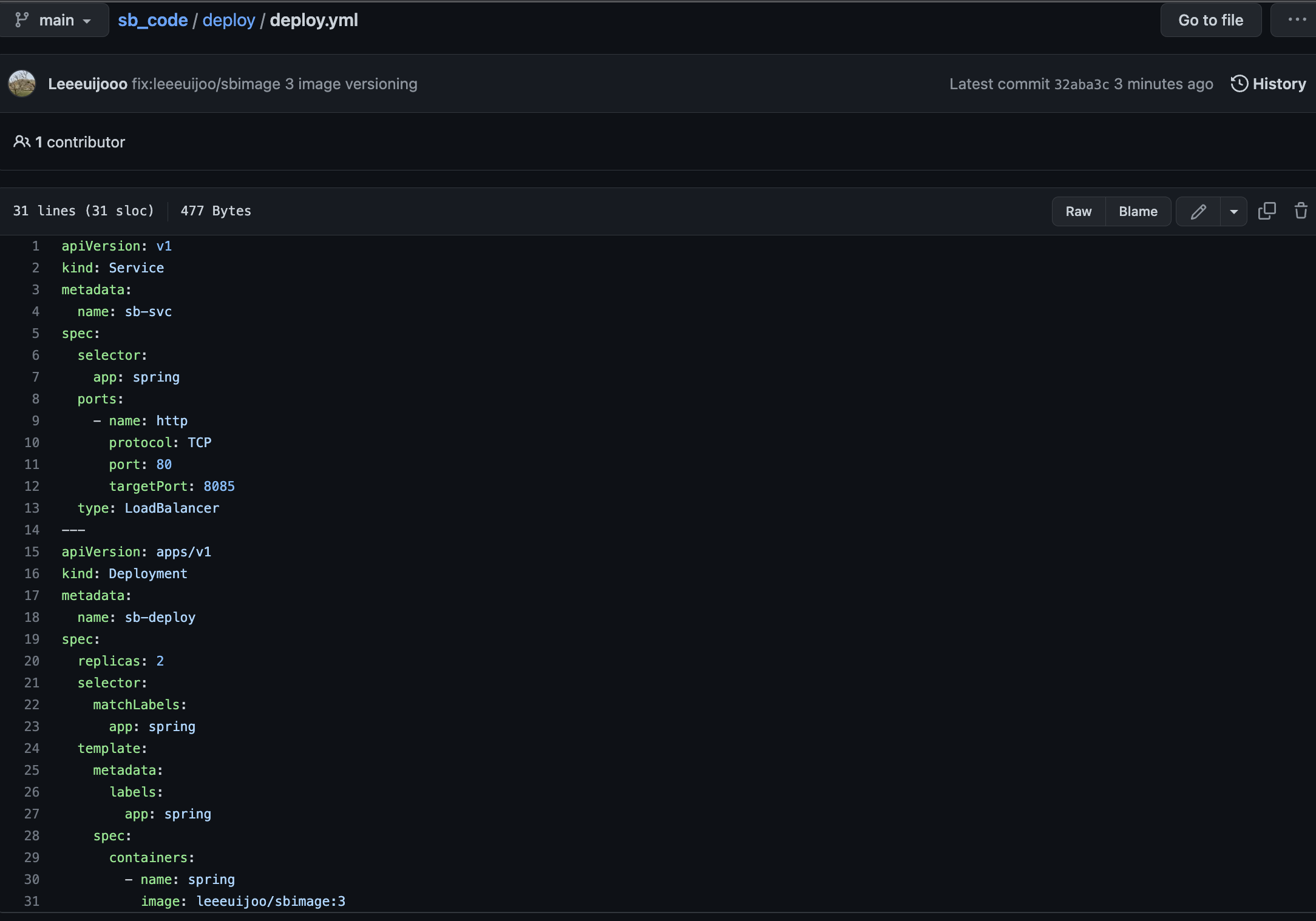
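The `ssh -vT` approach above adds the host key interactively. On a build server it can be handy to do the same thing without a prompt, so here is a minimal sketch using `ssh-keyscan`; `add_github_host_key` is a hypothetical helper name, and it is meant to be run as the jenkins user so the entry lands in /var/lib/jenkins/.ssh/known_hosts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append GitHub's public host keys to the current
# user's known_hosts file without an interactive confirmation.
add_github_host_key() {
  mkdir -p "$HOME/.ssh"
  chmod 700 "$HOME/.ssh"
  ssh-keyscan -t rsa,ecdsa,ed25519 github.com >> "$HOME/.ssh/known_hosts"
}

# Example (needs network access, so it is left commented out):
# add_github_host_key
# ssh-keygen -lf "$HOME/.ssh/known_hosts"   # print fingerprints for comparison
```

Because ssh-keyscan trusts whatever answers on the network, it is worth comparing the printed fingerprints with the ones GitHub publishes before relying on the entry.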

- Deploy deploy.yml

apiVersion: v1
kind: Service
metadata:
  name: sb-svc
spec:
  selector:
    app: spring
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 8085
  type: LoadBalancer
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sb-deploy
spec:
  replicas: 2
  selector:
    matchLabels:
      app: spring
  template:
    metadata:
      labels:
        app: spring
    spec:
      containers:
      - name: spring
        image: leeeuijoo/sbimage:latest

- After pushing the yml file, run Build Now in Jenkins

- Path in the git repo: sb_code/deploy/deploy.yml

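Problem

- The cluster name is my-cluster, with a node group of two worker nodes.

- Every app is deployed automatically through Argo CD. The Argo CD Service type must be NodePort.

- Opening <load-balancer-address> must serve pengbai/docker-supermario from Docker Hub. That image uses port 8080.

- Opening <load-balancer-address>/sb must reach the springbootApp.jar from the earlier exercise. That image uses port 8085. In addition, building the Jenkins project must automatically update both the Docker Hub image and the k8s manifest file.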

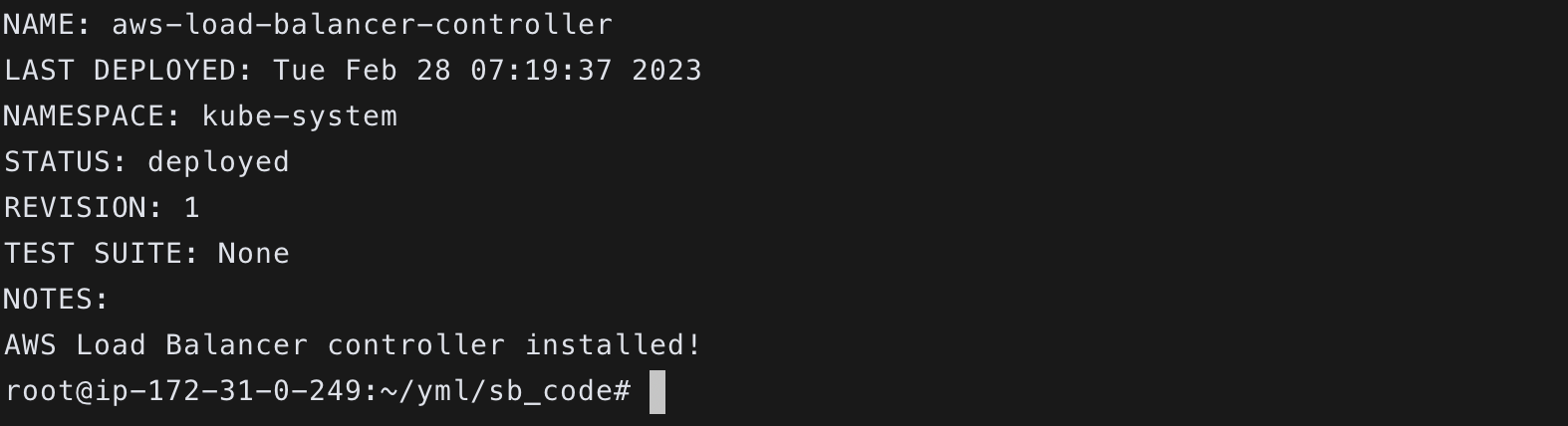

Ingress install

- Create the ingress_sh shell script

$ cat ingress_sh
#!/bin/bash

CLUSTER_NAME=eks-cluster          # your EKS cluster name
ACCOUNT_ID=380962557106           # your AWS account ID, you can check it in AWS IAM
VPC_ID=vpc-0fdcfa9ae5dc1bfa1      # the VPC ID where EKS is installed
EKS_REGION=ap-northeast-2         # the region where EKS is installed

eksctl utils associate-iam-oidc-provider --cluster ${CLUSTER_NAME} --approve

# Download the IAM policy document
curl -o iam_policy.json https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.3.1/docs/install/iam_policy.json

# Create a policy named AWSLoadBalancerControllerIAMPolicy from the downloaded document
aws iam create-policy \
    --policy-name AWSLoadBalancerControllerIAMPolicy \
    --policy-document file://iam_policy.json 1> /dev/null

sleep 1

# Create a serviceaccount named aws-load-balancer-controller with eksctl
eksctl create iamserviceaccount \
    --cluster=${CLUSTER_NAME} \
    --namespace=kube-system \
    --name=aws-load-balancer-controller \
    --role-name AmazonEKSLoadBalancerControllerRole \
    --attach-policy-arn=arn:aws:iam::${ACCOUNT_ID}:policy/AWSLoadBalancerControllerIAMPolicy \
    --override-existing-serviceaccounts \
    --approve

# Download and run the Helm 3 install script
curl https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 > get_helm.sh
echo 'Downloaded the Helm install script'
sleep 2
chmod +x get_helm.sh
./get_helm.sh

helm repo add eks https://aws.github.io/eks-charts
helm repo update

# Install the aws-load-balancer-controller chart, using the serviceaccount created above
helm install aws-load-balancer-controller eks/aws-load-balancer-controller \
    -n kube-system \
    --set clusterName=${CLUSTER_NAME} \
    --set serviceAccount.create=false \
    --set serviceAccount.name=aws-load-balancer-controller \
    --set image.repository=602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/amazon/aws-load-balancer-controller \
    --set region=${EKS_REGION} \
    --set vpcId=${VPC_ID}

$ sh ingress_sh

- Installation complete
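With the controller installed, the routing asked for in the problem (the root path to Super Mario, /sb to the Spring Boot app) can be described in one Ingress. The sketch below is an assumption-laden illustration rather than the manifest used in this exercise: the Ingress name `app-ingress` is made up, it assumes the Helm chart registered an IngressClass named `alb`, and it reuses the Service names `supermario-service` and `sb-svc` from the manifests shown earlier.

```shell
#!/usr/bin/env bash
set -eu

# Written to a temp directory here; in practice put it in the deploy directory of the repo.
ingress_file="$(mktemp -d)/ingress.yml"

cat > "$ingress_file" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app-ingress             # hypothetical name
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
spec:
  ingressClassName: alb         # assumption: IngressClass "alb" exists
  rules:
  - http:
      paths:
      - path: /sb               # Spring Boot app
        pathType: Prefix
        backend:
          service:
            name: sb-svc
            port:
              number: 80
      - path: /                 # everything else goes to Super Mario
        pathType: Prefix
        backend:
          service:
            name: supermario-service
            port:
              number: 80
EOF

cat "$ingress_file"

# kubectl apply -f "$ingress_file"
```

An ALB forwards the request path unchanged, so the Spring Boot app itself has to answer under /sb, which is where the context-path change described at the end of this post comes in. With an Ingress in front, the two Services no longer need type LoadBalancer of their own.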

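Caution

- If the serviceaccount and policies already exist, delete them first and then run the script.
  - In IAM, search the roles for "Load" and delete the role that comes up.
  - In the policies, search for "Load" and delete the policy that comes up.
  - Also delete the LoadBalancer stack in CloudFormation.

- If an ingress controller was already installed with Helm, it is better to uninstall it and start again.
  - helm uninstall aws-load-balancer-controller -n kube-system
  - helm uninstall <release-name> -n kube-system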

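Wrap-up

Issue when installing Jenkins

- Jenkins reportedly uses port 50000 (conventionally the port for inbound agent connections, separate from the web UI port)

- Open port 50000 in the instance's security group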

Whitelabel Error Page issue

- On Spring Boot 1.x, the context-path has to be changed

- Edit it in application.properties (set the path)

- Build again in Jenkins

- Reference: https://linkeverything.github.io/springboot/spring-context-path/
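For reference, the context-path change amounts to one line in application.properties. This is a minimal sketch, assuming the app should be served under /sb as in the problem statement; the file is written to a temp directory here, whereas in a real project it normally lives at src/main/resources/application.properties.

```shell
#!/usr/bin/env bash
set -eu

# Stand-in for src/main/resources/application.properties
props="$(mktemp -d)/application.properties"
: > "$props"

# Spring Boot 1.x property name.
# Spring Boot 2.x and later use server.servlet.context-path instead.
echo 'server.context-path=/sb' >> "$props"

cat "$props"
```

After committing the change, rebuilding in Jenkins produces an image whose endpoints live under /sb instead of the root path.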