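To be honest, I had only ever used Kubernetes through GKE, but I ended up on a project where we install and operate kubernetes ourselves. During the installation I ran into errors I had not expected, and the steps are scattered across the official documentation, so when I tried to repeat the process I could not remember where anything was. This post collects what I did.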

Preparing the EC2 instances

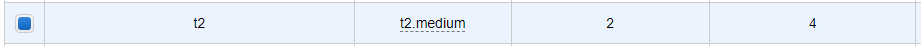

For kubernetes master and worker nodes, the recommended minimum size is 2 or more CPUs and 2 GB or more of memory. (https://kubernetes.io/ko/docs/setup/production-environment/tools/kubeadm/install-kubeadm/)

Do not choose Amazon Linux as the AMI. (yum installs from the docker and kubernetes repos ran into errors.)

I used a Red Hat image.

I chose t2.medium to meet the recommended resources.
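Once an instance is up, the sizing can be checked from inside it. The following is a minimal sketch, not part of the original procedure: the helper names are hypothetical, and the default memory threshold of 1700 MB is an assumption, set a little below 2 GB because MemTotal reports less than the nominal instance memory.

```shell
#!/bin/sh
# Hypothetical helper: succeed when the given CPU count and memory (in kB)
# meet the minimums. The defaults (2 CPUs, 1700 MB) are assumptions.
meets_minimum() {
    cpus=$1
    mem_kb=$2
    min_cpus=${3:-2}
    min_mem_mb=${4:-1700}
    [ "$cpus" -ge "$min_cpus" ] && [ $((mem_kb / 1024)) -ge "$min_mem_mb" ]
}

# Read the values for the current Linux machine.
current_cpus() { nproc; }
current_mem_kb() { awk '/^MemTotal:/ {print $2}' /proc/meminfo; }

# Usage on the instance:
#   meets_minimum "$(current_cpus)" "$(current_mem_kb)" && echo "size OK"
```

A t2.medium (2 vCPU, 4 GiB) passes this check with the default thresholds.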

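Configuring the security group inbound rules

This is where I was stuck for a long time later on, when kubeadm join would not work: as a baseline, allow traffic between the subnets the nodes are in.

6443 -> API server port (master node)

22 -> for transferring files with SCP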
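Since a blocked port only shows up later as a hanging kubeadm join, it may help to test reachability from the worker first. This is a rough sketch with a hypothetical helper name; it assumes bash (for its /dev/tcp redirection) and coreutils timeout are installed, and the 3-second limit is an arbitrary choice.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a TCP connection to host:port opens
# within 3 seconds. Relies on bash's /dev/tcp and coreutils timeout.
port_open() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Usage from the worker node, with the master's private IP:
#   port_open 10.0.6.100 6443 && echo "API server port reachable"
```

If the check fails even though the API server is running, the security group rules are the first thing to look at.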

Working on the master node

Preparation

Letting iptables see bridged traffic

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

br_netfilter

EOF

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF
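Files under /etc/modules-load.d are read at boot, so on an instance that has not been rebooted the br_netfilter module may not be loaded yet, and the net.bridge.* keys only exist once it is. The sketch below checks for the module; the helper name is hypothetical, and the optional second argument exists only so the function can be tried against a sample file.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the named module appears in a
# /proc/modules-style listing (second argument defaults to /proc/modules).
module_loaded() {
    awk -v m="$1" '$1 == m {found = 1} END {exit !found}' "${2:-/proc/modules}"
}

# Usage on the node, before applying the sysctl settings:
#   module_loaded br_netfilter || sudo modprobe br_netfilter
```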

sudo sysctl --system

1) Installing Docker

https://docs.docker.com/engine/install/#server

CentOS https://docs.docker.com/engine/install/centos/

Remove old versions

sudo yum remove docker docker-client docker-client-latest docker-common docker-latest docker-latest-logrotate docker-logrotate docker-engine

Set up the repo

sudo yum install -y yum-utils

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

Install docker

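sudo yum install docker-ce docker-ce-cli containerd.io

Start docker

sudo systemctl start docker

Verify

sudo docker run hello-world

Configuration for kubernetes

Configure docker to use systemd for cgroup management

There are two ways to set this: creating daemon.json, or passing an option to the service (--exec-opt native.cgroupdriver=systemd). When I passed the option to the service, I sometimes got errors afterwards when editing daemon.json, most likely because docker refuses to start when the same directive is given both as a flag and in daemon.json.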

sudo mkdir -p /etc/docker

cat <<EOF | sudo tee /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

EOF

Enable docker at boot and restart it (to apply the configuration)

sudo systemctl enable docker

sudo systemctl daemon-reload

sudo systemctl restart docker

Check the configuration

If Cgroup Driver is systemd, the setting was applied. (The default is cgroupfs, and errors occur if this setting does not match the kubelet's.)
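The docker and kubelet settings can also be compared by script. The following is a sketch only: the function names are hypothetical, and it assumes the kubelet configuration is the /var/lib/kubelet/config.yaml file that kubeadm writes, so the comparison is possible only after kubeadm init or join has run on the node.

```shell
#!/bin/sh
# Extract the driver name from `docker info` output read on stdin.
docker_cgroup_driver() {
    awk -F': *' '/Cgroup Driver/ {print $2; exit}'
}

# Extract the cgroupDriver value from a kubelet configuration file.
kubelet_cgroup_driver() {
    awk -F': *' '/^cgroupDriver:/ {print $2; exit}' "$1"
}

# Usage on a node after kubeadm has written the kubelet config:
#   d=$(sudo docker info 2>/dev/null | docker_cgroup_driver)
#   k=$(sudo cat /var/lib/kubelet/config.yaml | kubelet_cgroup_driver /dev/stdin)
#   [ "$d" = "$k" ] || echo "mismatch: docker=$d kubelet=$k"
```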

docker info | grep Cgroup

Cgroup Driver: systemd

Cgroup Version: 1

2) Installing kubectl, kubeadm, kubelet

Set up the kubernetes repo and install

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

exclude=kubelet kubeadm kubectl

EOF

# Set SELinux to permissive mode (effectively disabling it)

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

sudo systemctl enable --now kubelet

3) Running kubeadm init

Once the installation is complete, you can run kubeadm init. If an error occurs partway through, reset with kubeadm reset and then run init again.

[root@i-00ffc2795d3930938 workspace]# kubeadm init

[init] Using Kubernetes version: v1.23.5

[preflight] Running pre-flight checks

[WARNING FileExisting-tc]: tc not found in system path

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Using existing ca certificate authority

[certs] Using existing apiserver certificate and key on disk

[certs] Using existing apiserver-kubelet-client certificate and key on disk

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [i-00ffc2795d3930938.ap-northeast-2.compute.internal localhost] and IPs [10.0.6.100 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [i-00ffc2795d3930938.ap-northeast-2.compute.internal localhost] and IPs [10.0.6.100 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 7.503097 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.23" in namespace kube-system with the configuration for the kubelets in the cluster

NOTE: The "kubelet-config-1.23" naming of the kubelet ConfigMap is deprecated. Once the UnversionedKubeletConfigMap feature gate graduates to Beta the default name will become just "kubelet-config". Kubeadm upgrade will handle this transition transparently.

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node i-00ffc2795d3930938.ap-northeast-2.compute.internal as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node i-00ffc2795d3930938.ap-northeast-2.compute.internal as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 7spbr0.xdteoukw4xqd0hjx

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.6.100:6443 --token 7spbr0.xdteoukw4xqd0hjx --discovery-token-ca-cert-hash sha256:7670b8b63583c50432855e26be0cf8038a4e9c3d666675c54799fa62f8cdbeb1

※ The token expires after 24 hours. After that, create a new token and list the existing ones on the master node:

# kubeadm token create

# kubeadm token list

Working on the worker node

After completing the preparation and steps 1)~2) from the master node section, run the kubeadm join command that kubeadm init printed.
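Because the join line is usually copied by hand from the master's terminal, a quick format check can catch a truncated token or hash before it is run. This is a sketch with a hypothetical helper name; it only checks the shape of the values (a 6.16-character bootstrap token and a 64-digit sha256 hash), not whether the token is still valid on the cluster.

```shell
#!/bin/sh
# Hypothetical helper: check that a copied `kubeadm join` line carries a
# well-formed bootstrap token and CA certificate hash.
valid_join_command() {
    printf '%s\n' "$1" | grep -Eq -- '--token [a-z0-9]{6}\.[a-z0-9]{16}( |$)' &&
    printf '%s\n' "$1" | grep -Eq -- '--discovery-token-ca-cert-hash sha256:[0-9a-f]{64}( |$)'
}

# Usage on the worker node:
#   cmd='kubeadm join 10.0.6.100:6443 --token ... --discovery-token-ca-cert-hash sha256:...'
#   valid_join_command "$cmd" || echo "join command looks truncated"
```

If the token has already expired, running kubeadm token create --print-join-command on the master prints a complete, fresh join line.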

[root@i-05ea86c151589ec73 /]# kubeadm join 10.0.6.100:6443 --token 7spbr0.xdteoukw4xqd0hjx --discovery-token-ca-cert-hash sha256:7670b8b63583c50432855e26be0cf8038a4e9c3d666675c54799fa62f8cdbeb1

[preflight] Running pre-flight checks

[WARNING FileExisting-tc]: tc not found in system path

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

At this point the node shows up on the control-plane, but kubectl get nodes fails on the worker node, because kubectl there has no API server information.

Copy the config file created on the master node to $HOME/.kube/config on the worker as well.

By default, password login is disabled on AWS EC2.

Enable password authentication on the worker node so that the master node can connect to it.

[root@i-05ea86c151589ec73 ~]# mkdir -p /home/ec2-user/.kube

[root@i-05ea86c151589ec73 ~]# vim /etc/ssh/sshd_config

PasswordAuthentication no -> yes

In addition, create a new user or set a password for the existing ec2-user. (ec2-user has broad privileges, so creating a new user may be safer. Once the work is done, you can disable password authentication again.)

# To disable tunneled clear text passwords, change to no here!

#PasswordAuthentication yes

#PermitEmptyPasswords no

PasswordAuthentication yes

Restart the ssh daemon.

[root@i-05ea86c151589ec73 /]# systemctl restart sshd.service

Go back to the master node and copy the file.

[root@i-00ffc2795d3930938 .kube]# scp $HOME/.kube/config ec2-user@10.0.13.67:/home/ec2-user/.kube

ec2-user@10.0.13.67's password:

config

Run the kubectl command

[ec2-user@i-05ea86c151589ec73 ~]$ kubectl get nodes

NAME                                                  STATUS   ROLES                  AGE     VERSION

i-00ffc2795d3930938.ap-northeast-2.compute.internal   Ready    control-plane,master   3h31m   v1.23.5

i-05ea86c151589ec73.ap-northeast-2.compute.internal   Ready    <none>                 101m    v1.23.5

(For other users to use kubectl, the .kube/config file needs to be copied into their $HOME directory in the same way.)
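Since the same copy is repeated for every user, it can be wrapped in a small function. This is a minimal sketch: the function name and the /tmp/admin.conf path in the usage line are hypothetical, and the chmod 600 is my own addition, made because the file carries cluster admin credentials.

```shell
#!/bin/sh
# Hypothetical helper: place a kubeconfig at <home>/.kube/config, readable
# only by its owner. Run it as the target user so ownership is correct.
install_kubeconfig() {
    src=$1
    home_dir=${2:-$HOME}
    mkdir -p "$home_dir/.kube" &&
    cp "$src" "$home_dir/.kube/config" &&
    chmod 600 "$home_dir/.kube/config"
}

# Usage, as the user who should get kubectl access:
#   install_kubeconfig /tmp/admin.conf
```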