--

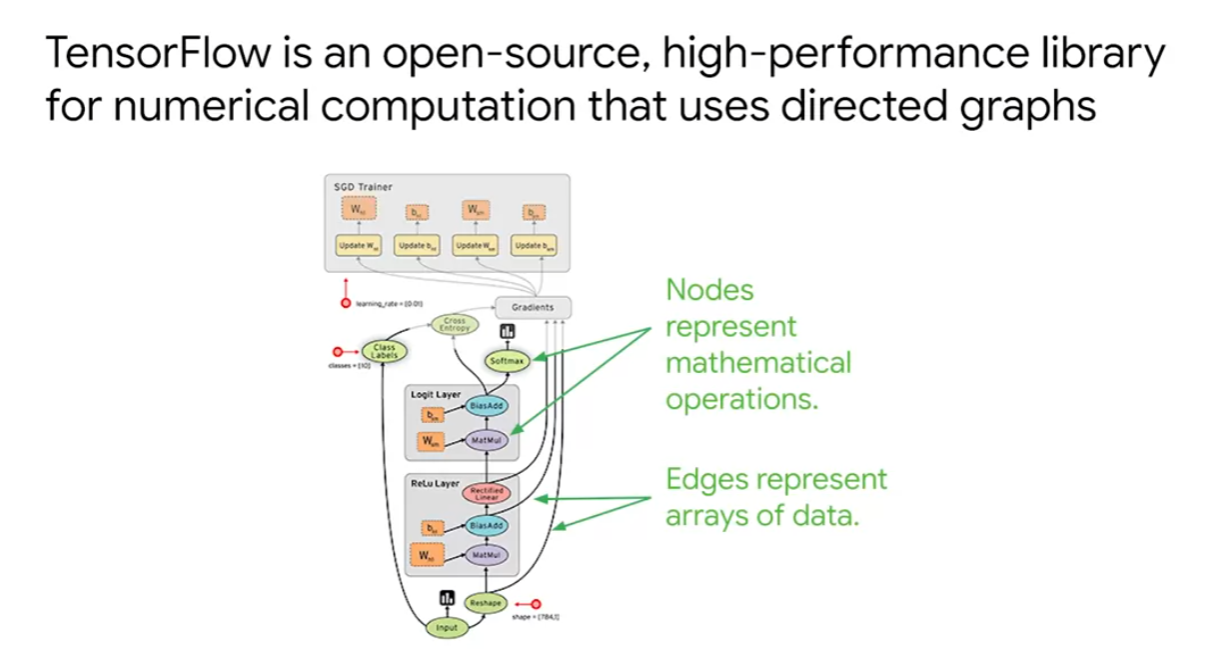

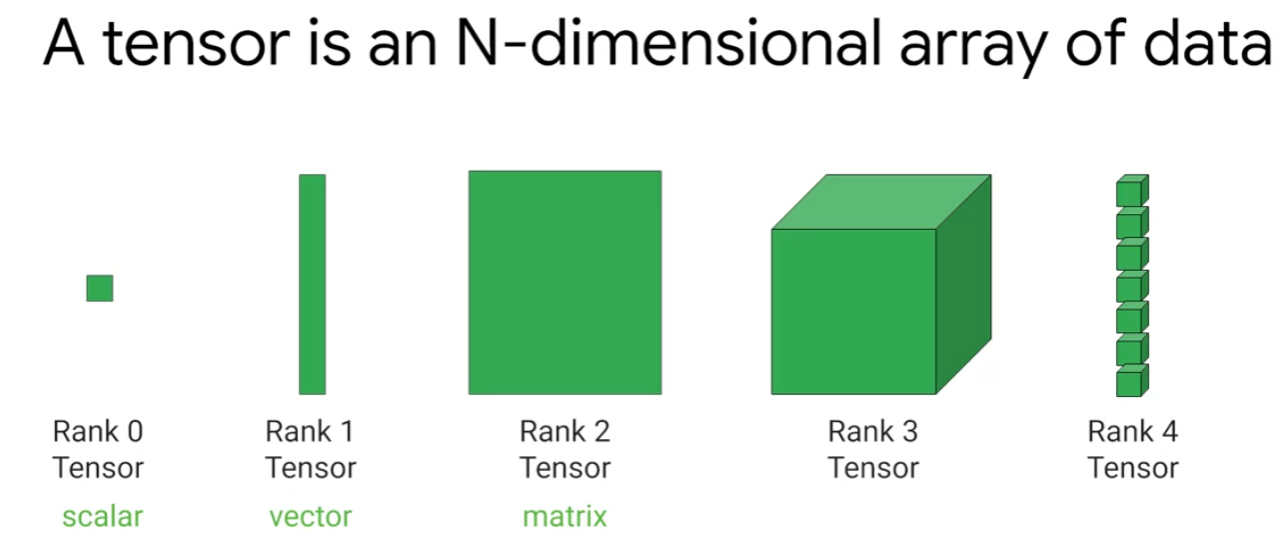

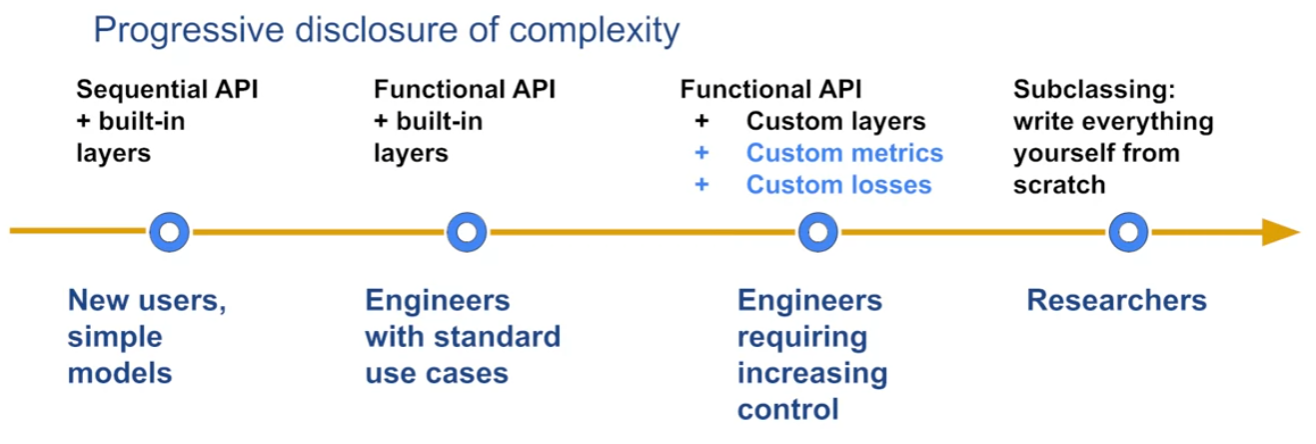

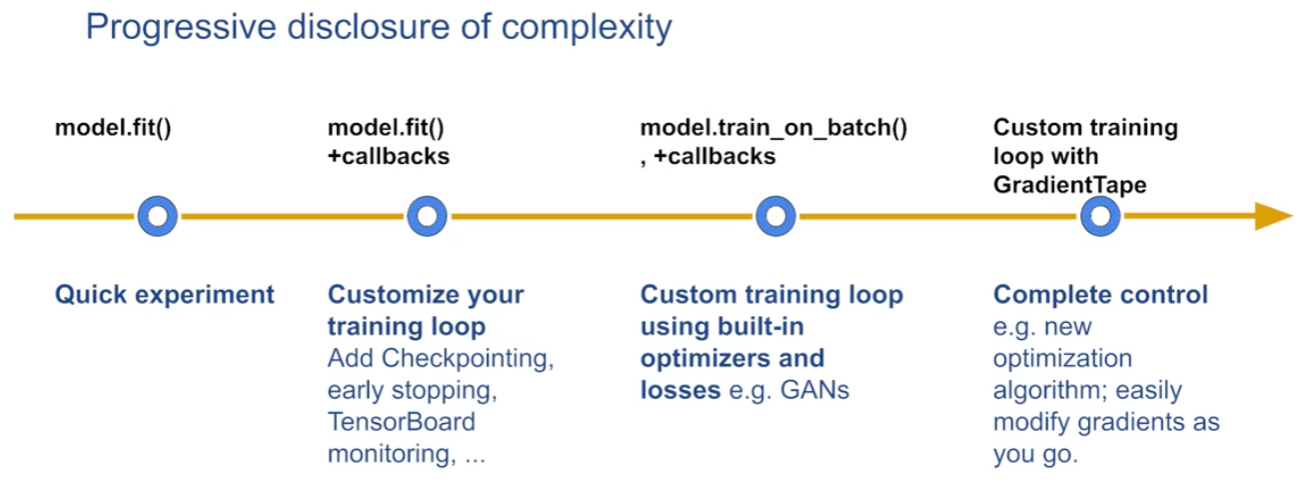

Introduction to TensorFlow

ML concepts (basics to advanced) and related tools

ML Concepts - Problem Framing, Data processing, Feature Engineering, Training, Tuning, Validation

Tools - TensorFlow, Keras, XGBoost

GCP ML services

ML APIs (pretrained models) - Natural Language, Vision, Video Intelligence

AutoML - Data Labeling Service, Training, Deployment

BQML - Algorithms, Training, Deployment

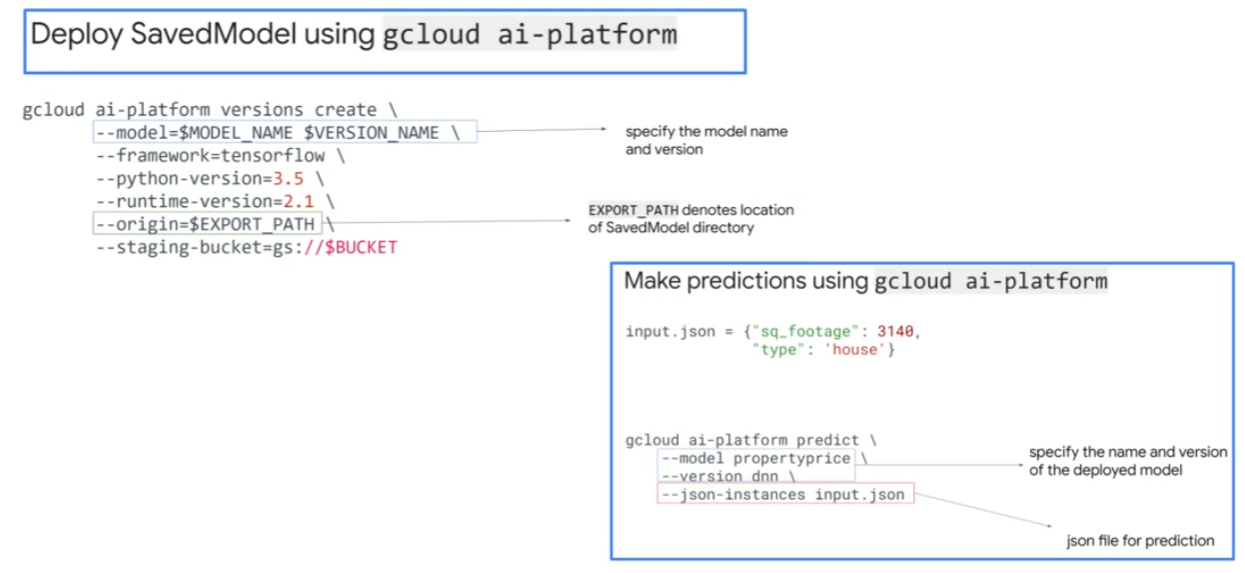

AI Platform - In-built algorithms, Containerized training, Custom prediction routine, Batch Vs Online, XAI

MLOps and related tools

TensorFlow Extended (TFX)

Kubeflow

Continuous Evaluation and Drift detection

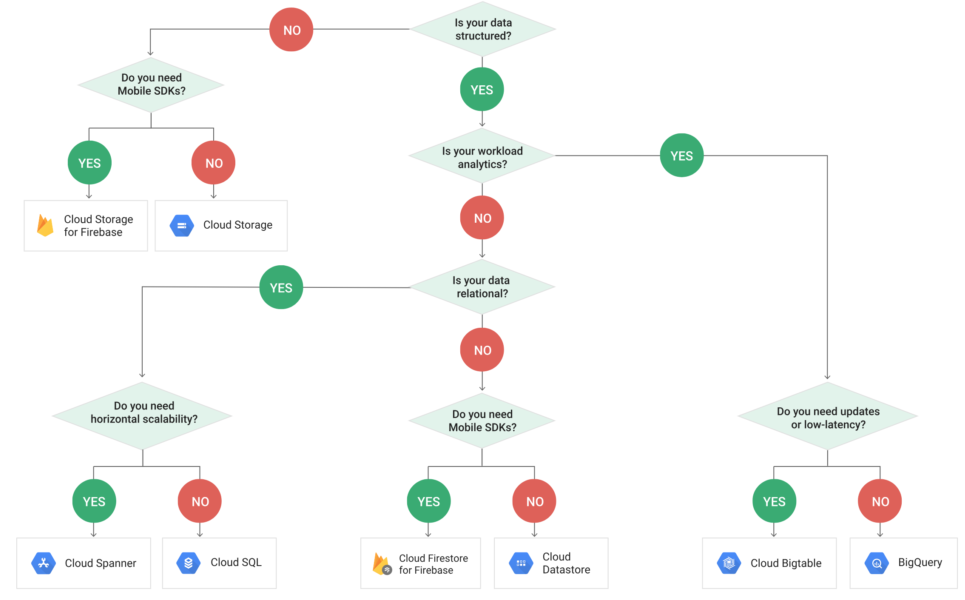

Data services on GCP

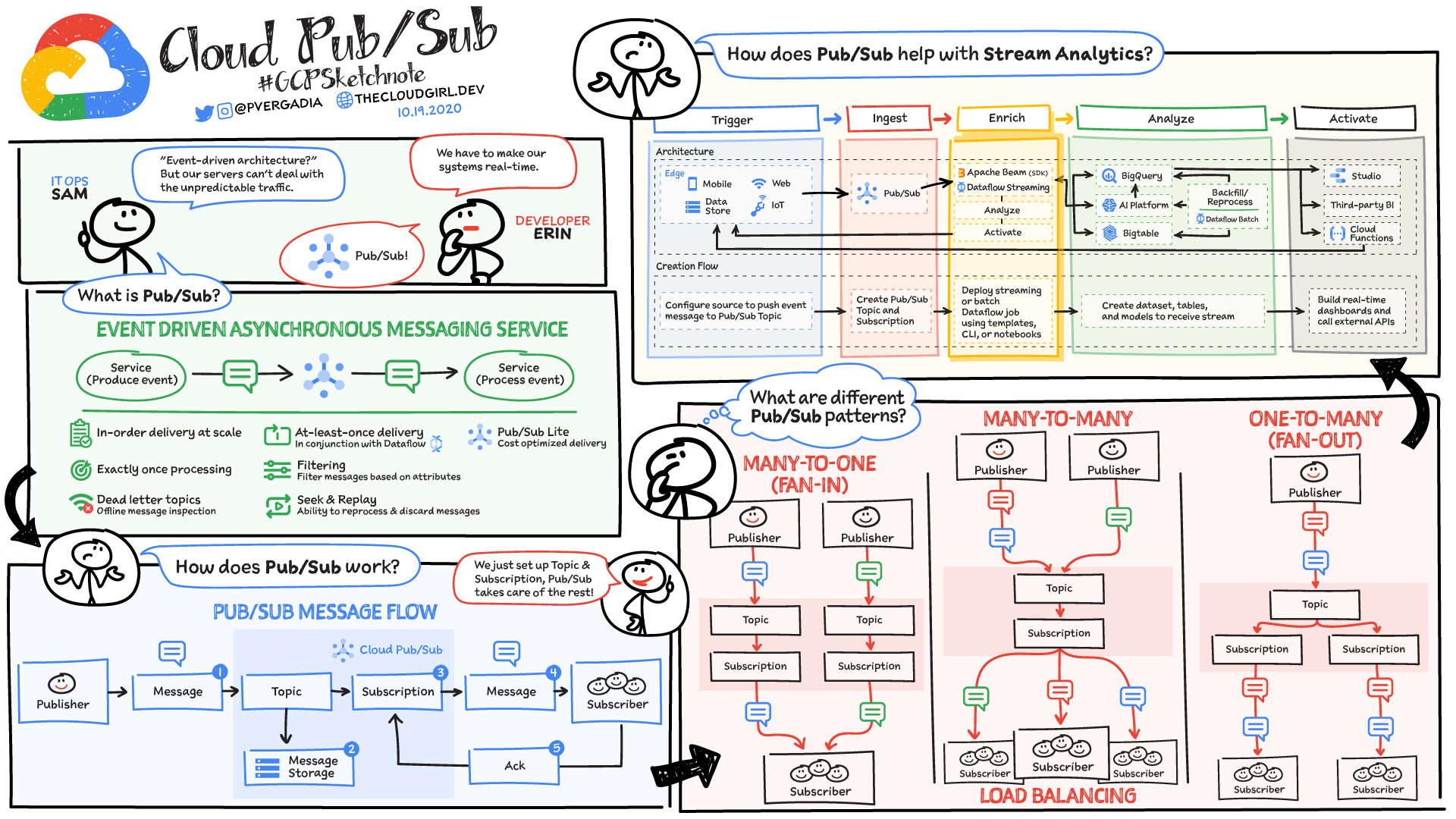

Ingestion and ETL services - Pub/Sub, Dataflow, Data Fusion

Storage services - GCS, BigQuery

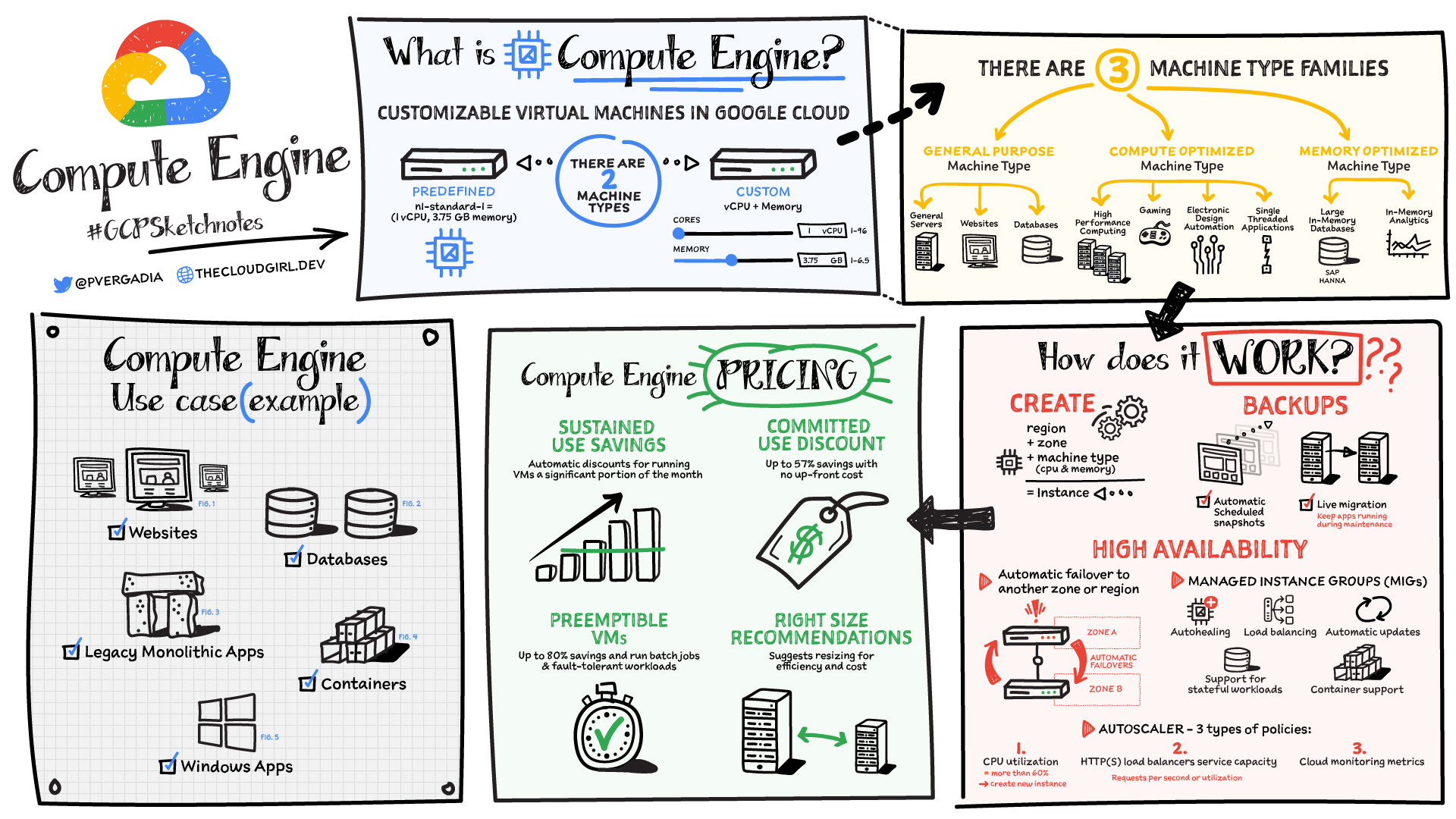

Comparing Machine Learning Models for Predictions in Cloud Dataflow Pipelines

Batch processing

If you are building your batch data processing pipeline, and you want prediction as part of the pipeline, use the direct-model approach for the best performance.

Improve the performance of the direct-model approach by creating micro-batches of the data points before calling the local model for prediction to make use of the parallelization of the vectorized operations.
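A minimal sketch of the micro-batching idea, assuming the local model exposes a call that accepts a list of data points and returns one prediction per point. `toy_model` is a hypothetical stand-in for that call, not a real TensorFlow model; the batch size of 64 is likewise an arbitrary illustration.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List, Sequence

Point = Sequence[float]


def micro_batches(points: Iterable[Point], size: int) -> Iterator[List[Point]]:
    """Yield consecutive lists of at most `size` data points."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(points)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


def predict_in_batches(
    points: Iterable[Point],
    predict_batch: Callable[[List[Point]], List[float]],
    size: int = 64,
) -> List[float]:
    """Call the local model once per micro-batch and flatten the results."""
    results: List[float] = []
    for batch in micro_batches(points, size):
        results.extend(predict_batch(batch))
    return results


def toy_model(batch: List[Point]) -> List[float]:
    # Hypothetical stand-in for a vectorized model call: one output per input row.
    return [sum(features) for features in batch]


if __name__ == "__main__":
    data = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    print(predict_in_batches(data, toy_model, size=2))
```

With a real model, `predict_batch` would typically convert the batch to a tensor once, so the cost of the call is amortized over all points in the batch.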

If your data is populated to Cloud Storage in the format expected for prediction, use AI Platform batch prediction for the best performance.

Use AI Platform if you want to use the power of GPUs for batch prediction.

Do not use AI Platform online prediction for batch prediction.
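For reference, a batch prediction job is described by a request body that points at the input files in Cloud Storage. The sketch below only builds that body as a Python dict. The field names follow the AI Platform `projects.jobs` resource as I understand it and should be checked against the current API reference; the project, model, bucket, and region values are hypothetical.

```python
from typing import Dict, Iterable


def build_batch_prediction_job(
    job_id: str,
    model_name: str,
    input_paths: Iterable[str],
    output_path: str,
    region: str,
    data_format: str = "JSON",
) -> Dict[str, object]:
    """Return a request body describing an AI Platform batch prediction job."""
    return {
        "jobId": job_id,
        "predictionInput": {
            "dataFormat": data_format,        # format of the files in Cloud Storage
            "inputPaths": list(input_paths),  # gs:// paths, wildcards allowed
            "outputPath": output_path,        # gs:// directory for the results
            "region": region,
            "modelName": model_name,          # projects/<project>/models/<model>
        },
    }


if __name__ == "__main__":
    # All names below are hypothetical placeholders.
    body = build_batch_prediction_job(
        job_id="nightly_scoring_001",
        model_name="projects/my-project/models/my_model",
        input_paths=["gs://my-bucket/inputs/*.json"],
        output_path="gs://my-bucket/outputs/",
        region="us-central1",
    )
    print(body)
```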

Stream processing

Use direct-model in the streaming pipeline for best performance and reduced average latency. Predictions are performed locally, with no HTTP calls to remote services.

Decouple your model from your data processing pipelines for better maintainability of models used in online predictions. The best approach is to serve your model as an independent microservice by using AI Platform or any other web hosting service.

Deploy your model as an independent web service to allow multiple data processing pipelines and online apps to consume the model service as an endpoint. In addition, changes to the model are transparent to the apps and pipelines that consume it.

Deploy multiple instances of the service with load balancing to improve the scalability and availability of the model web service. With AI Platform, you only need to specify the number of nodes (manualScaling) or minNodes (autoScaling) in the YAML configuration file when you deploy a model version.
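The two scaling options can be sketched as the structure a version configuration would carry, written here as a Python dict rather than YAML. `manualScaling.nodes` and `autoScaling.minNodes` are the fields named above; the helper name, the version name, and the bucket path are hypothetical.

```python
from typing import Dict, Optional


def version_spec(
    name: str,
    deployment_uri: str,
    nodes: Optional[int] = None,
    min_nodes: Optional[int] = None,
) -> Dict[str, object]:
    """Build a model version spec with exactly one scaling policy."""
    if (nodes is None) == (min_nodes is None):
        raise ValueError("set exactly one of nodes or min_nodes")
    spec: Dict[str, object] = {"name": name, "deploymentUri": deployment_uri}
    if nodes is not None:
        # Fixed number of serving nodes.
        spec["manualScaling"] = {"nodes": nodes}
    else:
        # Autoscaling with a lower bound on the number of serving nodes.
        spec["autoScaling"] = {"minNodes": min_nodes}
    return spec


if __name__ == "__main__":
    print(version_spec("v1", "gs://my-bucket/model/", nodes=3))
    print(version_spec("v2", "gs://my-bucket/model/", min_nodes=1))
```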

If you deploy your model in a separate microservice, there are extra costs, depending on the underlying serving infrastructure. See the pricing FAQ for AI Platform online prediction.

Use micro-batching in your streaming data processing pipeline for better performance with both the direct-model and HTTP-model service. Micro-batching reduces the number of HTTP requests to the model service, and uses the vectorized operations of the TensorFlow model to get predictions.
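For the HTTP-model case, the saving comes from sending several instances per request. The sketch below groups instances into JSON request bodies of the form `{"instances": [...]}`, which is the shape AI Platform online prediction expects; the batch size and the example instances are arbitrary assumptions.

```python
import json
from typing import Iterator, List, Sequence


def request_bodies(instances: Sequence[List[float]], batch_size: int) -> Iterator[str]:
    """Yield one JSON request body per micro-batch of instances."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(instances), batch_size):
        yield json.dumps({"instances": list(instances[start:start + batch_size])})


if __name__ == "__main__":
    points = [[float(i)] for i in range(10)]
    bodies = list(request_bodies(points, batch_size=4))
    # One request per micro-batch instead of one request per data point.
    print(len(points), "data points ->", len(bodies), "requests")
```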

https://thecloudgirl.dev/images/vs.jpg

https://thecloudgirl.dev/images/Compute_h.jpg

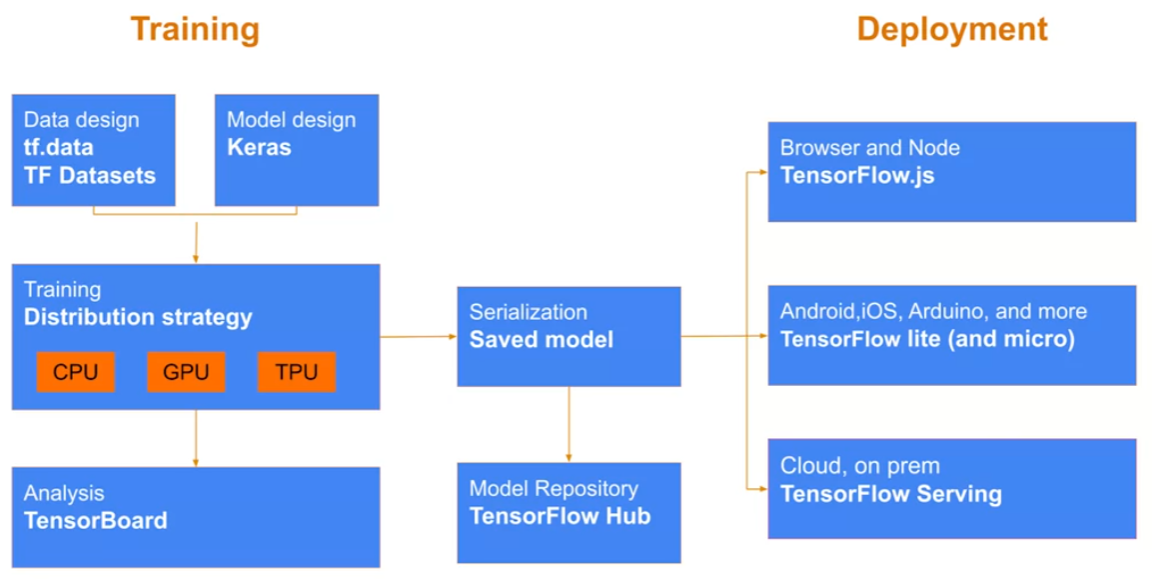

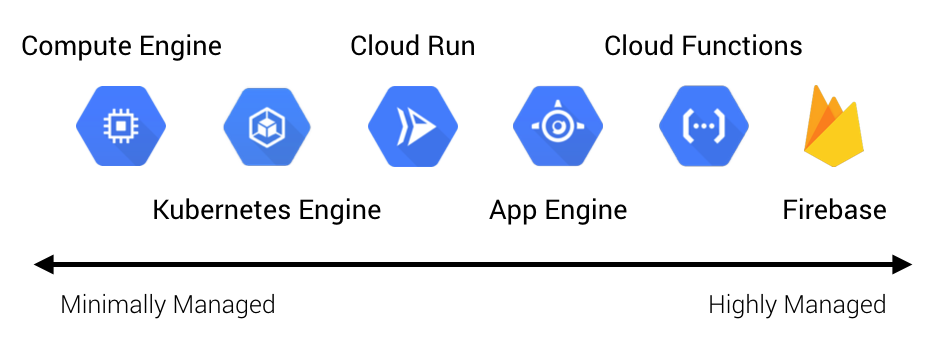

GCP’s Compute Options

GCP has a comprehensive set of compute options ranging from minimally managed VMs all the way to highly managed serverless backends. Below is the full spectrum of GCP’s compute services at the time of this writing. I’ll provide a brief overview of each of these services just to get the lay of the land. We’ll start from the highest level of abstraction and work our way down, and then we’ll home in on the serverless solutions.

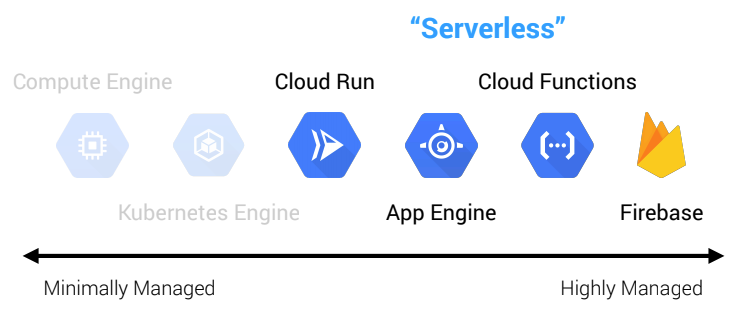

Serverless Options

- Cloud Run: serverless containers (CaaS)

- App Engine: serverless platforms

- PaaS (Platform as a Service): a service used to run web applications on a managed platform.

- App Engine (PaaS): deploys applications directly into a managed, auto-scaling environment.

- Cloud Functions: serverless functions (FaaS)

- Running code in response to events.

- Supports JavaScript, Python and Go.

- Billed in 100-millisecond increments.

- Firebase: serverless applications (BaaS)
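As a small worked example of the Cloud Functions billing granularity mentioned in the list above, the sketch below rounds an execution time to a 100 ms increment. It assumes the duration is rounded up to the next increment, which the notes do not state explicitly; the function name is hypothetical.

```python
import math


def billed_ms(duration_ms: float, increment_ms: int = 100) -> int:
    """Round an execution time up to the next billing increment (assumed round-up)."""
    if duration_ms < 0:
        raise ValueError("duration_ms must be non-negative")
    return math.ceil(duration_ms / increment_ms) * increment_ms


if __name__ == "__main__":
    for duration in (100, 101, 250):
        print(duration, "ms runs are billed as", billed_ms(duration), "ms")
```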

Apache Beam

Required ML infrastructure that allows you to train models and serve recommendations using the same data pipeline.

- With Cloud Dataflow, supports both batch and stream data

Virtual machine (VM)

An instance hosted on Google's infrastructure.

- Preemptible VMs

- Low-cost, short-duration VM option for batch jobs and fault-tolerant workloads.

- Can be terminated at any time and always terminate within 24 hours.

- Cannot be migrated to a regular VM and carry no SLA.

REST API

The AI Platform REST API provides RESTful services for managing jobs, models, and versions, and for making predictions with hosted models on Google Cloud.

You can use the Google APIs Client Library for Python to access the APIs. When using the client library, you use Python representations of the resources and objects used by the API. This is easier and requires less code than working directly with HTTP requests. We recommend the REST API for serving online predictions in particular.
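A minimal sketch of an online prediction call with the Google APIs Client Library for Python. It assumes the `google-api-python-client` package is installed and that application default credentials are configured; the project, model, and instance values are hypothetical. The client library is imported inside the function so that the small name-building helper can be used on its own.

```python
from typing import Any, Dict, List, Optional


def prediction_name(project: str, model: str, version: Optional[str] = None) -> str:
    """Build the resource name of a model, or of a specific model version."""
    name = f"projects/{project}/models/{model}"
    if version is not None:
        name += f"/versions/{version}"
    return name


def predict_online(
    project: str,
    model: str,
    instances: List[Dict[str, Any]],
    version: Optional[str] = None,
) -> List[Any]:
    """Send instances to a hosted model and return its predictions."""
    # Lazy import: requires google-api-python-client and valid credentials.
    from googleapiclient import discovery

    service = discovery.build("ml", "v1")
    response = (
        service.projects()
        .predict(
            name=prediction_name(project, model, version),
            body={"instances": instances},
        )
        .execute()
    )
    if "error" in response:
        raise RuntimeError(response["error"])
    return response["predictions"]


if __name__ == "__main__":
    # Prints only the resource name; calling predict_online needs a deployed model.
    print(prediction_name("my-project", "my_model", "v1"))
```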


Spark


Spark vs Hadoop


Caches: in-memory data stores that provide fast access to data.

Compute Engine - 3 availability policies: preemptibility, automatic restart, and on-host maintenance.

VPN

Connects your existing network to your Google Compute Engine network via an IPsec connection, or connects two different Google-managed VPN gateways.

CDN

DNS

Google Cloud CDN

Google Cloud CDN uses Google's globally distributed edge points of presence to cache HTTP(S) load balanced content close to your users.

Google Cloud DNS

Google Cloud DNS is a high-performance, resilient, global, fully managed DNS service that provides a RESTful API to publish and manage DNS records for your applications and services.

Monitoring

Service that collects performance data from GCP, AWS, and popular open source applications.

Logging

Service that stores, analyzes, and alerts on log data.

https://thecloudgirl.dev/images/Dataflow.jpg

https://thecloudgirl.dev/images/BigQuery.png

Cloud BigQuery

Fully managed data warehouse queried with SQL; well suited to data analytics and dashboards.

https://thecloudgirl.dev/images/GCS.png

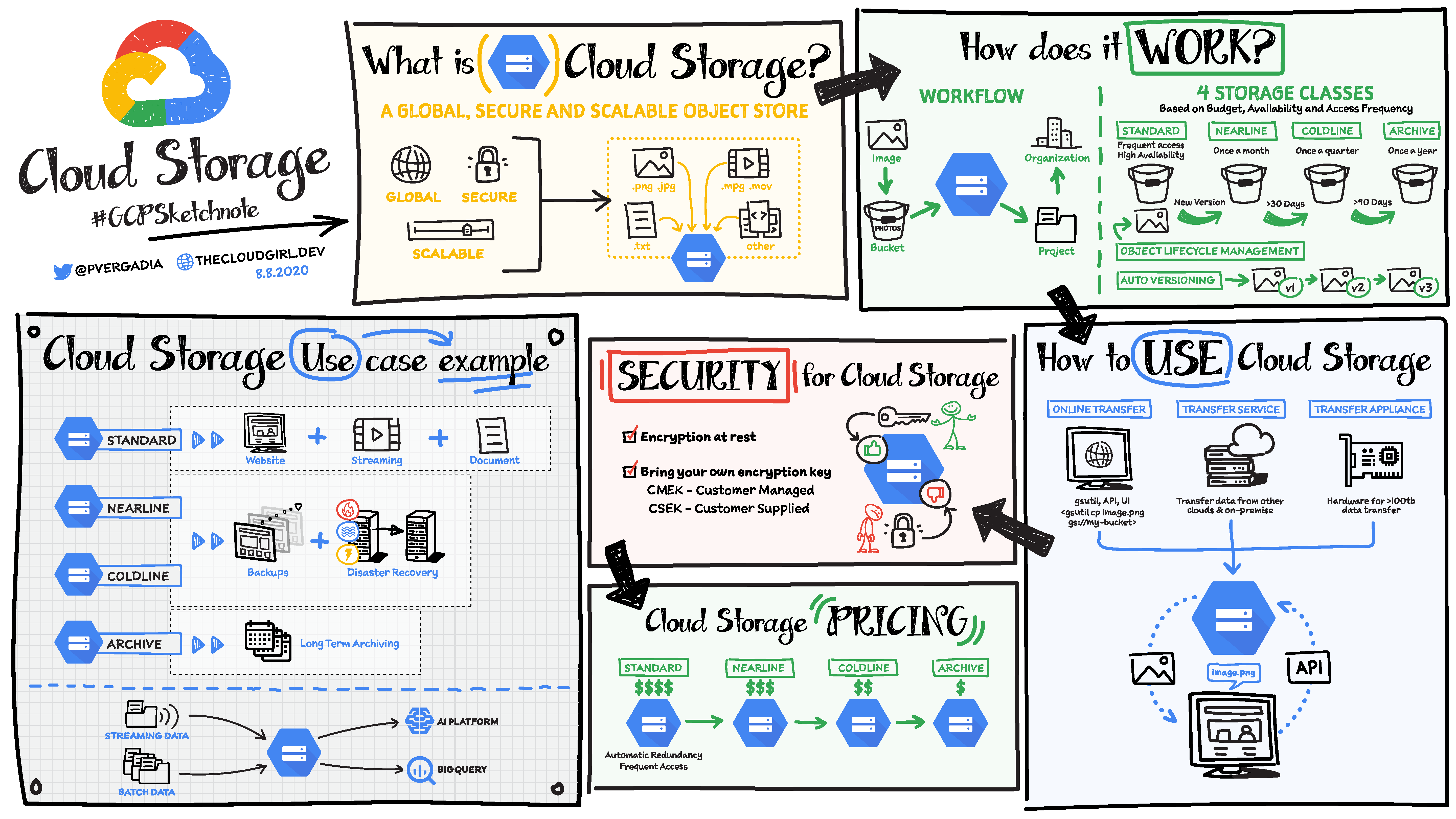

Cloud Storage

- GCP Serverless Object Storage

- Object (blob) storage in buckets with addressable URLs.

- Key

- No limit on size

- stores multiple copies for availability.

- Unstructured data : Binary media like images or movies.

- 4 Cloud Storage classes: multi-regional, regional, nearline, coldline.

- Nearline Storage Class: data accessed infrequently, no more than once a month. Good for backups.

- Coldline Storage Class: data accessed very infrequently, about once a year. Good for DR or archiving.

- Cloud Storage Bucket

- Niche Option

- High Collaboration, infinite space

- Not a Root Disk

- Global Accessibility

- Lower Performance
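To illustrate the "addressable URLs" point in the list above, the sketch below builds the two common ways of addressing an object: the `gs://` URI used by Google Cloud tools and the HTTPS path-style URL. The bucket and object names are hypothetical, and the HTTPS form only returns data if the caller is authorized to read the object.

```python
from urllib.parse import quote


def gs_uri(bucket: str, object_name: str) -> str:
    """Return the gs:// URI for an object."""
    return f"gs://{bucket}/{object_name}"


def https_url(bucket: str, object_name: str) -> str:
    """Return the path-style HTTPS URL, percent-encoding the object name."""
    # quote() keeps "/" as-is, so folder-like prefixes stay readable.
    return f"https://storage.googleapis.com/{bucket}/{quote(object_name)}"


if __name__ == "__main__":
    print(gs_uri("my-bucket", "images/my photo.png"))
    print(https_url("my-bucket", "images/my photo.png"))
```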

https://thecloudgirl.dev/images/pubsub.jpg

https://thecloudgirl.dev/images/Composer.jpg

--

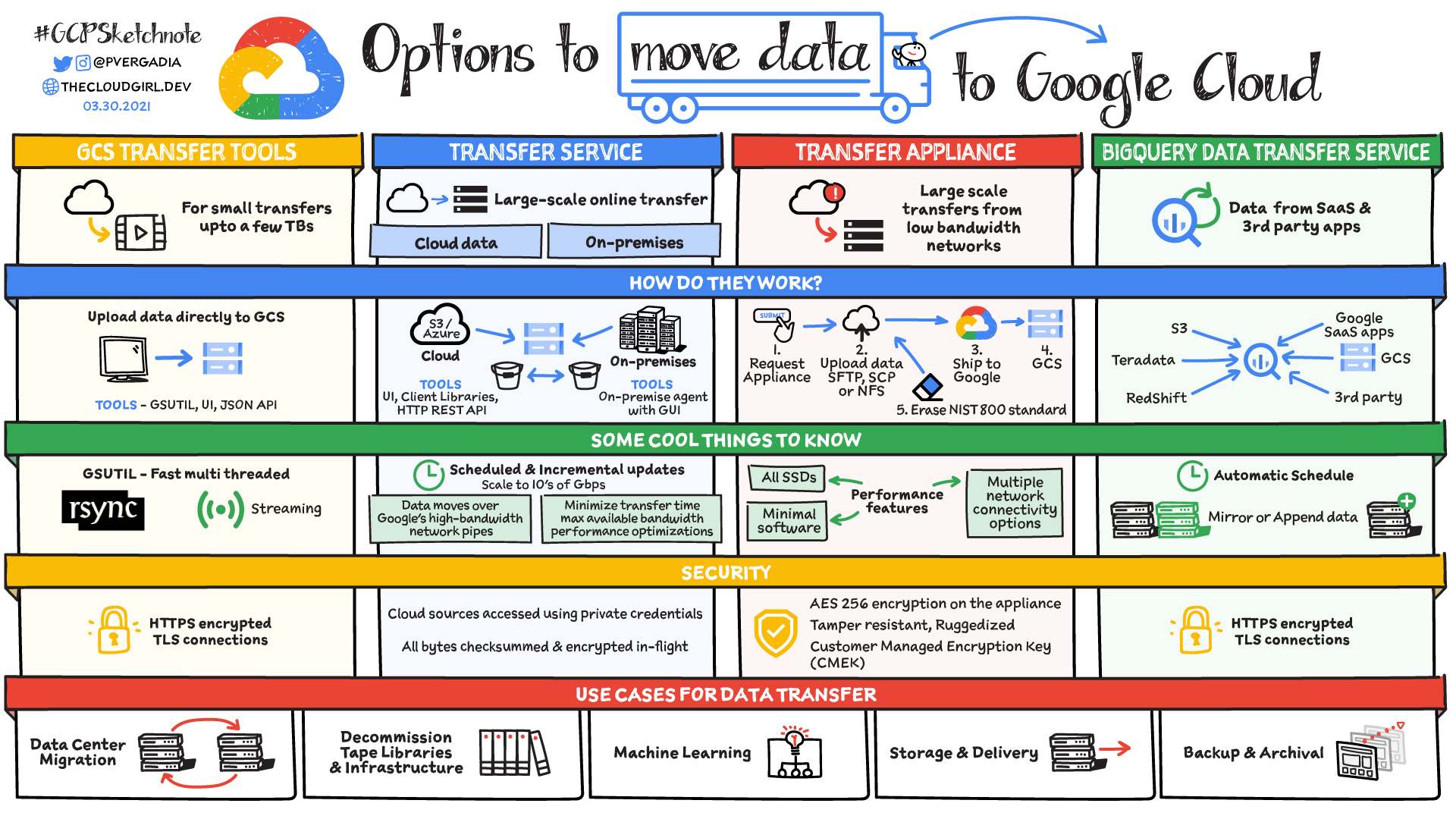

move data from somewhere to Google Cloud

migration

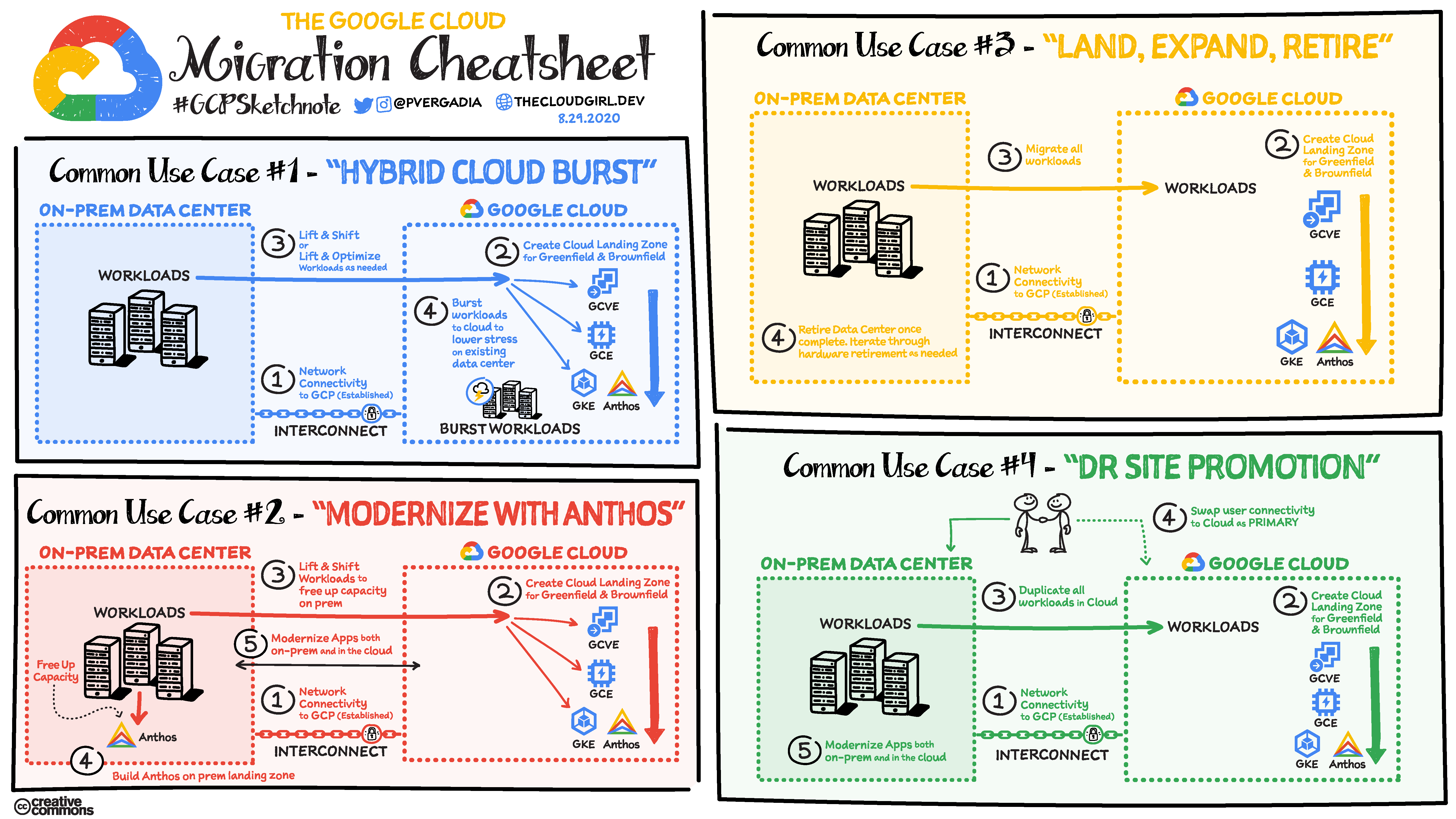

https://thecloudgirl.dev/images/MigrationCheatsheet.png

DataProc

https://thecloudgirl.dev/images/Dataproc.jpg

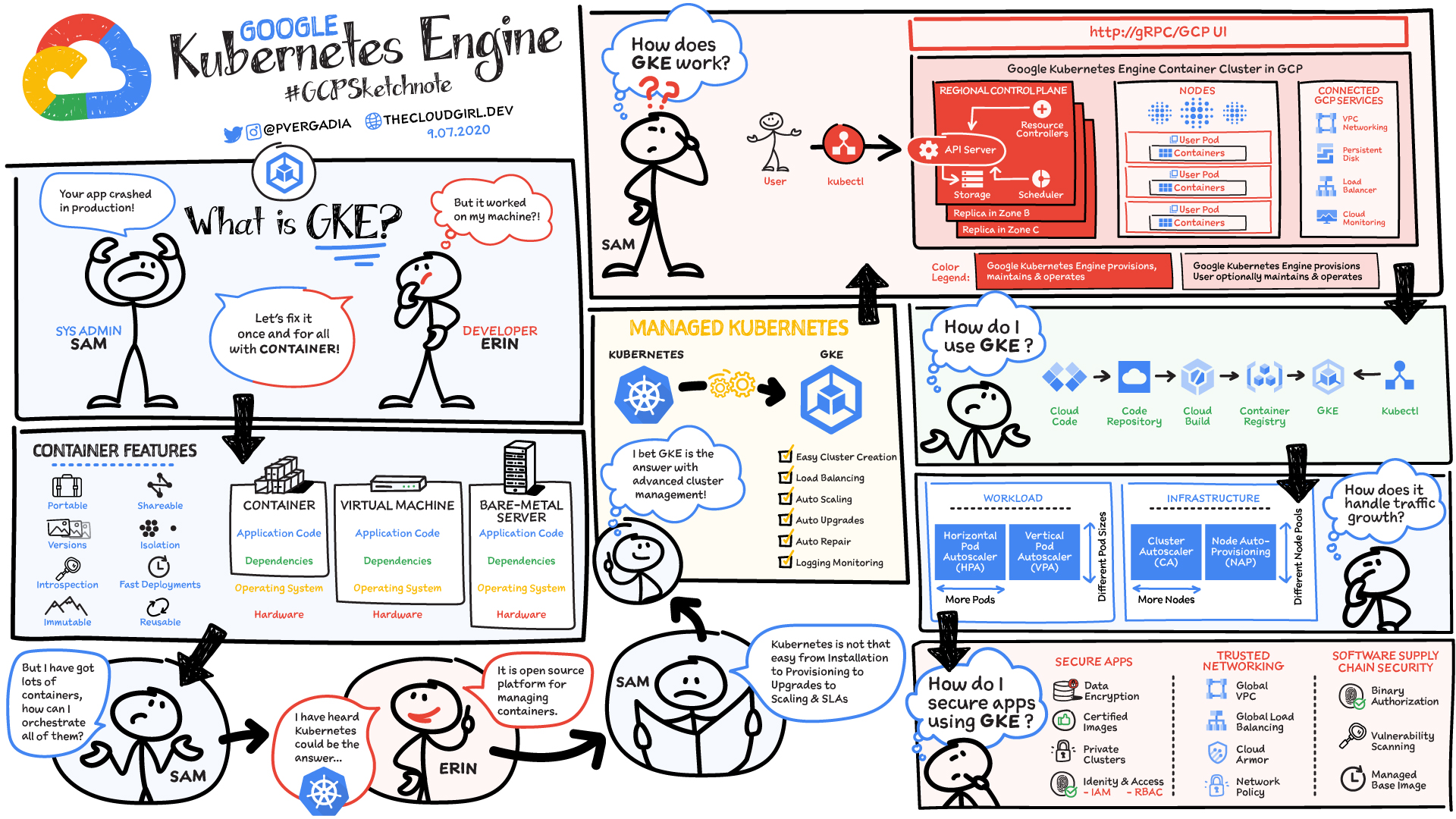

https://thecloudgirl.dev/images/GKE.jpg

A brief introduction to Google Vertex AI

Vertex AI brings together AI Platform & AutoML into a single interface.

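It is like an assorted gift box of snacks: a single service that lets you use Google Cloud's various machine learning services, such as AI Platform and AutoML, from one interface (UI / API).

Everything from building the dataset through creating, training, testing, and validating the model to deploying it as an endpoint that applications can call can be set up as a single pipeline.

You can build this from a Jupyter Notebook using the API, or a cloud engineer can develop directly in the UI using AutoML.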

GCP options for AI & ML

| AI Platform | Description |
|---|---|
| AI Platform Deep Learning VMs | Preconfigured VMs for deep learning |
| AI Platform Deep Learning Containers | Preconfigured containers for deep learning |
| AI Platform Notebooks | Managed JupyterLab notebook instances |
| AI Platform Pipelines | Hosted ML workflows |
| AI Platform Predictions | Autoscaled model serving |
| AI Platform Training | Distributed AI training |
| AI Platform | Managed platform for ML |

| AutoML | Description |
|---|---|
| AutoML Natural Language | Custom text models |
| AutoML Tables | Custom structured data models |
| AutoML Translation | Custom domain-specific translation |
| AutoML Video Intelligence | Custom video annotation models |
| AutoML Vision | Custom image models |

| ML API | Description |
|---|---|
| Cloud Natural Language API | Text parsing and analysis |
| Cloud Speech-To-Text API | Convert audio to text |
| Cloud Talent Solutions API | Job search with ML |
| Cloud Text-To-Speech API | Convert text to audio |
| Cloud TPU | Hardware acceleration for ML |
| Cloud Translation API | Language detection and translation |
| Cloud Video Intelligence API | Scene-level video annotation |
| Cloud Vision API | Image recognition and classification |
| Contact Center AI | AI in your contact center |
| Dialogflow | Create conversational interfaces |
| Document AI | Analyze, classify, search documents |
| Explainable AI | Understand ML model predictions |
| Recommendations AI | Create custom recommendations |
| Vision Product Search | Visual search for products |