The two key ideas of deep learning for computer vision, convolutional neural networks and backpropagation, were already well understood in 1989.

The Long Short-Term Memory (LSTM) algorithm, which is fundamental to deep learning for timeseries, was developed in 1997 and has barely changed since.

So why did deep learning only take off after 2012? What changed in these two decades?

In general, three technical forces are driving advances in machine learning:

- Hardware

- Datasets and benchmarks

- Algorithmic advances

1.3.1 Hardware

Throughout the 2000s, companies like NVIDIA and AMD invested billions of dollars in developing fast, massively parallel chips (graphics processing units [GPUs]).

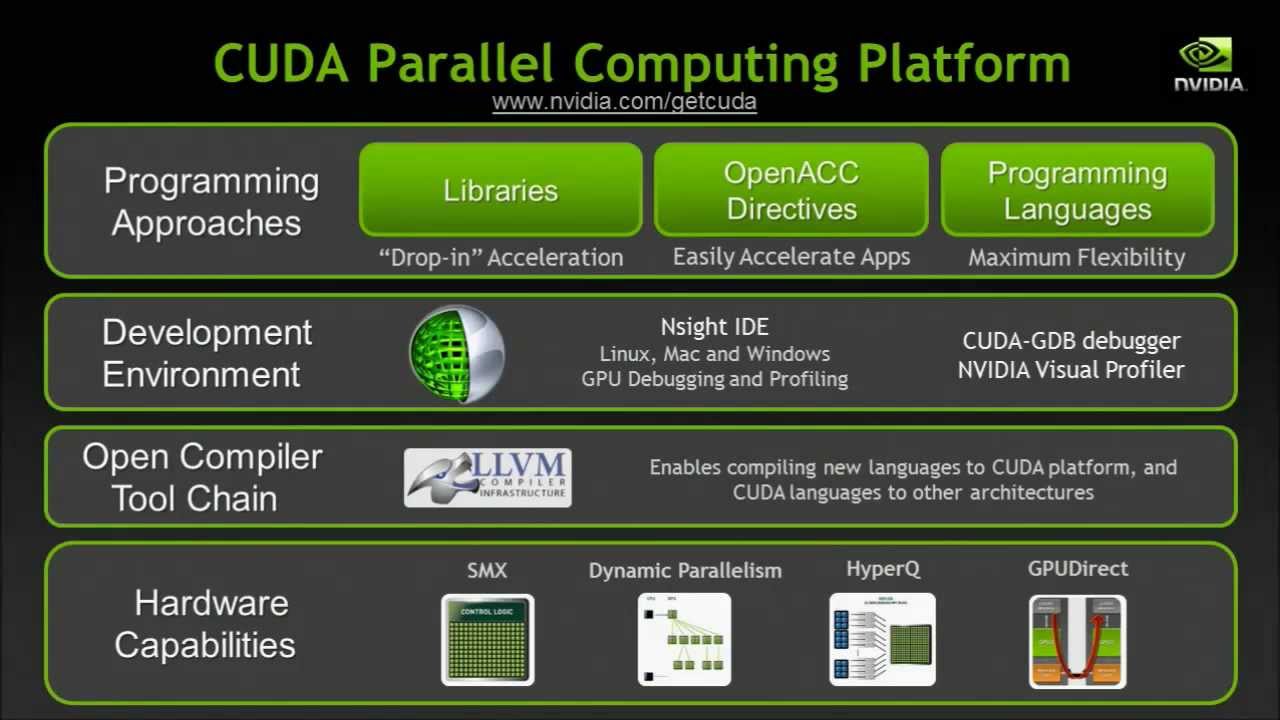

This investment came to benefit the scientific community when, in 2007, NVIDIA launched CUDA (https://developer.nvidia.com/about-cuda), a programming interface for its line of GPUs.

Deep neural networks, consisting mostly of many small matrix multiplications, are highly parallelizable, and around 2011 some researchers began to write CUDA implementations of neural nets.

Today, the NVIDIA TITAN X, a gaming GPU that cost $1,000 at the end of 2015, can deliver a peak of 6.6 TFLOPS in single precision: 6.6 trillion float32 operations per second. That’s about 350 times more than what you can get out of a modern laptop. On a TITAN X, it takes only a couple of days to train an ImageNet model.
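As a quick sanity check on these figures, the implied laptop throughput can be worked out from the two numbers quoted above. This is only back-of-the-envelope arithmetic; the variable names are illustrative and the result is an implied estimate, not a measured benchmark.

```python
# Figures quoted in the text above.
titan_x_flops = 6.6e12      # peak single-precision throughput of the TITAN X
speedup_vs_laptop = 350     # "about 350 times more" than a modern laptop

# Implied laptop throughput (an estimate derived from the two figures).
laptop_flops = titan_x_flops / speedup_vs_laptop
laptop_gflops = laptop_flops / 1e9

print(f"Implied laptop throughput: {laptop_gflops:.1f} GFLOPS")
# prints "Implied laptop throughput: 18.9 GFLOPS"
```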

1.3.2 Data

User-generated image tags on Flickr, for instance, have been a treasure trove of data for computer vision. So are YouTube videos. And Wikipedia is a key dataset for natural-language processing.

1.3.3 Algorithms

The key obstacle to training deep networks was gradient propagation through deep stacks of layers: the feedback signal used to train neural networks would fade away as the number of layers increased.
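The fading feedback signal can be illustrated with a toy model. The sketch below assumes a stack of scalar sigmoid layers with a shared weight of 1.0, which is a deliberate simplification and not how real networks are built. The function name `gradient_through_stack` and its parameters are hypothetical. Because the derivative of the sigmoid is at most 0.25, the backpropagated gradient in this setup is bounded by 0.25 raised to the depth.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def gradient_through_stack(depth, x=0.5, weight=1.0):
    """Return d(output)/d(input) for `depth` chained scalar sigmoid layers."""
    # Forward pass: keep each layer's output for the backward pass.
    activations = []
    a = x
    for _ in range(depth):
        a = sigmoid(weight * a)
        activations.append(a)

    # Backward pass (chain rule): each layer contributes the factor
    # weight * sigmoid'(z), where sigmoid'(z) = a * (1 - a) <= 0.25.
    grad = 1.0
    for a in reversed(activations):
        grad *= weight * a * (1.0 - a)
    return grad

for depth in (1, 5, 10, 20):
    print(depth, gradient_through_stack(depth))
```

The printed gradient shrinks geometrically with depth, which is the effect the improvements listed next were meant to counter.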

This changed around 2009–2010 with the advent of several simple but important algorithmic improvements that allowed for better gradient propagation:

- Better activation functions for neural layers

- Better weight-initialization schemes, starting with layer-wise pretraining, which was quickly abandoned

- Better optimization schemes, such as RMSProp and Adam
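To make the last item concrete, here is a minimal sketch of the RMSProp update rule as it is commonly described: keep a running average of squared gradients and divide each step by its square root. The text above does not give the formula, so the function name `rmsprop_minimize`, the hyperparameter values, and the one-dimensional test problem are all assumptions chosen for illustration.

```python
import math

def rmsprop_minimize(grad_fn, w0, lr=0.01, rho=0.9, eps=1e-8, steps=2000):
    """Minimize a 1-D function with RMSProp, given its gradient function."""
    w = w0
    v = 0.0  # running average of squared gradients
    for _ in range(steps):
        g = grad_fn(w)
        v = rho * v + (1.0 - rho) * g * g
        # Scale the step by the recent gradient magnitude.
        w -= lr * g / (math.sqrt(v) + eps)
    return w

# Illustrative problem: f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w_final = rmsprop_minimize(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_final)  # ends close to the minimum at w = 3
```

Because each step is normalized by the running gradient magnitude, the step size stays roughly on the order of `lr` regardless of how large or small the raw gradient is. With a constant learning rate, the iterate hovers near the minimum rather than converging to it exactly.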

RBM (Restricted Boltzmann Machine)

DBN (Deep Belief Network)

1.3.6 Will it last?

Simplicity

Deep learning removes the need for feature engineering, replacing complex, brittle, engineering-heavy pipelines with simple, end-to-end trainable models.

Scalability

Deep learning is highly amenable to parallelization on GPUs or TPUs. In addition, deep-learning models are trained by iterating over small batches of data, allowing them to be trained on datasets of arbitrary size.
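The batch-by-batch training pattern can be sketched without any deep-learning library. In the example below, a generator yields fixed-size batches from a stream, and a one-parameter model is fit with mini-batch gradient descent, so only one batch is held in memory at a time. The names `iter_batches`, `fit_slope`, and `synthetic_stream`, the learning rate, and the synthetic data are hypothetical choices made for illustration; a real model would have many parameters but follow the same loop.

```python
from itertools import islice

def iter_batches(stream, batch_size):
    """Yield lists of up to `batch_size` items without loading the whole stream."""
    it = iter(stream)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch

def fit_slope(stream, batch_size=32, lr=0.1):
    """Fit y ~ w * x by mini-batch gradient descent on the squared error."""
    w = 0.0
    for batch in iter_batches(stream, batch_size):
        # Mean gradient of (w * x - y)^2 with respect to w over the batch.
        grad = sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)
        w -= lr * grad
    return w

def synthetic_stream(n):
    """Lazily generate n noiseless samples of y = 2 * x (illustrative data)."""
    for i in range(n):
        x = (i % 10) / 10.0
        yield x, 2.0 * x

w = fit_slope(synthetic_stream(10_000))
print(w)  # close to the true slope of 2.0
```

Memory use depends on `batch_size`, not on the length of the stream, which is why the same loop works whether the dataset has ten thousand samples or far more.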

Versatility and reusability

Unlike many prior machine-learning approaches, deep-learning models can be trained on additional data without restarting from scratch. Furthermore, trained deep-learning models are repurposable and thus reusable: for instance, it’s possible to take a deep-learning model trained for image classification and drop it into a video-processing pipeline.