NumPy, Pandas만 이용해서 경사 하강법을 직접 구현해보자

강사님이 함수로 만든 코드를 참고하면서 만들어보기

코드에 오류가 있을 수 있음

사전 작업

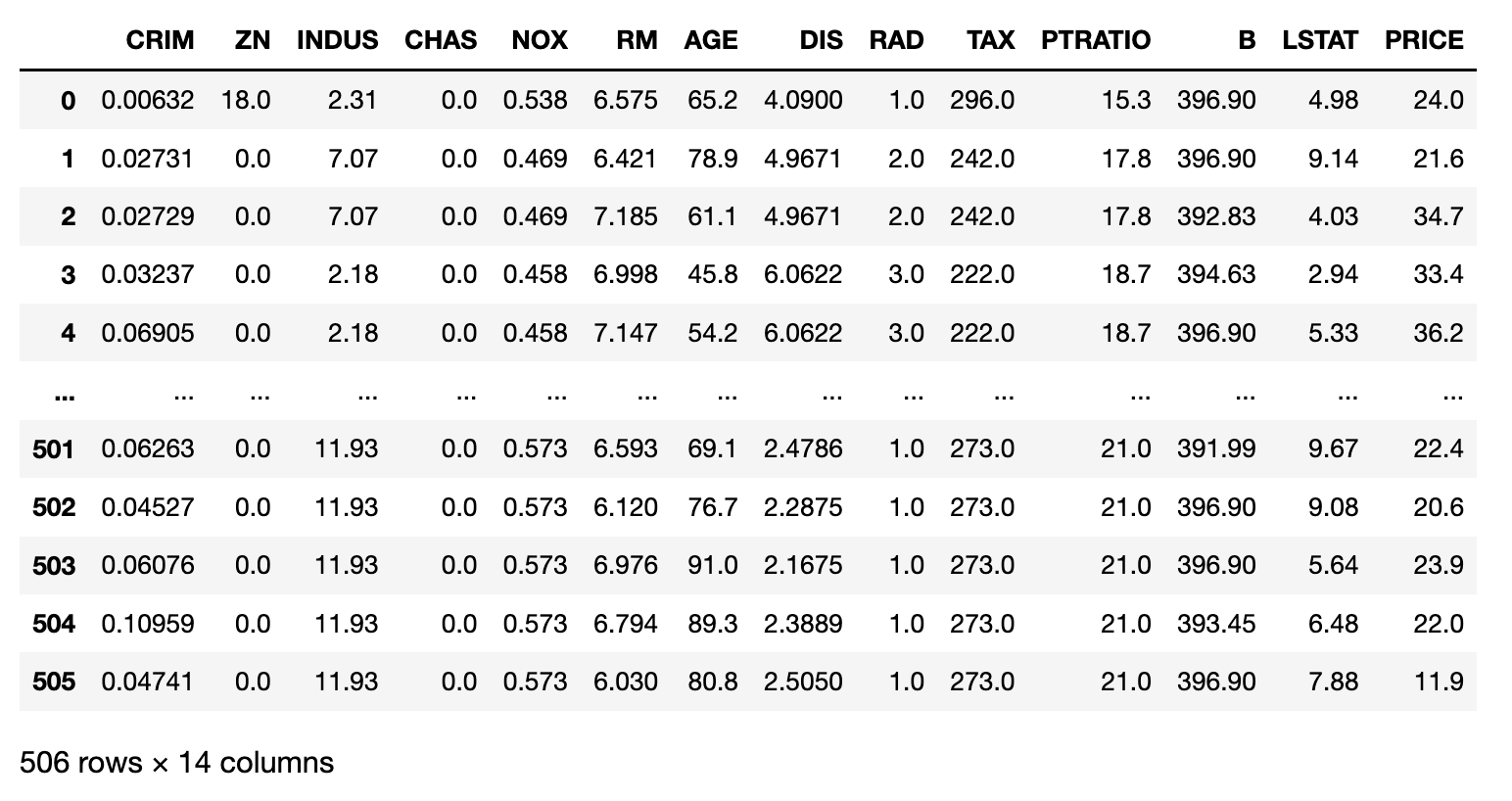

scikit-learn에 기본 탑재되어 있는 보스톤 집값 예측 데이터를 사용함

이 데이터가 scikit-learn 1.2에서 삭제 예정이라고 경고 뜸

# 예시로 사용할 보스톤 집값 데이터 로드

import numpy as np

import pandas as pd

from sklearn.datasets import load_boston

boston = load_boston()

boston_df = pd.DataFrame(boston.data, columns = boston.feature_names)

boston_df['PRICE'] = boston.target

display(boston_df)PRICE(집값):

RM(방의 갯수):

LSTAT (하위 계층 비율):

방의 갯수와 하위 계층 비율로 집값을 예측하는

다중 선형 회귀 (Multiple Linear Regression)를 예로 들자

Gradient Descent 업데이트 식은 다음과 같다.

: bias

: 전체 데이터 건수

각각 에 대한 편미분으로 식이 도출되는데 도출 과정은 생략

신경망은 데이터 스케일링이 선행되어야 함

from sklearn.preprocessing import MinMaxScaler

scaler = MinMaxScaler()

X = scaler.fit_transform(boston_df[['RM', 'LSTAT']])

Xarray([[0.57750527, 0.08967991],

[0.5479977 , 0.2044702 ],

[0.6943859 , 0.06346578],

...,

[0.65433991, 0.10789183],

[0.61946733, 0.13107064],

[0.47307913, 0.16970199]])첫 번째 열이 RM, 두 번째 열이 LSTAT

(Batch) Gradient Descent

# (Batch) Gradient Descent

w0 = np.zeros((1,)) # bias

w1 = np.zeros((1,)) # RM weight

w2 = np.zeros((1,)) # LSTAT weight

learning_rate = 0.01

x1 = X[:, 0] # RM

x2 = X[:, 1] # LSTAT

y = boston_df['PRICE']

iteration = 3000

N = boston_df.shape[0] # 총 행 수

for i in range(iteration):

# Batch (전체 데이터 활용)

y_predict = w0 + w1*x1 + w2*x2

diff = y - y_predict # y - y_hat

w0 = w0 - learning_rate * (-2/N) * (np.dot(np.ones((N,)).T, diff))

w1 = w1 - learning_rate * (-2/N) * (np.dot(x1.T, diff))

w2 = w2 - learning_rate * (-2/N) * (np.dot(x2.T, diff))

# loss 계산 용 (전체 데이터 활용)

y_predict_full = w0 + w1*x1 + w2*x2

diff_full = y - y_predict_full

mse = np.mean(np.square(diff_full))

print('Epoch: ', i+1, '/', iteration)

print('w0:', w0, 'w1:', w1, 'w2:', w2, 'mse(loss):', mse)

Epoch: 1 / 3000

w0: [0.45065613] w1: [0.252369] w2: [0.10914761] mse(loss): 564.6567515182813

Epoch: 2 / 3000

w0: [0.8890071] w1: [0.4982605] w2: [0.21458377] mse(loss): 538.6424811965488

Epoch: 3 / 3000

w0: [1.315389] w1: [0.73785103] w2: [0.31641055] mse(loss): 514.0245946883915

Epoch: 4 / 3000

w0: [1.73012873] w1: [0.97131229] w2: [0.41472723] mse(loss): 490.72786471250197

Epoch: 5 / 3000

w0: [2.13354428] w1: [1.1988113] w2: [0.50963037] mse(loss): 468.68111722304553

...

Epoch: 2996 / 3000

w0: [16.41140273] w1: [24.56270694] w2: [-22.20531815] mse(loss): 30.702224895832693

Epoch: 2997 / 3000

w0: [16.41131816] w1: [24.5637451] w2: [-22.20683464] mse(loss): 30.701886587695054

Epoch: 2998 / 3000

w0: [16.41123358] w1: [24.56478239] w2: [-22.20834962] mse(loss): 30.701548918086537

Epoch: 2999 / 3000

w0: [16.41114901] w1: [24.56581881] w2: [-22.20986309] mse(loss): 30.701211885791857

Epoch: 3000 / 3000

w0: [16.41106442] w1: [24.56685437] w2: [-22.21137505] mse(loss): 30.70087548959799MSE를 30.7009까지 줄임

처음엔y_predict를 가중치 업데이트와 loss 계산에 동시에 사용했는데,

그러니까 loss 계산에서 1번 반복이 반영이 안 돼서y_predict_full로 분리함

이 업데이트 된 로 집값을 예측 후 실제값과 비교해보자

predict_price = w0 + X[:, 0] * w1 + X[:, 1] * w2

boston_df['PREDICT_PRICE'] = predict_price

boston_df.head()좀 차이가 나긴 하는데 실제값과 어느정도 비슷하다.

scikit-learn의 MSE 평가지표를 출력해서 위에서 출력한 MSE와 같은지 확인하자

from sklearn.metrics import mean_squared_error

print(mean_squared_error(boston_df['PREDICT_PRICE'], boston_df['PRICE']))30.70087548959798동일하게 잘 나옴

Stochastic Gradient Descent

# Stochastic Gradient Descent

w0 = np.zeros((1,)) # bias

w1 = np.zeros((1,)) # RM weight

w2 = np.zeros((1,)) # LSTAT weight

learning_rate = 0.01

x1 = X[:, 0] # RM

x2 = X[:, 1] # LSTAT

iteration = 3000

y = boston_df['PRICE']

for i in range(iteration):

random_index = np.random.choice(len(y), 1)

x1_sgd = x1[random_index]

x2_sgd = x2[random_index]

y_sgd = y[random_index]

N = y_sgd.shape[0] # 1

y_predict = w0 + w1*x1_sgd + w2*x2_sgd

diff = y_sgd - y_predict # y - y_hat

w0 = w0 - learning_rate * (-2/N) * (np.dot(np.ones((N,)).T, diff))

w1 = w1 - learning_rate * (-2/N) * (np.dot(x1_sgd.T, diff))

w2 = w2 - learning_rate * (-2/N) * (np.dot(x2_sgd.T, diff))

# loss 계산 용 (전체 데이터 활용)

y_predict_full = w0 + w1*x1 + w2*x2

diff_full = y - y_predict_full

mse = np.mean(np.square(diff_full))

print('Epoch: ', i+1, '/', iteration)

print('w0:', w0, 'w1:', w1, 'w2:', w2, 'mse(loss):', mse)Epoch: 1 / 3000

w0: [0.494] w1: [0.22764323] w2: [0.11450331] mse(loss): 563.3093300205442

Epoch: 2 / 3000

w0: [0.94311372] w1: [0.47040042] w2: [0.22145285] mse(loss): 536.9347841777011

Epoch: 3 / 3000

w0: [1.33083812] w1: [0.64453838] w2: [0.32544516] mse(loss): 515.4746418933317

Epoch: 4 / 3000

w0: [1.68502494] w1: [0.81216464] w2: [0.3850627] mse(loss): 496.4686760142888

Epoch: 5 / 3000

...

Epoch: 2995 / 3000

w0: [16.57777786] w1: [23.60035309] w2: [-22.52582218] mse(loss): 30.952656843339454

Epoch: 2996 / 3000

w0: [16.59119304] w1: [23.60601064] w2: [-22.52260535] mse(loss): 30.93785942342592

Epoch: 2997 / 3000

w0: [16.65359669] w1: [23.6428502] w2: [-22.50889859] mse(loss): 30.8720050225946

Epoch: 2998 / 3000

w0: [16.58024882] w1: [23.59803197] w2: [-22.52106251] mse(loss): 30.951406127922308

Epoch: 2999 / 3000

w0: [16.38677983] w1: [23.50012977] w2: [-22.58934255] mse(loss): 31.24985004163789

Epoch: 3000 / 3000

w0: [16.5376905] w1: [23.5952911] w2: [-22.51417871] mse(loss): 30.990274393368832loss 계산 부분이랑 Stochastic 돌리는 부분을 분리하는 게 좀 헷갈렸다.

훈련할 때는 랜덤으로 1건씩만 뽑아서 하고, loss 계산은 전체 데이터로 하기!

확실히 Stochastic은 loss가 왔다갔다하긴 함

그래도 1건씩 뽑아서 훈련했는데 최종 loss가 Batch 방법과 크게 차이가 안 남

마찬가지로 scikit-learn으로 MSE 출력해서 같은지 확인하자

from sklearn.metrics import mean_squared_error

predict_price = w0 + X[:, 0] * w1 + X[:, 1] * w2

boston_df['PREDICT_PRICE'] = predict_price

print(mean_squared_error(boston_df['PREDICT_PRICE'], boston_df['PRICE']))30.99027439336883동일하게 잘 나옴

Mini-Batch Gradient Descent

- Mini-Batch는 원래 Batch-size만큼 랜덤으로 복원 추출을 하는 방식이다.

방법 (1) - 그런데 Keras를 포함한 대부분의 프레임워크에서는 앞에서부터 순차적으로 Batch-size만큼

잘라서 각 뭉탱이를 모두 사용함방법 (2)

(데이터가 120건인데 Batch-size가 30이면 30건짜리 4뭉탱이를 각각 사용하는게 1 epoch)

두 방식 모두 구현해보자

방법 (1)

- 이 방법은 복원 추출을 하기 때문에 여러번 뽑히는 데이터도 있고, 아예 안 뽑히는 데이터도 있음

# Mini-Batch Gradient Descent (1)

w0 = np.zeros((1,)) # bias

w1 = np.zeros((1,)) # RM weight

w2 = np.zeros((1,)) # LSTAT weight

learning_rate = 0.01

x1 = X[:, 0] # RM

x2 = X[:, 1] # LSTAT

iteration = 3000

batch_size = 30 # 추가

y = boston_df['PRICE']

for i in range(iteration):

# Mini-Batch

batch_indexes = np.random.choice(len(y), 30)

x1_batch = x1[batch_indexes]

x2_batch = x2[batch_indexes]

y_batch = y[batch_indexes]

N = y_batch.shape[0] # batch_size

y_predict = w0 + w1*x1_batch + w2*x2_batch

diff = y_batch - y_predict # y - y_hat

w0 = w0 - learning_rate * (-2/N) * (np.dot(np.ones((N,)).T, diff))

w1 = w1 - learning_rate * (-2/N) * (np.dot(x1_batch.T, diff))

w2 = w2 - learning_rate * (-2/N) * (np.dot(x2_batch.T, diff))

# loss 계산 용 (전체 데이터 활용)

y_predict_full = w0 + w1*x1 + w2*x2

diff_full = y - y_predict_full

mse = np.mean(np.square(diff_full))

print('Epoch: ', i+1, '/', iteration)

print('w0:', w0, 'w1:', w1, 'w2:', w2, 'mse(loss):', mse)Epoch: 1 / 3000

w0: [0.4244] w1: [0.21682127] w2: [0.1027817] mse(loss): 566.7511001829699

Epoch: 2 / 3000

w0: [0.86198816] w1: [0.46062254] w2: [0.20901749] mse(loss): 540.7553263637077

Epoch: 3 / 3000

w0: [1.27403111] w1: [0.67765548] w2: [0.32001439] mse(loss): 517.1149837754973

Epoch: 4 / 3000

w0: [1.69559599] w1: [0.90760566] w2: [0.41307991] mse(loss): 493.5906542485489

Epoch: 5 / 3000

w0: [2.04841005] w1: [1.08797817] w2: [0.50727928] mse(loss): 474.521342621337

...

Epoch: 2995 / 3000

w0: [16.30161267] w1: [24.76956281] w2: [-22.39670035] mse(loss): 30.657821461502913

Epoch: 2996 / 3000

w0: [16.3293612] w1: [24.77904231] w2: [-22.38690985] mse(loss): 30.655505042693452

Epoch: 2997 / 3000

w0: [16.32901428] w1: [24.77595415] w2: [-22.38344501] mse(loss): 30.656278103405413

Epoch: 2998 / 3000

w0: [16.31479088] w1: [24.77599589] w2: [-22.39369972] mse(loss): 30.65597206068832

Epoch: 2999 / 3000

w0: [16.31466988] w1: [24.78145264] w2: [-22.40261359] mse(loss): 30.654322044893856

Epoch: 3000 / 3000

w0: [16.32877266] w1: [24.79229658] w2: [-22.40397188] mse(loss): 30.652045265785286SGD 코드에서 1건 뽑는 부분을 Batch-size만큼 뽑는 거로 수정해주면 됨

loss가 안정적으로 줄어드는 모습이다.

scikit-learn으로 MSE를 출력해서 비교해보자

from sklearn.metrics import mean_squared_error

predict_price = w0 + X[:, 0] * w1 + X[:, 1] * w2

boston_df['PREDICT_PRICE'] = predict_price

print(mean_squared_error(boston_df['PREDICT_PRICE'], boston_df['PRICE']))30.65204526578529똑같이 잘 나온다.

방법 (2)

# Mini-Batch Gradient Descent (2)

w0 = np.zeros((1,)) # bias

w1 = np.zeros((1,)) # RM weight

w2 = np.zeros((1,)) # LSTAT weight

learning_rate = 0.01

x1 = X[:, 0] # RM

x2 = X[:, 1] # LSTAT

iteration = 3000

batch_size = 30 # 추가

y = boston_df['PRICE']

for i in range(iteration):

# Mini-Batch

for batch_step in range(0, len(y), batch_size):

# 처음은 0:30, 두번째는 30:60, ..., 480:510 -> 마지막 초과해도 오류 안 남 (찾는 곳까지 찾음)

x1_batch = x1[batch_step:batch_step + batch_size]

x2_batch = x2[batch_step:batch_step + batch_size]

y_batch = y[batch_step:batch_step + batch_size]

N = y_batch.shape[0] # batch_size

y_predict = w0 + w1*x1_batch + w2*x2_batch

diff = y_batch - y_predict # y - y_hat

w0 = w0 - learning_rate * (-2/N) * (np.dot(np.ones((N,)).T, diff))

w1 = w1 - learning_rate * (-2/N) * (np.dot(x1_batch.T, diff))

w2 = w2 - learning_rate * (-2/N) * (np.dot(x2_batch.T, diff))

# loss 계산 용 (전체 데이터 활용)

y_predict_full = w0 + w1*x1 + w2*x2

diff_full = y - y_predict_full

mse = np.mean(np.square(diff_full))

print('Epoch: ', i+1, '/', iteration)

print('w0:', w0, 'w1:', w1, 'w2:', w2, 'mse(loss):', mse)Epoch: 1 / 3000

w0: [0.41353333] w1: [0.21118173] w2: [0.11625443] mse(loss): 567.2250927629611

Epoch: 1 / 3000

w0: [0.83382878] w1: [0.42504999] w2: [0.21151116] mse(loss): 542.789511284826

Epoch: 1 / 3000

w0: [1.26864819] w1: [0.65529552] w2: [0.29207843] mse(loss): 518.1392189598423

Epoch: 1 / 3000

w0: [1.69338585] w1: [0.89614794] w2: [0.39426111] mse(loss): 494.1211563203328

Epoch: 1 / 3000

...

Epoch: 3000 / 3000

w0: [15.84308421] w1: [26.46770533] w2: [-23.25434094] mse(loss): 30.525829459232945

Epoch: 3000 / 3000

w0: [15.74781122] w1: [26.41812368] w2: [-23.29792025] mse(loss): 30.51332599885515

Epoch: 3000 / 3000

w0: [15.70443764] w1: [26.39258715] w2: [-23.31563948] mse(loss): 30.51978536965735

Epoch: 3000 / 3000

w0: [15.67860952] w1: [26.37572491] w2: [-23.31705219] mse(loss): 30.526893374948802결과를 보니 1 epoch에 총 17개의 뭉탱이가 돌아간다.

scikit-learn의 MSE와 같은지 확인하고 마무리하자

from sklearn.metrics import mean_squared_error

predict_price = w0 + X[:, 0] * w1 + X[:, 1] * w2

boston_df['PREDICT_PRICE'] = predict_price

print(mean_squared_error(boston_df['PREDICT_PRICE'], boston_df['PRICE']))30.526893374948802똑같이 잘 나왔다!

코드를 참고하면서 짜긴 했는데, 그래도 완성해서 뿌듯하다.