EFK Installation Environment

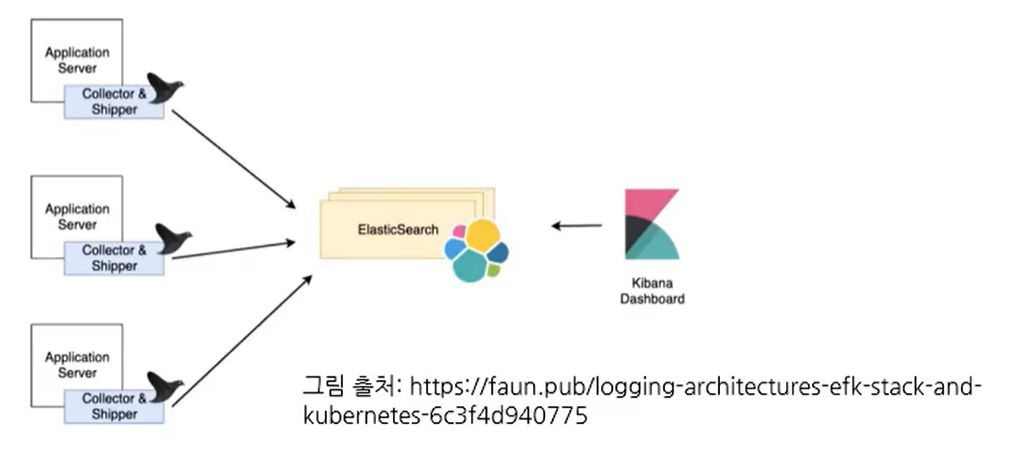

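- Running EFK on Kubernetes lets you collect the logs of every Docker container and then visualize and analyze them.

- The Kubernetes cluster needs at least 8 GB of memory.

- The Elastic Stack usually relies on Beats or Logstash for log collection.

- On Kubernetes, collecting logs with Fluentd is the popular choice.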

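E: Elasticsearch, the database and search engine

F: Fluentd, the data collector and pipeline (it takes the place of Logstash, the "L" in ELK)

K: Kibana, which plays the role Grafana would, providing dashboards (SIEM, machine learning, alerts)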
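The Elasticsearch and Kibana manifests in these notes set `namespace: elastic`, but none of them creates that namespace, so applying them to a fresh cluster would fail. A minimal sketch of a manifest that could be applied first is shown here; the file name `namespace.yaml` is an assumption and does not come from the original notes.

```yaml
# namespace.yaml (hypothetical file name)
# Creates the namespace that elasticsearch.yaml and kibana.yaml refer to.
apiVersion: v1
kind: Namespace
metadata:
  name: elastic
```

Fluentd runs in `kube-system`, which already exists on every cluster, so it needs no equivalent.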

# elasticsearch.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: elasticsearch
  namespace: elastic
  labels:
    app: elasticsearch
spec:
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      containers:
        - name: elasticsearch
          image: elastic/elasticsearch:7.14.1
          env:
            # Run as a single-node cluster (no multi-node discovery)
            - name: discovery.type
              value: single-node
          ports:
            - containerPort: 9200   # REST API
            - containerPort: 9300   # node-to-node transport
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: elasticsearch
  name: elasticsearch-svc
  namespace: elastic
spec:
  type: NodePort   # required for the nodePort fields below to be accepted
  ports:
    - name: elasticsearch-rest
      nodePort: 30920
      port: 9200
      protocol: TCP
      targetPort: 9200
    - name: elasticsearch-nodecom
      nodePort: 30930
      port: 9300
      protocol: TCP
      targetPort: 9300
  selector:
    app: elasticsearch

# fluentd.yaml

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluentd   # ClusterRole is cluster-scoped, so it takes no namespace
rules:
  # Read-only access to pod and namespace metadata
  - apiGroups:
      - ""
    resources:
      - pods
      - namespaces
    verbs:
      - get
      - list
      - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd
roleRef:
  kind: ClusterRole
  name: fluentd
  apiGroup: rbac.authorization.k8s.io
subjects:
  - kind: ServiceAccount
    name: fluentd
    namespace: kube-system
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
  namespace: kube-system
  labels:
    k8s-app: fluentd-logging
    version: v1
spec:
  selector:
    matchLabels:
      k8s-app: fluentd-logging
      version: v1
  template:
    metadata:
      labels:
        k8s-app: fluentd-logging
        version: v1
    spec:
      serviceAccountName: fluentd
      tolerations:
        # Also schedule onto master nodes so their logs are collected
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      containers:
        - name: fluentd
          image: fluent/fluentd-kubernetes-daemonset:v1-debian-elasticsearch
          env:
            # <service>.<namespace> of the Elasticsearch Service
            - name: FLUENT_ELASTICSEARCH_HOST
              value: elasticsearch-svc.elastic
            - name: FLUENT_ELASTICSEARCH_PORT
              value: "9200"
            - name: FLUENT_ELASTICSEARCH_SCHEME
              value: http
          resources:
            limits:
              memory: 200Mi
            requests:
              cpu: 100m
              memory: 200Mi
          volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
      terminationGracePeriodSeconds: 30
      volumes:
        # Host directories where the node and Docker write container logs
        - name: varlog
          hostPath:
            path: /var/log
        - name: varlibdockercontainers
          hostPath:
            path: /var/lib/docker/containers

# kibana.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: elastic
  labels:
    app: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
        - name: kibana
          image: elastic/kibana:7.14.1
          env:
            - name: SERVER_NAME
              value: kibana.kubernetes.example.com
            # Elasticsearch Service in the same namespace
            - name: ELASTICSEARCH_HOSTS
              value: http://elasticsearch-svc:9200
          ports:
            - containerPort: 5601
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: kibana
  name: kibana-svc
  namespace: elastic
spec:
  type: NodePort   # required for the nodePort field below to be accepted
  ports:
    - nodePort: 30561
      port: 5601
      protocol: TCP
      targetPort: 5601
  selector:
    app: kibana
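
Elasticsearch is the most memory-hungry part of this stack, which is a large part of why the cluster needs 8 GB or more. The Deployment above sets no memory bounds on the `elasticsearch` container. One possible way to make its footprint predictable is sketched below as a fragment to merge into that container's spec. `ES_JAVA_OPTS` is the environment variable the official image reads for JVM options; the sizes are illustrative assumptions, not values from the original notes, and keep the heap at about half of the container's memory.

```yaml
# Fragment for the elasticsearch container in elasticsearch.yaml.
# All sizes are illustrative assumptions; tune them for your cluster.
env:
  - name: discovery.type
    value: single-node
  - name: ES_JAVA_OPTS
    value: "-Xms1g -Xmx1g"   # fixed JVM heap, about half the container memory
resources:
  requests:
    memory: 2Gi
  limits:
    memory: 2Gi
```

Setting the request equal to the limit means the scheduler reserves the full amount on the node, so the pod is less likely to be squeezed by its neighbors.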