This note is based on a lecture by Professor 최영우 at Sookmyung Women's University.

Ch 5 - Overfitting

What Overfitting Means

- Overfitting: the model does not generalize beyond the training dataset

- A model should predict well for instances that it has not yet seen

A Fundamental Trade-Off

- The more complex the model, the greater the possibility of overfitting

How to Recognize Overfitting

- holdout (=test) data

- "hold out" some data for which we know the target value

- Not used to build the model, but used to estimate the generalization performance of the model

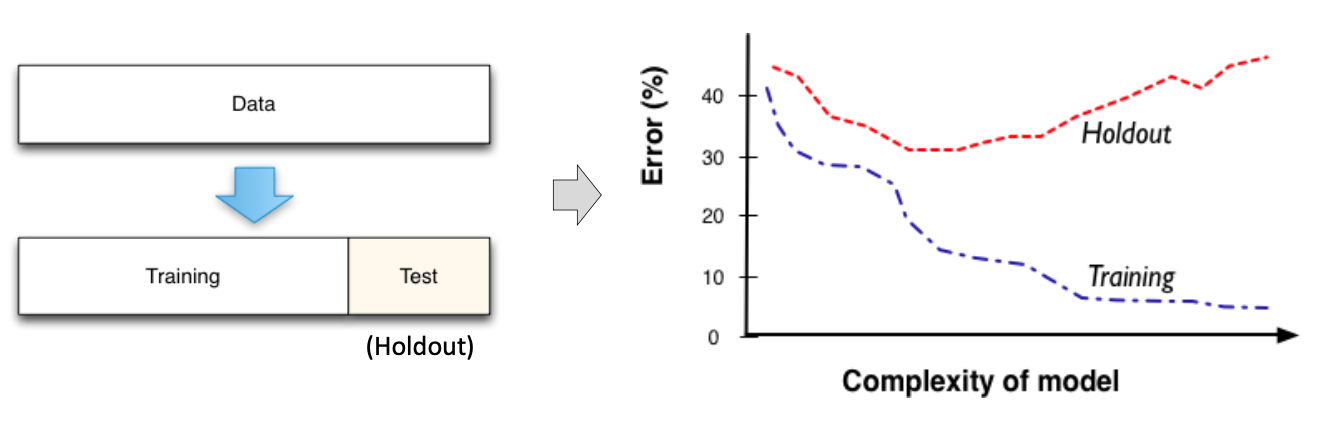

- When the model is not complex enough, it is not very accurate on either the training or the test data >> Underfitting

- As the model gets too complex, it becomes more accurate on the training data but less accurate on the test (= holdout) data >> Overfitting!
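
A minimal sketch of this fitting graph, assuming a synthetic regression task (a noisy sine curve) and using polynomial degree as the measure of model complexity; neither the data nor the model comes from the lecture. It records training and holdout error for each degree so the two regimes can be compared.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)

def make_data(n):
    # Assumed ground truth for illustration: sin(2*pi*x) plus Gaussian noise
    x = rng.uniform(0.0, 1.0, n)
    y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.2, n)
    return x, y

x_train, y_train = make_data(12)   # small training set
x_test, y_test = make_data(200)    # holdout data: never used for fitting

def mse(model, x, y):
    return float(np.mean((model(x) - y) ** 2))

results = {}  # degree -> (training MSE, holdout MSE)
for degree in range(10):
    # A higher degree means more terms, i.e. a more complex model
    model = Polynomial.fit(x_train, y_train, degree)
    results[degree] = (mse(model, x_train, y_train), mse(model, x_test, y_test))
    print(f"degree {degree}: train MSE {results[degree][0]:.3f}, "
          f"holdout MSE {results[degree][1]:.3f}")
```

Low degrees typically show high error on both sets (underfitting); high degrees push training error toward zero while holdout error stays well above it (overfitting).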

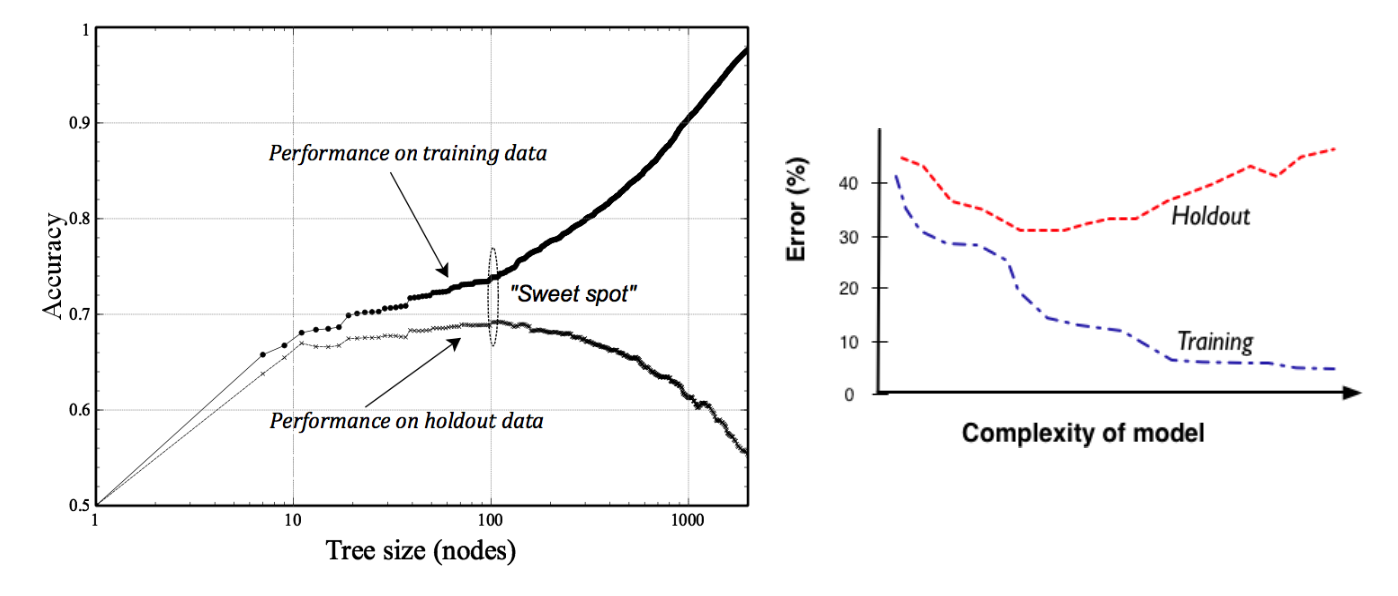

Case 1) Overfitting in Tree Induction

- The complexity of a tree = the number of nodes

- In this example, once the tree grows beyond 100 nodes we can judge that it is overfitting >> limit the tree size

- There is no theoretical way to find the exact "sweet spot"

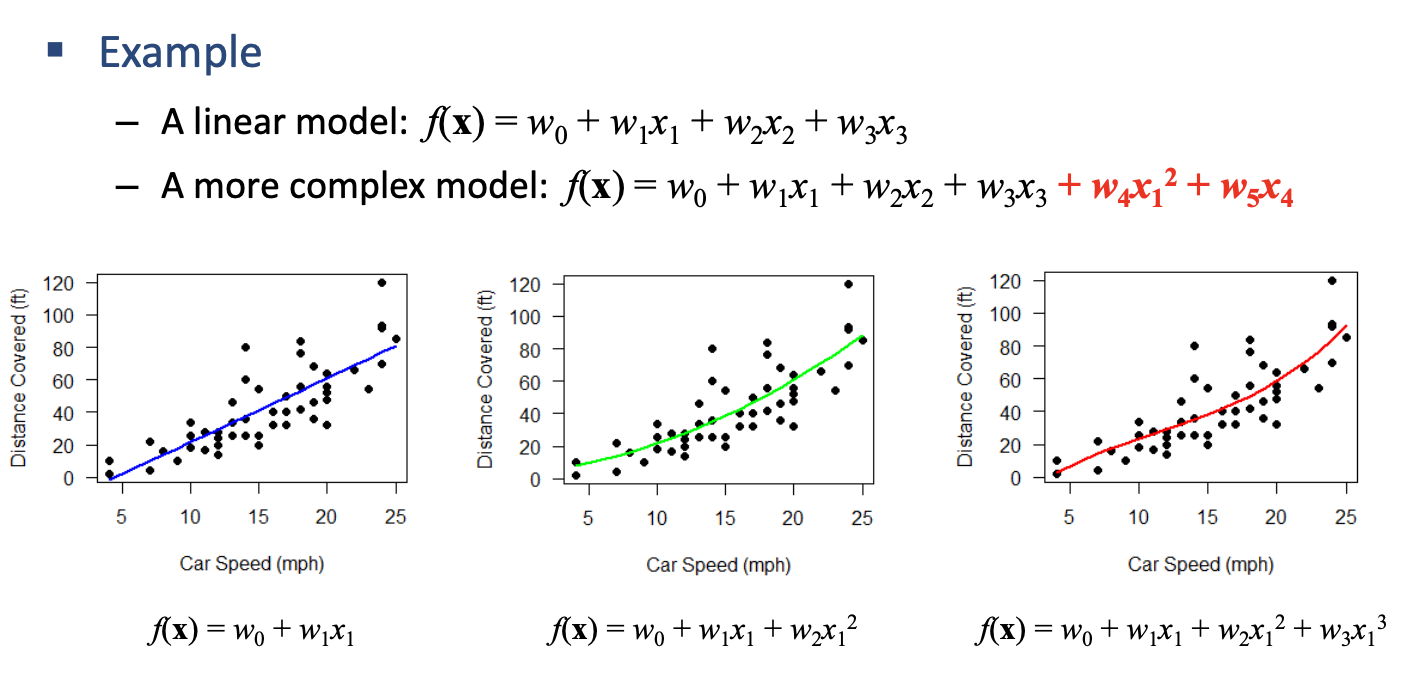

Case 2) Overfitting in Mathematical Functions

- Complexity grows as more terms or variables are added

- To avoid overfitting, select only informative attributes

- Use the holdout technique (with the test data) to check for overfitting
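
A minimal sketch of why uninformative attributes hurt, assuming synthetic data where only one attribute is related to the target and 25 others are pure noise (all sizes are illustrative choices). The same least-squares model is fit with the informative attribute alone and with every attribute, and both are checked on holdout data.

```python
import numpy as np

rng = np.random.default_rng(0)
n_train, n_test, n_noise = 30, 500, 25

def make_data(n):
    informative = rng.normal(size=(n, 1))
    noise = rng.normal(size=(n, n_noise))  # assumed attributes unrelated to the target
    y = 2.0 * informative[:, 0] + rng.normal(0.0, 1.0, n)
    return np.hstack([informative, noise]), y

X_train, y_train = make_data(n_train)
X_test, y_test = make_data(n_test)

def fit_and_score(columns):
    """Least-squares fit (with intercept) on the given columns; returns (train MSE, holdout MSE)."""
    def design(X):
        return np.column_stack([np.ones(len(X)), X[:, columns]])
    w, *_ = np.linalg.lstsq(design(X_train), y_train, rcond=None)
    train = float(np.mean((design(X_train) @ w - y_train) ** 2))
    test = float(np.mean((design(X_test) @ w - y_test) ** 2))
    return train, test

informative_only = fit_and_score([0])
all_attributes = fit_and_score(list(range(1 + n_noise)))
print("informative only (train, holdout):", informative_only)
print("all attributes   (train, holdout):", all_attributes)
```

Adding the noise attributes can only lower the training error, but the holdout check reveals that the larger model generalizes worse.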

Holdout Evaluation, Cross-Validation

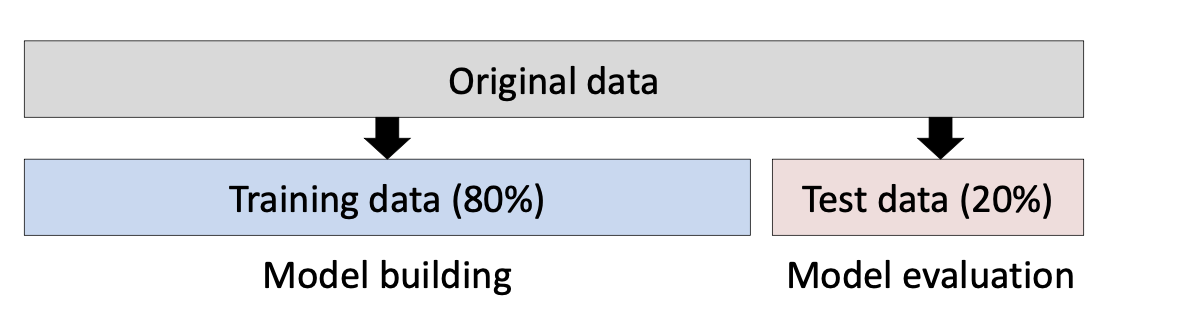

1-1. Holdout evaluation

- Split the data into a training set (model building) and a test set (model evaluation)

- goal: estimate the generalization performance of a model
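
A minimal sketch of a holdout split in plain Python. The 70/30 ratio, the toy labelled data, and the majority-class "model" are assumptions chosen only to keep the example short; any model could be trained on `train` and scored on `test`.

```python
import random
from collections import Counter

def holdout_split(data, test_fraction=0.3, seed=0):
    """Shuffle a copy of the data and split it into (train, test)."""
    items = list(data)
    random.Random(seed).shuffle(items)
    n_test = round(len(items) * test_fraction)
    return items[n_test:], items[:n_test]

# Hypothetical labelled data: (feature, label) pairs
data = [(i, "yes" if i % 3 == 0 else "no") for i in range(100)]
train, test = holdout_split(data)

# Deliberately simple stand-in model: always predict the training majority class
majority = Counter(label for _, label in train).most_common(1)[0][0]
accuracy = sum(label == majority for _, label in test) / len(test)
print(len(train), len(test), round(accuracy, 2))
```

The model is built from `train` only; `test` is touched once, to estimate generalization performance.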

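1-2. Problem

- The model is evaluated only once, on a single test set, so the estimate depends on which instances happen to land in that set

- Therefore we use cross-validation, which evaluates the model multiple times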

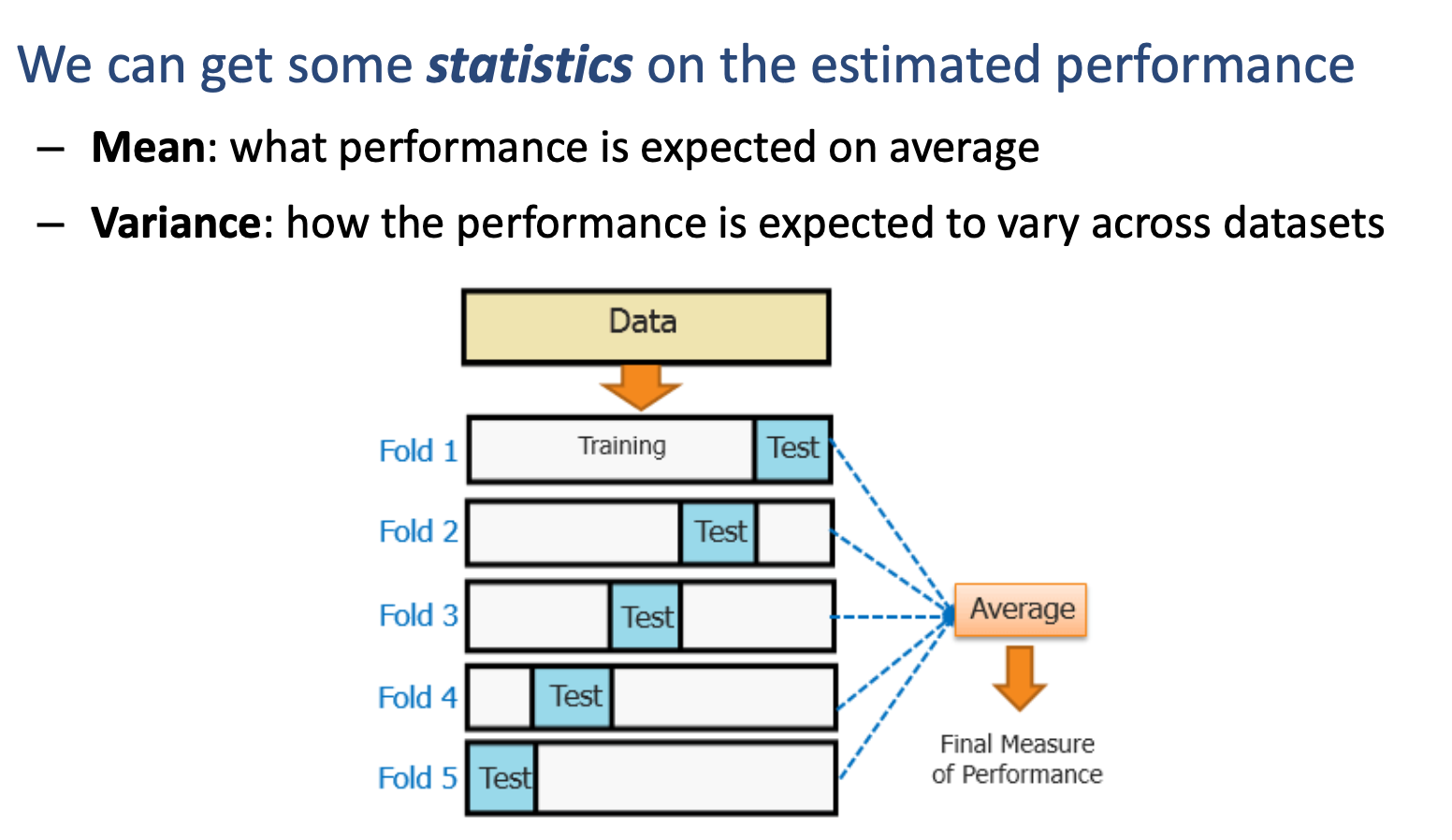

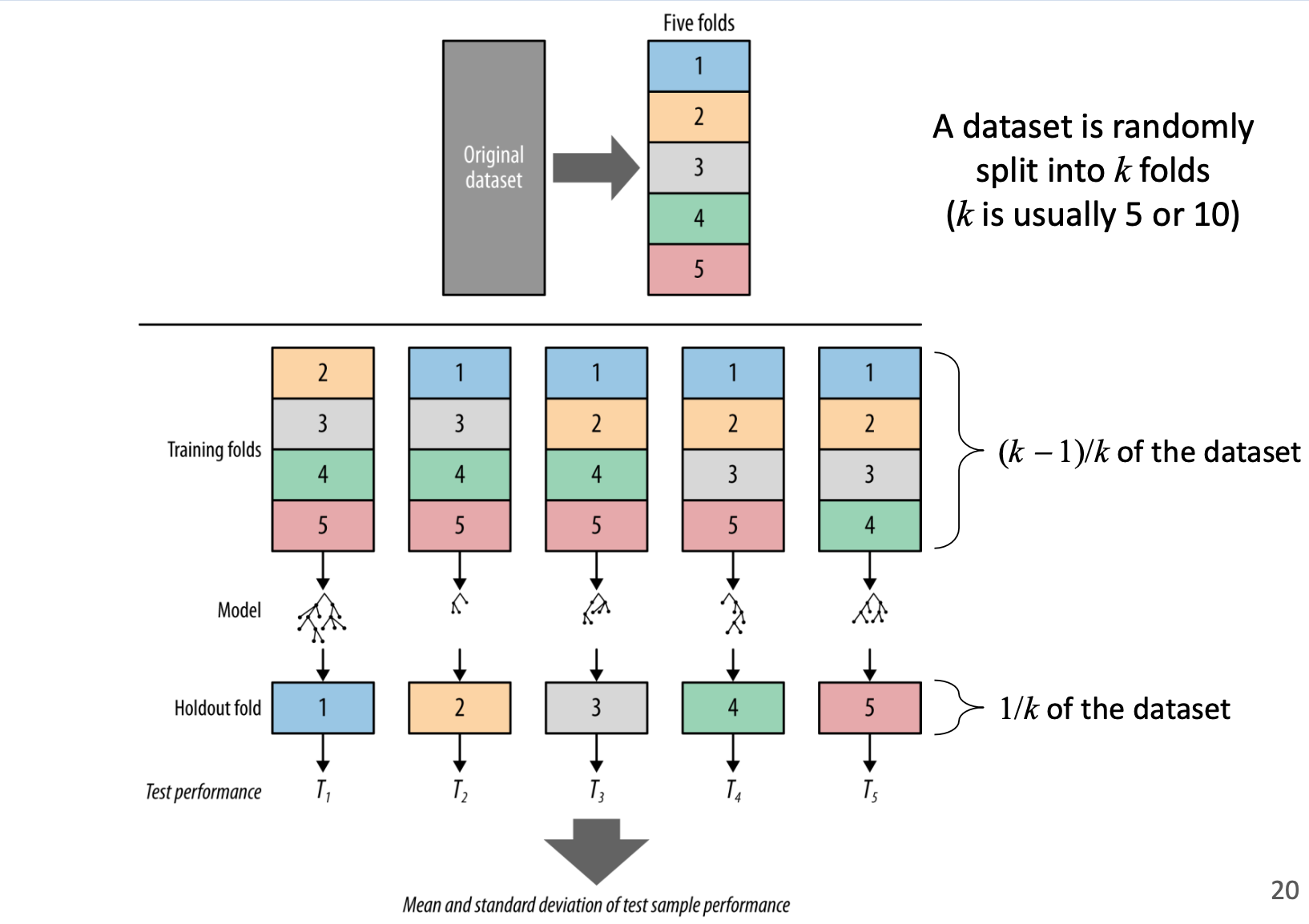

2-1. Cross-Validation

- A more sophisticated holdout evaluation procedure

- Performs holdout evaluation multiple times over the dataset

2-2. k-fold Cross-Validation

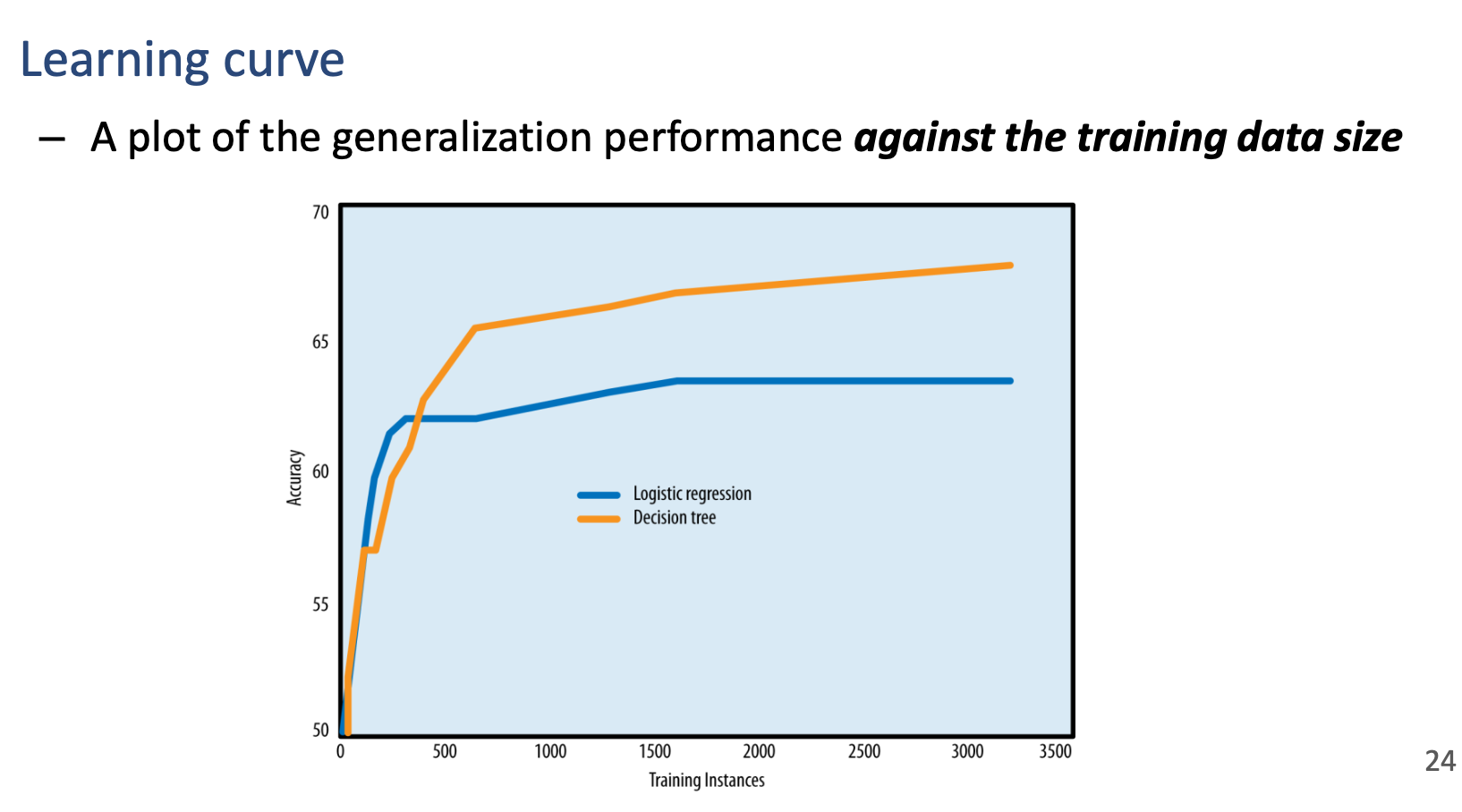
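
A minimal sketch of k-fold cross-validation in plain Python. The helper names `k_fold_indices` and `cross_validate`, the toy data, and the majority-class stand-in model are all hypothetical; the point is that each fold serves exactly once as the holdout set while the other k-1 folds train the model.

```python
import random
from collections import Counter

def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and deal them into k folds of near-equal size."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def cross_validate(data, k, fit, score, seed=0):
    """Return one holdout score per fold."""
    folds = k_fold_indices(len(data), k, seed)
    scores = []
    for i, test_idx in enumerate(folds):
        train = [data[j] for m, fold in enumerate(folds) if m != i for j in fold]
        test = [data[j] for j in test_idx]
        model = fit(train)
        scores.append(score(model, test))
    return scores

# Hypothetical stand-in model: predict the majority class of the training folds
def fit_majority(train):
    return Counter(label for _, label in train).most_common(1)[0][0]

def accuracy(model, test):
    return sum(label == model for _, label in test) / len(test)

data = [(i, "yes" if i % 3 == 0 else "no") for i in range(100)]
scores = cross_validate(data, k=10, fit=fit_majority, score=accuracy)
print(len(scores), sum(scores) / len(scores))
```

Besides the mean, the spread of the k scores shows how much a single holdout estimate could have varied.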

Learning Curve

- Plots generalization performance (on holdout data) against the amount of training data

- Flexibility: Decision tree > Logistic Regression

- Logistic Regression: cannot model the full complexity of the data as the data becomes larger (but it overfits less)

- Decision tree: can model more complex regularities with larger training sets (but it overfits more)
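
A minimal sketch of computing two learning curves. As a stand-in for the lecture's comparison (not the lecture's own models), a degree-1 polynomial plays the less flexible model and a degree-6 polynomial the more flexible one, on an assumed noisy-sine regression task; holdout MSE is recorded at several training-set sizes.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)

def sample(n):
    # Assumed task for illustration: noisy sin(2*pi*x) on [0, 1]
    x = rng.uniform(0.0, 1.0, n)
    return x, np.sin(2 * np.pi * x) + rng.normal(0.0, 0.2, n)

x_pool, y_pool = sample(2000)   # training instances are drawn from here
x_test, y_test = sample(1000)   # fixed holdout set

sizes = [10, 20, 50, 200, 2000]
curve = {1: [], 6: []}          # degree -> holdout MSE at each training size
for n in sizes:
    for degree, errors in curve.items():
        model = Polynomial.fit(x_pool[:n], y_pool[:n], degree)
        errors.append(float(np.mean((model(x_test) - y_test) ** 2)))

for degree, errors in curve.items():
    print(f"degree {degree}:", [round(e, 3) for e in errors])
```

The inflexible model's curve flattens early at a high error because it cannot capture the curve's shape; the flexible model typically does poorly with little data but keeps improving and ends lower.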

Regularization (Important!)

- Rein in the complexity of the model to avoid overfitting

Case 1) Regularization for Tree Induction

- Stop growing the tree before it gets too complex

- Grow the tree until it is too large, then "prune" it back to reduce its size

- Build trees with different numbers of nodes and pick the best

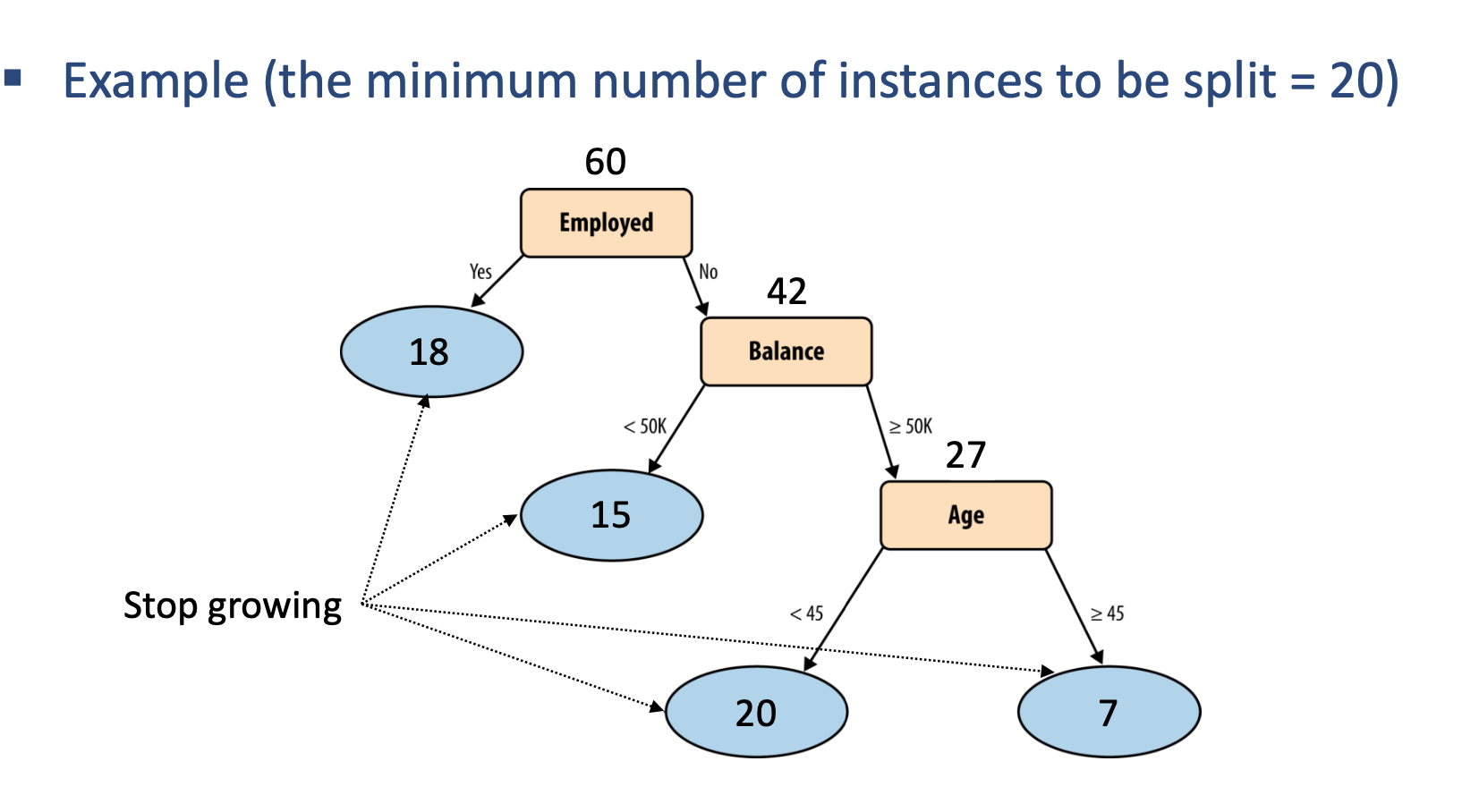

1. Limit Tree Size

- Specify the minimum number of instances a node must contain before it can be split

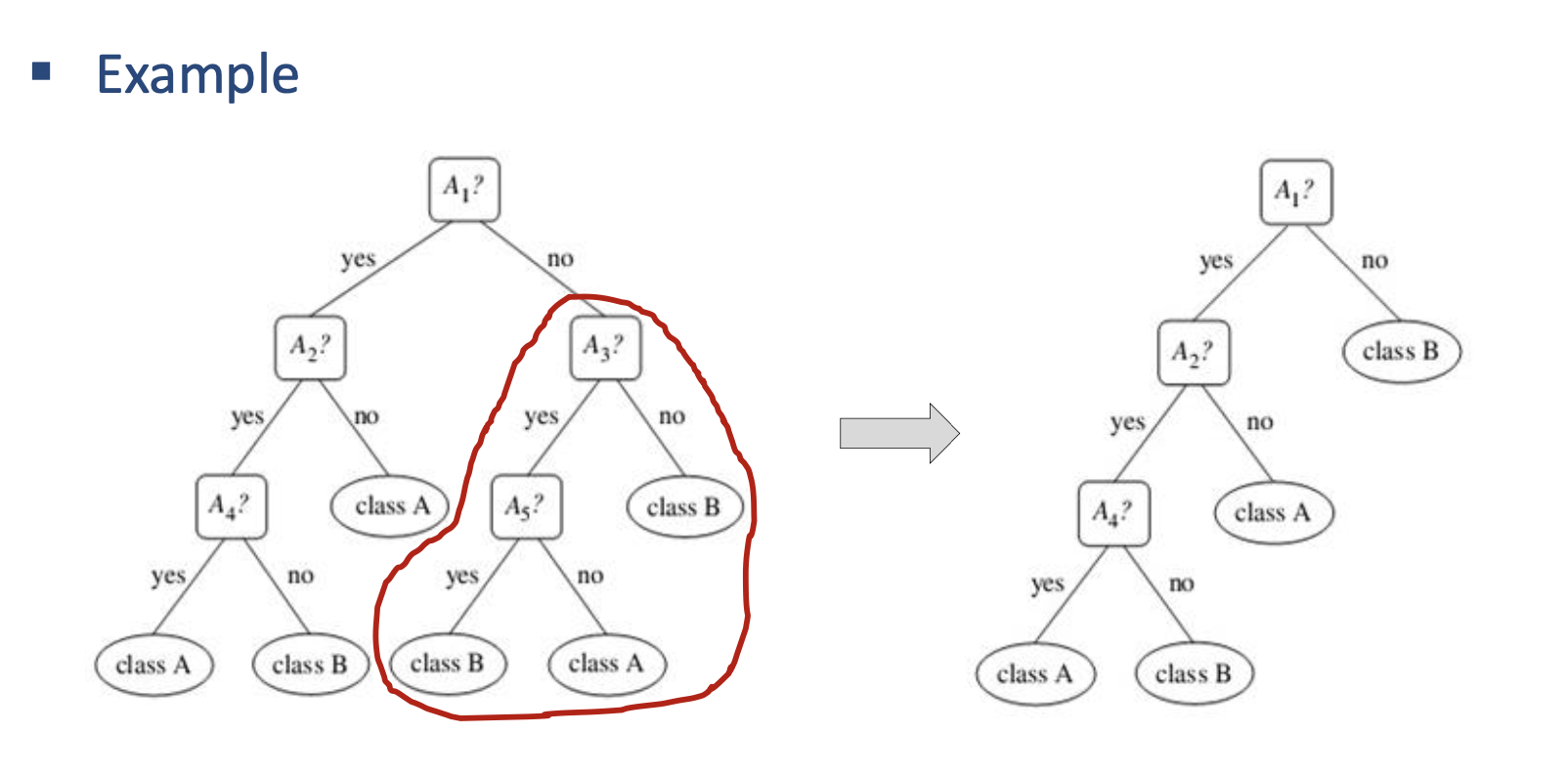
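
A minimal sketch of limiting tree size, assuming a one-dimensional classification tree written from scratch. The parameter name `min_samples_split`, the split criterion (fewest misclassified training instances, instead of information gain), and the noisy-threshold data are all assumptions for illustration. A node with fewer than `min_samples_split` instances becomes a leaf.

```python
import random
from collections import Counter

def majority(points):
    return Counter(y for _, y in points).most_common(1)[0][0]

def build_tree(points, min_samples_split=2):
    """points: list of (x, label). Stop when the node is pure or too small to split."""
    if len(points) < min_samples_split or len({y for _, y in points}) == 1:
        return {"leaf": majority(points)}
    xs = sorted({x for x, _ in points})
    best = None
    for lo, hi in zip(xs, xs[1:]):
        t = (lo + hi) / 2
        left = [p for p in points if p[0] <= t]
        right = [p for p in points if p[0] > t]
        # Count instances each side would misclassify if it predicted its majority
        errors = sum(len(s) - Counter(y for _, y in s).most_common(1)[0][1] for s in (left, right))
        if best is None or errors < best[0]:
            best = (errors, t, left, right)
    if best is None:  # all x values identical: no split possible
        return {"leaf": majority(points)}
    _, t, left, right = best
    return {"threshold": t,
            "left": build_tree(left, min_samples_split),
            "right": build_tree(right, min_samples_split)}

def count_nodes(tree):
    if "leaf" in tree:
        return 1
    return 1 + count_nodes(tree["left"]) + count_nodes(tree["right"])

def predict(tree, x):
    while "leaf" not in tree:
        tree = tree["left"] if x <= tree["threshold"] else tree["right"]
    return tree["leaf"]

def accuracy(tree, points):
    return sum(predict(tree, x) == y for x, y in points) / len(points)

rng = random.Random(0)

def sample(n):
    # Assumed data: label is (x > 0.5), flipped 20% of the time as noise
    pts = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.5)
        pts.append((x, 1 - y if rng.random() < 0.2 else y))
    return pts

train, test = sample(200), sample(500)
sizes = {}
for m in (2, 10, 40, 100):
    tree = build_tree(train, min_samples_split=m)
    sizes[m] = count_nodes(tree)
    print(f"min_samples_split={m:3d}: {sizes[m]:3d} nodes, "
          f"train acc {accuracy(tree, train):.2f}, test acc {accuracy(tree, test):.2f}")
```

With `min_samples_split=2` the tree memorizes the noisy training labels; raising the minimum yields smaller trees that cannot chase individual noisy instances.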

2. Prune an Overly Large Tree

- Cut off leaves and branches, replacing them with leaves, if this replacement does not reduce the tree's accuracy

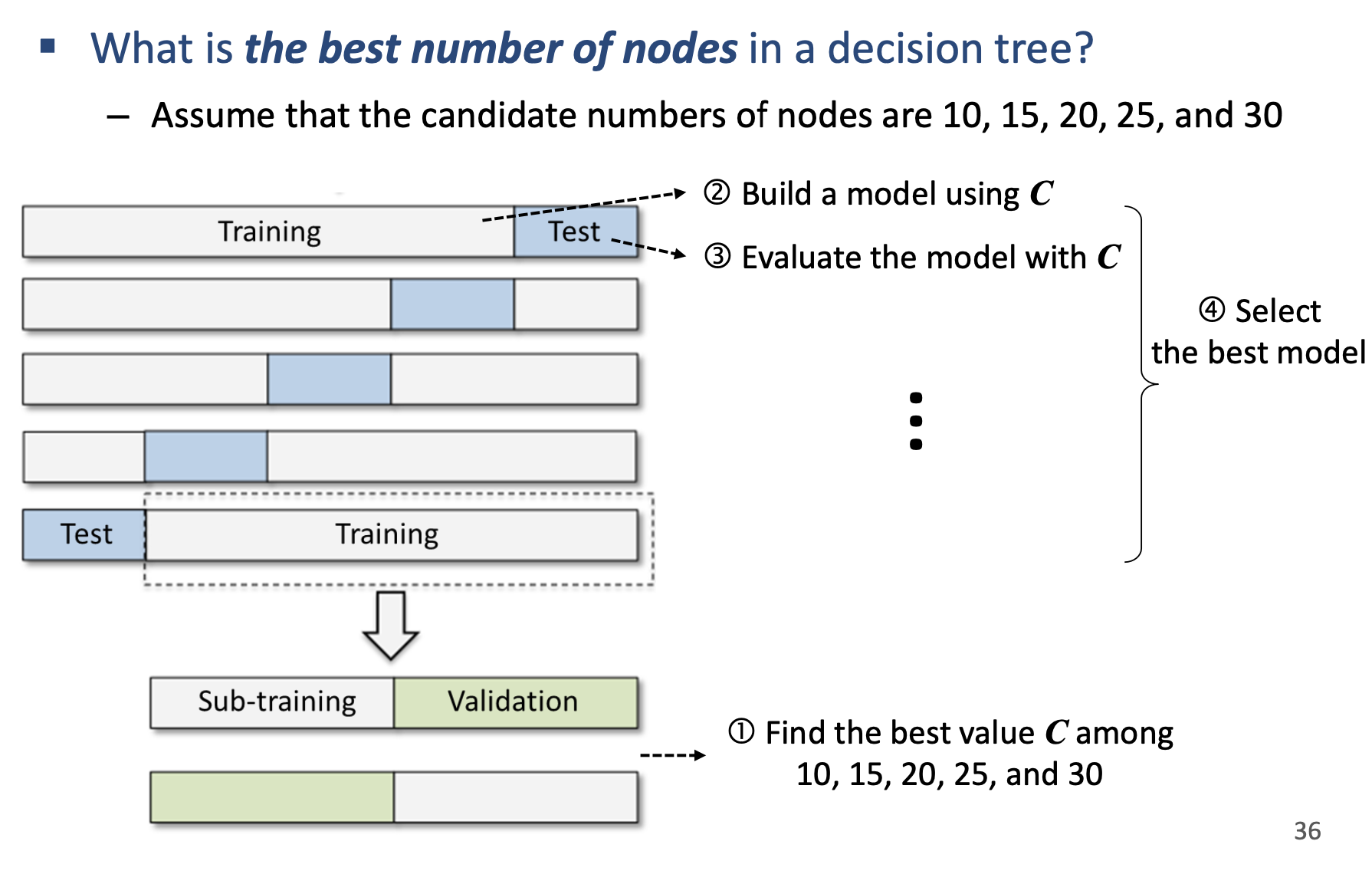

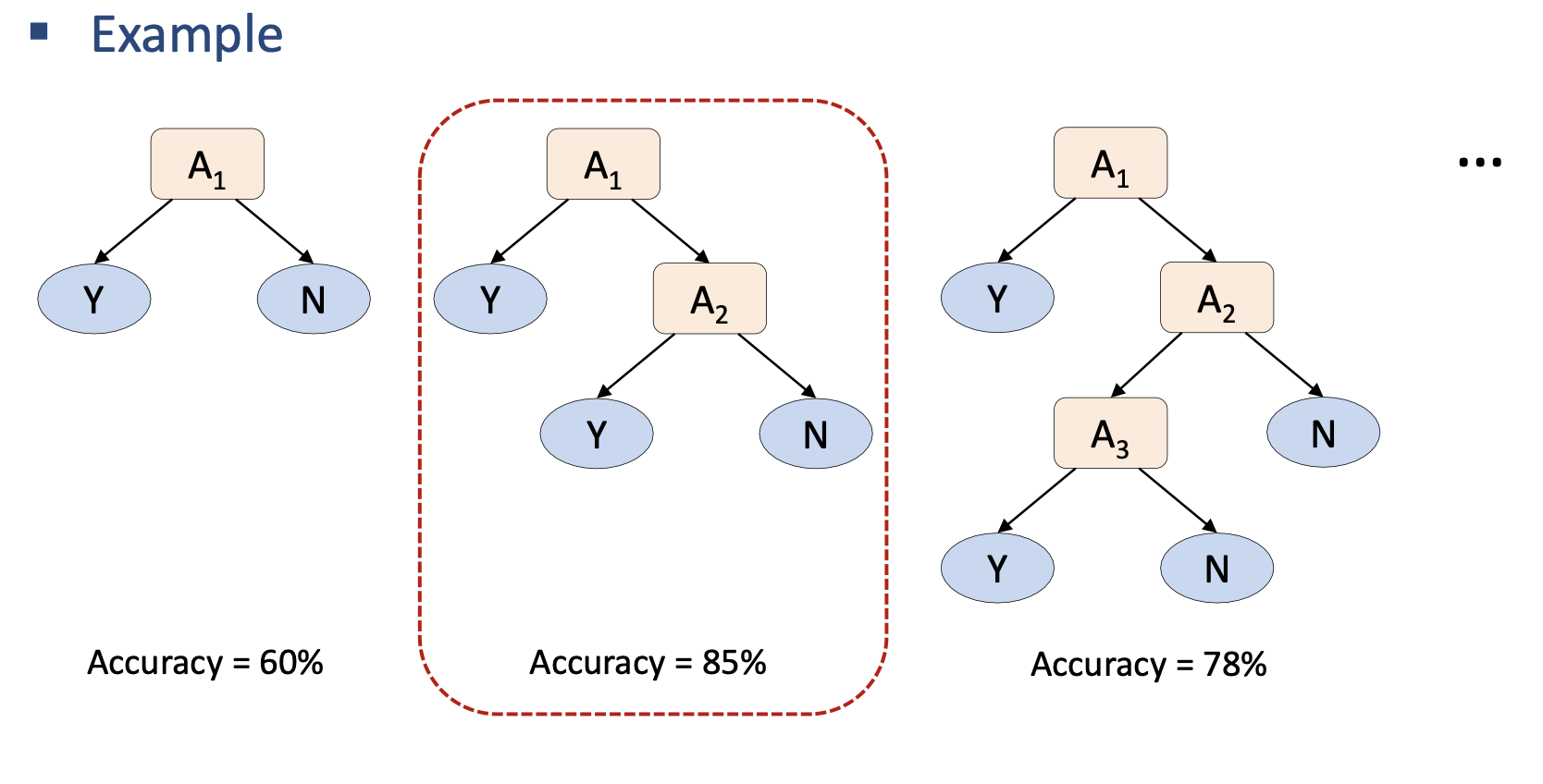
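
A minimal sketch of one concrete pruning method, reduced-error pruning, which is a swapped-in technique and not necessarily the exact procedure in the lecture figures. It assumes a one-dimensional tree grown to purity, a separate validation (holdout) set, and synthetic noisy data. Working bottom-up, each subtree is replaced by a leaf predicting its training majority whenever that does not lower accuracy on the validation instances reaching it.

```python
import random
from collections import Counter

def build_tree(points):
    """Grow a 1-D classification tree until every leaf is pure (or x values repeat)."""
    labels = [y for _, y in points]
    node = {"majority": Counter(labels).most_common(1)[0][0]}
    xs = sorted({x for x, _ in points})
    if len(set(labels)) == 1 or len(xs) == 1:
        return node  # a leaf is a node without a "threshold"
    def errors(t):
        sides = ([y for x, y in points if x <= t], [y for x, y in points if x > t])
        return sum(len(s) - Counter(s).most_common(1)[0][1] for s in sides)
    t = min(((lo + hi) / 2 for lo, hi in zip(xs, xs[1:])), key=errors)
    node.update(threshold=t,
                left=build_tree([p for p in points if p[0] <= t]),
                right=build_tree([p for p in points if p[0] > t]))
    return node

def predict(tree, x):
    while "threshold" in tree:
        tree = tree["left"] if x <= tree["threshold"] else tree["right"]
    return tree["majority"]

def count_nodes(tree):
    if "threshold" not in tree:
        return 1
    return 1 + count_nodes(tree["left"]) + count_nodes(tree["right"])

def accuracy(tree, points):
    return sum(predict(tree, x) == y for x, y in points) / len(points)

def prune(tree, validation):
    """Reduced-error pruning; ties go to the simpler tree (the leaf)."""
    if "threshold" not in tree:
        return tree
    t = tree["threshold"]
    pruned = dict(tree,
                  left=prune(tree["left"], [p for p in validation if p[0] <= t]),
                  right=prune(tree["right"], [p for p in validation if p[0] > t]))
    subtree_correct = sum(predict(pruned, x) == y for x, y in validation)
    leaf_correct = sum(pruned["majority"] == y for _, y in validation)
    if leaf_correct >= subtree_correct:
        return {"majority": pruned["majority"]}
    return pruned

rng = random.Random(0)

def sample(n):
    # Assumed data: label is (x > 0.5), flipped 20% of the time as noise
    pts = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.5)
        pts.append((x, 1 - y if rng.random() < 0.2 else y))
    return pts

train, validation, test = sample(200), sample(100), sample(500)
full = build_tree(train)
pruned = prune(full, validation)
for name, tree in (("full", full), ("pruned", pruned)):
    print(f"{name}: {count_nodes(tree)} nodes, "
          f"validation acc {accuracy(tree, validation):.2f}, test acc {accuracy(tree, test):.2f}")
```

Subtrees that no validation instance reaches are also collapsed, because keeping them cannot be shown to help.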

3. Build Many Trees and Pick the Best

- Pick the tree with the best estimated generalization (holdout) accuracy
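
A minimal sketch of building many trees and picking the best, assuming a from-scratch one-dimensional tree whose size is controlled by a hypothetical `max_depth` parameter, synthetic noisy data, and a validation set used for the choice. An untouched test set then gives a fair estimate for the chosen tree.

```python
import random
from collections import Counter

def build_tree(points, max_depth):
    """1-D classification tree; stops at max_depth, at pure nodes, or when x values repeat."""
    labels = [y for _, y in points]
    node = {"majority": Counter(labels).most_common(1)[0][0]}
    xs = sorted({x for x, _ in points})
    if max_depth == 0 or len(set(labels)) == 1 or len(xs) == 1:
        return node
    def errors(t):
        sides = ([y for x, y in points if x <= t], [y for x, y in points if x > t])
        return sum(len(s) - Counter(s).most_common(1)[0][1] for s in sides)
    t = min(((lo + hi) / 2 for lo, hi in zip(xs, xs[1:])), key=errors)
    node.update(threshold=t,
                left=build_tree([p for p in points if p[0] <= t], max_depth - 1),
                right=build_tree([p for p in points if p[0] > t], max_depth - 1))
    return node

def predict(tree, x):
    while "threshold" in tree:
        tree = tree["left"] if x <= tree["threshold"] else tree["right"]
    return tree["majority"]

def count_nodes(tree):
    if "threshold" not in tree:
        return 1
    return 1 + count_nodes(tree["left"]) + count_nodes(tree["right"])

def accuracy(tree, points):
    return sum(predict(tree, x) == y for x, y in points) / len(points)

rng = random.Random(0)

def sample(n):
    # Assumed data: label is (x > 0.5), flipped 20% of the time as noise
    pts = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.5)
        pts.append((x, 1 - y if rng.random() < 0.2 else y))
    return pts

train, validation, test = sample(200), sample(100), sample(500)
candidates = range(1, 9)
trees = {d: build_tree(train, d) for d in candidates}
node_counts = {d: count_nodes(t) for d, t in trees.items()}
val_acc = {d: accuracy(t, validation) for d, t in trees.items()}
best_depth = max(candidates, key=lambda d: val_acc[d])  # ties go to the smaller tree
for d in candidates:
    print(f"max_depth={d}: {node_counts[d]:3d} nodes, validation acc {val_acc[d]:.2f}")
print("chosen:", best_depth, "test acc:", round(accuracy(trees[best_depth], test), 2))
```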

Case 2) Regularization for Linear Models

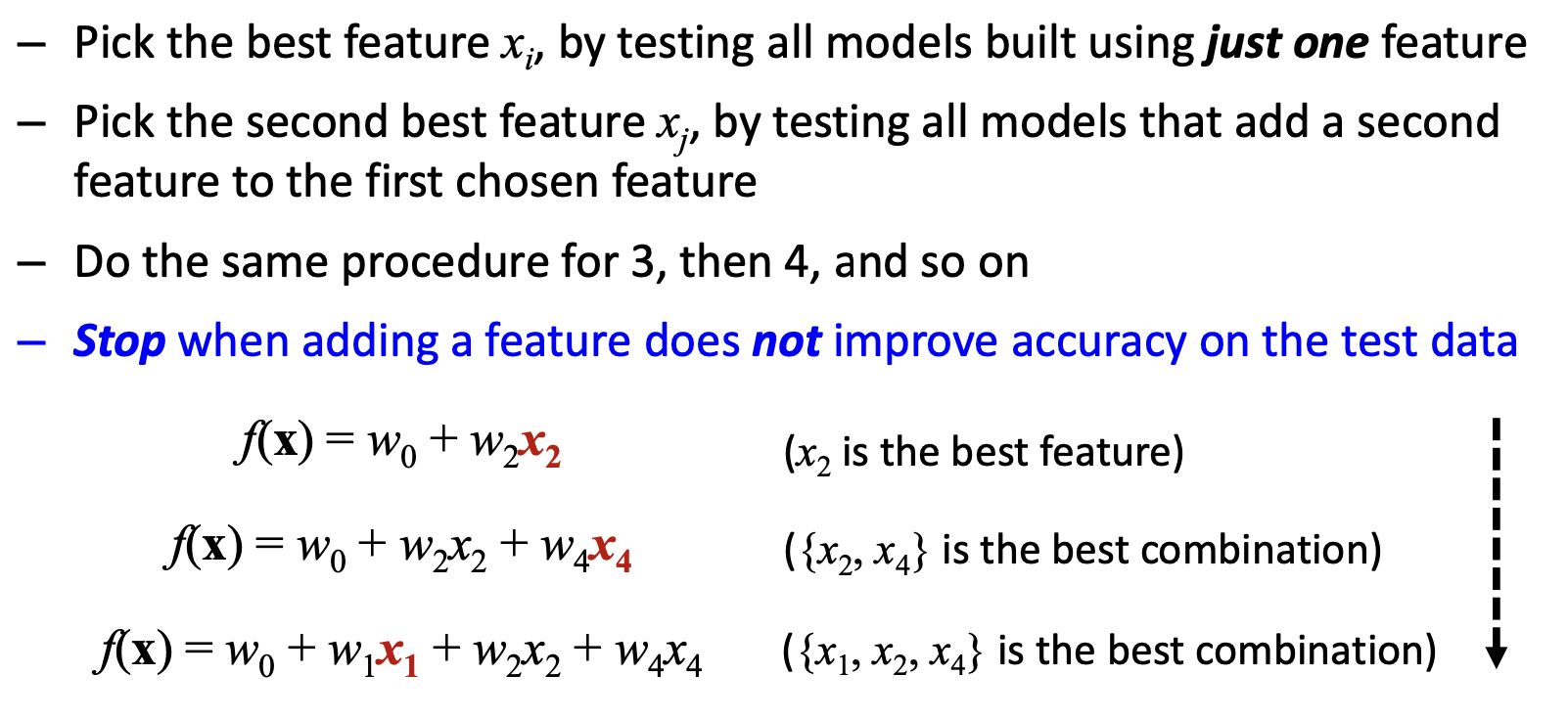
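
The figure is not reproduced here, so as one standard illustration (an assumption about what it covers) this is a minimal sketch of L2 (ridge) regularization: least squares plus a penalty λ·‖w‖² on the weights. The data, the degree-9 polynomial features, and the λ values are hypothetical; the closed form solves (XᵀX + λI)w = Xᵀy on centred data so the intercept is not penalized.

```python
import numpy as np

rng = np.random.default_rng(0)

def sample(n):
    # Assumed data for illustration: noisy sin(pi * x) on [-1, 1]
    x = rng.uniform(-1.0, 1.0, n)
    return x, np.sin(np.pi * x) + rng.normal(0.0, 0.2, n)

def features(x):
    # Polynomial features x, x^2, ..., x^9 (no constant column)
    return np.vander(x, 10, increasing=True)[:, 1:]

x_train, y_train = sample(15)
x_test, y_test = sample(500)
X = features(x_train)
X_mean, y_mean = X.mean(axis=0), y_train.mean()
Xc, yc = X - X_mean, y_train - y_mean   # centring keeps the intercept out of the penalty

def ridge(lam):
    """Minimise ||yc - Xc w||^2 + lam * ||w||^2 in closed form."""
    return np.linalg.solve(Xc.T @ Xc + lam * np.eye(Xc.shape[1]), Xc.T @ yc)

norms = {}
for lam in (1e-6, 1e-2, 1.0, 100.0):
    w = ridge(lam)
    norms[lam] = float(np.linalg.norm(w))
    pred = y_mean + (features(x_test) - X_mean) @ w
    print(f"lambda={lam:g}: ||w||={norms[lam]:.3f}, "
          f"test MSE={float(np.mean((pred - y_test) ** 2)):.3f}")
```

Larger λ shrinks the weights toward zero, reining in how wildly the fitted curve can bend; λ itself is a complexity parameter that would be chosen with (nested) cross-validation.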

Sequential forward selection (SFS)

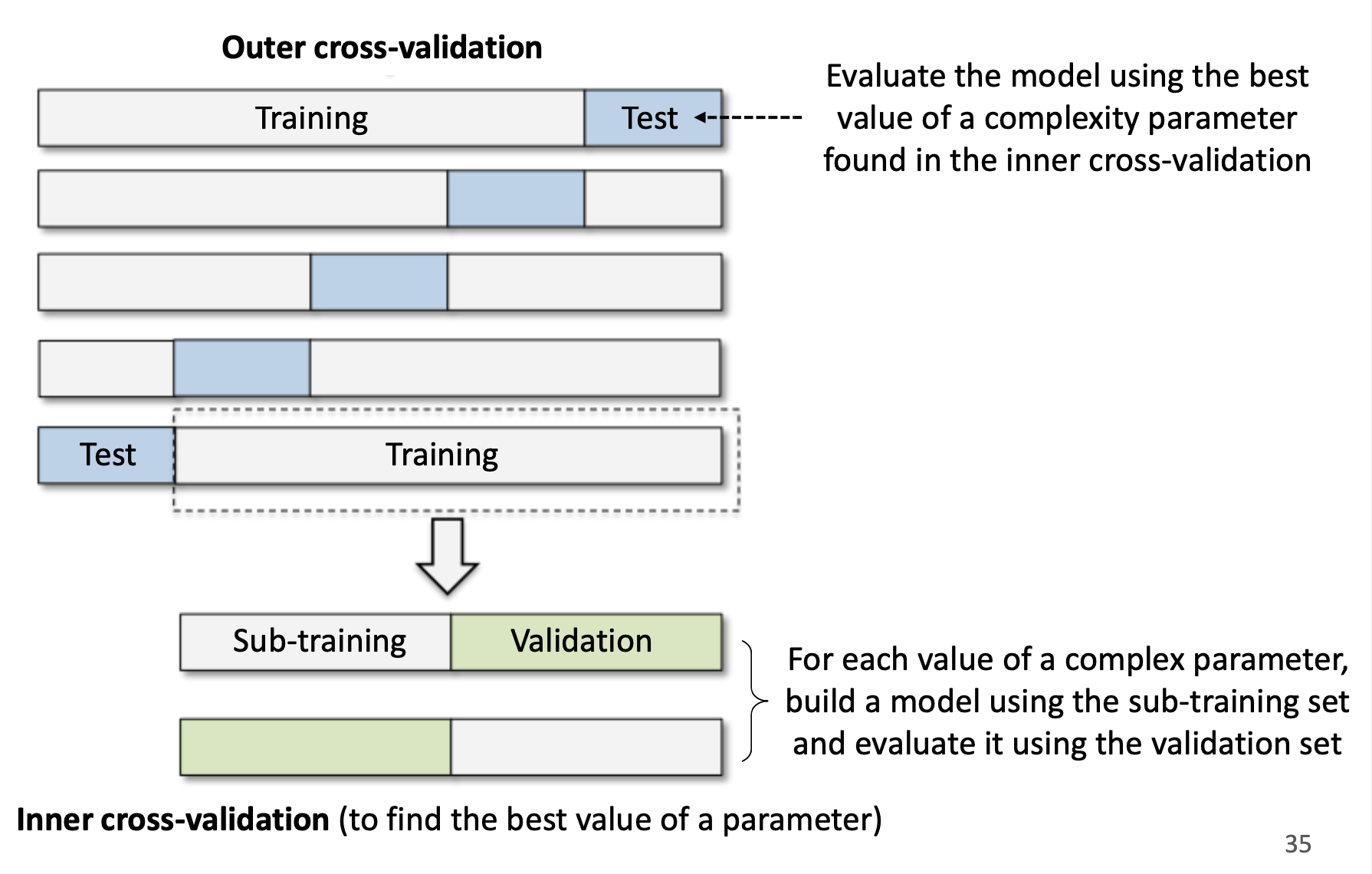
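
A minimal sketch of sequential forward selection, assuming a least-squares linear model scored by validation MSE and synthetic data in which only attributes 0 and 3 matter. SFS starts with no attributes, greedily adds whichever one lowers validation error the most, and stops when no remaining attribute helps.

```python
import numpy as np

rng = np.random.default_rng(1)
n, d = 120, 8
X = rng.normal(size=(n, d))
# Hypothetical data: only attributes 0 and 3 influence the target
y = 3.0 * X[:, 0] - 1.5 * X[:, 3] + rng.normal(0.0, 0.5, n)
X_train, y_train, X_val, y_val = X[:80], y[:80], X[80:], y[80:]

def val_mse(features):
    """Fit least squares (with intercept) on the chosen columns, score on validation data."""
    A_tr = np.column_stack([np.ones(len(X_train))] + [X_train[:, j] for j in features])
    A_va = np.column_stack([np.ones(len(X_val))] + [X_val[:, j] for j in features])
    w, *_ = np.linalg.lstsq(A_tr, y_train, rcond=None)
    return float(np.mean((A_va @ w - y_val) ** 2))

def sequential_forward_selection(n_features):
    """Greedily add the attribute that lowers validation MSE most; stop when none helps."""
    selected, best = [], val_mse([])
    while len(selected) < n_features:
        score, j = min((val_mse(selected + [c]), c)
                       for c in range(n_features) if c not in selected)
        if score >= best:
            break
        selected.append(j)
        best = score
    return selected, best

selected, best_mse = sequential_forward_selection(d)
print("selected attributes:", selected, "validation MSE:", round(best_mse, 3))
```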

Case 3) Nested Cross-Validation

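- Used when we don't know the best value of a complexity parameter

- Cross-validation: used when the value of the complexity parameter is fixed

- Nested cross-validation: used when we want to find the best value of a complexity parameter (an inner cross-validation on the training folds picks the value, and an outer cross-validation estimates the generalization performance)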
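
A minimal sketch of nested cross-validation, assuming polynomial degree as the complexity parameter and a synthetic noisy-sine dataset. For each outer fold, an inner 3-fold cross-validation that sees only the outer training data picks the degree; the model refit with that degree is then scored on the untouched outer test fold.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, 60)
y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.2, 60)   # assumed data
DEGREES = range(0, 9)  # candidate values of the complexity parameter

def k_folds(n, k, seed):
    """Shuffle indices 0..n-1 and split them into k near-equal folds."""
    return np.array_split(np.random.default_rng(seed).permutation(n), k)

def cv_mse(x, y, degree, k, seed):
    """Plain k-fold CV estimate of holdout MSE for one fixed degree."""
    folds = k_folds(len(x), k, seed)
    errors = []
    for i, test in enumerate(folds):
        train = np.concatenate([f for j, f in enumerate(folds) if j != i])
        model = Polynomial.fit(x[train], y[train], degree)
        errors.append(np.mean((model(x[test]) - y[test]) ** 2))
    return float(np.mean(errors))

def nested_cv(x, y, outer_k=5, inner_k=3):
    outer_scores, chosen = [], []
    folds = k_folds(len(x), outer_k, seed=1)
    for i, test in enumerate(folds):
        train = np.concatenate([f for j, f in enumerate(folds) if j != i])
        # Inner CV uses only the outer training data to choose the degree
        best = min(DEGREES, key=lambda d: cv_mse(x[train], y[train], d, inner_k, seed=2))
        model = Polynomial.fit(x[train], y[train], best)
        outer_scores.append(float(np.mean((model(x[test]) - y[test]) ** 2)))
        chosen.append(best)
    return outer_scores, chosen

scores, degrees = nested_cv(x, y)
print("chosen degree per outer fold:", degrees)
print("estimated generalization MSE:", round(float(np.mean(scores)), 3))
```

Different outer folds may choose different degrees; the outer scores estimate how well the whole "select a degree, then fit" procedure generalizes. To build a final model, one would typically rerun the inner selection on all of the data.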