AutoGen - User Guide > GroupChat > Customize Speaker Selection (멀티에이전트에서 사용자 지정 발화자 선택)

AutoGen

AutoGen > User Guide > GroupChat > Customize Speaker Selection

https://microsoft.github.io/autogen/0.2/docs/topics/groupchat/customized_speaker_selection

Customize Speaker Selection

GroupChat에서는 GroupChat 객체에 함수를 전달하여 발화자 선택을 커스터마이징할 수 있다. 이 함수를 사용하면 더 결정론적인 에이전트 워크플로우를 구성할 수 있으며, 이를 구현할 때는 StateFlow 패턴을 따르는 것을 권장한다. 자세한 내용은 StateFlow 블로그를 참고

StateFlow

https://microsoft.github.io/autogen/0.2/blog/2024/02/29/StateFlow/

StateFlow 정리 포스팅

https://velog.io/@heyggun/AutoGen-StateFlow-GroupChat%EC%97%90%EC%84%9C-%EC%82%AC%EC%9A%A9%EC%9E%90-%EC%A7%80%EC%A0%95-%EB%B0%9C%ED%99%94%EC%9E%90-%EC%84%A0%ED%83%9D%EC%9D%B4-%EA%B0%80%EB%8A%A5%ED%95%9C-%EC%83%81%ED%83%9C-%EA%B8%B0%EB%B0%98-%EC%9B%8C%ED%81%AC%ED%94%8C%EB%A1%9C%EC%9A%B0-%EA%B5%AC%EC%B6%95

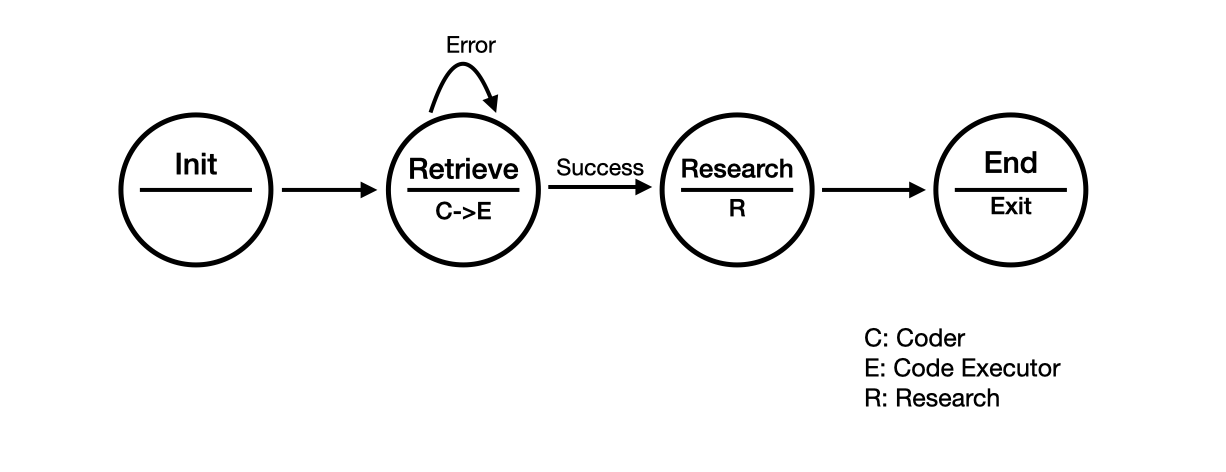

An example research workflow

다음은 사용자 지정 발화자 선택 기능이 포함된 연구용 StateFlow 모델을 구축하는 간단한 예시이다. 먼저 다음과 같은 에이전트들을 정의한다.

- Initializer (초기화자) : 작업을 전송하여 워크플로우를 시작한다.

- Coder (코더) : 코드를 작성하여 인터넷에서 논문을 검색한다.

- Executor (실행자) : 코드를 실행한다.

- Scientist (과학자) : 논문을 읽고 요약을 작성한다.

위 그림에서는 4개의 상태(Init, Retrieve, Research, End)로 구성된 간단한 연구 워크플로우를 정의한다. 각 상태에서는 다양한 에이전트들을 호출하여 작업을 수행한다.

- Init (초기화) : Initializer를 사용하여 워크플로우를 시작한다.

- Retrieve (검색) : 먼저 Coder를 호출하여 코드를 작성하고, 이후 Executor를 호출하여 코드를 실행한다.

- Research (연구) : Scientist를 호출하여 논문을 읽고 요약을 작성하게 한다.

- End (종료) : 워크플로우를 종료한다.

Create your speaker selection function

다음은 speaker selection 함수의 뼈대이다. 이 함수에 speaker selection 로직을 정의하여 완성한다.

import autogen

from autogen import Agent, GroupChat

from typing import Literal, Union

from config import settings

api_key = settings.openai_api_key.get_secret_value()

llm_config = {

"config_list":

[

{

"model" : "gpt-4o-mini",

"api_key" : api_key

}

]

}

code_execution_config= {

"last_n_messages" : 3,

"work_dir" : "paper",

"use_docker" : False,

}

def custom_speaker_selection_func(

last_speaker: Agent,

groupchat: GroupChat

) -> Union[Agent, Literal["auto", "manual", "random", "round_robin"], None]:

"""

Define a customized speaker selection function.

A recommended way is to define a transition for each speaker in the groupchat.

Parameters:

- last_speaker : Agent

The last speaker in the group chat.

- groupchat : GroupChat

The GroupChat object

Return:

Return one of the following:

1. an `Agent` class, it must be one of the agents in the group chat.

2. a string from ["auto", "manual", "random", "round_roubin"] to select a default method to use.

3. None, which indicates the chat should be terminated.

"""

pass다음으로 에이전트들을 정의한다.

initializer = UserProxyAgent(

name="init"

)

coder = autogen.AssistantAgent(

name="Retrieve_Action_1",

llm_config = llm_config,

system_message= """You are the Coder. Given a topic, write code to retrieve related papers form the arXiv API, print their title, authors, abstract, and link.

You write python/shell code to solve tasks. Wrap the code in a code block that specifies the script type. The user can't modify your code. So do not suggest incomplete code which requires others to modify.

Don't use a code block if it's not intended to be executed by the executor.

Don't include multiple code blocks in one response. Do not ask others to copy and paste the result.

Check the execution result return ed by the executor.

If the result indicates there is an error, fix the error and output the code again.

Suggest the full code instread of partial code or code changes.

If the error can't be fixed or if the task is not solved even after the code is executed successfully, analyze the problem, revisit you assumption, collect additional info you need, and think of a different approach to try.

"""

)

executor = autogen.UserProxyAgent(

name="Retrieve_Action_2",

system_message= "Executor. Execute the code written by the Coder and report the reulst.",

human_input_mode="NEVER",

code_execution_config=code_execution_config,

)

scientist = autogen.AssistantAgent(

name="Research_Action_1",

llm_config=llm_config,

system_message="""You are the Scientist. Please categorize papers after seeing their abstracts printed and create a markdown table with Domain, Titel, Authors, Summary and Link"""

)

상태 전이 함수를 정의한다.

def state_transition(last_speaker, groupchat):

messages = groupchat.messages

if last_speaker is initializer:

return coder

elif last_speaker is coder:

return executor

elif last_speaker is executor:

if messages[-1]["content"] == "exitcode: 1":

return coder

else:

return scientist

elif last_speaker == "Scientist":

return None

GroupChat과 GroupChat manager를 정의하고 Chat을 시작한다.

groupchat = autogen.GroupChat(

agents=[initializer, coder, executor, scientist],

messages=[],

max_round=20,

speaker_selection_method=state_transition,

)

manager = autogen.GroupChatManager(groupchat=groupchat, llm_config=llm_config)

result = initializer.initiate_chat(

manager,

message="Topic : LLM applications papers from last week. Requirement : 5- 10 papers from different domains."

)그렇다면 아래와 같은 결과로 GroupChat이 진행된다.

init (to chat_manager):

Topic : LLM applications papers from last week. Requirement : 5- 10 papers from different domains.

--------------------------------------------------------------------------------

Next speaker: Retrieve_Action_1

Retrieve_Action_1 (to chat_manager):

```python

import requests

from datetime import datetime, timedelta

import xml.etree.ElementTree as ET

def get_arxiv_papers(topic, days=7, max_results=10):

# Calculate the date from one week ago

end_date = datetime.utcnow()

start_date = end_date - timedelta(days=days)

# Format dates for arXiv's API query

start_date_str = start_date.strftime('%Y%m%d')

end_date_str = end_date.strftime('%Y%m%d')

# Define the arXiv API URL

url = f'http://export.arxiv.org/api/query?search_query=all:{topic}&start=0&max_results={max_results}&sortBy=submittedDate&sortOrder=descending'

response = requests.get(url)

if response.status_code == 200:

# Parse the XML response

root = ET.fromstring(response.content)

entries = root.findall('{http://www.w3.org/2005/Atom}entry')

for entry in entries:

title = entry.find('{http://www.w3.org/2005/Atom}title').text

authors = [author.find('{http://www.w3.org/2005/Atom}name').text for author in entry.findall('{http://www.w3.org/2005/Atom}author')]

abstract = entry.find('{http://www.w3.org/2005/Atom}summary').text

link = entry.find('{http://www.w3.org/2005/Atom}id').text

print(f'Title: {title}')

print(f'Authors: {", ".join(authors)}')

print(f'Abstract: {abstract}')

print(f'Link: {link}\n')

else:

print(f'Error fetching data: {response.status_code}')

# Specify the topic

get_arxiv_papers('LLM applications') ```

--------------------------------------------------------------------------------

Next speaker: Retrieve_Action_2

>>>>>>>> EXECUTING CODE BLOCK 0 (inferred language is python)...

Retrieve_Action_2 (to chat_manager):

exitcode: 0 (execution succeeded)

Code output:

Title: Learning Streaming Video Representation via Multitask Training

Authors: Yibin Yan, Jilan Xu, Shangzhe Di, Yikun Liu, Yudi Shi, Qirui Chen, Zeqian Li, Yifei Huang, Weidi Xie

Abstract: Understanding continuous video streams plays a fundamental role in real-time

applications including embodied AI and autonomous driving. Unlike offline video

understanding, streaming video understanding requires the ability to process

video streams frame by frame, preserve historical information, and make

low-latency decisions.To address these challenges, our main contributions are

three-fold. (i) We develop a novel streaming video backbone, termed as

StreamFormer, by incorporating causal temporal attention into a pre-trained

vision transformer. This enables efficient streaming video processing while

maintaining image representation capability.(ii) To train StreamFormer, we

propose to unify diverse spatial-temporal video understanding tasks within a

multitask visual-language alignment framework. Hence, StreamFormer learns

global semantics, temporal dynamics, and fine-grained spatial relationships

simultaneously. (iii) We conduct extensive experiments on online action

detection, online video instance segmentation, and video question answering.

StreamFormer achieves competitive results while maintaining efficiency,

demonstrating its potential for real-time applications.

Link: http://arxiv.org/abs/2504.20041v1

Title: AutoJudge: Judge Decoding Without Manual Annotation

Authors: Roman Garipov, Fedor Velikonivtsev, Ruslan Svirschevski, Vage Egiazarian, Max Ryabinin

Abstract: We introduce AutoJudge, a framework that accelerates large language model

(LLM) inference with task-specific lossy speculative decoding. Instead of

matching the original model output distribution token-by-token, we identify

which of the generated tokens affect the downstream quality of the generated

response, relaxing the guarantee so that the "unimportant" tokens can be

generated faster. Our approach relies on a semi-greedy search algorithm to test

which of the mismatches between target and draft model should be corrected to

preserve quality, and which ones may be skipped. We then train a lightweight

classifier based on existing LLM embeddings to predict, at inference time,

which mismatching tokens can be safely accepted without compromising the final

answer quality. We test our approach with Llama 3.2 1B (draft) and Llama 3.1 8B

(target) models on zero-shot GSM8K reasoning, where it achieves up to 1.5x more

accepted tokens per verification cycle with under 1% degradation in answer

accuracy compared to standard speculative decoding and over 2x with small loss

in accuracy. When applied to the LiveCodeBench benchmark, our approach

automatically detects other, programming-specific important tokens and shows

similar speedups, demonstrating its ability to generalize across tasks.

Link: http://arxiv.org/abs/2504.20039v1

Title: Cam-2-Cam: Exploring the Design Space of Dual-Camera Interactions for

Smartphone-based Augmented Reality

Authors: Brandon Woodard, Melvin He, Mose Sakashita, Jing Qian, Zainab Iftikhar, Joseph LaViola Jr

Abstract: Off-the-shelf smartphone-based AR systems typically use a single front-facing

or rear-facing camera, which restricts user interactions to a narrow field of

view and small screen size, thus reducing their practicality. We present

\textit{Cam-2-Cam}, an interaction concept implemented in three

smartphone-based AR applications with interactions that span both cameras.

Results from our qualitative analysis conducted on 30 participants presented

two major design lessons that explore the interaction space of smartphone AR

while maintaining critical AR interface attributes like embodiment and

immersion: (1) \textit{Balancing Contextual Relevance and Feedback Quality}

serves to outline a delicate balance between implementing familiar interactions

people do in the real world and the quality of multimodal AR responses and (2)

\textit{Preventing Disorientation using Simultaneous Capture and Alternating

Cameras} which details how to prevent disorientation during AR interactions

using the two distinct camera techniques we implemented in the paper.

Additionally, we consider observed user assumptions or natural tendencies to

inform future implementations of dual-camera setups for smartphone-based AR. We

envision our design lessons as an initial pioneering step toward expanding the

interaction space of smartphone-based AR, potentially driving broader adoption

and overcoming limitations of single-camera AR.

Link: http://arxiv.org/abs/2504.20035v1

Title: More Clear, More Flexible, More Precise: A Comprehensive Oriented Object

Detection benchmark for UAV

Authors: Kai Ye, Haidi Tang, Bowen Liu, Pingyang Dai, Liujuan Cao, Rongrong Ji

Abstract: Applications of unmanned aerial vehicle (UAV) in logistics, agricultural

automation, urban management, and emergency response are highly dependent on

oriented object detection (OOD) to enhance visual perception. Although existing

datasets for OOD in UAV provide valuable resources, they are often designed for

specific downstream tasks.Consequently, they exhibit limited generalization

performance in real flight scenarios and fail to thoroughly demonstrate

algorithm effectiveness in practical environments. To bridge this critical gap,

we introduce CODrone, a comprehensive oriented object detection dataset for

UAVs that accurately reflects real-world conditions. It also serves as a new

benchmark designed to align with downstream task requirements, ensuring greater

applicability and robustness in UAV-based OOD.Based on application

requirements, we identify four key limitations in current UAV OOD datasets-low

image resolution, limited object categories, single-view imaging, and

restricted flight altitudes-and propose corresponding improvements to enhance

their applicability and robustness.Furthermore, CODrone contains a broad

spectrum of annotated images collected from multiple cities under various

lighting conditions, enhancing the realism of the benchmark. To rigorously

evaluate CODrone as a new benchmark and gain deeper insights into the novel

challenges it presents, we conduct a series of experiments based on 22

classical or SOTA methods.Our evaluation not only assesses the effectiveness of

CODrone in real-world scenarios but also highlights key bottlenecks and

opportunities to advance OOD in UAV applications.Overall, CODrone fills the

data gap in OOD from UAV perspective and provides a benchmark with enhanced

generalization capability, better aligning with practical applications and

future algorithm development.

Link: http://arxiv.org/abs/2504.20032v1

Title: All-Subsets Important Separators with Applications to Sample Sets,

Balanced Separators and Vertex Sparsifiers in Directed Graphs

Authors: Aditya Anand, Euiwoong Lee, Jason Li, Thatchaphol Saranurak

Abstract: Given a directed graph $G$ with $n$ vertices and $m$ edges, a parameter $k$

and two disjoint subsets $S,T \subseteq V(G)$, we show that the number of

all-subsets important separators, which is the number of $A$-$B$ important

vertex separators of size at most $k$ over all $A \subseteq S$ and $B \subseteq

T$, is at most $\beta(|S|, |T|, k) = 4^k {|S| \choose \leq k} {|T| \choose \leq

2k}$, where ${x \choose \leq c} = \sum_{i = 1}^c {x \choose i}$, and that they

can be enumerated in time $O(\beta(|S|,|T|,k)k^2(m+n))$. This is a

generalization of the folklore result stating that the number of $A$-$B$

important separators for two fixed sets $A$ and $B$ is at most $4^k$ (first

implicitly shown by Chen, Liu and Lu Algorithmica '09). From this result, we

obtain the following applications: We give a construction for detection sets

and sample sets in directed graphs, generalizing the results of Kleinberg

(Internet Mathematics' 03) and Feige and Mahdian (STOC' 06) to directed graphs.

Via our new sample sets, we give the first FPT algorithm for finding balanced

separators in directed graphs parameterized by $k$, the size of the separator.

Our algorithm runs in time $2^{O(k)} (m + n)$. We also give a $O({\sqrt{\log

k}})$ approximation algorithm for the same problem. Finally, we present new

results on vertex sparsifiers for preserving small cuts.

Link: http://arxiv.org/abs/2504.20027v1

Title: Better To Ask in English? Evaluating Factual Accuracy of Multilingual

LLMs in English and Low-Resource Languages

Authors: Pritika Rohera, Chaitrali Ginimav, Gayatri Sawant, Raviraj Joshi

Abstract: Multilingual Large Language Models (LLMs) have demonstrated significant

effectiveness across various languages, particularly in high-resource languages

such as English. However, their performance in terms of factual accuracy across

other low-resource languages, especially Indic languages, remains an area of

investigation. In this study, we assess the factual accuracy of LLMs - GPT-4o,

Gemma-2-9B, Gemma-2-2B, and Llama-3.1-8B - by comparing their performance in

English and Indic languages using the IndicQuest dataset, which contains

question-answer pairs in English and 19 Indic languages. By asking the same

questions in English and their respective Indic translations, we analyze

whether the models are more reliable for regional context questions in Indic

languages or when operating in English. Our findings reveal that LLMs often

perform better in English, even for questions rooted in Indic contexts.

Notably, we observe a higher tendency for hallucination in responses generated

in low-resource Indic languages, highlighting challenges in the multilingual

understanding capabilities of current LLMs.

Link: http://arxiv.org/abs/2504.20022v1

Title: Modular Machine Learning: An Indispensable Path towards New-Generation

Large Language Models

Authors: Xin Wang, Haoyang Li, Zeyang Zhang, Haibo Chen, Wenwu Zhu

Abstract: Large language models (LLMs) have dramatically advanced machine learning

research including natural language processing, computer vision, data mining,

etc., yet they still exhibit critical limitations in reasoning, factual

consistency, and interpretability. In this paper, we introduce a novel learning

paradigm -- Modular Machine Learning (MML) -- as an essential approach toward

new-generation LLMs. MML decomposes the complex structure of LLMs into three

interdependent components: modular representation, modular model, and modular

reasoning, aiming to enhance LLMs' capability of counterfactual reasoning,

mitigating hallucinations, as well as promoting fairness, safety, and

transparency. Specifically, the proposed MML paradigm can: i) clarify the

internal working mechanism of LLMs through the disentanglement of semantic

components; ii) allow for flexible and task-adaptive model design; iii) enable

interpretable and logic-driven decision-making process. We present a feasible

implementation of MML-based LLMs via leveraging advanced techniques such as

disentangled representation learning, neural architecture search and

neuro-symbolic learning. We critically identify key challenges, such as the

integration of continuous neural and discrete symbolic processes, joint

optimization, and computational scalability, present promising future research

directions that deserve further exploration. Ultimately, the integration of the

MML paradigm with LLMs has the potential to bridge the gap between statistical

(deep) learning and formal (logical) reasoning, thereby paving the way for

robust, adaptable, and trustworthy AI systems across a wide range of real-world

applications.

Link: http://arxiv.org/abs/2504.20020v1

Title: MINT: Multi-Vector Search Index Tuning

Authors: Jiongli Zhu, Yue Wang, Bailu Ding, Philip A. Bernstein, Vivek Narasayya, Surajit Chaudhuri

Abstract: Vector search plays a crucial role in many real-world applications. In

addition to single-vector search, multi-vector search becomes important for

multi-modal and multi-feature scenarios today. In a multi-vector database, each

row is an item, each column represents a feature of items, and each cell is a

high-dimensional vector. In multi-vector databases, the choice of indexes can

have a significant impact on performance. Although index tuning for relational

databases has been extensively studied, index tuning for multi-vector search

remains unclear and challenging. In this paper, we define multi-vector search

index tuning and propose a framework to solve it. Specifically, given a

multi-vector search workload, we develop algorithms to find indexes that

minimize latency and meet storage and recall constraints. Compared to the

baseline, our latency achieves 2.1X to 8.3X speedup.

Link: http://arxiv.org/abs/2504.20018v1

Title: Applying LLM-Powered Virtual Humans to Child Interviews in

Child-Centered Design

Authors: Linshi Li, Hanlin Cai

Abstract: In child-centered design, directly engaging children is crucial for deeply

understanding their experiences. However, current research often prioritizes

adult perspectives, as interviewing children involves unique challenges such as

environmental sensitivities and the need for trust-building. AI-powered virtual

humans (VHs) offer a promising approach to facilitate engaging and multimodal

interactions with children. This study establishes key design guidelines for

LLM-powered virtual humans tailored to child interviews, standardizing

multimodal elements including color schemes, voice characteristics, facial

features, expressions, head movements, and gestures. Using ChatGPT-based prompt

engineering, we developed three distinct Human-AI workflows (LLM-Auto,

LLM-Interview, and LLM-Analyze) and conducted a user study involving 15

children aged 6 to 12. The results indicated that the LLM-Analyze workflow

outperformed the others by eliciting longer responses, achieving higher user

experience ratings, and promoting more effective child engagement.

Link: http://arxiv.org/abs/2504.20016v1

Title: LLM-Generated Fake News Induces Truth Decay in News Ecosystem: A Case

Study on Neural News Recommendation

Authors: Beizhe Hu, Qiang Sheng, Juan Cao, Yang Li, Danding Wang

Abstract: Online fake news moderation now faces a new challenge brought by the

malicious use of large language models (LLMs) in fake news production. Though

existing works have shown LLM-generated fake news is hard to detect from an

individual aspect, it remains underexplored how its large-scale release will

impact the news ecosystem. In this study, we develop a simulation pipeline and

a dataset with ~56k generated news of diverse types to investigate the effects

of LLM-generated fake news within neural news recommendation systems. Our

findings expose a truth decay phenomenon, where real news is gradually losing

its advantageous position in news ranking against fake news as LLM-generated

news is involved in news recommendation. We further provide an explanation

about why truth decay occurs from a familiarity perspective and show the

positive correlation between perplexity and news ranking. Finally, we discuss

the threats of LLM-generated fake news and provide possible countermeasures. We

urge stakeholders to address this emerging challenge to preserve the integrity

of news ecosystems.

Link: http://arxiv.org/abs/2504.20013v1

--------------------------------------------------------------------------------

Next speaker: Research_Action_1

Research_Action_1 (to chat_manager):

Here is the markdown table summarizing the LLM applications papers retrieved from the last week:

| Domain | Title | Authors | Summary | Link |

|----------------------|-------------------------------------------------------------------------------------------------------------------------|---------------------------------------------------------------------------------------------------------------------------|---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|--------------------------------------------|

| Multimedia | Learning Streaming Video Representation via Multitask Training | Yibin Yan, Jilan Xu, Shangzhe Di, Yikun Liu, Yudi Shi, Qirui Chen, Zeqian Li, Yifei Huang, Weidi Xie | This paper proposes a streaming video backbone named StreamFormer that integrates causal temporal attention for efficient real-time video understanding, maintaining historical context and low-latency decisions. It also unifies various spatial-temporal tasks within a multitask framework, demonstrating efficient performance across online video tasks. | [Link](http://arxiv.org/abs/2504.20041v1) |

| Natural Language | AutoJudge: Judge Decoding Without Manual Annotation | Roman Garipov, Fedor Velikonivtsev, Ruslan Svirschevski, Vage Egiazarian, Max Ryabinin | AutoJudge accelerates LLM inference with lossy speculative decoding by identifying and skipping "unimportant" tokens, achieving significant speedups while maintaining answer quality. The framework demonstrates effectiveness across coding tasks, suggesting it offers a promising generalization across various tasks. | [Link](http://arxiv.org/abs/2504.20039v1) |

| Augmented Reality | Cam-2-Cam: Exploring the Design Space of Dual-Camera Interactions for Smartphone-based Augmented Reality | Brandon Woodard, Melvin He, Mose Sakashita, Jing Qian, Zainab Iftikhar, Joseph LaViola Jr | This work introduces Cam-2-Cam dual-camera interactions for smartphone AR, highlighting design lessons that enhance user experience by balancing feedback quality and preventing disorientation. It aims to broaden the interaction possibilities of smartphone AR systems. | [Link](http://arxiv.org/abs/2504.20035v1) |

| UAV Detection | More Clear, More Flexible, More Precise: A Comprehensive Oriented Object Detection benchmark for UAV | Kai Ye, Haidi Tang, Bowen Liu, Pingyang Dai, Liujuan Cao, Rongrong Ji | The paper presents CODrone, an oriented object detection dataset designed for UAVs that addresses limitations of current datasets while providing a new benchmark for enhanced realism and applicability. It serves to improve algorithm performance in real-world UAV applications, discussing challenges and future opportunities in oriented object detection. | [Link](http://arxiv.org/abs/2504.20032v1) |

| Graph Theory | All-Subsets Important Separators with Applications to Sample Sets, Balanced Separators and Vertex Sparsifiers in Directed Graphs | Aditya Anand, Euiwoong Lee, Jason Li, Thatchaphol Saranurak | This study generalizes important node separator findings in directed graphs, elaborating new constructions for detection and sample sets. It shares implications for finding balanced separators and presents algorithms impacting vertex sparsifiers while enhancing understanding of vertex relationships in directed graph structures. | [Link](http://arxiv.org/abs/2504.20027v1) |

| Multilingual Models | Better To Ask in English? Evaluating Factual Accuracy of Multilingual LLMs in English and Low-Resource Languages | Pritika Rohera, Chaitrali Ginimav, Gayatri Sawant, Raviraj Joshi | The study investigates the factual accuracy of multilingual LLMs by comparing their performance in English versus low-resource languages. The findings indicate that models often perform better in English, revealing challenges in low-resource languages, including a higher hallucination rate in responses. | [Link](http://arxiv.org/abs/2504.20022v1) |

| Modular ML | Modular Machine Learning: An Indispensable Path towards New-Generation Large Language Models | Xin Wang, Haoyang Li, Zeyang Zhang, Haibo Chen, Wenwu Zhu | This paper presents a Modular Machine Learning paradigm, dissecting LLMs into components to enhance reasoning, transparency, and flexibility. It suggests deep integrations of neural and symbolic processes, urging future research on overcoming challenges in model design and interpretability. | [Link](http://arxiv.org/abs/2504.20020v1) |

| Multi-Vector Search | MINT: Multi-Vector Search Index Tuning | Jiongli Zhu, Yue Wang, Bailu Ding, Philip A. Bernstein, Vivek Narasayya, Surajit Chaudhuri | The authors define multi-vector search index tuning for improving search performance in multi-vector databases. The proposed framework aims to optimize indexes that significantly reduce latency compared to baseline methods, paving the way for better performance in multi-modal applications. | [Link](http://arxiv.org/abs/2504.20018v1) |

| Child Psychology | Applying LLM-Powered Virtual Humans to Child Interviews in Child-Centered Design | Linshi Li, Hanlin Cai | This study demonstrates the use of LLM-powered virtual humans tailored for child-centered design interviews. It sets design guidelines and shows how LLM workflows can enhance child engagement during interviews, outperforming traditional methods in terms of eliciting responses and user experiences. | [Link](http://arxiv.org/abs/2504.20016v1) |

| News Ecosystem | LLM-Generated Fake News Induces Truth Decay in News Ecosystem: A Case Study on Neural News Recommendation | Beizhe Hu, Qiang Sheng, Juan Cao, Yang Li, Danding Wang | This research explores the impact of LLM-generated fake news on the news recommendation ecosystem, revealing a "truth decay" effect. It highlights the challenges of moderating fake news and suggests countermeasures to preserve news integrity, showing how generated content may disrupt real news ranking within recommendation systems. | [Link](http://arxiv.org/abs/2504.20013v1) |

Feel free to use or modify this table for your needs!

--------------------------------------------------------------------------------

>>>>>>>> TERMINATING RUN (c8b7aa31-32e1-47e6-909c-bef2495440b9): No next speaker selected