Porting llama.cpp to the Raspberry Pi 5 (running Llama 3).

Reference: https://github.com/ggerganov/llama.cpp/blob/master/docs/build.md

<Raspberry Pi 5, 8 GB RAM model>

- Install from git

- git clone https://github.com/ggerganov/llama.cpp.git

- cd llama.cpp

- make -j

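The `make -j` build above is how llama.cpp was built when these notes were written. Newer revisions of the referenced build.md describe a CMake build instead, so if `make` fails on a fresh clone, something like the sketch below may help. The function name `build_llama_cpp` and the `DRY_RUN` switch are hypothetical helpers added for illustration, and `-j 4` assumes the Pi 5's four CPU cores.

```shell
#!/usr/bin/env bash
# Sketch: build llama.cpp with CMake (assumed to match the current build.md).
# Hypothetical helper. Set DRY_RUN=1 to print the commands instead of running them.
build_llama_cpp() {
  local run=""
  if [ "${DRY_RUN:-0}" = "1" ]; then
    run="echo"
  fi
  $run git clone https://github.com/ggerganov/llama.cpp.git || return
  $run cd llama.cpp || return
  # Configure into ./build, then compile a Release build with 4 parallel jobs
  $run cmake -B build || return
  $run cmake --build build --config Release -j 4
}
```

Call `DRY_RUN=1 build_llama_cpp` to preview the steps, or `build_llama_cpp` to actually build. With a CMake build the executables are placed in `build/bin/`, so `./llama-cli` in the rest of these notes would become `./build/bin/llama-cli`.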

Getting the weights.

- Download the weights as a GGUF file from Hugging Face

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It replaces GGML, which is no longer supported by llama.cpp. GGUF offers numerous advantages over GGML, such as better tokenisation and support for special tokens. It also supports metadata and is designed to be extensible.
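Because a GGUF file begins with the four ASCII bytes `GGUF`, a quick header check can catch a download that silently saved something else (for example an HTML error page) before the file is handed to llama-cli. `is_gguf` is a hypothetical helper, and the path assumes the file was placed in `models/`.

```shell
#!/usr/bin/env bash
# is_gguf FILE: succeed if FILE starts with the 4-byte ASCII magic "GGUF".
# Hypothetical helper; it checks only the header, not the rest of the file.
is_gguf() {
  [ "$(head -c 4 -- "$1" 2>/dev/null)" = "GGUF" ]
}

f=models/Meta-Llama-3.1-8B-Instruct-Q4_K_M.gguf   # assumed download location
if is_gguf "$f"; then
  echo "$f: GGUF header OK"
else
  echo "$f: missing or not a GGUF file (re-check the download URL)"
fi
```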

wget -P models https://huggingface.co/lmstudio-community/Meta-Llama-3.1-8B-Instruct-GGUF/resolve/main/Meta-Llama-3.1-8B-Instruct-Q4_K_M.gguf

After the download, check that it runs with the command below.

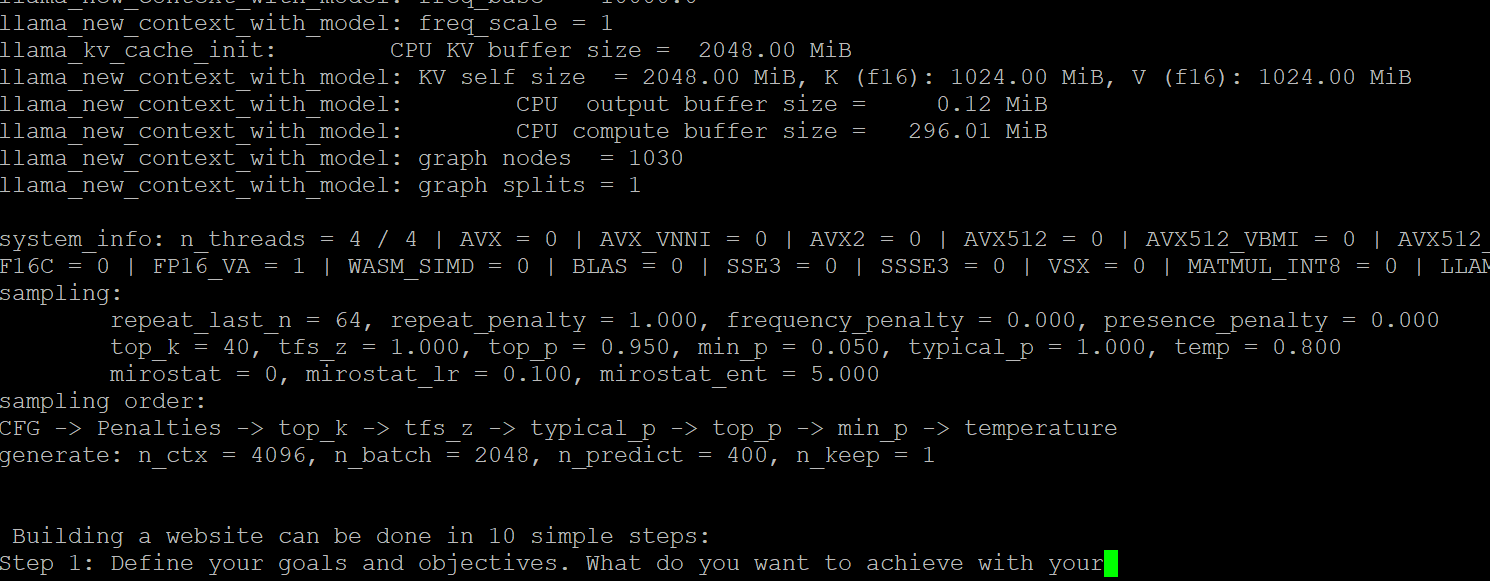

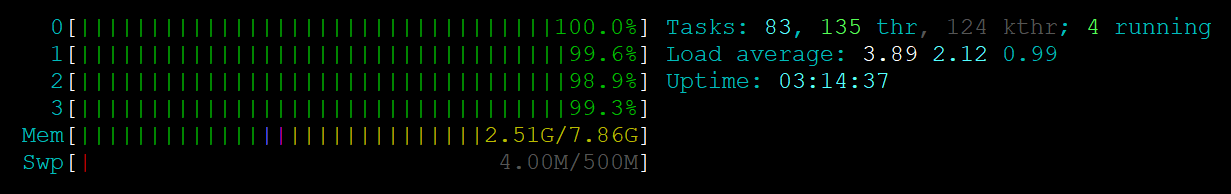

./llama-cli -m models/Meta-Llama-3.1-8B-Instruct-Q4_K_M.gguf -p "What is life?" -n 400 -e


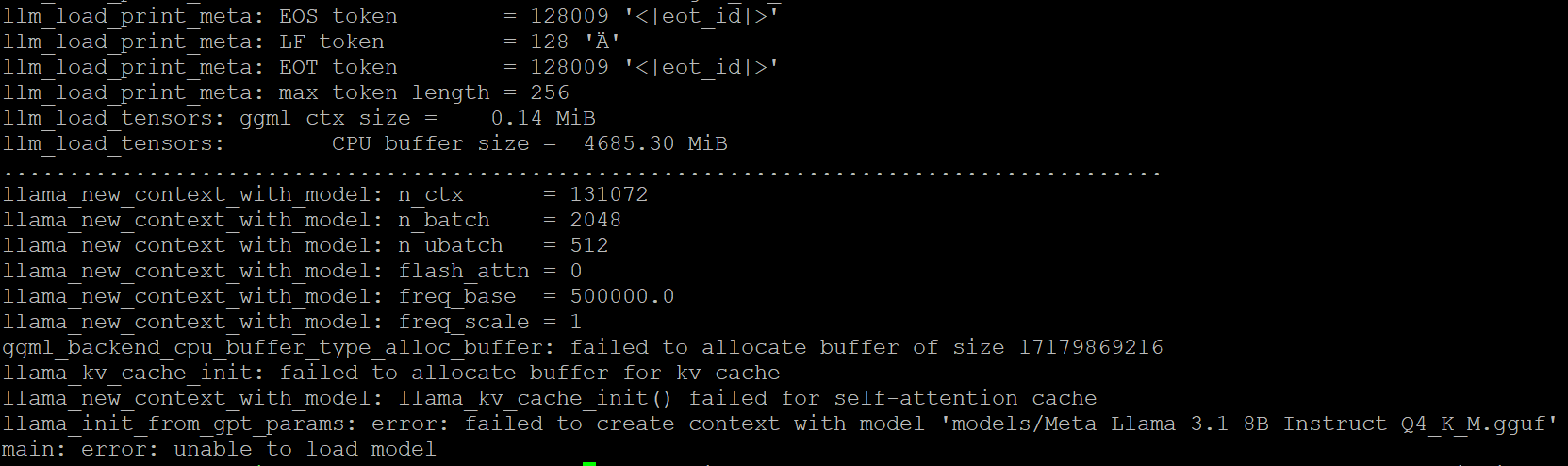

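The run failed with an out-of-memory error for the CPU buffer: 17179869216 bytes, i.e. just over 16 GiB (2^34 = 17179869184 bytes), about twice the Pi's 8 GB of RAM.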
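The size in the error most likely comes from the KV cache rather than the weights. Assuming llama-cli fell back to the model's full 131072-token training context (no `-c` was given) and used an f16 cache, Llama 3.1 8B (32 layers, 8 KV heads, head dimension 128) needs one K and one V tensor per layer, each holding n_kv_head × head_dim values per token. The formula in this sketch is an assumption, not code taken from llama.cpp.

```shell
#!/usr/bin/env bash
# Estimate the KV-cache size in bytes (assumed formula).
# Usage: kv_cache_bytes N_LAYER N_KV_HEAD HEAD_DIM N_CTX [BYTES_PER_ELEM]
kv_cache_bytes() {
  local n_layer=$1 n_kv_head=$2 head_dim=$3 n_ctx=$4 elem=${5:-2}  # 2 bytes = f16
  # Leading factor 2: one K tensor and one V tensor per layer
  echo $(( 2 * n_layer * n_kv_head * head_dim * n_ctx * elem ))
}

# Llama 3.1 8B at its 131072-token training context
bytes=$(kv_cache_bytes 32 8 128 131072)
echo "$bytes bytes = $(( bytes / 1024 / 1024 / 1024 )) GiB"   # prints: 17179869184 bytes = 16 GiB
```

This lands 32 bytes short of the reported 17179869216; the remainder is presumably alignment padding. The Q4_K_M weights themselves are only roughly 5 GB, so it is the full-length cache that cannot fit in 8 GB.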

Switching to a somewhat smaller model (Llama 2 7B chat, Q4_K_S) made it run:

wget https://huggingface.co/zhentaoyu/Llama-2-7b-chat-hf-Q4_K_S-GGUF/resolve/main/llama-2-7b-chat-hf-q4_k_s.gguf
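A likely reason the Llama 2 model runs is its much shorter 4096-token training context rather than its slightly smaller weights. Llama 2 7B has 32 layers and 32 KV heads (no grouped-query attention) with head dimension 128. Using the same assumed f16 formula, this sketch compares its cache with that of Llama 3.1 8B capped at 4096 tokens, which llama-cli's `-c` (`--ctx-size`) option would do. The `-c 4096` run in the comment was not tested in these notes.

```shell
#!/usr/bin/env bash
# Assumed KV-cache formula: 2 (K and V) * layers * kv_heads * head_dim * ctx * bytes
kv_cache_bytes() {
  local n_layer=$1 n_kv_head=$2 head_dim=$3 n_ctx=$4 elem=${5:-2}  # 2 bytes = f16
  echo $(( 2 * n_layer * n_kv_head * head_dim * n_ctx * elem ))
}
to_mib() { echo $(( $1 / 1024 / 1024 )); }

echo "Llama 2 7B,   ctx 4096: $(to_mib "$(kv_cache_bytes 32 32 128 4096)") MiB"  # 2048 MiB
echo "Llama 3.1 8B, ctx 4096: $(to_mib "$(kv_cache_bytes 32 8 128 4096)") MiB"   # 512 MiB

# Untested suggestion for the 8 GB Pi: cap the context explicitly, e.g.
#   ./llama-cli -m models/Meta-Llama-3.1-8B-Instruct-Q4_K_M.gguf -p "What is life?" -n 400 -e -c 4096
```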