이상치 탐지

Workshop Assessment

Welcome to the assessment section of this course. In the previous labs you successfully applied machine learning and deep learning techniques for the task of anomaly detection on network packet data. Equipped with this background, you can apply these techniques to any type of data (images or audio) across different use cases. In this assessment, you will apply supervised and unsupervised techniques for intrusion detection on the NSL KDD dataset.

If you are successfully able to complete this assessment, you will be able to generate a certificate of competency for the course. Good luck!

Objectives

This assessment seeks to test the following concepts:

Building and training an Xgboost model.

Building and training an autoencoder neural network.

Detecting anomalies using different thresholding methods.

The total duration of the assessment is 2 hrs, however, if you are unable to complete the assessment today, you are more than welcome to return to it at a later time to try and complete it then.

Section 1: Preparation - Done for You

The Dataset

We will be using the NSL-KDD dataset published by the University of New Brunswick in this assessment. While the dataset is similar to the KDD dataset used throughout the workshop in terms of the features used, it varies in the following respects:

Removal of redundant and duplicate records in the dataset to prevent classifiers from overfitting a particular class.

The number of selected records from each difficulty level group is inversely proportional to the percentage of records in the original KDD data set making the task of unsupervised classification slightly more challenging.

Imports

import numpy as np

import pandas as pd

import os

import random as python_random

import xgboost as xgb

import matplotlib.pyplot as plt

import pickle

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import optimizers

from tensorflow.keras.models import Model

from tensorflow.keras.layers import Input, Dense, Dropout

from tensorflow.keras.utils import plot_model

from collections import OrderedDict

from sklearn.preprocessing import LabelEncoder

from sklearn.preprocessing import MinMaxScaler

from sklearn.model_selection import train_test_split

from sklearn.metrics import roc_curve, auc, confusion_matrix

from sklearn.metrics import roc_auc_score,confusion_matrix,classification_report,roc_curve

We will use our own accuracy score functions for the sake of grading this assessment

from assessment import xgb_accuracy_score, autoencoder_accuracy_score

from tensorflow.keras.models import load_model, model_from_json

np.random.seed(42)

python_random.seed(42)

tf.random.set_seed(42)

os.environ['PYTHONHASHSEED']=str(42)

Load the Data

col_names = ["duration","protocol_type","service","flag","src_bytes","dst_bytes","land","wrong_fragment","urgent","hot","num_failed_logins","logged_in",

"num_compromised","root_shell","su_attempted","num_root","num_file_creations","num_shells","num_access_files","num_outbound_cmds",

"is_host_login","is_guest_login","count","srv_count","serror_rate","srv_serror_rate","rerror_rate","srv_rerror_rate","same_srv_rate",

"diff_srv_rate","srv_diff_host_rate","dst_host_count","dst_host_srv_count","dst_host_same_srv_rate","dst_host_diff_srv_rate",

"dst_host_same_src_port_rate","dst_host_srv_diff_host_rate","dst_host_serror_rate","dst_host_srv_serror_rate","dst_host_rerror_rate",

"dst_host_srv_rerror_rate","label"]

df = pd.read_csv("data/KDDTrain+_20Percent.txt", header=None, names=col_names, index_col=False)

text_l = ['protocol_type', 'service', 'flag', 'land', 'logged_in', 'is_host_login', 'is_guest_login']

df.head(5)

duration protocol_type service flag src_bytes dst_bytes land wrong_fragment urgent hot ... dst_host_srv_count dst_host_same_srv_rate dst_host_diff_srv_rate dst_host_same_src_port_rate dst_host_srv_diff_host_rate dst_host_serror_rate dst_host_srv_serror_rate dst_host_rerror_rate dst_host_srv_rerror_rate label

0 0 tcp ftp_data SF 491 0 0 0 0 0 ... 25 0.17 0.03 0.17 0.00 0.00 0.00 0.05 0.00 normal

1 0 udp other SF 146 0 0 0 0 0 ... 1 0.00 0.60 0.88 0.00 0.00 0.00 0.00 0.00 normal

2 0 tcp private S0 0 0 0 0 0 0 ... 26 0.10 0.05 0.00 0.00 1.00 1.00 0.00 0.00 neptune

3 0 tcp http SF 232 8153 0 0 0 0 ... 255 1.00 0.00 0.03 0.04 0.03 0.01 0.00 0.01 normal

4 0 tcp http SF 199 420 0 0 0 0 ... 255 1.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 normal

5 rows × 42 columns

Describe the different classes of Labels

pd.DataFrame(df['label'].value_counts())

label

normal 13449

neptune 8282

ipsweep 710

satan 691

portsweep 587

smurf 529

nmap 301

back 196

teardrop 188

warezclient 181

pod 38

guess_passwd 10

warezmaster 7

buffer_overflow 6

imap 5

rootkit 4

multihop 2

phf 2

spy 1

loadmodule 1

ftp_write 1

land 1

Data Preprocessing

Create one-hot encoded categorical columns in the dataset

cat_vars = ['protocol_type', 'service', 'flag', 'land', 'logged_in','is_host_login', 'is_guest_login']

Find unique labels for each category

cat_data = pd.get_dummies(df[cat_vars])

Check that the categorical variables were created correctly

cat_data.head()

land logged_in is_host_login is_guest_login protocol_type_icmp protocol_type_tcp protocol_type_udp service_IRC service_X11 service_Z39_50 ... flag_REJ flag_RSTO flag_RSTOS0 flag_RSTR flag_S0 flag_S1 flag_S2 flag_S3 flag_SF flag_SH

0 0 0 0 0 0 1 0 0 0 0 ... 0 0 0 0 0 0 0 0 1 0

1 0 0 0 0 0 0 1 0 0 0 ... 0 0 0 0 0 0 0 0 1 0

2 0 0 0 0 0 1 0 0 0 0 ... 0 0 0 0 1 0 0 0 0 0

3 0 1 0 0 0 1 0 0 0 0 ... 0 0 0 0 0 0 0 0 1 0

4 0 1 0 0 0 1 0 0 0 0 ... 0 0 0 0 0 0 0 0 1 0

5 rows × 84 columns

Separate the numerical columns

numeric_vars = list(set(df.columns.values.tolist()) - set(cat_vars))

numeric_vars.remove('label')

numeric_data = df[numeric_vars].copy()

Check that the numeric data has been captured accurately

numeric_data.head()

num_outbound_cmds srv_count dst_host_srv_serror_rate dst_host_count count serror_rate dst_host_srv_count dst_host_same_srv_rate dst_host_serror_rate dst_host_same_src_port_rate ... num_access_files dst_host_rerror_rate dst_bytes urgent num_failed_logins num_file_creations srv_rerror_rate dst_host_srv_diff_host_rate diff_srv_rate srv_serror_rate

0 0 2 0.00 150 2 0.0 25 0.17 0.00 0.17 ... 0 0.05 0 0 0 0 0.0 0.00 0.00 0.0

1 0 1 0.00 255 13 0.0 1 0.00 0.00 0.88 ... 0 0.00 0 0 0 0 0.0 0.00 0.15 0.0

2 0 6 1.00 255 123 1.0 26 0.10 1.00 0.00 ... 0 0.00 0 0 0 0 0.0 0.00 0.07 1.0

3 0 5 0.01 30 5 0.2 255 1.00 0.03 0.03 ... 0 0.00 8153 0 0 0 0.0 0.04 0.00 0.2

4 0 32 0.00 255 30 0.0 255 1.00 0.00 0.00 ... 0 0.00 420 0 0 0 0.0 0.00 0.00 0.0

5 rows × 34 columns

numeric_cat_data = pd.concat([numeric_data, cat_data], axis=1)

Assessment Task 1: Data Selection

The first part of this assessment checks whether you understand the data you are working with. If successful, you should be able to load and split the data in order to begin learning from it.

In the code block below, replace each #### FIX ME #### with solutions which:

Determine the number of classes in the dataset.

Set the variable test_size to the fraction of the dataset you would like to use for testing.

Capture the labels

labels = df['label'].copy()

Convert labels to integers

le = LabelEncoder()

integer_labels = le.fit_transform(labels)

num_labels = len(np.unique(integer_labels))

Split data into test and train

x_train, x_test, y_train, y_test = train_test_split(numeric_cat_data,

integer_labels,

test_size= 0.2,

random_state= 42)

print(x_train.shape)

print(y_train.shape)

print(x_test.shape)

print(y_test.shape)

(20153, 118)

(20153,)

(5039, 118)

(5039,)

Make sure to only fit the the scaler on the training data

scaler = MinMaxScaler()

x_train = scaler.fit_transform(x_train)

x_test = scaler.transform(x_test)

Convert the data to FP32

x_train = x_train.astype(np.float32)

x_test = x_test.astype(np.float32)

Assessment Task 2 : XGBoost - Set the XGBoost Parameters

Treat the question as a multi-class supervised learning problem and train a GPU-accelerated XGBoost model on the given dataset. Refer to the documentation or your previous tasks to fix the parameter list. You may reference the notebooks from previous sections by opening the file explorer on the left-hand side of the JupyterLab screen.

This task checks that you know how these parameters impact training.

FIX ME ###,

params = {

'num_rounds': 100,

'max_depth': 6,

'max_leaves': 2**8,

'alpha': 0.9,

'eta': 0.1,

'gamma': 0.1,

'learning_rate': 0.1,

'subsample': 1,

'reg_lambda': 1,

'scale_pos_weight': 2,

'tree_method': 'gpu_hist',

'n_gpus': 1,

'objective': 'multi:softmax',

'num_class': num_labels,

'verbose': True

}

Assessment Task 3: Model Training

In this next task, you will prove that you can build and fit an accelerated XGBoost Model.

Initiate training by referring to the XGBoost API documentation.

Fit the model on test data to obtain the predictions.

model = xgb.train(params, dtrain, num_rounds, evals=evals)

dtrain = xgb.DMatrix(x_train, label=y_train)

dtest = xgb.DMatrix(x_test, label=y_test)

evals = [(dtest, 'test',), (dtrain, 'train')]

num_rounds = params['num_rounds']

model = xgb.train(params, dtrain, num_rounds, evals=evals)

[02:28:13] WARNING: ../src/learner.cc:576:

Parameters: { "n_gpus", "num_rounds", "scale_pos_weight", "verbose" } might not be used.

This could be a false alarm, with some parameters getting used by language bindings but

then being mistakenly passed down to XGBoost core, or some parameter actually being used

but getting flagged wrongly here. Please open an issue if you find any such cases.

[02:28:13] WARNING: ../src/learner.cc:1115: Starting in XGBoost 1.3.0, the default evaluation metric used with the objective 'multi:softmax' was changed from 'merror' to 'mlogloss'. Explicitly set eval_metric if you'd like to restore the old behavior.

[0] test-mlogloss:2.06719 train-mlogloss:2.06762

[1] test-mlogloss:1.70150 train-mlogloss:1.70151

[2] test-mlogloss:1.45209 train-mlogloss:1.45167

[3] test-mlogloss:1.26213 train-mlogloss:1.26124

[4] test-mlogloss:1.10966 train-mlogloss:1.10816

[5] test-mlogloss:0.98295 train-mlogloss:0.98114

[6] test-mlogloss:0.87585 train-mlogloss:0.87361

[7] test-mlogloss:0.78380 train-mlogloss:0.78119

[8] test-mlogloss:0.70373 train-mlogloss:0.70084

[9] test-mlogloss:0.63336 train-mlogloss:0.63026

[10] test-mlogloss:0.57127 train-mlogloss:0.56797

[11] test-mlogloss:0.51631 train-mlogloss:0.51275

[12] test-mlogloss:0.46727 train-mlogloss:0.46349

[13] test-mlogloss:0.42337 train-mlogloss:0.41951

[14] test-mlogloss:0.38401 train-mlogloss:0.38003

[15] test-mlogloss:0.34858 train-mlogloss:0.34455

[16] test-mlogloss:0.31680 train-mlogloss:0.31261

[17] test-mlogloss:0.28815 train-mlogloss:0.28394

[18] test-mlogloss:0.26225 train-mlogloss:0.25798

[19] test-mlogloss:0.23891 train-mlogloss:0.23454

[20] test-mlogloss:0.21782 train-mlogloss:0.21341

[21] test-mlogloss:0.19885 train-mlogloss:0.19434

[22] test-mlogloss:0.18171 train-mlogloss:0.17713

[23] test-mlogloss:0.16615 train-mlogloss:0.16150

[24] test-mlogloss:0.15216 train-mlogloss:0.14739

[25] test-mlogloss:0.13942 train-mlogloss:0.13458

[26] test-mlogloss:0.12784 train-mlogloss:0.12300

[27] test-mlogloss:0.11741 train-mlogloss:0.11251

[28] test-mlogloss:0.10788 train-mlogloss:0.10299

[29] test-mlogloss:0.09928 train-mlogloss:0.09435

[30] test-mlogloss:0.09142 train-mlogloss:0.08651

[31] test-mlogloss:0.08432 train-mlogloss:0.07941

[32] test-mlogloss:0.07789 train-mlogloss:0.07294

[33] test-mlogloss:0.07194 train-mlogloss:0.06698

[34] test-mlogloss:0.06653 train-mlogloss:0.06155

[35] test-mlogloss:0.06164 train-mlogloss:0.05668

[36] test-mlogloss:0.05719 train-mlogloss:0.05221

[37] test-mlogloss:0.05320 train-mlogloss:0.04819

[38] test-mlogloss:0.04953 train-mlogloss:0.04451

[39] test-mlogloss:0.04618 train-mlogloss:0.04116

[40] test-mlogloss:0.04321 train-mlogloss:0.03813

[41] test-mlogloss:0.04047 train-mlogloss:0.03537

[42] test-mlogloss:0.03796 train-mlogloss:0.03282

[43] test-mlogloss:0.03577 train-mlogloss:0.03056

[44] test-mlogloss:0.03374 train-mlogloss:0.02848

[45] test-mlogloss:0.03185 train-mlogloss:0.02658

[46] test-mlogloss:0.03010 train-mlogloss:0.02482

[47] test-mlogloss:0.02856 train-mlogloss:0.02323

[48] test-mlogloss:0.02716 train-mlogloss:0.02176

[49] test-mlogloss:0.02591 train-mlogloss:0.02042

[50] test-mlogloss:0.02477 train-mlogloss:0.01918

[51] test-mlogloss:0.02371 train-mlogloss:0.01805

[52] test-mlogloss:0.02269 train-mlogloss:0.01699

[53] test-mlogloss:0.02179 train-mlogloss:0.01603

[54] test-mlogloss:0.02095 train-mlogloss:0.01514

[55] test-mlogloss:0.02021 train-mlogloss:0.01432

[56] test-mlogloss:0.01950 train-mlogloss:0.01357

[57] test-mlogloss:0.01891 train-mlogloss:0.01289

[58] test-mlogloss:0.01834 train-mlogloss:0.01227

[59] test-mlogloss:0.01781 train-mlogloss:0.01169

[60] test-mlogloss:0.01729 train-mlogloss:0.01117

[61] test-mlogloss:0.01686 train-mlogloss:0.01068

[62] test-mlogloss:0.01644 train-mlogloss:0.01022

[63] test-mlogloss:0.01604 train-mlogloss:0.00979

[64] test-mlogloss:0.01568 train-mlogloss:0.00941

[65] test-mlogloss:0.01534 train-mlogloss:0.00905

[66] test-mlogloss:0.01506 train-mlogloss:0.00872

[67] test-mlogloss:0.01480 train-mlogloss:0.00842

[68] test-mlogloss:0.01456 train-mlogloss:0.00813

[69] test-mlogloss:0.01434 train-mlogloss:0.00787

[70] test-mlogloss:0.01415 train-mlogloss:0.00764

[71] test-mlogloss:0.01396 train-mlogloss:0.00742

[72] test-mlogloss:0.01376 train-mlogloss:0.00720

[73] test-mlogloss:0.01362 train-mlogloss:0.00702

[74] test-mlogloss:0.01348 train-mlogloss:0.00684

[75] test-mlogloss:0.01329 train-mlogloss:0.00666

[76] test-mlogloss:0.01316 train-mlogloss:0.00650

[77] test-mlogloss:0.01303 train-mlogloss:0.00635

[78] test-mlogloss:0.01292 train-mlogloss:0.00621

[79] test-mlogloss:0.01281 train-mlogloss:0.00608

[80] test-mlogloss:0.01272 train-mlogloss:0.00596

[81] test-mlogloss:0.01261 train-mlogloss:0.00584

[82] test-mlogloss:0.01253 train-mlogloss:0.00573

[83] test-mlogloss:0.01247 train-mlogloss:0.00562

[84] test-mlogloss:0.01240 train-mlogloss:0.00554

[85] test-mlogloss:0.01235 train-mlogloss:0.00546

[86] test-mlogloss:0.01226 train-mlogloss:0.00536

[87] test-mlogloss:0.01224 train-mlogloss:0.00529

[88] test-mlogloss:0.01220 train-mlogloss:0.00523

[89] test-mlogloss:0.01218 train-mlogloss:0.00518

[90] test-mlogloss:0.01215 train-mlogloss:0.00512

[91] test-mlogloss:0.01212 train-mlogloss:0.00507

[92] test-mlogloss:0.01209 train-mlogloss:0.00502

[93] test-mlogloss:0.01205 train-mlogloss:0.00498

[94] test-mlogloss:0.01204 train-mlogloss:0.00494

[95] test-mlogloss:0.01201 train-mlogloss:0.00491

[96] test-mlogloss:0.01199 train-mlogloss:0.00486

[97] test-mlogloss:0.01197 train-mlogloss:0.00483

[98] test-mlogloss:0.01196 train-mlogloss:0.00480

[99] test-mlogloss:0.01193 train-mlogloss:0.00476

FIX ME

preds = model.predict(dtest)

print(preds)

true_labels = y_test

true_labels

[17. 11. 9. ... 11. 11. 11.]

array([17, 11, 9, ..., 11, 11, 11])

If predictions > 0.5, pred_labels = 1 else pred_labels = 0

pred_labels = np.argmax(preds, axis=1)

pred_labels = preds.astype(int)

pred_labels

array([17, 11, 9, ..., 11, 11, 11])

Get the accuracy score for your model's predictions. In order to pass this part of the assessment, you need to attain an accuracy greater than 90%.

NOTE: We are using our own accuracy score function in order to help grade the assessment,

though it will behave here exactly like its scikit-learn couterpart accuracy_score.

xgb_acc = xgb_accuracy_score(true_labels, pred_labels)

print ('XGBoost Accuracy Score :', xgb_acc)

XGBoost Accuracy Score : 0.9978170271879341

Assessment Task 4: Implement a Confusion Matrix

Show that you can determine the performance of your model by implementing a confusion matrix.

cm = confusion_matrix(true_labels, pred_labels)

def plot_confusion_matrix(cm, title='Confusion matrix', cmap=plt.cm.Greens):

plt.figure(figsize=(10,10),)

plt.imshow(cm, interpolation='nearest', cmap=cmap)

plt.title(title)

plt.colorbar()

plt.tight_layout()

width, height = cm.shape

for x in range(width):

for y in range(height):

plt.annotate(str(cm[x][y]), xy=(y, x),

horizontalalignment='center',

verticalalignment='center')

plt.ylabel('True label')

plt.xlabel('Predicted label')

plot_confusion_matrix(cm)

Autoencoder Model

As the second major part of this assessment, you get to train your own autoencoder neural network to understand inherant clusters in your data. Build an autoencoder treating this as a brinary classification problem. Feel free to open the file viewer on the left of the JupyterLab environment to view the notebooks from previous sections if you need a reference to guide your work.

alt text

Assessment Task 5: Set the Hyperparameters

40

input_dim = x_train.shape[1]

Model hyperparameters

batch_size = 64

Latent dimension: higher values add network capacity

while lower values increase efficiency of the encoding

latent_dim = 10

Number of epochs: should be high enough for the network to learn from the data,

but not so high as to overfit the training data or diverge

max_epochs = 40

learning_rate = 0.001

Assessment Task 6: Build the Encoder Segment

Fix the dimensions of the input (number of features in the dataset) in the input layer.

Define the hidden layers of the encoder. We recommended using at least 3-4 layers.

Consider adding dropout layers to the encoder to help avoid overfitting.

Experiment with different activation functions (relu, tanh, sigmoid etc.).

Feel free to open the file viewer on the left of the JupyterLab environment to view the notebooks from previous sections if you need a reference to guide your work.

The encoder will consist of a number of dense layers that decrease in size

as we taper down towards the bottleneck of the network: the latent space.

input_data = Input(shape=(input_dim,), name='encoder_input')

Hidden layers

encoder = Dense(units= 64, activation= 'relu', name= 'encoder_1')(input_data) # #### FIX ME #### -> 64, 'encoder_1'

encoder = Dense(units= 32, activation='relu', name='encoder_2')(encoder) #추가

encoder = Dropout(0.1)(encoder) #추가

Make your Encoder Deeper

Bottleneck layer

latent_encoding = Dense(latent_dim, activation='linear', name='latent_encoding')(encoder) # #### FIX ME #### -> latent_dim

We instantiate the encoder model, look at a summary of it's layers, and visualize it.

We instantiate the encoder model, look at a summary of it's layers, and visualize it.

encoder_model = Model(input_data, latent_encoding)

encoder_model.summary()

Model: "model_2"

Layer (type) Output Shape Param #

encoder_input (InputLayer) [(None, 118)] 0

encoder_1 (Dense) (None, 64) 7616

encoder_2 (Dense) (None, 32) 2080

dropout_1 (Dropout) (None, 32) 0

latent_encoding (Dense) (None, 10) 330

=================================================================

Total params: 10,026

Trainable params: 10,026

Non-trainable params: 0

Assessment Task 7: Build Decoder Segment

Fix the dimensions of the input to the decoder.

Grow the network from the latent layer to the output layer of size equal to the input layer.

Experiment with different activation functions (tanh, relu, sigmoid etc.).

The output is the same dimension as the input data we are reconstructing.

reconstructed_data = Dense(units = #### FIX ME ####, activation='linear', name='reconstructed_data')(decoder)

The decoder network is a mirror image of the encoder network.

decoder = Dense(units = 32, activation= 'relu', name='decoder_1')(latent_encoding) # #### FIX ME #### -> 32, 'relu'

decoder = Dense(units = 64, activation= 'relu', name='decoder_2')(decoder) #추가

The output is the same dimension as the input data we are reconstructing.

reconstructed_data = Dense(units = input_dim, activation='linear', name='reconstructed_data')(decoder) # #### FIX ME #### -> input_dim

We instantiate the encoder model, look at a summary of it's layers, and visualize it.

We instantiate the encoder model, look at a summary of its layers, and visualize it.

autoencoder_model = Model(input_data, reconstructed_data)

autoencoder_model.summary()

Model: "model_3"

Layer (type) Output Shape Param #

encoder_input (InputLayer) [(None, 118)] 0

encoder_1 (Dense) (None, 64) 7616

encoder_2 (Dense) (None, 32) 2080

dropout_1 (Dropout) (None, 32) 0

latent_encoding (Dense) (None, 10) 330

decoder_1 (Dense) (None, 32) 352

decoder_2 (Dense) (None, 64) 2112

reconstructed_data (Dense) (None, 118) 7670

=================================================================

Total params: 20,160

Trainable params: 20,160

Non-trainable params: 0

Assessment Task 8: Initiate Training of the Model

Fix the learning rate Hint: Think in the order of 10e-4.

Choose an appropriate error metric for the loss function (mse, rmse, mae etc.).

Think about whether you want to shuffle your dataset during training.

Initiate training of the autoencoder on the given dataset.

mae

opt = optimizers.Adam(learning_rate= learning_rate) # #### FIX ME #### -> learning_rate

autoencoder_model.compile(optimizer=opt, loss='mae') # #### FIX ME #### -> 'mse'

train_history = autoencoder_model.fit(#### FIX ME ####, #### FIX ME #####,

shuffle= #### FIX ME #### ,

epochs=max_epochs,

batch_size=batch_size,

validation_data=(x_test, x_test))

train_history = autoencoder_model.fit(x_train, x_train, # #### FIX ME #### -> x_train, x_train

shuffle= True , # #### FIX ME #### -> True

epochs=max_epochs,

batch_size=batch_size,

validation_data=(x_test, x_test))

Epoch 1/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0656 - val_loss: 0.0626

Epoch 2/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0605 - val_loss: 0.0590

Epoch 3/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0571 - val_loss: 0.0558

Epoch 4/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0545 - val_loss: 0.0542

Epoch 5/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0536 - val_loss: 0.0538

Epoch 6/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 7/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 8/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 9/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 10/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 11/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 12/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0534 - val_loss: 0.0538

Epoch 13/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 14/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 15/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 16/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 17/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 18/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 19/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0534 - val_loss: 0.0538

Epoch 20/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 21/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 22/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 23/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 24/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 25/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 26/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 27/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 28/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 29/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 30/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 31/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 32/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 33/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 34/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 35/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 36/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 37/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 38/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 39/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

Epoch 40/40

315/315 [==============================] - 1s 2ms/step - loss: 0.0535 - val_loss: 0.0538

plt.plot(train_history.history['loss'])

plt.plot(train_history.history['val_loss'])

plt.legend(['loss on train data', 'loss on validation data'])

<matplotlib.legend.Legend at 0x7fbb64276d90>

Assessment Task 9: Computing Reconstruction Errors

Fit the trained model on the test dataset.

Compute the reconstruction scores using MSE as the error metric.

x_test_recon = autoencoder_model.predict(#### FIX ME ####)

Reconstruct the data using our trained autoencoder model

x_test_recon = autoencoder_model.predict(x_test) # #### FIX ME #### -> x_test

The reconstruction score is the mean of the reconstruction errors (relatively high scores are anomalous)

reconstruction_scores = np.mean((x_test - x_test_recon)**2, axis=1)

158/158 [==============================] - 0s 815us/step

Store the reconstruction data in a Pandas dataframe

anomaly_data = pd.DataFrame({'recon_score':reconstruction_scores})

def convert_label_to_binary(labels):

my_labels = labels.copy()

my_labels[my_labels != 11] = 1

my_labels[my_labels == 11] = 0

return my_labels

Convert our labels to binary

binary_labels = convert_label_to_binary(y_test)

Add the binary labels to our anomaly dataframe

anomaly_data['binary_labels'] = binary_labels

Let's check if the reconstruction statistics are different for labeled anomalies

anomaly_data.groupby(by='binary_labels').describe()

recon_score

count mean std min 25% 50% 75% max

binary_labels

0 2674.0 0.030670 0.010958 0.009629 0.023971 0.029020 0.033133 0.091640

1 2365.0 0.065691 0.011892 0.010581 0.065526 0.069704 0.071132 0.094558

Assessment Task 10: Anomaly Detection

Plot the area under the curve

Set the optimal threshold that separates normal packets from anomalous packets.

Threshold should be calculated as the difference between the true positive rate and false positive rate.

fpr, tpr, thresholds = roc_curve(binary_labels, reconstruction_scores)

roc_auc = auc(fpr, tpr)

plt.figure(figsize=(10,10))

plt.plot(fpr, tpr, lw=1, label='ROC curve (area = %0.2f)' % roc_auc)

plt.plot([0, 1], [0, 1], color='lime', linestyle='--')

plt.xlim([0.0, 1.0])

plt.ylim([0.0, 1.05])

plt.xlabel('False Positive Rate')

plt.ylabel('True Positive Rate')

plt.title('Receiver operating characteristic')

plt.legend(loc="lower right")

plt.show()

We can pick the threshold based on the differeence between the true positive rate (tpr)

and the false positive rate (fpr)

optimal_threshold_idx = np.argmax(tpr - fpr) # #### FIX ME #### -> tpr - fpr

optimal_threshold = thresholds[optimal_threshold_idx]

print(optimal_threshold)

0.042937886

print ('Autoencoder Accuracy Score :', accuracy_score(ae_acc))

Use the optimal threshold value you just printed in the previous cell.

thresh = optimal_threshold # #### FIX ME #### -> optimal_threshold

print(thresh)

pred_labels = (reconstruction_scores > thresh).astype(int)

results = confusion_matrix(binary_labels, pred_labels)

We are using our own accuracy score function in order to grade the assessment

ae_acc = autoencoder_accuracy_score(binary_labels, pred_labels)

print ('Autoencoder Accuracy Score :', ae_acc)

0.042937886

Autoencoder Accuracy Score : 0.927168088906529

In order to pass the assessment, you need to an accuracy of at least 90%.

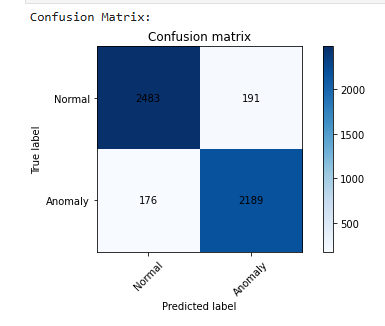

Confusion Matrix

This time, we'll create the confusion matrix for you.

print ('Confusion Matrix: ')

def plot_confusion_matrix(cm, target_names, title='Confusion matrix', cmap=plt.cm.Blues):

plt.imshow(cm, interpolation='nearest', cmap=cmap)

plt.title(title)

plt.colorbar()

tick_marks = np.arange(len(target_names))

plt.xticks(tick_marks, target_names, rotation=45)

plt.yticks(tick_marks, target_names)

plt.tight_layout()

width, height = cm.shape

for x in range(width):

for y in range(height):

plt.annotate(str(cm[x][y]), xy=(y, x),

horizontalalignment='center',

verticalalignment='center')

plt.ylabel('True label')

plt.xlabel('Predicted label')

plot_confusion_matrix(results, ['Normal','Anomaly'])

Confusion Matrix:

Assessment Task 11: Check Your Assessment Score

Before proceeding, confirm your XGBoost model accuracy is greater than 95% and that your autoencoder accuracy is greater than 90%. If it isn't please continue work on the notebook until you've met these benchmarks.

print ("Accuracy of the XGBoost Model: ", xgb_acc)

print ("Accuracy of the Autoencoder Model: ", ae_acc)

Accuracy of the XGBoost Model: 0.9978170271879341

Accuracy of the Autoencoder Model: 0.927168088906529

Run the following cell to grade your assessment.

from assessment import run_assessment

run_assessment()

Testing your XGBoost solution

Required accuracy greater than 95%....

Your XGBoost model is accurate enough!

Testing your autoencoder solution

Required accuracy greater than 90%....

Your autoencoder model is accurate enough!

You passed the assessment. Congratulations!!!!!

See instructions below for how to get credit for your work.

!nvidia-smi

!nvidia-smi

Mon Feb 10 02:38:43 2025

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 470.82.01 Driver Version: 470.82.01 CUDA Version: 11.7 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA A10G On | 00000000:00:1E.0 Off | 0 |

| 0% 29C P0 58W / 300W | 21291MiB / 22731MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

+-----------------------------------------------------------------------------+

If the cell above tells you that you passed the assessment, read below for instructions on how to get credit for your work.

Get Credit for Your Work

To get credit for your assessment and generate a certificate of competency for the course, return to the browser tab where you opened this JupyterLab environment and click the "ASSESS TASK" button, as shown below:

맞습니다. 이상치 탐지(Anomaly Detection) 작업 자체만 놓고 보면, 복잡한 딥러닝 모델보다 단순한 머신러닝 모델이 더 효과적일 수 있는 경우가 많습니다. 그 이유는 다음과 같습니다:

-

데이터 불균형 (Data Imbalance): 이상치 탐지 문제에서는 정상 데이터가 대부분이고 이상치 데이터는 매우 적은 경우가 일반적입니다. 딥러닝 모델은 대량의 데이터가 필요하고, 데이터 불균형에 취약할 수 있습니다. 반면, 일부 머신러닝 알고리즘(예: One-Class SVM, Isolation Forest)은 이러한 불균형 문제에 더 강건합니다.

-

과적합 (Overfitting): 딥러닝 모델은 복잡도가 높아, 훈련 데이터에 과적합되기 쉽습니다. 특히 이상치 데이터가 적은 경우, 과적합 위험이 더 커집니다. 단순한 머신러닝 모델은 일반화 성능이 더 좋을 수 있습니다.

-

해석 가능성 (Interpretability): 단순한 모델은 결과를 해석하기 쉽습니다. 어떤 특징이 이상치 탐지에 중요한 역할을 하는지 파악하기 용이합니다. 이는 시스템 운영자가 이상 징후의 원인을 이해하고 대응하는 데 도움이 됩니다.

-

계산 비용 (Computational Cost): 딥러닝 모델은 학습과 추론에 많은 계산 자원(GPU, 메모리)을 필요로 합니다. 단순한 머신러닝 모델은 더 적은 자원으로도 빠르게 학습하고 예측할 수 있습니다.

-

실시간 처리 (Real-time Processing): 실시간 이상 탐지 시스템에서는 빠른 응답 속도가 중요합니다. 단순한 모델은 딥러닝 모델보다 추론 속도가 빠르므로 실시간 처리에 유리합니다.

단순한 머신러닝 모델을 사용한 이상치 탐지 예시:

-

One-Class SVM (Support Vector Machine):

- 정상 데이터만 사용하여 모델을 학습합니다.

- 정상 데이터의 분포를 나타내는 초평면(hyperplane)을 찾고, 이 초평면에서 멀리 떨어진 데이터를 이상치로 간주합니다.

from sklearn.svm import OneClassSVM # 정상 데이터만 사용하여 학습 x_train_normal = x_train[y_train == 11] # One-Class SVM 모델 model = OneClassSVM(nu=0.1, kernel="rbf", gamma=0.1) # nu: 이상치 비율, kernel, gamma 조정 model.fit(x_train_normal) # 테스트 데이터에 대한 예측 (1: 정상, -1: 이상치) pred = model.predict(x_test) # 이진 레이블로 변환 (0: 정상, 1: 이상치) pred_binary = (pred == -1).astype(int) # 성능 평가 (confusion matrix, accuracy 등) # ... -

Isolation Forest:

- 랜덤 포레스트(Random Forest)와 유사한 방식으로 작동합니다.

- 데이터를 무작위로 분할하여 트리를 구성하고, 이상치는 적은 횟수의 분할로도 고립(isolation)될 가능성이 높다는 점을 이용합니다.

from sklearn.ensemble import IsolationForest # Isolation Forest 모델 model = IsolationForest(n_estimators=100, contamination='auto', random_state=42) # n_estimators, contamination 조정 model.fit(x_train) # 전체 training data 사용 # 테스트 데이터에 대한 예측 (1: 정상, -1: 이상치) pred = model.predict(x_test) # 이진 레이블로 변환 (0: 정상, 1: 이상치) pred_binary = (pred == -1).astype(int) -

Local Outlier Factor (LOF):

- 데이터 포인트 주변의 밀도를 비교하여 이상치를 탐지합니다.

- 밀도가 낮은 영역에 있는 데이터 포인트를 이상치로 간주합니다.

from sklearn.neighbors import LocalOutlierFactor # LOF 모델 model = LocalOutlierFactor(n_neighbors=20, contamination='auto') # n_neighbors, contamination 조정 # 테스트 데이터에 대한 예측 (1: 정상, -1: 이상치) pred = model.fit_predict(x_test) # fit_predict 사용 # 이진 레이블로 변환 (0: 정상, 1: 이상치) pred_binary = (pred == -1).astype(int) -

(Robust) Mahalanobis Distance: Outlier가 아닌 데이터의 분포를 multivariate Gaussian이라고 가정하고, 이 분포의 중심(centroid)에서 멀리 떨어진 데이터를 outlier라고 판단합니다.

from sklearn.covariance import EmpiricalCovariance, MinCovDet robust_cov = MinCovDet().fit(x_train) # Minimum Covariance Determinant # Mahalanobis distances of the training set (on which the fit is based) # dist = robust_cov.mahalanobis(x_train) # Mahalanobis distances of the test set dist_test = robust_cov.mahalanobis(x_test) # threshold 설정: outlier 비율에 따라 quantile을 정해줌. outlier_ratio = 0.05 # 5% threshold = np.quantile(dist_test, 1.0 - outlier_ratio) pred_binary = (dist_test > threshold).astype(int) # threshold를 넘으면 anomaly(1), 아니면 정상(0)

결론:

단순한 머신러닝 모델은 이상치 탐지 문제에서 딥러닝 모델보다 더 나은 성능, 빠른 속도, 높은 해석 가능성을 제공할 수 있습니다. 어떤 모델이 가장 적합한지는 데이터의 특성과 문제의 요구 사항에 따라 달라집니다. 따라서 다양한 모델을 실험해보고, 성능을 비교하여 최적의 모델을 선택하는 것이 중요합니다.

만약 딥러닝 모델(Autoencoder, LSTM, Transformer, GAN 등)을 사용하는 것이 반드시 필요한 상황이 아니라면, 위에서 제시한 단순한 머신러닝 모델들을 먼저 시도해 보는 것을 추천합니다.

좋은 아이디어입니다! A10G GPU는 충분한 성능을 가지고 있으므로, 언어 모델이나 트랜스포머 기반의 흥미로운 프로젝트를 진행할 수 있습니다.

현재 사용 중인 데이터는 NSL-KDD 데이터셋으로, 네트워크 침입 탐지(Network Intrusion Detection)를 위한 데이터입니다. 각 레코드는 네트워크 연결에 대한 정보를 담고 있으며, 다양한 특징(feature)들로 구성됩니다.

- duration: 연결 지속 시간

- protocol_type: 프로토콜 유형 (TCP, UDP, ICMP)

- service: 서비스 유형 (http, ftp, smtp 등)

- flag: 연결 상태 플래그

- src_bytes: 소스에서 대상으로 전송된 바이트 수

- dst_bytes: 대상에서 소스로 전송된 바이트 수

- ... (그 외 다양한 특징들)

- label: 정상/비정상 여부 및 공격 유형

이 데이터를 가지고, 좀 더 흥미로운 프로젝트를 진행하기 위해 몇 가지 제안을 드립니다. 기존에 사용하던 라이브러리 (TensorFlow, Keras, XGBoost, scikit-learn) 와 NSL-KDD 데이터를 활용하거나, 또는 필요한 경우 다른 데이터를 추가/결합할 수도 있습니다.

프로젝트 아이디어:

-

Transformer 기반 침입 탐지 시스템:

- NSL-KDD 데이터의 시퀀스적 특성을 활용합니다.

- 각 연결의 특징들을 시간 순서대로 입력하여 Transformer 모델을 학습시킵니다.

- 실시간 네트워크 트래픽을 모니터링하고, 이상 징후를 탐지하는 시스템을 구축할 수 있습니다.

- Attention 메커니즘을 시각화하여 어떤 특징이 이상 징후 탐지에 중요한 역할을 하는지 분석할 수 있습니다.

# TensorFlow와 Keras를 사용하여 Transformer 모델을 구현 import tensorflow as tf from tensorflow.keras.layers import Input, Embedding, MultiHeadAttention, Dense, GlobalAveragePooling1D, LayerNormalization, Dropout from tensorflow.keras.models import Model # 파라미터 num_features = x_train.shape[1] # 입력 feature 개수 d_model = 64 # 임베딩 차원 num_heads = 4 # Multi-head attention 헤드 개수 ff_dim = 128 # Feedforward 네트워크 차원 seq_length = 10 # sequence 길이. 하나의 "문장" 처럼 취급할 feature 개수. 조절 가능. num_transformer_blocks = 2 # Transformer block 개수. # 입력 데이터 reshape (samples, seq_length, features) # 예: 10개 feature를 묶어서 하나의 timestep으로 간주. def reshape_for_transformer(data, seq_length): num_samples = data.shape[0] num_features = data.shape[1] # padding if num_features % seq_length != 0: pad_len = seq_length - (num_features % seq_length) padding = np.zeros((num_samples, pad_len)) data = np.concatenate([data, padding], axis=1) num_features += pad_len return data.reshape((num_samples, seq_length, num_features // seq_length))

x_train_reshaped = reshape_for_transformer(x_train, seq_length)

x_test_reshaped = reshape_for_transformer(x_test, seq_length)

# Transformer Block

def transformer_block(inputs, d_model, num_heads, ff_dim, rate=0.1):

attn_output = MultiHeadAttention(num_heads=num_heads, key_dim=d_model)(inputs, inputs)

attn_output = Dropout(rate)(attn_output)

out1 = LayerNormalization(epsilon=1e-6)(inputs + attn_output)

ffn_output = Dense(ff_dim, activation="relu")(out1)

ffn_output = Dense(inputs.shape[-1])(ffn_output) # inputs.shape[-1] : feature dimension

ffn_output = Dropout(rate)(ffn_output)

return LayerNormalization(epsilon=1e-6)(out1 + ffn_output)

# 모델 구성

inputs = Input(shape=(seq_length, x_train_reshaped.shape[2])) # (seq_length, features)

x = inputs

for _ in range(num_transformer_blocks):

x = transformer_block(x, d_model, num_heads, ff_dim)

x = GlobalAveragePooling1D()(x) # 또는 Flatten()

x = Dropout(0.1)(x)

x = Dense(20, activation="relu")(x) # Fully-connected layer

x = Dropout(0.1)(x)

# binary classification

outputs = Dense(1, activation="sigmoid")(x) # 이진 분류 (정상/비정상)

#outputs = Dense(num_labels, activation="softmax")(x) # 다중 클래스 분류

model = Model(inputs=inputs, outputs=outputs)

# Compile

model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"]) # 이진 분류

# model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"]) # 다중 클래스

# 학습 (y_train, y_test는 0, 1로 변환된 binary label 이어야 함)

y_train_binary = (y_train != 11).astype(int) # 11: normal, others: attack -> 0: attack, 1: normal

y_test_binary = (y_test != 11).astype(int)

model.fit(x_train_reshaped, y_train_binary, batch_size=32, epochs=10, validation_data=(x_test_reshaped, y_test_binary))

```-

LSTM 기반 침입 탐지:

- Transformer 대신 LSTM (Long Short-Term Memory) 네트워크를 사용하여 시퀀스 데이터를 처리합니다.

- LSTM은 장기 의존성을 포착하는 데 효과적입니다.

from tensorflow.keras.layers import LSTM # LSTM 모델 (위 Transformer 코드와 유사한 구조) inputs = Input(shape=(seq_length, x_train_reshaped.shape[2])) x = LSTM(64, return_sequences=True)(inputs) # return_sequences=True: 모든 타임 스텝의 출력을 반환 x = LSTM(32)(x) # 마지막 타임 스텝의 출력만 사용 x = Dropout(0.1)(x) x = Dense(20, activation="relu")(x) x = Dropout(0.1)(x) outputs = Dense(1, activation="sigmoid")(x) # 이진 분류 model = Model(inputs=inputs, outputs=outputs) model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"]) model.fit(x_train_reshaped, y_train_binary, batch_size=32, epochs=10, validation_data=(x_test_reshaped, y_test_binary)) -

GAN (Generative Adversarial Network) 기반 이상 탐지:

- GAN을 사용하여 정상 트래픽 패턴을 학습하고, 생성된 데이터와 실제 데이터의 차이를 통해 이상 징후를 탐지합니다.

- Generator는 정상 트래픽과 유사한 데이터를 생성하고, Discriminator는 실제 데이터와 생성된 데이터를 구별합니다.

- Discriminator가 실제 데이터를 가짜 데이터로 잘못 분류하는 경우, 해당 데이터는 이상 징후로 간주될 수 있습니다.

import tensorflow as tf from tensorflow.keras.layers import Input, Dense, LeakyReLU, Dropout, Reshape, Flatten from tensorflow.keras.models import Model from tensorflow.keras.optimizers import Adam # Generator def build_generator(latent_dim, output_dim): model = tf.keras.Sequential() model.add(Dense(128, input_dim=latent_dim)) model.add(LeakyReLU(alpha=0.2)) model.add(Dense(256)) model.add(LeakyReLU(alpha=0.2)) model.add(Dense(512)) model.add(LeakyReLU(alpha=0.2)) model.add(Dense(output_dim, activation='tanh')) # 출력 범위를 [-1, 1]로 조정 (MinMaxScaler 대신) return model # Discriminator def build_discriminator(input_dim): model = tf.keras.Sequential() model.add(Dense(512, input_dim=input_dim)) model.add(LeakyReLU(alpha=0.2)) model.add(Dropout(0.3)) model.add(Dense(256)) model.add(LeakyReLU(alpha=0.2)) model.add(Dropout(0.3)) model.add(Dense(128)) model.add(LeakyReLU(alpha=0.2)) model.add(Dropout(0.3)) model.add(Dense(1, activation='sigmoid')) # 진짜/가짜 판별 (0~1) return model

# GAN 모델

def build_gan(generator, discriminator):

discriminator.trainable = False # Generator 학습 시 Discriminator는 학습되지 않도록 설정

model = tf.keras.Sequential()

model.add(generator)

model.add(discriminator)

return model

# 파라미터

latent_dim = 100 # 잠재 공간 차원

input_dim = x_train.shape[1]

# Optimizer

optimizer = Adam(0.0002, 0.5)

# Discriminator

discriminator = build_discriminator(input_dim)

discriminator.compile(loss='binary_crossentropy', optimizer=optimizer, metrics=['accuracy'])

# Generator

generator = build_generator(latent_dim, input_dim)

# GAN

gan = build_gan(generator, discriminator)

gan.compile(loss='binary_crossentropy', optimizer=optimizer)

# 학습 (정상 데이터만 사용)

x_train_normal = x_train[y_train == 11] # 정상 데이터만 추출 (label이 11인 경우)

epochs = 50

batch_size = 64

half_batch = batch_size // 2

for epoch in range(epochs):

# ---------------------

# Discriminator 학습

# ---------------------

# 정상 데이터 배치

idx = np.random.randint(0, x_train_normal.shape[0], half_batch)

real_data = x_train_normal[idx]

# 가짜 데이터 배치 (노이즈로부터 생성)

noise = np.random.normal(0, 1, (half_batch, latent_dim))

fake_data = generator.predict(noise)

# Discriminator 학습 (진짜/가짜 데이터에 대한 레이블은 각각 1, 0)

d_loss_real = discriminator.train_on_batch(real_data, np.ones((half_batch, 1)))

d_loss_fake = discriminator.train_on_batch(fake_data, np.zeros((half_batch, 1)))

d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

# ---------------------

# Generator 학습

# ---------------------

noise = np.random.normal(0, 1, (batch_size, latent_dim))

valid_y = np.array([1] * batch_size) # Generator는 모두 진짜(1)로 속이도록 학습

# Generator 학습 (Discriminator를 속이도록)

g_loss = gan.train_on_batch(noise, valid_y)

# 진행 상황 출력

print ("%d [D loss: %f, acc.: %.2f%%] [G loss: %f]" % (epoch, d_loss[0], 100*d_loss[1], g_loss))

# 이상 징후 탐지: Discriminator를 사용하여 테스트 데이터의 reconstruction error를 계산.

# 정상 데이터에 대한 Discriminator 출력 (예측 확률)

pred_normal = discriminator.predict(x_train_normal)

# 비정상 데이터 (테스트 데이터)에 대한 Discriminator 출력

pred_anomalous = discriminator.predict(x_test)

# reconstruction error 계산 (예: 1 - 예측 확률)

recon_error_normal = 1 - pred_normal

recon_error_anomalous = 1 - pred_anomalous

# 시각화 및 임계값 설정 ... (Autoencoder 예제와 유사하게)

```

GAN은 학습이 불안정할 수 있으므로, 하이퍼파라미터 튜닝 및 학습 과정 모니터링이 중요합니다.-

시계열 예측 (Time Series Forecasting):

- NSL-KDD 데이터를 시계열 데이터로 간주하고, 과거 트래픽 패턴을 기반으로 미래 트래픽을 예측합니다.

- 예측 값과 실제 값의 차이를 통해 이상 징후를 탐지할 수 있습니다.

- LSTM, GRU, Transformer 등을 사용하여 시계열 예측 모델을 구축할 수 있습니다.

-

Adversarial Examples를 이용한 공격 및 방어

- FGSM(Fast Gradient Sign Method)와 같은 기법을 써서, 기존의 침입탐지 모델(classifier)을 속이는 Adversarial Examples을 생성해 봅니다.

- Adversarial Training을 통해, 이러한 공격에 강건한(robust) 모델을 만들어 봅니다.

이러한 아이디어들을 바탕으로, 흥미로운 프로젝트를 진행할 수 있습니다. 어떤 아이디어가 가장 마음에 드시는지, 또는 다른 아이디어가 있는지 알려주시면, 좀 더 구체적인 구현 방법과 필요한 라이브러리, 코드 예시 등을 제공해 드리겠습니다.

NSL-KDD 데이터를 기반으로 가상의 데이터를 생성하는 방법은 여러 가지가 있습니다. 그중에서도 GAN (Generative Adversarial Network)과 VAE (Variational Autoencoder)를 사용하는 방법을 설명하고, 전체 코드를 제공하겠습니다. 이 두 가지 방법은 모두 주어진 데이터의 분포를 학습하여, 그 분포와 유사한 새로운 데이터를 생성하는 데 사용됩니다.

주의: 가상 데이터를 생성하는 것은 원래 데이터의 분포를 모방하는 것이지, 실제와 똑같은 새로운 침입을 만들어내는 것이 아닙니다. 생성된 데이터는 모델 학습, 테스트, 성능 평가 등에 활용될 수 있지만, 실제 네트워크 환경에 대한 침입을 시도하는 데 사용되어서는 절대 안 됩니다.

1. GAN (Generative Adversarial Network)을 사용한 가상 데이터 생성

import numpy as np

import pandas as pd

import tensorflow as tf

from tensorflow.keras.layers import Input, Dense, LeakyReLU, Dropout, BatchNormalization

from tensorflow.keras.models import Model, Sequential

from tensorflow.keras.optimizers import Adam

from sklearn.preprocessing import MinMaxScaler

from sklearn.model_selection import train_test_split

# 데이터 로드 및 전처리 (Assessment 코드에서 가져옴)

col_names = ["duration","protocol_type","service","flag","src_bytes","dst_bytes","land","wrong_fragment","urgent","hot","num_failed_logins","logged_in",

"num_compromised","root_shell","su_attempted","num_root","num_file_creations","num_shells","num_access_files","num_outbound_cmds",

"is_host_login","is_guest_login","count","srv_count","serror_rate","srv_serror_rate","rerror_rate","srv_rerror_rate","same_srv_rate",

"diff_srv_rate","srv_diff_host_rate","dst_host_count","dst_host_srv_count","dst_host_same_srv_rate","dst_host_diff_srv_rate",

"dst_host_same_src_port_rate","dst_host_srv_diff_host_rate","dst_host_serror_rate","dst_host_srv_serror_rate","dst_host_rerror_rate",

"dst_host_srv_rerror_rate","label"]

df = pd.read_csv("data/KDDTrain+_20Percent.txt", header=None, names=col_names, index_col=False)

cat_vars = ['protocol_type', 'service', 'flag', 'land', 'logged_in','is_host_login', 'is_guest_login']

cat_data = pd.get_dummies(df[cat_vars])

numeric_vars = list(set(df.columns.values.tolist()) - set(cat_vars))

numeric_vars.remove('label')

numeric_data = df[numeric_vars].copy()

numeric_cat_data = pd.concat([numeric_data, cat_data], axis=1)

# 훈련 데이터만 사용 (label 사용 X)

x_train = numeric_cat_data.values

# 데이터 정규화

scaler = MinMaxScaler()

x_train = scaler.fit_transform(x_train)

x_train = x_train.astype(np.float32)

# Generator

def build_generator(latent_dim, output_dim):

model = Sequential()

model.add(Dense(128, input_dim=latent_dim))

model.add(LeakyReLU(alpha=0.2))

model.add(BatchNormalization(momentum=0.8))

model.add(Dense(256))

model.add(LeakyReLU(alpha=0.2))

model.add(BatchNormalization(momentum=0.8))

model.add(Dense(512))

model.add(LeakyReLU(alpha=0.2))

model.add(BatchNormalization(momentum=0.8))

model.add(Dense(output_dim, activation='sigmoid')) # 출력 범위를 [0, 1]로 (MinMaxScaler 사용했으므로)

return model

# Discriminator

def build_discriminator(input_dim):

model = Sequential()

model.add(Dense(512, input_dim=input_dim))

model.add(LeakyReLU(alpha=0.2))

model.add(Dropout(0.4))

model.add(Dense(256))

model.add(LeakyReLU(alpha=0.2))

model.add(Dropout(0.4))

model.add(Dense(128))

model.add(LeakyReLU(alpha=0.2))

model.add(Dropout(0.4))

model.add(Dense(1, activation='sigmoid')) # 진짜/가짜 판별 (0~1)

return model

# GAN 모델

def build_gan(generator, discriminator):

discriminator.trainable = False # Generator 학습 시 Discriminator는 학습되지 않도록

model = Sequential()

model.add(generator)

model.add(discriminator)

return model

# 하이퍼파라미터

latent_dim = 100 # 잠재 공간 차원

input_dim = x_train.shape[1]

batch_size = 64

epochs = 100 # 학습 횟수 (조절 필요)

# Optimizer

optimizer = Adam(0.0002, 0.5)

# Discriminator

discriminator = build_discriminator(input_dim)

discriminator.compile(loss='binary_crossentropy', optimizer=optimizer, metrics=['accuracy'])

# Generator

generator = build_generator(latent_dim, input_dim)

# GAN

gan = build_gan(generator, discriminator)

gan.compile(loss='binary_crossentropy', optimizer=optimizer)

# 학습

for epoch in range(epochs):

# ---------------------

# Discriminator 학습

# ---------------------

# 진짜 데이터 배치

idx = np.random.randint(0, x_train.shape[0], batch_size)

real_data = x_train[idx]

# 가짜 데이터 배치 (노이즈로부터 생성)

noise = np.random.normal(0, 1, (batch_size, latent_dim))

fake_data = generator.predict(noise)

# Discriminator 학습 (진짜/가짜 데이터에 대한 레이블은 각각 1, 0)

d_loss_real = discriminator.train_on_batch(real_data, np.ones((batch_size, 1)))

d_loss_fake = discriminator.train_on_batch(fake_data, np.zeros((batch_size, 1)))

d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

# ---------------------

# Generator 학습

# ---------------------

noise = np.random.normal(0, 1, (batch_size, latent_dim))

valid_y = np.array([1] * batch_size) # Generator는 모두 진짜(1)로 속이도록 학습

# Generator 학습 (Discriminator를 속이도록)

g_loss = gan.train_on_batch(noise, valid_y)

# 진행 상황 출력

print ("%d [D loss: %f, acc.: %.2f%%] [G loss: %f]" % (epoch, d_loss[0], 100*d_loss[1], g_loss))

# 가상 데이터 생성

num_samples = 1000 # 생성할 샘플 개수

noise = np.random.normal(0, 1, (num_samples, latent_dim))

generated_data = generator.predict(noise)

# Min-Max Scaling 역변환 (생성된 데이터는 0~1 사이 값)

generated_data = scaler.inverse_transform(generated_data)

# 생성된 데이터 확인 (처음 5개 샘플)

print(generated_data[:5])

# 필요한 경우, 생성된 데이터를 DataFrame으로 변환

generated_df = pd.DataFrame(generated_data, columns=numeric_cat_data.columns)

print(generated_df.head())2. VAE (Variational Autoencoder)를 사용한 가상 데이터 생성

import numpy as np

import pandas as pd

import tensorflow as tf

from tensorflow.keras.layers import Input, Dense, Lambda, LeakyReLU, BatchNormalization

from tensorflow.keras.models import Model

from tensorflow.keras.optimizers import Adam

from tensorflow.keras import backend as K

from sklearn.preprocessing import MinMaxScaler

from sklearn.model_selection import train_test_split

# 데이터 로드 및 전처리 (Assessment 코드에서 가져옴. GAN 코드와 동일)

col_names = ["duration","protocol_type","service","flag","src_bytes","dst_bytes","land","wrong_fragment","urgent","hot","num_failed_logins","logged_in",

"num_compromised","root_shell","su_attempted","num_root","num_file_creations","num_shells","num_access_files","num_outbound_cmds",

"is_host_login","is_guest_login","count","srv_count","serror_rate","srv_serror_rate","rerror_rate","srv_rerror_rate","same_srv_rate",

"diff_srv_rate","srv_diff_host_rate","dst_host_count","dst_host_srv_count","dst_host_same_srv_rate","dst_host_diff_srv_rate",

"dst_host_same_src_port_rate","dst_host_srv_diff_host_rate","dst_host_serror_rate","dst_host_srv_serror_rate","dst_host_rerror_rate",

"dst_host_srv_rerror_rate","label"]

df = pd.read_csv("data/KDDTrain+_20Percent.txt", header=None, names=col_names, index_col=False)

cat_vars = ['protocol_type', 'service', 'flag', 'land', 'logged_in','is_host_login', 'is_guest_login']

cat_data = pd.get_dummies(df[cat_vars])

numeric_vars = list(set(df.columns.values.tolist()) - set(cat_vars))

numeric_vars.remove('label')

numeric_data = df[numeric_vars].copy()

numeric_cat_data = pd.concat([numeric_data, cat_data], axis=1)

# 훈련 데이터만 사용 (label 사용 X)

x_train = numeric_cat_data.values

# 데이터 정규화

scaler = MinMaxScaler()

x_train = scaler.fit_transform(x_train)

x_train = x_train.astype(np.float32)

# VAE 모델 구성

intermediate_dim = 256

latent_dim = 2 # 잠재 공간 차원 (2차원으로 시각화하기 좋음)

input_dim = x_train.shape[1]

batch_size = 128

epochs = 50

# Encoder

inputs = Input(shape=(input_dim,), name='encoder_input')

x = Dense(intermediate_dim, activation='relu')(inputs)

x = BatchNormalization()(x)

z_mean = Dense(latent_dim, name='z_mean')(x)

z_log_var = Dense(latent_dim, name='z_log_var')(x)

# Sampling function

def sampling(args):

z_mean, z_log_var = args

epsilon = K.random_normal(shape=(K.shape(z_mean)[0], latent_dim), mean=0., stddev=1.)

return z_mean + K.exp(z_log_var / 2) * epsilon

# Latent space sampling

z = Lambda(sampling, output_shape=(latent_dim,), name='z')([z_mean, z_log_var])

# Decoder

decoder_hid = Dense(intermediate_dim, activation='relu')

decoder_out = Dense(input_dim, activation='sigmoid') # 출력 범위를 [0, 1]로 (MinMaxScaler)

hid_decoded = decoder_hid(z)

x_decoded_mean = decoder_out(hid_decoded)

# VAE 모델

vae = Model(inputs, x_decoded_mean)

# Decoder 모델 (생성용)

decoder_input = Input(shape=(latent_dim,))

_hid_decoded = decoder_hid(decoder_input)

_x_decoded_mean = decoder_out(_hid_decoded)

decoder = Model(decoder_input, _x_decoded_mean)

# Encoder 모델 (latent vector 추출용)

encoder = Model(inputs, z_mean)

# VAE Loss

def vae_loss(x, x_decoded_mean):

xent_loss = K.sum(K.binary_crossentropy(x, x_decoded_mean), axis=-1) # Reconstruction loss

kl_loss = -0.5 * K.sum(1 + z_log_var - K.square(z_mean) - K.exp(z_log_var), axis=-1) # KL divergence

return K.mean(xent_loss + kl_loss)

# Compile

vae.compile(optimizer=Adam(learning_rate=0.001), loss=vae_loss)

# 학습

vae.fit(x_train, x_train,

shuffle=True,

epochs=epochs,

batch_size=batch_size)

# 가상 데이터 생성

num_samples = 1000

noise = np.random.normal(0, 1, (num_samples, latent_dim)) # Standard normal distribution

generated_data = decoder.predict(noise)

# Min-Max Scaling 역변환

generated_data = scaler.inverse_transform(generated_data)

# 생성된 데이터 확인 (처음 5개)

print(generated_data[:5])

# DataFrame으로 변환 (선택 사항)

generated_df = pd.DataFrame(generated_data, columns=numeric_cat_data.columns)

print(generated_df.head())핵심 차이점 (GAN vs VAE):

- GAN:

- Generator와 Discriminator라는 두 개의 네트워크가 경쟁적으로 학습합니다.

- Generator는 가짜 데이터를 생성하고, Discriminator는 진짜와 가짜를 구별합니다.

- 학습이 불안정할 수 있지만, 선명하고 현실적인 데이터를 생성하는 경향이 있습니다.

- VAE:

- Encoder와 Decoder로 구성된 하나의 네트워크입니다.

- Encoder는 입력을 잠재 공간(latent space)으로 압축하고, Decoder는 잠재 공간에서 다시 원래 데이터로 복원합니다.

- 잠재 공간에 제약(regularization)을 가하여, 데이터의 분포를 학습하고 새로운 데이터를 생성합니다.

- GAN보다 학습이 안정적이지만, 생성된 데이터가 흐릿(blurry)할 수 있습니다.

- 잠재 공간을 시각화하거나, 잠재 공간을 조작하여 데이터의 특성을 제어할 수 있습니다.

어떤 방법을 선택할지는 생성하려는 데이터의 특성과 목적에 따라 결정해야 합니다.