Clustering analysis : Key concepts and Implementation in Python

Clustering : Introduction

What is clustering?

To partition the observations into groups() so that the pairwise dissimilarities between those assigned to the same cluster tend to be smaller than those in differenct clusters

Types of clustering algorithm

| Type | Description | Example |

|---|---|---|

| Combinatorial algorithms | Work directly on the observed data with no direct reference to an underlyning probability model | |

| Iterative descent clustering | K-means clustering | |

| Mixture modeling | Supposes that the data is an i.i.d sample from some population described by a probability distribution function | Mixture model |

| Mode seekers | Take a nonparametric perspective, attempting to directly estimate distinct mode of the probability distribution function | Patient Rule Induction Method(PRIM), Generalized Association Rules |

| Hierachical clustering |

Iterative greedy descent clustering

- An initial partition is specified

- At each iterative step, the cluster assignment are changed in such a way that the value of the criterion is improved from its previous value

- When the prescription is unable to provide an improvement, the algorithm terminates with the current assignmnet as its solution

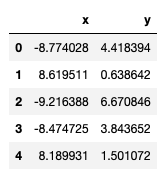

Example data generation

- Make a toy data using

make_blobsinsklearn!

from sklearn.datasets import make_blobs

x, y = make_blobs(n_samples=100, centers=3, n_features=2, random_state=7)

points = pd.DataFrame(x, y).reset_index(drop=True)

points.columns = ["x", "y"]

points.head()

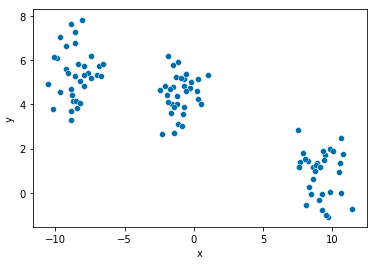

import seaborn as sns

sns.scatterplot(x="x", y="y", data=points, palette="Set2");

1. K-means

- The K-means algorithms is one of the most popular iterative descent clustering methods

Algorithm

| Given | Change | |

|---|---|---|

| Step1 | Cluster assignment | Change the means of cluster |

| Step2 | A current set of means | Assigning of each observations |

- For a given cluster assignment, the total variance is minimized with respect to yielding the means of the currently assigned cluster

- Given a current set of means , the total variance is minimized by assigning each observation to the closest (current) cluster mean

- Step 1 and 2 are iterated until the assignmnets do not change

Notice on intial centroids

- One should start the algorithm with many different random choices for the starting means, abd choose the solution having smallest value of the objective fucntion

2. Gaussian mixture

EM algorithm

| Step | Given | Update |

|---|---|---|

| E step | Mixture component parameters | Responsibilities |

| M step | Responsibilities | Mixture component parameters |

- E step

- Assigns responsibilities for each data point based in its relative density under each mixture component

- M step

- Recomputes the component density parameters based on the current responsibilities

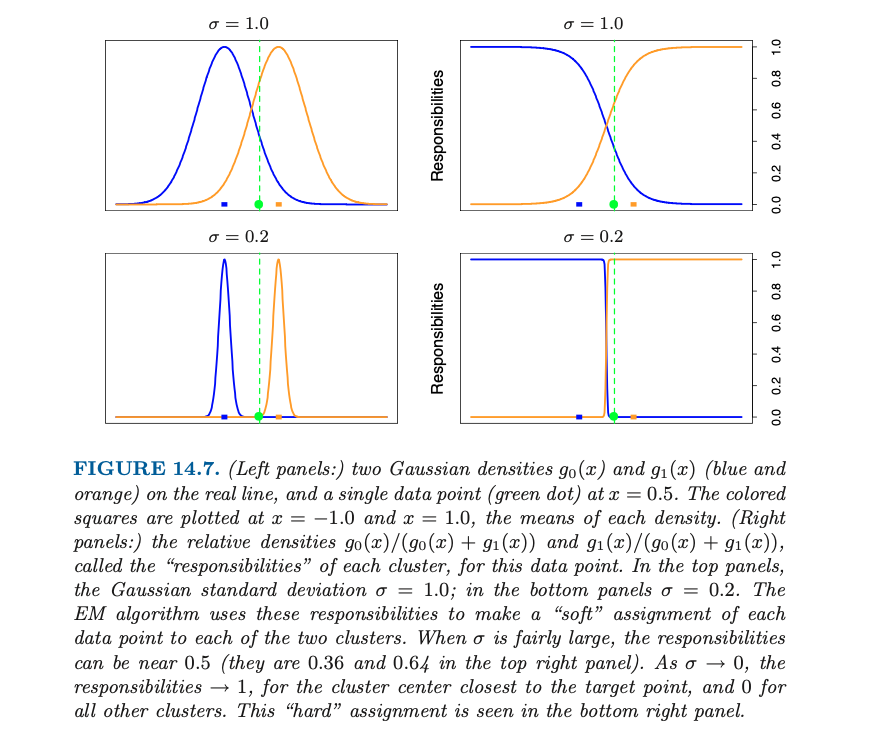

- Responsibilities

- For given two density and and a data point , the relative densitiesare called the of each cluster, for this data point

- For given two density and and a data point , the relative densities

Gaussian mixture as soft K-means clustering

- EM algorithm is a soft version of K-means clustering, making probabilistic (rather than deterministic) assignments of points to cluster centers

- As , these probabilities become 0 and 1, and the two methods K-means and Gaussian mixture coincide

3. Hierachical clustering

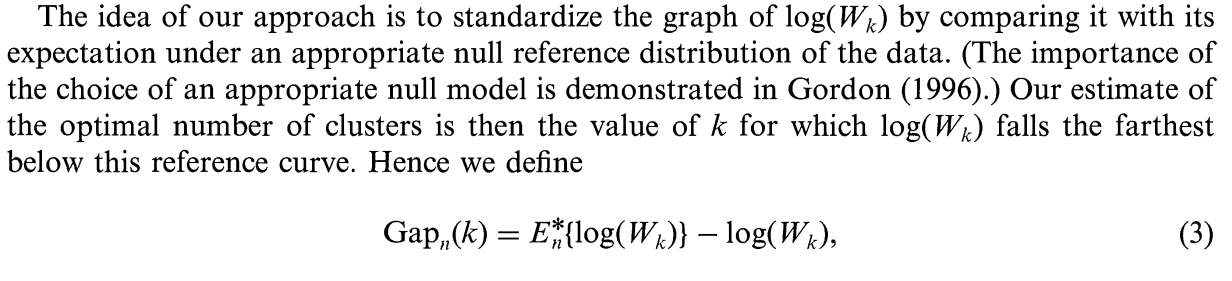

Gap statistics

Tibishirani proposed a statistic for choosing optimal number of clusters in 2001, called :

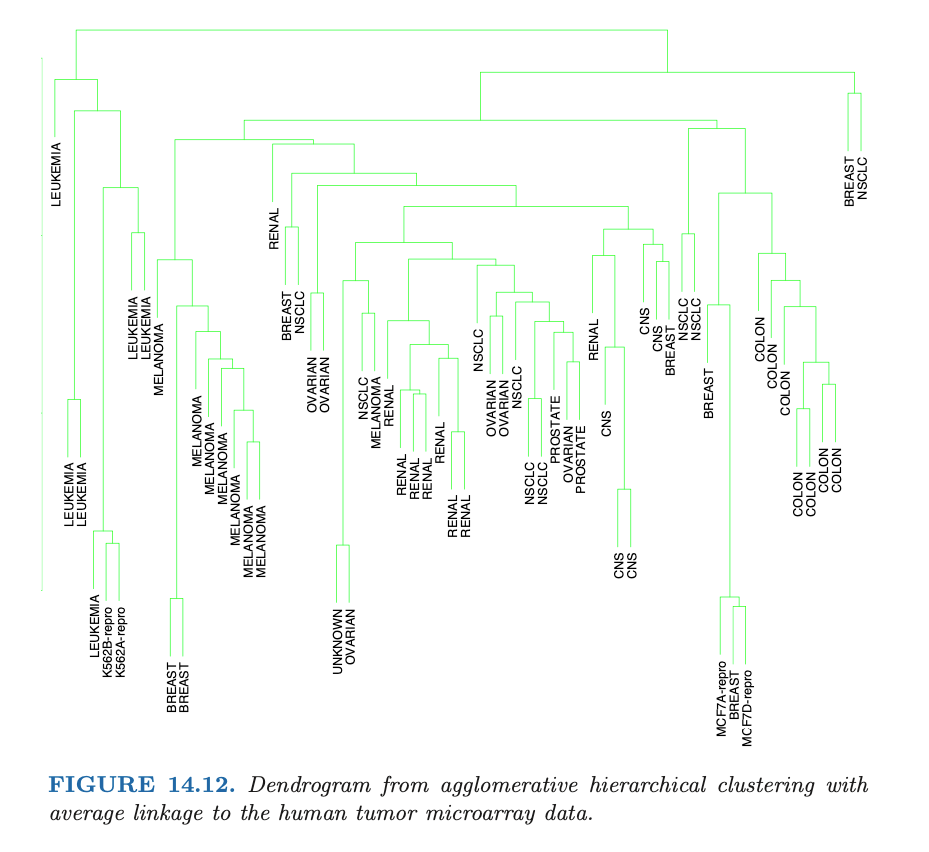

Dendrogram

A provides a highly interpretable complete description of the hierachical clustering in a graphical format.

Agglomerative vs. Divisive

| Agglomerative | Divisive |

|---|---|

| Bottom up | Top down |

| Recursively merge | Recursively split |

| Begin with every observation representing a singleton cluster | Begin with the entire data set as a single cluster |

- Agglomerative clustering

- Begin with every observation representing a singleton cluster

- At each of the steps, the closest two cluseters are merged into a single cluster, producing one less cluster at the next higher level

- Therefore, a measure of dissimilarity between two clusters must be defined

- Divisive clustering

- This approach has not been studied nearly as extensively as agglomerative methods in the clustering literature

Dissimilarity measures

| Type | Formulation | Description |

|---|---|---|

| Single linkage | - Violate the compactness property - i.e., produce clusters with very large diameters | |

| Complete linkage | - Violate the closeness property - i.e., observations assigned to a cluster can be much closer to members of the other clusters | |

| Group average | - Compromise SL and CL - But have invariance property | |

| Ward linkage | where with |

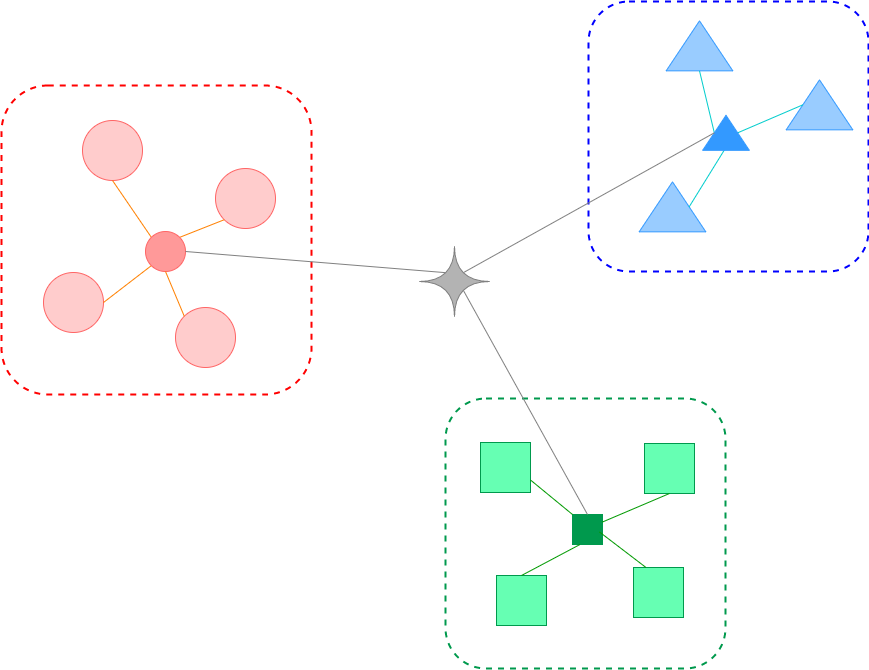

4. Self-Organizing Maps

What is Self-Organizing Maps(SOM)?

- The SOM procedure tries to bend the plane so that the buttons(green dots) approximate the data points as well as possible

- The plane has the prototype(usually, centroid)

- Once the model is fit, the observations can be mapped down(projected) onto the two-dimensional grid

How to do SOM?

- By updating the prototypes! (green dots!)

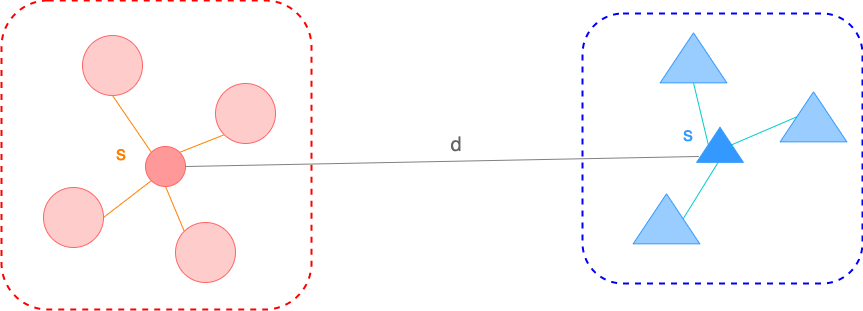

- The observations are processed one at a time(these algorithms are called ). For each ,

- Fine the closest prototype to in Euclidean distance in

- For all neighbors of , move toward via the update

- The neighbors of are defined to be all such that the distance between and is small

- (NOTICE) That "distance" is defined in the integer topological coordinates of the prototypes, rather than in the feature space

- The simplest approach uses Euclidean distance, and "small" is determined by a threshold

- The neighborhood of always includes itself

- is the learning rate

- In summary, SOM is

- Input

- data

- Dimension of rectangular grid

- Parameters

- : the learning rate

- : the distance threshold

- Output

- Fitted prototypes

- Projected retangular grid

- Input

- https://www.kaggle.com/code/izzettunc/introduction-to-time-series-clustering/notebook

SOM vs. K-means

5. Spectral clustering

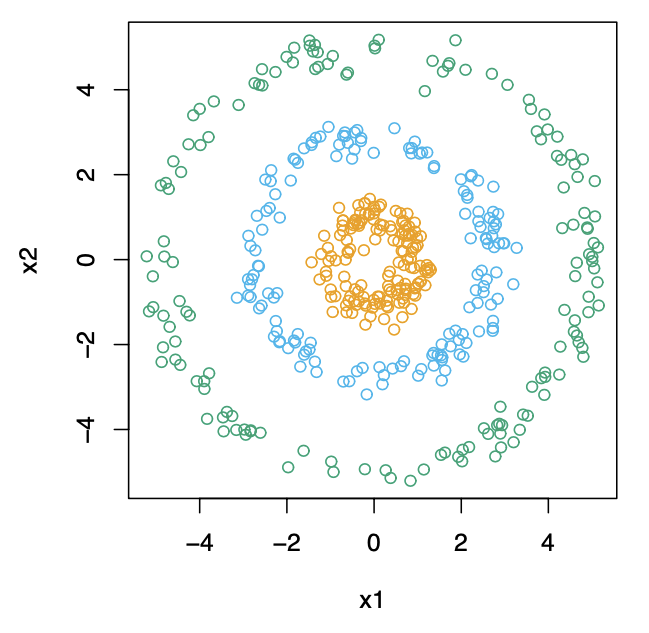

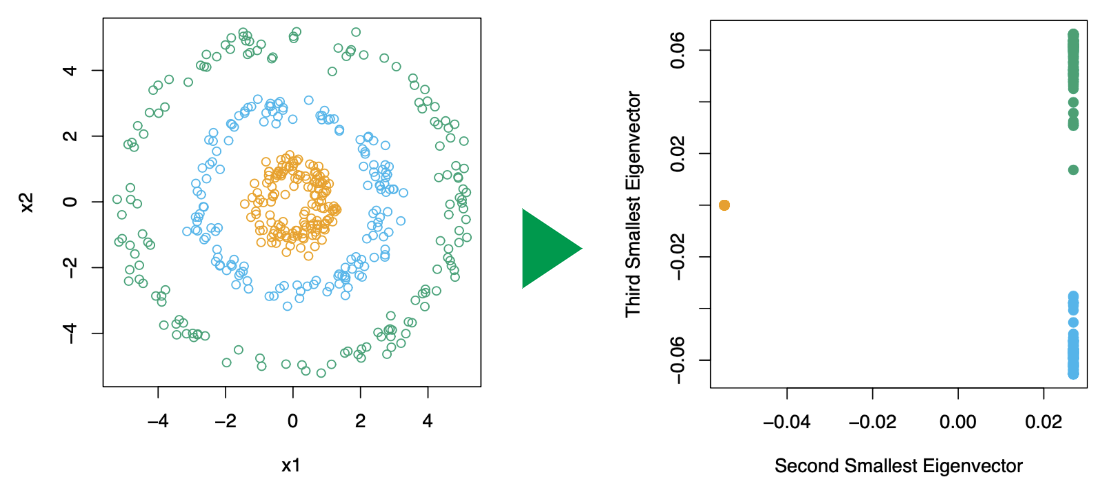

Traditional clustering methods like -means will not work well when the clusters are non-convex like below: (See Figure 14.29 in ESL p.546)

Spectral clustering is a generalization of standard clustering methods, and is designed for these situations

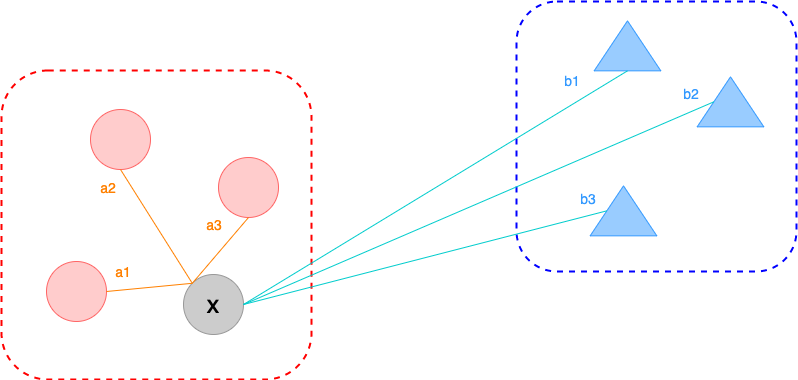

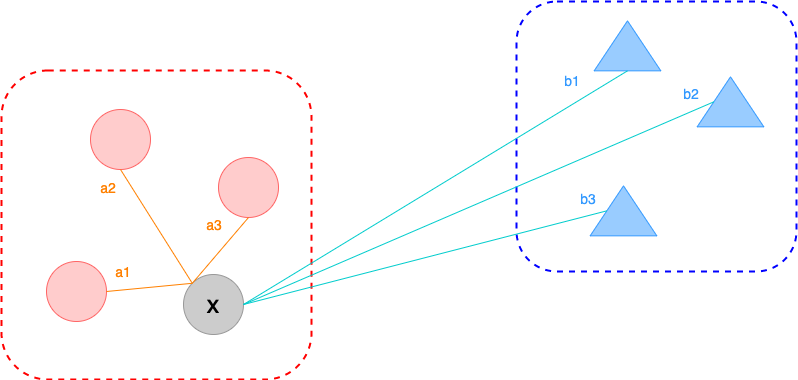

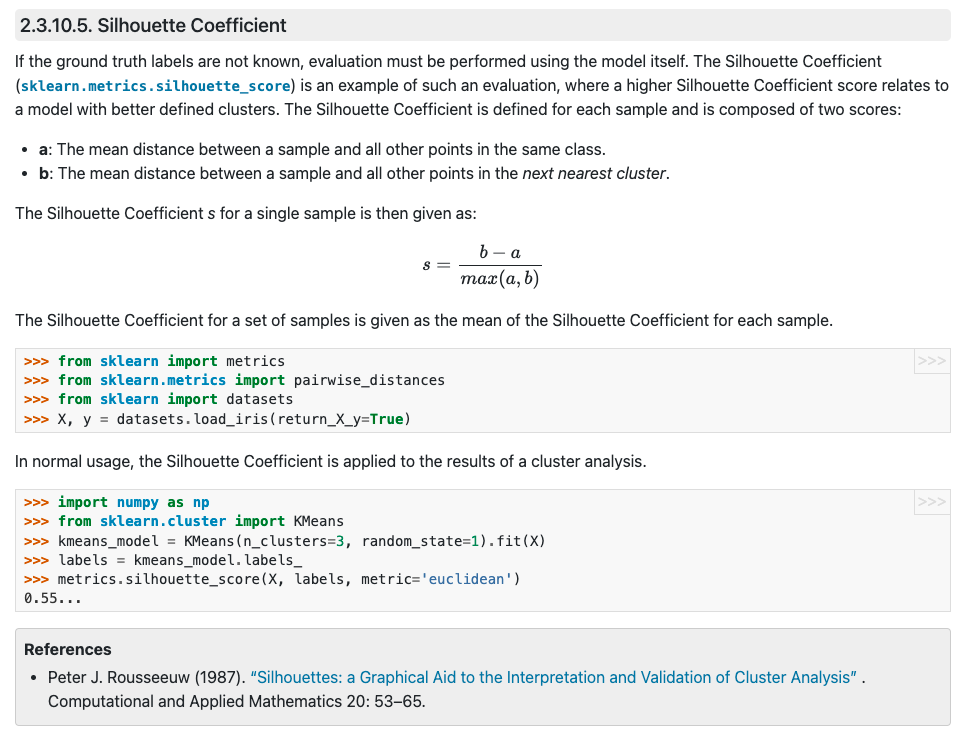

Clustering = graph-partition problem

Clustering can be rephrased as a graph-partition problem, with setup as below:

- a matrix of pairwise similarities between all observation pairs

- represent the obeservations in an

- : the vertices representing the observations

- : the edges connecting pairs of vertices if their similarity is positive, the edges are weighted by the

We wish to partition the graph, such that edges between different groups have low weight, and within a group have high weight. The idea of spectral clustering is to construct similarity graphs that represent the local neighborhood relationships between observations.

Graph Laplacian

The is defined by

where

- : the matrix of edge weights from a similarity graph is called the

- : a diagonal matrix with diagonal elements , the sum of the weights of the edges connected to it. This is called

Note that this is . There are a number of normalized versions have been proposed - for example, .

Steps for spectral clustering

-

Define appropriate (i.e., vertices and edges) that reflects local behavior of observations.

-

Get the correspoding to the similarity graph

-

Find the eigenvectors corresponding to the smallest eigenvalues of (, defined above). (For understanding, see this link and this link). This changes the data points from left to right. (See Figure 14.29 in ESL p.546)

-

Using a standard method like -means, cluster the rows of to yield a clustering of the original data points

Performance evaluation

| Silhouette Coefficient | Calinski-Harabasz Index | Davies-Bouldin Index | |

|---|---|---|---|

|  |  | |

| Mean of scaled differences between within-cluster distance and nearest-cluster distance | The ratio of the between-cluster dispersion and within-cluster dispersion | Mean of the average similarity between each cluster and its most similar(nearest) one | |

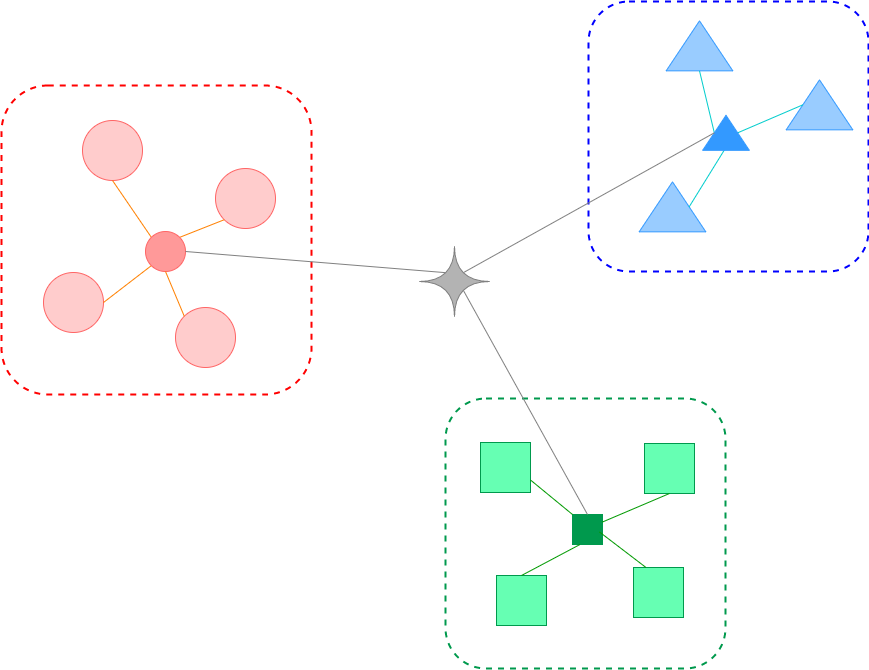

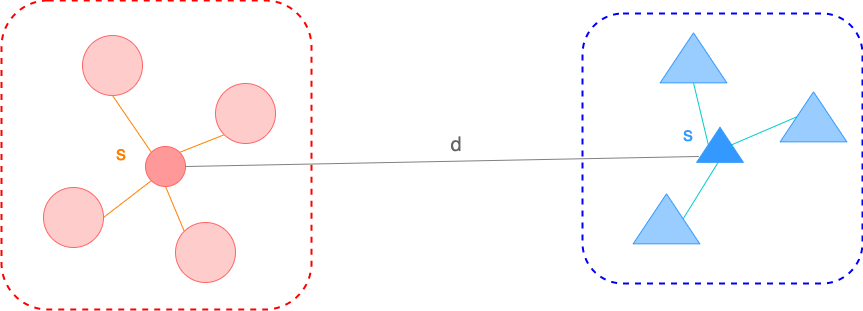

| For a single sample, where - : the mean distance between a sample and all other points in the same cluster - : the mean distance between a sample and all other points in the next nearest cluster For a set of samples, the mean of the Silhouette Coefficient for each sample | For a set of data of size which has been clustered into clusters, the Calinski-Harabasz score is defined as where - : the trace of the between group dispersion matrix - : the trace of the within-cluster dispersion matrix with - : the set of points in cluster - : the center of cluster - : the center of | The Davies-Bouldin index is defined as where with - : the average distance between each point of cluster and the centroid of that cluster - : the distance between cluster centroid and |

- Silhouette Coefficient

- diff(within cluster distance, nearest cluster distance)

- nearest cluster distance = min(between cluster distance)

- For a given single sample point ,

- within cluster distance

- nearest cluster distance

- (The Silhouette Coefficient for a set of samples)= mean(the Silhouette Coefficient for each sample)

- diff(within cluster distance, nearest cluster distance)

-

- (Between cluster variance)/(Within cluster variance)

-

- mean(nearest cluster similarity)

Choose optimal K

[References]

- Hastie, T., Tibshirani, R., Friedman, J. (2008) The Elements of Statistical Learning : Data Mining, Inference, and Prediction. Springer

- https://towardsdatascience.com/time-series-clustering-deriving-trends-and-archetypes-from-sequential-data-bb87783312b4

- https://scikit-learn.org/stable/modules/clustering.html#clustering-performance-evaluation