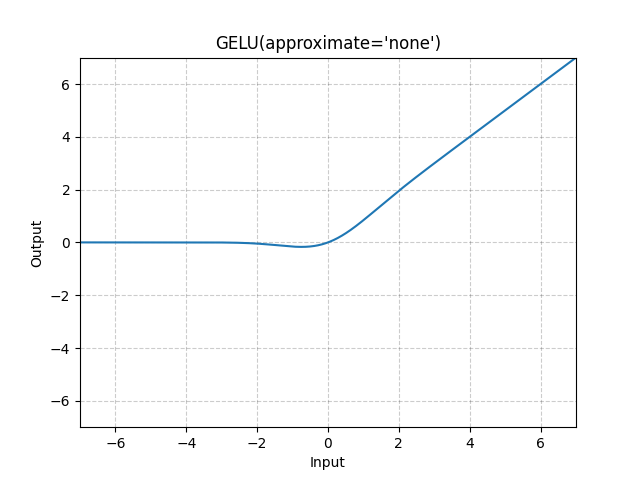

[AI] GELU(Gaussian Error Linear Units)

GELU(Gaussian Error Linear Units)

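GELU applies the Gaussian Error Linear Units function element-wise:

$$\text{GELU}(x) = x \cdot \Phi(x)$$

where $\Phi(x)$ is the cumulative distribution function of the standard Gaussian distribution.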
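To make the definition concrete: the standard Gaussian CDF can be written with the error function as $\Phi(x) = \tfrac{1}{2}\left(1 + \operatorname{erf}(x/\sqrt{2})\right)$. The sketch below is a minimal scalar version using only Python's standard library. The name `gelu_exact` is chosen here for illustration and is not part of PyTorch.

```python
import math


def gelu_exact(x: float) -> float:
    """Scalar GELU: x * Phi(x), with Phi the standard normal CDF."""
    # Phi(x) expressed through the error function.
    phi = 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
    return x * phi


if __name__ == "__main__":
    # Negative inputs are damped toward zero, large positive inputs pass through.
    for value in (-2.0, -1.0, 0.0, 1.0, 2.0):
        print(f"{value:+.1f} -> {gelu_exact(value):+.4f}")
```

Unlike ReLU, the function is smooth around zero and slightly negative for small negative inputs.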

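When the `approximate` argument is `'tanh'`, GELU is estimated with:

$$\text{GELU}(x) = 0.5\,x\left(1 + \tanh\left(\sqrt{2/\pi}\,\left(x + 0.044715\,x^{3}\right)\right)\right)$$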
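A quick way to see how close the tanh-based estimate is to the erf-based definition is to evaluate both on a grid. This is a small standard-library sketch, not PyTorch's implementation. The helper names `gelu_exact` and `gelu_tanh` and the grid range of [-5, 5] are assumptions made for this example.

```python
import math


def gelu_exact(x: float) -> float:
    """Reference GELU using the error function for the Gaussian CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_tanh(x: float) -> float:
    """Tanh-based estimate of GELU (the form used when approximate='tanh')."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))


# Largest absolute difference on an evenly spaced grid over [-5, 5].
grid = [i / 100.0 for i in range(-500, 501)]
max_gap = max(abs(gelu_exact(x) - gelu_tanh(x)) for x in grid)

if __name__ == "__main__":
    print(f"max |exact - tanh| on grid: {max_gap:.2e}")
```

The estimate trades a small amount of accuracy for avoiding the error function, which can be cheaper on some hardware.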

- Input: (∗), where ∗ means any number of dimensions.

- Output: (∗), same shape as the input.
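The shape contract above follows from GELU being applied to each element independently. As a plain-Python stand-in for tensors, the sketch below applies GELU to arbitrarily nested lists and checks that the nesting is preserved. The helpers `gelu_nested` and `shape` are hypothetical names for this illustration, and `shape` assumes rectangular (non-ragged) lists.

```python
import math


def gelu(x: float) -> float:
    """Scalar GELU via the error function."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_nested(data):
    """Apply GELU to every leaf of a nested list, keeping the nesting intact."""
    if isinstance(data, list):
        return [gelu_nested(item) for item in data]
    return gelu(float(data))


def shape(data):
    """Return the dimensions of a rectangular nested list as a tuple."""
    dims = []
    while isinstance(data, list):
        dims.append(len(data))
        if not data:
            break
        data = data[0]
    return tuple(dims)


# A 2 x 2 x 2 "tensor" built from nested lists.
batch = [[[0.5, -1.0], [2.0, 0.0]], [[1.5, -0.5], [-2.0, 3.0]]]
out = gelu_nested(batch)

if __name__ == "__main__":
    print(shape(batch), shape(out))
```

With PyTorch itself, the same property should hold for `torch.nn.GELU()` applied to a tensor of any shape.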

Reference

[1] https://pytorch.org/docs/stable/generated/torch.nn.GELU.html#torch.nn.GELU