Cluster Autoscaler

Reference - https://www.eksworkshop.com/beginner/080_scaling/deploy_ca/

Reference - https://docs.aws.amazon.com/ko_kr/eks/latest/userguide/autoscaling.html

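Cluster Autoscaler only manages Auto Scaling groups that carry specific tags, so if you want a particular node group to be scaled, add those tags to it.

In other words, the tags have to be set manually on the Auto Scaling group.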
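As a rough sketch of the manual tagging step: the auto-discovery manifest used later in these notes looks for the tag keys `k8s.io/cluster-autoscaler/enabled` and `k8s.io/cluster-autoscaler/<cluster name>`. The helper names `ca_tag_specs` and `tag_asg_for_ca` are made up for illustration, and the ASG and cluster names are placeholders you would supply yourself.

```shell
# Print the two tag specs that Cluster Autoscaler auto-discovery looks for.
# $1 = ASG name, $2 = cluster name (both supplied by the caller).
# Auto-discovery matches on the tag keys; the value "true" here is arbitrary.
ca_tag_specs() {
  for key in "k8s.io/cluster-autoscaler/enabled" "k8s.io/cluster-autoscaler/$2"; do
    printf 'ResourceId=%s,ResourceType=auto-scaling-group,Key=%s,Value=true,PropagateAtLaunch=true\n' "$1" "$key"
  done
}

# Apply the tags with the AWS CLI, one call per tag spec.
tag_asg_for_ca() {
  ca_tag_specs "$1" "$2" | while IFS= read -r spec; do
    aws autoscaling create-or-update-tags --tags "$spec"
  done
}

# Usage (not run here): tag_asg_for_ca "$ASG_NAME" wsi-eks-cluster
```

Keeping the spec generation separate from the AWS call makes it easy to print and review the tags before applying them.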

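CA scales out by adding nodes when Pods are stuck in the Pending state. How does scale-in work, then? A node is only removed once it is no longer needed, and CA judges that from the resource requests of the Pods scheduled on it rather than from live CPU usage, so the Pods occupying the node have to be scaled down or moved elsewhere first. Keep this in mind.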
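To make the scale-in condition concrete, here is a small sketch of how, to my understanding, CA judges a node: it compares the sum of the Pods' resource requests on the node with the node's allocatable capacity, and treats the node as a removal candidate when that ratio is below `--scale-down-utilization-threshold` (0.5 by default). The function name and the numbers are illustrative only. Real CA also looks at memory and checks whether the remaining Pods can be rescheduled.

```shell
# Return 0 (true) if a node would count as underutilized by CPU requests.
# $1 = sum of CPU requests on the node, in millicores
# $2 = node allocatable CPU, in millicores
# $3 = threshold in percent (optional, default 50)
node_is_underutilized() {
  requested="$1"; allocatable="$2"; threshold="${3:-50}"
  # Integer form of: requested / allocatable < threshold / 100
  [ $(( requested * 100 )) -lt $(( allocatable * threshold )) ]
}

# Example: 500m requested on a node with an assumed 1930m allocatable
if node_is_underutilized 500 1930; then
  echo "scale-in candidate"
fi
```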

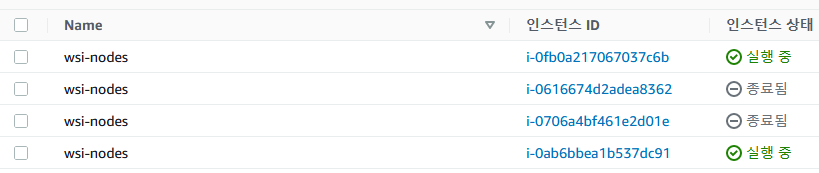

Configure the ASG

- Check the ASG settings (replace eksworkshop-eksctl with your own cluster name)

aws autoscaling \
  describe-auto-scaling-groups \
  --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='eksworkshop-eksctl']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" \
  --output table

- Update the maximum capacity to 4 instances

# ClusterName must already be set to your cluster name before running this
export ASG_NAME=$(aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='$ClusterName']].AutoScalingGroupName" --output text)

aws autoscaling \
  update-auto-scaling-group \
  --auto-scaling-group-name "${ASG_NAME}" \
  --min-size 2 \
  --desired-capacity 2 \
  --max-size 4

- Verify the update

aws autoscaling \
  describe-auto-scaling-groups \
  --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='eksworkshop-eksctl']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" \
  --output table

IAM roles for service accounts
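IAM roles for service accounts depend on the cluster having an IAM OIDC identity provider. The sketch below, assuming the cluster is named `wsi-eks-cluster` as in the later commands, shows how one might check for a provider and associate one with eksctl if it is missing. `oidc_id_from_issuer` and `ensure_oidc_provider` are illustrative helper names, not part of any tool.

```shell
# Extract the provider ID (last path segment) from an OIDC issuer URL.
oidc_id_from_issuer() {
  printf '%s\n' "${1##*/}"
}

# Associate an IAM OIDC provider with the cluster when none is registered yet.
# $1 = cluster name
ensure_oidc_provider() {
  issuer=$(aws eks describe-cluster --name "$1" \
    --query "cluster.identity.oidc.issuer" --output text) || return 1
  id=$(oidc_id_from_issuer "$issuer")
  if aws iam list-open-id-connect-providers --output text | grep -q "$id"; then
    echo "OIDC provider already present: $id"
  else
    eksctl utils associate-iam-oidc-provider --cluster "$1" --approve
  fi
}

# Usage (not run here): ensure_oidc_provider wsi-eks-cluster
```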

- Create a working directory

mkdir cluster-autoscaler && cd cluster-autoscaler

Getting started with IAM roles for service accounts

- Create an IAM policy for the service account that allows the CA Pod to interact with the Auto Scaling group

cat << EOF > k8s-asg-policy.json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Action": [
                "autoscaling:DescribeAutoScalingGroups",
                "autoscaling:DescribeAutoScalingInstances",
                "autoscaling:DescribeLaunchConfigurations",
                "autoscaling:DescribeTags",
                "autoscaling:SetDesiredCapacity",
                "autoscaling:TerminateInstanceInAutoScalingGroup",
                "ec2:DescribeLaunchTemplateVersions"
            ],
            "Resource": "*",
            "Effect": "Allow"
        }
    ]
}
EOF

aws iam create-policy \
  --policy-name k8s-asg-policy \
  --policy-document file://k8s-asg-policy.json

- Finally, create an IAM role for the cluster-autoscaler service account in the kube-system namespace (IAMServiceAccountCreate)

eksctl create iamserviceaccount \

--name cluster-autoscaler \

--namespace kube-system \

--cluster wsi-eks-cluster \

--attach-policy-arn "arn:aws:iam::040217728499:policy/k8s-asg-policy" \

--approve \

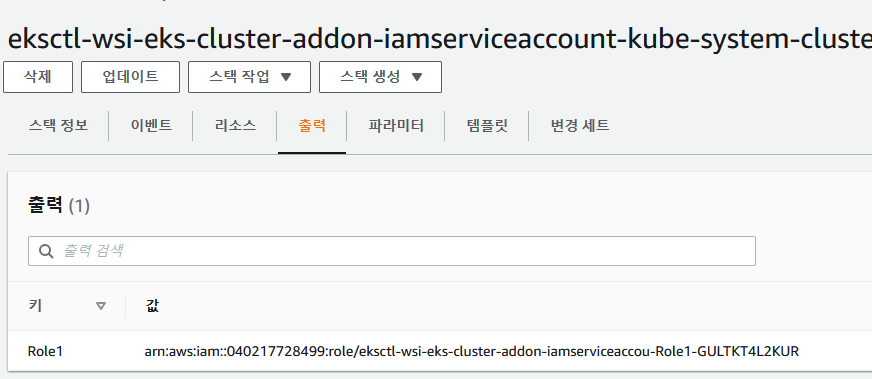

--override-existing-serviceaccounts- IAM 역할의 ARN이 있는 서비스 계정에 주석이 추가되었는지 확인합니다.

kubectl -n kube-system describe sa cluster-autoscaler

Output (printed when the command above is run)

Name:                cluster-autoscaler
Namespace:           kube-system
Labels:              app.kubernetes.io/managed-by=eksctl
Annotations:         eks.amazonaws.com/role-arn: arn:aws:iam::040217728499:role/eksctl-wsi-eks-cluster-addon-iamserviceaccou-Role1-GULTKT4L2KUR
Image pull secrets:  <none>
Mountable secrets:   cluster-autoscaler-token-h56mz
Tokens:              cluster-autoscaler-token-h56mz
Events:              <none>

Deploy the Cluster Autoscaler (CA)

- Download the Cluster Autoscaler YAML file

curl -o cluster-autoscaler-autodiscover.yaml https://raw.githubusercontent.com/kubernetes/autoscaler/master/cluster-autoscaler/cloudprovider/aws/examples/cluster-autoscaler-autodiscover.yaml

- Edit the YAML file: replace the <YOUR CLUSTER NAME> placeholder with the cluster name.

sed -i 's|<YOUR CLUSTER NAME>|wsi-eks-cluster|g' ./cluster-autoscaler-autodiscover.yaml

- Deploy

kubectl apply -f cluster-autoscaler-autodiscover.yaml

- Annotate the cluster-autoscaler service account -> the role ARN can be found in the CloudFormation outputs

kubectl annotate serviceaccount cluster-autoscaler \
  -n kube-system \
  eks.amazonaws.com/role-arn=arn:aws:iam::040217728499:role/eksctl-wsi-eks-cluster-addon-iamserviceaccou-Role1-GULTKT4L2KUR
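Rather than pasting the role ARN by hand, it can be read back from the service account that eksctl created. A hedged sketch follows: `is_iam_role_arn` and `sa_role_arn` are hypothetical helper names, the ARN check only covers the standard `aws` partition, and the jsonpath expression assumes the `eks.amazonaws.com/role-arn` annotation key used in these notes.

```shell
# Return 0 if the argument looks like an IAM role ARN
# (arn:aws:iam::<12-digit account>:role/<name>).
is_iam_role_arn() {
  printf '%s\n' "$1" | grep -Eq '^arn:aws:iam::[0-9]{12}:role/.+$'
}

# Print the eks.amazonaws.com/role-arn annotation of a service account.
# $1 = namespace, $2 = service account name
sa_role_arn() {
  kubectl -n "$1" get serviceaccount "$2" \
    -o jsonpath='{.metadata.annotations.eks\.amazonaws\.com/role-arn}'
}

# Usage (not run here):
#   arn=$(sa_role_arn kube-system cluster-autoscaler)
#   is_iam_role_arn "$arn" && echo "annotation OK: $arn"
```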

- 배포 패치 -> cluster-autoscaler.kubernetes.io/safe-to-evict 주석을 Cluster Autoscaler pods에 추가

kubectl -n kube-system \

annotate deployment.apps/cluster-autoscaler \

cluster-autoscaler.kubernetes.io/safe-to-evict="false"

또는,

kubectl patch deployment cluster-autoscaler \

-n kube-system \

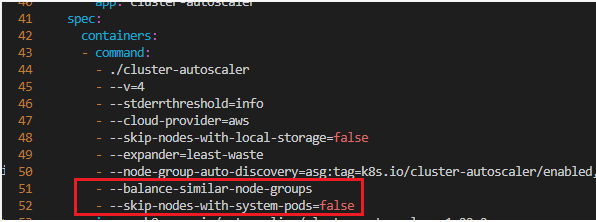

-p '{"spec":{"template":{"metadata":{"annotations":{"cluster-autoscaler.kubernetes.io/safe-to-evict": "false"}}}}}'- Cluster Autoscaler 배포 편집 -> 아래 참고

kubectl -n kube-system edit deployment./cluster-autoscaler

:wq

- Cluster Autoscaler Version Update

export K8S_VERSION=$(kubectl version --short | grep 'Server Version:' | sed 's/[^0-9.]*\([0-9.]*\).*/\1/' | cut -d. -f1,2)

export AUTOSCALER_VERSION=$(curl -s "https://api.github.com/repos/kubernetes/autoscaler/releases" | grep '"tag_name":' | sed -s 's/.*-\([0-9][0-9\.]*\).*/\1/' | grep -m1 ${K8S_VERSION})

kubectl -n kube-system \

set image deployment.apps/cluster-autoscaler \

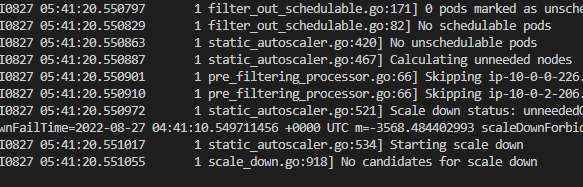

cluster-autoscaler=us.gcr.io/k8s-artifacts-prod/autoscaling/cluster-autoscaler:v${AUTOSCALER_VERSION}- Watch the logs -> 아래와 비슷한 마지막 출력

kubectl -n kube-system logs -f deployment/cluster-autoscaler
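The version lookup in the update step boils down to picking the newest release tag whose major.minor matches the cluster. Here is a small sketch of that selection, reading tags from stdin so it can be tried without network access. `pick_ca_version` is a made-up name, and the sample tags are only examples of the `cluster-autoscaler-X.Y.Z` tag format. Unlike a plain `grep`, it escapes the dot and anchors the match, so 1.2 does not accidentally match 1.21.

```shell
# Read release tags on stdin (one per line, newest first) and print the first
# cluster-autoscaler version whose major.minor equals $1 (e.g. 1.21).
pick_ca_version() {
  # Escape dots so they match literally in the sed pattern below.
  minor_escaped=$(printf '%s' "$1" | sed 's/\./\\./g')
  sed -n "s/^cluster-autoscaler-\(${minor_escaped}\.[0-9][0-9]*\)\$/\1/p" | head -n 1
}

# Example with sample tags; chart tags and other minors are skipped.
printf '%s\n' cluster-autoscaler-chart-9.21.0 cluster-autoscaler-1.22.2 \
  cluster-autoscaler-1.21.1 cluster-autoscaler-1.21.0 | pick_ca_version 1.21
# prints 1.21.1
```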

Scale Test

- Deploy the Pods

cat <<EoF > nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-to-scaleout
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        service: nginx
        app: nginx
    spec:
      containers:
      - image: nginx
        name: nginx-to-scaleout
        resources:
          limits:
            cpu: 500m
            memory: 512Mi
          requests:
            cpu: 500m
            memory: 512Mi
EoF

kubectl apply -f ~/environment/cluster-autoscaler/nginx.yaml

kubectl get deployment/nginx-to-scaleout

kubectl scale --replicas=50 deployment/nginx-to-scaleout
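Why 50 replicas forces a scale-out can be seen with simple arithmetic on the 500m CPU request. The sketch below is illustrative: the function names are made up, and the 1930m allocatable figure is only an assumed example for a 2-vCPU node, not a value taken from these notes. It also ignores system Pods already running on each node, so real capacity is a little lower.

```shell
# How many Pods with a given CPU request fit on one node, by CPU alone.
# $1 = node allocatable CPU (millicores), $2 = per-Pod CPU request (millicores)
pods_per_node() {
  echo $(( $1 / $2 ))
}

# Nodes needed for N replicas (ceiling division).
# $1 = replicas, $2 = Pods that fit on one node
nodes_needed() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

fit=$(pods_per_node 1930 500)   # 3 Pods per node under the assumed figure
nodes_needed 50 "$fit"          # 17 nodes, far more than the ASG maximum of 4
```

With the ASG capped at 4 instances, most of the 50 replicas stay Pending even after CA has scaled out as far as it can.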

kubectl get pods -l app=nginx -o wide --watch

- Check in a split terminal (open one more terminal and check there)

kubectl -n kube-system logs -f deployment/cluster-autoscaler

- Check the nodes

kubectl get nodes

Verify Scale In

-

다시 Pod 갯수 줄이기

kubectl scale --replicas=1 deployment/nginx-to-scaleout -

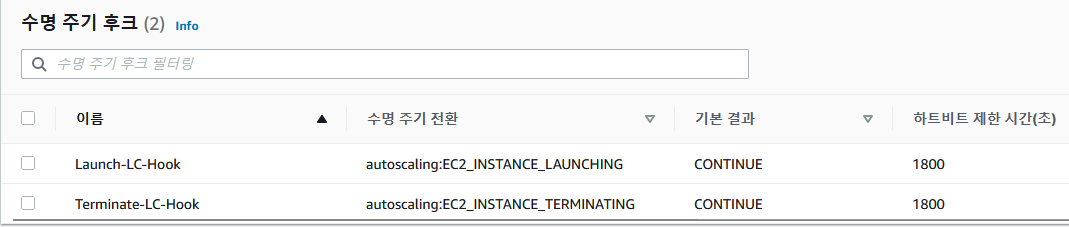

그럼 Node가 수명주기후크로 인해서 6분뒤에 다시 Scale In 합니다.
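Instead of re-running `kubectl get nodes` by hand while waiting, the scale-in can be watched with a small polling loop. This is only a sketch: `count_ready_nodes` and `wait_for_scale_in` are hypothetical helper names, and the 30-second interval and 900-second default timeout are arbitrary choices.

```shell
# Count Ready nodes from `kubectl get nodes --no-headers` output on stdin.
# Cordoned nodes (STATUS "Ready,SchedulingDisabled") are not counted.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Poll until the Ready node count drops to the target or the timeout expires.
# $1 = target node count, $2 = timeout in seconds (default 900)
wait_for_scale_in() {
  target="$1"
  deadline=$(( $(date +%s) + ${2:-900} ))
  while [ "$(date +%s)" -lt "$deadline" ]; do
    current=$(kubectl get nodes --no-headers | count_ready_nodes)
    echo "Ready nodes: $current"
    [ "$current" -le "$target" ] && return 0
    sleep 30
  done
  return 1
}

# Usage (not run here): wait_for_scale_in 2
```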