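This post is based on material from the third cohort of the AWS EKS Workshop Study (AEWS) run by the CloudNet@ team.

AEWS is a study group led by Gasida (가시다) of CloudNet@ that focuses on learning EKS.

Once again, many thanks to Gasida for the opportunity to understand EKS in depth and for generously sharing valuable knowledge.

I hope this post helps others who are learning EKS.

1. Planning a K8S upgrade in a hypothetical on-premises environment (in-place)

1.1 Environment overview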

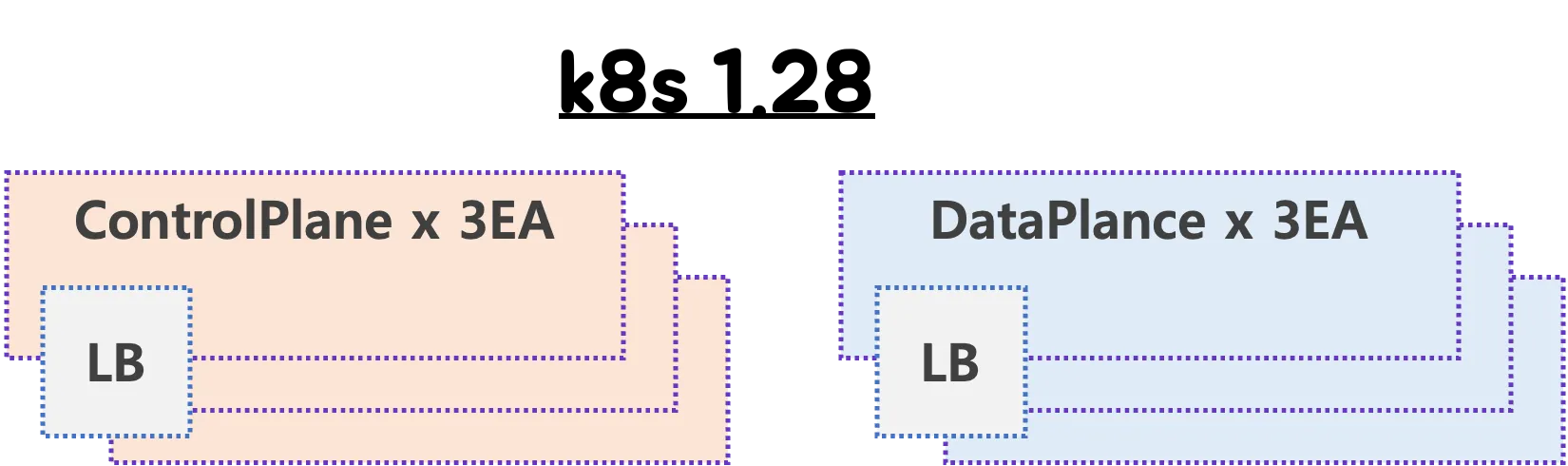

- Current Kubernetes version: 1.28.2

- Target Kubernetes version: 1.32

- Node layout:

  - Control Plane (CP) nodes: 3

  - Data Plane (DP) nodes: 3

  - A hardware load balancer (HW LB) sits in front of the CP nodes and distributes traffic across them

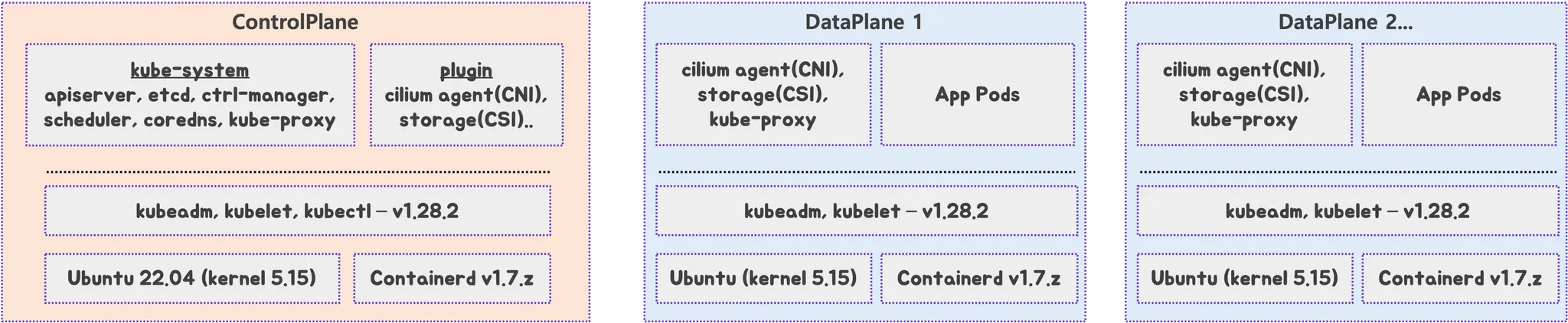

- OS and key component versions:

  - Ubuntu 22.04 (kernel 5.15)

  - containerd 1.7.x

  - kubeadm, kubelet: 1.28.2

  - CNI: Cilium 1.14.x

  - CSI: OpenEBS 3.y.z

- Applications and requirements: to be written up after surveying the current state

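1.2 Pre-upgrade compatibility review

Before upgrading, check each component's kernel and K8S compatibility as follows.

1-2-1. Kernel requirements of Kubernetes 1.32

- According to the official Kubernetes documentation, some features (such as user namespaces) require Linux kernel 6.5 or later.

- Depending on whether those features are used, the current kernel 5.15 may still be sufficient, but a kernel upgrade is recommended for extensibility and stability.

1-2-2. containerd compatibility

- When containerd is the container runtime for Kubernetes 1.32, refer to the official containerd compatibility documentation.

- Features such as user namespaces require containerd v2.0 or later and runc v1.2 or later, so containerd needs to be upgraded to satisfy these requirements.

1-2-3. CNI (Cilium)

- According to the official Cilium documentation, Kubernetes 1.32 is supported by Cilium 1.14 or later. Because 1.14.x was released well before Kubernetes 1.32, re-check the compatibility table for the exact Cilium release before relying on this.

- Some features (such as BPF host-routing) require kernel 5.10 or later. The current kernel 5.15 meets this requirement.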
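The kernel thresholds quoted above (5.10 for Cilium features, 6.5 for user namespaces) can be checked on each node with a small version comparison. The following is a minimal sketch, assuming GNU `sort -V` is available; the helper name `version_ge` is hypothetical and not part of any tool mentioned in this post.

```shell
#!/usr/bin/env bash
# version_ge A B: succeed when version A is greater than or equal to version B.
# Assumes a sort implementation that supports -V (version sort), e.g. GNU coreutils.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# Strip the distro suffix (e.g. 5.15.0-101-generic -> 5.15.0) before comparing.
kernel="$(uname -r | cut -d- -f1)"

# Thresholds taken from the compatibility notes above.
for required in 5.10 6.5; do
  if version_ge "$kernel" "$required"; then
    echo "kernel $kernel satisfies >= $required"
  else
    echo "kernel $kernel is older than $required"
  fi
done
```

Running this on every CP and DP node before the upgrade gives a quick view of which nodes would need the kernel upgrade in steps ③ and ⑦.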

1-2-4. CSI

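1-2-5. Application compatibility

- Check in advance whether the applications and workloads deployed in the cluster are compatible with the target Kubernetes API versions, including whether they use deprecated APIs.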
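One way to look for deprecated API usage is the API server metric `apiserver_requested_deprecated_apis`, which records requests made to deprecated group/version/resource combinations. The sketch below filters that metric from metrics text on stdin; the function name `deprecated_apis` is hypothetical, and the parsing assumes the labels appear in alphabetical order (group, resource, version), which is an assumption about the exposition format rather than a guarantee.

```shell
#!/usr/bin/env bash
# deprecated_apis: read API server metrics text on stdin and print the distinct
# "group version resource" triples that were requested through deprecated APIs.
# Assumes labels are emitted in alphabetical order: group, ..., resource, ..., version.
deprecated_apis() {
  grep '^apiserver_requested_deprecated_apis{' \
    | sed -E 's/.*[{,]group="([^"]*)".*,resource="([^"]*)".*,version="([^"]*)".*/\1 \3 \2/' \
    | sort -u
}

# Usage against a live cluster (needs permission to read /metrics):
#   kubectl get --raw /metrics | deprecated_apis
```

The metric only reflects requests seen since the API server started, so an empty result does not prove that no manifest in Git still references a deprecated API.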

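1.3 Choosing the upgrade method

- Upgrade method: In-place vs Blue-Green

- Per environment (Dev, Stg, Prd): a different method for each vs the same method for all

- Decision: In-place upgrade

  - Pros: minimal resource waste, shorter build-out time

  - Cons: possible service impact → requires thorough pre-checks and stronger monitoring

- Blue-Green requires double the resources, so it was not chosen for this hypothetical environment.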

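1.4 Drawing up the upgrade plan

The upgrade is carried out in the following order and according to the following criteria.

1-4-1. Preparation

- (Optional) Write out the detailed commands and scripts for each task, plus rollback commands/scripts for when a task is interrupted or fails: this automates repetitive work and prepares for fast recovery on failure

- Set up and check the monitoring system: to detect anomalies during the upgrade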

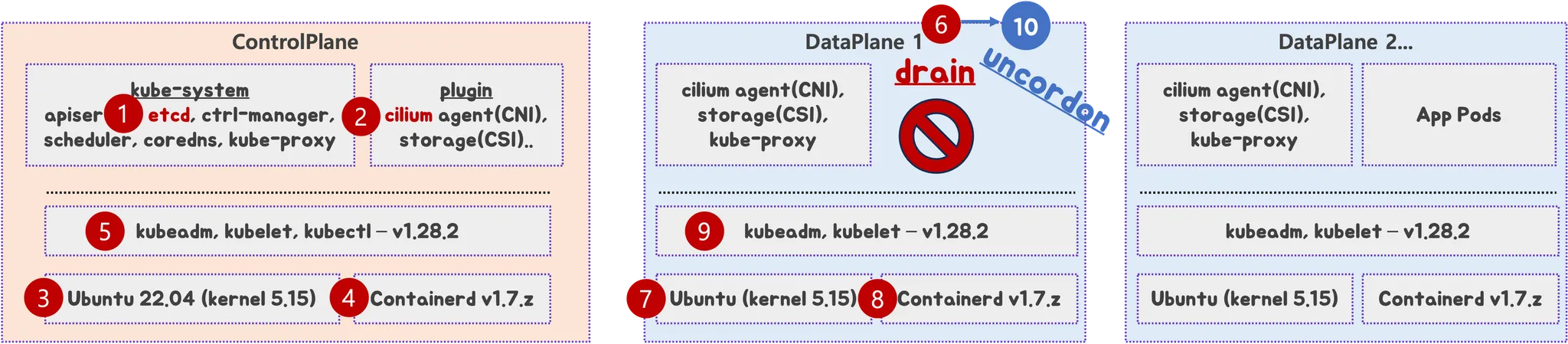

- ① etcd backup: take an etcd snapshot on a Control Plane node

- ② CNI (Cilium) upgrade: upgrade ahead of time to the latest version compatible with the target Kubernetes version
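Step ① can be sketched with `etcdctl snapshot save`. This is a minimal example, assuming a kubeadm-managed stacked etcd with certificates in the default `/etc/kubernetes/pki/etcd` location; the output directory `/var/backups` and the helper names `run` and `etcd_backup` are hypothetical. The wrapper defaults to a dry run so that nothing is executed until `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# run: print the command by default; execute it only when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# etcd_backup [FILE]: save an etcd snapshot to FILE.
# Certificate paths are the kubeadm defaults (assumption); adjust if yours differ.
etcd_backup() {
  local out="${1:-/var/backups/etcd-$(date +%Y%m%d-%H%M%S).db}"
  run env ETCDCTL_API=3 etcdctl \
    --endpoints=https://127.0.0.1:2379 \
    --cacert=/etc/kubernetes/pki/etcd/ca.crt \
    --cert=/etc/kubernetes/pki/etcd/server.crt \
    --key=/etc/kubernetes/pki/etcd/server.key \
    snapshot save "$out"
}

# Usage on a Control Plane node:
#   etcd_backup                        # dry run, prints the command
#   DRY_RUN=0 etcd_backup /path/to/snapshot.db
```

Copying the snapshot file off the node afterwards keeps the backup usable even if the node itself fails during the OS upgrade.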

1.5 Control Plane node upgrade (1.28 → 1.30)

Upgrade the nodes one at a time, in sequence. Perform the following steps on each node.

1-5-1. 1.28 → 1.29

- ③ Upgrade the OS (kernel) → reboot

- ④ Upgrade containerd → restart the containerd service

- ⑤ Upgrade kubeadm → upgrade kubelet/kubectl → restart kubelet

1-5-2. 1.29 → 1.30

- ⑤ Upgrade kubeadm → upgrade kubelet/kubectl → restart kubelet

After the upgrade completes, check each node's status and confirm that the cluster is operating normally.
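Step ⑤ expands into a handful of commands per node. The sketch below prints them as a runbook for one minor-version hop, following the apt-based flow in the kubeadm upgrade guide; the function name `kubeadm_steps`, its role names, and the example version `1.29.0` are hypothetical placeholders. It also assumes the apt repository has already been switched to the target minor version, since the community package repositories are organized per minor release.

```shell
#!/usr/bin/env bash
# kubeadm_steps ROLE VERSION: print the per-node commands for one minor-version hop.
# ROLE is "first-cp" (runs kubeadm upgrade apply) or anything else (runs kubeadm upgrade node).
# VERSION is the full target version without the leading "v", e.g. 1.29.0 (placeholder).
kubeadm_steps() {
  local role="$1" version="$2"
  echo "sudo apt-mark unhold kubeadm && sudo apt-get update && sudo apt-get install -y kubeadm='${version}-*' && sudo apt-mark hold kubeadm"
  if [ "$role" = "first-cp" ]; then
    echo "sudo kubeadm upgrade plan"
    echo "sudo kubeadm upgrade apply v${version}"
  else
    echo "sudo kubeadm upgrade node"
  fi
  echo "sudo apt-mark unhold kubelet kubectl && sudo apt-get update && sudo apt-get install -y kubelet='${version}-*' kubectl='${version}-*' && sudo apt-mark hold kubelet kubectl"
  echo "sudo systemctl daemon-reload && sudo systemctl restart kubelet"
}

# Example: commands for the first Control Plane node.
kubeadm_steps first-cp 1.29.0
```

Only the first Control Plane node runs `kubeadm upgrade apply`; the remaining Control Plane nodes and all Data Plane nodes run `kubeadm upgrade node` instead.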

1.6 Data Plane node upgrade (1.28 → 1.30)

DP nodes are also upgraded one at a time, in sequence, as follows.

1-6-1. 1.28 → 1.29

- ⑥ `kubectl drain <node>` → monitor pod eviction and watch for service interruption

- ⑦ Upgrade the OS (kernel) → reboot

- ⑧ Upgrade containerd → restart the containerd service

- ⑨ Upgrade kubeadm → upgrade kubelet → restart kubelet

1-6-2. 1.29 → 1.30

- ⑨ Upgrade kubeadm → upgrade kubelet → restart kubelet

After the upgrade completes, check each node's status.

- ⑩ `kubectl uncordon <node>` → return the node to service and check its status
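Steps ⑥ and ⑩ bracket the node-local work. The following is a minimal sketch of the two `kubectl` calls, with the flags that are commonly needed when DaemonSets and emptyDir volumes are present; the helper names and the 300-second timeout are assumptions, and the wrapper defaults to a dry run so that nothing touches the cluster until `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# run: print the command by default; execute it only when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# drain_node NODE: cordon the node and evict its pods (step 6).
# --ignore-daemonsets: DaemonSet pods cannot be evicted and would otherwise block the drain.
# --delete-emptydir-data: allow eviction of pods that use emptyDir volumes (their data is lost).
drain_node() {
  run kubectl drain "$1" --ignore-daemonsets --delete-emptydir-data --timeout=300s
}

# uncordon_node NODE: make the node schedulable again (step 10).
uncordon_node() {
  run kubectl uncordon "$1"
}

# Usage:
#   drain_node <node>              # dry run
#   DRY_RUN=0 drain_node <node>    # run steps 7-9 on the node afterwards
#   DRY_RUN=0 uncordon_node <node>
```

Because `kubectl drain` respects PodDisruptionBudgets, a drain that hangs until the timeout is often a sign that a PDB currently allows zero disruptions.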

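1.7 First functional check

Once both the Control Plane and Data Plane nodes are on 1.30, check that all applications and Kubernetes features work as expected.

- Key items to check: pod status, service connectivity, errors in logs, resource scheduling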

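1.8 Control Plane node upgrade (1.30 → 1.32)

Repeat the following steps on each node for each minor version (1.30 → 1.31, then 1.31 → 1.32), because kubeadm does not support skipping minor versions.

- Upgrade kubeadm → upgrade kubelet/kubectl → restart kubelet

- Check the node status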
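Since the upgrade has to move one minor version at a time, it can help to list the hops explicitly before scheduling the work. The following is a small sketch that derives them from the current and target versions; the function name `minor_hops` is hypothetical.

```shell
#!/usr/bin/env bash
# minor_hops FROM TO: print every single-minor-version hop between two versions.
# Patch components (e.g. the ".2" in 1.28.2) are ignored.
minor_hops() {
  local major from to m
  major="${1%%.*}"
  from="${1#*.}"; from="${from%%.*}"
  to="${2#*.}";   to="${to%%.*}"
  m="$from"
  while [ "$m" -lt "$to" ]; do
    echo "${major}.${m} -> ${major}.$((m + 1))"
    m=$((m + 1))
  done
}

minor_hops 1.30 1.32
# prints:
# 1.30 -> 1.31
# 1.31 -> 1.32
```

For the whole plan in this post, `minor_hops 1.28.2 1.32` yields four hops, and each hop is applied to all Control Plane nodes before the Data Plane nodes.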

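1.9 Data Plane node upgrade (1.30 → 1.32)

Repeat the following steps on each node for each minor version.

- `kubectl drain <node>` → monitor pod eviction and watch for service interruption

- Upgrade kubeadm → upgrade kubelet → restart kubelet

After the upgrade completes, check each node's status.

- `kubectl uncordon <node>` → return the node to service and check its status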

1.10 Second functional check

Once every node has been upgraded to 1.32, perform a final check of cluster status, workload deployment, pod scheduling, network connectivity, and monitoring system integration.

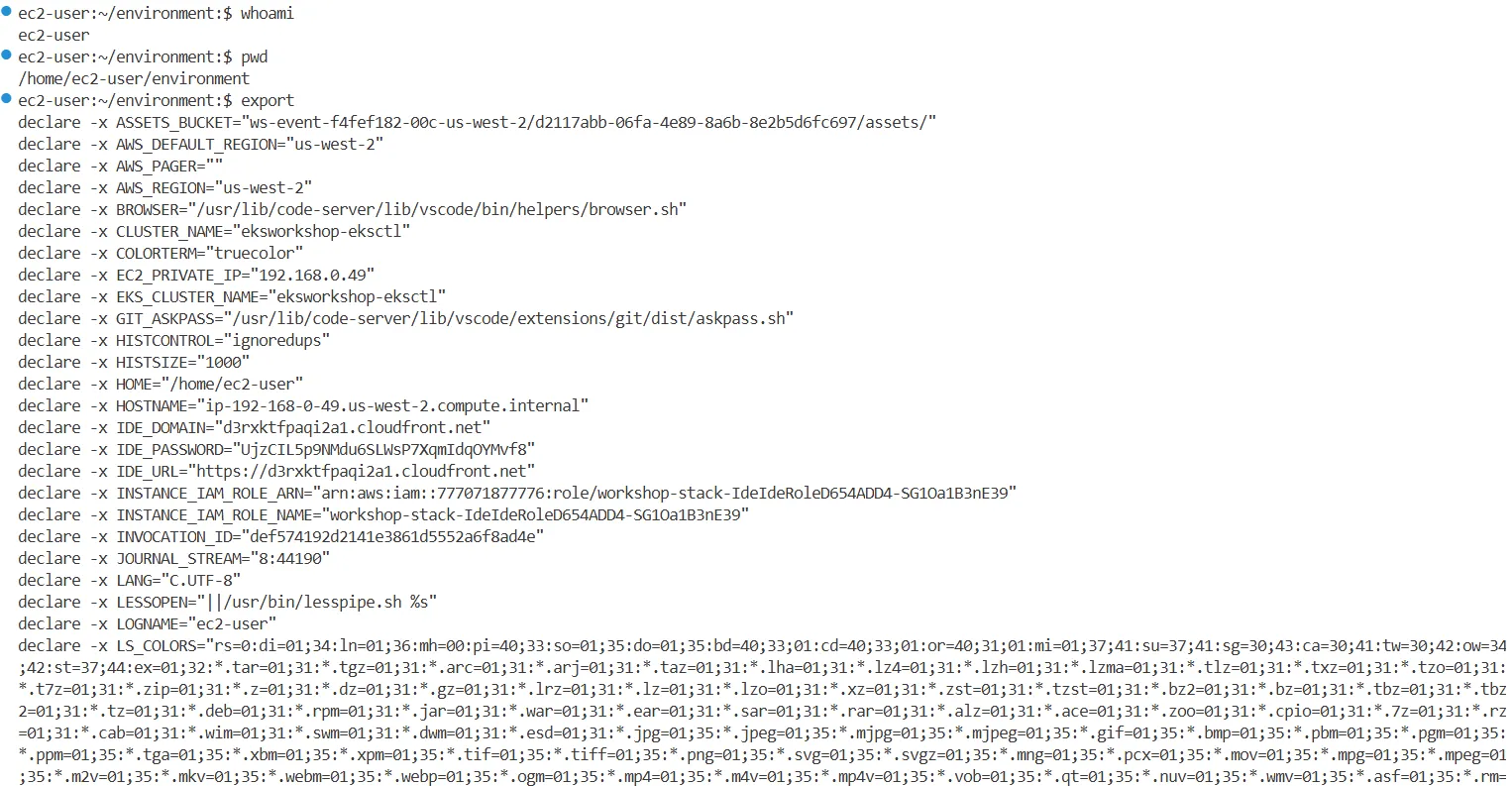

2. Amazon EKS Upgrade Workshop

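2.1 Workshop overview

The Amazon EKS Upgrade Workshop is a hands-on, lab-based course that introduces several upgrade strategies for EKS clusters (In-Place, Blue/Green, and others) and lets you practice them. The labs here cover the In-Place strategy, with the goal of practicing automated cluster upgrades driven by Terraform and ArgoCD.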

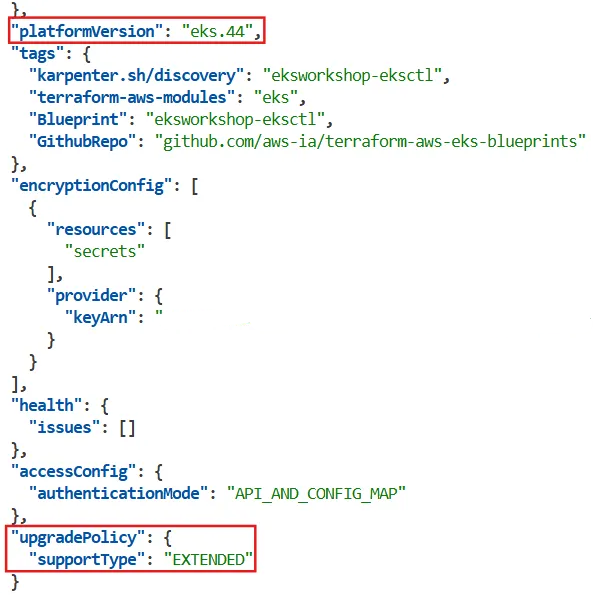

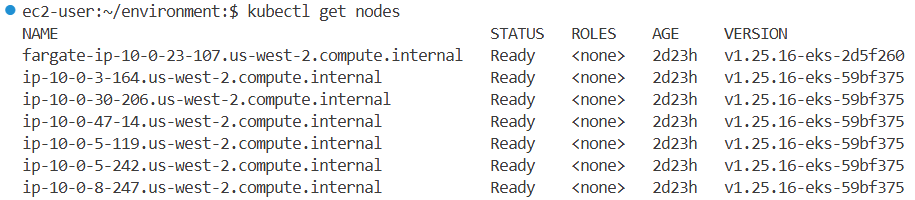

2.2 Base environment and cluster information

- Kubernetes version: v1.25.16

- EKS platform version: eks.44

- Cluster name: `eksworkshop-eksctl`

- Region: `us-west-2`

- Base OS and runtime: Amazon Linux 2 (AL2), kernel 5.10.234, containerd 1.7.25

- Login user: `ec2-user`

- Deployment tools: Terraform, Helm, ArgoCD

- Access environment: EC2-based IDE server (deployed with CloudFormation)

- Cluster deployment: Blueprints written in Terraform

- Terraform state store: S3 bucket

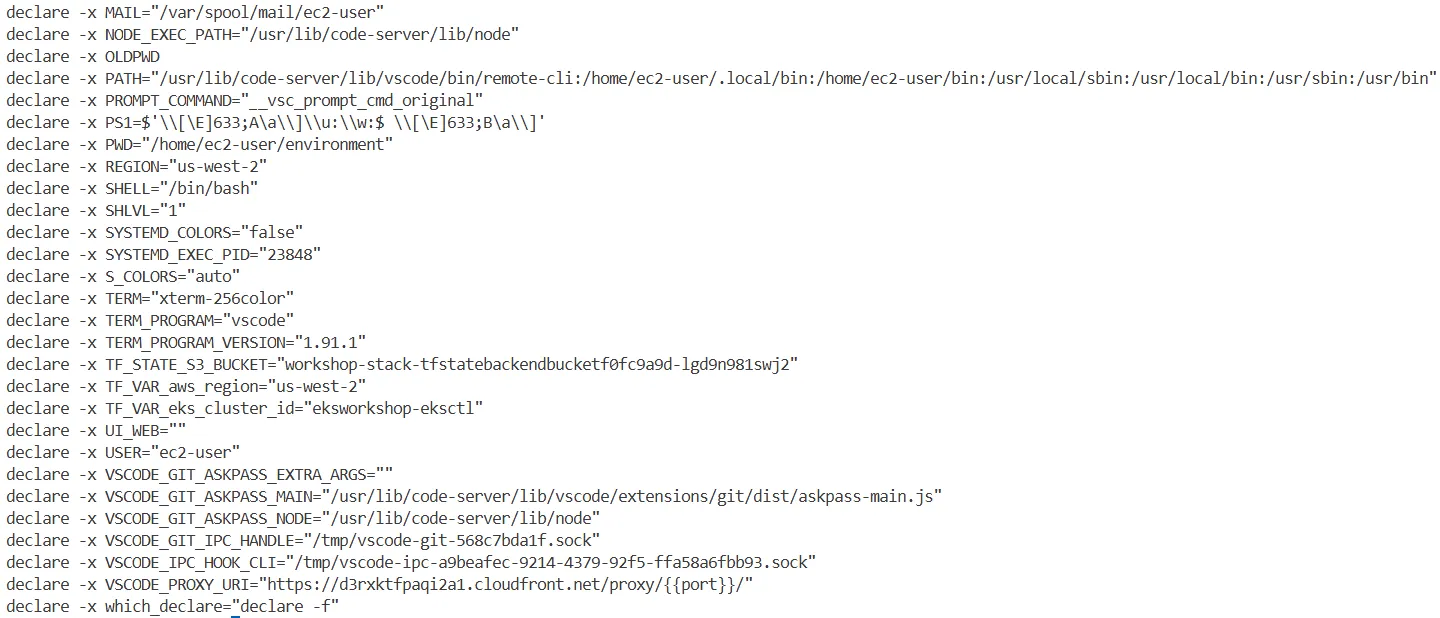

#

whoami

ec2-user

pwd

/home/ec2-user/environment

export

declare -x ASSETS_BUCKET="ws-event-069b6df5-757-us-west-2/d2117abb-06fa-4e89-8a6b-8e2b5d6fc697/assets/"

declare -x AWS_DEFAULT_REGION="us-west-2"

declare -x AWS_PAGER=""

declare -x AWS_REGION="us-west-2"

declare -x CLUSTER_NAME="eksworkshop-eksctl"

declare -x EC2_PRIVATE_IP="192.168.0.59"

declare -x EKS_CLUSTER_NAME="eksworkshop-eksctl"

declare -x GIT_ASKPASS="/usr/lib/code-server/lib/vscode/extensions/git/dist/askpass.sh"

declare -x HOME="/home/ec2-user"

declare -x HOSTNAME="ip-192-168-0-59.us-west-2.compute.internal"

declare -x IDE_DOMAIN="dboqritcd2u5f.cloudfront.net"

declare -x IDE_PASSWORD="pKsnXRPkxDvZH2GfZ9IPF3ULW74RbKjH"

declare -x IDE_URL="https://dboqritcd2u5f.cloudfront.net"

declare -x INSTANCE_IAM_ROLE_ARN="arn:aws:iam::271345173787:role/workshop-stack-IdeIdeRoleD654ADD4-Qhv1nLkOGItJ"

declare -x INSTANCE_IAM_ROLE_NAME="workshop-stack-IdeIdeRoleD654ADD4-Qhv1nLkOGItJ"

declare -x REGION="us-west-2"

declare -x TF_STATE_S3_BUCKET="workshop-stack-tfstatebackendbucketf0fc9a9d-isuyoohioh8p"

declare -x TF_VAR_aws_region="us-west-2"

declare -x TF_VAR_eks_cluster_id="eksworkshop-eksctl"

declare -x USER="ec2-user"

declare -x VSCODE_PROXY_URI="https://dboqritcd2u5f.cloudfront.net/proxy/{{port}}/"

...

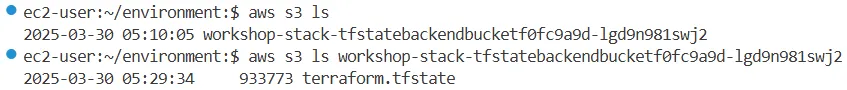

# Check the S3 bucket

aws s3 ls

2025-03-28 11:56:25 workshop-stack-tfstatebackendbucketf0fc9a9d-2phddn8usnlw

aws s3 ls s3://workshop-stack-tfstatebackendbucketf0fc9a9d-2phddn8usnlw

2025-03-28 12:18:23 940996 terraform.tfstate

# Check environment variables (including Terraform ones) and shortcut aliases

cat ~/.bashrc

...

export EKS_CLUSTER_NAME=eksworkshop-eksctl

export CLUSTER_NAME=eksworkshop-eksctl

export AWS_DEFAULT_REGION=us-west-2

export REGION=us-west-2

export AWS_REGION=us-west-2

export TF_VAR_eks_cluster_id=eksworkshop-eksctl

export TF_VAR_aws_region=us-west-2

export ASSETS_BUCKET=ws-event-069b6df5-757-us-west-2/d2117abb-06fa-4e89-8a6b-8e2b5d6fc697/assets/

export TF_STATE_S3_BUCKET=workshop-stack-tfstatebackendbucketf0fc9a9d-isuyoohioh8p

alias k=kubectl

alias kgn="kubectl get nodes -o wide"

alias kgs="kubectl get svc -o wide"

alias kgd="kubectl get deploy -o wide"

alias kgsa="kubectl get svc -A -o wide"

alias kgda="kubectl get deploy -A -o wide"

alias kgp="kubectl get pods -o wide"

alias kgpa="kubectl get pods -A -o wide"

aws eks update-kubeconfig --name eksworkshop-eksctl

export AWS_PAGER=""

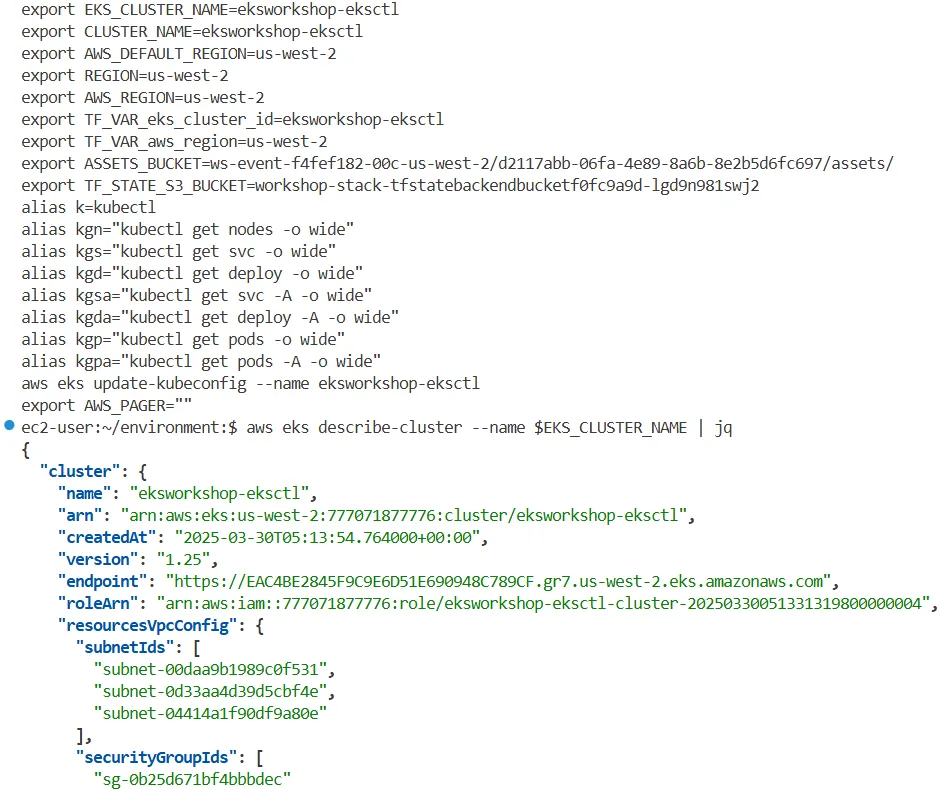

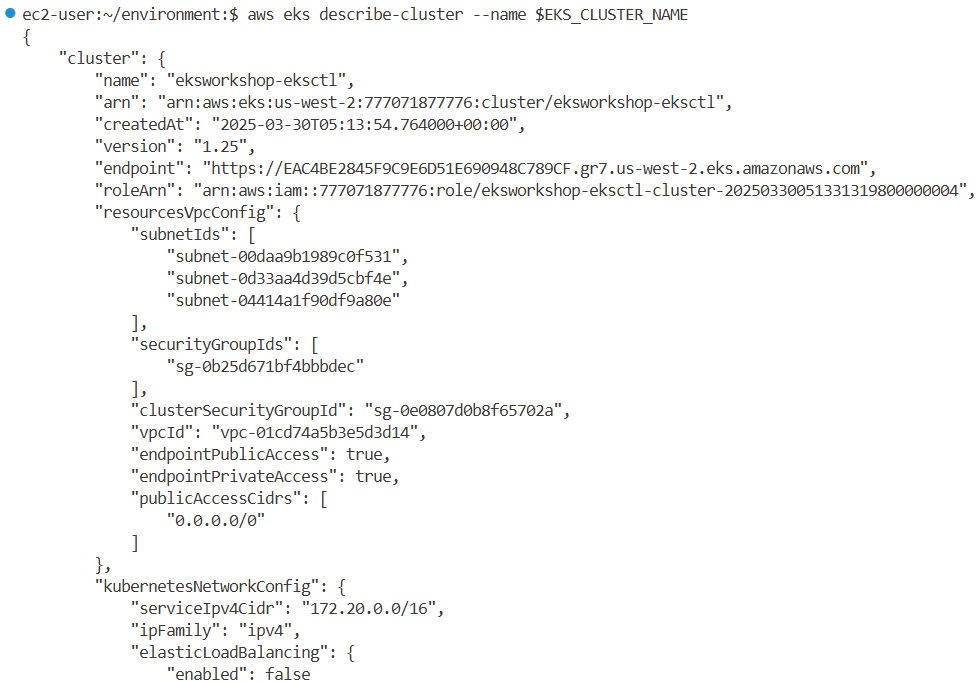

# EKS platform version: eks.44

aws eks describe-cluster --name $EKS_CLUSTER_NAME | jq

{

"cluster": {

"name": "eksworkshop-eksctl",

"arn": "arn:aws:eks:us-west-2:271345173787:cluster/eksworkshop-eksctl",

"createdAt": "2025-03-25T02:20:34.060000+00:00",

"version": "1.25",

"endpoint": "https://B852364D70EA6D62672481D278A15059.gr7.us-west-2.eks.amazonaws.com",

"roleArn": "arn:aws:iam::271345173787:role/eksworkshop-eksctl-cluster-20250325022010584300000004",

"resourcesVpcConfig": {

"subnetIds": [

"subnet-047ab61ad85c50486",

"subnet-0aeb12f673d69f7c5",

"subnet-01bbd11a892aec6ee"

],

"securityGroupIds": [

"sg-0ef166af090e168d5"

],

"clusterSecurityGroupId": "sg-09f8b41af6cadd619",

"vpcId": "vpc-052bf403eaa32d5de",

"endpointPublicAccess": true,

"endpointPrivateAccess": true,

"publicAccessCidrs": [

"0.0.0.0/0"

]

},

"kubernetesNetworkConfig": {

"serviceIpv4Cidr": "172.20.0.0/16",

"ipFamily": "ipv4",

"elasticLoadBalancing": {

"enabled": false

}

},

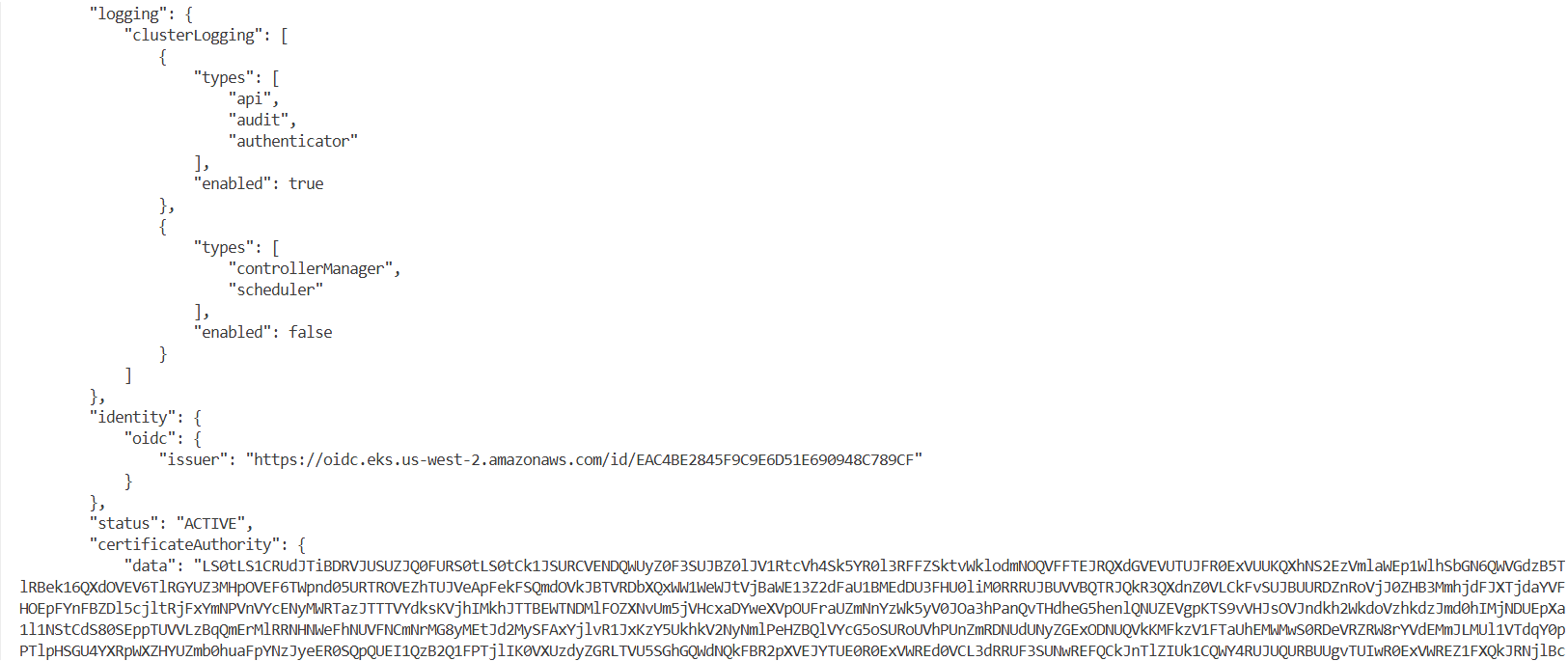

"logging": {

"clusterLogging": [

{

"types": [

"api",

"audit",

"authenticator"

],

"enabled": true

},

{

"types": [

"controllerManager",

"scheduler"

],

"enabled": false

}

]

},

"identity": {

"oidc": {

"issuer": "https://oidc.eks.us-west-2.amazonaws.com/id/B852364D70EA6D62672481D278A15059"

}

},

"status": "ACTIVE",

"certificateAuthority": {

"data": "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURCVENDQWUyZ0F3SUJBZ0lJUG52UE1YVThibmd3RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TlRBek1qVXdNakl3TkRSYUZ3MHpOVEF6TWpNd01qSTFORFJhTUJVeApFekFSQmdOVkJBTVRDbXQxWW1WeWJtVjBaWE13Z2dFaU1BMEdDU3FHU0liM0RRRUJBUVVBQTRJQkR3QXdnZ0VLCkFvSUJBUURDMGxZNk9kTkhqQlhyaStPVUd0cHQ1UVl3YUdCZUw5V2s1QkFwSUQrRVllQVQzS1k5VmFQSGhUK2cKdTIyM096SHlXUjNJNjlhbWd2cS94RUg2RUFqRUFUTkxKWHd3MXQ1dVNhaG1Ia3lUWEhtUkl2M3hwQVEzalY0KwpReFQrWWJBYjNpMjdjd3d5OGNRckRBTU1BZUszd29JWmR5OVkxWUx2ZkMxUnA4WEU3bitLcGpRTkE5UmlvYzFxCnU2RGh0bzJ5dHdOTE5WQ0RuRSswbTN2M3AzMlZFTW91UjlMbkFRaEUza1BvL2ZjeVB6VWVQelMwRTdRdTdXSmMKZXJHcGNBbmtFdithRjNiK2p5ZndiWHBFLzFCQWtaZWt2UmtsN0FreVF2ZU5wU1RITEZnZDNMamtnWE52djFEcwp5SUI1UHJKSGV6RTk2UEZFbkRGMGtmZGVaVzNGQWdNQkFBR2pXVEJYTUE0R0ExVWREd0VCL3dRRUF3SUNwREFQCkJnTlZIUk1CQWY4RUJUQURBUUgvTUIwR0ExVWREZ1FXQkJSVm9zWGs2Zyt4ek9ycEd6QTlOU0xnTmVSalhUQVYKQmdOVkhSRUVEakFNZ2dwcmRXSmxjbTVsZEdWek1BMEdDU3FHU0liM0RRRUJDd1VBQTRJQkFRQy9qQWtUMHJRcwpyaDFrZ2ozOG54b3c2WkdsVUxCKzQrY0NPU3p1K3FpNDFTWVpHamliemJXTklBTGIzakFCNEd4Y05sbWxlTVE2Cms5V0JqZk1yb3d6ZHhNQWZOc2R6VStUMGx1dmhSUkVRZjVDL0pNaVdtVWRuZGo1SHhWQmFLM0luc0pUV2hCRlIKSFdEaEcwVm5qeGJiVitlQnZYQUtaWmNuc21iMm5JNENxWGRBdWxKbFFiY201Y25UTDNBOXlYdzY5K1ZucCtmdgo3czFRd3pnWCt0NFc3Zm1DeEVCN1hoL2MyaXg3Q1hER0hqWE1OWVFsNVlNbEdWaWVWbTFKQkhWaUhXYlA4UE9JCmpEbUcvWDExZVczRFhoQ24yTFU1dlVOQTZKNUFQTmlBb0J5WU54VGhndDkvem5zZG0xL1dNZDQ5U0RGREVUcWkKeGhRWjlqQ2VNbXZFCi0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K"

},

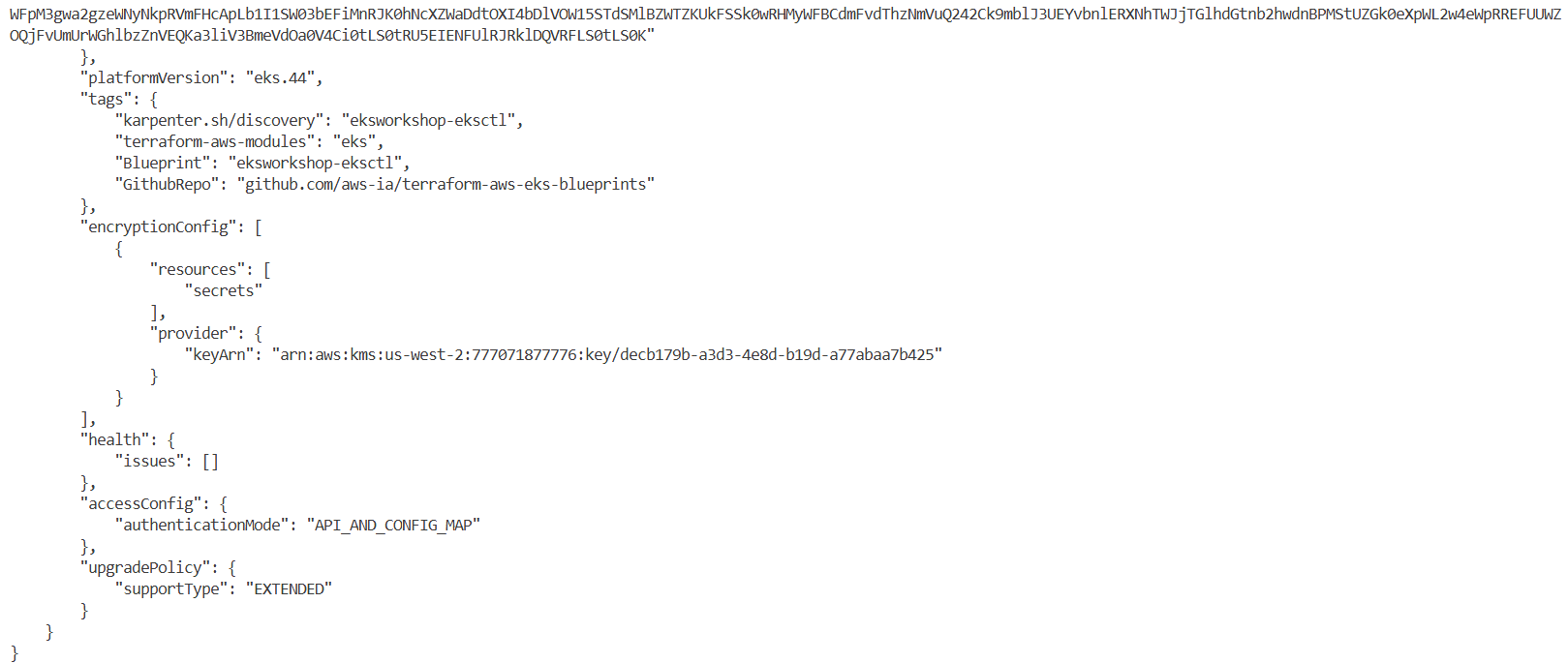

"platformVersion": "eks.44",

"tags": {

"karpenter.sh/discovery": "eksworkshop-eksctl",

"terraform-aws-modules": "eks",

"Blueprint": "eksworkshop-eksctl",

"GithubRepo": "github.com/aws-ia/terraform-aws-eks-blueprints"

},

"encryptionConfig": [

{

"resources": [

"secrets"

],

"provider": {

"keyArn": "~~"

}

}

],

"health": {

"issues": []

},

"accessConfig": {

"authenticationMode": "API_AND_CONFIG_MAP"

},

"upgradePolicy": {

"supportType": "EXTENDED"

}

}

}

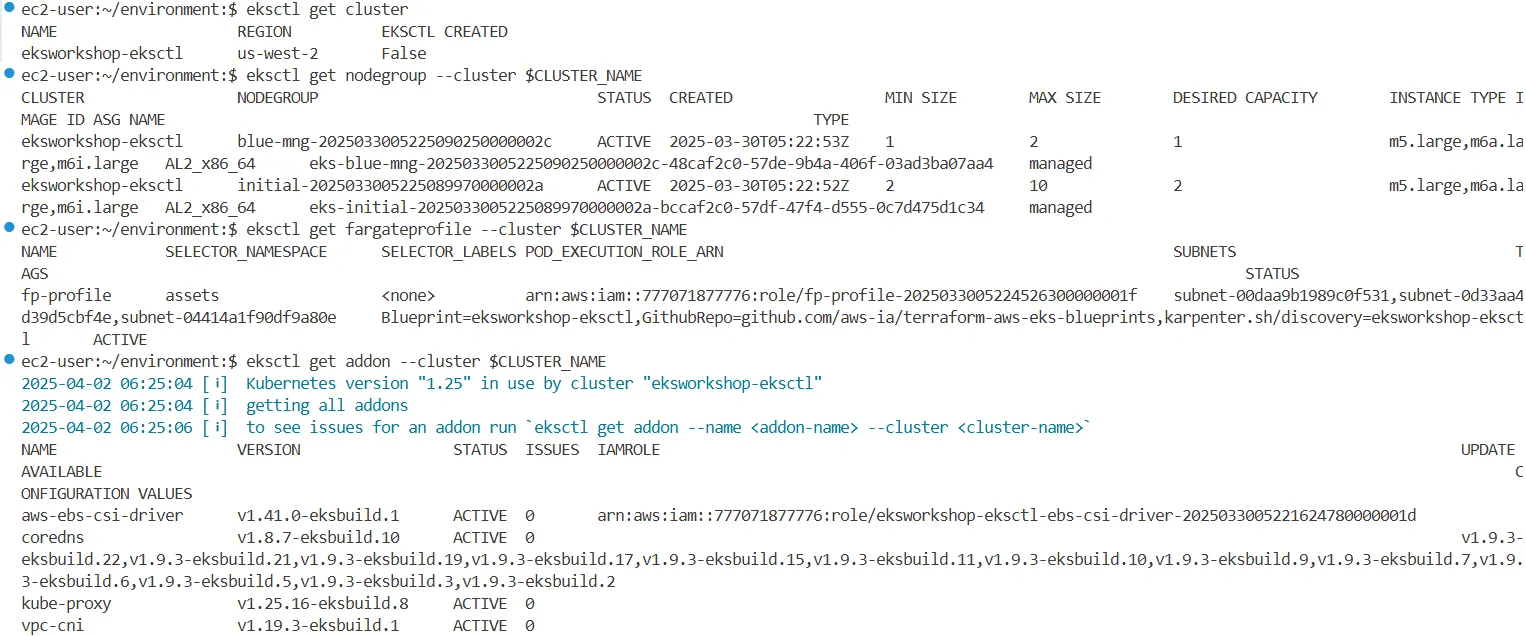

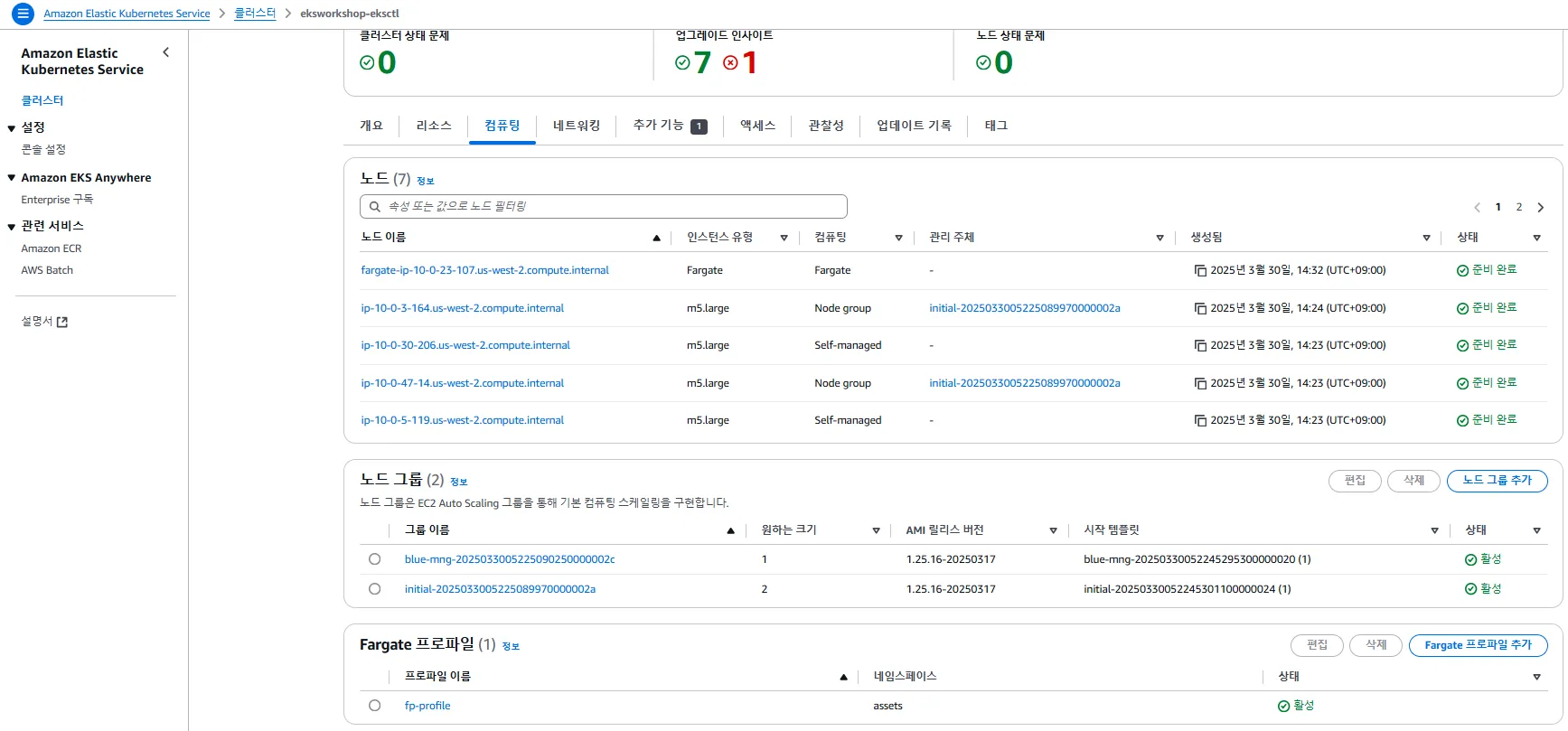

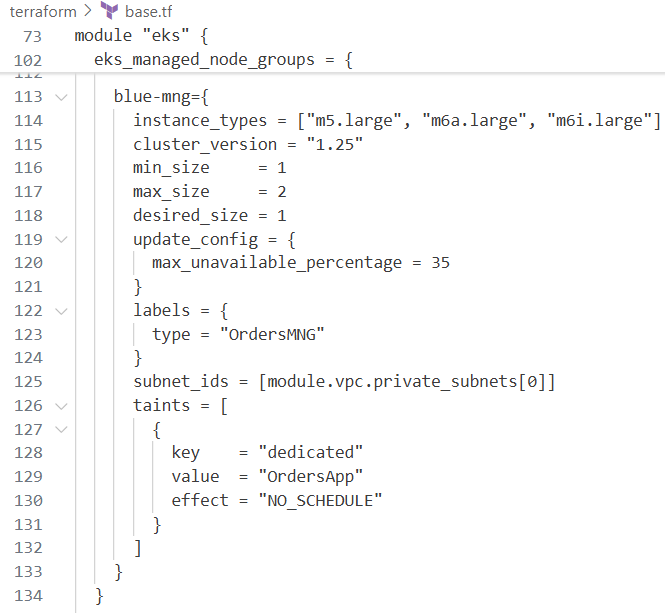

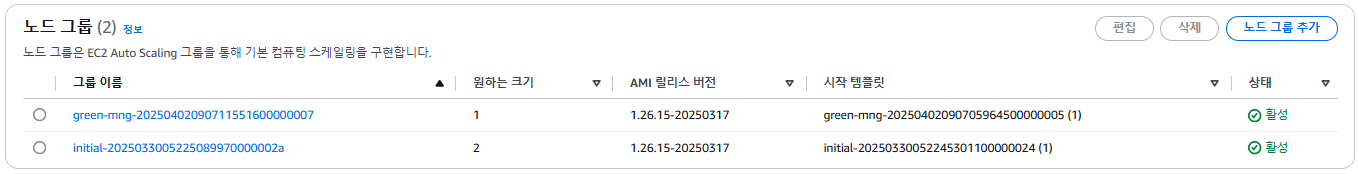

2.3 Cluster configuration

Node groups and types

- Managed Node Groups: `blue-mng` and `initial`

- Fargate profile: `fp-profile`

- Self-managed nodes are also present

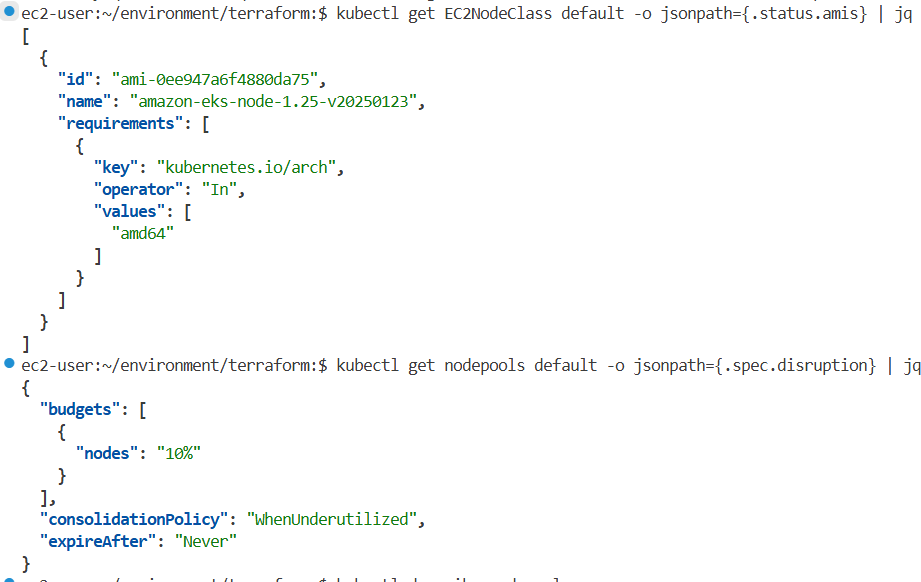

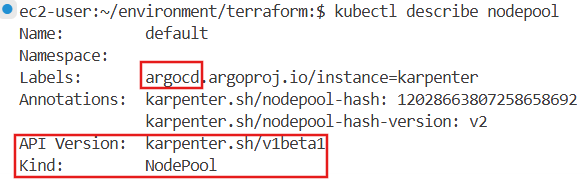

- Karpenter: nodes provisioned automatically through NodeClaims are present

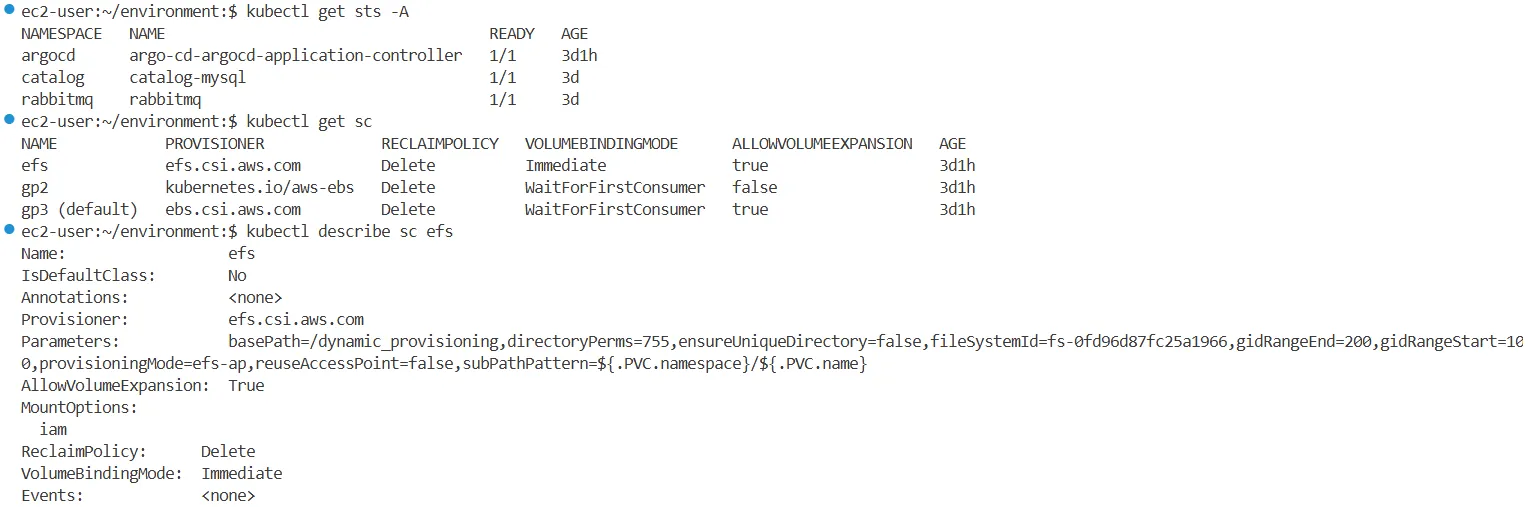

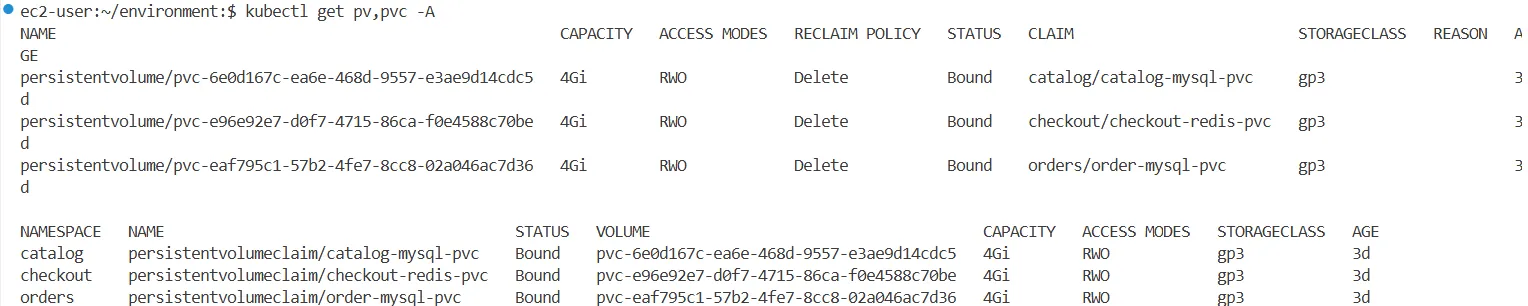

Storage and volumes

- StorageClass: gp2 (legacy), gp3 (default), efs

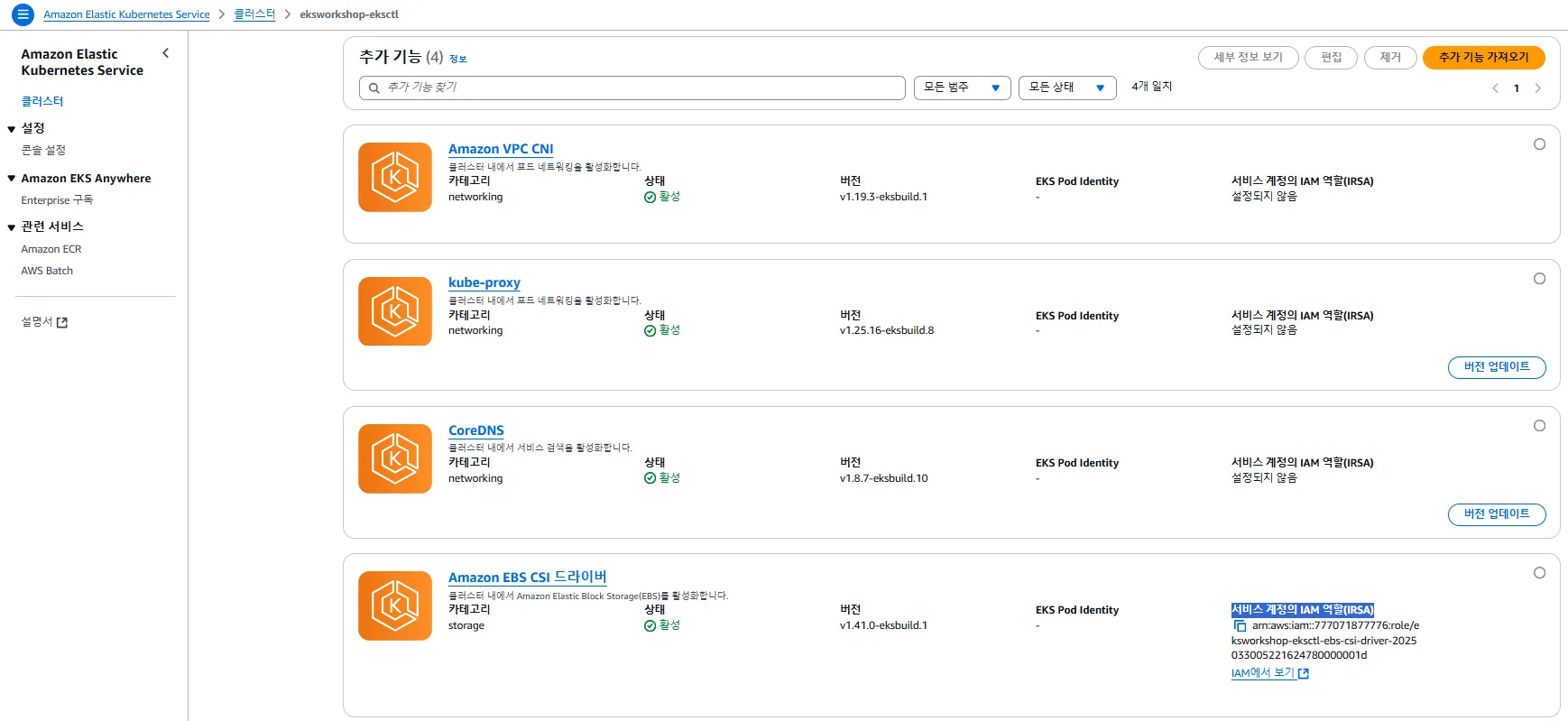

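Add-ons

- CoreDNS: v1.8.7-eksbuild.10

- kube-proxy: v1.25.16-eksbuild.8

- vpc-cni and aws-ebs-csi-driver are also active as EKS add-ons (the EFS CSI driver is installed through Helm, as listed below)

Components deployed with Helm

- Argo CD, Karpenter, AWS EFS CSI Driver, AWS Load Balancer Controller, Metrics Server

- Every Helm release is in the deployed state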

#

eksctl get cluster

NAME REGION EKSCTL CREATED

eksworkshop-eksctl us-west-2 False

eksctl get nodegroup --cluster $CLUSTER_NAME

CLUSTER NODEGROUP STATUS CREATED MIN SIZE MAX SIZE DESIRED CAPACITY INSTANCE TYPE IMAGE ID ASG NAME TYPE

eksworkshop-eksctl blue-mng-20250325023020754500000029 ACTIVE 2025-03-25T02:30:22Z 1 2 1 m5.large,m6a.large,m6i.large AL2_x86_64 eks-blue-mng-20250325023020754500000029-00cae591-7abe-6143-dd33-c720df2700b6 managed

eksworkshop-eksctl initial-2025032502302076080000002c ACTIVE 2025-03-25T02:30:22Z 2 10 2 m5.large,m6a.large,m6i.large AL2_x86_64 eks-initial-2025032502302076080000002c-30cae591-7ac2-1e9c-2415-19975314b08b managed

eksctl get fargateprofile --cluster $CLUSTER_NAME

NAME SELECTOR_NAMESPACE SELECTOR_LABELS POD_EXECUTION_ROLE_ARN SUBNETS TAGS STATUS

fp-profile assets <none> arn:aws:iam::271345173787:role/fp-profile-2025032502301523520000001f subnet-0aeb12f673d69f7c5,subnet-047ab61ad85c50486,subnet-01bbd11a892aec6ee Blueprint=eksworkshop-eksctl,GithubRepo=github.com/aws-ia/terraform-aws-eks-blueprints,karpenter.sh/discovery=eksworkshop-eksctl ACTIVE

eksctl get addon --cluster $CLUSTER_NAME

NAME VERSION STATUS ISSUES IAMROLE UPDATE AVAILABLE CONFIGURATION VALUES

aws-ebs-csi-driver v1.41.0-eksbuild.1 ACTIVE 0 arn:aws:iam::271345173787:role/eksworkshop-eksctl-ebs-csi-driver-2025032502294618580000001d

coredns v1.8.7-eksbuild.10 ACTIVE 0 v1.9.3-eksbuild.22,v1.9.3-eksbuild.21,v1.9.3-eksbuild.19,v1.9.3-eksbuild.17,v1.9.3-eksbuild.15,v1.9.3-eksbuild.11,v1.9.3-eksbuild.10,v1.9.3-eksbuild.9,v1.9.3-eksbuild.7,v1.9.3-eksbuild.6,v1.9.3-eksbuild.5,v1.9.3-eksbuild.3,v1.9.3-eksbuild.2

kube-proxy v1.25.16-eksbuild.8 ACTIVE 0

vpc-cni v1.19.3-eksbuild.1 ACTIVE 0

#

kubectl get node --label-columns=eks.amazonaws.com/capacityType,node.kubernetes.io/lifecycle,karpenter.sh/capacity-type,eks.amazonaws.com/compute-type

NAME STATUS ROLES AGE VERSION CAPACITYTYPE LIFECYCLE CAPACITY-TYPE COMPUTE-TYPE

fargate-ip-10-0-41-147.us-west-2.compute.internal Ready <none> 135m v1.25.16-eks-2d5f260 fargate

ip-10-0-12-228.us-west-2.compute.internal Ready <none> 145m v1.25.16-eks-59bf375 ON_DEMAND

ip-10-0-26-119.us-west-2.compute.internal Ready <none> 145m v1.25.16-eks-59bf375 self-managed

ip-10-0-3-199.us-west-2.compute.internal Ready <none> 145m v1.25.16-eks-59bf375 ON_DEMAND

ip-10-0-39-95.us-west-2.compute.internal Ready <none> 135m v1.25.16-eks-59bf375 spot

ip-10-0-44-106.us-west-2.compute.internal Ready <none> 145m v1.25.16-eks-59bf375 ON_DEMAND

ip-10-0-6-184.us-west-2.compute.internal Ready <none> 145m v1.25.16-eks-59bf375 self-managed

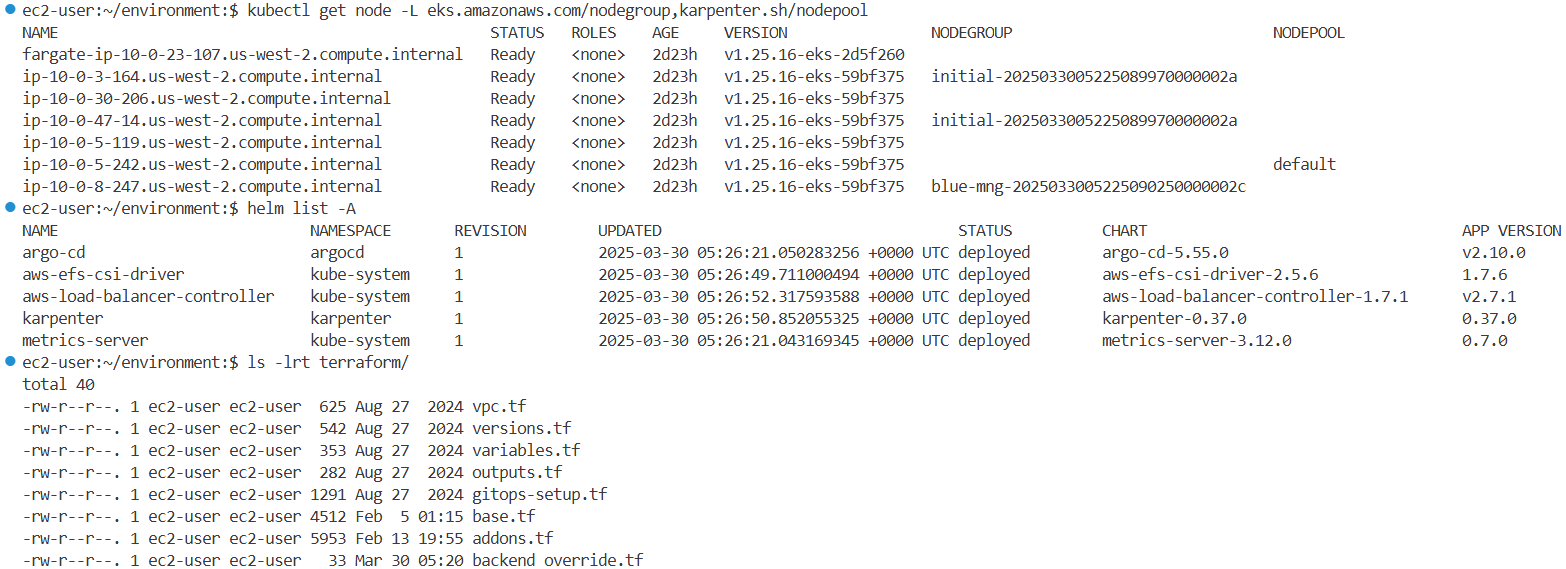

kubectl get node -L eks.amazonaws.com/nodegroup,karpenter.sh/nodepool

NAME STATUS ROLES AGE VERSION NODEGROUP NODEPOOL

fargate-ip-10-0-41-147.us-west-2.compute.internal Ready <none> 154m v1.25.16-eks-2d5f260

ip-10-0-12-228.us-west-2.compute.internal Ready <none> 163m v1.25.16-eks-59bf375 initial-2025032502302076080000002c

ip-10-0-26-119.us-west-2.compute.internal Ready <none> 164m v1.25.16-eks-59bf375

ip-10-0-3-199.us-west-2.compute.internal Ready <none> 163m v1.25.16-eks-59bf375 blue-mng-20250325023020754500000029

ip-10-0-39-95.us-west-2.compute.internal Ready <none> 154m v1.25.16-eks-59bf375 default

ip-10-0-44-106.us-west-2.compute.internal Ready <none> 163m v1.25.16-eks-59bf375 initial-2025032502302076080000002c

ip-10-0-6-184.us-west-2.compute.internal Ready <none> 164m v1.25.16-eks-59bf375

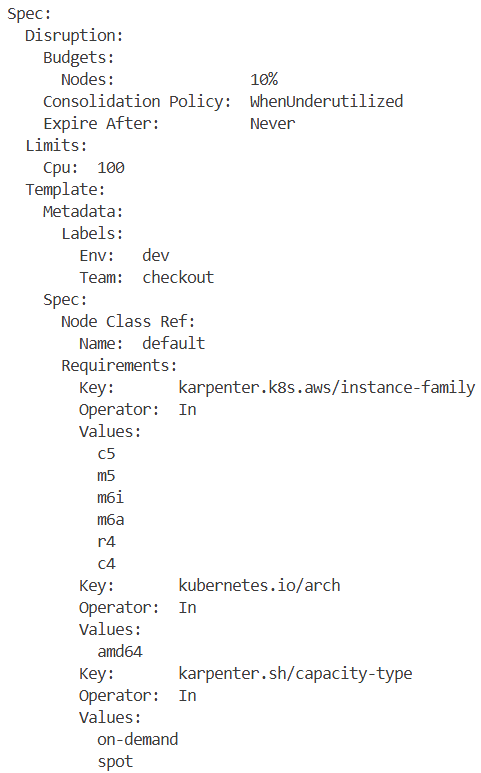

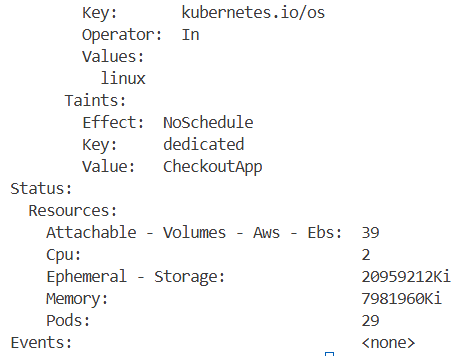

kubectl get nodepools

NAME NODECLASS

default default

kubectl get nodeclaims -o yaml

kubectl get nodeclaims

NAME TYPE ZONE NODE READY AGE

default-rpl9w c5.xlarge us-west-2c ip-10-0-39-95.us-west-2.compute.internal True 155m

kubectl get node --label-columns=node.kubernetes.io/instance-type,kubernetes.io/arch,kubernetes.io/os,topology.kubernetes.io/zone

NAME STATUS ROLES AGE VERSION INSTANCE-TYPE ARCH OS ZONE

fargate-ip-10-0-41-147.us-west-2.compute.internal Ready <none> 136m v1.25.16-eks-2d5f260 amd64 linux us-west-2c

ip-10-0-12-228.us-west-2.compute.internal Ready <none> 146m v1.25.16-eks-59bf375 m5.large amd64 linux us-west-2a

ip-10-0-26-119.us-west-2.compute.internal Ready <none> 147m v1.25.16-eks-59bf375 m5.large amd64 linux us-west-2b

ip-10-0-3-199.us-west-2.compute.internal Ready <none> 146m v1.25.16-eks-59bf375 m5.large amd64 linux us-west-2a

ip-10-0-39-95.us-west-2.compute.internal Ready <none> 137m v1.25.16-eks-59bf375 c5.xlarge amd64 linux us-west-2c

ip-10-0-44-106.us-west-2.compute.internal Ready <none> 146m v1.25.16-eks-59bf375 m5.large amd64 linux us-west-2c

ip-10-0-6-184.us-west-2.compute.internal Ready <none> 147m v1.25.16-eks-59bf375 m5.large amd64 linux us-west-2a

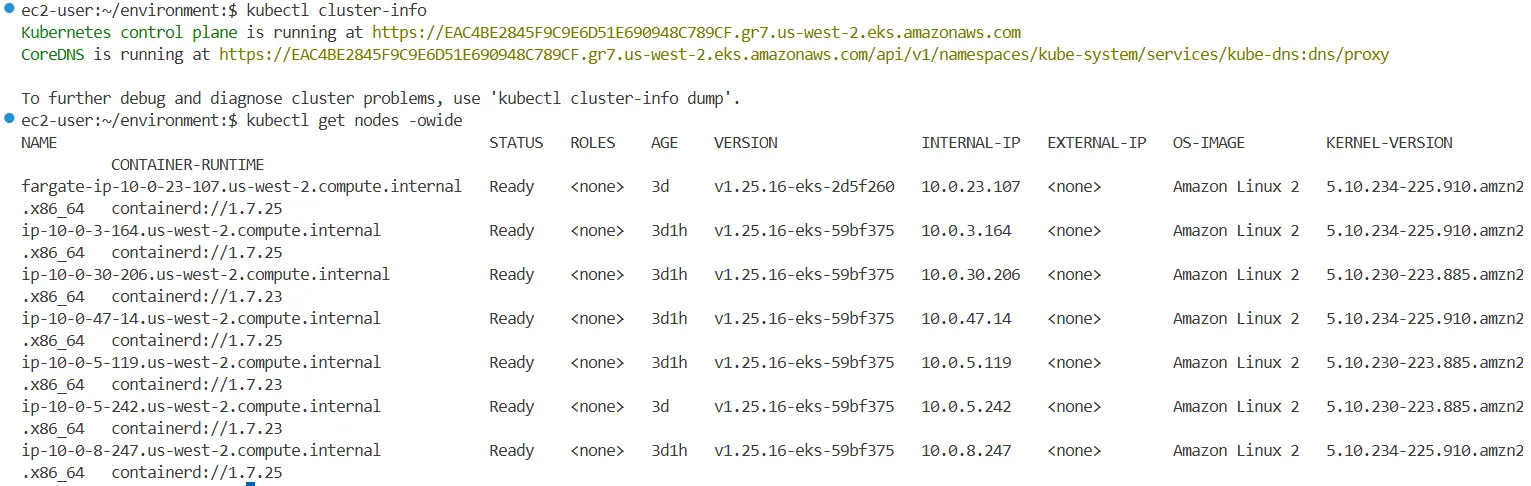

#

kubectl cluster-info

Kubernetes control plane is running at https://B852364D70EA6D62672481D278A15059.gr7.us-west-2.eks.amazonaws.com

CoreDNS is running at https://B852364D70EA6D62672481D278A15059.gr7.us-west-2.eks.amazonaws.com/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

#

kubectl get nodes -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

fargate-ip-10-0-41-147.us-west-2.compute.internal Ready <none> 120m v1.25.16-eks-2d5f260 10.0.41.147 <none> Amazon Linux 2 5.10.234-225.910.amzn2.x86_64 containerd://1.7.25

ip-10-0-12-228.us-west-2.compute.internal Ready <none> 130m v1.25.16-eks-59bf375 10.0.12.228 <none> Amazon Linux 2 5.10.234-225.910.amzn2.x86_64 containerd://1.7.25

ip-10-0-26-119.us-west-2.compute.internal Ready <none> 130m v1.25.16-eks-59bf375 10.0.26.119 <none> Amazon Linux 2 5.10.230-223.885.amzn2.x86_64 containerd://1.7.23

ip-10-0-3-199.us-west-2.compute.internal Ready <none> 130m v1.25.16-eks-59bf375 10.0.3.199 <none> Amazon Linux 2 5.10.234-225.910.amzn2.x86_64 containerd://1.7.25

ip-10-0-39-95.us-west-2.compute.internal Ready <none> 120m v1.25.16-eks-59bf375 10.0.39.95 <none> Amazon Linux 2 5.10.230-223.885.amzn2.x86_64 containerd://1.7.23

ip-10-0-44-106.us-west-2.compute.internal Ready <none> 130m v1.25.16-eks-59bf375 10.0.44.106 <none> Amazon Linux 2 5.10.234-225.910.amzn2.x86_64 containerd://1.7.25

ip-10-0-6-184.us-west-2.compute.internal Ready <none> 130m v1.25.16-eks-59bf375 10.0.6.184 <none> Amazon Linux 2 5.10.230-223.885.amzn2.x86_64 containerd://1.7.23

#

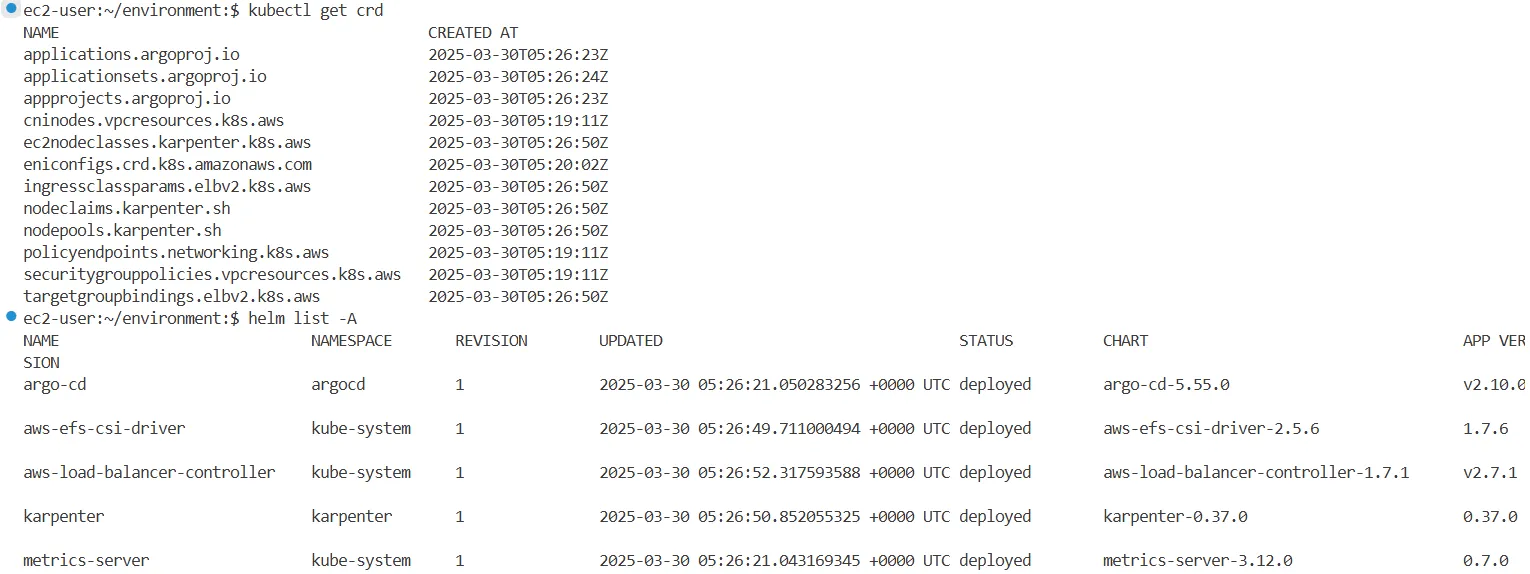

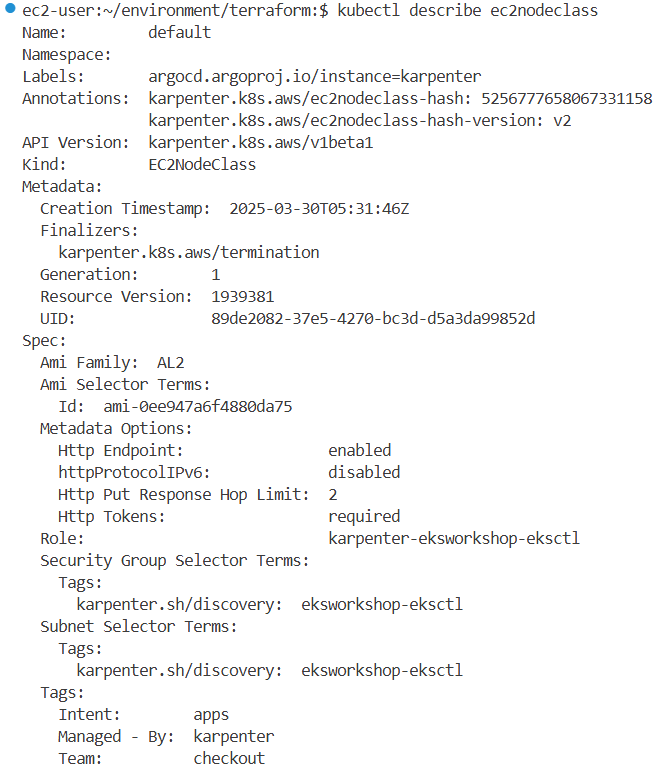

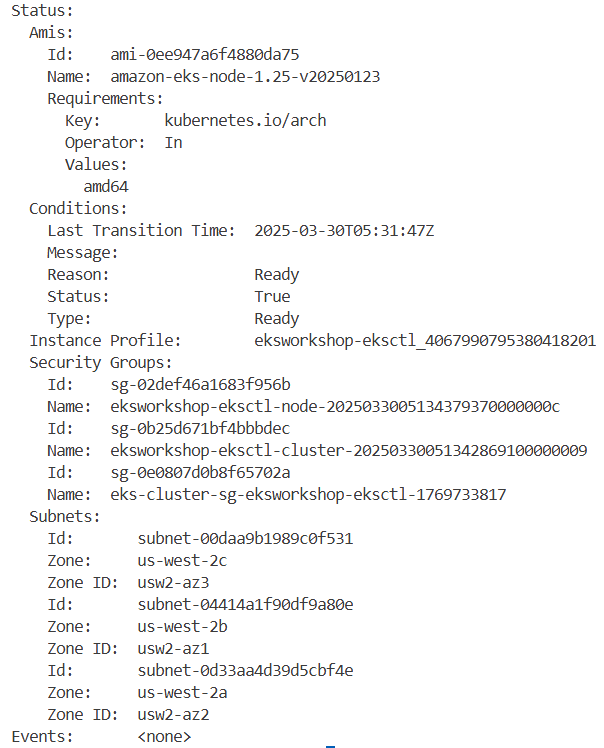

kubectl get crd

NAME CREATED AT

applications.argoproj.io 2025-03-25T02:34:49Z

applicationsets.argoproj.io 2025-03-25T02:34:49Z

appprojects.argoproj.io 2025-03-25T02:34:49Z

cninodes.vpcresources.k8s.aws 2025-03-25T02:26:13Z

ec2nodeclasses.karpenter.k8s.aws 2025-03-25T02:35:15Z

eniconfigs.crd.k8s.amazonaws.com 2025-03-25T02:27:35Z

ingressclassparams.elbv2.k8s.aws 2025-03-25T02:35:15Z

nodeclaims.karpenter.sh 2025-03-25T02:35:15Z

nodepools.karpenter.sh 2025-03-25T02:35:16Z

policyendpoints.networking.k8s.aws 2025-03-25T02:26:13Z

securitygrouppolicies.vpcresources.k8s.aws 2025-03-25T02:26:13Z

targetgroupbindings.elbv2.k8s.aws 2025-03-25T02:35:15Z

helm list -A

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

argo-cd argocd 1 2025-03-25 02:34:46.490919128 +0000 UTC deployed argo-cd-5.55.0 v2.10.0

aws-efs-csi-driver kube-system 1 2025-03-25 02:35:16.380271414 +0000 UTC deployed aws-efs-csi-driver-2.5.6 1.7.6

aws-load-balancer-controller kube-system 1 2025-03-25 02:35:17.147637895 +0000 UTC deployed aws-load-balancer-controller-1.7.1 v2.7.1

karpenter karpenter 1 2025-03-25 02:35:16.452581818 +0000 UTC deployed karpenter-0.37.0 0.37.0

metrics-server kube-system 1 2025-03-25 02:34:46.480366515 +0000 UTC deployed metrics-server-3.12.0 0.7.0

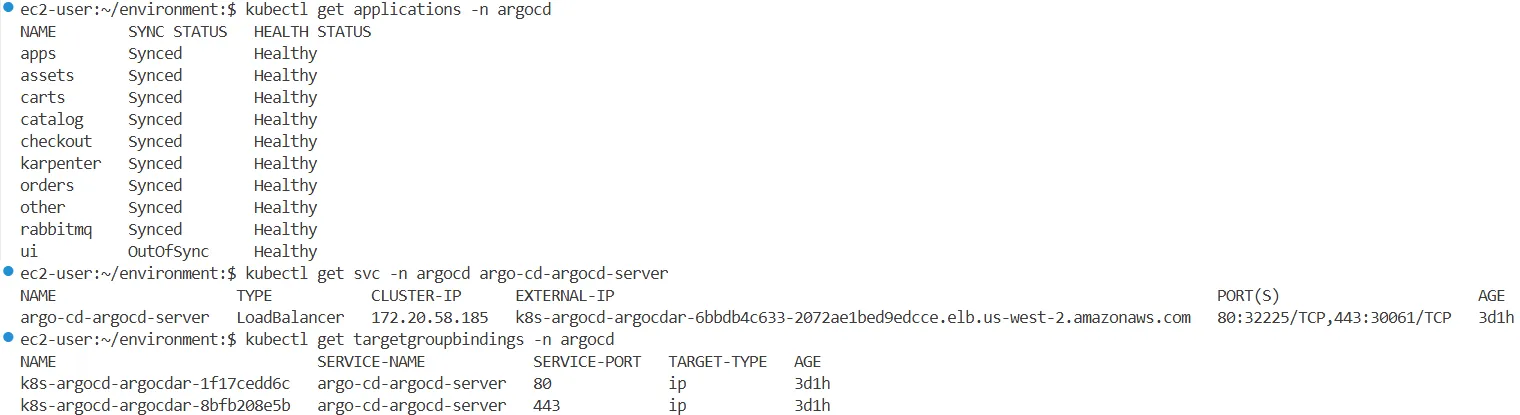

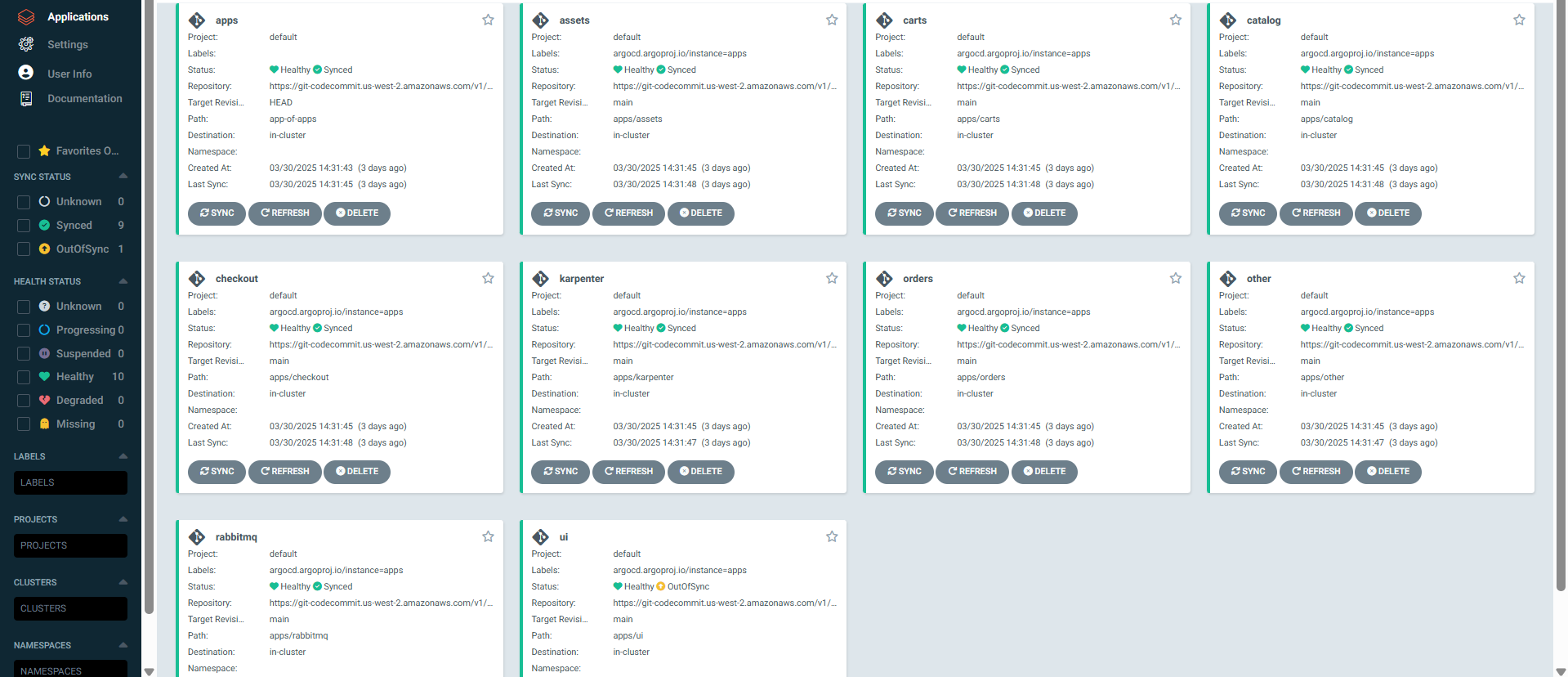

kubectl get applications -n argocd

NAME SYNC STATUS HEALTH STATUS

apps Synced Healthy

assets Synced Healthy

carts Synced Healthy

catalog Synced Healthy

checkout Synced Healthy

karpenter Synced Healthy

orders Synced Healthy

other Synced Healthy

rabbitmq Synced Healthy

ui OutOfSync Healthy

#

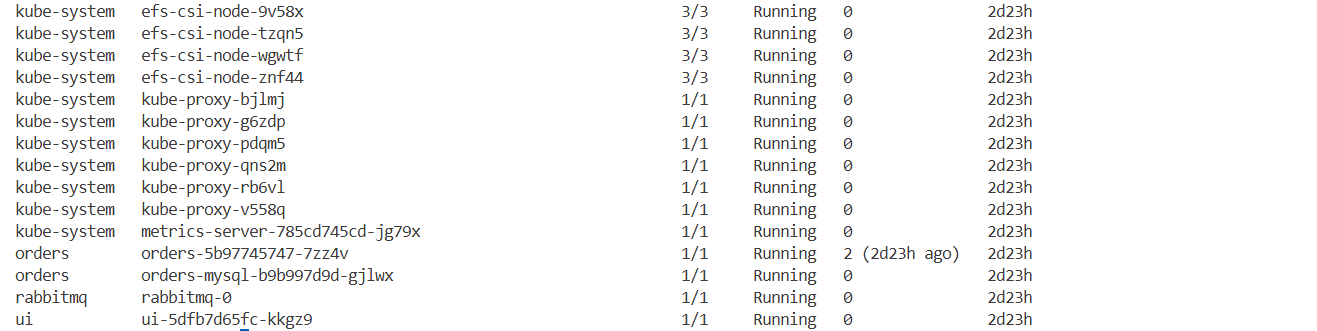

kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

argocd argo-cd-argocd-application-controller-0 1/1 Running 0 146m

argocd argo-cd-argocd-applicationset-controller-74d9c9c5c7-6bsk8 1/1 Running 0 146m

argocd argo-cd-argocd-dex-server-6dbbd57479-h8r6b 1/1 Running 0 146m

argocd argo-cd-argocd-notifications-controller-fb4b954d5-lvp2z 1/1 Running 0 146m

argocd argo-cd-argocd-redis-76b4c599dc-5gj95 1/1 Running 0 146m

argocd argo-cd-argocd-repo-server-6b777b579d-thwrk 1/1 Running 0 146m

argocd argo-cd-argocd-server-86bdbd7b89-8t6f5 1/1 Running 0 146m

assets assets-7ccc84cb4d-s8wwb 1/1 Running 0 141m

carts carts-7ddbc698d8-zts9k 1/1 Running 0 141m

carts carts-dynamodb-6594f86bb9-8pzk5 1/1 Running 0 141m

catalog catalog-857f89d57d-9nfnw 1/1 Running 3 (140m ago) 141m

catalog catalog-mysql-0 1/1 Running 0 141m

checkout checkout-558f7777c-fs8dk 1/1 Running 0 141m

checkout checkout-redis-f54bf7cb5-whk4r 1/1 Running 0 141m

karpenter karpenter-547d656db9-qzfmm 1/1 Running 0 146m

karpenter karpenter-547d656db9-tsg8n 1/1 Running 0 146m

kube-system aws-load-balancer-controller-774bb546d8-pphh5 1/1 Running 0 145m

kube-system aws-load-balancer-controller-774bb546d8-r7k45 1/1 Running 0 145m

kube-system aws-node-6xgbd 2/2 Running 0 140m

kube-system aws-node-j5qzk 2/2 Running 0 145m

kube-system aws-node-mvg22 2/2 Running 0 145m

kube-system aws-node-pnx48 2/2 Running 0 145m

kube-system aws-node-sh8lt 2/2 Running 0 145m

kube-system aws-node-z7g8q 2/2 Running 0 145m

kube-system coredns-98f76fbc4-4wz5j 1/1 Running 0 145m

kube-system coredns-98f76fbc4-k4wnz 1/1 Running 0 145m

kube-system ebs-csi-controller-6b575b5f4d-dqdqp 6/6 Running 0 145m

kube-system ebs-csi-controller-6b575b5f4d-wwc7q 6/6 Running 0 145m

kube-system ebs-csi-node-72rl8 3/3 Running 0 145m

kube-system ebs-csi-node-g6cn4 3/3 Running 0 145m

kube-system ebs-csi-node-jvrwj 3/3 Running 0 145m

kube-system ebs-csi-node-jwndw 3/3 Running 0 145m

kube-system ebs-csi-node-qqmsh 3/3 Running 0 145m

kube-system ebs-csi-node-qwpf5 3/3 Running 0 140m

kube-system efs-csi-controller-5d74ddd947-97ncr 3/3 Running 0 146m

kube-system efs-csi-controller-5d74ddd947-mzkqd 3/3 Running 0 146m

kube-system efs-csi-node-69hzl 3/3 Running 0 140m

kube-system efs-csi-node-6k8g2 3/3 Running 0 146m

kube-system efs-csi-node-8hvhz 3/3 Running 0 146m

kube-system efs-csi-node-9fnxz 3/3 Running 0 146m

kube-system efs-csi-node-gb2xm 3/3 Running 0 146m

kube-system efs-csi-node-rcggq 3/3 Running 0 146m

kube-system kube-proxy-6b9vq 1/1 Running 0 145m

kube-system kube-proxy-6lqcc 1/1 Running 0 145m

kube-system kube-proxy-9x46z 1/1 Running 0 145m

kube-system kube-proxy-k26rj 1/1 Running 0 145m

kube-system kube-proxy-xk9tc 1/1 Running 0 145m

kube-system kube-proxy-xvh4j 1/1 Running 0 140m

kube-system metrics-server-785cd745cd-c57q7 1/1 Running 0 146m

orders orders-5b97745747-mxg72 1/1 Running 2 (140m ago) 141m

orders orders-mysql-b9b997d9d-2p8pn 1/1 Running 0 141m

rabbitmq rabbitmq-0 1/1 Running 0 141m

ui ui-5dfb7d65fc-9vgt9 1/1 Running 0 141m

#

kubectl get pdb -A

NAMESPACE NAME MIN AVAILABLE MAX UNAVAILABLE ALLOWED DISRUPTIONS AGE

karpenter karpenter N/A 1 1 145m

kube-system aws-load-balancer-controller N/A 1 1 145m

kube-system coredns N/A 1 1 153m

kube-system ebs-csi-controller N/A 1 1 145m

#

kubectl get svc -n argocd argo-cd-argocd-server

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

argo-cd-argocd-server LoadBalancer 172.20.10.210 k8s-argocd-argocdar-eb7166e616-ed2069d8c15177c9.elb.us-west-2.amazonaws.com 80:32065/TCP,443:31156/TCP 150m

kubectl get targetgroupbindings -n argocd

NAME SERVICE-NAME SERVICE-PORT TARGET-TYPE AGE

k8s-argocd-argocdar-9c77afbc38 argo-cd-argocd-server 443 ip 153m

k8s-argocd-argocdar-efa4621491 argo-cd-argocd-server 80 ip 153m

- PDBs are configured for `karpenter`, `aws-load-balancer-controller`, `coredns`, and `ebs-csi-controller`

- The Argo CD server is a `LoadBalancer`-type Service and is reachable from outside the cluster

- Two related TargetGroupBindings exist (443, 80)
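PDBs matter during an upgrade because a budget that currently allows zero disruptions will block node drains. A minimal sketch for spotting such budgets from the `kubectl get pdb` output shown above follows; the function name `blocked_pdbs` is hypothetical, and it assumes the `-A --no-headers` column layout (namespace, name, min available, max unavailable, allowed disruptions, age).

```shell
#!/usr/bin/env bash
# blocked_pdbs: read `kubectl get pdb -A --no-headers` output on stdin and print
# namespace/name for every PDB whose ALLOWED DISRUPTIONS column (field 5) is 0.
blocked_pdbs() {
  awk '$5 == 0 { print $1 "/" $2 }'
}

# Usage against a live cluster:
#   kubectl get pdb -A --no-headers | blocked_pdbs
```

In the output captured above, all four PDBs allow one disruption, so this check would print nothing for the workshop cluster in its initial state.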

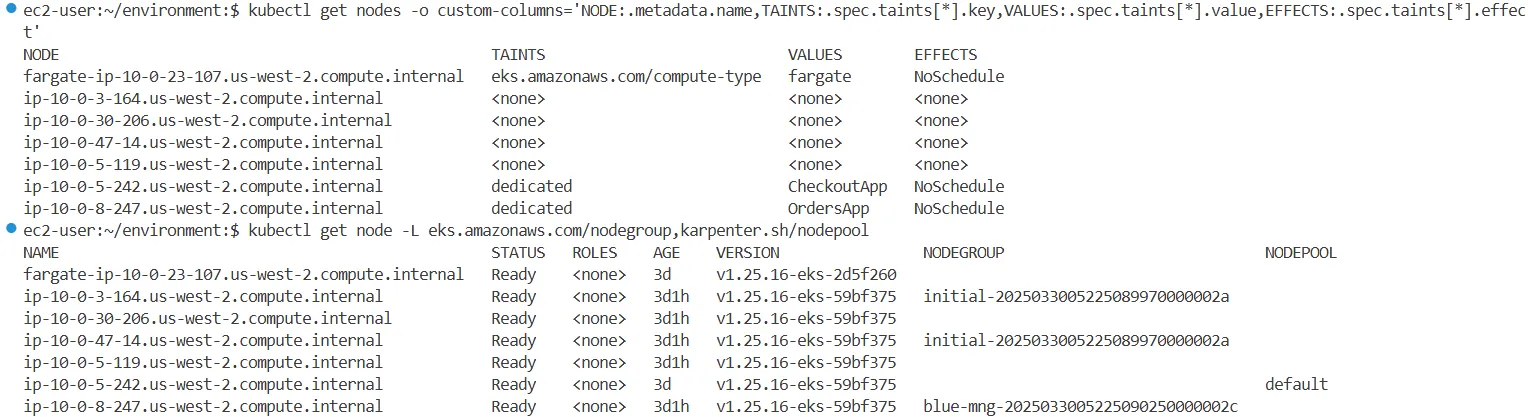

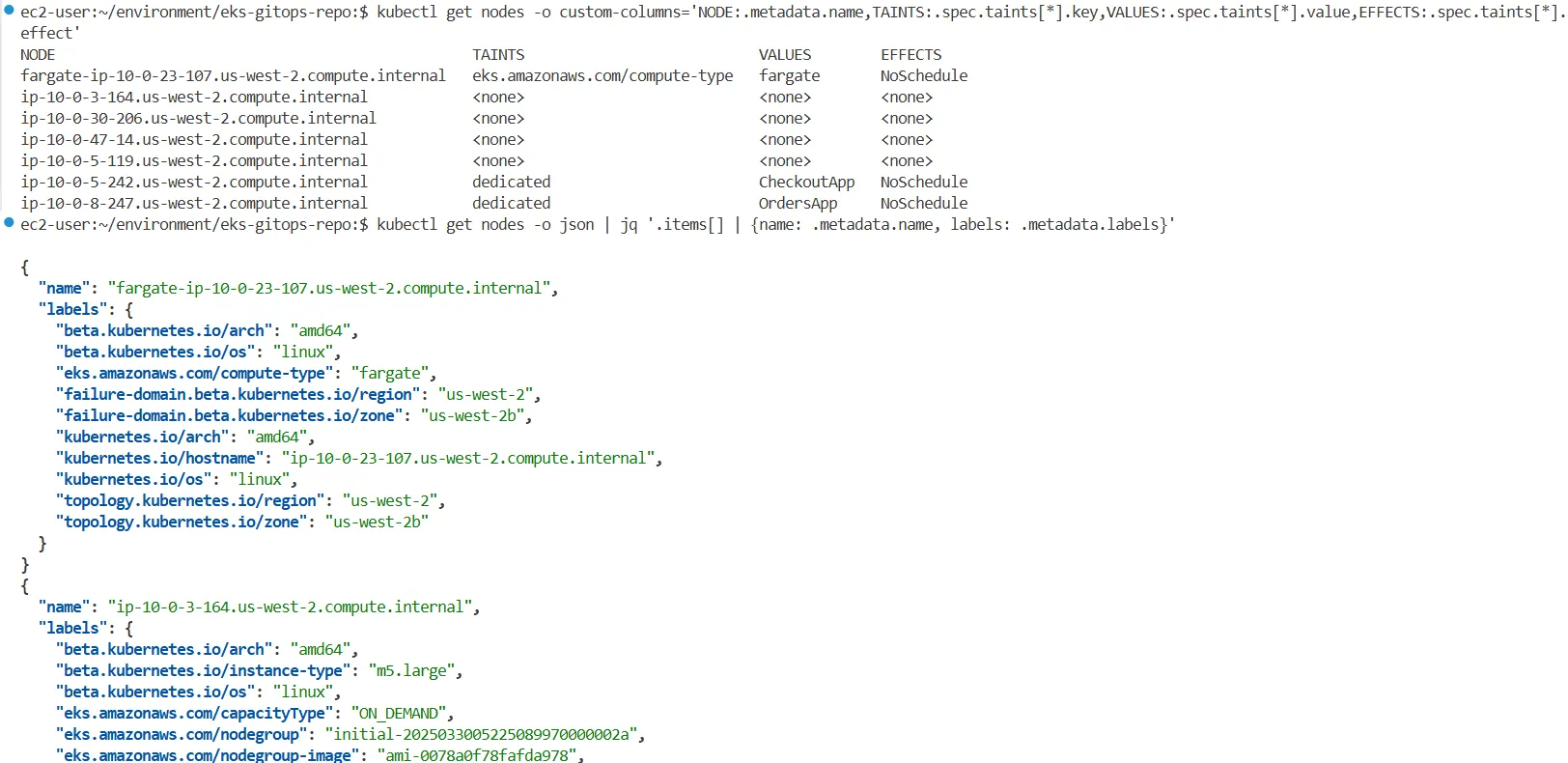

# Check the taints on each node

kubectl get nodes -o custom-columns='NODE:.metadata.name,TAINTS:.spec.taints[*].key,VALUES:.spec.taints[*].value,EFFECTS:.spec.taints[*].effect'

NODE TAINTS VALUES EFFECTS

fargate-ip-10-0-2-38.us-west-2.compute.internal eks.amazonaws.com/compute-type fargate NoSchedule

ip-10-0-0-72.us-west-2.compute.internal dedicated CheckoutApp NoSchedule

ip-10-0-13-217.us-west-2.compute.internal <none> <none> <none>

ip-10-0-28-252.us-west-2.compute.internal <none> <none> <none>

ip-10-0-3-168.us-west-2.compute.internal dedicated OrdersApp NoSchedule

ip-10-0-40-107.us-west-2.compute.internal <none> <none> <none>

ip-10-0-42-84.us-west-2.compute.internal <none> <none> <none>

#

kubectl get node -L eks.amazonaws.com/nodegroup,karpenter.sh/nodepool

NAME STATUS ROLES AGE VERSION NODEGROUP NODEPOOL

fargate-ip-10-0-2-38.us-west-2.compute.internal Ready <none> 16h v1.25.16-eks-2d5f260

ip-10-0-0-72.us-west-2.compute.internal Ready <none> 16h v1.25.16-eks-59bf375 default

ip-10-0-13-217.us-west-2.compute.internal Ready <none> 16h v1.25.16-eks-59bf375 initial-2025032812112870770000002c

ip-10-0-28-252.us-west-2.compute.internal Ready <none> 16h v1.25.16-eks-59bf375

ip-10-0-3-168.us-west-2.compute.internal Ready <none> 16h v1.25.16-eks-59bf375 blue-mng-20250328121128698500000029

ip-10-0-40-107.us-west-2.compute.internal Ready <none> 16h v1.25.16-eks-59bf375

ip-10-0-42-84.us-west-2.compute.internal Ready <none> 16h v1.25.16-eks-59bf375 initial-2025032812112870770000002c

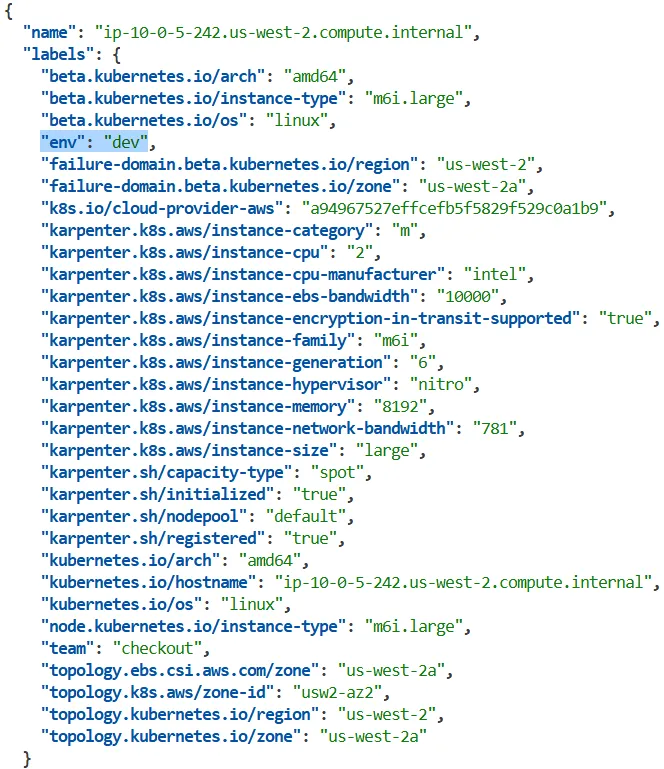

# Check the labels on each node

kubectl get nodes -o json | jq '.items[] | {name: .metadata.name, labels: .metadata.labels}'

...

{

"name": "ip-10-0-0-72.us-west-2.compute.internal",

"labels": {

"beta.kubernetes.io/arch": "amd64",

"beta.kubernetes.io/instance-type": "m5.large",

"beta.kubernetes.io/os": "linux",

"env": "dev",

...

#

kubectl get sts -A

NAMESPACE NAME READY AGE

argocd argo-cd-argocd-application-controller 1/1 150m

catalog catalog-mysql 1/1 145m

rabbitmq rabbitmq 1/1 145m

#

kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

efs efs.csi.aws.com Delete Immediate true 152m

gp2 kubernetes.io/aws-ebs Delete WaitForFirstConsumer false 163m

gp3 (default) ebs.csi.aws.com Delete WaitForFirstConsumer true 152m

kubectl describe sc efs

...

Provisioner: efs.csi.aws.com

Parameters: basePath=/dynamic_provisioning,directoryPerms=755,ensureUniqueDirectory=false,fileSystemId=fs-0b269b8e2735b9c59,gidRangeEnd=200,gidRangeStart=100,provisioningMode=efs-ap,reuseAccessPoint=false,subPathPattern=${.PVC.namespace}/${.PVC.name}

AllowVolumeExpansion: True

...

#

kubectl get pv,pvc -A

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-7aa7d213-892d-4f79-bf90-a530d648ff63 4Gi RWO Delete Bound catalog/catalog-mysql-pvc gp3 146m

persistentvolume/pvc-875d1ca7-e28b-421a-8a7e-2ae9d7480b9a 4Gi RWO Delete Bound orders/order-mysql-pvc gp3 146m

persistentvolume/pvc-b612df9f-bb9d-4b82-a973-fe40360c2ef5 4Gi RWO Delete Bound checkout/checkout-redis-pvc gp3 145m

NAMESPACE NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

catalog persistentvolumeclaim/catalog-mysql-pvc Bound pvc-7aa7d213-892d-4f79-bf90-a530d648ff63 4Gi RWO gp3 146m

checkout persistentvolumeclaim/checkout-redis-pvc Bound pvc-b612df9f-bb9d-4b82-a973-fe40360c2ef5 4Gi RWO gp3 146m

orders persistentvolumeclaim/order-mysql-pvc Bound pvc-875d1ca7-e28b-421a-8a7e-2ae9d7480b9a 4Gi RWO gp3 146m

- StatefulSets such as the Argo CD Application Controller, MySQL, and RabbitMQ exist and are running normally

#

aws eks list-access-entries --cluster-name $CLUSTER_NAME

{

"accessEntries": [

"arn:aws:iam::271345173787:role/aws-service-role/eks.amazonaws.com/AWSServiceRoleForAmazonEKS",

"arn:aws:iam::271345173787:role/blue-mng-eks-node-group-20250325022024229600000010",

"arn:aws:iam::271345173787:role/default-selfmng-node-group-2025032502202377680000000e",

"arn:aws:iam::271345173787:role/fp-profile-2025032502301523520000001f",

"arn:aws:iam::271345173787:role/initial-eks-node-group-2025032502202398930000000f",

"arn:aws:iam::271345173787:role/karpenter-eksworkshop-eksctl",

"arn:aws:iam::271345173787:role/workshop-stack-IdeIdeRoleD654ADD4-Qhv1nLkOGItJ"

]

}

#

eksctl get iamidentitymapping --cluster $CLUSTER_NAME

ARN USERNAME GROUPS ACCOUNT

arn:aws:iam::271345173787:role/WSParticipantRole admin system:masters

arn:aws:iam::271345173787:role/blue-mng-eks-node-group-20250325022024229600000010 system:node:{{EC2PrivateDNSName}} system:bootstrappers,system:nodes

arn:aws:iam::271345173787:role/fp-profile-2025032502301523520000001f system:node:{{SessionName}} system:bootstrappers,system:nodes,system:node-proxier

arn:aws:iam::271345173787:role/initial-eks-node-group-2025032502202398930000000f system:node:{{EC2PrivateDNSName}} system:bootstrappers,system:nodes

arn:aws:iam::271345173787:role/workshop-stack-IdeIdeRoleD654ADD4-Qhv1nLkOGItJ admin system:masters

#

kubectl describe cm -n kube-system aws-auth

...

mapRoles:

----

- groups:

- system:bootstrappers

- system:nodes

rolearn: arn:aws:iam::271345173787:role/blue-mng-eks-node-group-20250325022024229600000010

username: system:node:{{EC2PrivateDNSName}}

- groups:

- system:bootstrappers

- system:nodes

rolearn: arn:aws:iam::271345173787:role/initial-eks-node-group-2025032502202398930000000f

username: system:node:{{EC2PrivateDNSName}}

- groups:

- system:bootstrappers

- system:nodes

- system:node-proxier

rolearn: arn:aws:iam::271345173787:role/fp-profile-2025032502301523520000001f

username: system:node:{{SessionName}}

- groups:

- system:masters

rolearn: arn:aws:iam::271345173787:role/WSParticipantRole

username: admin

- groups:

- system:masters

rolearn: arn:aws:iam::271345173787:role/workshop-stack-IdeIdeRoleD654ADD4-Qhv1nLkOGItJ

username: admin

...

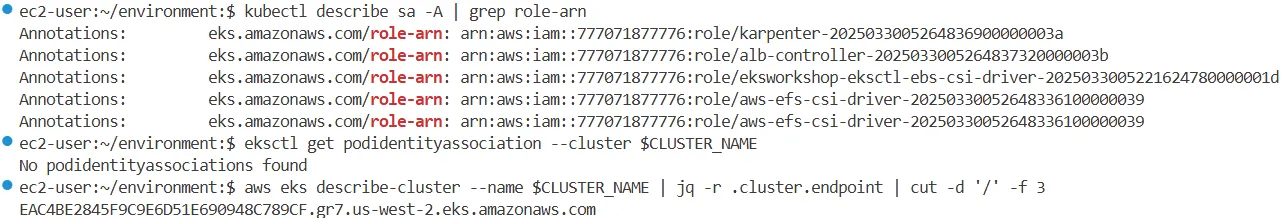

# IRSA: used in four places (karpenter, alb-controller, ebs-csi-driver, aws-efs-csi-driver)

eksctl get iamserviceaccount --cluster $CLUSTER_NAME

kubectl describe sa -A | grep role-arn

Annotations: eks.amazonaws.com/role-arn: arn:aws:iam::363835126563:role/karpenter-2025032812153665450000003a

Annotations: eks.amazonaws.com/role-arn: arn:aws:iam::363835126563:role/alb-controller-20250328121536592300000039

Annotations: eks.amazonaws.com/role-arn: arn:aws:iam::363835126563:role/eksworkshop-eksctl-ebs-csi-driver-2025032812105428630000001d

Annotations: eks.amazonaws.com/role-arn: arn:aws:iam::363835126563:role/aws-efs-csi-driver-2025032812153665870000003b

Annotations: eks.amazonaws.com/role-arn: arn:aws:iam::363835126563:role/aws-efs-csi-driver-2025032812153665870000003b

# No Pod Identity associations

eksctl get podidentityassociation --cluster $CLUSTER_NAME

# EKS API Endpoint

APIDNS=$(aws eks describe-cluster --name $CLUSTER_NAME | jq -r .cluster.endpoint | cut -d '/' -f 3)

echo $APIDNS

B852364D70EA6D62672481D278A15059.gr7.us-west-2.eks.amazonaws.com

dig +short $APIDNS

35.163.123.202

44.246.155.206

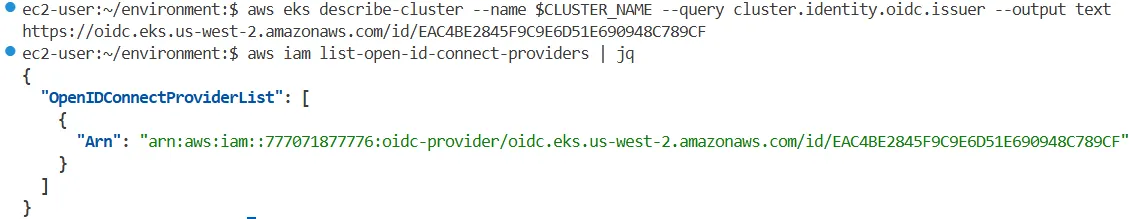

# OIDC

aws eks describe-cluster --name $CLUSTER_NAME --query cluster.identity.oidc.issuer --output text

https://oidc.eks.us-west-2.amazonaws.com/id/B852364D70EA6D62672481D278A15059

aws iam list-open-id-connect-providers | jq

{

"OpenIDConnectProviderList": [

{

"Arn": "arn:aws:iam::363835126563:oidc-provider/oidc.eks.us-west-2.amazonaws.com/id/B852364D70EA6D62672481D278A15059"

}

]

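}

- The `aws-auth` ConfigMap and the `eksctl` commands confirm the mappings between roles and groups

- Some principals hold `system:masters` privileges

- The OIDC provider is registered in IAM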

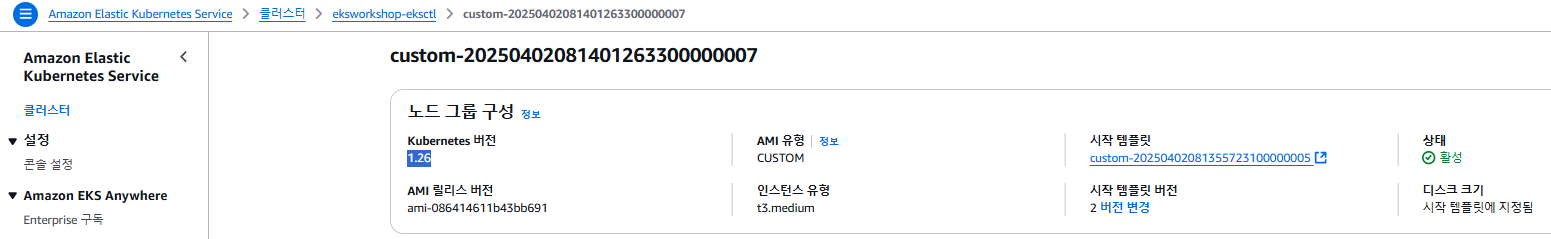

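2.4 Checking the information in the AWS Management Console

2.4.1 EKS cluster status

Compute section

- The Compute tab of the Amazon EKS cluster shows the node groups and Fargate profiles currently attached to the cluster.

Add-on section

- The installation status and versions of the EKS managed add-ons can be confirmed here. They include vpc-cni, kube-proxy, coredns, and aws-ebs-csi-driver, all in the Active state.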

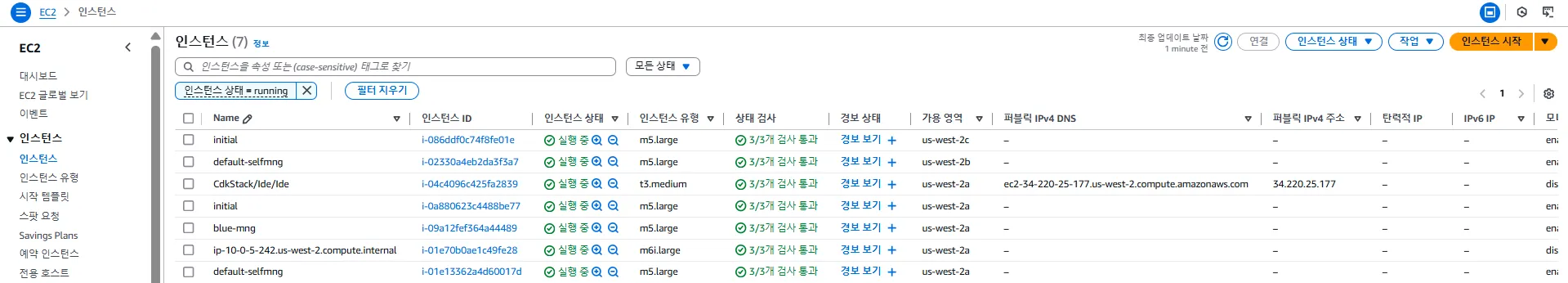

2.4.2 EC2 instances

EC2 instances

- Excluding the IDE server, six EC2 instances are running as worker nodes attached to the EKS cluster.

- One Fargate node also exists, but it does not appear as an EC2 instance.

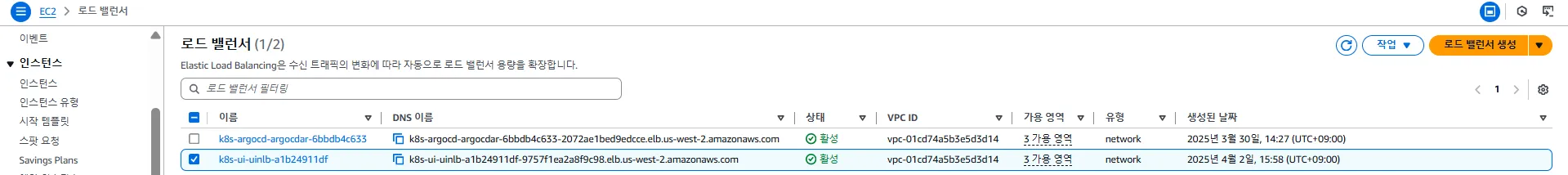

Load balancer

- A Network Load Balancer (NLB) has been created to allow external access to Argo CD.

- This NLB is attached to the LoadBalancer-type Kubernetes Service (`argo-cd-argocd-server`) and forwards external traffic to it.

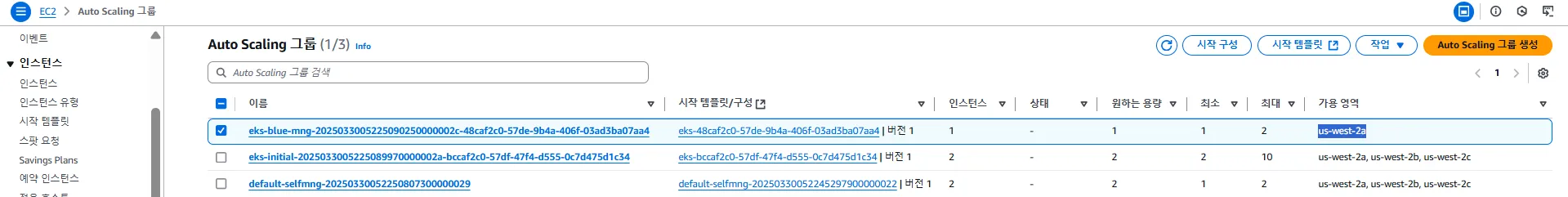

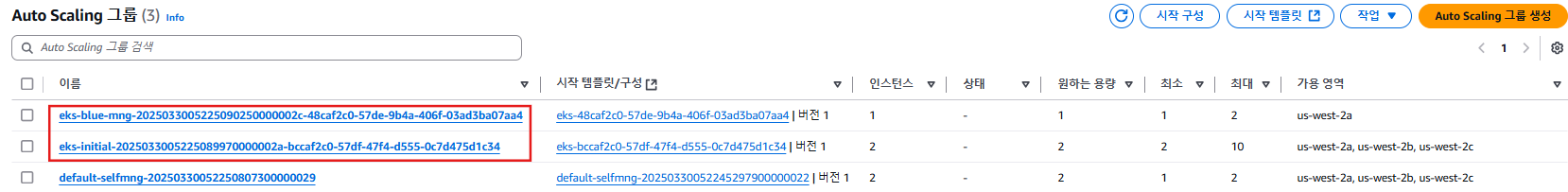

Auto Scaling Group (ASG)

- The cluster has three ASGs in total.

- Of these, the `blue-mng` managed node group notably uses only a single Availability Zone (AZ), us-west-2a.

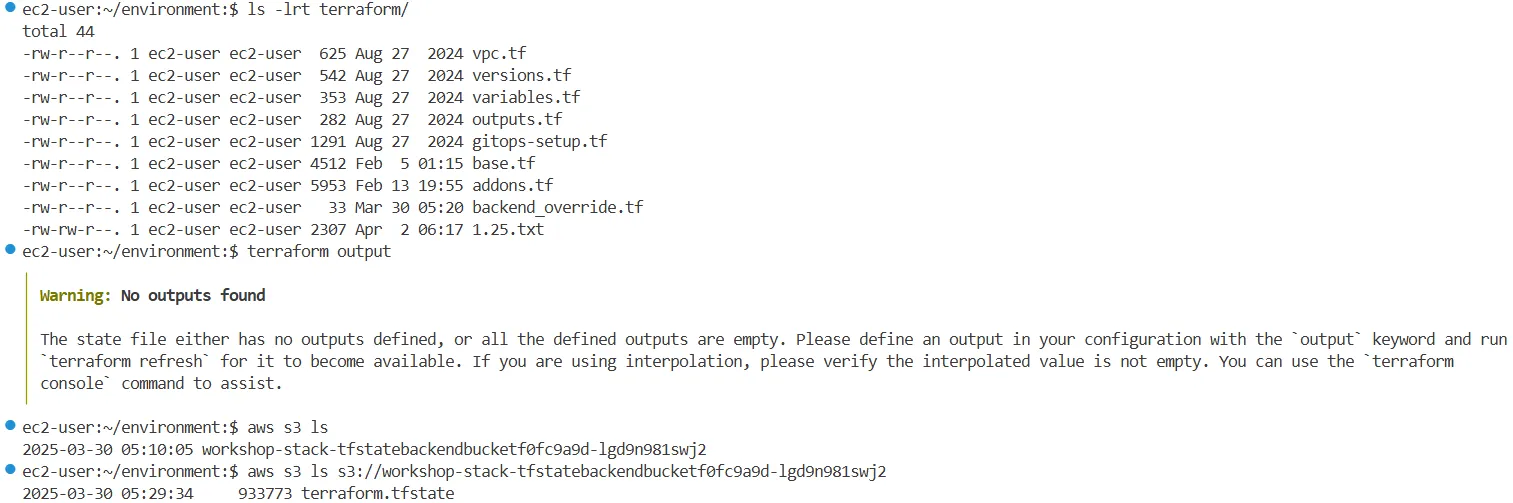

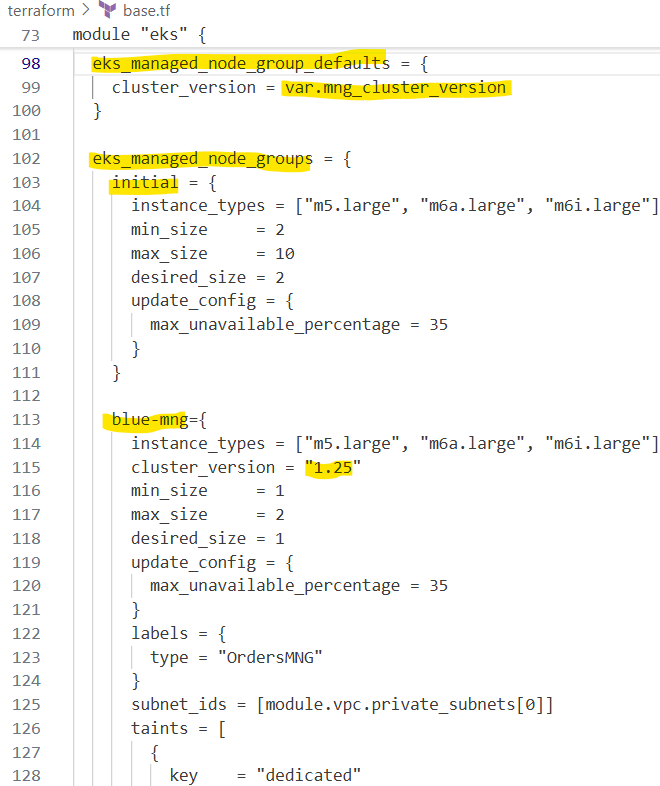

2.5 Terraform lab environment summary

2.5.1 File layout and state

- Main files in the Terraform directory used for the lab:

# List the files

ec2-user:~/environment:$ ls -lrt terraform/

total 40

-rw-r--r--. 1 ec2-user ec2-user 625 Aug 27 2024 vpc.tf

-rw-r--r--. 1 ec2-user ec2-user 542 Aug 27 2024 versions.tf

-rw-r--r--. 1 ec2-user ec2-user 353 Aug 27 2024 variables.tf

-rw-r--r--. 1 ec2-user ec2-user 282 Aug 27 2024 outputs.tf

-rw-r--r--. 1 ec2-user ec2-user 1291 Aug 27 2024 gitops-setup.tf

-rw-r--r--. 1 ec2-user ec2-user 4512 Feb 5 01:15 base.tf

-rw-r--r--. 1 ec2-user ec2-user 5953 Feb 13 19:55 addons.tf

-rw-r--r--. 1 ec2-user ec2-user 33 Mar 25 02:26 backend_override.tf

# There is no tfstate file in this path.

ec2-user:~/environment/terraform:$ terraform output

╷

│ Warning: No outputs found

- The `terraform.tfstate` file does not exist locally; it is stored in S3:

aws s3 ls

2025-03-28 11:56:25 workshop-stack-tfstatebackendbucketf0fc9a9d-wt2fbt9qjdpc

aws s3 ls s3://workshop-stack-tfstatebackendbucketf0fc9a9d-wt2fbt9qjdpc

2025-03-28 12:18:23 940996 terraform.tfstate

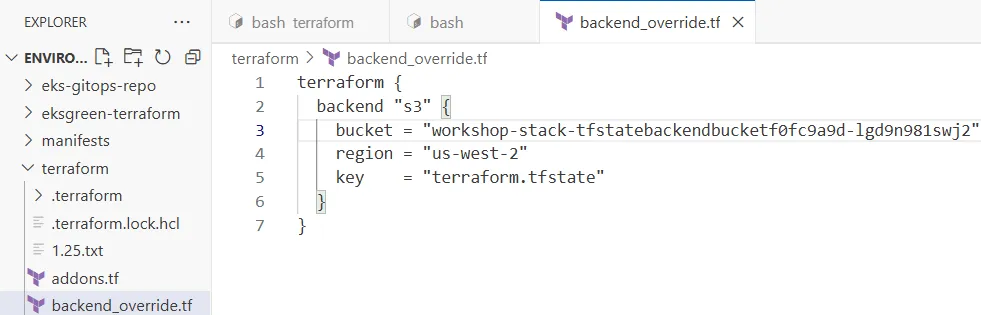

backend_override.tf contains the following backend configuration:

# Edit backend_override.tf

terraform {
  backend "s3" {
    bucket = "workshop-stack-tfstatebackendbucketf0fc9a9d-wt2fbt9qjdpc"
    region = "us-west-2"
    key    = "terraform.tfstate"
  }
}

# Verify

terraform state list

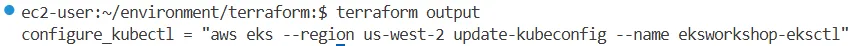

terraform output

configure_kubectl = "aws eks --region us-west-2 update-kubeconfig --name eksworkshop-eksctl"

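2.5.2 Main Terraform Configuration Files

vpc.tf

- Uses the terraform-aws-modules/vpc/aws module

- Configures public/private subnets and enables the NAT Gateway

- Adds tags for Karpenter discovery

versions.tf

- Terraform version 1.3 or later

- Defines the AWS, Helm, and Kubernetes providers

variables.tf

- Cluster version: 1.25

- MNG cluster version: 1.25

- The AMI ID defaults to an empty string

outputs.tf

- Defines the output that prints the kubectl configuration command

gitops-setup.tf

- aws_codecommit_repository: creates the CodeCommit repository for GitOps

- aws_iam_user: creates the ArgoCD user

- The related credentials are stored in Secrets Manager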

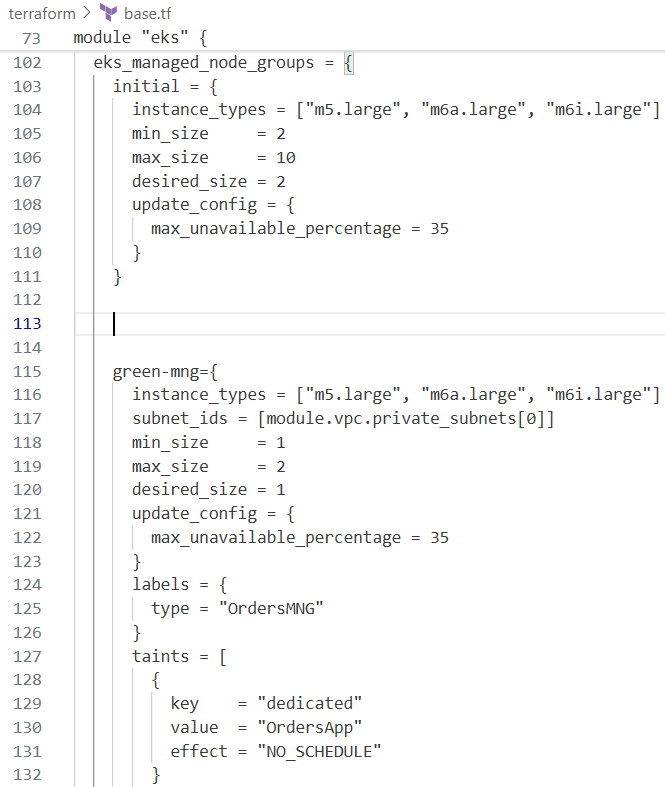

base.tf

- Uses the terraform-aws-modules/eks/aws module (v20.14)

- Configures the cluster, the Fargate profile, and the EKS managed and self-managed node groups

- Includes the karpenter.sh/discovery tag

- self_managed_node_groups includes a custom AMI and bootstrap arguments

addons.tf

- Uses the aws-ia/eks-blueprints-addons/aws module (v1.16)

- Installs the EKS add-ons:

- CoreDNS

- Kube-proxy

- VPC CNI

- aws-ebs-csi-driver (integrated with IRSA)

- ArgoCD is deployed with a LoadBalancer Service

- Enables Karpenter, the EFS CSI driver, Metrics Server, and others

- Includes the EFS configuration (mount targets and security group)

- Storage Class configuration:

- gp2: no longer the default class

- gp3: set as the default class

- efs: creates an EFS-backed Storage Class

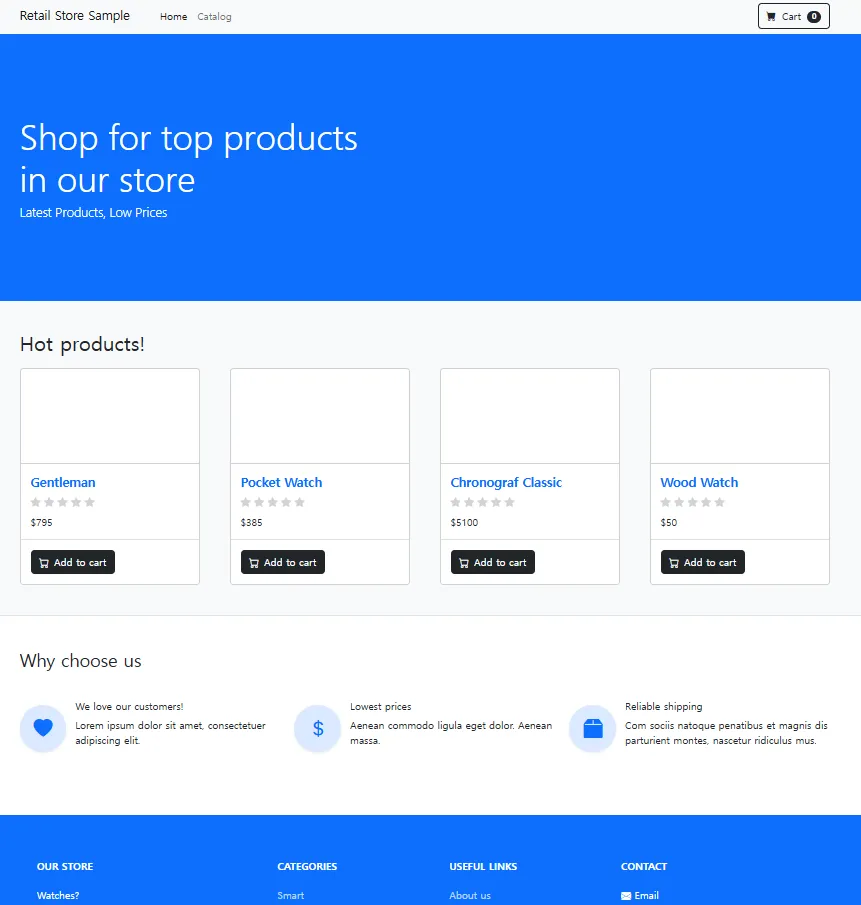

2.6 샘플 애플리케이션

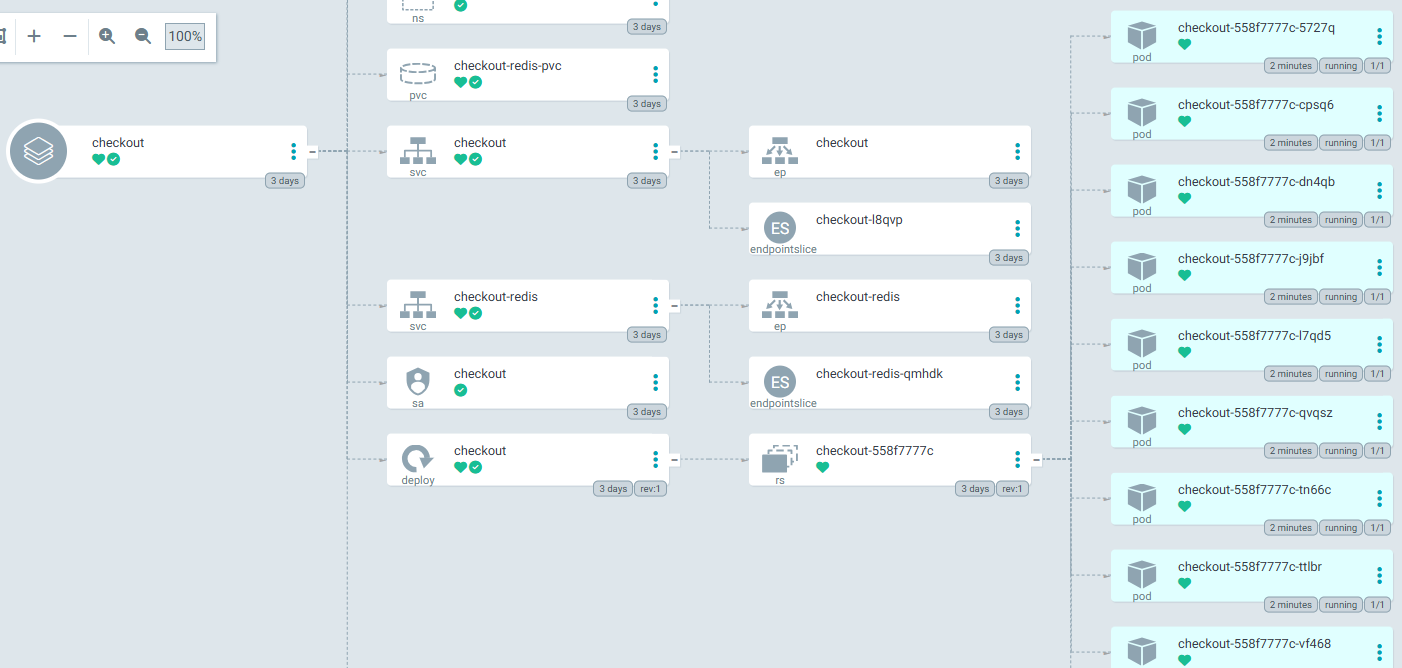

이 실습에서는 고객이 웹 인터페이스를 통해 상품을 탐색하고, 장바구니에 추가하며, 결제를 진행할 수 있는 간단한 소매점 웹 스토어 애플리케이션을 배포합니다. 해당 애플리케이션은 Amazon EKS 클러스터에 구성되며, 초기에는 외부 AWS 서비스(NLB, RDS 등) 없이 EKS 내부 리소스만으로 운영됩니다.

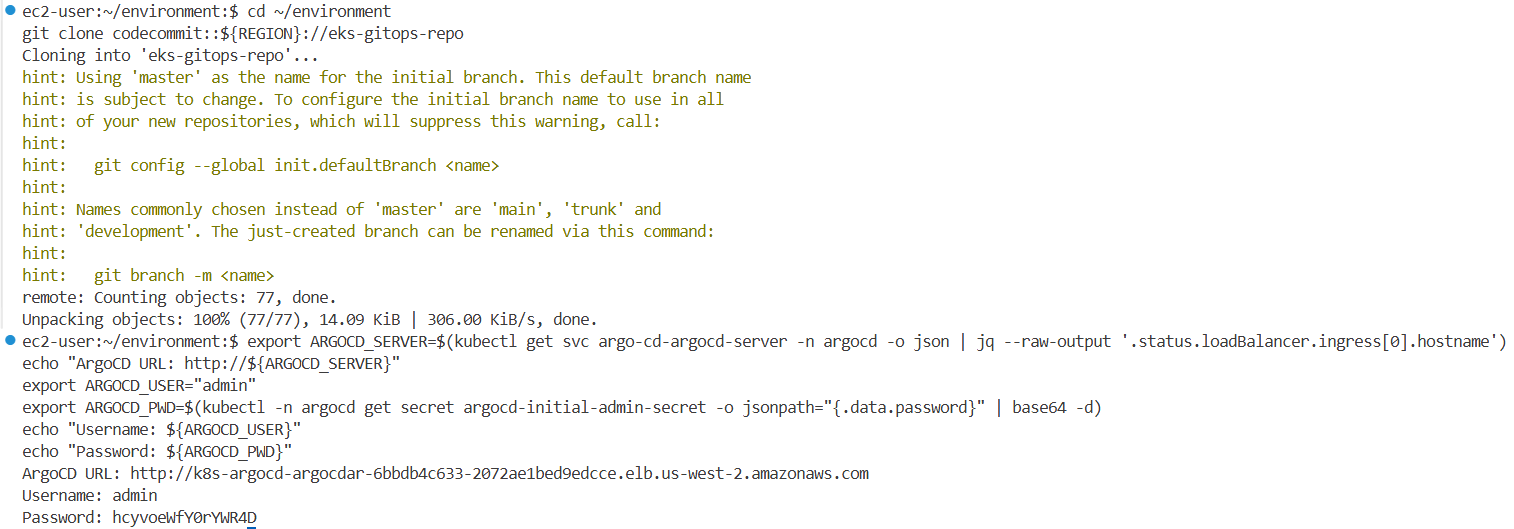

2.6.1 GitOps 방식 배포 (ArgoCD)

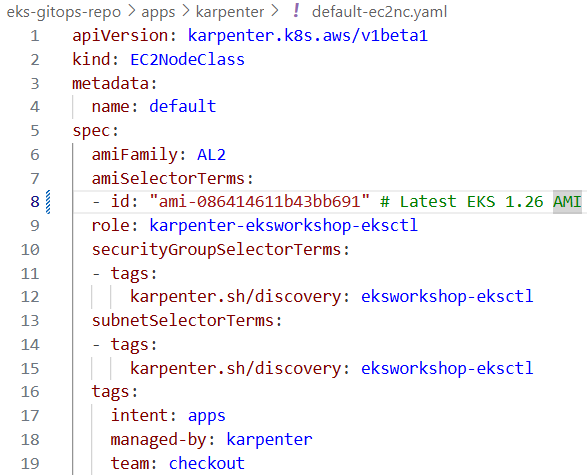

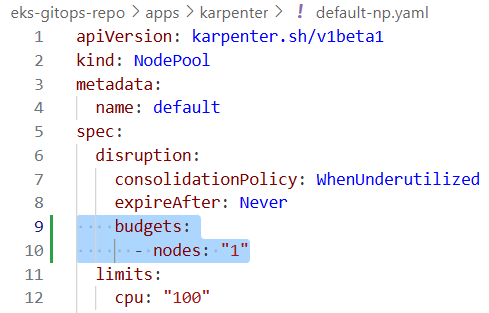

GitOps를 위해 AWS CodeCommit 저장소(eks-gitops-repo)가 활용됩니다. 해당 저장소는 로컬 IDE 환경에 복제하여 ArgoCD에 연동합니다.

#

cd ~/environment

git clone codecommit::${REGION}://eks-gitops-repo

# Directory structure

sudo yum install tree -y

tree eks-gitops-repo/ -L 2

eks-gitops-repo/
├── app-of-apps
│   ├── Chart.yaml
│   ├── templates
│   └── values.yaml
└── apps
    ├── assets
    ├── carts
    ├── catalog
    ├── checkout
    ├── karpenter
    ├── kustomization.yaml
    ├── orders
    ├── other
    ├── rabbitmq
    └── ui

# ArgoCD connection details

# Login to ArgoCD Console using credentials from following commands:

export ARGOCD_SERVER=$(kubectl get svc argo-cd-argocd-server -n argocd -o json | jq --raw-output '.status.loadBalancer.ingress[0].hostname')

echo "ArgoCD URL: http://${ARGOCD_SERVER}"

ArgoCD URL: http://k8s-argocd-argocdar-01634fea43-3cdeb4d8a7e05ff9.elb.us-west-2.amazonaws.com

export ARGOCD_USER="admin"

export ARGOCD_PWD=$(kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d)

echo "Username: ${ARGOCD_USER}"

echo "Password: ${ARGOCD_PWD}"

Username: admin

Password: 7kYgVfdcwv7aOo3s

Or

export | grep ARGOCD_PWD

declare -x ARGOCD_PWD="BM4BevfR1u5GZHB3"

#

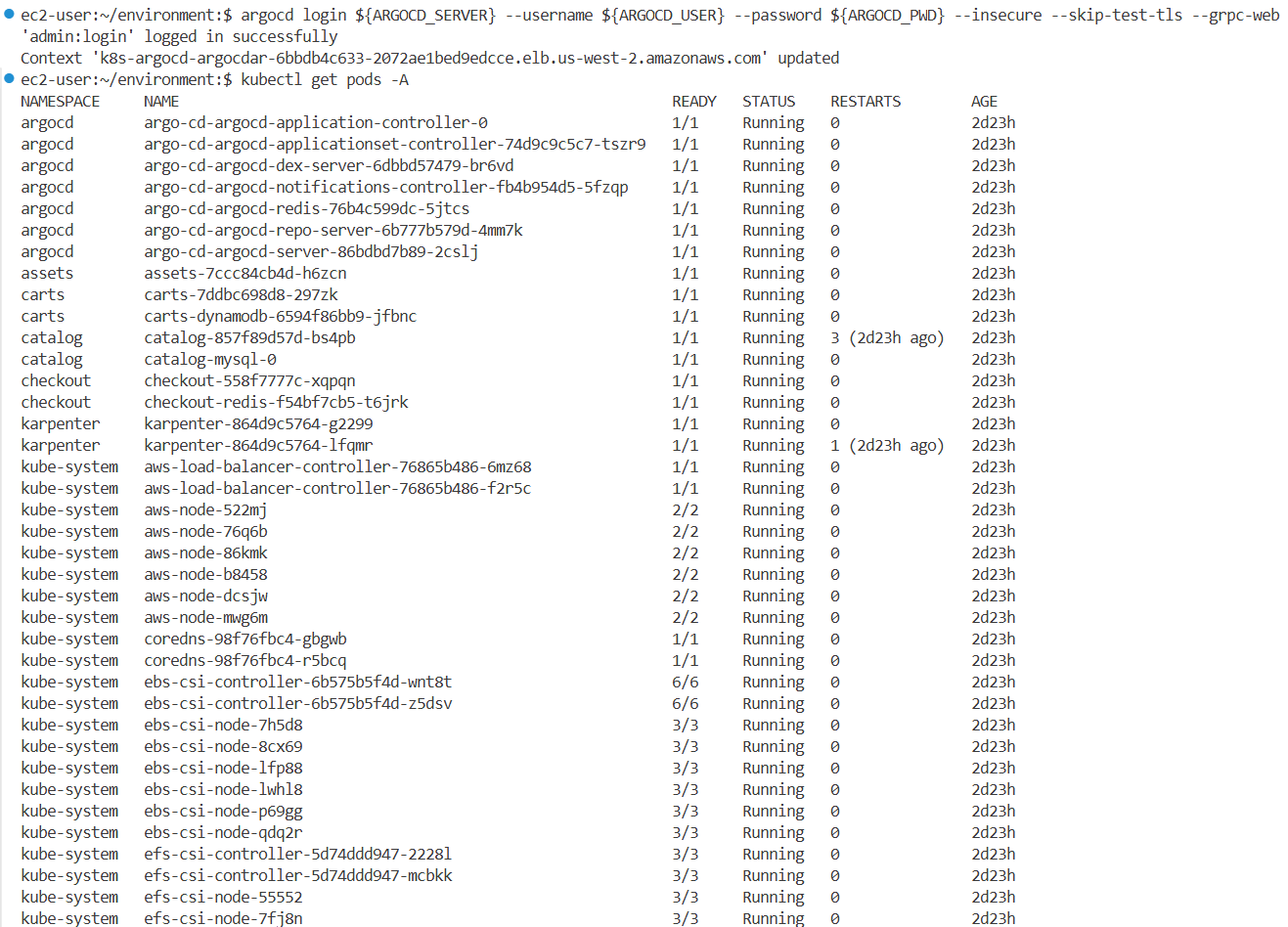

argocd login ${ARGOCD_SERVER} --username ${ARGOCD_USER} --password ${ARGOCD_PWD} --insecure --skip-test-tls --grpc-web

'admin:login' logged in successfully

Context 'k8s-argocd-argocdar-eb7166e616-ed2069d8c15177c9.elb.us-west-2.amazonaws.com' updated

#

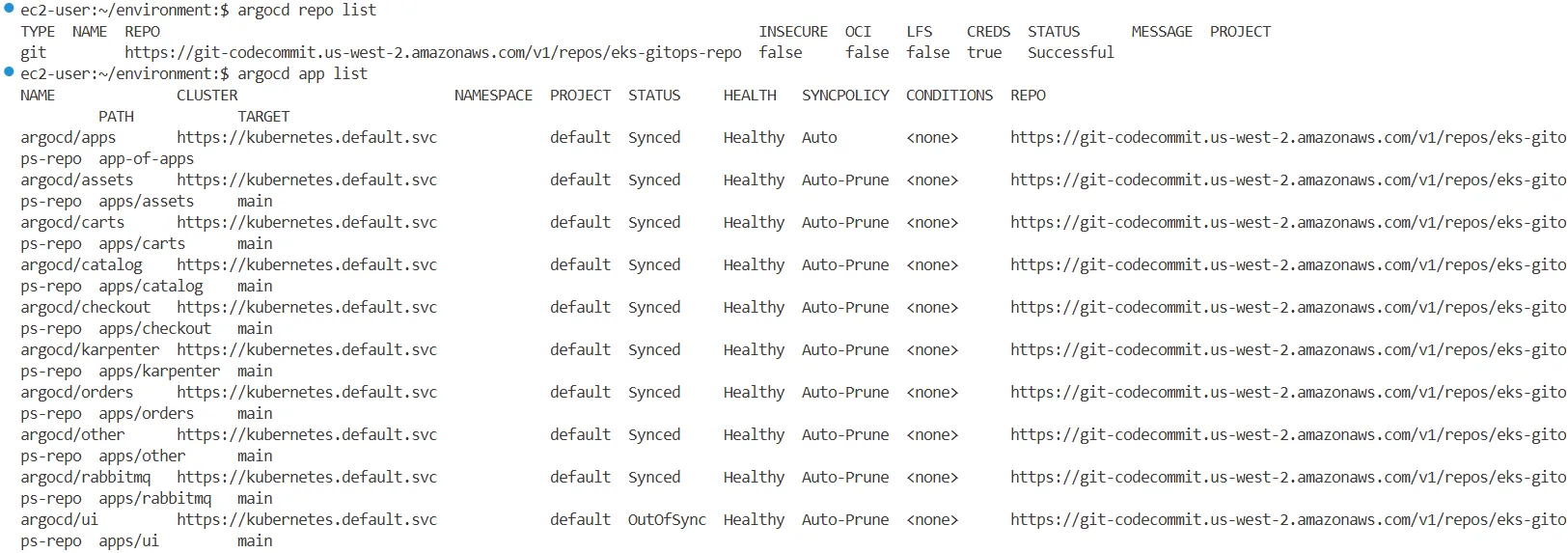

argocd repo list

TYPE NAME REPO INSECURE OCI LFS CREDS STATUS MESSAGE PROJECT

git https://git-codecommit.us-west-2.amazonaws.com/v1/repos/eks-gitops-repo false false false true Successful

#

argocd app list

NAME CLUSTER NAMESPACE PROJECT STATUS HEALTH SYNCPOLICY CONDITIONS REPO PATH TARGET

argocd/apps https://kubernetes.default.svc default Synced Healthy Auto <none> https://git-codecommit.us-west-2.amazonaws.com/v1/repos/eks-gitops-repo app-of-apps

argocd/assets https://kubernetes.default.svc default Synced Healthy Auto-Prune <none> https://git-codecommit.us-west-2.amazonaws.com/v1/repos/eks-gitops-repo apps/assets main

argocd/carts https://kubernetes.default.svc default Synced Healthy Auto-Prune <none> https://git-codecommit.us-west-2.amazonaws.com/v1/repos/eks-gitops-repo apps/carts main

argocd/catalog https://kubernetes.default.svc default Synced Healthy Auto-Prune <none> https://git-codecommit.us-west-2.amazonaws.com/v1/repos/eks-gitops-repo apps/catalog main

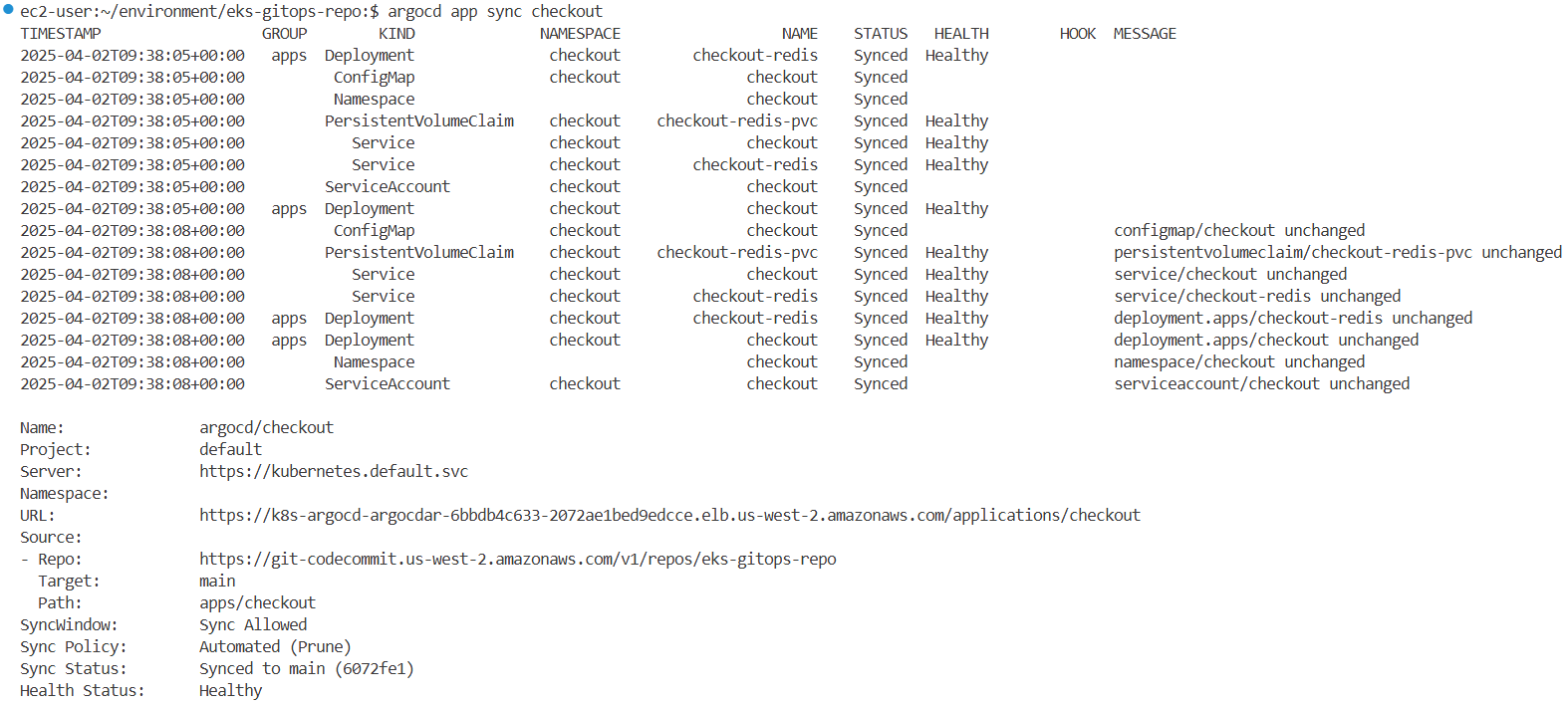

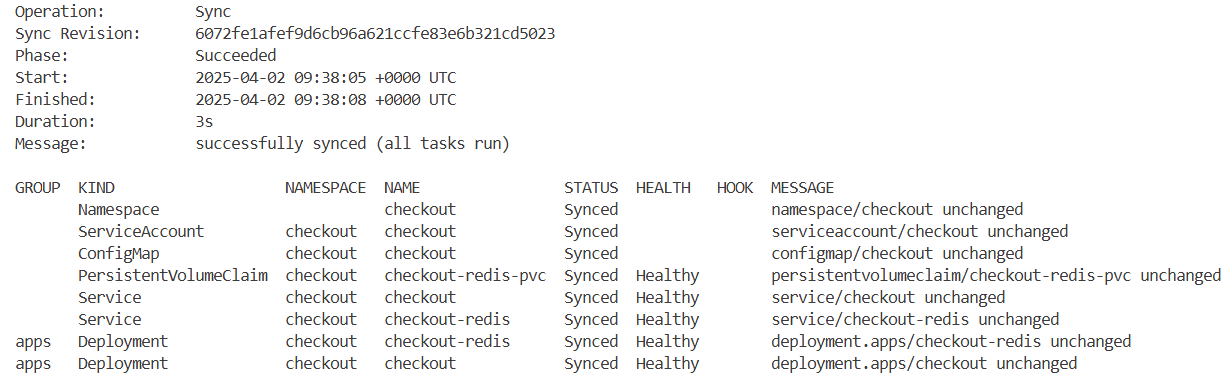

argocd/checkout https://kubernetes.default.svc default Synced Healthy Auto-Prune <none> https://git-codecommit.us-west-2.amazonaws.com/v1/repos/eks-gitops-repo apps/checkout main

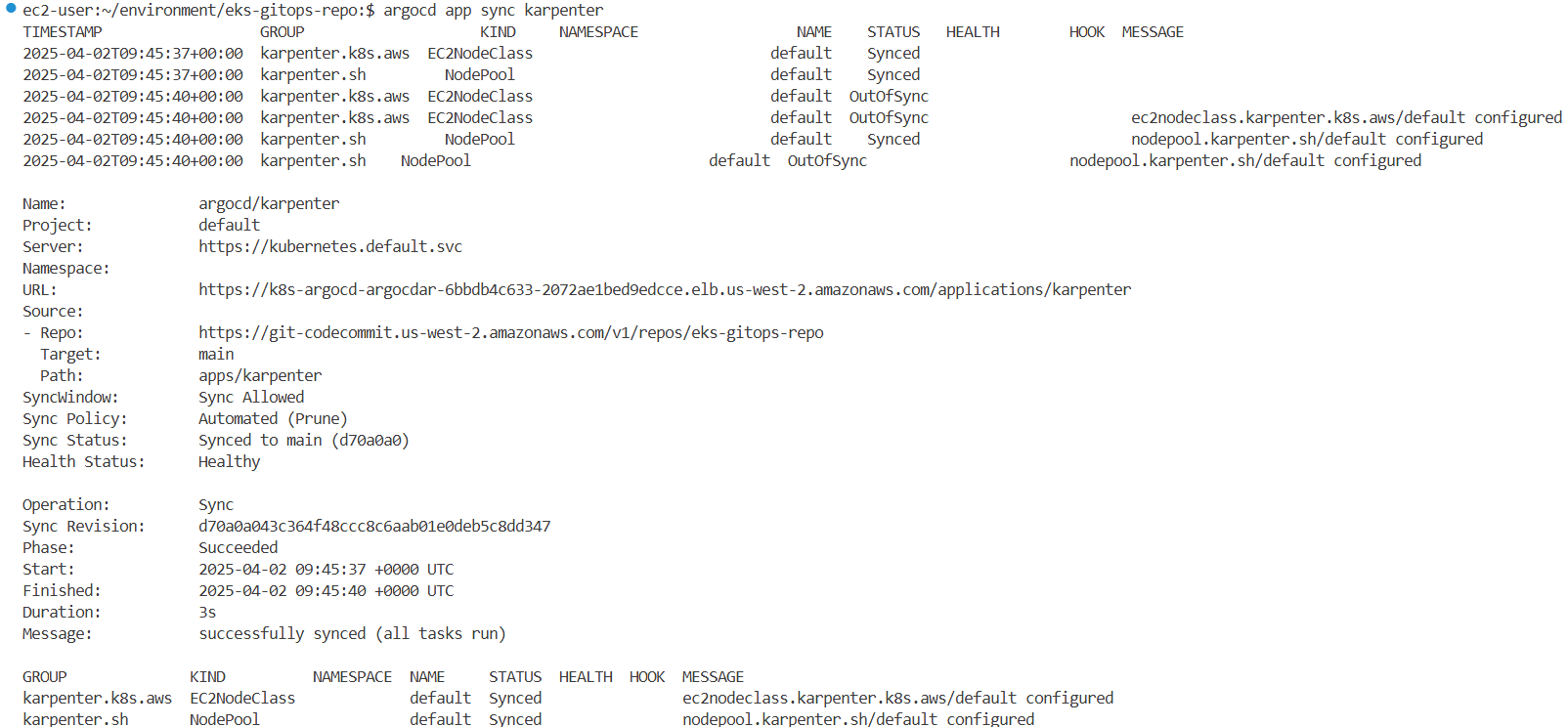

argocd/karpenter https://kubernetes.default.svc default Synced Healthy Auto-Prune <none> https://git-codecommit.us-west-2.amazonaws.com/v1/repos/eks-gitops-repo apps/karpenter main

argocd/orders https://kubernetes.default.svc default Synced Healthy Auto-Prune <none> https://git-codecommit.us-west-2.amazonaws.com/v1/repos/eks-gitops-repo apps/orders main

argocd/other https://kubernetes.default.svc default Synced Healthy Auto-Prune <none> https://git-codecommit.us-west-2.amazonaws.com/v1/repos/eks-gitops-repo apps/other main

argocd/rabbitmq https://kubernetes.default.svc default Synced Healthy Auto-Prune <none> https://git-codecommit.us-west-2.amazonaws.com/v1/repos/eks-gitops-repo apps/rabbitmq main

argocd/ui https://kubernetes.default.svc default OutOfSync Healthy Auto-Prune <none> https://git-codecommit.us-west-2.amazonaws.com/v1/repos/eks-gitops-repo apps/ui main

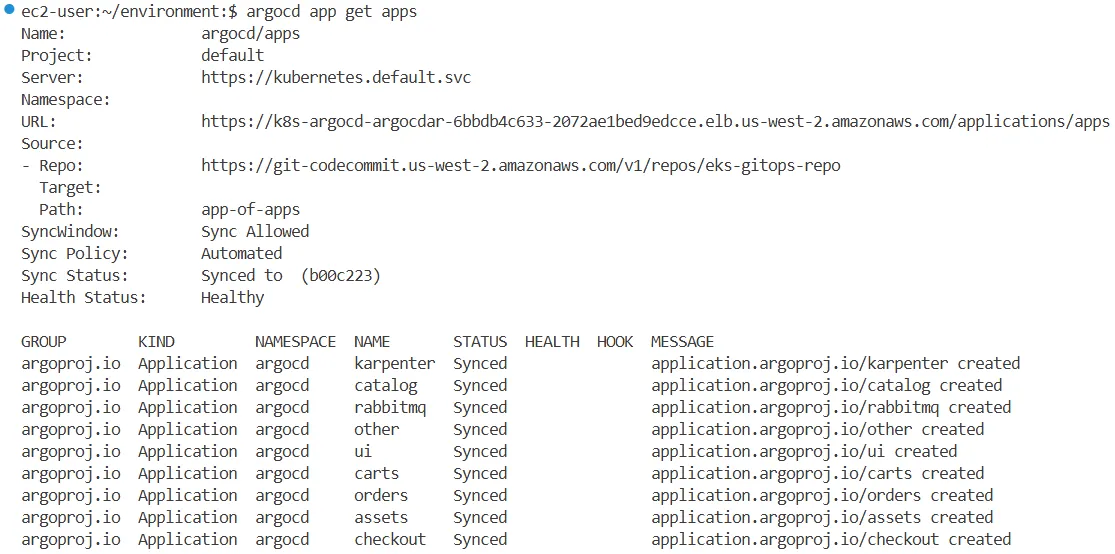

argocd app get apps

Name: argocd/apps

Project: default

Server: https://kubernetes.default.svc

Namespace:

URL: https://k8s-argocd-argocdar-eb7166e616-ed2069d8c15177c9.elb.us-west-2.amazonaws.com/applications/apps

Source:

- Repo: https://git-codecommit.us-west-2.amazonaws.com/v1/repos/eks-gitops-repo

Target:

Path: app-of-apps

SyncWindow: Sync Allowed

Sync Policy: Automated

Sync Status: Synced to (acc257a)

Health Status: Healthy

GROUP KIND NAMESPACE NAME STATUS HEALTH HOOK MESSAGE

argoproj.io Application argocd karpenter Synced application.argoproj.io/karpenter created

argoproj.io Application argocd carts Synced application.argoproj.io/carts created

argoproj.io Application argocd assets Synced application.argoproj.io/assets created

argoproj.io Application argocd catalog Synced application.argoproj.io/catalog created

argoproj.io Application argocd checkout Synced application.argoproj.io/checkout created

argoproj.io Application argocd rabbitmq Synced application.argoproj.io/rabbitmq created

argoproj.io Application argocd other Synced application.argoproj.io/other created

argoproj.io Application argocd ui Synced application.argoproj.io/ui created

argoproj.io Application argocd orders Synced application.argoproj.io/orders created

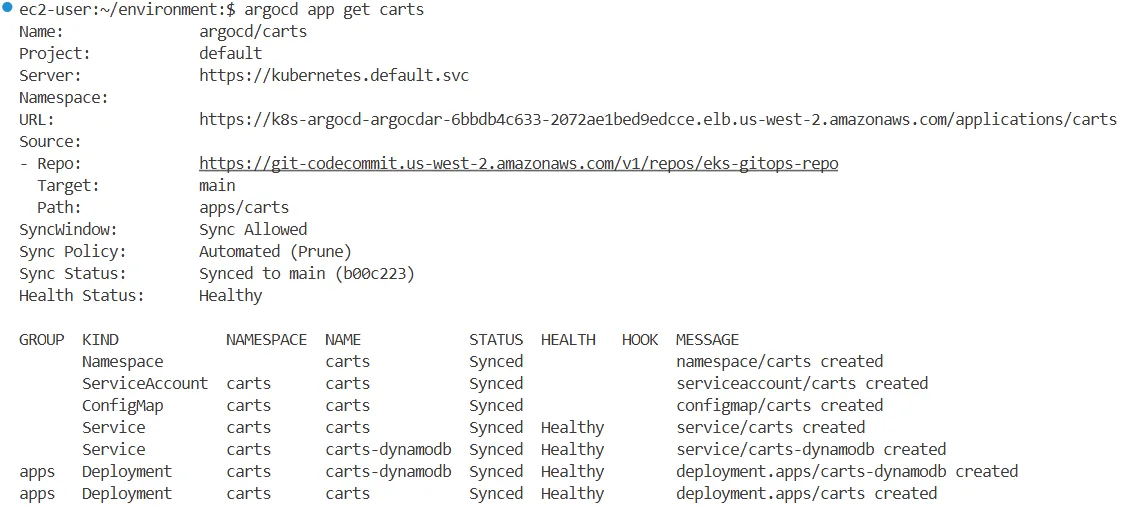

argocd app get carts

Name: argocd/carts

Project: default

Server: https://kubernetes.default.svc

Namespace:

URL: https://k8s-argocd-argocdar-eb7166e616-ed2069d8c15177c9.elb.us-west-2.amazonaws.com/applications/carts

Source:

- Repo: https://git-codecommit.us-west-2.amazonaws.com/v1/repos/eks-gitops-repo

Target: main

Path: apps/carts

SyncWindow: Sync Allowed

Sync Policy: Automated (Prune)

Sync Status: Synced to main (acc257a)

Health Status: Healthy

GROUP KIND NAMESPACE NAME STATUS HEALTH HOOK MESSAGE

Namespace carts Synced namespace/carts created

ServiceAccount carts carts Synced serviceaccount/carts created

ConfigMap carts carts Synced configmap/carts created

Service carts carts-dynamodb Synced Healthy service/carts-dynamodb created

Service carts carts Synced Healthy service/carts created

apps Deployment carts carts Synced Healthy deployment.apps/carts created

apps Deployment carts carts-dynamodb Synced Healthy deployment.apps/carts-dynamodb created

#

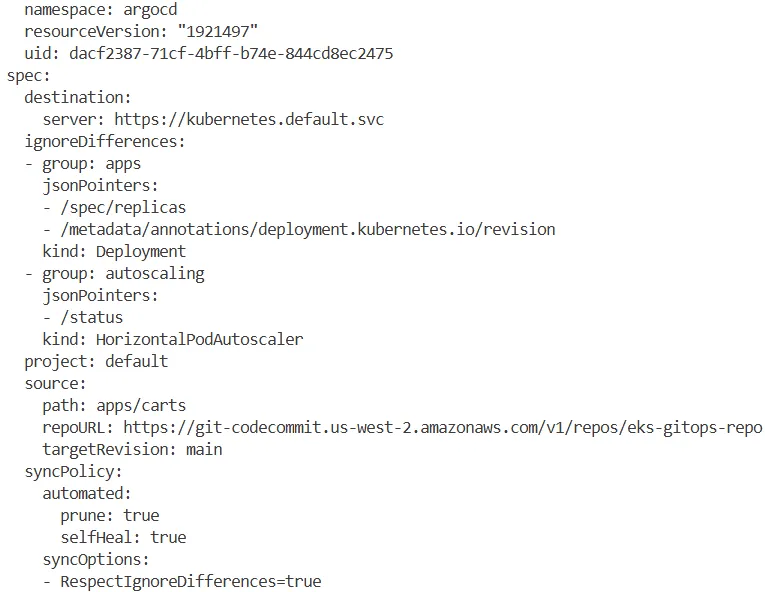

argocd app get carts -o yaml

...

spec:

destination:

server: https://kubernetes.default.svc

ignoreDifferences:

- group: apps

jsonPointers:

- /spec/replicas

- /metadata/annotations/deployment.kubernetes.io/revision

kind: Deployment

- group: autoscaling

jsonPointers:

- /status

kind: HorizontalPodAutoscaler

project: default

source:

path: apps/carts

repoURL: https://git-codecommit.us-west-2.amazonaws.com/v1/repos/eks-gitops-repo

targetRevision: main

syncPolicy:

automated:

prune: true

selfHeal: true

syncOptions:

- RespectIgnoreDifferences=true

...

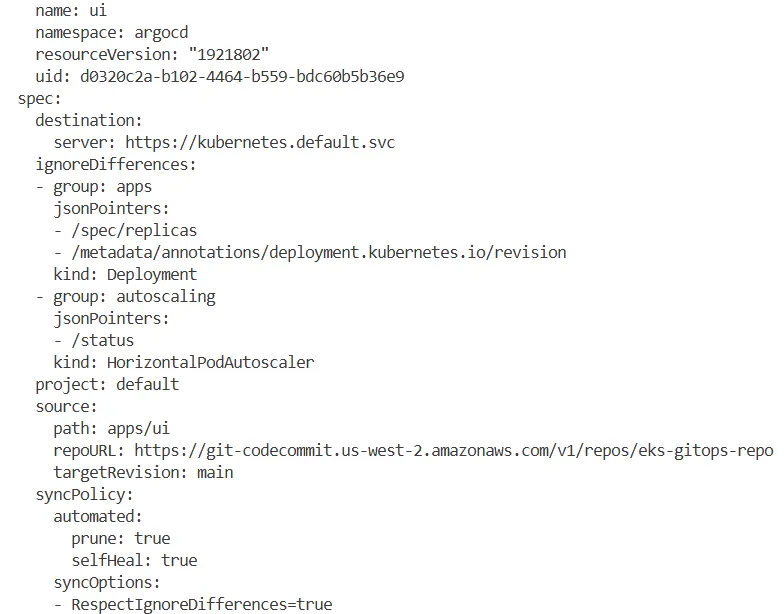

argocd app get ui -o yaml

...

spec:

destination:

server: https://kubernetes.default.svc

ignoreDifferences:

- group: apps

jsonPointers:

- /spec/replicas

- /metadata/annotations/deployment.kubernetes.io/revision

kind: Deployment

- group: autoscaling

jsonPointers:

- /status

kind: HorizontalPodAutoscaler

project: default

source:

path: apps/ui

repoURL: https://git-codecommit.us-west-2.amazonaws.com/v1/repos/eks-gitops-repo

targetRevision: main

syncPolicy:

automated:

prune: true

selfHeal: true

syncOptions:

- RespectIgnoreDifferences=true

...

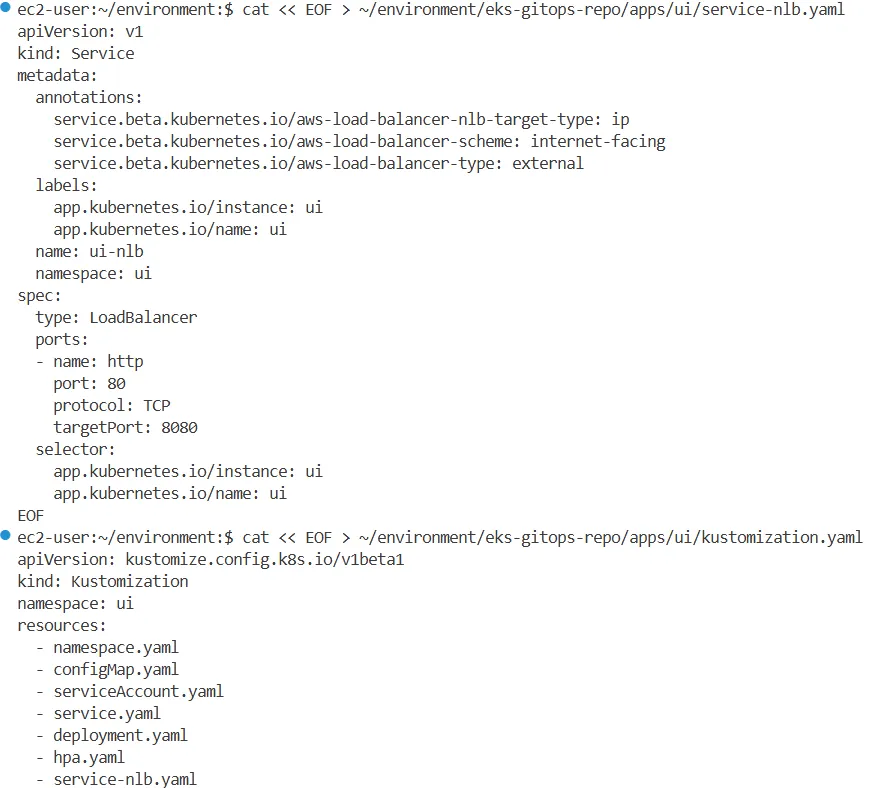

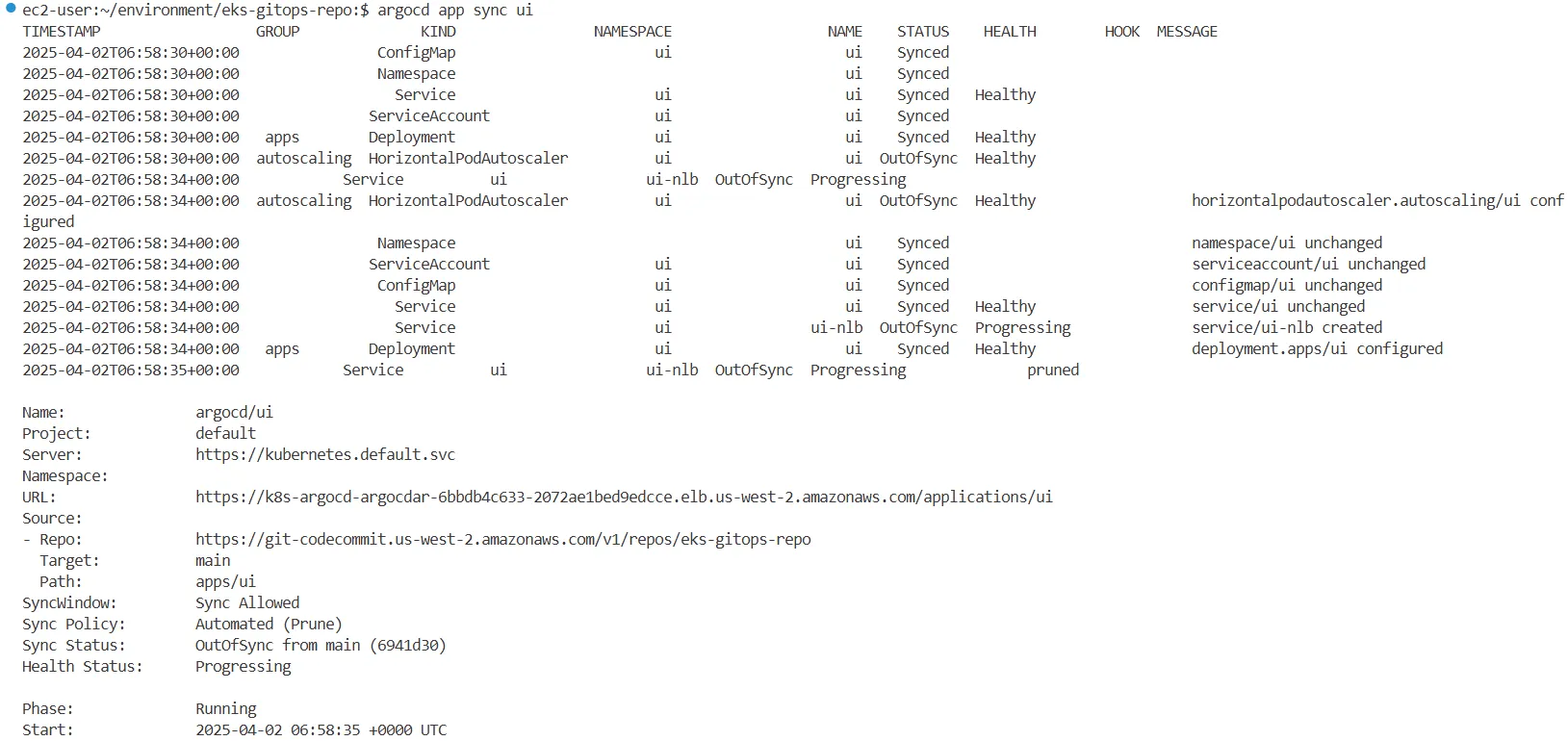

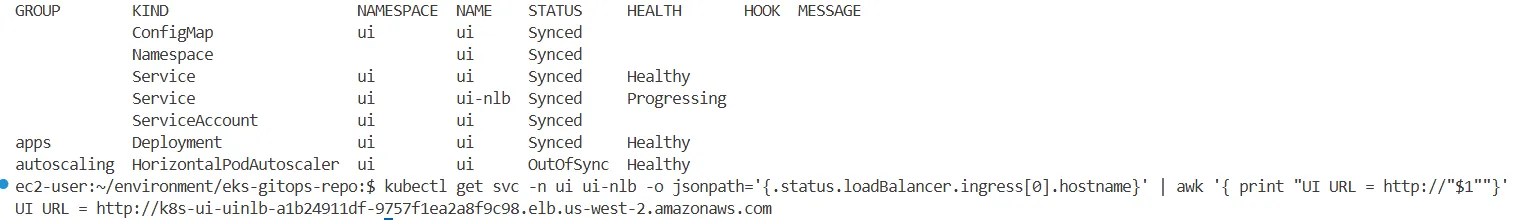

2.6.2 NLB Setup for UI Access

#

cat << EOF > ~/environment/eks-gitops-repo/apps/ui/service-nlb.yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
    service.beta.kubernetes.io/aws-load-balancer-type: external
  labels:
    app.kubernetes.io/instance: ui
    app.kubernetes.io/name: ui
  name: ui-nlb
  namespace: ui
spec:
  type: LoadBalancer
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 8080
  selector:
    app.kubernetes.io/instance: ui
    app.kubernetes.io/name: ui
EOF

cat << EOF > ~/environment/eks-gitops-repo/apps/ui/kustomization.yaml

apiVersion: kustomize.config.k8s.io/v1beta1

kind: Kustomization

namespace: ui

resources:

- namespace.yaml

- configMap.yaml

- serviceAccount.yaml

- service.yaml

- deployment.yaml

- hpa.yaml

- service-nlb.yaml

EOF

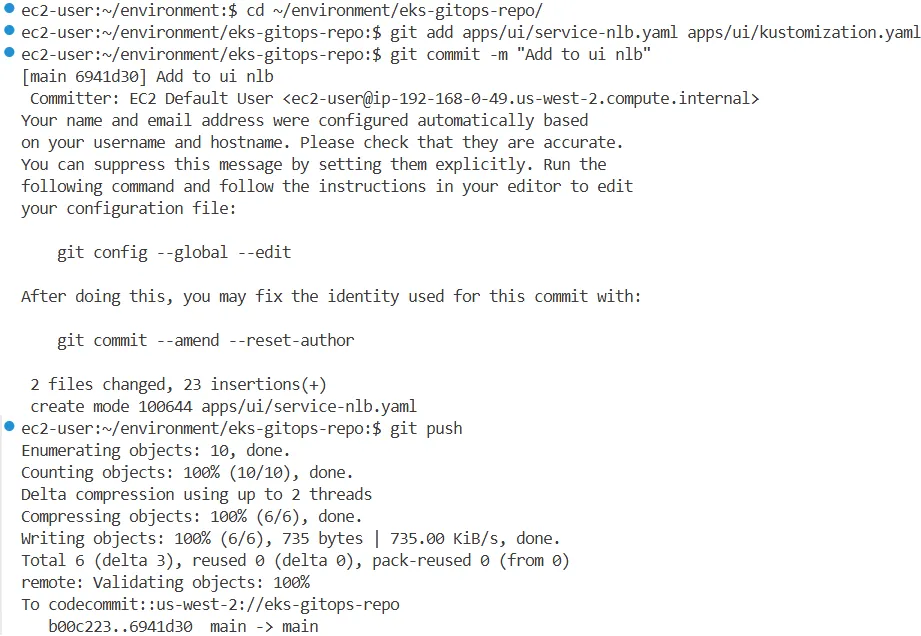

#

cd ~/environment/eks-gitops-repo/

git add apps/ui/service-nlb.yaml apps/ui/kustomization.yaml

git commit -m "Add to ui nlb"

git push

argocd app sync ui

...

#

# Check the UI URL (zoom 1.5, 1.3)

kubectl get svc -n ui ui-nlb -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' | awk '{ print "UI URL = http://"$1""}'

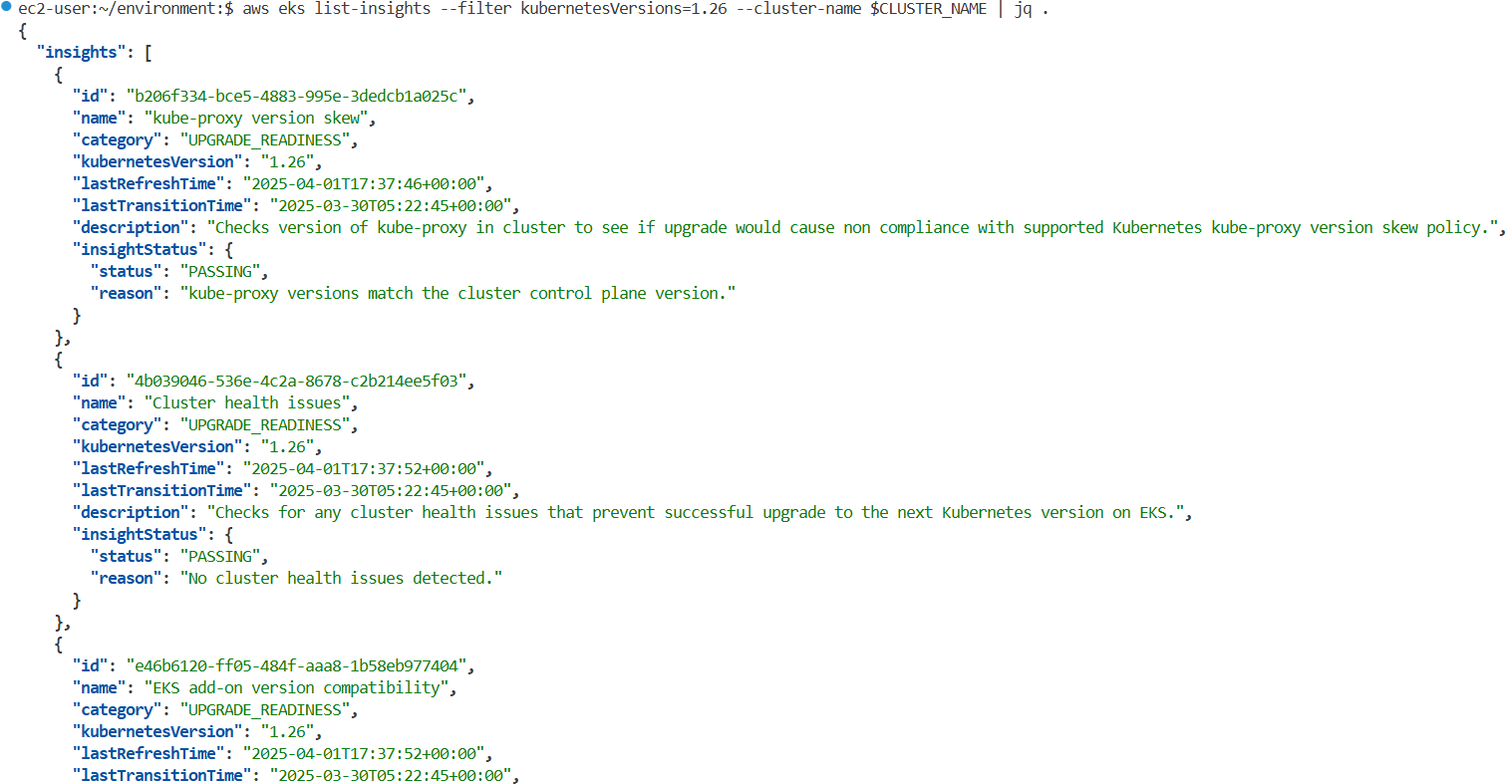

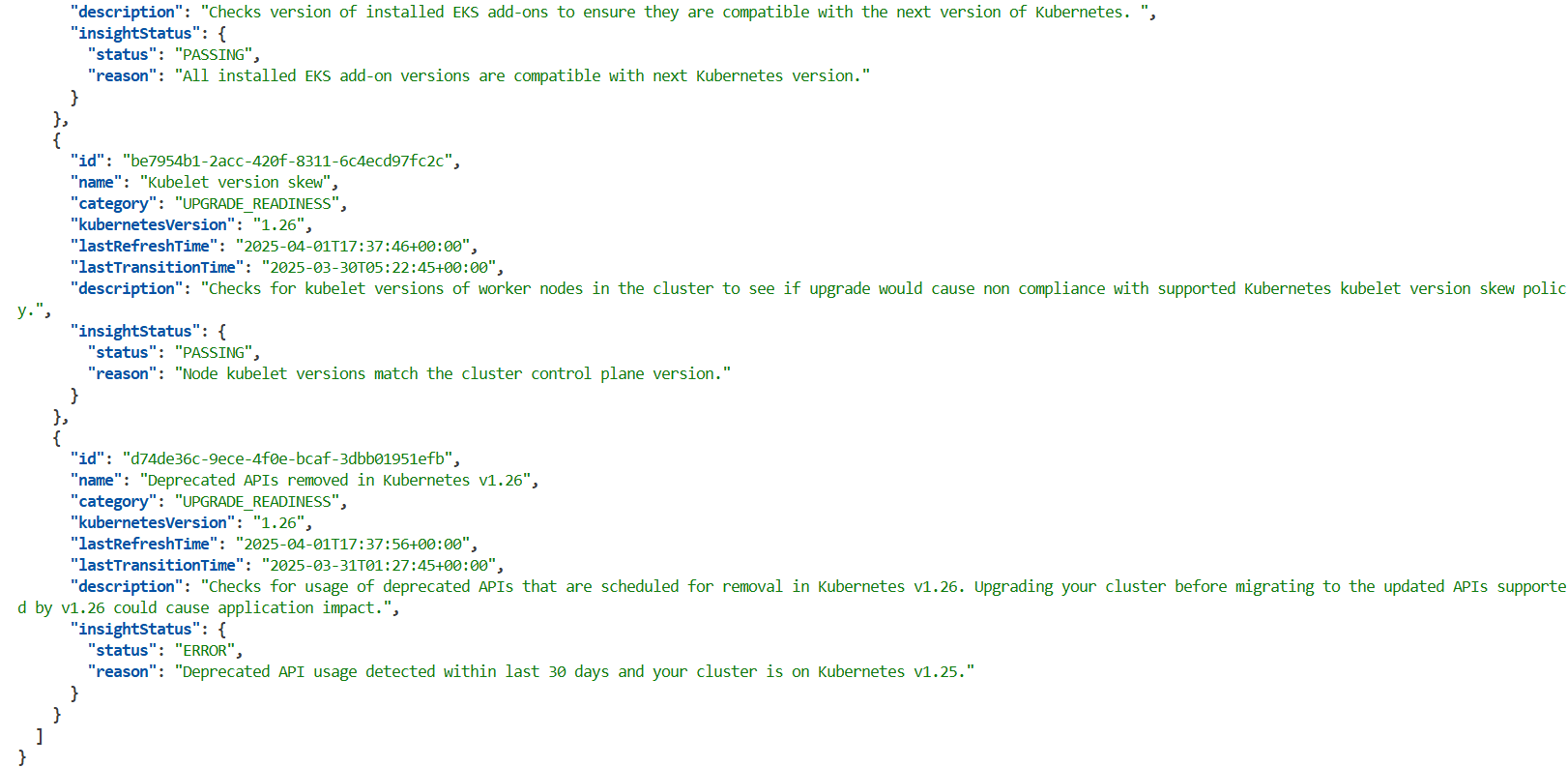

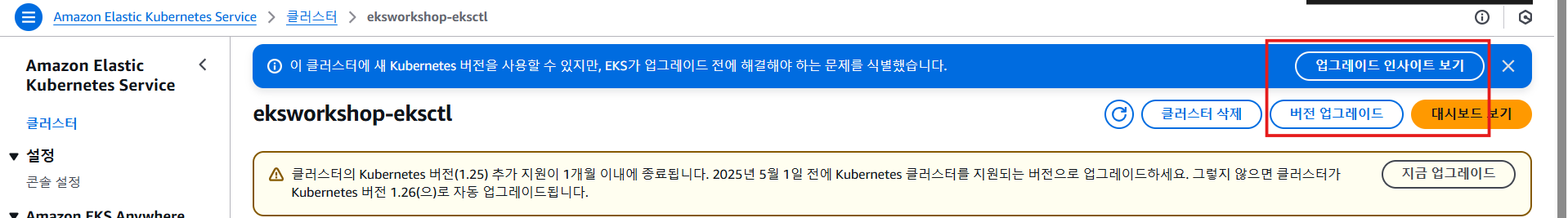

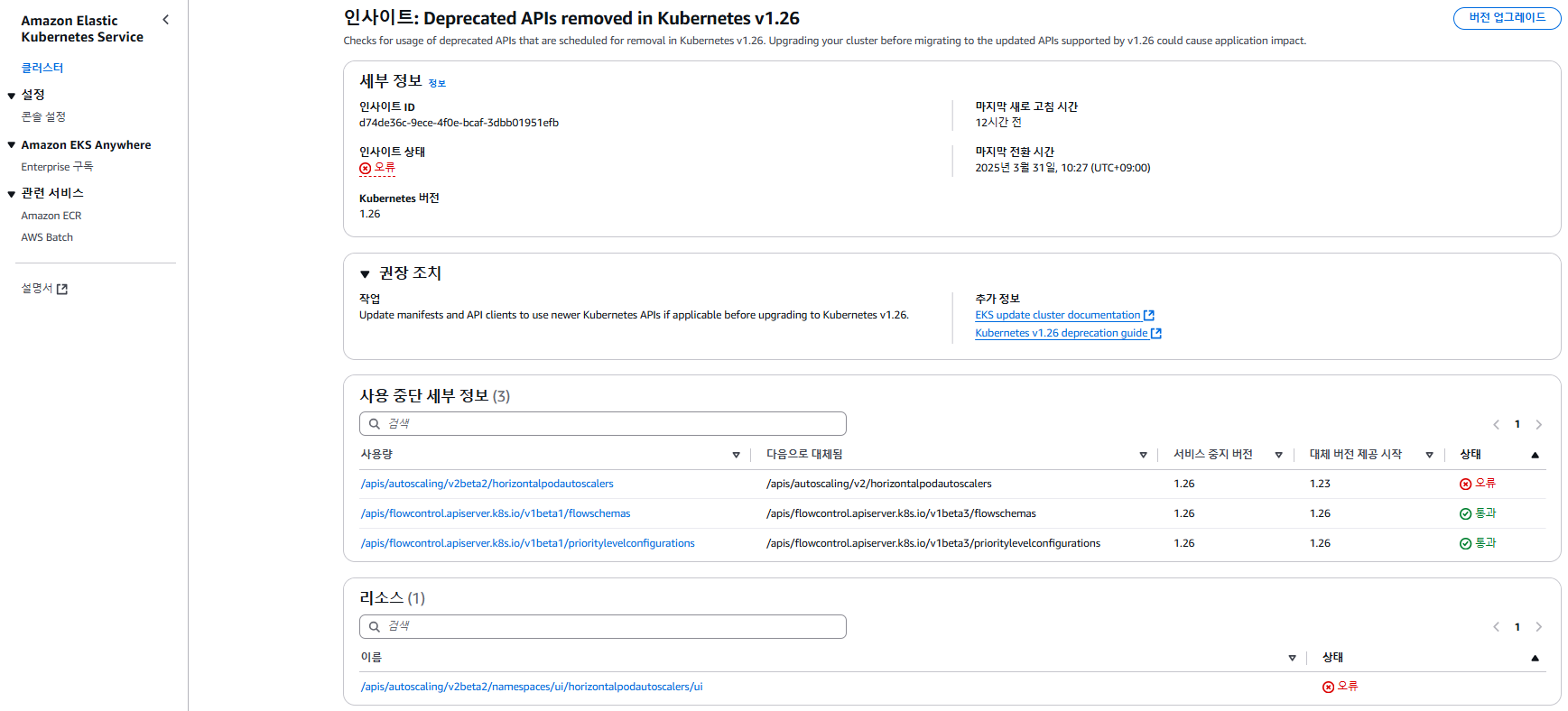

2.7 클러스터 업그레이드 준비 - EKS 업그레이드 인사이트

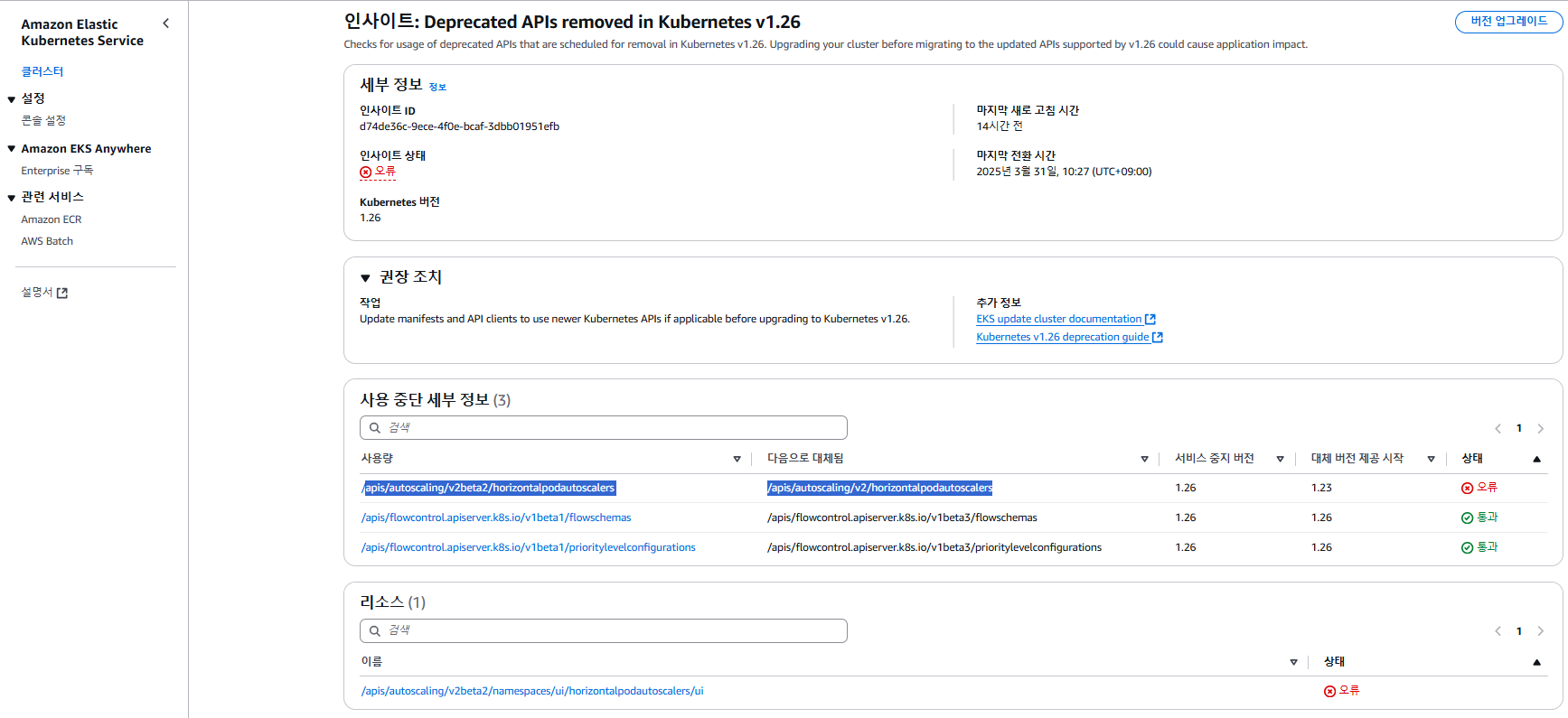

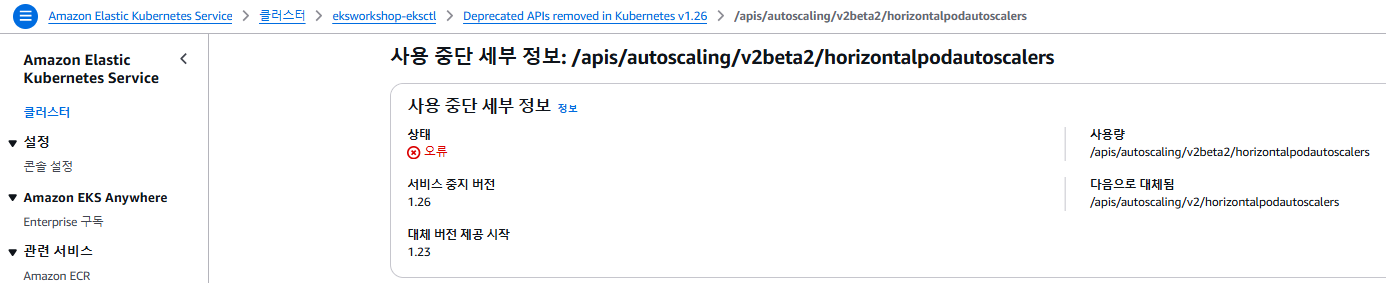

EKS Upgrade Insights는 Amazon EKS 클러스터에서 Kubernetes 업그레이드와 관련된 리스크를 사전에 파악하고, 해결책을 제공하는 기능입니다.

#

aws eks list-insights --filter kubernetesVersions=1.26 --cluster-name $CLUSTER_NAME | jq .

{

"insights": [

{

"id": "00d5cb0c-45ef-4a1a-9324-d499a143baad",

"name": "Deprecated APIs removed in Kubernetes v1.26",

"category": "UPGRADE_READINESS",

"kubernetesVersion": "1.26",

"lastRefreshTime": "2025-03-29T08:28:13+00:00",

"lastTransitionTime": "2025-03-29T08:27:52+00:00",

"description": "Checks for usage of deprecated APIs that are scheduled for removal in Kubernetes v1.26. Upgrading your cluster before migrating to the updated APIs supported by v1.26 could cause application impact.",

"insightStatus": {

"status": "ERROR",

"reason": "Deprecated API usage detected within last 30 days and your cluster is on Kubernetes v1.25."

}

},

...

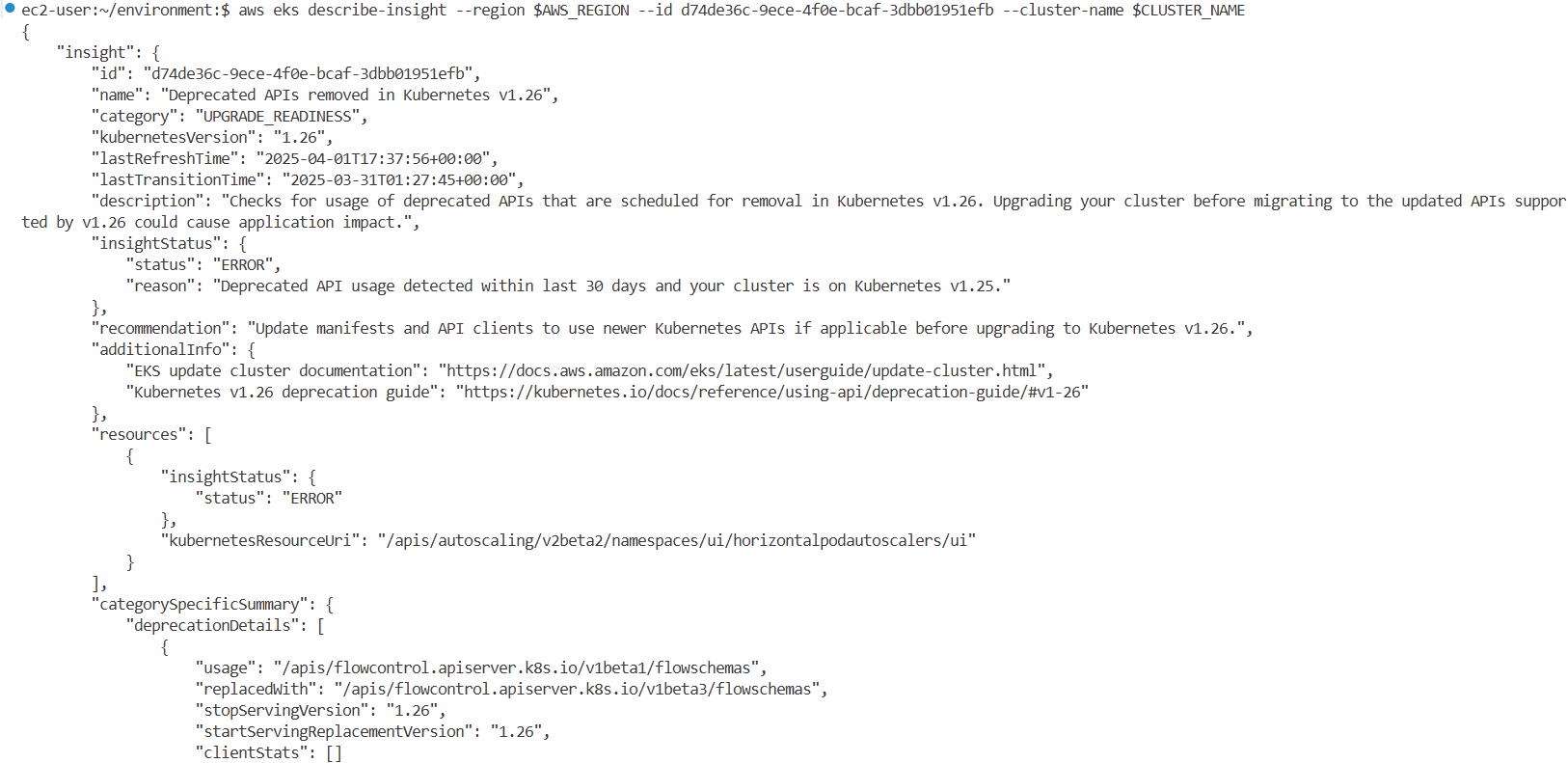

# View the insight details

aws eks describe-insight --region $AWS_REGION --id 00d5cb0c-45ef-4a1a-9324-d499a143baad --cluster-name $CLUSTER_NAME | jq

{

"insight": {

"id": "00d5cb0c-45ef-4a1a-9324-d499a143baad",

"name": "Deprecated APIs removed in Kubernetes v1.26",

"category": "UPGRADE_READINESS",

"kubernetesVersion": "1.26",

"lastRefreshTime": "2025-03-29T08:28:13+00:00",

"lastTransitionTime": "2025-03-29T08:27:52+00:00",

"description": "Checks for usage of deprecated APIs that are scheduled for removal in Kubernetes v1.26. Upgrading your cluster before migrating to the updated APIs supported by v1.26 could cause application impact.",

"insightStatus": {

"status": "ERROR",

"reason": "Deprecated API usage detected within last 30 days and your cluster is on Kubernetes v1.25."

},

"recommendation": "Update manifests and API clients to use newer Kubernetes APIs if applicable before upgrading to Kubernetes v1.26.",

"additionalInfo": {

"EKS update cluster documentation": "https://docs.aws.amazon.com/eks/latest/userguide/update-cluster.html",

"Kubernetes v1.26 deprecation guide": "https://kubernetes.io/docs/reference/using-api/deprecation-guide/#v1-26"

},

"resources": [

{

"insightStatus": {

"status": "ERROR"

},

"kubernetesResourceUri": "/apis/autoscaling/v2beta2/namespaces/ui/horizontalpodautoscalers/ui"

}

],

"categorySpecificSummary": {

"deprecationDetails": [

{

"usage": "/apis/flowcontrol.apiserver.k8s.io/v1beta1/flowschemas",

"replacedWith": "/apis/flowcontrol.apiserver.k8s.io/v1beta3/flowschemas",

"stopServingVersion": "1.26",

"startServingReplacementVersion": "1.26",

"clientStats": []

},

{

"usage": "/apis/flowcontrol.apiserver.k8s.io/v1beta1/prioritylevelconfigurations",

"replacedWith": "/apis/flowcontrol.apiserver.k8s.io/v1beta3/prioritylevelconfigurations",

"stopServingVersion": "1.26",

"startServingReplacementVersion": "1.26",

"clientStats": []

},

{

"usage": "/apis/autoscaling/v2beta2/horizontalpodautoscalers",

"replacedWith": "/apis/autoscaling/v2/horizontalpodautoscalers",

"stopServingVersion": "1.26",

"startServingReplacementVersion": "1.23",

"clientStats": [

{

"userAgent": "argocd-application-controller",

"numberOfRequestsLast30Days": 43077,

"lastRequestTime": "2025-03-29T08:17:37+00:00"

}

]

}

],

"addonCompatibilityDetails": []

}

}

}
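The describe-insight output above lists three deprecated API paths, but only one of them has recent callers. As a rough sketch (not part of the workshop), the Python below filters the deprecationDetails list down to APIs whose clientStats is non-empty. SAMPLE is a trimmed copy of the output above, and the function name deprecated_apis_in_use is a hypothetical helper introduced for illustration.

```python
import json

# Trimmed copy of the describe-insight output shown above.
SAMPLE = json.loads("""
{
  "insight": {
    "name": "Deprecated APIs removed in Kubernetes v1.26",
    "insightStatus": {"status": "ERROR"},
    "categorySpecificSummary": {
      "deprecationDetails": [
        {
          "usage": "/apis/flowcontrol.apiserver.k8s.io/v1beta1/flowschemas",
          "replacedWith": "/apis/flowcontrol.apiserver.k8s.io/v1beta3/flowschemas",
          "clientStats": []
        },
        {
          "usage": "/apis/autoscaling/v2beta2/horizontalpodautoscalers",
          "replacedWith": "/apis/autoscaling/v2/horizontalpodautoscalers",
          "clientStats": [
            {"userAgent": "argocd-application-controller",
             "numberOfRequestsLast30Days": 43077}
          ]
        }
      ]
    }
  }
}
""")


def deprecated_apis_in_use(insight_doc):
    """Return (usage, replacedWith, userAgents) for APIs that still have callers."""
    details = insight_doc["insight"]["categorySpecificSummary"]["deprecationDetails"]
    found = []
    for detail in details:
        agents = [c["userAgent"] for c in detail.get("clientStats", [])]
        if agents:  # an empty clientStats list means no recorded callers
            found.append((detail["usage"], detail["replacedWith"], agents))
    return found


if __name__ == "__main__":
    for usage, replacement, agents in deprecated_apis_in_use(SAMPLE):
        print(f"{usage} -> {replacement} (called by {', '.join(agents)})")
```

In a real run, the JSON would come from the aws eks describe-insight command rather than an embedded string.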

Checking in the AWS Management Console

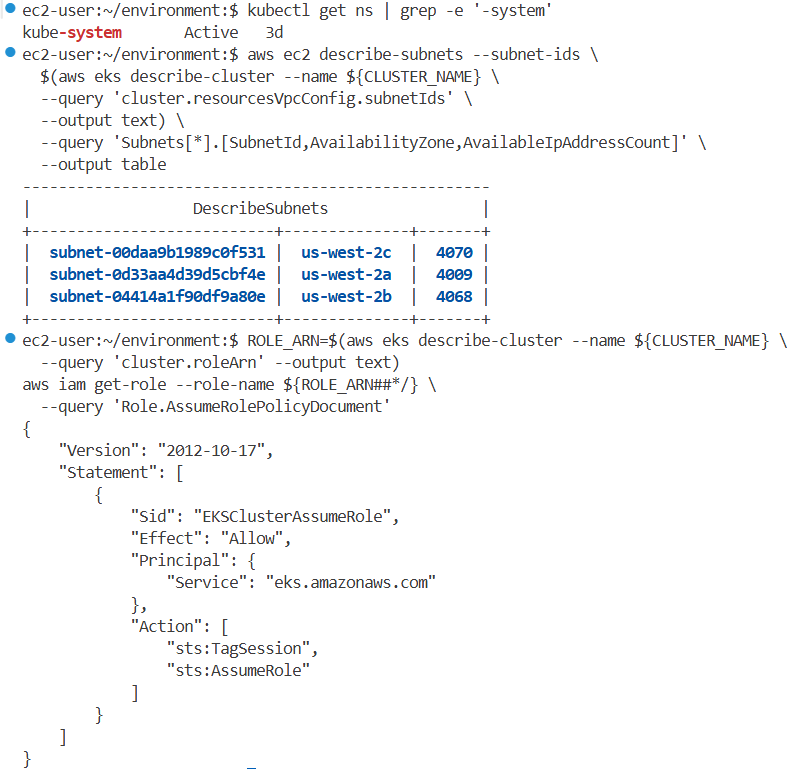

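2.8 Preparing for the Cluster Upgrade - (Optional) Scenario Description

2.9 Preparing for the Cluster Upgrade - Checking the Basic EKS Upgrade Requirements

Checking available IP addresses and the EKS IAM role

To update the cluster, Amazon EKS needs up to five free IP addresses in the subnets specified when the cluster was created. The following commands check the free IP count of each cluster subnet, and then confirm that the cluster IAM role is available and has the correct assume role policy in the account.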

aws ec2 describe-subnets --subnet-ids \

$(aws eks describe-cluster --name ${CLUSTER_NAME} \

--query 'cluster.resourcesVpcConfig.subnetIds' \

--output text) \

--query 'Subnets[*].[SubnetId,AvailabilityZone,AvailableIpAddressCount]' \

--output table

----------------------------------------------------

| DescribeSubnets |

+---------------------------+--------------+-------+

| subnet-0aeb12f673d69f7c5 | us-west-2c | 4038 |

| subnet-047ab61ad85c50486 | us-west-2b | 4069 |

| subnet-01bbd11a892aec6ee | us-west-2a | 4030 |

+---------------------------+--------------+-------+

ROLE_ARN=$(aws eks describe-cluster --name ${CLUSTER_NAME} \

--query 'cluster.roleArn' --output text)

aws iam get-role --role-name ${ROLE_ARN##*/} \

--query 'Role.AssumeRolePolicyDocument' | jq

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "EKSClusterAssumeRole",

"Effect": "Allow",

"Principal": {

"Service": "eks.amazonaws.com"

},

"Action": [

"sts:TagSession",

"sts:AssumeRole"

]

}

]

}

aws iam get-role --role-name ${ROLE_ARN##*/} | jq

...
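The two checks above can also be expressed as simple predicates. The following is a minimal sketch, assuming the subnet rows and the trust policy have already been fetched with the commands above. The helper names subnets_short_on_ips and trusts_eks_service are hypothetical, and the data is copied from the output shown in this section.

```python
MIN_FREE_IPS = 5  # EKS needs up to five free IPs in the cluster subnets for an update


def subnets_short_on_ips(subnets, minimum=MIN_FREE_IPS):
    """Return the IDs of subnets whose free IP count is below the minimum."""
    return [subnet_id for subnet_id, _az, free in subnets if free < minimum]


def trusts_eks_service(policy):
    """True if an Allow statement lets eks.amazonaws.com call sts:AssumeRole."""
    for stmt in policy.get("Statement", []):
        if stmt.get("Effect") != "Allow":
            continue
        service = stmt.get("Principal", {}).get("Service", [])
        actions = stmt.get("Action", [])
        # Both fields may be a single string or a list in IAM policy JSON.
        services = [service] if isinstance(service, str) else service
        actions = [actions] if isinstance(actions, str) else actions
        if "eks.amazonaws.com" in services and "sts:AssumeRole" in actions:
            return True
    return False


# Values copied from the command output above.
SUBNETS = [
    ("subnet-0aeb12f673d69f7c5", "us-west-2c", 4038),
    ("subnet-047ab61ad85c50486", "us-west-2b", 4069),
    ("subnet-01bbd11a892aec6ee", "us-west-2a", 4030),
]
POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "EKSClusterAssumeRole",
            "Effect": "Allow",
            "Principal": {"Service": "eks.amazonaws.com"},
            "Action": ["sts:TagSession", "sts:AssumeRole"],
        }
    ],
}

if __name__ == "__main__":
    print("subnets short on IPs:", subnets_short_on_ips(SUBNETS))
    print("role trusts EKS:", trusts_eks_service(POLICY))
```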

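2.10 Preparing for the Cluster Upgrade - High Availability (HA) Strategy for EKS Cluster Upgrades

This section covers configuring PodDisruptionBudget (PDB) and TopologySpreadConstraints to keep workloads available during a data plane upgrade in EKS.

2.10.1 Purpose and Background

When the data plane is upgraded in EKS, keeping workloads available is critical. Pods concentrated on a single node or in a single Availability Zone (AZ) can be interrupted during the upgrade, so the following settings are needed:

- PodDisruptionBudget (PDB): guarantees a minimum level of availability during voluntary disruptions such as node draining

- TopologySpreadConstraints: spreads the workload across nodes and Availability Zones to minimize risk

For business-critical workloads in particular, these settings are essential for zero-downtime operation.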
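To make the spreading idea concrete, here is a simplified sketch of the skew rule behind TopologySpreadConstraints: a zone is eligible for one more Pod only if its count after placement, minus the lowest count across zones, stays within maxSkew. This is an illustration rather than the scheduler's actual code; the function name zones_allowed and the Pod counts are hypothetical.

```python
def zones_allowed(pods_per_zone, max_skew=1):
    """Zones where one more Pod keeps (zone count + 1 - global minimum) <= max_skew."""
    global_min = min(pods_per_zone.values())
    return sorted(
        zone for zone, count in pods_per_zone.items()
        if count + 1 - global_min <= max_skew
    )


if __name__ == "__main__":
    # Hypothetical distribution of matching Pods across three zones.
    current = {"us-west-2a": 2, "us-west-2b": 1, "us-west-2c": 1}
    print(zones_allowed(current))  # us-west-2a is excluded: 2 + 1 - 1 = 2 > 1
```

The real scheduler also takes node eligibility and the whenUnsatisfiable setting into account, which this sketch ignores.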

2.10.2 Checking the Current State and Preparing

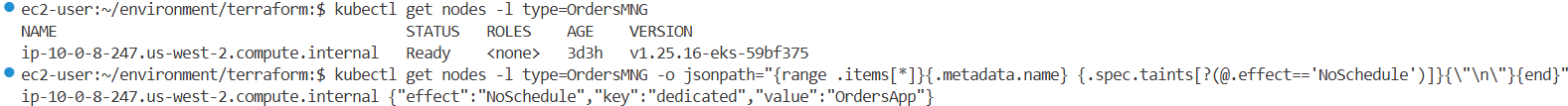

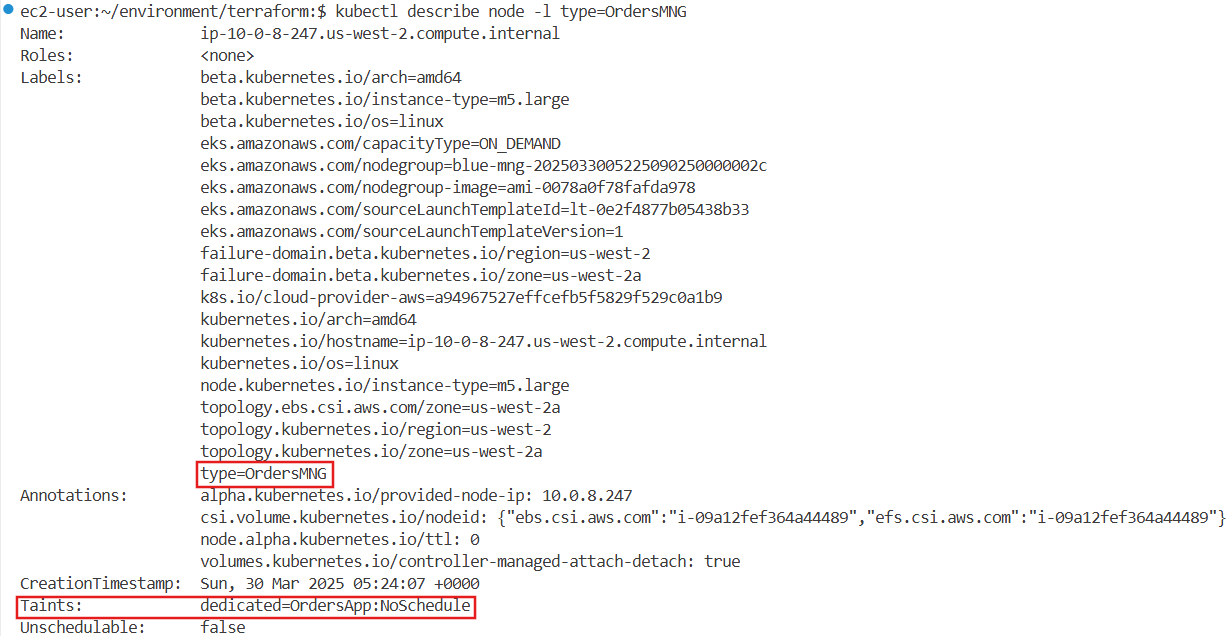

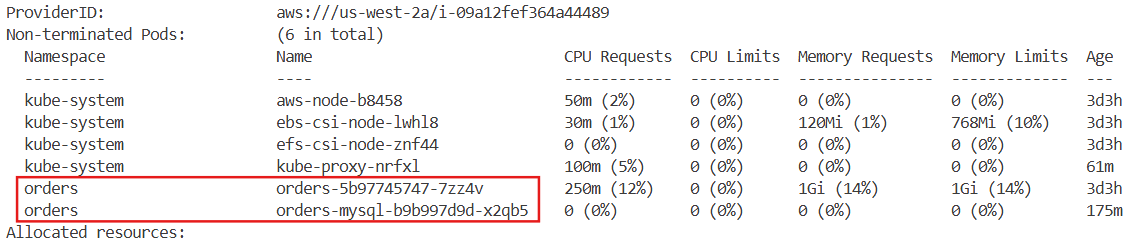

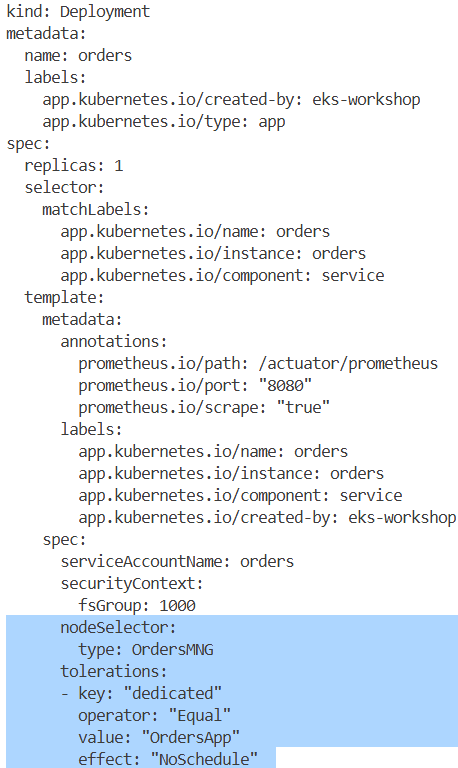

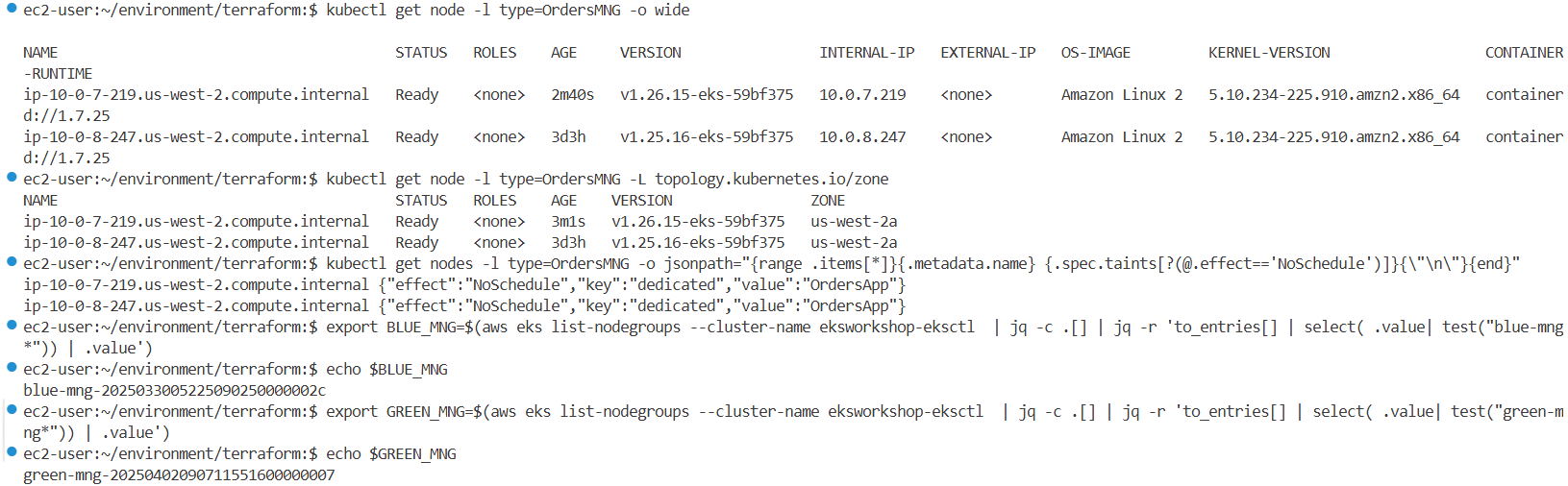

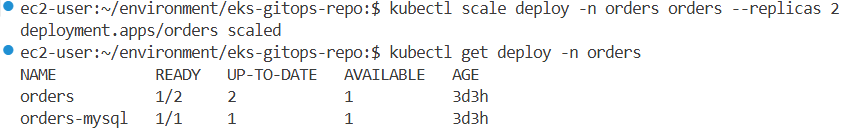

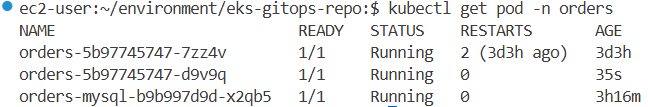

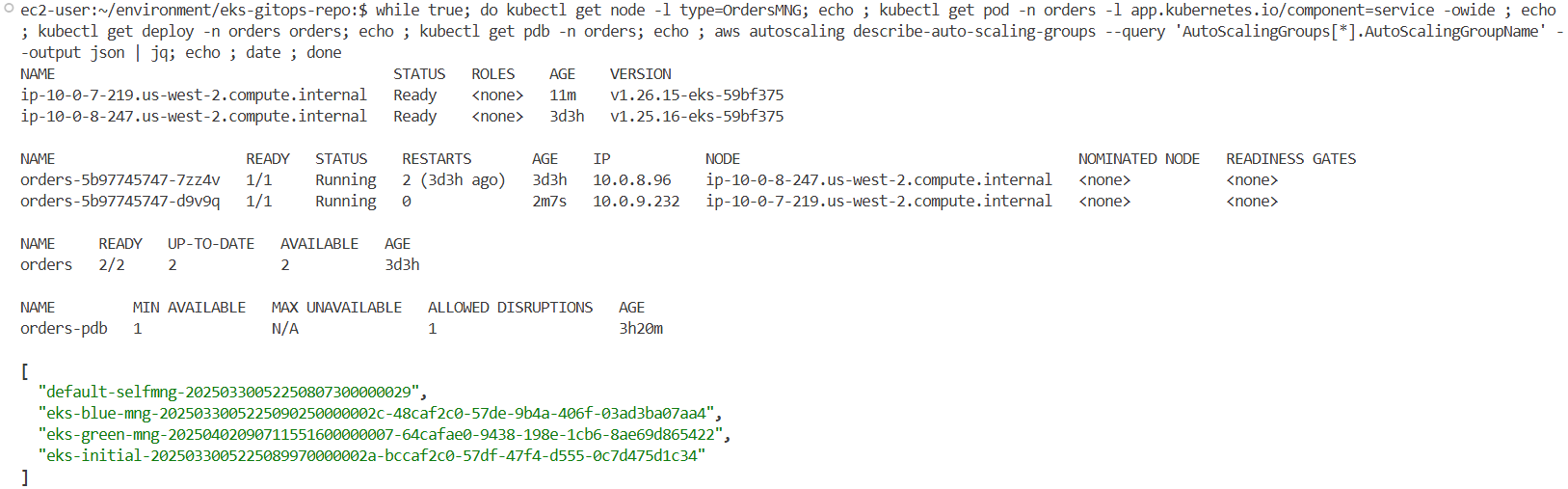

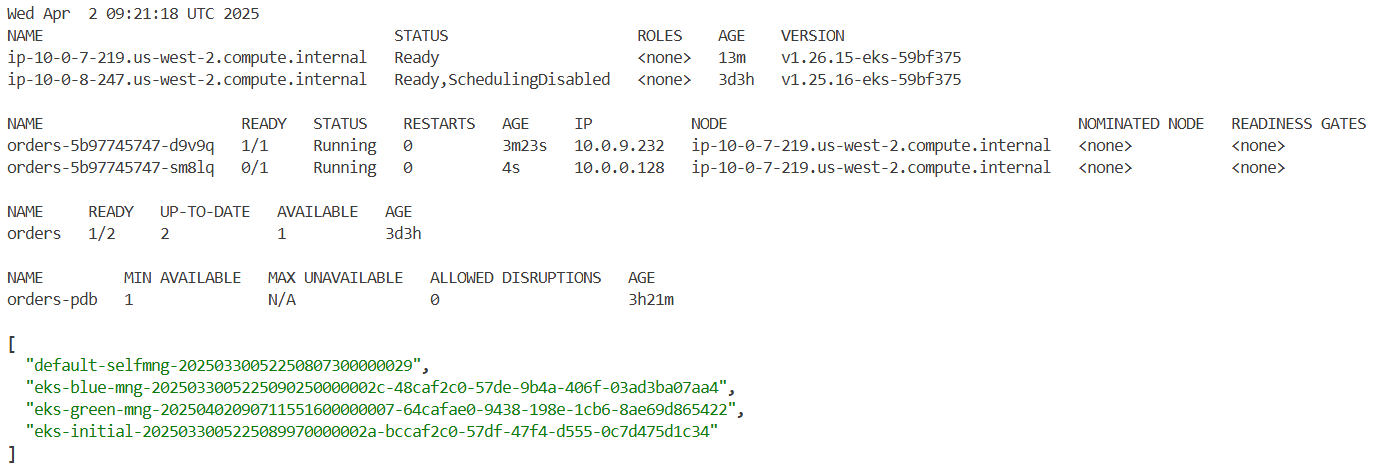

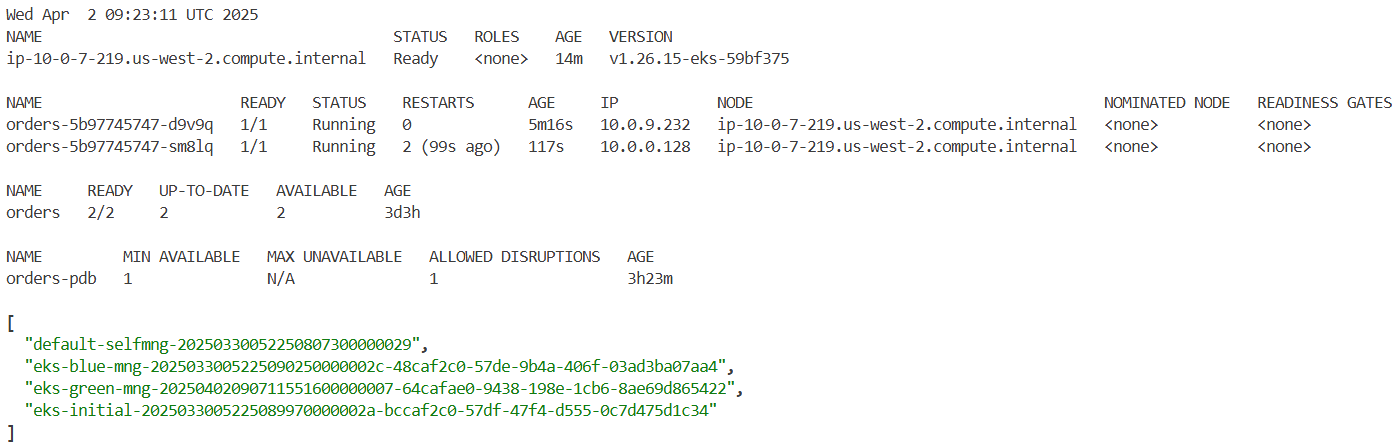

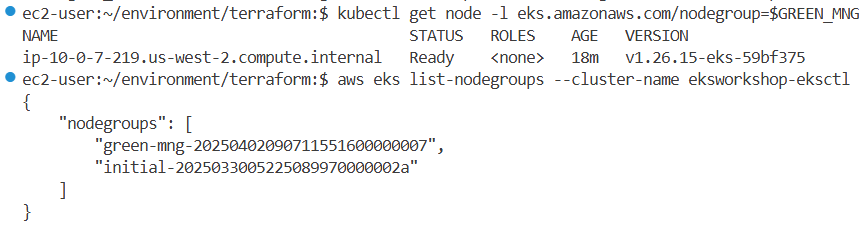

워크로드 중 orders 서비스가 있는 orders 네임스페이스를 대상으로 가용성 보장 구성을 적용합니다.

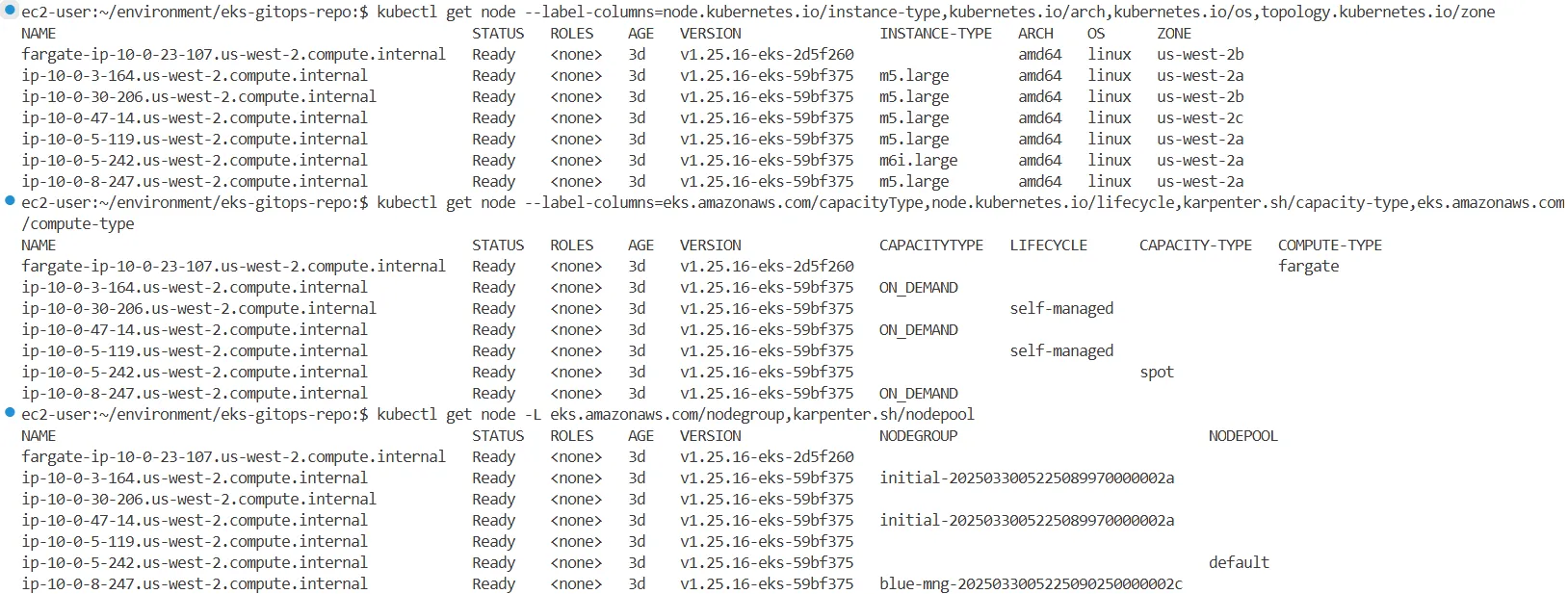

노드에 할당된 라벨, 인스턴스 타입, 가용영역, taint 등을 확인하여 분산 상태를 파악합니다.

#

kubectl get node --label-columns=node.kubernetes.io/instance-type,kubernetes.io/arch,kubernetes.io/os,topology.kubernetes.io/zone

kubectl get node --label-columns=eks.amazonaws.com/capacityType,node.kubernetes.io/lifecycle,karpenter.sh/capacity-type,eks.amazonaws.com/compute-type

kubectl get node -L eks.amazonaws.com/nodegroup,karpenter.sh/nodepool

kubectl get nodes -o custom-columns='NODE:.metadata.name,TAINTS:.spec.taints[*].key,VALUES:.spec.taints[*].value,EFFECTS:.spec.taints[*].effect'

kubectl get nodes -o json | jq '.items[] | {name: .metadata.name, labels: .metadata.labels}'

#

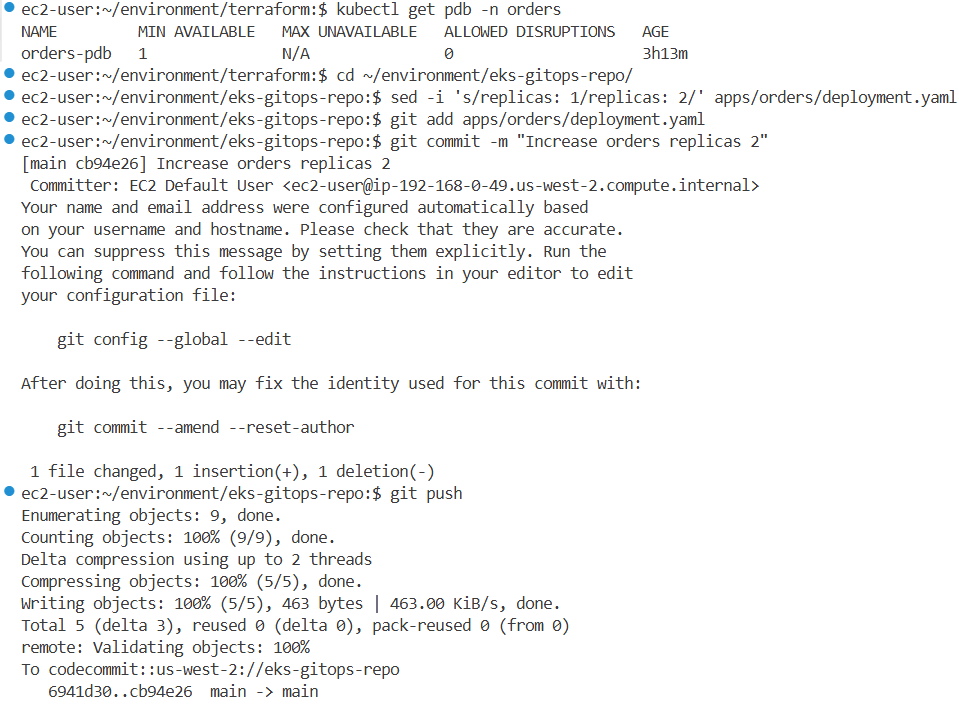

kubectl get-all -n orders

kubectl get pdb -n orders

kubectl get pod -n orders --show-labels

NAME READY STATUS RESTARTS AGE LABELS

orders-5b97745747-mxg72 1/1 Running 2 (5h48m ago) 5h48m app.kubernetes.io/component=service,app.kubernetes.io/created-by=eks-workshop,app.kubernetes.io/instance=orders,app.kubernetes.io/name=orders,pod-template-hash=5b97745747

orders-mysql-b9b997d9d-2p8pn 1/1 Running 0 5h48m app.kubernetes.io/component=mysql,app.kubernetes.io/created-by=eks-workshop,app.kubernetes.io/instance=orders,app.kubernetes.io/name=orders,app.kubernetes.io/team=database,pod-template-hash=b9b997d9d

# There is no pdb.yaml under orders

tree eks-gitops-repo/apps/orders/

eks-gitops-repo/apps/orders/

├── configMap.yaml

├── deployment-mysql.yaml

├── deployment.yaml

├── kustomization.yaml

├── namespace.yaml

├── pvc.yaml

├── secrets.yaml

├── service-mysql.yaml

├── service.yaml

└── serviceAccount.yaml

→ The orders namespace currently has no PDB, and two Pods are deployed: orders and orders-mysql.

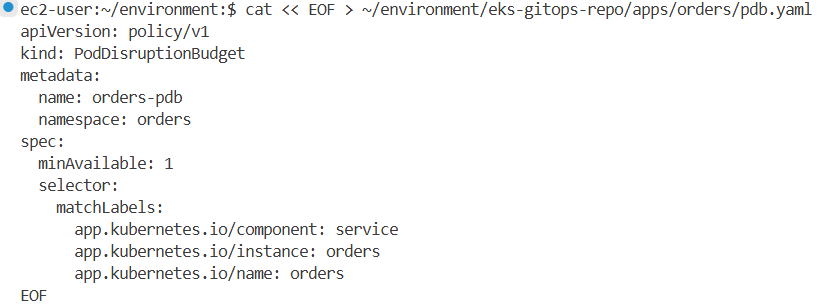

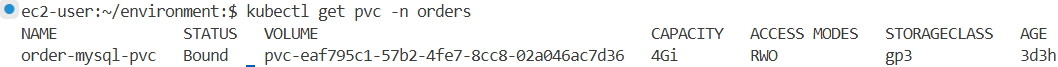

2.10.3 Configuring the PodDisruptionBudget

A PDB is configured to protect the availability of the orders service. The settings below keep at least one orders service Pod available during voluntary disruptions.

# Write pdb.yaml for orders

cat << EOF > ~/environment/eks-gitops-repo/apps/orders/pdb.yaml
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: orders-pdb
  namespace: orders
spec:
  minAvailable: 1
  selector:
    matchLabels:
      app.kubernetes.io/component: service
      app.kubernetes.io/instance: orders
      app.kubernetes.io/name: orders
EOF

# Update kustomization.yaml to add the resource, then commit the change to the CodeCommit repo

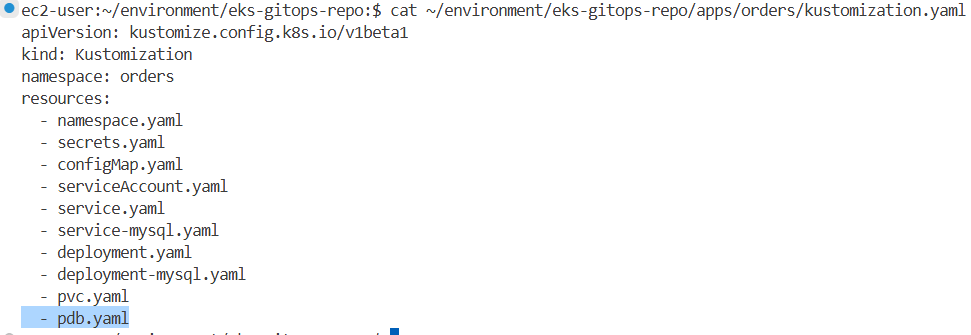

cat ~/environment/eks-gitops-repo/apps/orders/kustomization.yaml

echo " - pdb.yaml" >> ~/environment/eks-gitops-repo/apps/orders/kustomization.yaml

cat ~/environment/eks-gitops-repo/apps/orders/kustomization.yaml

apiVersion: kustomize.config.k8s.io/v1beta1

kind: Kustomization

namespace: orders

resources:

- namespace.yaml

- secrets.yaml

- configMap.yaml

- serviceAccount.yaml

- service.yaml

- service-mysql.yaml

- deployment.yaml

- deployment-mysql.yaml

- pvc.yaml

- pdb.yaml

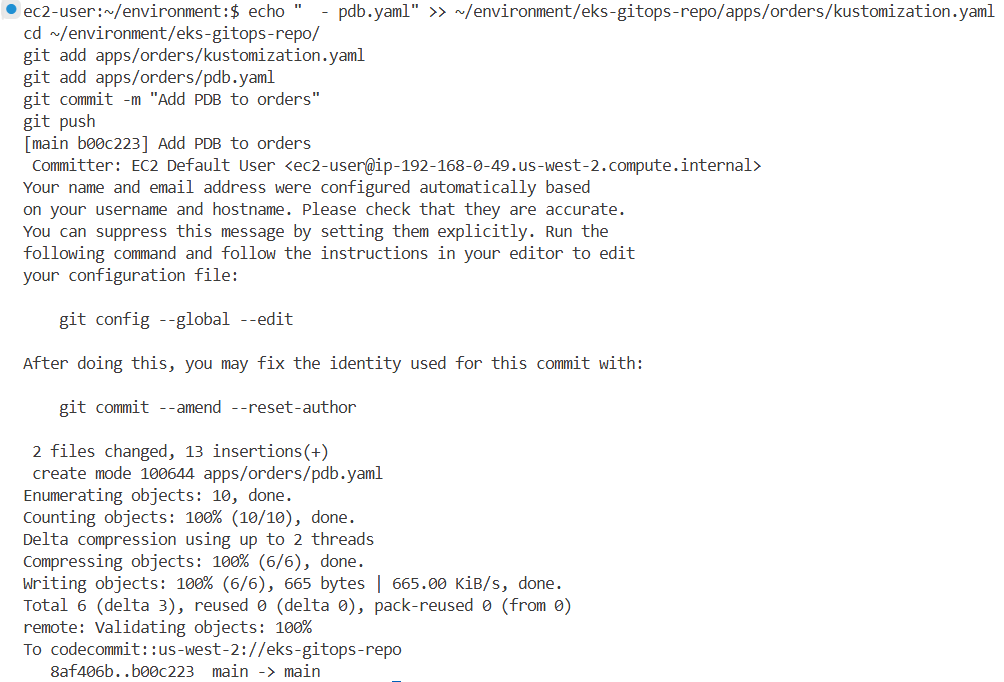

# Git commit and push

cd ~/environment/eks-gitops-repo/

git add apps/orders/kustomization.yaml

git add apps/orders/pdb.yaml

git commit -m "Add PDB to orders"

git push

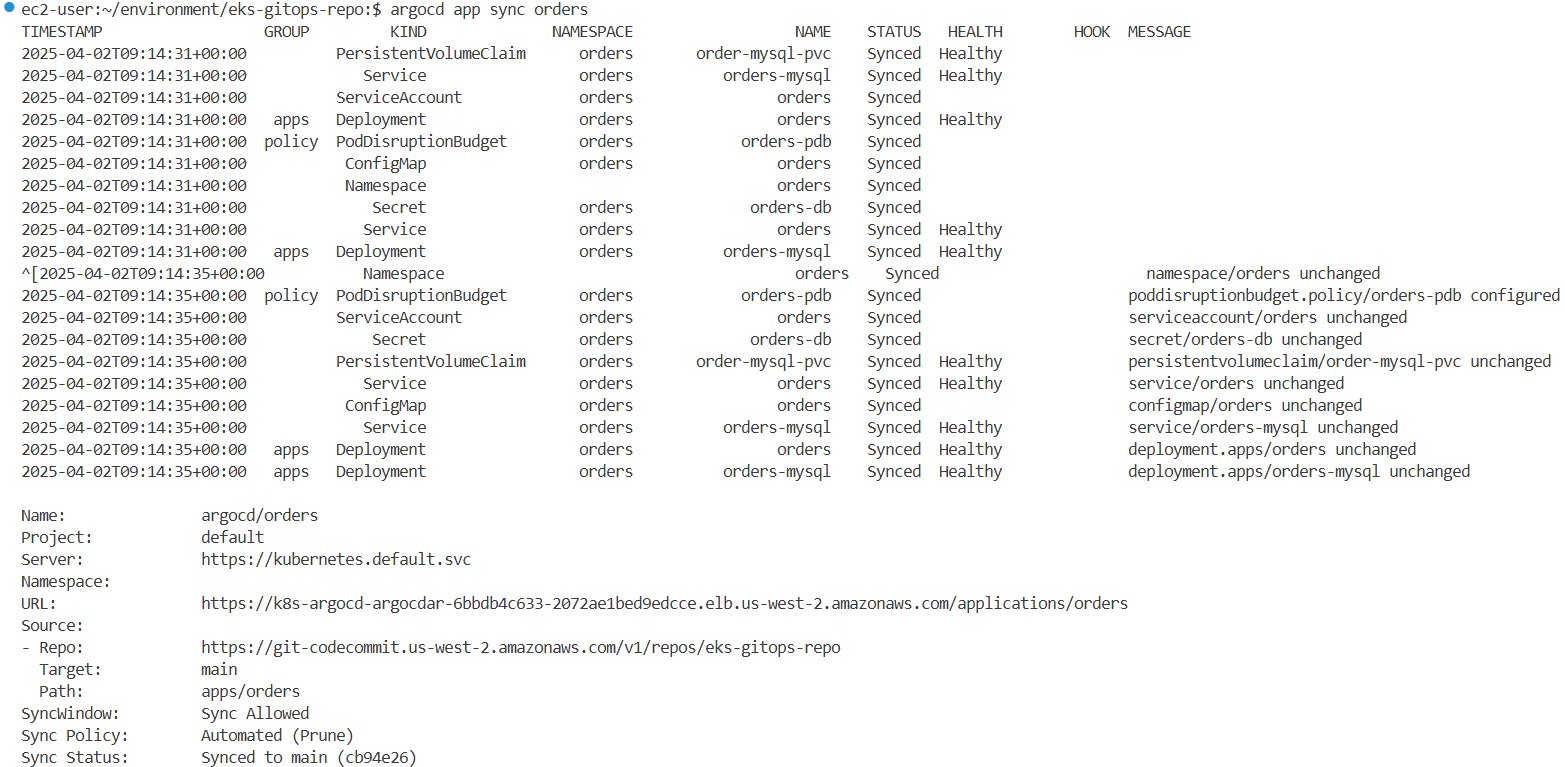

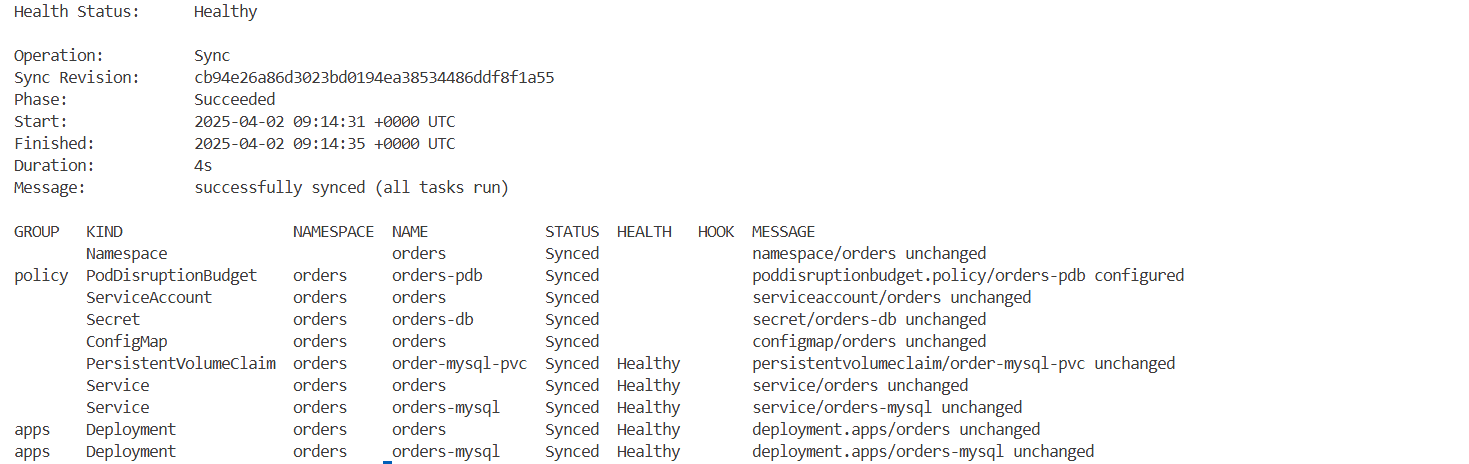

# Finally, sync the argocd application using the ArgoCD UI or the command below.

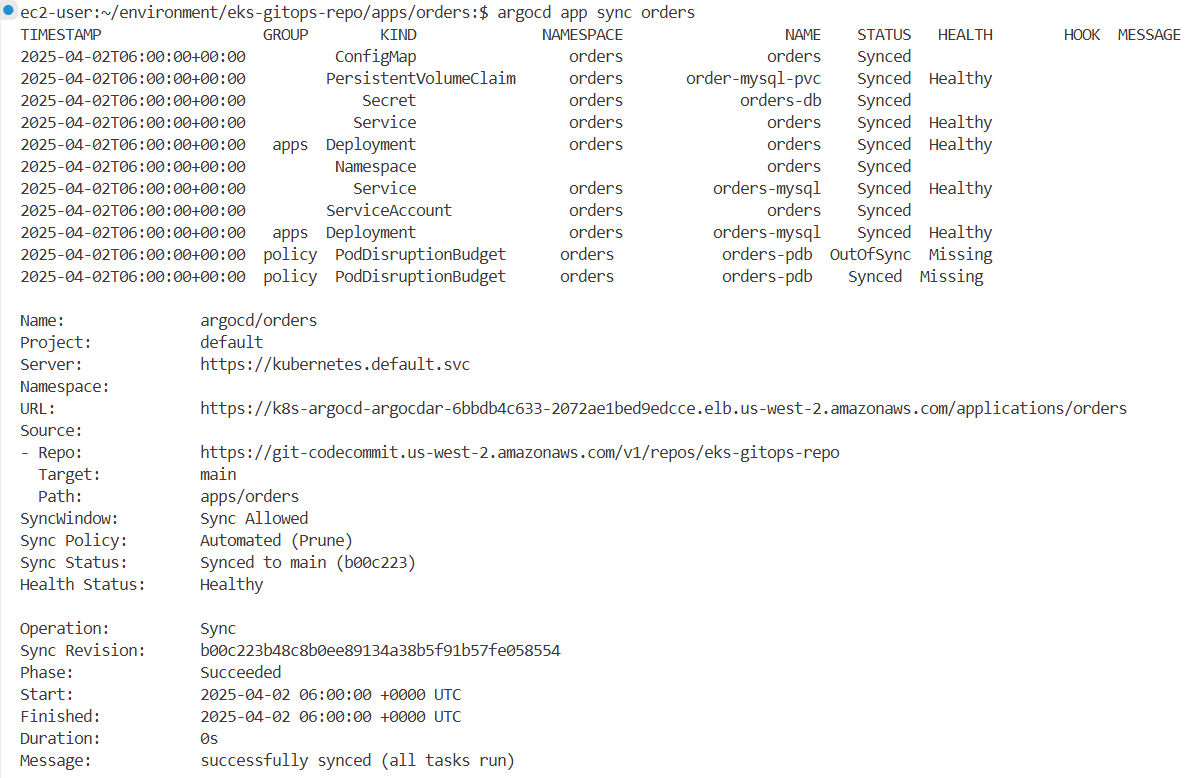

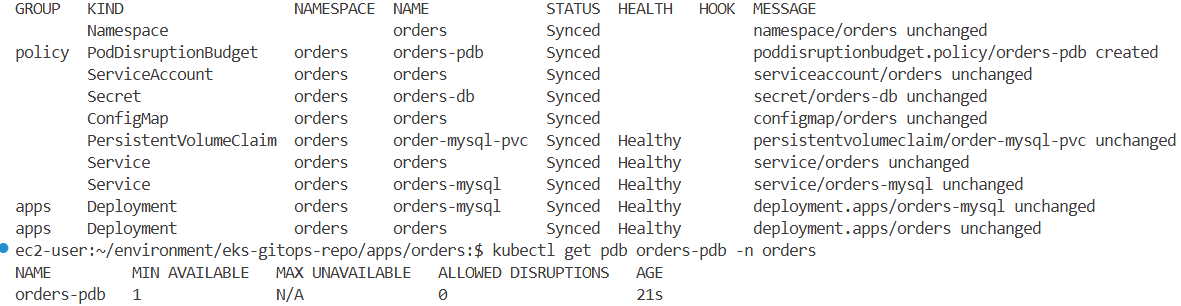

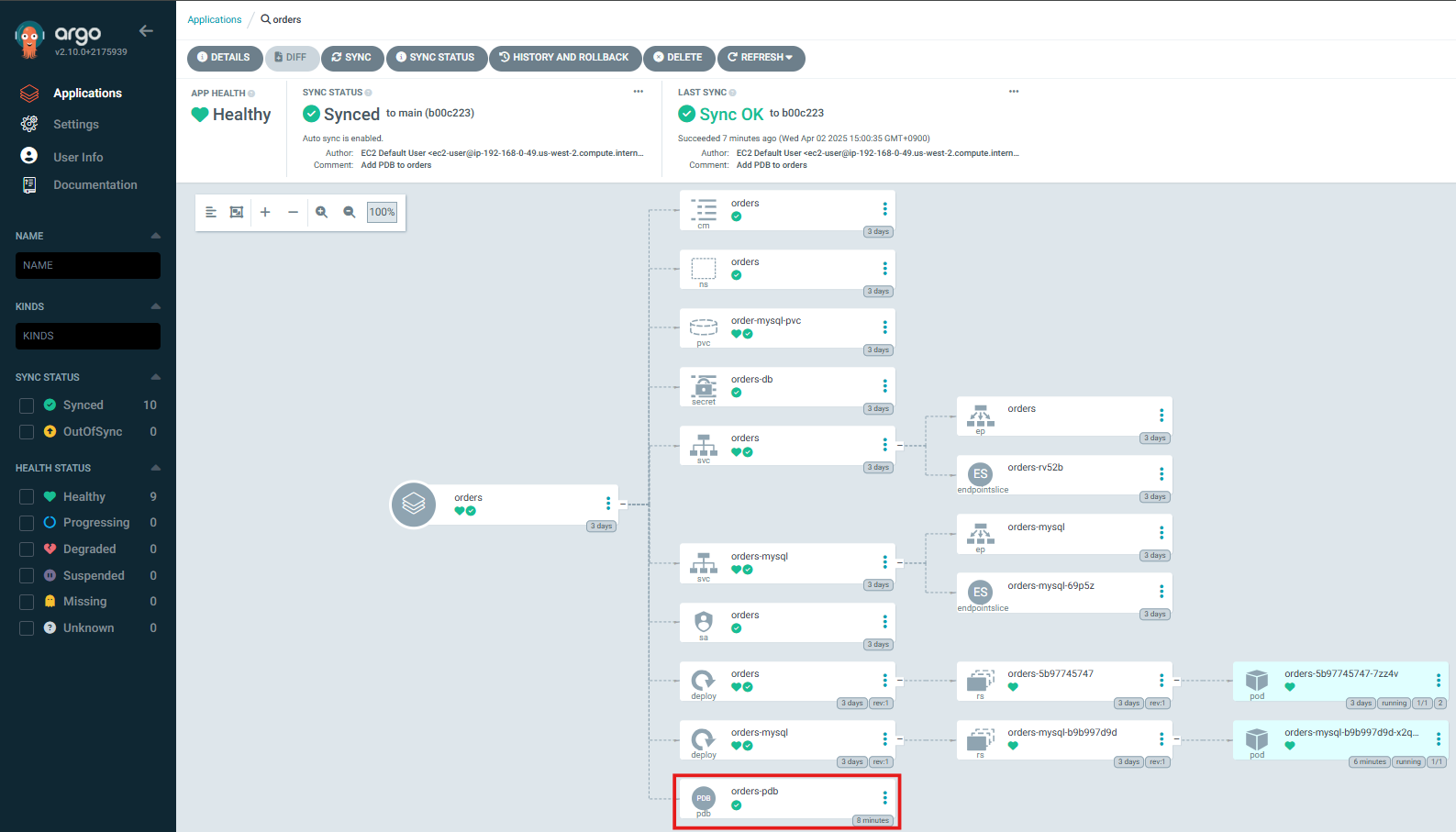

argocd app sync orders

...

Sync Revision: c1a355735e446d98f8dc80d74d121a76d82c6f3c

Phase: Succeeded

Start: 2025-03-25 08:35:11 +0000 UTC

Finished: 2025-03-25 08:35:12 +0000 UTC

Duration: 1s

Message: successfully synced (all tasks run)

...

→ The successful sync message confirms that the change has been applied to the cluster.

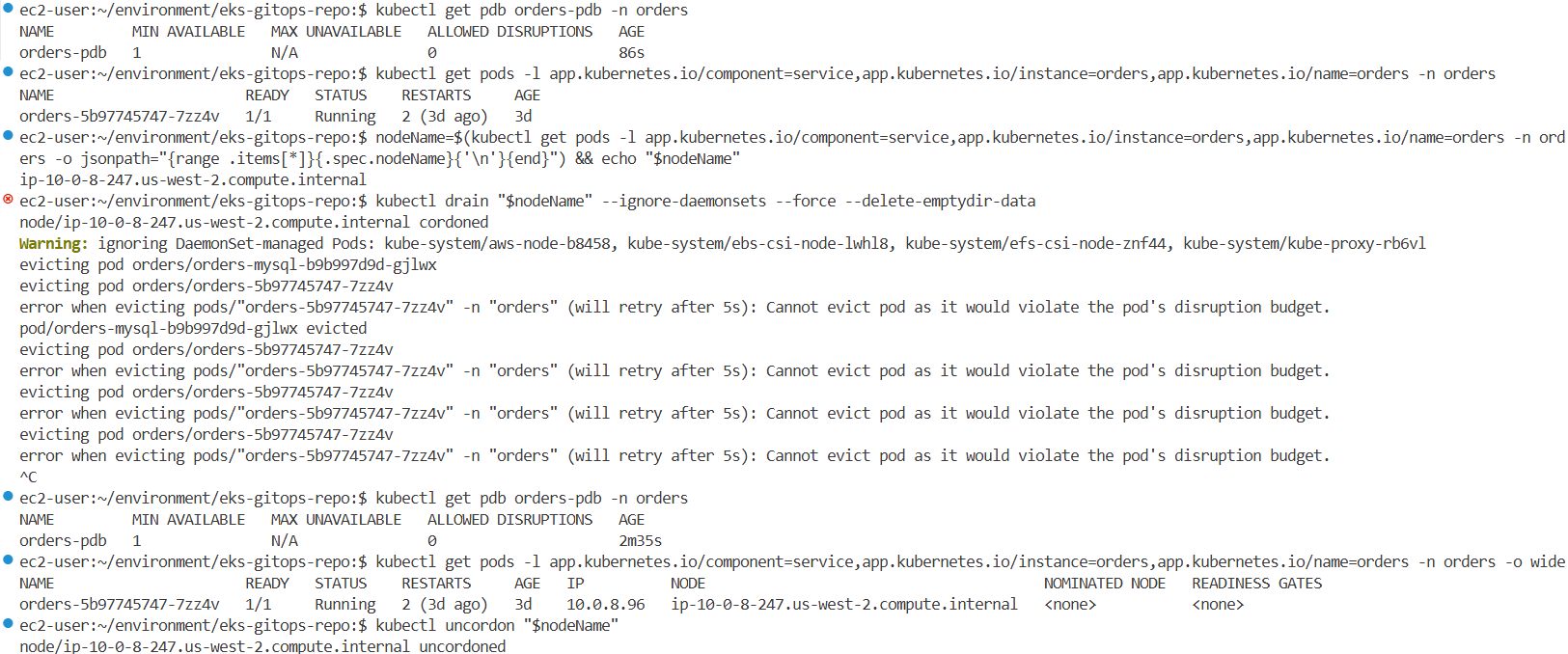

2.10.4 Testing the PDB

# Check the current PDB status to see the number of available Pods and the required minimum availability.

kubectl get pdb orders-pdb -n orders

NAME MIN AVAILABLE MAX UNAVAILABLE ALLOWED DISRUPTIONS AGE

orders-pdb 1 N/A 0 3m13s

# List the Pods managed by the PDB using the labels in the PDB selector.

kubectl get pods -l app.kubernetes.io/component=service,app.kubernetes.io/instance=orders,app.kubernetes.io/name=orders -n orders

NAME READY STATUS RESTARTS AGE

orders-5b97745747-mxg72 1/1 Running 2 (5h57m ago) 5h57m

# Next, extract the name of the node where the Pod is running.

nodeName=$(kubectl get pods -l app.kubernetes.io/component=service,app.kubernetes.io/instance=orders,app.kubernetes.io/name=orders -n orders -o jsonpath="{range .items[*]}{.spec.nodeName}{'\n'}{end}") && echo "$nodeName"

ip-10-0-3-199.us-west-2.compute.internal

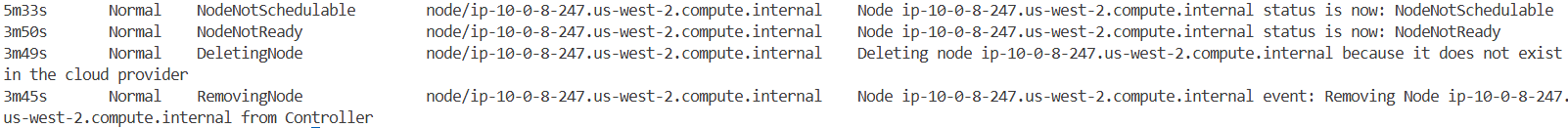

# To test the PDB, simulate a disruption by manually evicting Pods: orders-mysql is evicted, but orders is not.

# drain evicts the Pods on the node and cordons the node.

kubectl drain "$nodeName" --ignore-daemonsets --force --delete-emptydir-data

## Draining attempts to evict every Pod on the node, including those managed by the PDB. Evicting the orders Pod would violate the PDB, so the following error is returned.

error when evicting pods/"orders-5b97745747-j2h8d" -n "orders" (will retry after 5s): Cannot evict pod as it would violate the pod's disruption budget.

evicting pod orders/orders-5b97745747-j2h8d

Press Ctrl + C to stop the command.

#

kubectl get node "$nodeName"

NAME STATUS ROLES AGE VERSION

ip-10-0-3-168.us-west-2.compute.internal Ready,SchedulingDisabled <none> 23h v1.25.16-eks-59bf375

kubectl describe node "$nodeName" | grep -i taint -A2

Taints: dedicated=OrdersApp:NoSchedule

node.kubernetes.io/unschedulable:NoSchedule

Unschedulable: true

# Check the PDB status again to confirm that the minimum availability is still met.

kubectl get pdb orders-pdb -n orders

NAME MIN AVAILABLE MAX UNAVAILABLE ALLOWED DISRUPTIONS AGE

orders-pdb 1 N/A 0 5m30s

# Check the Pod and node status to confirm that the remaining Pod is still running and meets the minimum availability requirement.

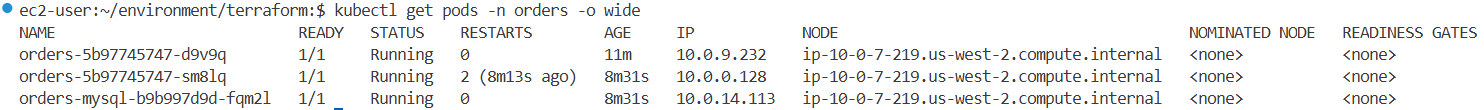

kubectl get pods -l app.kubernetes.io/component=service,app.kubernetes.io/instance=orders,app.kubernetes.io/name=orders -n orders -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

orders-5b97745747-mxg72 1/1 Running 2 (5h59m ago) 6h 10.0.5.219 ip-10-0-3-199.us-west-2.compute.internal <none> <none>

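# Monitor the application to confirm that it keeps working normally and meets its availability requirement during the disruption.

# After the test, uncordon the node so that new Pods can be scheduled on it.

kubectl uncordon "$nodeName"

Summary of the test:

- The node is drained to test the PDB behavior.

- Result: because of the PodDisruptionBudget, the orders Pod is not evicted and the drain reports an error.

- The node status changes to SchedulingDisabled and the unschedulable taint is set.

- After the drain test, kubectl uncordon returns the node to normal scheduling.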
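The ALLOWED DISRUPTIONS value of 0 in the output above follows from simple arithmetic: the orders Deployment runs a single healthy Pod and the PDB requires minAvailable: 1. The sketch below shows the calculation for an integer minAvailable; allowed_disruptions is a hypothetical helper, not a Kubernetes API.

```python
def allowed_disruptions(healthy_pods, min_available):
    """Voluntary evictions a PDB with an integer minAvailable still permits."""
    return max(0, healthy_pods - min_available)


if __name__ == "__main__":
    # One healthy orders Pod and minAvailable: 1, as in the test above.
    print(allowed_disruptions(healthy_pods=1, min_available=1))
    # With a second replica (an assumption, not tested here) one eviction would be allowed.
    print(allowed_disruptions(healthy_pods=2, min_available=1))
```

This suggests that a single-replica workload with minAvailable: 1 blocks node drains until the replica count is raised or the PDB is relaxed.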

2.11 In-place Cluster Upgrade - Control Plane Upgrade

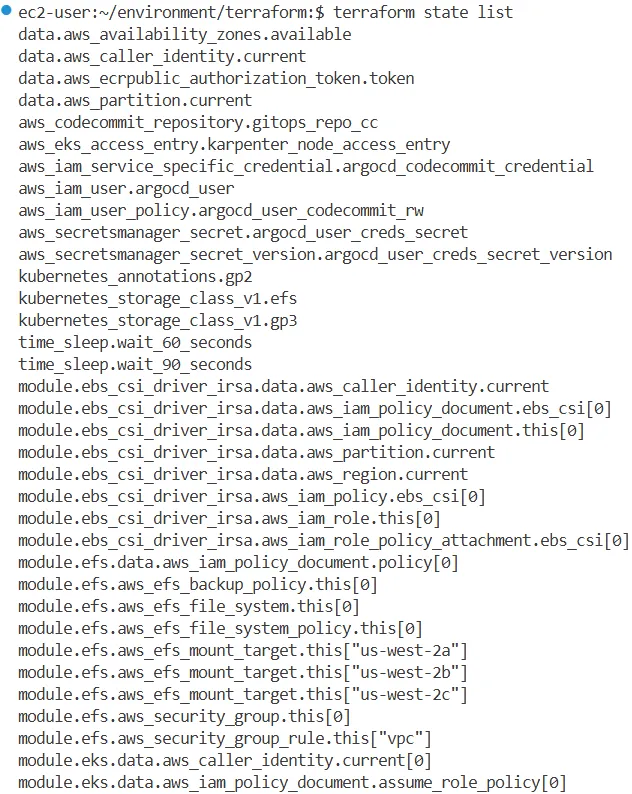

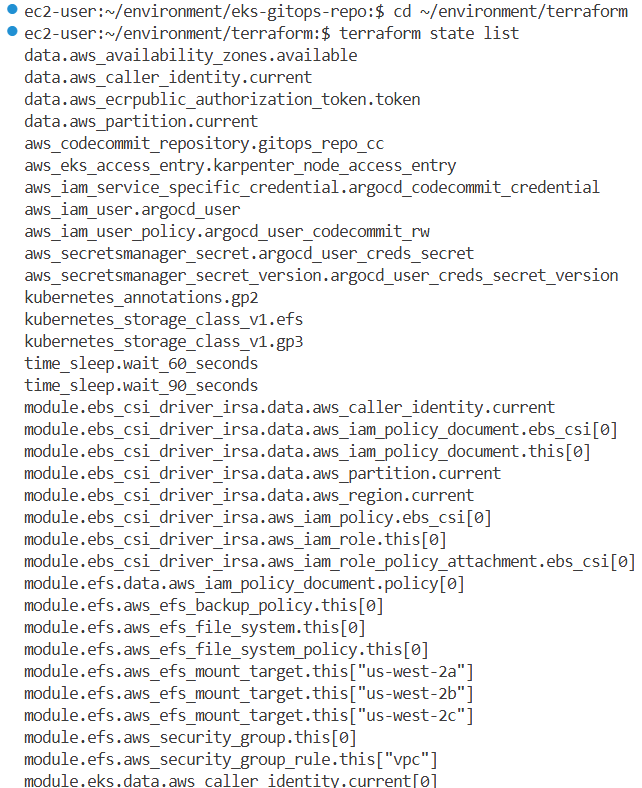

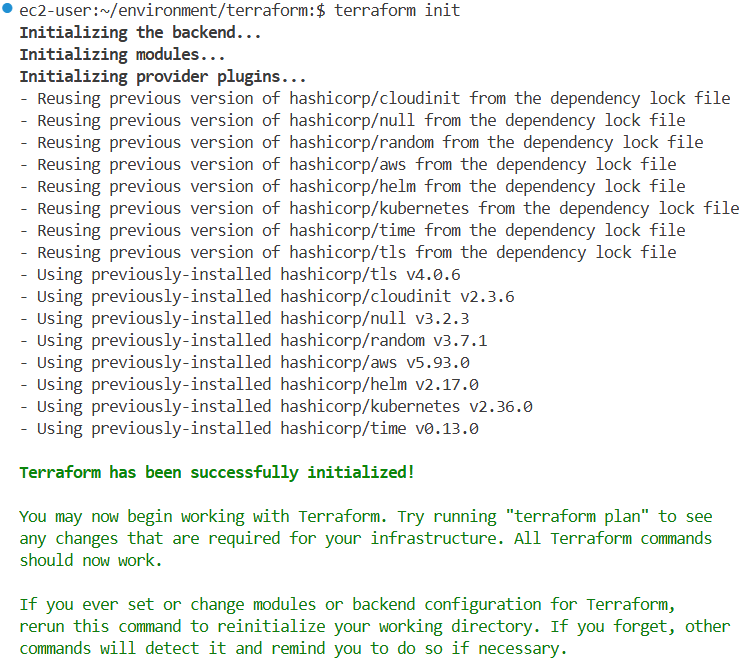

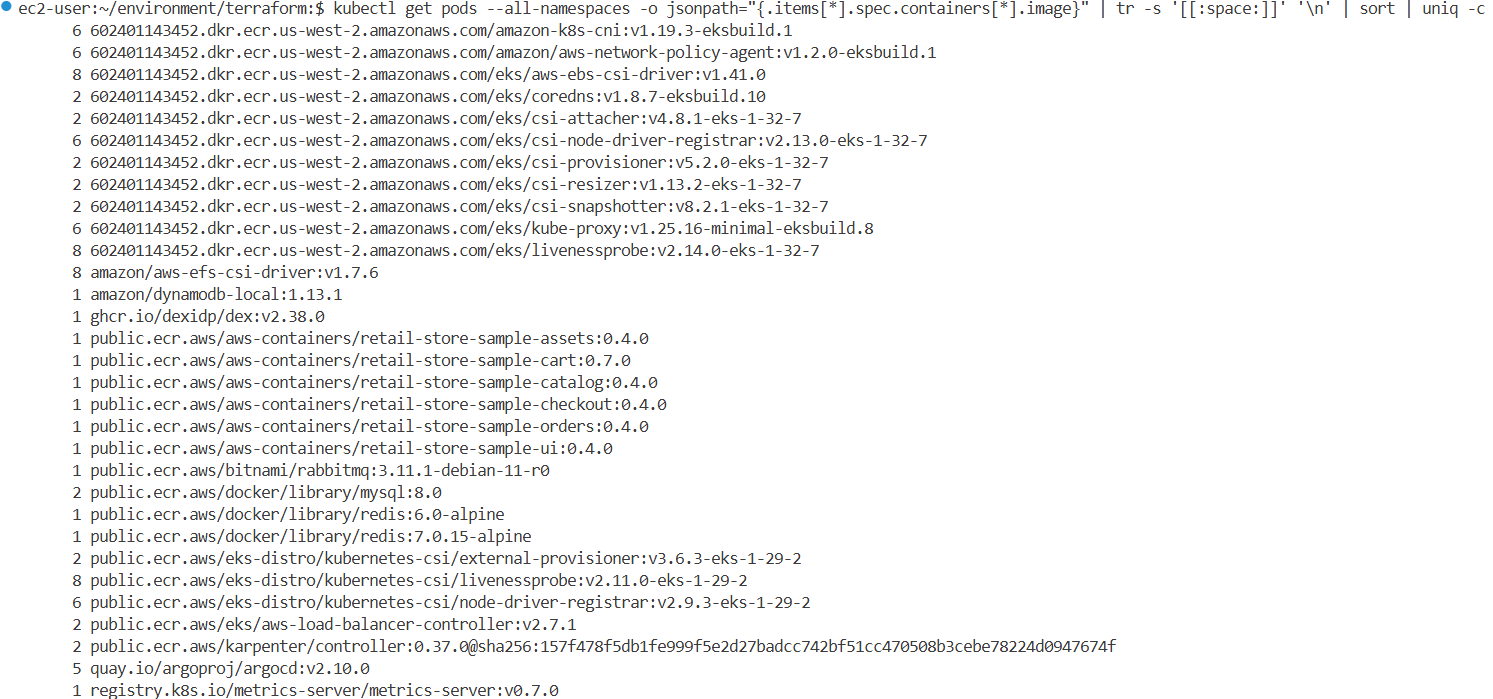

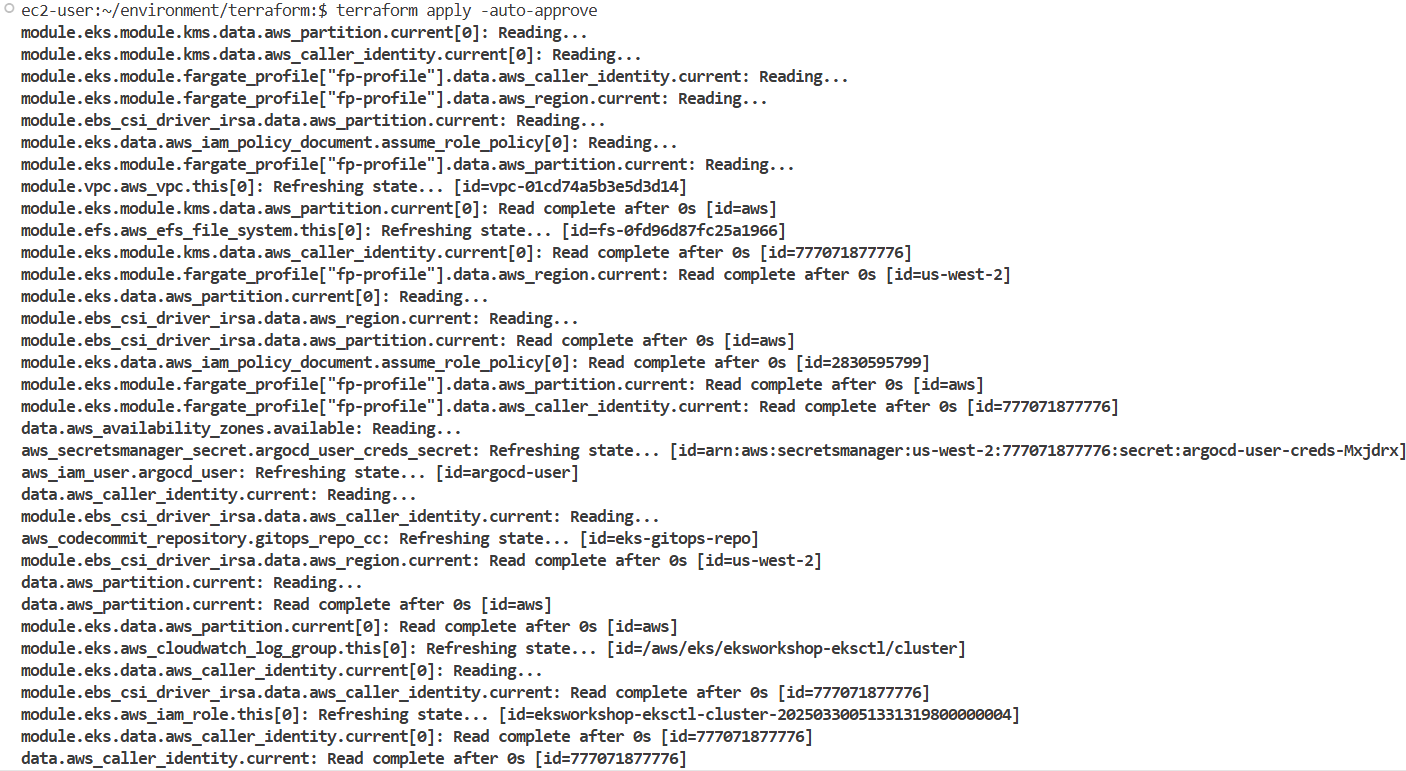

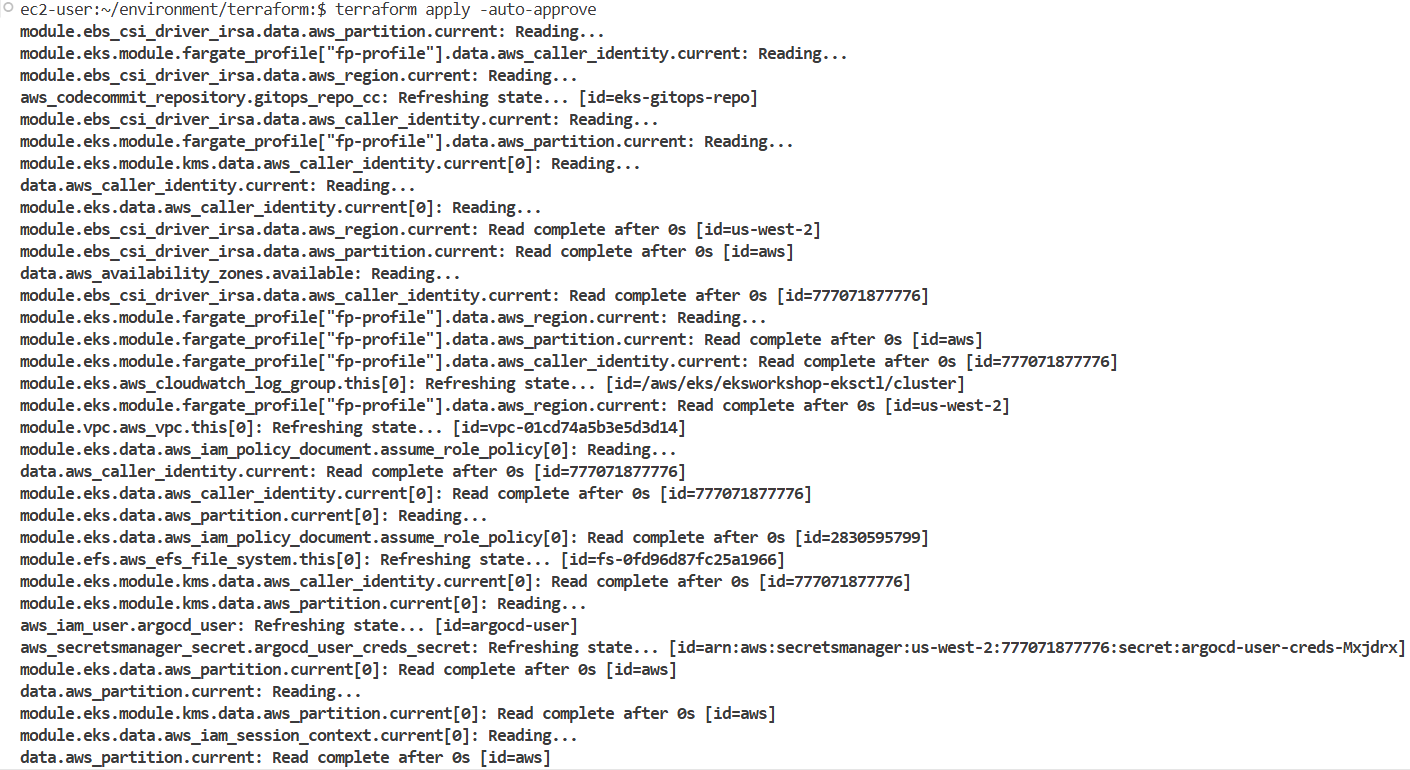

Of the available methods (eksctl, the AWS Management Console, the AWS CLI, and Terraform), this lab uses Terraform to upgrade the cluster. The EKS cluster for this lab was already provisioned with Terraform. Run terraform init, plan, and apply to refresh the resource state.

cd ~/environment/terraform

terraform state list

Setting up monitoring

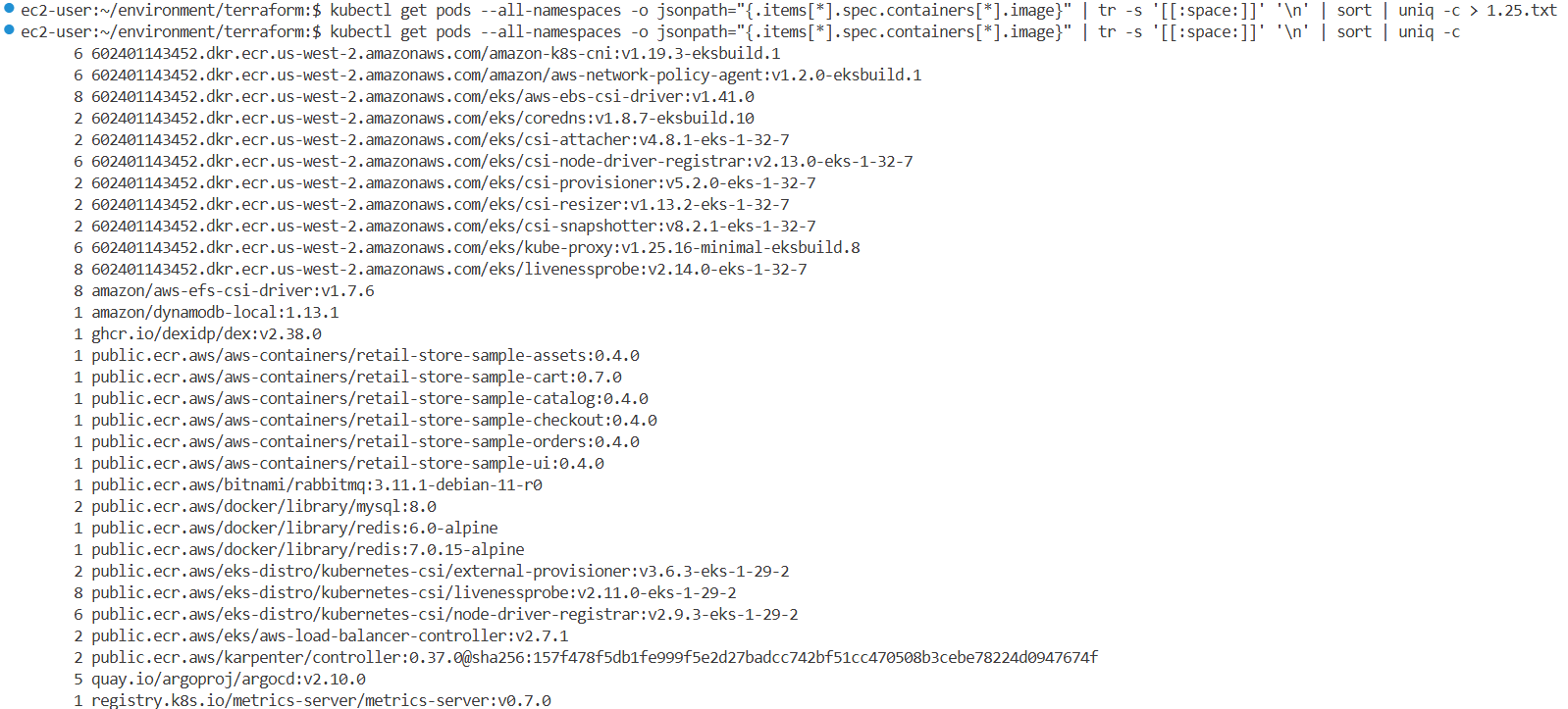

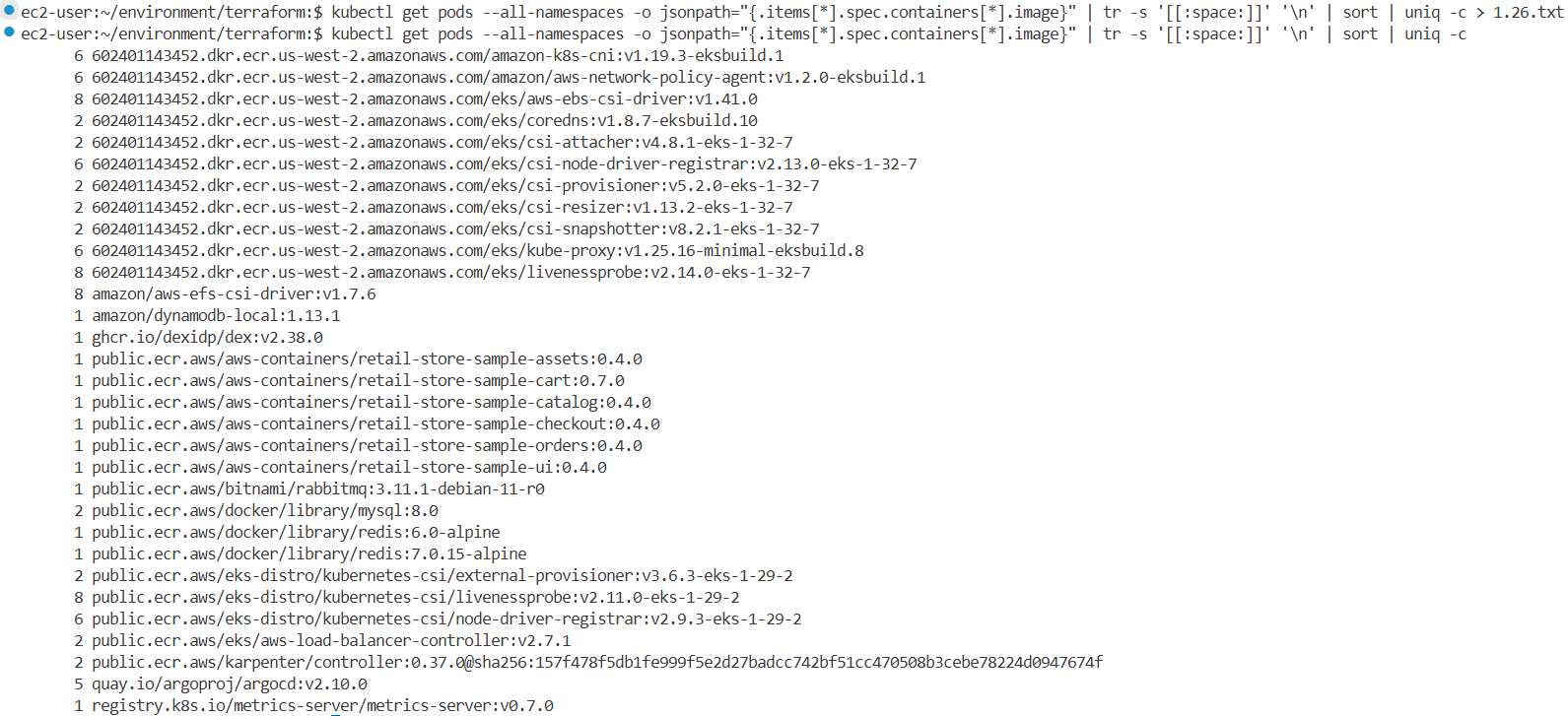

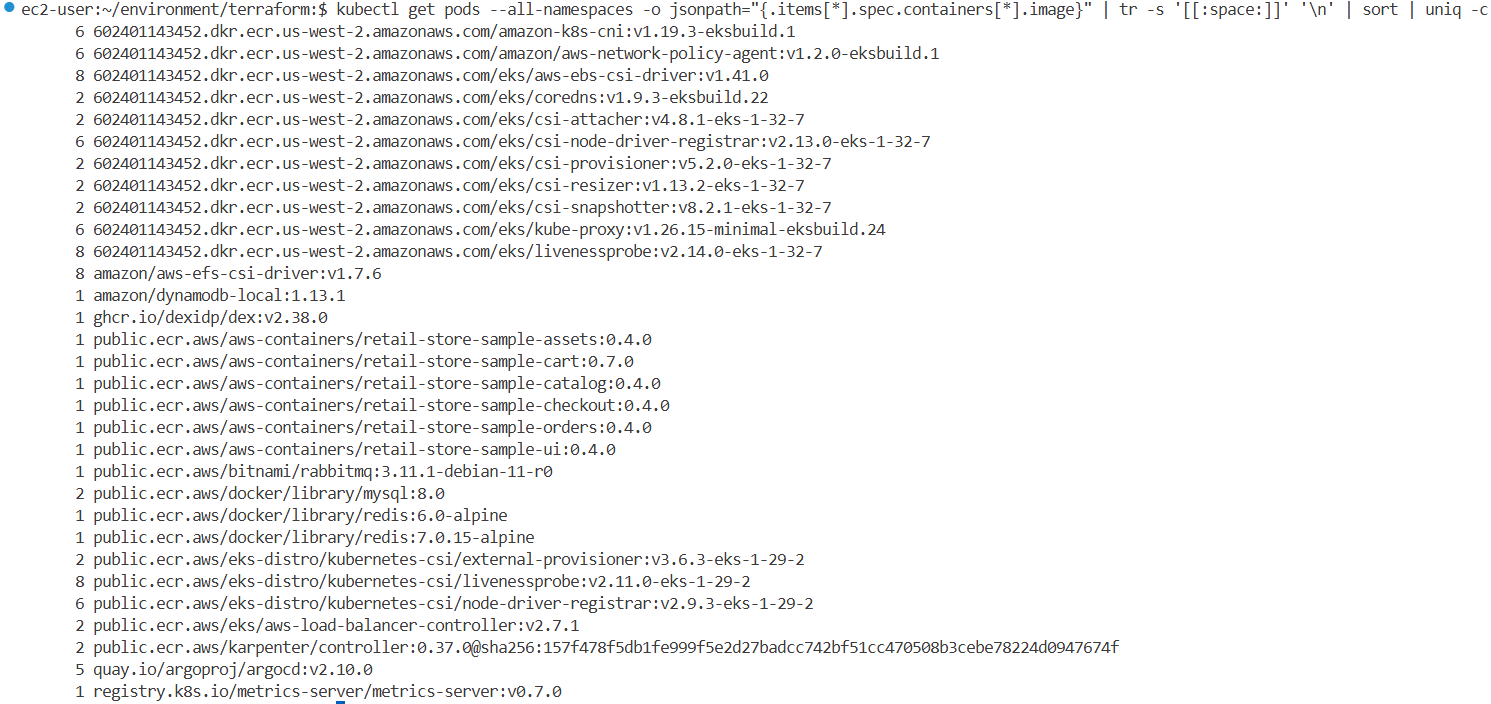

# Check the current versions and save them to a file

kubectl get pods --all-namespaces -o jsonpath="{.items[*].spec.containers[*].image}" | tr -s '[[:space:]]' '\n' | sort | uniq -c > 1.25.txt

kubectl get pods --all-namespaces -o jsonpath="{.items[*].spec.containers[*].image}" | tr -s '[[:space:]]' '\n' | sort | uniq -c

6 602401143452.dkr.ecr.us-west-2.amazonaws.com/amazon-k8s-cni:v1.19.3-eksbuild.1

6 602401143452.dkr.ecr.us-west-2.amazonaws.com/amazon/aws-network-policy-agent:v1.2.0-eksbuild.1

8 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/aws-ebs-csi-driver:v1.41.0

2 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/coredns:v1.8.7-eksbuild.10

2 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/csi-attacher:v4.8.1-eks-1-32-7

6 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/csi-node-driver-registrar:v2.13.0-eks-1-32-7

2 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/csi-provisioner:v5.2.0-eks-1-32-7

2 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/csi-resizer:v1.13.2-eks-1-32-7

2 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/csi-snapshotter:v8.2.1-eks-1-32-7

6 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/kube-proxy:v1.25.16-minimal-eksbuild.8

8 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/livenessprobe:v2.14.0-eks-1-32-7

8 amazon/aws-efs-csi-driver:v1.7.6

1 amazon/dynamodb-local:1.13.1

1 ghcr.io/dexidp/dex:v2.38.0

1 hjacobs/kube-ops-view:20.4.0

1 public.ecr.aws/aws-containers/retail-store-sample-assets:0.4.0

1 public.ecr.aws/aws-containers/retail-store-sample-cart:0.7.0

1 public.ecr.aws/aws-containers/retail-store-sample-catalog:0.4.0

1 public.ecr.aws/aws-containers/retail-store-sample-checkout:0.4.0

1 public.ecr.aws/aws-containers/retail-store-sample-orders:0.4.0

1 public.ecr.aws/aws-containers/retail-store-sample-ui:0.4.0

1 public.ecr.aws/bitnami/rabbitmq:3.11.1-debian-11-r0

2 public.ecr.aws/docker/library/mysql:8.0

1 public.ecr.aws/docker/library/redis:6.0-alpine

1 public.ecr.aws/docker/library/redis:7.0.15-alpine

2 public.ecr.aws/eks-distro/kubernetes-csi/external-provisioner:v3.6.3-eks-1-29-2

8 public.ecr.aws/eks-distro/kubernetes-csi/livenessprobe:v2.11.0-eks-1-29-2

6 public.ecr.aws/eks-distro/kubernetes-csi/node-driver-registrar:v2.9.3-eks-1-29-2

2 public.ecr.aws/eks/aws-load-balancer-controller:v2.7.1

2 public.ecr.aws/karpenter/controller:0.37.0@sha256:157f478f5db1fe999f5e2d27badcc742bf51cc470508b3cebe78224d0947674f

5 quay.io/argoproj/argocd:v2.10.0

1 registry.k8s.io/metrics-server/metrics-server:v0.7.0

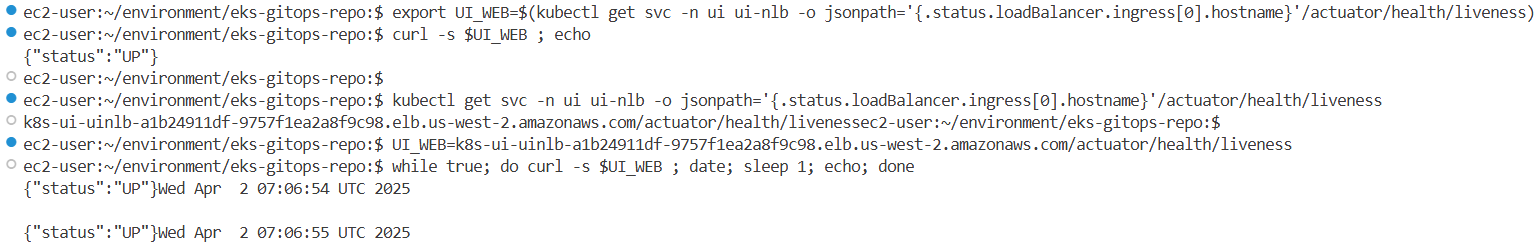

# Keep polling from the IDE server or your own PC!

export UI_WEB=$(kubectl get svc -n ui ui-nlb -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'/actuator/health/liveness)

curl -s $UI_WEB ; echo

{"status":"UP"}

# Polling loop 1

UI_WEB=k8s-ui-uinlb-d75345d621-e2b7d1ff5cf09378.elb.us-west-2.amazonaws.com/actuator/health/liveness

while true; do curl -s $UI_WEB ; date; sleep 1; echo; done

# Polling loop 2: requires AWS CLI credentials

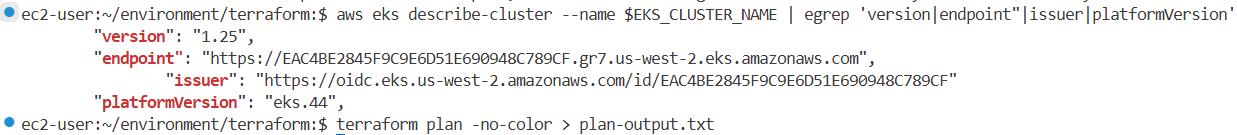

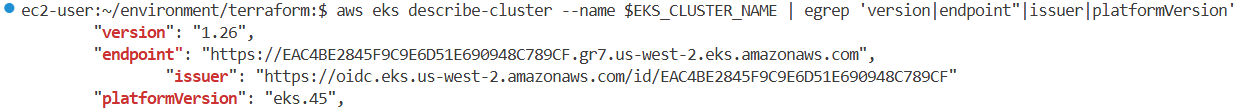

aws eks describe-cluster --name $EKS_CLUSTER_NAME | egrep 'version|endpoint"|issuer|platformVersion'

"version": "1.25",

"endpoint": "https://A77BDC5EEBAE5EC887F1747B6AE965B3.gr7.us-west-2.eks.amazonaws.com",

"issuer": "https://oidc.eks.us-west-2.amazonaws.com/id/A77BDC5EEBAE5EC887F1747B6AE965B3"

"platformVersion": "eks.44",

# Polling loop 2

while true; do curl -s $UI_WEB; date; aws eks describe-cluster --name eksworkshop-eksctl | egrep 'version|endpoint"|issuer|platformVersion'; echo ; sleep 2; echo; done

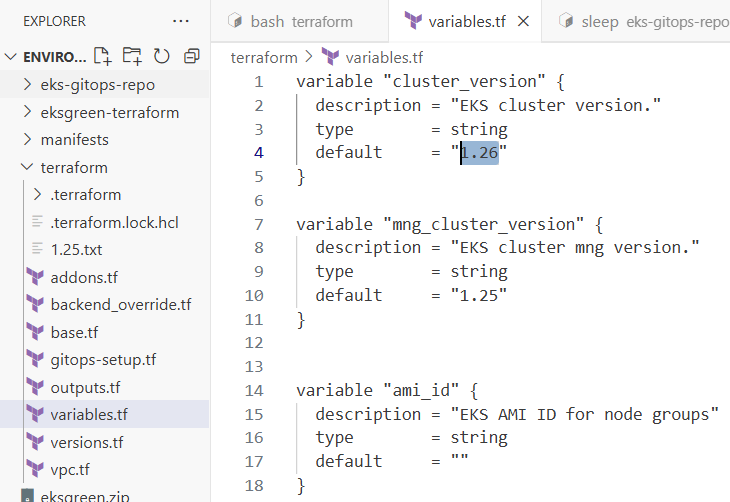

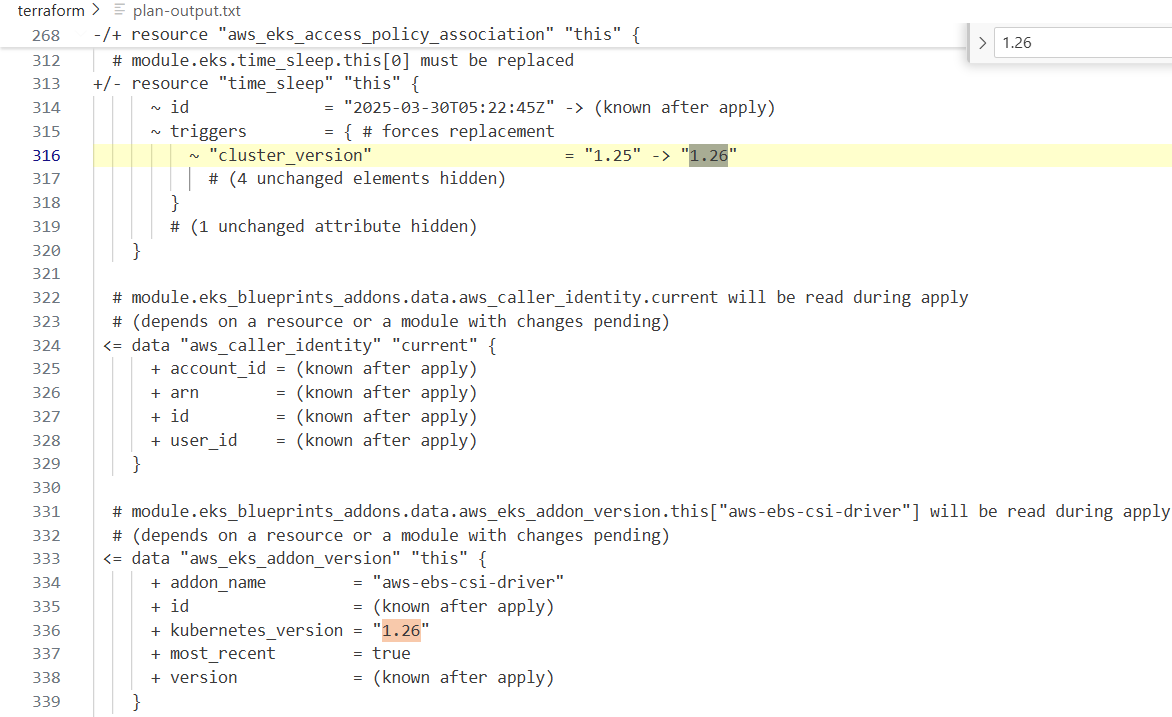

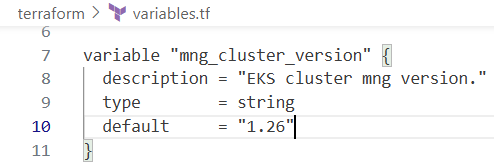

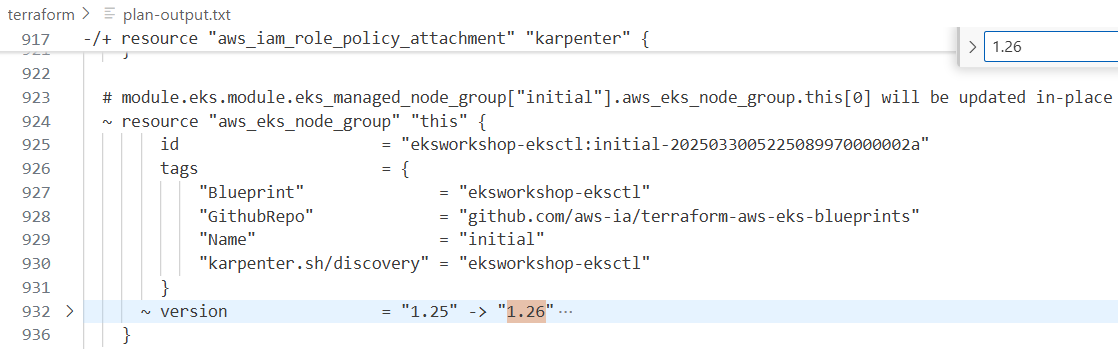

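Editing variables.tf

Change the cluster_version value in variables.tf from 1.25 to 1.26. EKS upgrades the control plane one minor version at a time, so 1.26 is the next step from 1.25.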
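Because the control plane can only move up one minor version per upgrade, it can be handy to validate the target before editing cluster_version. The sketch below is one way to express that rule; is_single_minor_step is a hypothetical helper and is not part of Terraform or the AWS CLI.

```python
def is_single_minor_step(current, target):
    """True if target is exactly one minor version above current (e.g. 1.25 -> 1.26)."""
    cur_major, cur_minor = (int(part) for part in current.split("."))
    tgt_major, tgt_minor = (int(part) for part in target.split("."))
    return cur_major == tgt_major and tgt_minor == cur_minor + 1


if __name__ == "__main__":
    print(is_single_minor_step("1.25", "1.26"))  # the upgrade performed in this lab
    print(is_single_minor_step("1.25", "1.27"))  # skips a minor version
```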

# Basic information

aws eks describe-cluster --name $EKS_CLUSTER_NAME | egrep 'version|endpoint"|issuer|platformVersion'

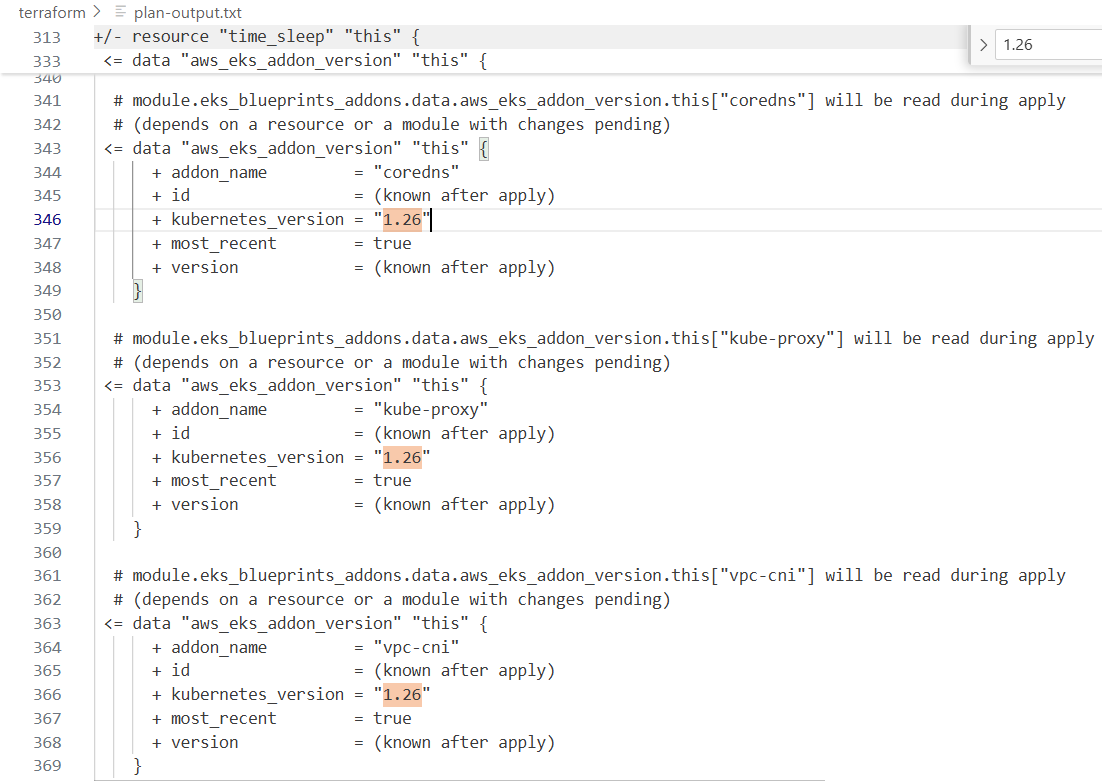

# Running plan shows what changes Terraform will make.

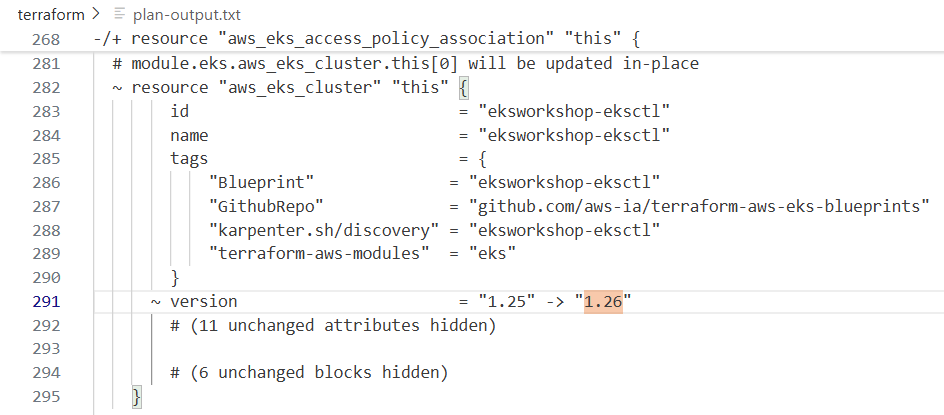

terraform plan

terraform plan -no-color > plan-output.txt # open this file in the IDE

...

<= data "tls_certificate" "this" {

+ certificates = (known after apply)

+ id = (known after apply)

+ url = "https://oidc.eks.us-west-2.amazonaws.com/id/B852364D70EA6D62672481D278A15059"

}

# module.eks.aws_iam_openid_connect_provider.oidc_provider[0] will be updated in-place

~ resource "aws_iam_openid_connect_provider" "oidc_provider" {

id = "arn:aws:iam::271345173787:oidc-provider/oidc.eks.us-west-2.amazonaws.com/id/B852364D70EA6D62672481D278A15059"

tags = {

"Blueprint" = "eksworkshop-eksctl"

"GithubRepo" = "github.com/aws-ia/terraform-aws-eks-blueprints"

"Name" = "eksworkshop-eksctl-eks-irsa"

"karpenter.sh/discovery" = "eksworkshop-eksctl"

}

~ thumbprint_list = [

- "9e99a48a9960b14926bb7f3b02e22da2b0ab7280",

] -> (known after apply)

# (4 unchanged attributes hidden)

}

# module.eks_blueprints_addons.data.aws_eks_addon_version.this["aws-ebs-csi-driver"] will be read during apply

# (depends on a resource or a module with changes pending)

<= data "aws_eks_addon_version" "this" {

+ addon_name = "aws-ebs-csi-driver"

+ id = (known after apply)

+ kubernetes_version = "1.26"

+ most_recent = true

+ version = (known after apply)

}

# module.eks_blueprints_addons.aws_eks_addon.this["vpc-cni"] will be updated in-place

~ resource "aws_eks_addon" "this" {

~ addon_version = "v1.19.3-eksbuild.1" -> (known after apply)

id = "eksworkshop-eksctl:vpc-cni"

tags = {

"Blueprint" = "eksworkshop-eksctl"

"GithubRepo" = "github.com/aws-ia/terraform-aws-eks-blueprints"

}

# (11 unchanged attributes hidden)

# (1 unchanged block hidden)

}

# module.eks_blueprints_addons.aws_iam_role_policy_attachment.karpenter["AmazonEC2ContainerRegistryReadOnly"] must be replaced

-/+ resource "aws_iam_role_policy_attachment" "karpenter" {

~ id = "karpenter-eksworkshop-eksctl-20250325094537762400000002" -> (known after apply)

~ policy_arn = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly" -> (known after apply) # forces replacement

# (1 unchanged attribute hidden)

}

# module.eks_blueprints_addons.module.aws_efs_csi_driver.data.aws_iam_policy_document.assume[0] will be read during apply

# (depends on a resource or a module with changes pending)

<= data "aws_iam_policy_document" "assume" {

+ id = (known after apply)

+ json = (known after apply)

+ minified_json = (known after apply)

+ statement {

+ actions = [

+ "sts:AssumeRoleWithWebIdentity",

]

+ effect = "Allow"

+ condition {

+ test = "StringEquals"

+ values = [

+ "sts.amazonaws.com",

]

+ variable = "oidc.eks.us-west-2.amazonaws.com/id/B852364D70EA6D62672481D278A15059:aud"

}

+ condition {

+ test = "StringEquals"

+ values = [

+ "system:serviceaccount:kube-system:efs-csi-controller-sa",

]

+ variable = "oidc.eks.us-west-2.amazonaws.com/id/B852364D70EA6D62672481D278A15059:sub"

}

+ principals {

+ identifiers = [

+ "arn:aws:iam::271345173787:oidc-provider/oidc.eks.us-west-2.amazonaws.com/id/B852364D70EA6D62672481D278A15059",

]

+ type = "Federated"

}

}

+ statement {

+ actions = [

+ "sts:AssumeRoleWithWebIdentity",

]

+ effect = "Allow"

+ condition {

+ test = "StringEquals"

+ values = [

+ "sts.amazonaws.com",

]

+ variable = "oidc.eks.us-west-2.amazonaws.com/id/B852364D70EA6D62672481D278A15059:aud"

}

+ condition {

+ test = "StringEquals"

+ values = [

+ "system:serviceaccount:kube-system:efs-csi-node-sa",

]

+ variable = "oidc.eks.us-west-2.amazonaws.com/id/B852364D70EA6D62672481D278A15059:sub"

}

+ principals {

+ identifiers = [

+ "arn:aws:iam::271345173787:oidc-provider/oidc.eks.us-west-2.amazonaws.com/id/B852364D70EA6D62672481D278A15059",

]

+ type = "Federated"

}

}

}

# Basic information

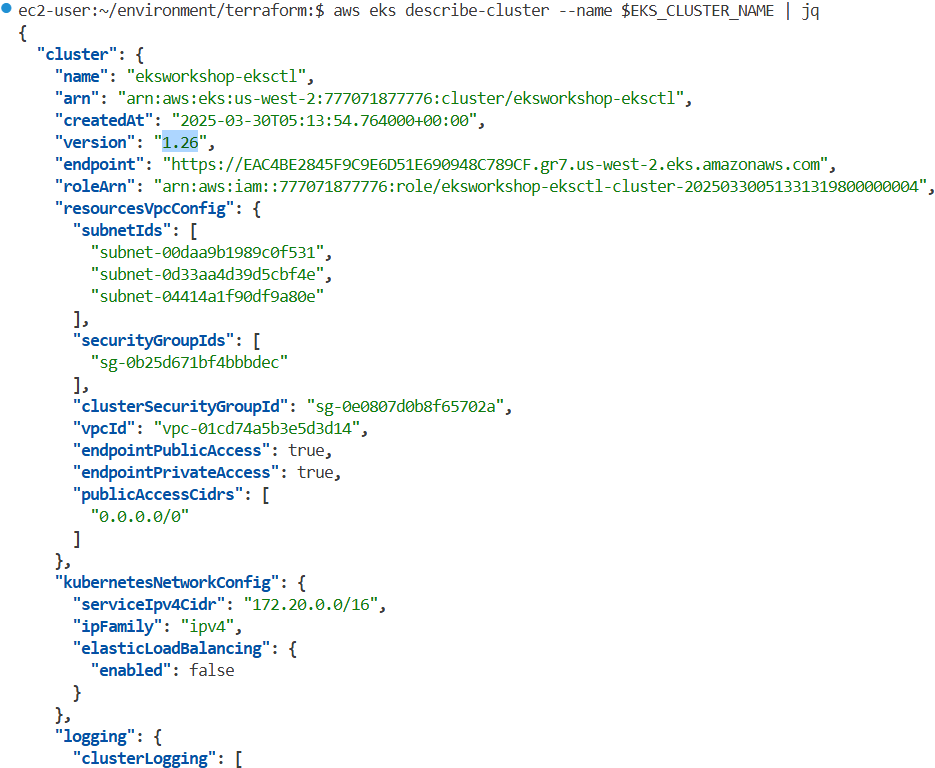

aws eks describe-cluster --name $EKS_CLUSTER_NAME | egrep 'version|endpoint"|issuer|platformVersion'

# 클러스터 버전을 변경하면 테라폼 계획에 표시된 것처럼, 관리 노드 그룹에 대한 특정 버전이나 AMI가 테라폼 파일에 정의되지 않은 경우,

# eks 클러스터 제어 평면과 관리 노드 그룹 및 애드온과 같은 관련 리소스를 업데이트하게 됩니다.

# 이 계획을 적용하여 제어 평면 버전을 업데이트해 보겠습니다.

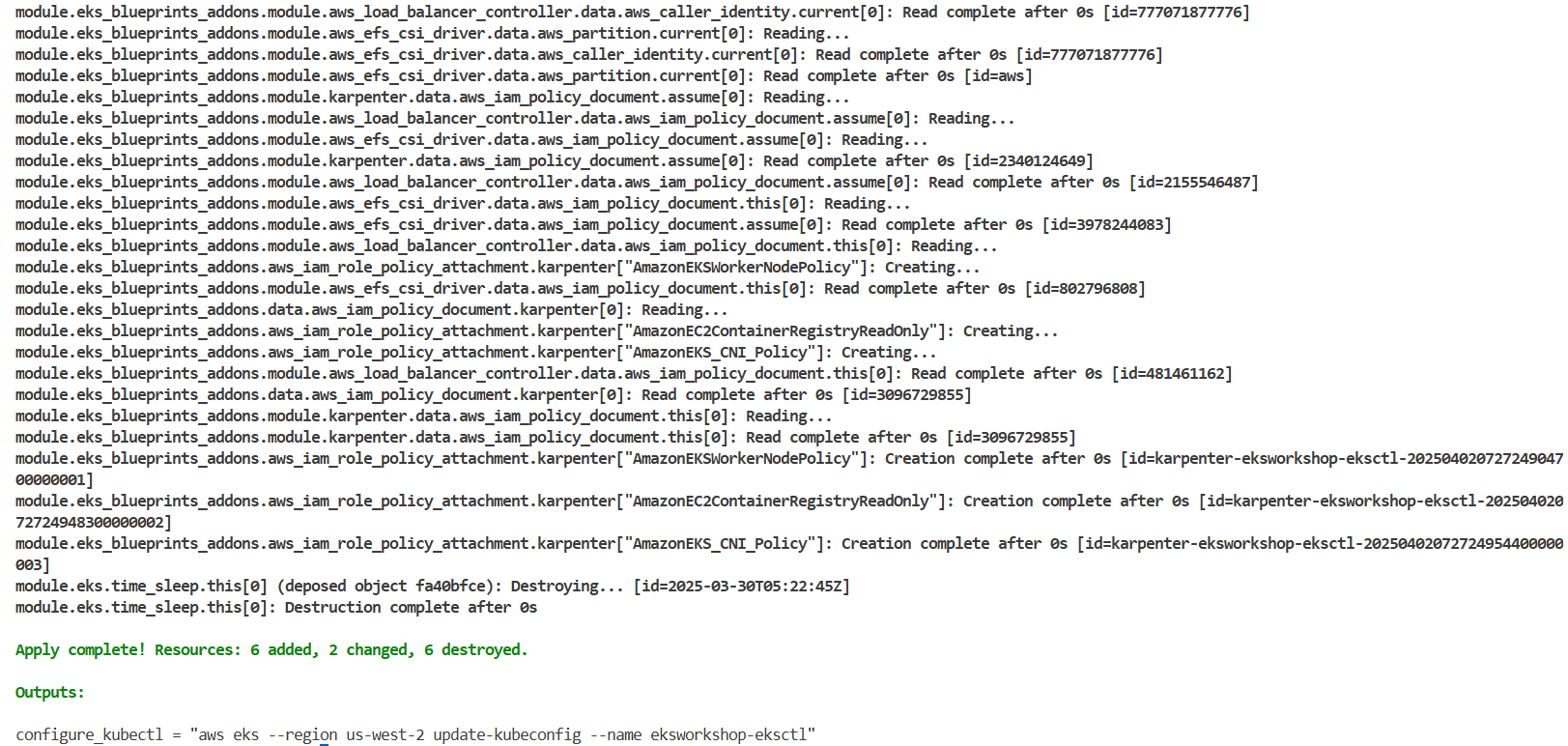

terraform apply -auto-approve # 10분 소요

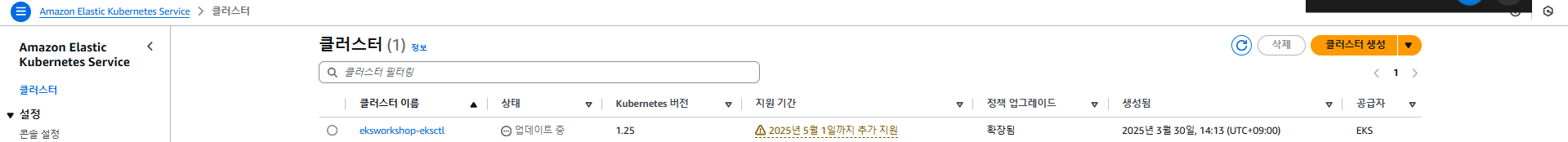

# Confirm the EKS control plane upgrade to 1.26

aws eks describe-cluster --name $EKS_CLUSTER_NAME | jq

...

"version": "1.26",

# endpoint, issuer, and platformVersion are unchanged => no impact on the four apps that use IRSA!

aws eks describe-cluster --name $EKS_CLUSTER_NAME | egrep 'version|endpoint"|issuer|platformVersion'

# The Pod container image versions are all unchanged

kubectl get pods --all-namespaces -o jsonpath="{.items[*].spec.containers[*].image}" | tr -s '[[:space:]]' '\n' | sort | uniq -c > 1.26.txt

kubectl get pods --all-namespaces -o jsonpath="{.items[*].spec.containers[*].image}" | tr -s '[[:space:]]' '\n' | sort | uniq -c

diff 1.26.txt 1.25.txt # identical

# Check the Pod AGE values: no Pods were recreated!

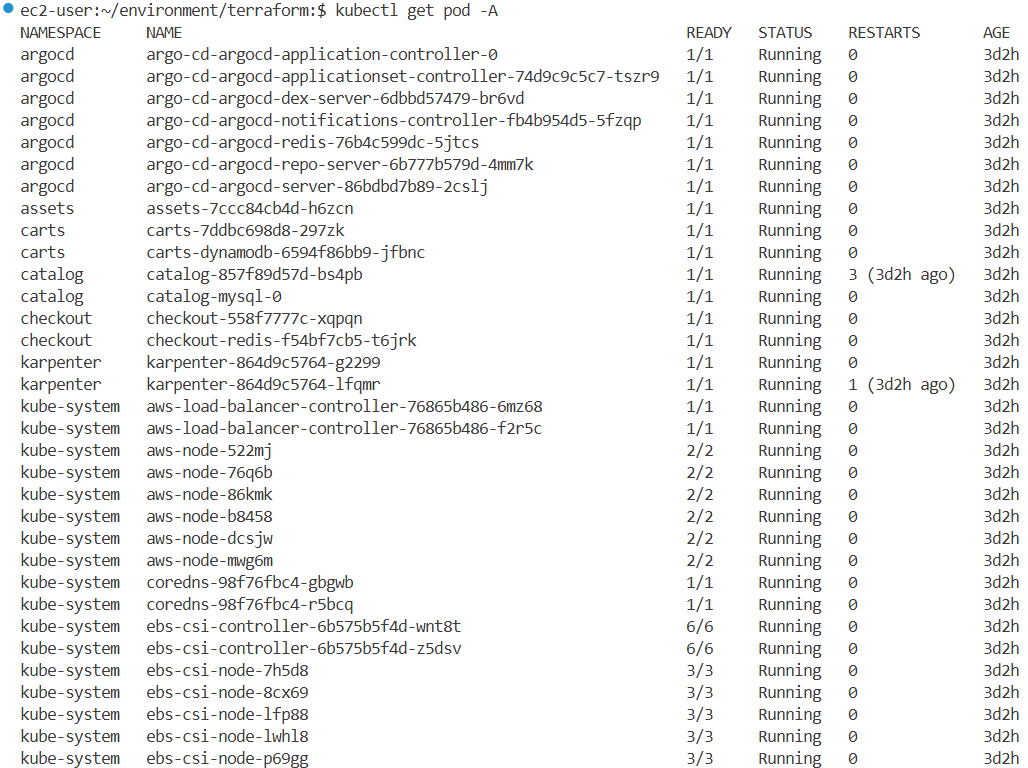

kubectl get pod -A

...
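The diff of 1.25.txt and 1.26.txt above only shows raw lines. As an alternative view, the sketch below parses the "count image" lines produced by uniq -c and reports images whose counts differ. The function names are hypothetical, and BEFORE holds two lines copied from the listing earlier in this section.

```python
def parse_image_counts(text):
    """Parse `uniq -c` output lines ("<count> <image>") into {image: count}."""
    counts = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2:
            counts[parts[1]] = int(parts[0])
    return counts


def changed_images(before, after):
    """Return {image: (count_before, count_after)} for entries that differ."""
    images = sorted(set(before) | set(after))
    return {
        image: (before.get(image, 0), after.get(image, 0))
        for image in images
        if before.get(image, 0) != after.get(image, 0)
    }


# Two lines copied from the image listing above.
BEFORE = """
      6 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/kube-proxy:v1.25.16-minimal-eksbuild.8
      5 quay.io/argoproj/argocd:v2.10.0
"""

if __name__ == "__main__":
    before = parse_image_counts(BEFORE)
    after = parse_image_counts(BEFORE)  # identical after the control plane upgrade
    print(changed_images(before, after))  # an empty dict means nothing changed
```

In practice the two inputs would be the contents of 1.25.txt and 1.26.txt.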

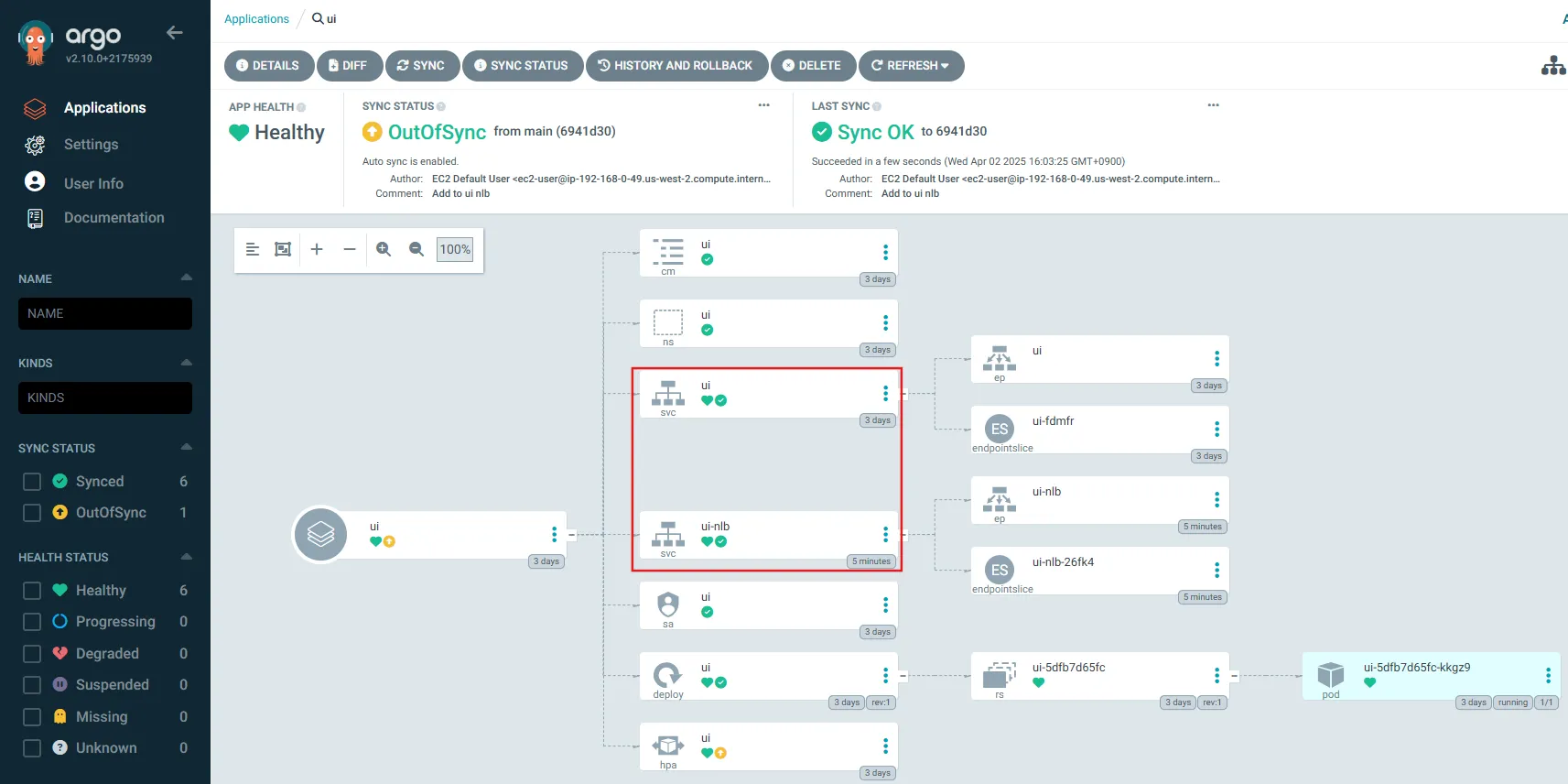

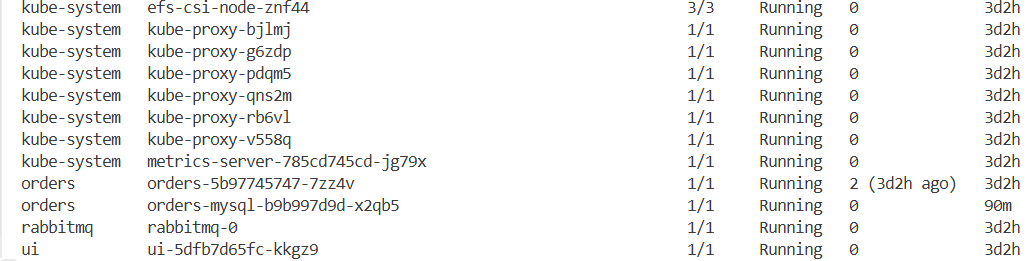

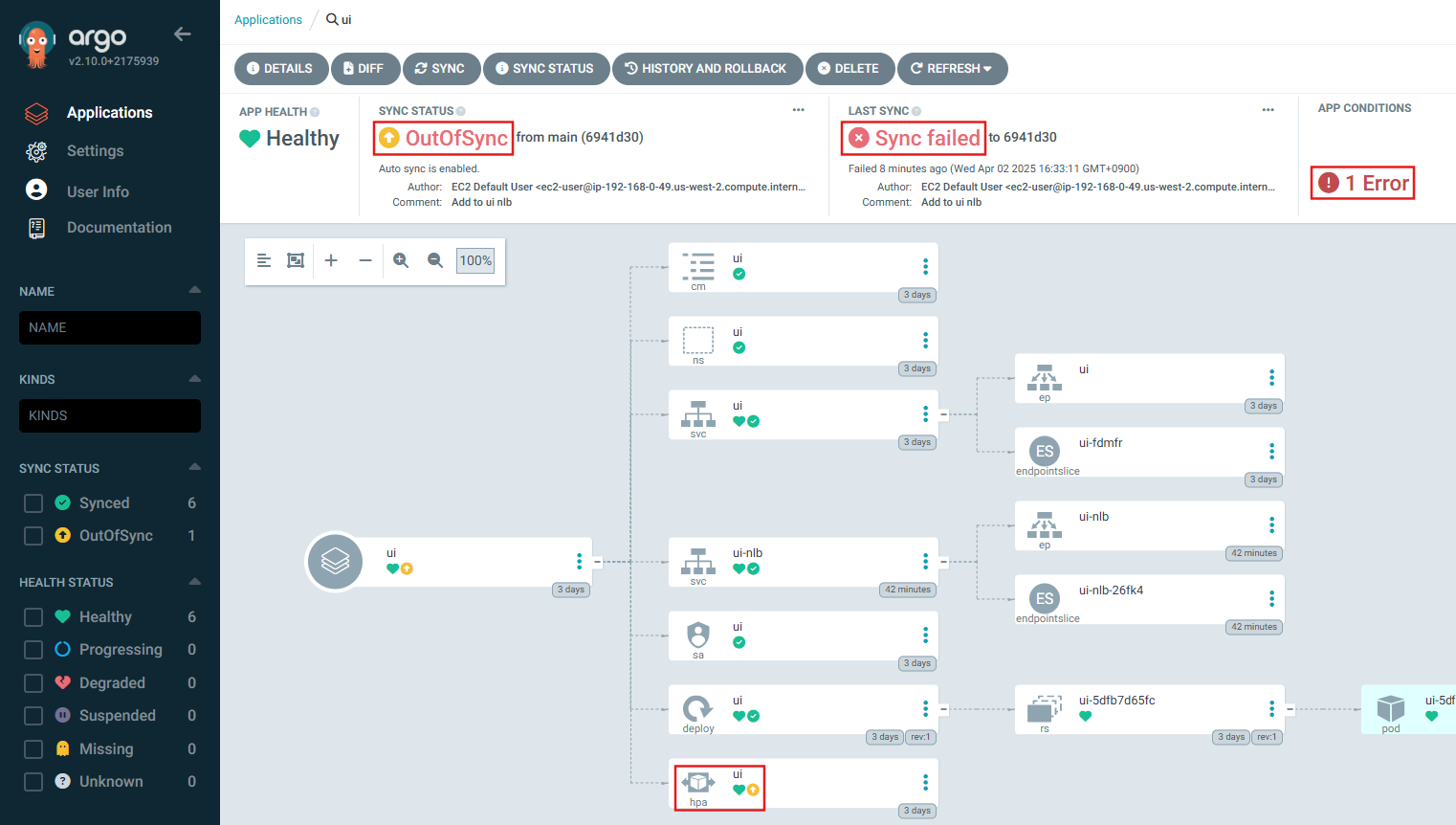

Checking the state after the control plane upgrade

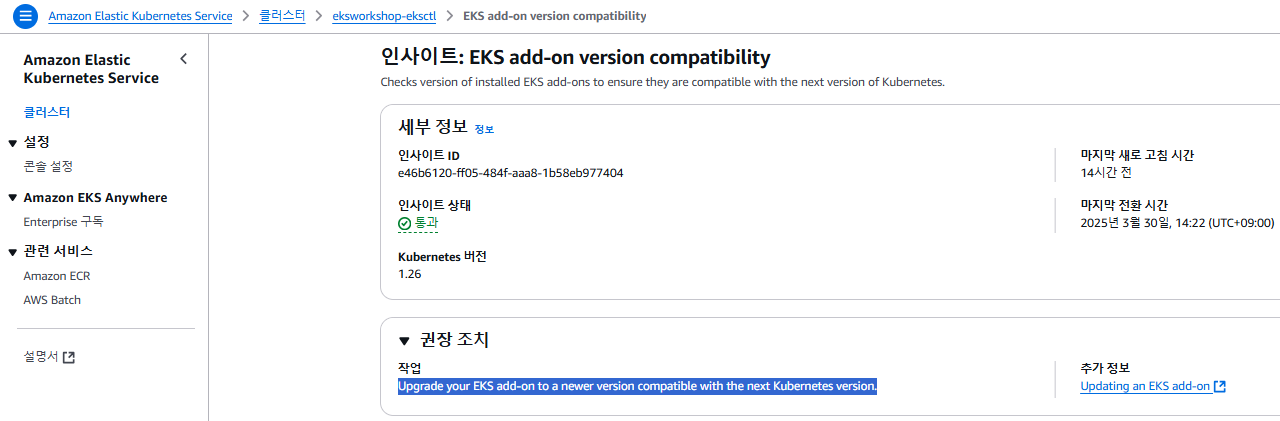

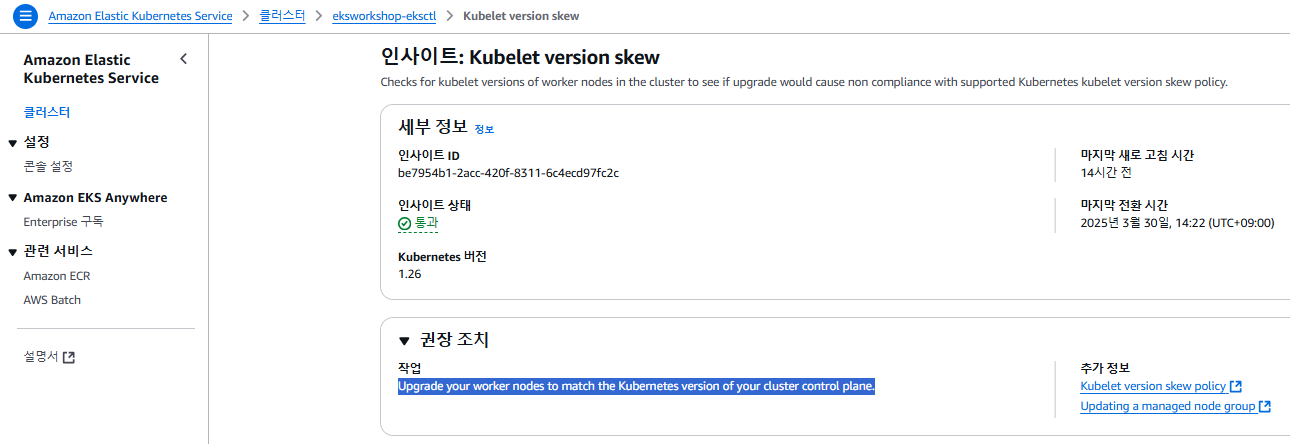

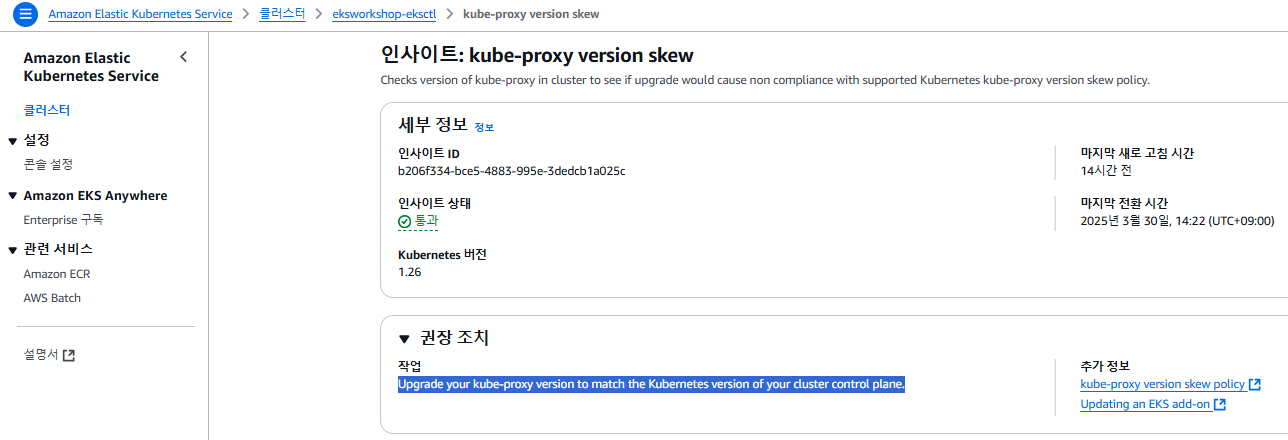

Cluster Insights

Cluster Insights: EKS add-on version compatibility

Cluster Insights: Kubelet version skew

Cluster Insights: kube-proxy version skew

Cluster Insights: Deprecated APIs removed in Kubernetes v1.26

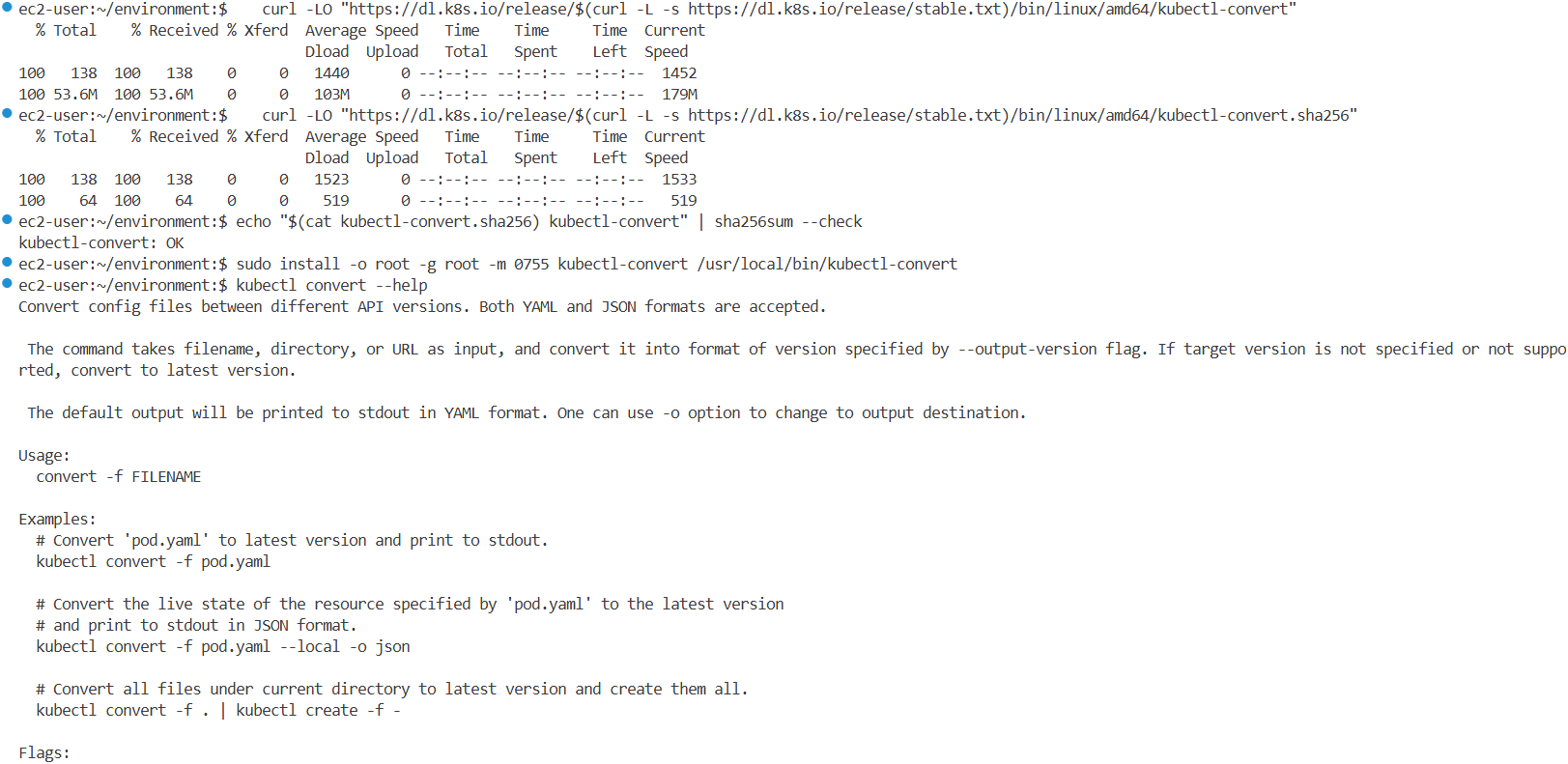

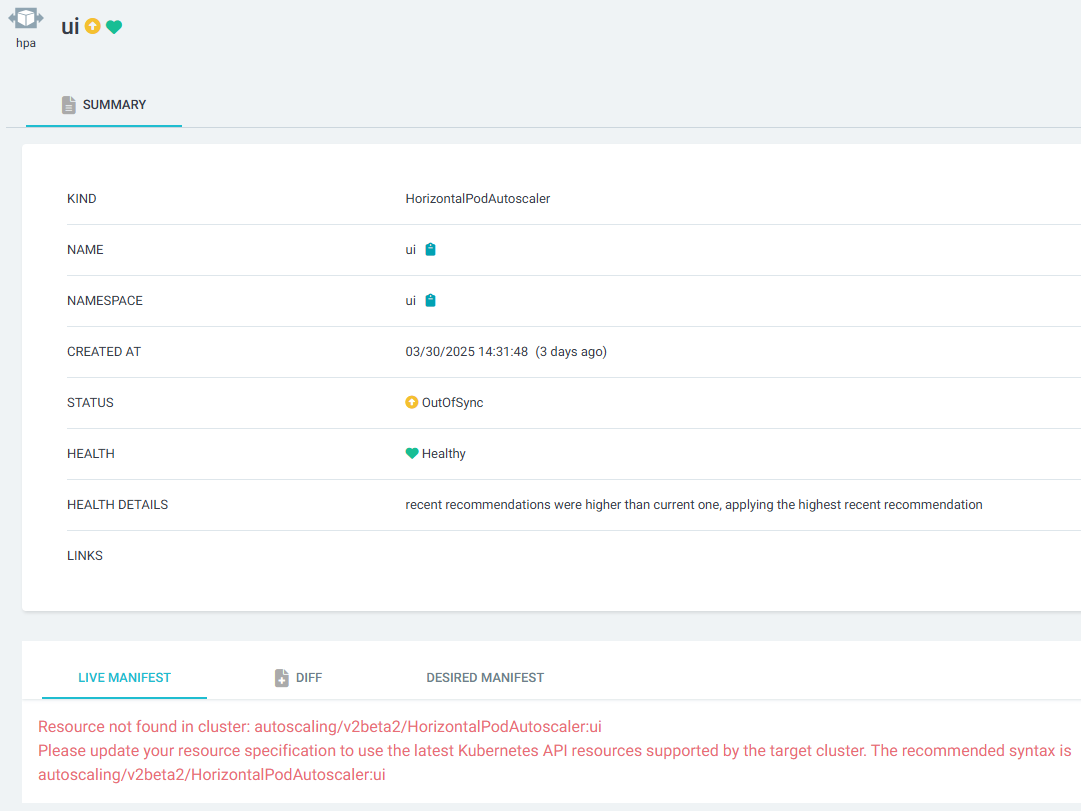

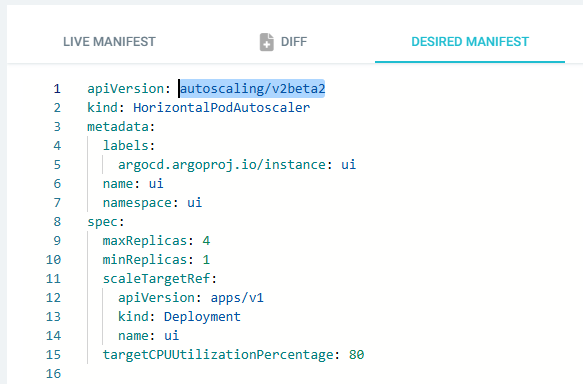

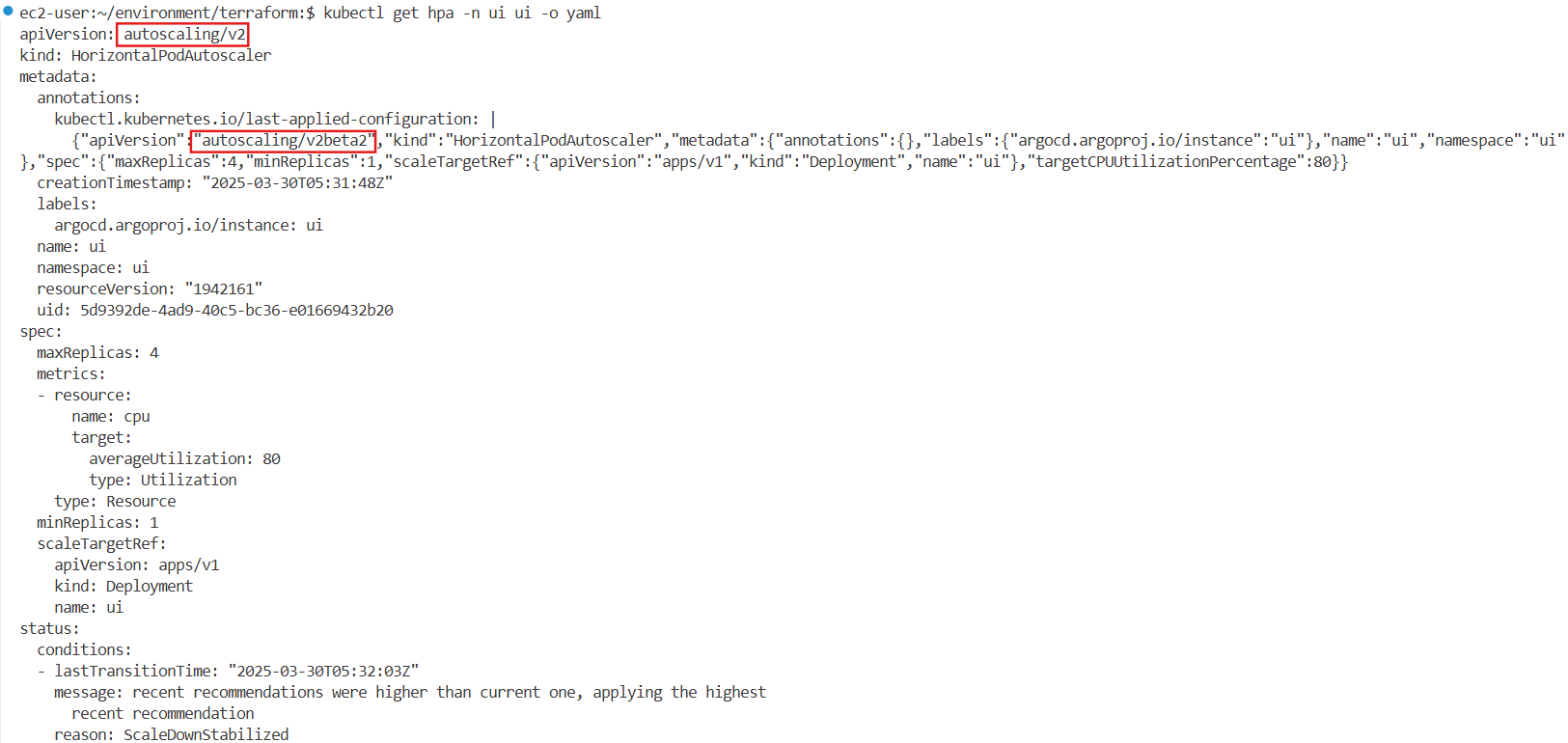

Checking the UI App (HPA) status in ArgoCD

#

kubectl get hpa -n ui ui -o yaml

apiVersion: autoscaling/v2

kind: HorizontalPodAutoscaler

metadata:

annotations:

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"autoscaling/v2beta2","kind":"HorizontalPodAutoscaler","metadata":{"annotations":{},"labels":{"argocd.argoproj.io/instance":"ui"},"name":"ui","namespace":"ui"},"spec":{"maxReplicas":4,"minReplicas":1,"scaleTargetRef":{"apiVersion":"apps/v1","kind":"Deployment","name":"ui"},"targetCPUUtilizationPercentage":80}}

creationTimestamp: "2025-03-25T02:40:17Z"

labels:

argocd.argoproj.io/instance: ui

...

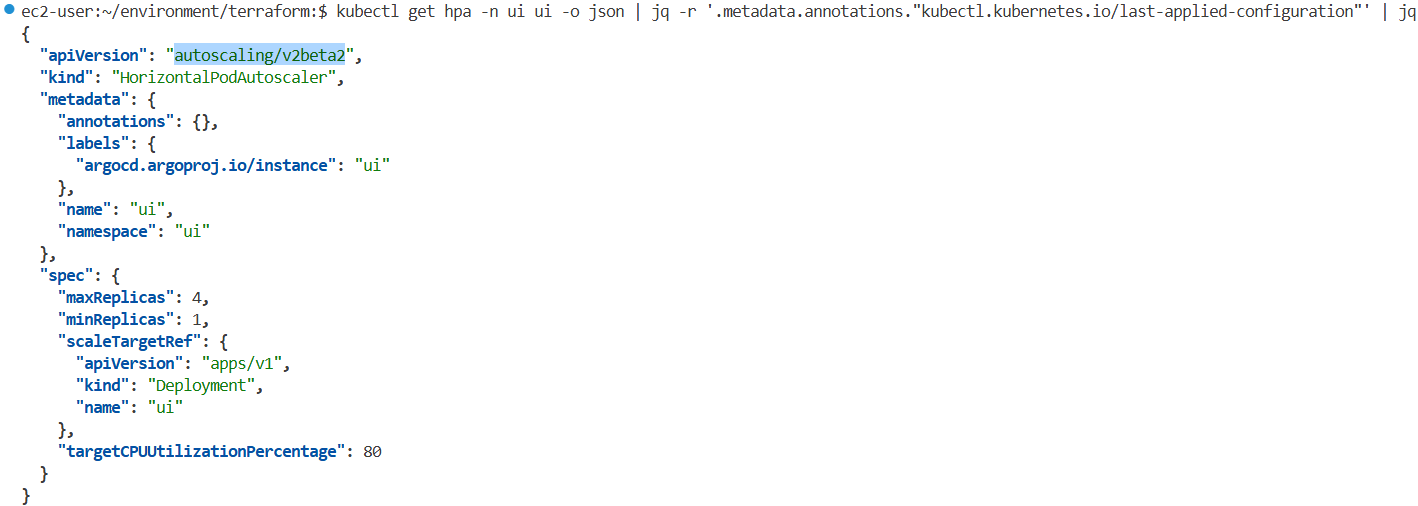

kubectl get hpa -n ui ui -o json | jq -r '.metadata.annotations."kubectl.kubernetes.io/last-applied-configuration"' | jq

{

"apiVersion": "autoscaling/v2beta2",

"kind": "HorizontalPodAutoscaler",

"metadata": {

"annotations": {},

"labels": {

"argocd.argoproj.io/instance": "ui"

},

"name": "ui",

"namespace": "ui"

},

"spec": {

"maxReplicas": 4,

"minReplicas": 1,

"scaleTargetRef": {

"apiVersion": "apps/v1",

"kind": "Deployment",

"name": "ui"

},

"targetCPUUtilizationPercentage": 80

}

}

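# Strip cluster-managed fields with the kubectl neat plugin, then convert the manifest to the current API version with the kubectl convert plugin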

kubectl get hpa -n ui ui -o yaml | kubectl neat > hpa-ui.yaml

kubectl convert -f hpa-ui.yaml

apiVersion: autoscaling/v2

kind: HorizontalPodAutoscaler

metadata:

creationTimestamp: null

labels:

argocd.argoproj.io/instance: ui

name: ui

namespace: ui

spec:

maxReplicas: 4

metrics:

- resource:

name: cpu

target:

averageUtilization: 80

type: Utilization

type: Resource

minReplicas: 1

scaleTargetRef:

apiVersion: apps/v1

kind: Deployment

name: ui

status:

currentMetrics: null

desiredReplicas: 0
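The last-applied manifest above still declares `autoscaling/v2beta2`, which Kubernetes v1.26 no longer serves, so the source manifest that ArgoCD syncs is presumably worth fixing as well. A minimal sketch for finding such files in a local checkout follows. The function name `removed_in_126` and the directory argument are hypothetical, and the pattern only covers the two API versions removed in v1.26 (`autoscaling/v2beta2` and `flowcontrol.apiserver.k8s.io/v1beta1`).

```shell
# removed_in_126 [DIR]
# Lists YAML files under DIR (default: current directory) that declare
# an apiVersion removed in Kubernetes v1.26.
removed_in_126() {
  find "${1:-.}" -type f \( -name '*.yaml' -o -name '*.yml' \) \
    -exec grep -lE '^[[:space:]]*apiVersion:[[:space:]]*"?(autoscaling/v2beta2|flowcontrol\.apiserver\.k8s\.io/v1beta1)"?[[:space:]]*$' {} + | sort
}

# Usage: removed_in_126 ./manifests
```

Templated values (for example a Helm `apiVersion: {{ ... }}` line) are not detected by this plain-text match, so treat an empty result as a hint rather than proof.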

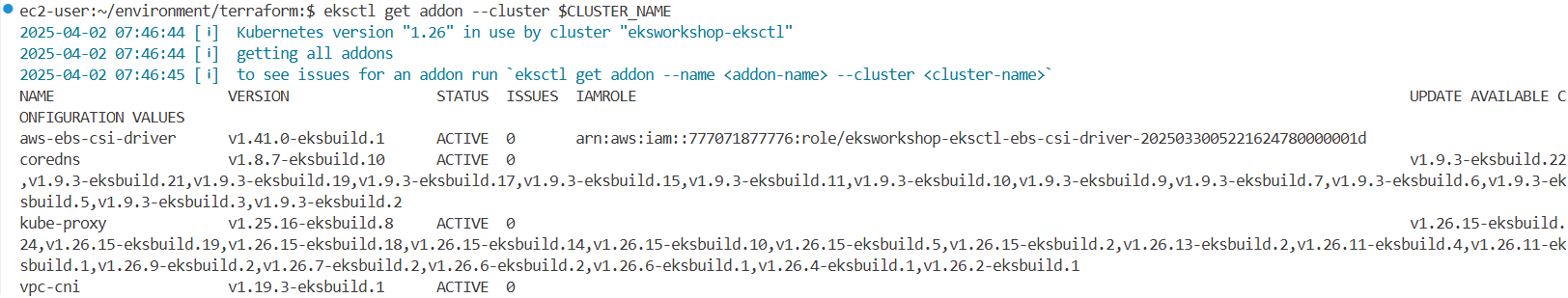

2.12 Upgrading EKS Add-ons

As part of an Amazon EKS cluster upgrade, the add-ons installed in the cluster must be upgraded as well.

# List the add-on versions available for upgrade

eksctl get addon --cluster $CLUSTER_NAME

NAME VERSION STATUS ISSUES IAMROLE UPDATE AVAILABLE CONFIGURATION VALUES

aws-ebs-csi-driver v1.41.0-eksbuild.1 ACTIVE 0 arn:aws:iam::271345173787:role/eksworkshop-eksctl-ebs-csi-driver-2025032502294618580000001d

coredns v1.8.7-eksbuild.10 ACTIVE 0 v1.9.3-eksbuild.22,v1.9.3-eksbuild.21,v1.9.3-eksbuild.19,v1.9.3-eksbuild.17,v1.9.3-eksbuild.15,v1.9.3-eksbuild.11,v1.9.3-eksbuild.10,v1.9.3-eksbuild.9,v1.9.3-eksbuild.7,v1.9.3-eksbuild.6,v1.9.3-eksbuild.5,v1.9.3-eksbuild.3,v1.9.3-eksbuild.2

kube-proxy v1.25.16-eksbuild.8 ACTIVE 0 v1.26.15-eksbuild.24,v1.26.15-eksbuild.19,v1.26.15-eksbuild.18,v1.26.15-eksbuild.14,v1.26.15-eksbuild.10,v1.26.15-eksbuild.5,v1.26.15-eksbuild.2,v1.26.13-eksbuild.2,v1.26.11-eksbuild.4,v1.26.11-eksbuild.1,v1.26.9-eksbuild.2,v1.26.7-eksbuild.2,v1.26.6-eksbuild.2,v1.26.6-eksbuild.1,v1.26.4-eksbuild.1,v1.26.2-eksbuild.1

vpc-cni v1.19.3-eksbuild.1 ACTIVE 0

# Container image versions currently running in the cluster

kubectl get pods --all-namespaces -o jsonpath="{.items[*].spec.containers[*].image}" | tr -s '[[:space:]]' '\n' | sort | uniq -c

6 602401143452.dkr.ecr.us-west-2.amazonaws.com/amazon-k8s-cni:v1.19.3-eksbuild.1

8 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/aws-ebs-csi-driver:v1.41.0

2 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/coredns:v1.8.7-eksbuild.10

2 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/csi-provisioner:v5.2.0-eks-1-32-7

6 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/kube-proxy:v1.25.16-minimal-eksbuild.8

8 amazon/aws-efs-csi-driver:v1.7.6

2 public.ecr.aws/eks/aws-load-balancer-controller:v2.7.1

# coredns add-on versions compatible with Kubernetes 1.26 (10 most recent)

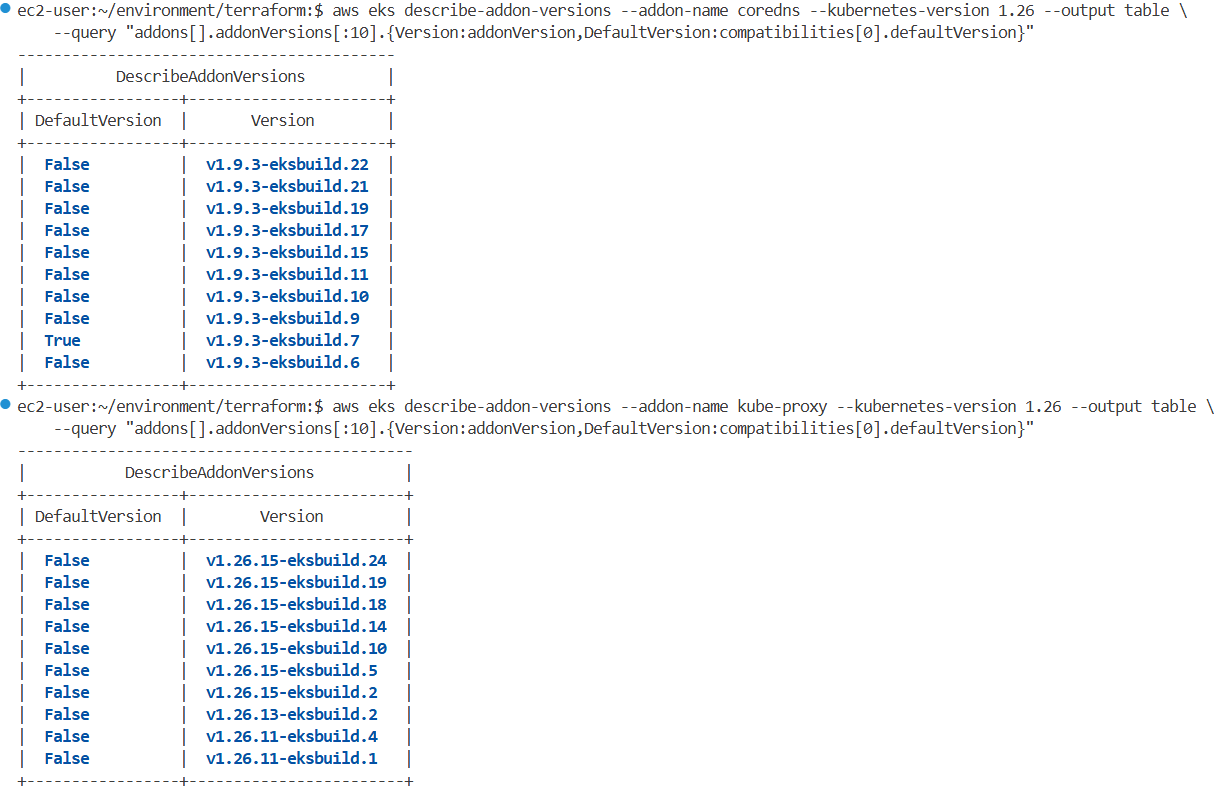

aws eks describe-addon-versions --addon-name coredns --kubernetes-version 1.26 --output table \

--query "addons[].addonVersions[:10].{Version:addonVersion,DefaultVersion:compatibilities[0].defaultVersion}"

------------------------------------------

| DescribeAddonVersions |

+-----------------+----------------------+

| DefaultVersion | Version |

+-----------------+----------------------+

| False | v1.9.3-eksbuild.22 |

| False | v1.9.3-eksbuild.21 |

| False | v1.9.3-eksbuild.19 |

| False | v1.9.3-eksbuild.17 |

| False | v1.9.3-eksbuild.15 |

| False | v1.9.3-eksbuild.11 |

| False | v1.9.3-eksbuild.10 |

| False | v1.9.3-eksbuild.9 |

| True | v1.9.3-eksbuild.7 |

| False | v1.9.3-eksbuild.6 |

+-----------------+----------------------+

# kube-proxy add-on versions compatible with Kubernetes 1.26 (10 most recent)

aws eks describe-addon-versions --addon-name kube-proxy --kubernetes-version 1.26 --output table \

--query "addons[].addonVersions[:10].{Version:addonVersion,DefaultVersion:compatibilities[0].defaultVersion}"

--------------------------------------------

| DescribeAddonVersions |

+-----------------+------------------------+

| DefaultVersion | Version |

+-----------------+------------------------+

| False | v1.26.15-eksbuild.24 |

| False | v1.26.15-eksbuild.19 |

| False | v1.26.15-eksbuild.18 |

| False | v1.26.15-eksbuild.14 |

| False | v1.26.15-eksbuild.10 |

| False | v1.26.15-eksbuild.5 |

| False | v1.26.15-eksbuild.2 |

| False | v1.26.13-eksbuild.2 |

| False | v1.26.11-eksbuild.4 |

| False | v1.26.11-eksbuild.1 |

+-----------------+------------------------+
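Both tables list versions newest first, and the same candidates appear as a comma-separated list in the UPDATE AVAILABLE column of `eksctl get addon`. As a rough sketch, the highest version can be picked from such a list with a numeric sort on each version component. The function name `latest_addon_version` is an assumption for illustration, and the parsing only handles the `vX.Y.Z-eksbuild.N` shape seen in this output.

```shell
# latest_addon_version
# Reads add-on versions (comma- or newline-separated) on stdin and prints
# the highest one, comparing major, minor, patch and eksbuild numerically.
latest_addon_version() {
  tr ',' '\n' |
    sed -n 's/^v\([0-9]*\)\.\([0-9]*\)\.\([0-9]*\)-eksbuild\.\([0-9]*\)$/\1 \2 \3 \4 &/p' |
    sort -k1,1n -k2,2n -k3,3n -k4,4n |
    tail -n 1 |
    cut -d' ' -f5
}

# Usage:
# echo 'v1.9.3-eksbuild.9,v1.9.3-eksbuild.22,v1.9.3-eksbuild.7' | latest_addon_version
```

Note that the newest version is not necessarily the default one: in the coredns table above, the default for 1.26 is v1.9.3-eksbuild.7 while the newest is v1.9.3-eksbuild.22.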

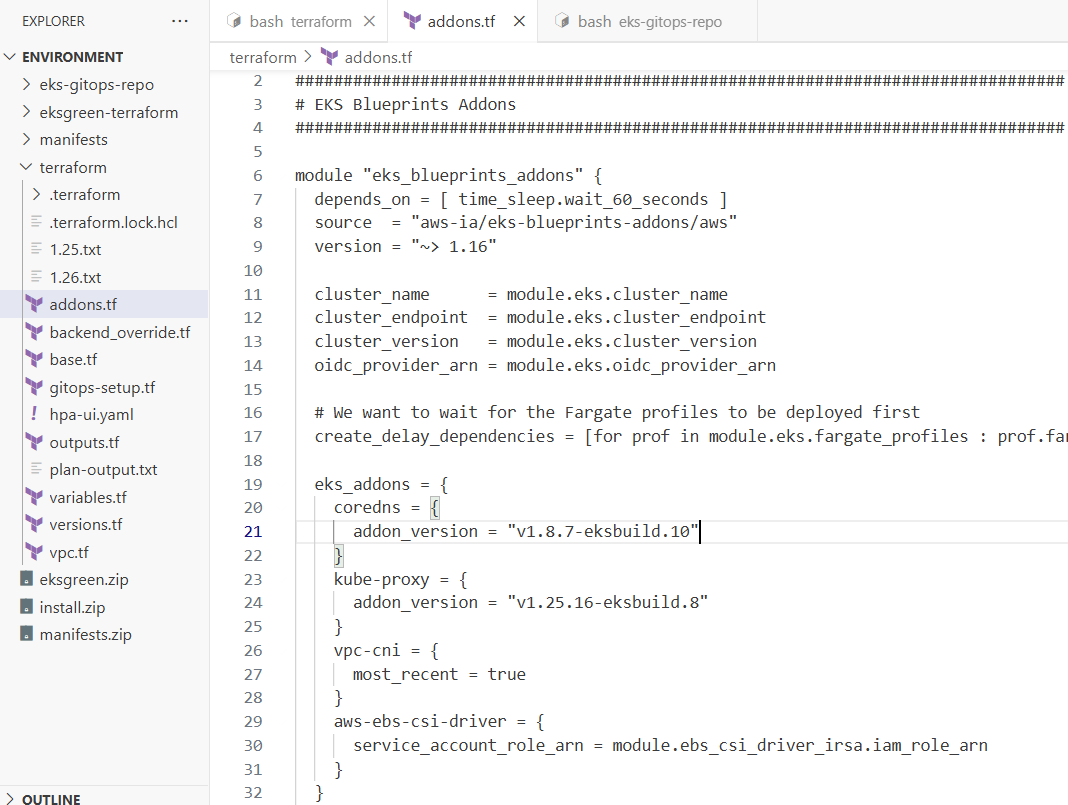

Before

eks_addons = {

coredns = {

addon_version = "v1.8.7-eksbuild.10"

}

kube-proxy = {

addon_version = "v1.25.16-eksbuild.8"

}

vpc-cni = {

most_recent = true

}

aws-ebs-csi-driver = {

service_account_role_arn = module.ebs_csi_driver_irsa.iam_role_arn

}

}

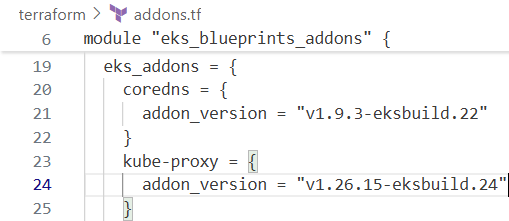

After

eks_addons = {

coredns = {

addon_version = "v1.9.3-eksbuild.22" # newest version listed for EKS 1.26

}

kube-proxy = {

addon_version = "v1.26.15-eksbuild.24" # newest version listed for EKS 1.26

}

vpc-cni = {

most_recent = true

}

aws-ebs-csi-driver = {

service_account_role_arn = module.ebs_csi_driver_irsa.iam_role_arn

}

}

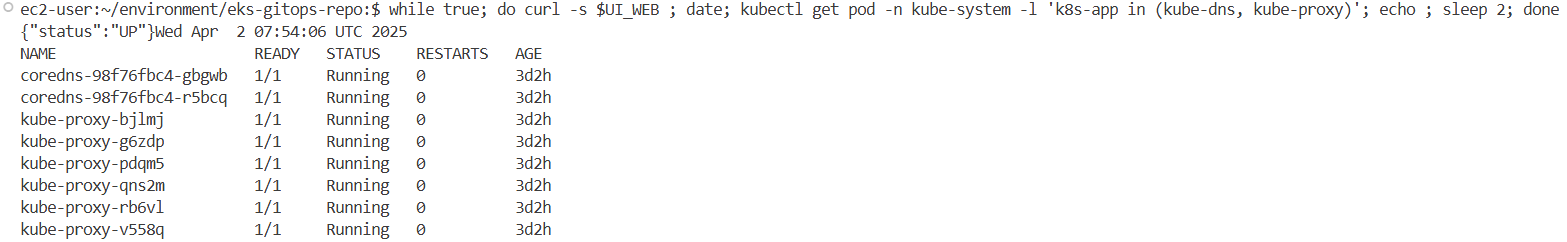

# Repeated access: confirm the ui stays reachable without interruption while the coredns and kube-proxy add-ons are upgraded below

aws eks update-kubeconfig --name eksworkshop-eksctl # only needed when running from your own PC

kubectl get pod -n kube-system -l k8s-app=kube-dns

kubectl get pod -n kube-system -l k8s-app=kube-proxy

kubectl get pod -n kube-system -l 'k8s-app in (kube-dns, kube-proxy)'

while true; do curl -s $UI_WEB ; date; kubectl get pod -n kube-system -l 'k8s-app in (kube-dns, kube-proxy)'; echo ; sleep 2; done
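The loop above relies on watching the terminal for a failed request. A small sketch that counts failures instead is shown here. The helper name `probe` and its arguments (attempt count, interval in seconds, then the command to run) are hypothetical and not part of the workshop. It uses plain global shell variables to stay POSIX-compatible.

```shell
# probe ATTEMPTS INTERVAL_SECONDS COMMAND [ARGS...]
# Runs COMMAND repeatedly, logs each failed attempt to stderr,
# and prints the total number of failures on stdout.
probe() {
  attempts=$1
  interval=$2
  shift 2
  failures=0
  i=1
  while [ "$i" -le "$attempts" ]; do
    if ! "$@" >/dev/null 2>&1; then
      failures=$((failures + 1))
      echo "$(date '+%H:%M:%S') attempt $i failed" >&2
    fi
    i=$((i + 1))
    sleep "$interval"
  done
  echo "$failures"
}

# Usage (assumes $UI_WEB is set as in the loop above):
# probe 60 2 curl -sf --max-time 2 "$UI_WEB"
```

A result of 0 after the add-on upgrade finishes would support the claim that the ui stayed reachable throughout.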

After saving the changes, run the commands below to upgrade the add-ons as a rolling update without downtime.

cd ~/environment/terraform/

terraform plan -no-color | tee addon.txt

...

# module.eks_blueprints_addons.aws_eks_addon.this["coredns"] will be updated in-place

~ resource "aws_eks_addon" "this" {

~ addon_version = "v1.8.7-eksbuild.10" -> "v1.9.3-eksbuild.22"

id = "eksworkshop-eksctl:coredns"

tags = {

"Blueprint" = "eksworkshop-eksctl"

"GithubRepo" = "github.com/aws-ia/terraform-aws-eks-blueprints"

}

# (11 unchanged attributes hidden)

# (1 unchanged block hidden)

}

# module.eks_blueprints_addons.aws_eks_addon.this["kube-proxy"] will be updated in-place

~ resource "aws_eks_addon" "this" {

~ addon_version = "v1.25.16-eksbuild.8" -> "v1.26.15-eksbuild.24"

id = "eksworkshop-eksctl:kube-proxy"

tags = {

"Blueprint" = "eksworkshop-eksctl"

"GithubRepo" = "github.com/aws-ia/terraform-aws-eks-blueprints"

}

...

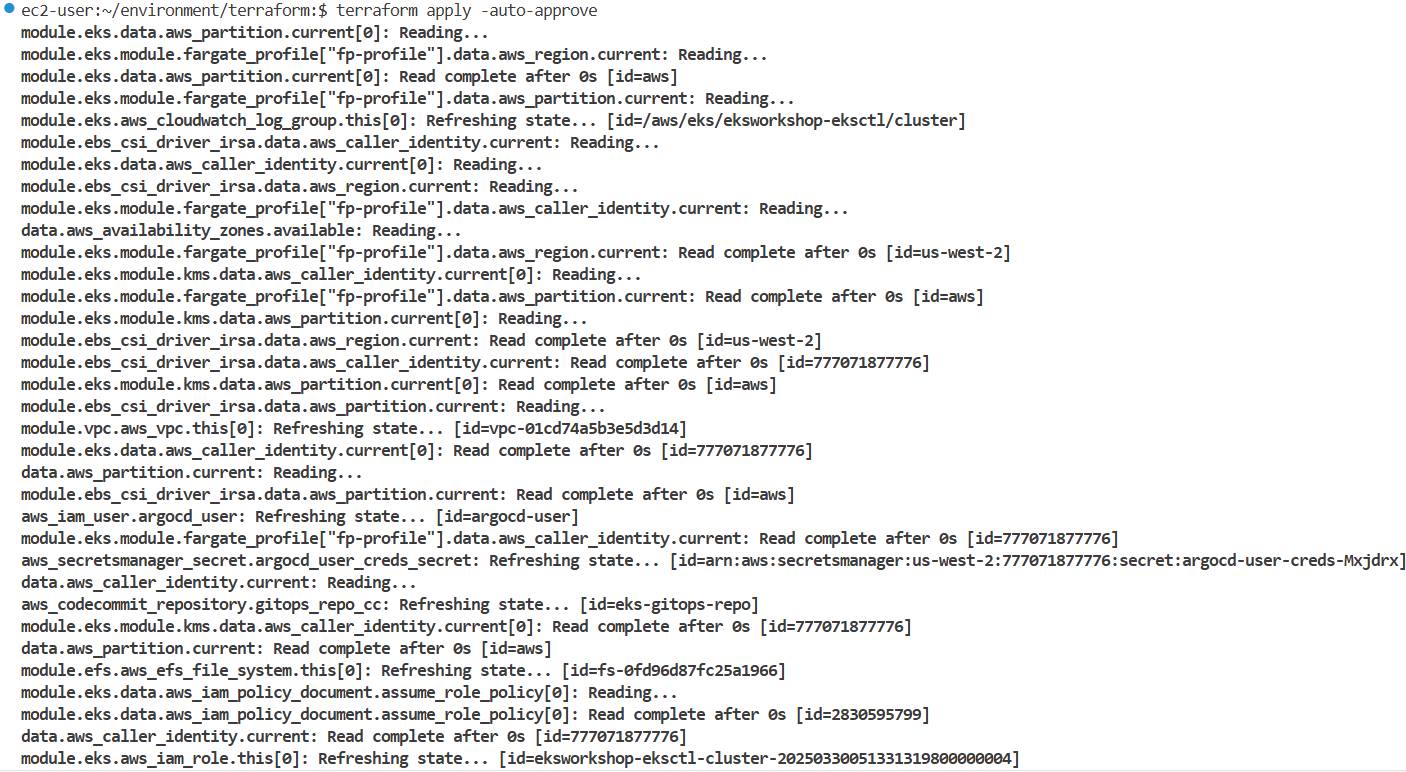

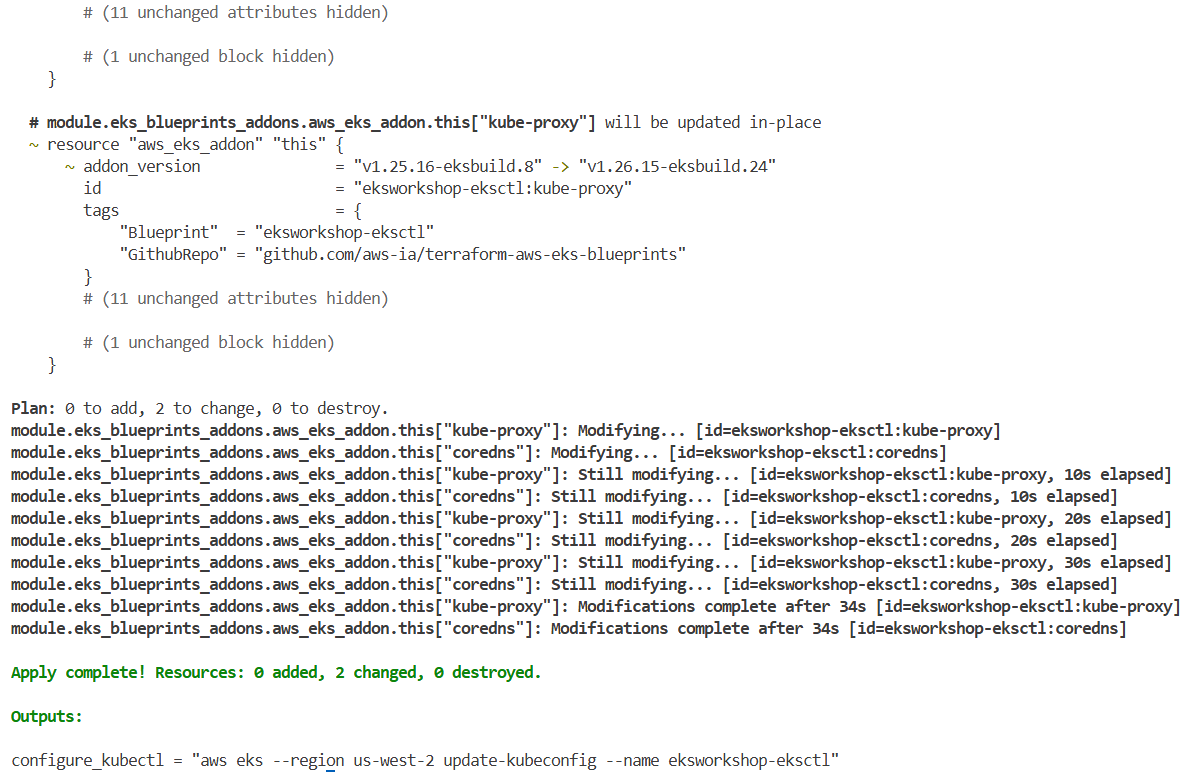

# The rolling update completes within about a minute

terraform apply -auto-approve

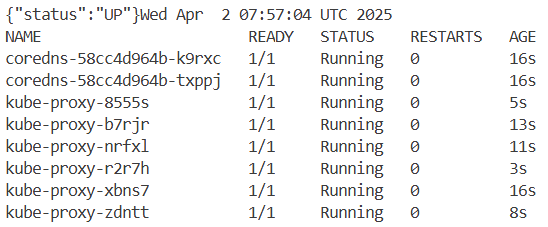

kubectl get pod -n kube-system -l 'k8s-app in (kube-dns, kube-proxy)'

NAME READY STATUS RESTARTS AGE

coredns-58cc4d964b-7867s 1/1 Running 0 71s

coredns-58cc4d964b-qb8zc 1/1 Running 0 71s

kube-proxy-2f6nv 1/1 Running 0 61s

kube-proxy-7n8xj 1/1 Running 0 67s

kube-proxy-7p4p2 1/1 Running 0 70s

kube-proxy-g2vvp 1/1 Running 0 54s

kube-proxy-ks4xp 1/1 Running 0 64s

kube-proxy-kxvh5 1/1 Running 0 57s

kubectl get pods --all-namespaces -o jsonpath="{.items[*].spec.containers[*].image}" | tr -s '[[:space:]]' '\n' | sort | uniq -c

2 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/coredns:v1.9.3-eksbuild.22

6 602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/kube-proxy:v1.26.15-minimal-eksbuild.24

...

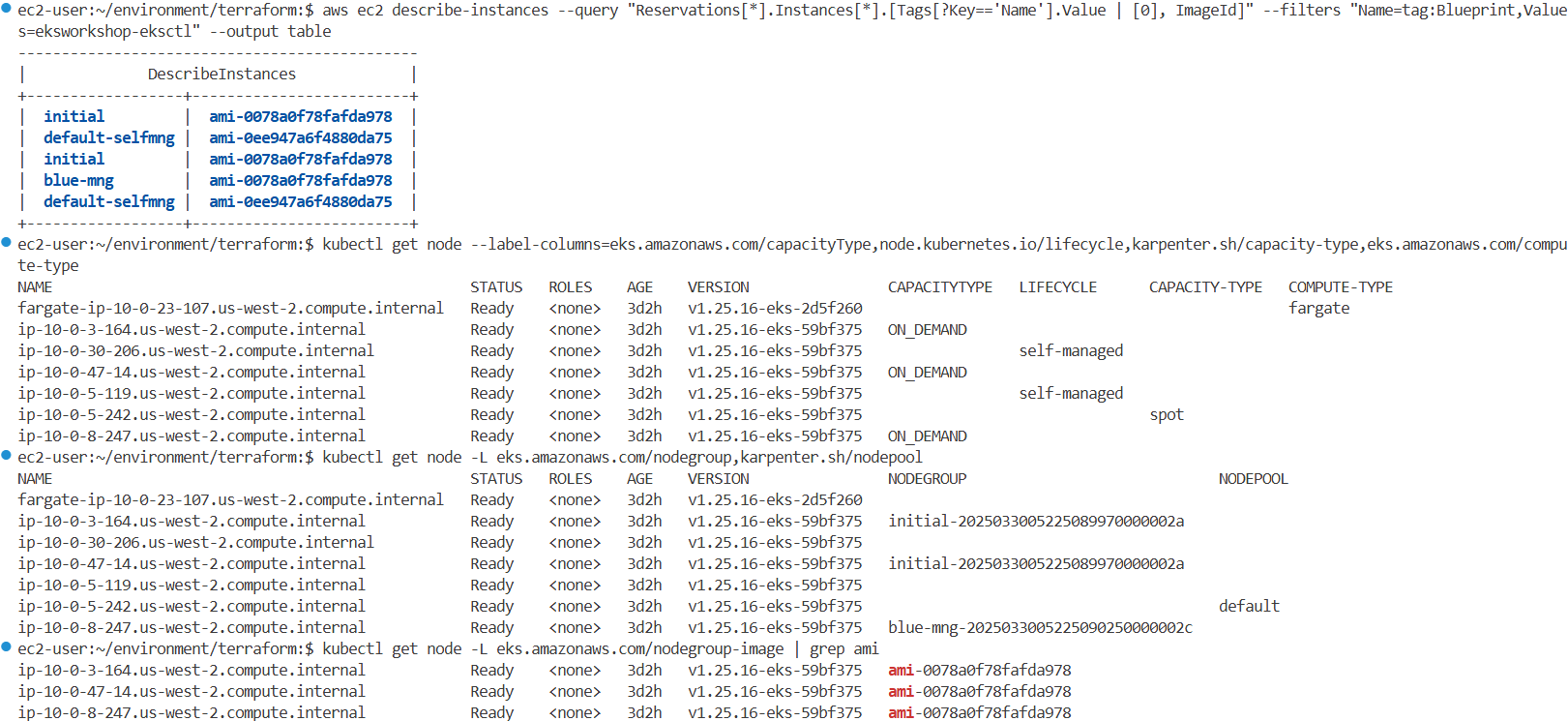

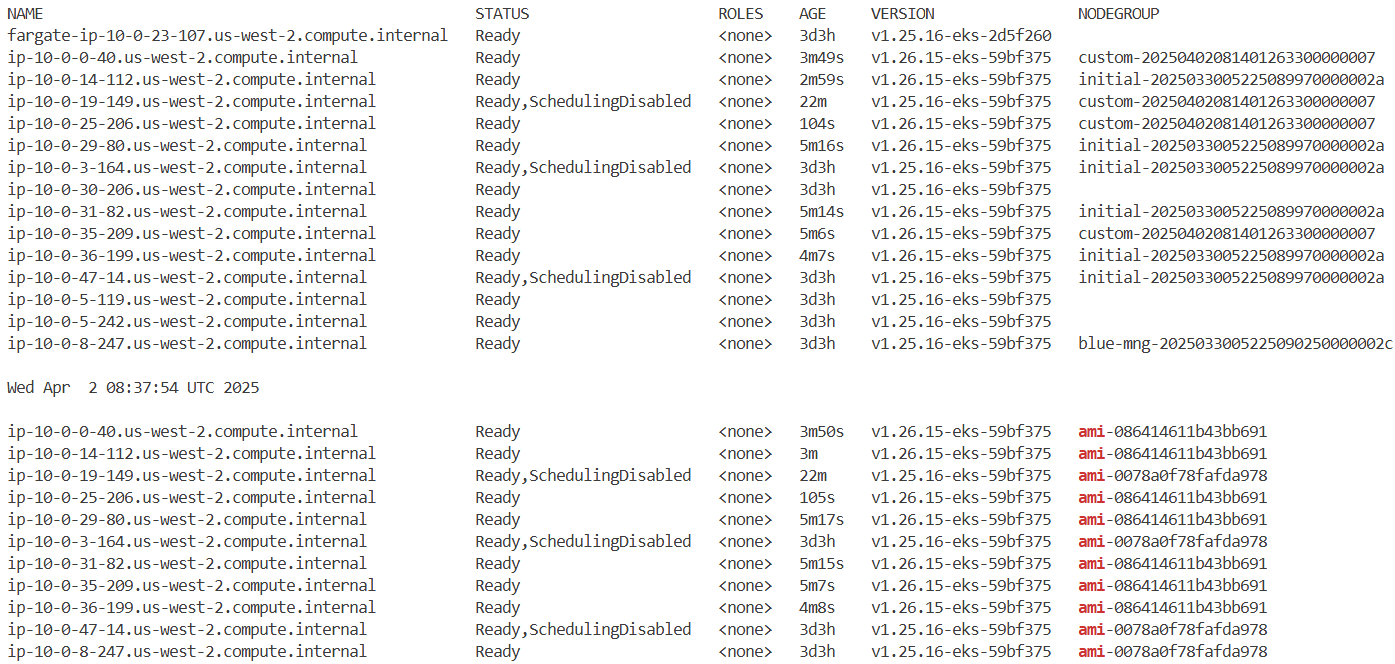

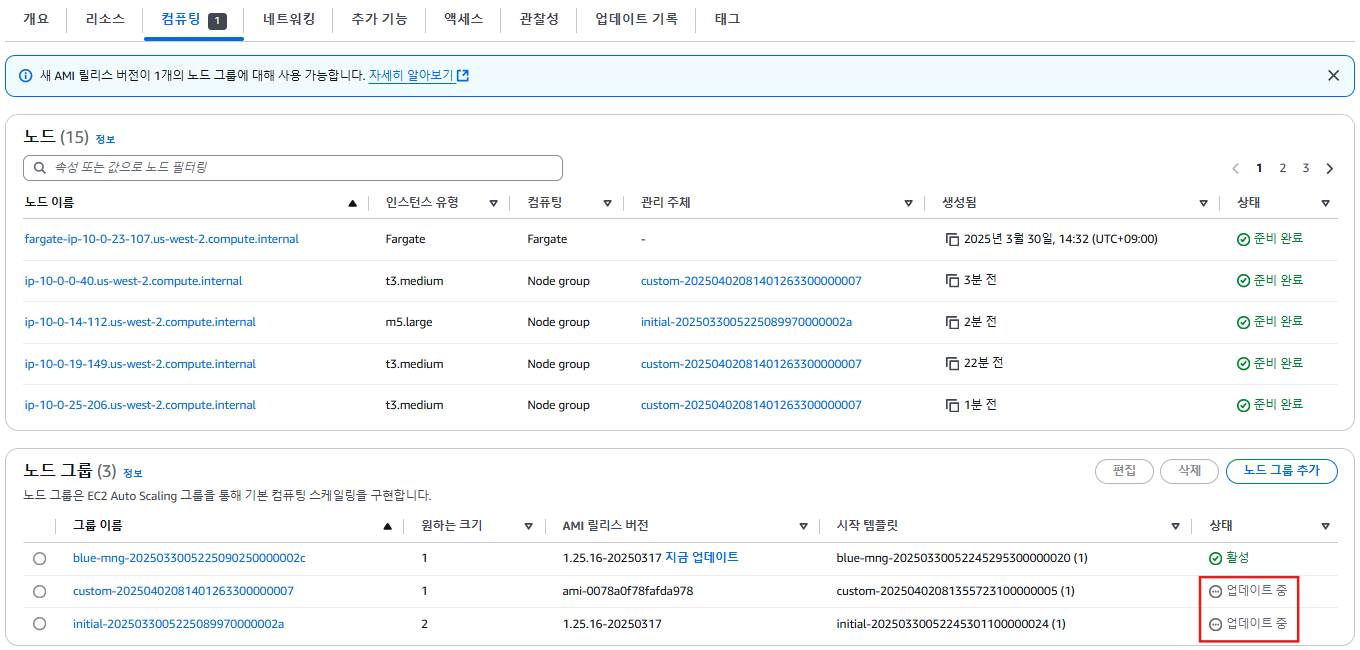

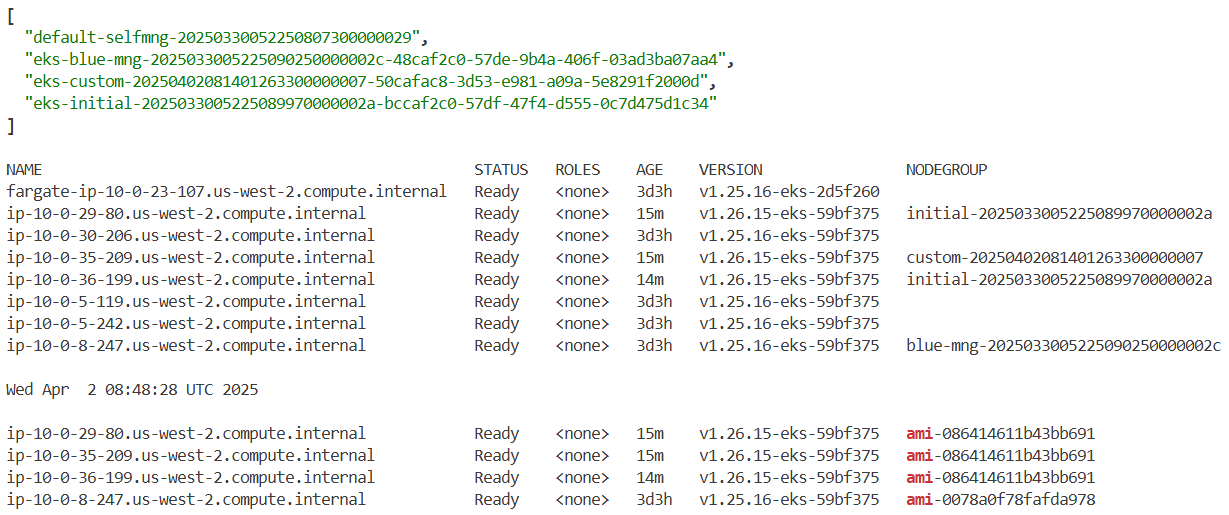

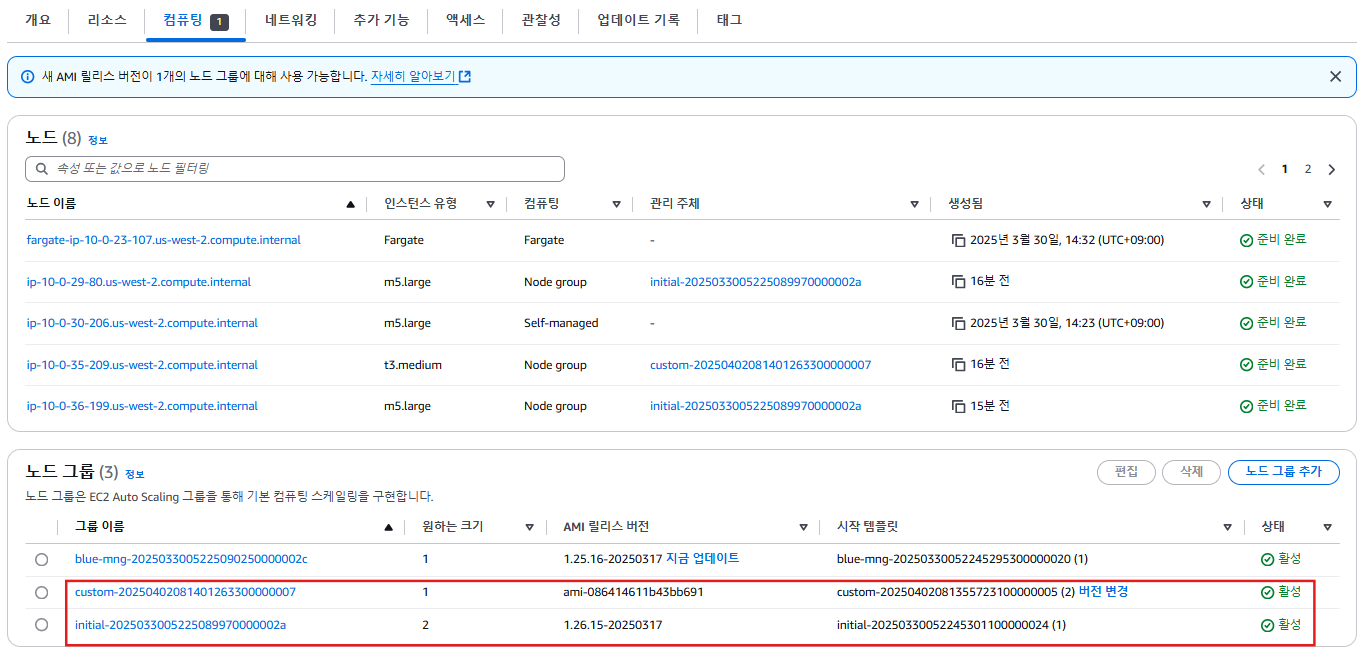

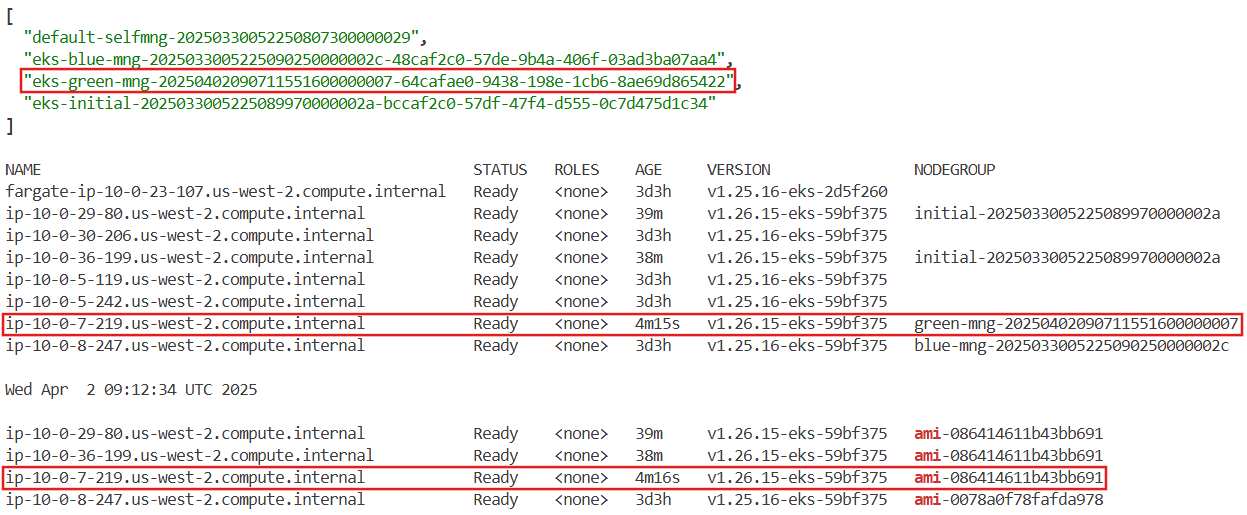

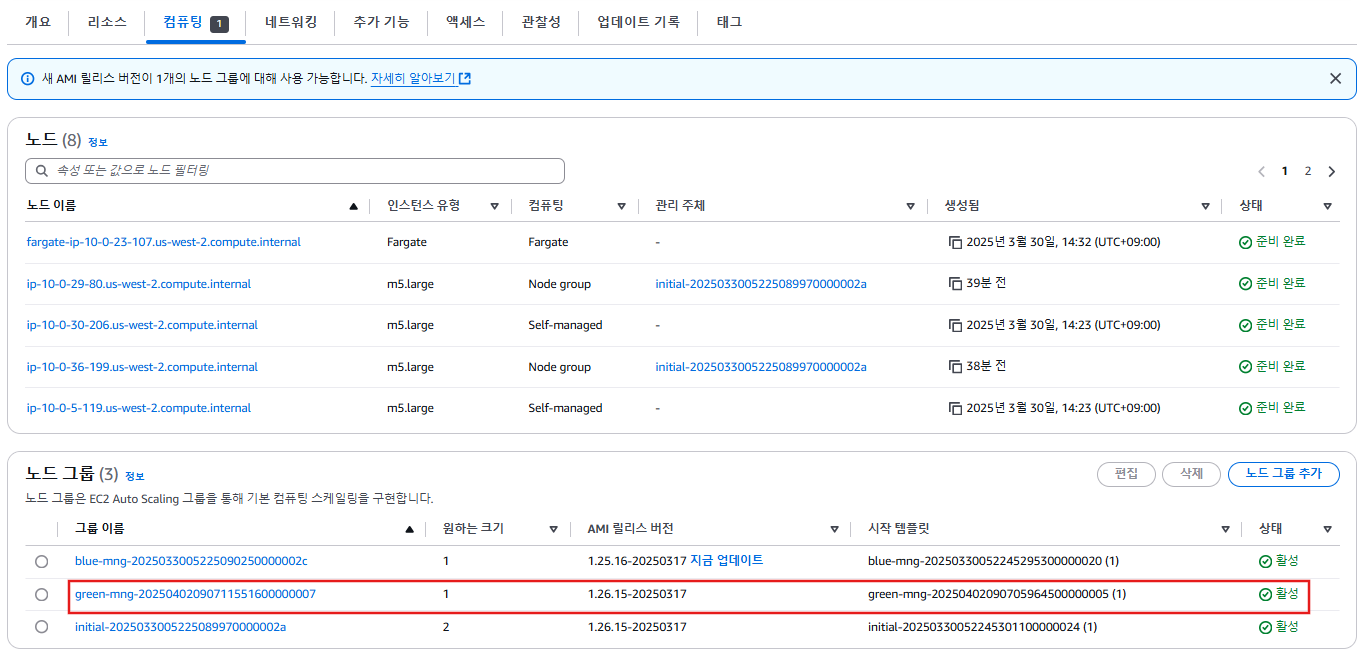

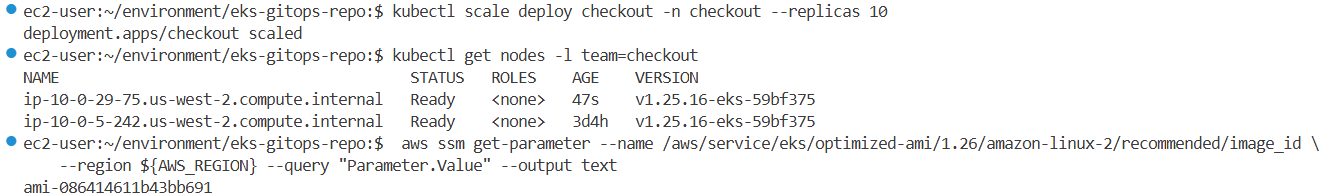

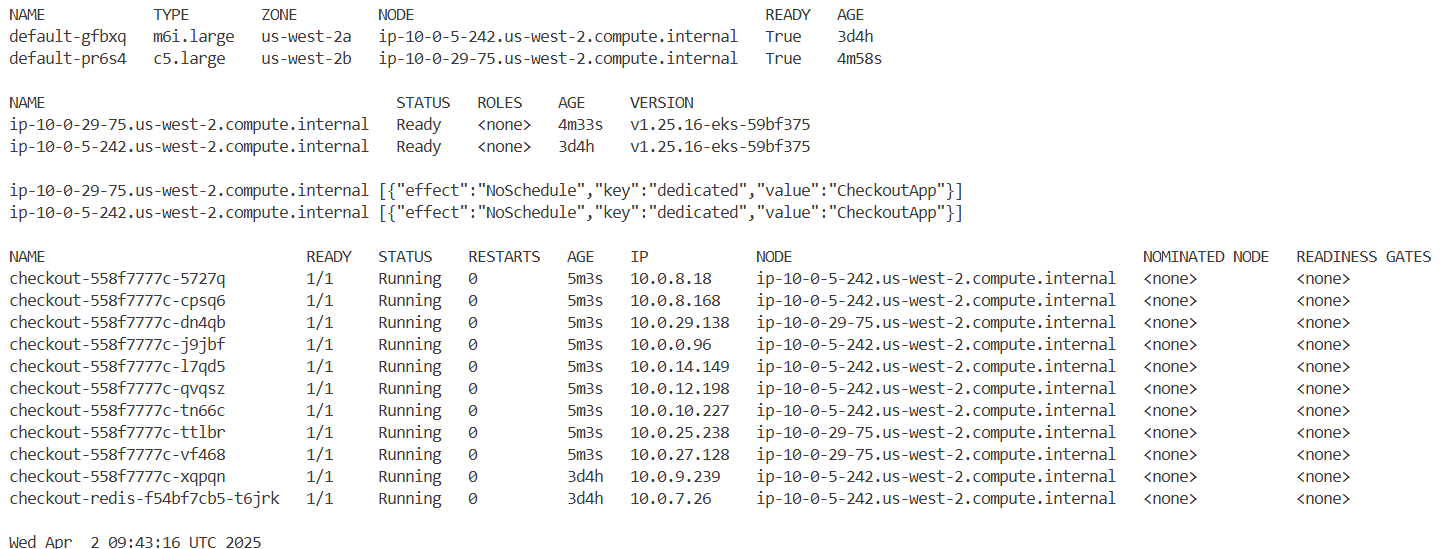

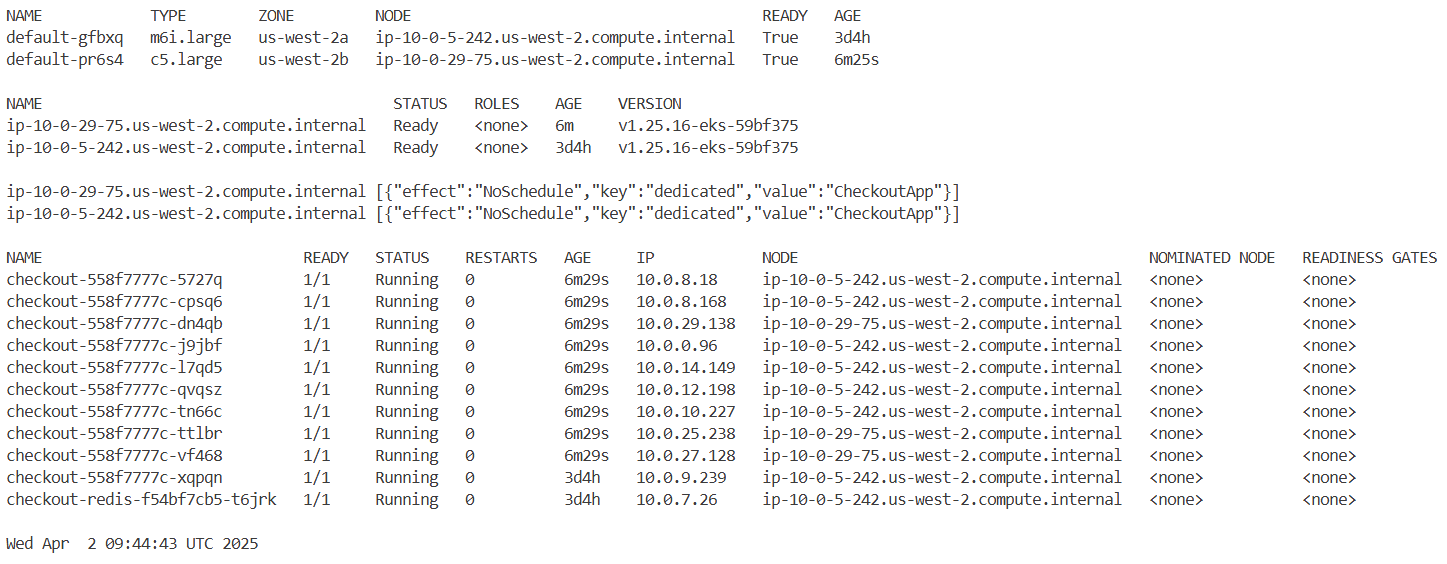

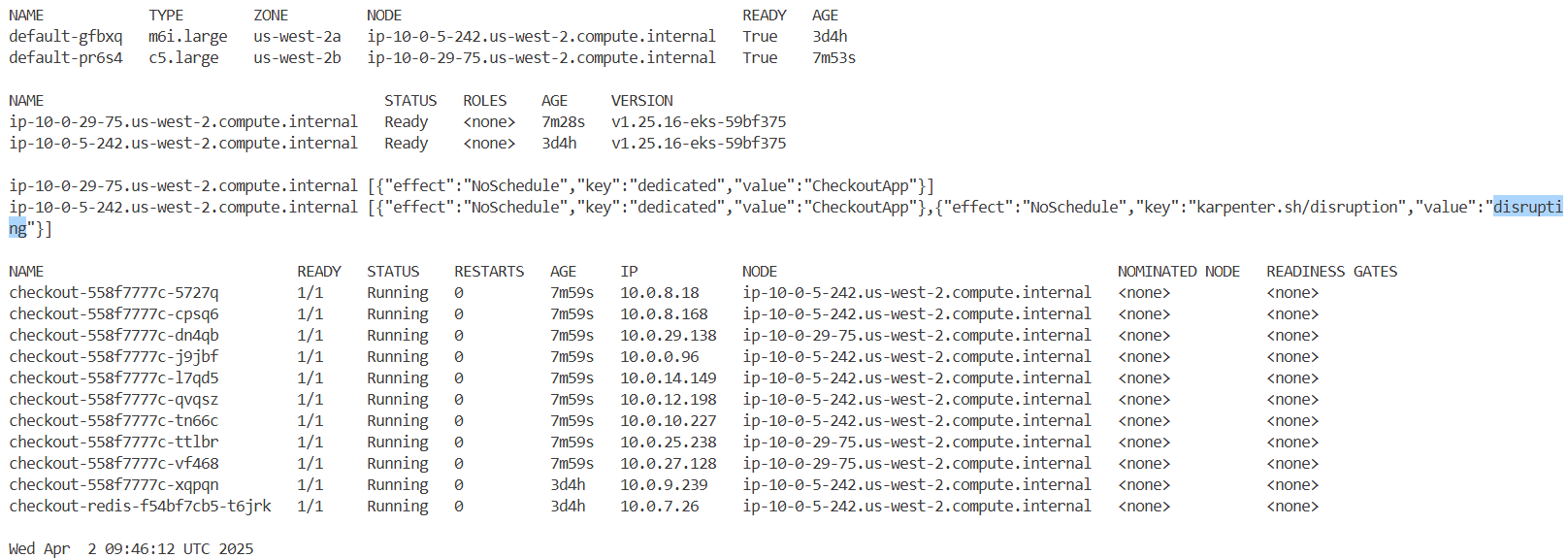

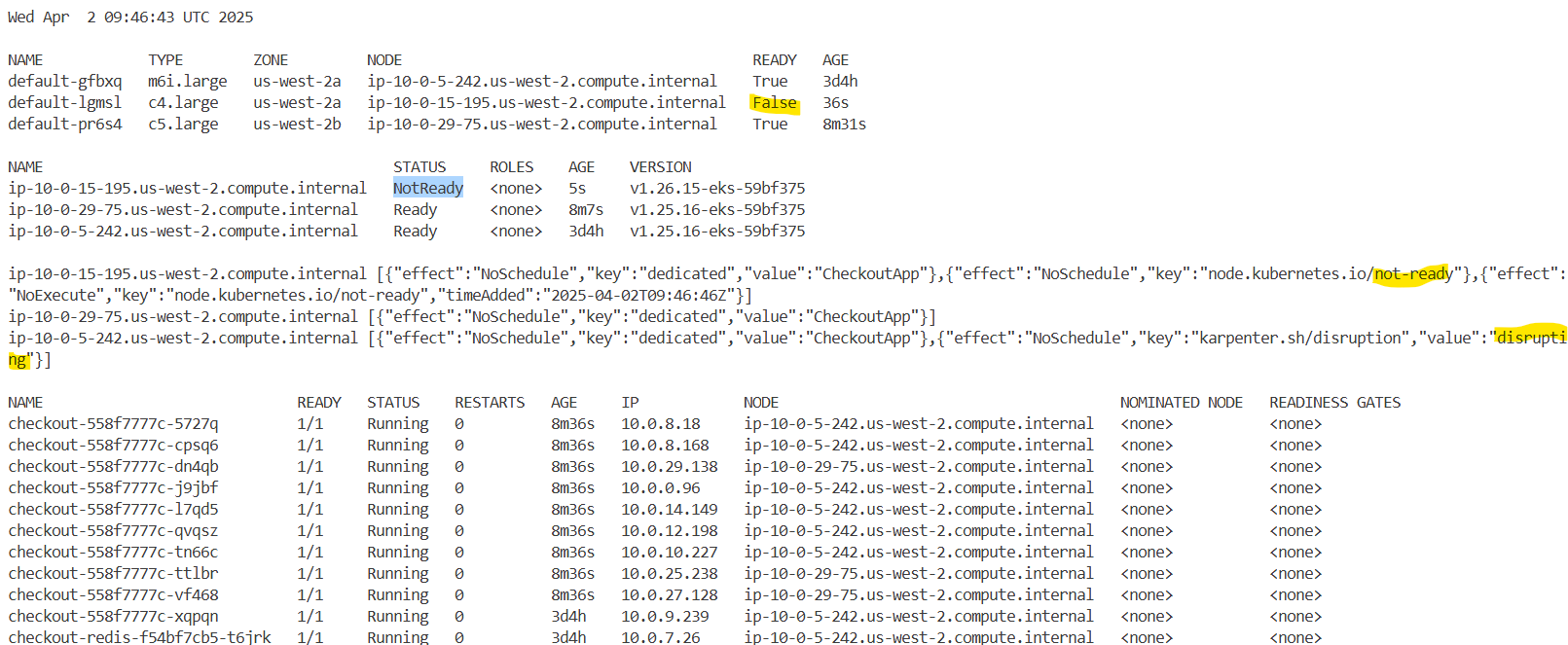

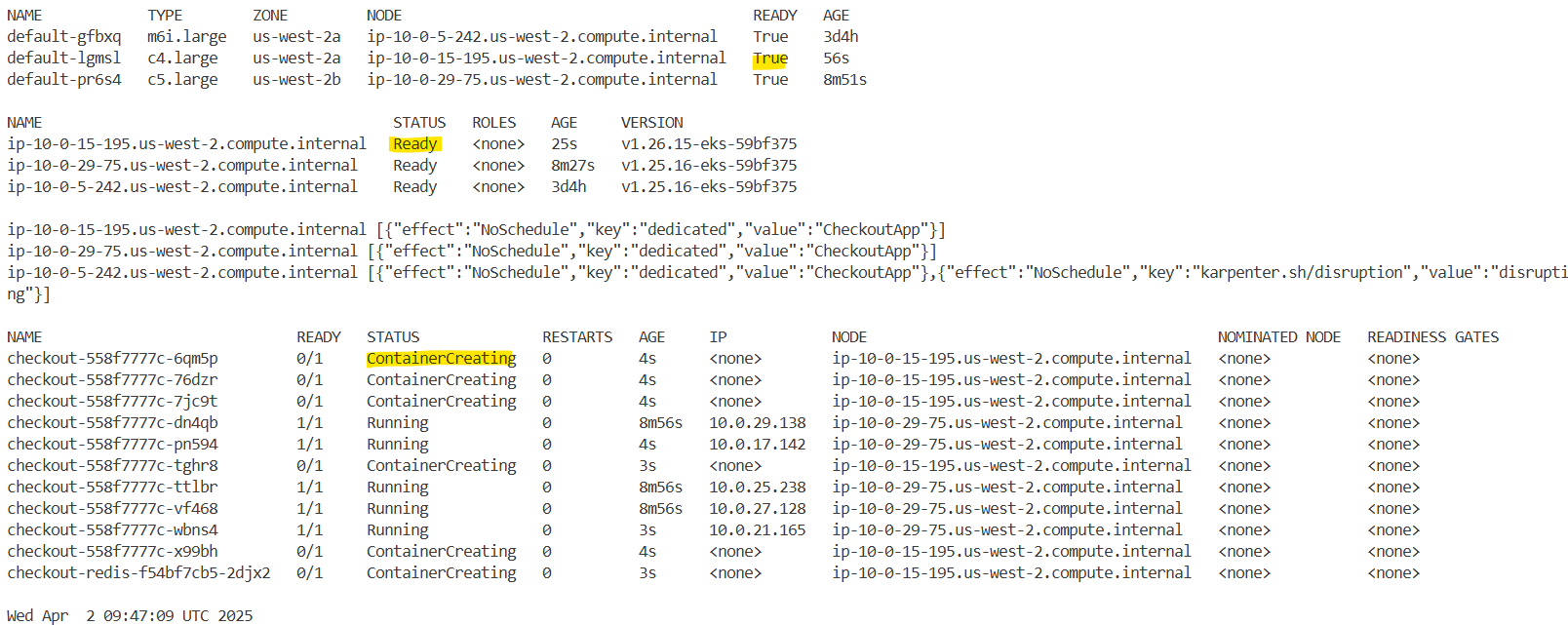

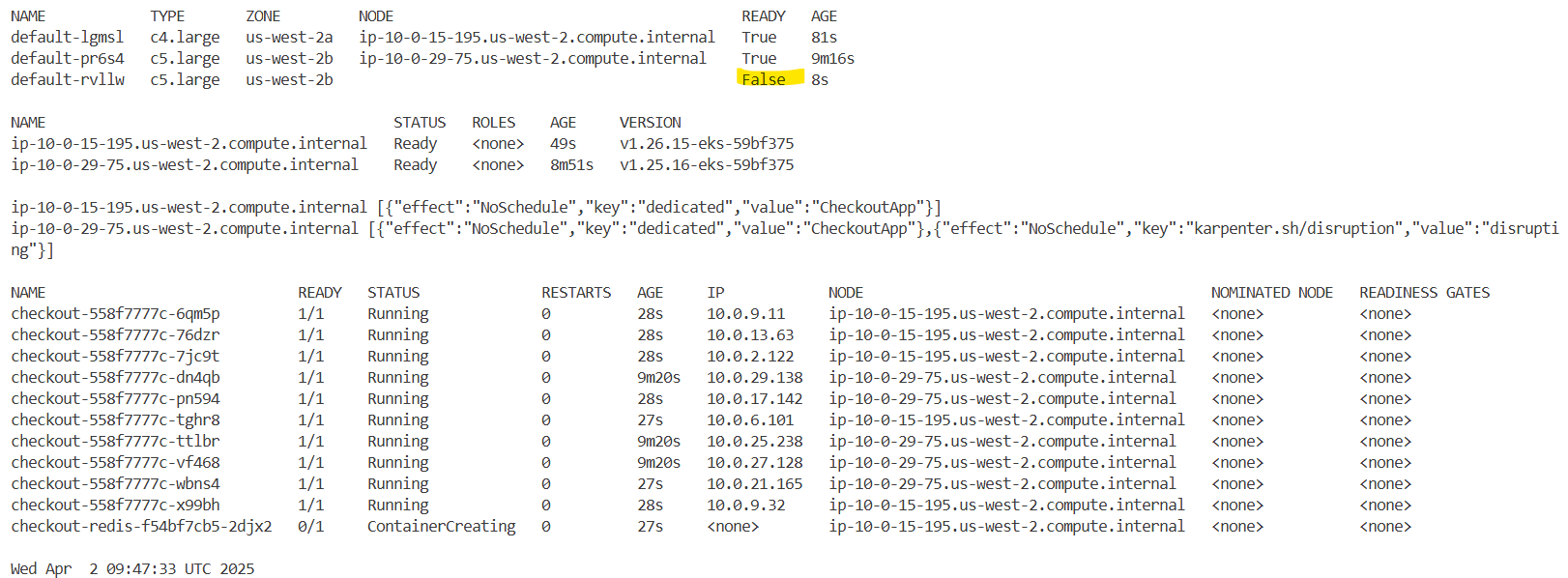

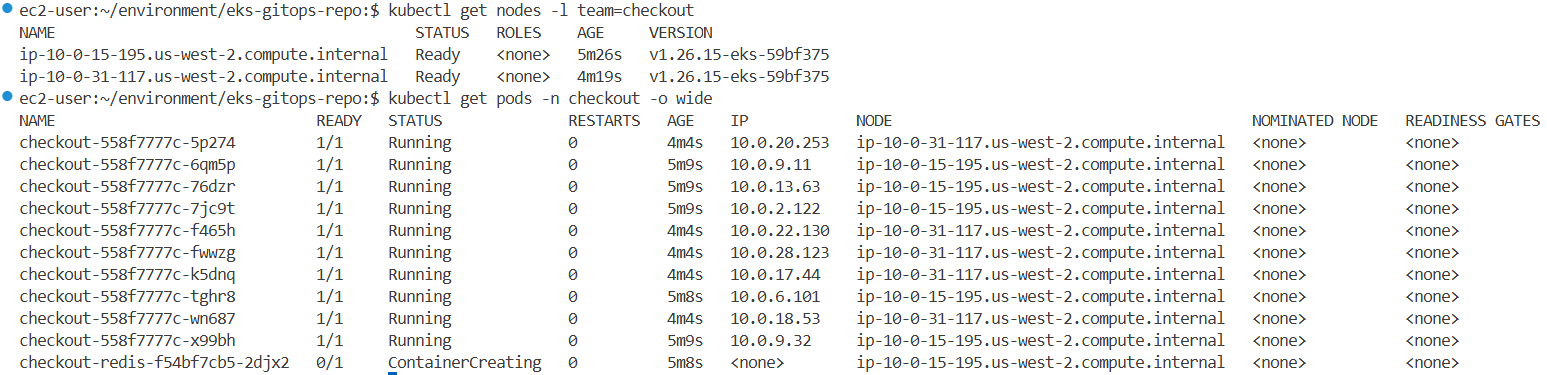

2.13 In-Place Managed Node Group Upgrade

# List the AMI ID of each EC2 instance that belongs to the cluster

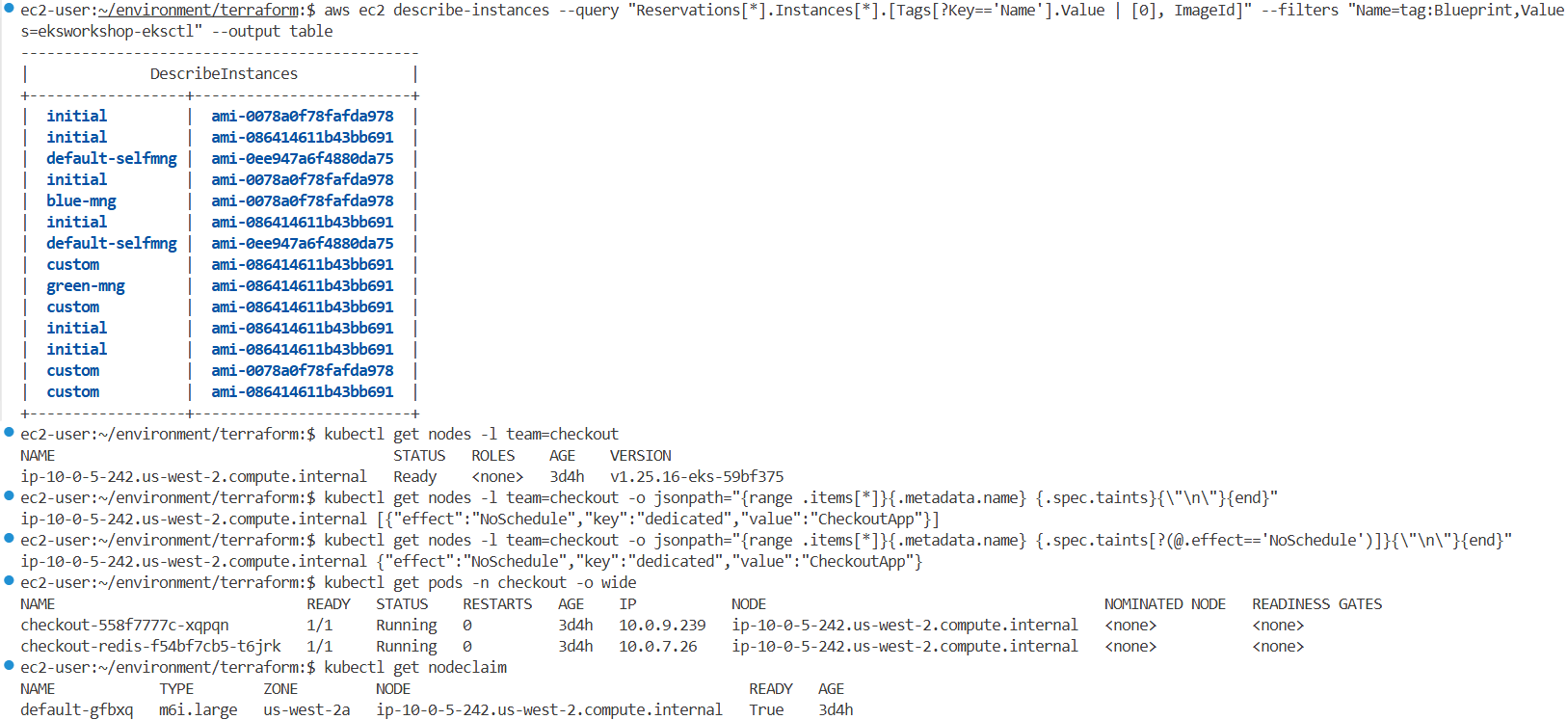

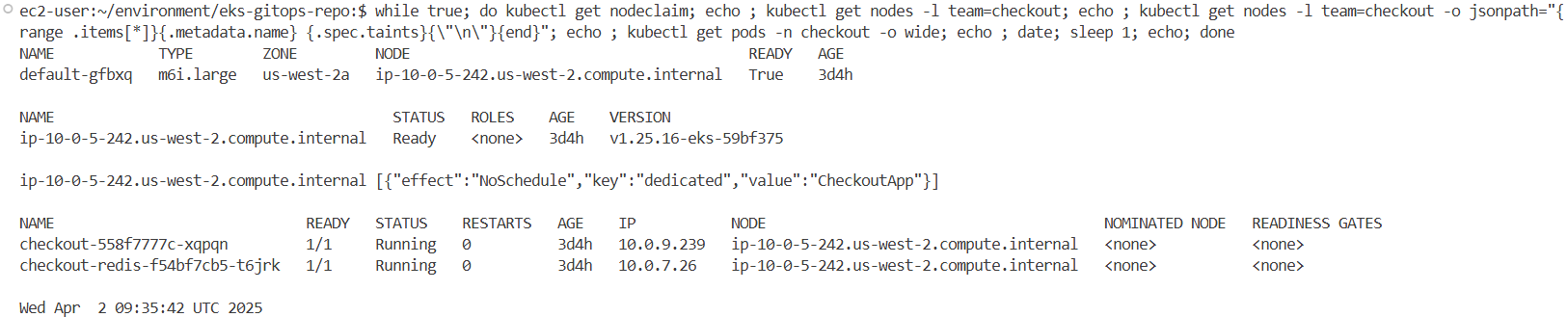

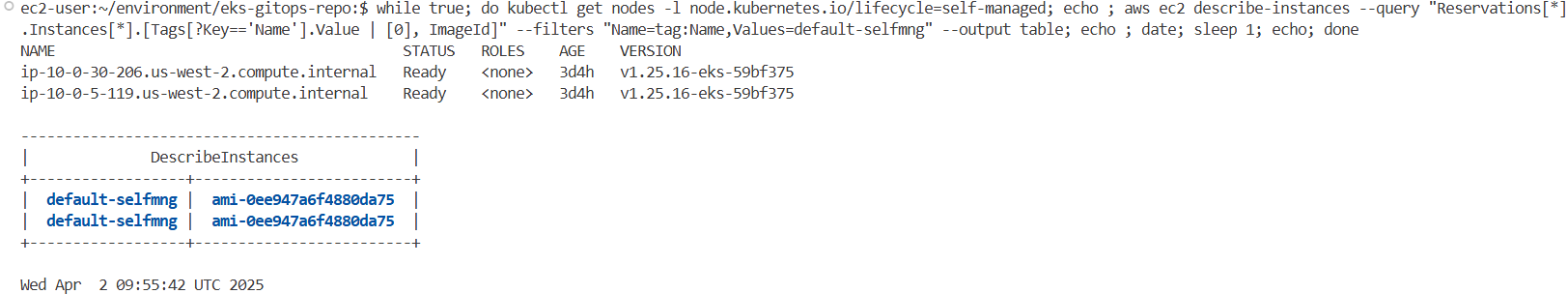

aws ec2 describe-instances --query "Reservations[*].Instances[*].[Tags[?Key=='Name'].Value | [0], ImageId]" --filters "Name=tag:Blueprint,Values=eksworkshop-eksctl" --output table

# Node capacity types and node group / node pool membership

kubectl get node --label-columns=eks.amazonaws.com/capacityType,node.kubernetes.io/lifecycle,karpenter.sh/capacity-type,eks.amazonaws.com/compute-type

NAME STATUS ROLES AGE VERSION CAPACITYTYPE LIFECYCLE CAPACITY-TYPE COMPUTE-TYPE

fargate-ip-10-0-41-147.us-west-2.compute.internal Ready <none> 12h v1.25.16-eks-2d5f260 fargate

ip-10-0-12-228.us-west-2.compute.internal Ready <none> 12h v1.25.16-eks-59bf375 ON_DEMAND

ip-10-0-26-119.us-west-2.compute.internal Ready <none> 12h v1.25.16-eks-59bf375 self-managed

ip-10-0-3-199.us-west-2.compute.internal Ready <none> 12h v1.25.16-eks-59bf375 ON_DEMAND

ip-10-0-39-95.us-west-2.compute.internal Ready <none> 12h v1.25.16-eks-59bf375 spot

ip-10-0-44-106.us-west-2.compute.internal Ready <none> 12h v1.25.16-eks-59bf375 ON_DEMAND

ip-10-0-6-184.us-west-2.compute.internal Ready <none> 12h v1.25.16-eks-59bf375 self-managed

kubectl get node -L eks.amazonaws.com/nodegroup,karpenter.sh/nodepool

NAME STATUS ROLES AGE VERSION NODEGROUP NODEPOOL

fargate-ip-10-0-41-147.us-west-2.compute.internal Ready <none> 12h v1.25.16-eks-2d5f260

ip-10-0-12-228.us-west-2.compute.internal Ready <none> 12h v1.25.16-eks-59bf375 initial-2025032502302076080000002c

ip-10-0-26-119.us-west-2.compute.internal Ready <none> 12h v1.25.16-eks-59bf375

ip-10-0-3-199.us-west-2.compute.internal Ready <none> 12h v1.25.16-eks-59bf375 blue-mng-20250325023020754500000029

ip-10-0-39-95.us-west-2.compute.internal Ready <none> 12h v1.25.16-eks-59bf375 default

ip-10-0-44-106.us-west-2.compute.internal Ready <none> 12h v1.25.16-eks-59bf375 initial-2025032502302076080000002c

ip-10-0-6-184.us-west-2.compute.internal Ready <none> 12h v1.25.16-eks-59bf375
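With the control plane at 1.26, every node above still reports a v1.25.16 kubelet, which is the skew that the Cluster Insights kubelet check flags. A minimal sketch for listing the lagging nodes from `kubectl get node --no-headers` output follows. The function name `nodes_behind` and passing the control plane minor version as an argument are illustrative assumptions.

```shell
# nodes_behind CONTROL_PLANE_MINOR
# Reads `kubectl get node --no-headers` output on stdin
# (columns: NAME STATUS ROLES AGE VERSION ...) and prints the name and
# version of every node whose kubelet minor version is lower.
nodes_behind() {
  awk -v cp="$1" '{
    split($5, v, ".")                 # "v1.25.16-eks-59bf375" -> v[2] = "25"
    if (v[2] + 0 < cp + 0) print $1, $5
  }'
}

# Usage (assumes kubectl access):
# kubectl get node --no-headers | nodes_behind 26
```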

# AMI used by the managed node group nodes

kubectl get node -L eks.amazonaws.com/nodegroup-image | grep ami

ip-10-0-13-217.us-west-2.compute.internal Ready <none> 26h v1.25.16-eks-59bf375 ami-0078a0f78fafda978

ip-10-0-3-168.us-west-2.compute.internal Ready <none> 26h v1.25.16-eks-59bf375 ami-0078a0f78fafda978

ip-10-0-42-84.us-west-2.compute.internal Ready <none> 26h v1.25.16-eks-59bf375 ami-0078a0f78fafda978

# Recommended EKS-optimized Amazon Linux 2 AMI for Kubernetes 1.25

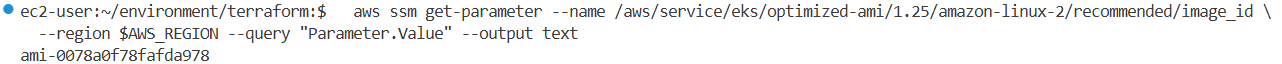

aws ssm get-parameter --name /aws/service/eks/optimized-ami/1.25/amazon-linux-2/recommended/image_id \

--region $AWS_REGION --query "Parameter.Value" --output text

ami-0078a0f78fafda978
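The SSM parameter path embeds the Kubernetes version, so the same lookup works for the target version. As a small sketch, the path can be built from a version argument. The helper name `eks_al2_ami_param` is hypothetical, and it only covers the Amazon Linux 2 path used above.

```shell
# eks_al2_ami_param K8S_VERSION
# Prints the SSM parameter name that holds the recommended
# EKS-optimized Amazon Linux 2 AMI ID for the given Kubernetes version.
eks_al2_ami_param() {
  printf '/aws/service/eks/optimized-ami/%s/amazon-linux-2/recommended/image_id\n' "$1"
}

# Usage (assumes AWS credentials and $AWS_REGION, as in the command above):
# aws ssm get-parameter --name "$(eks_al2_ami_param 1.26)" \
#   --region "$AWS_REGION" --query "Parameter.Value" --output text
```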

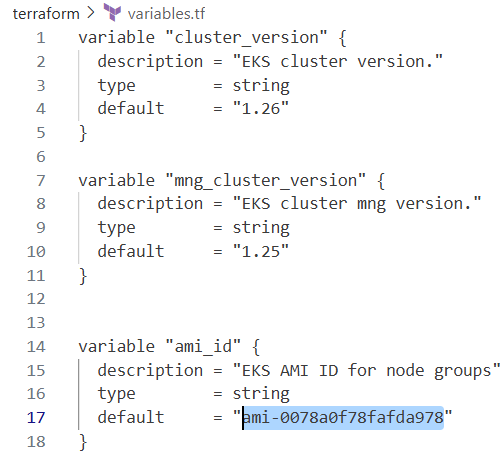

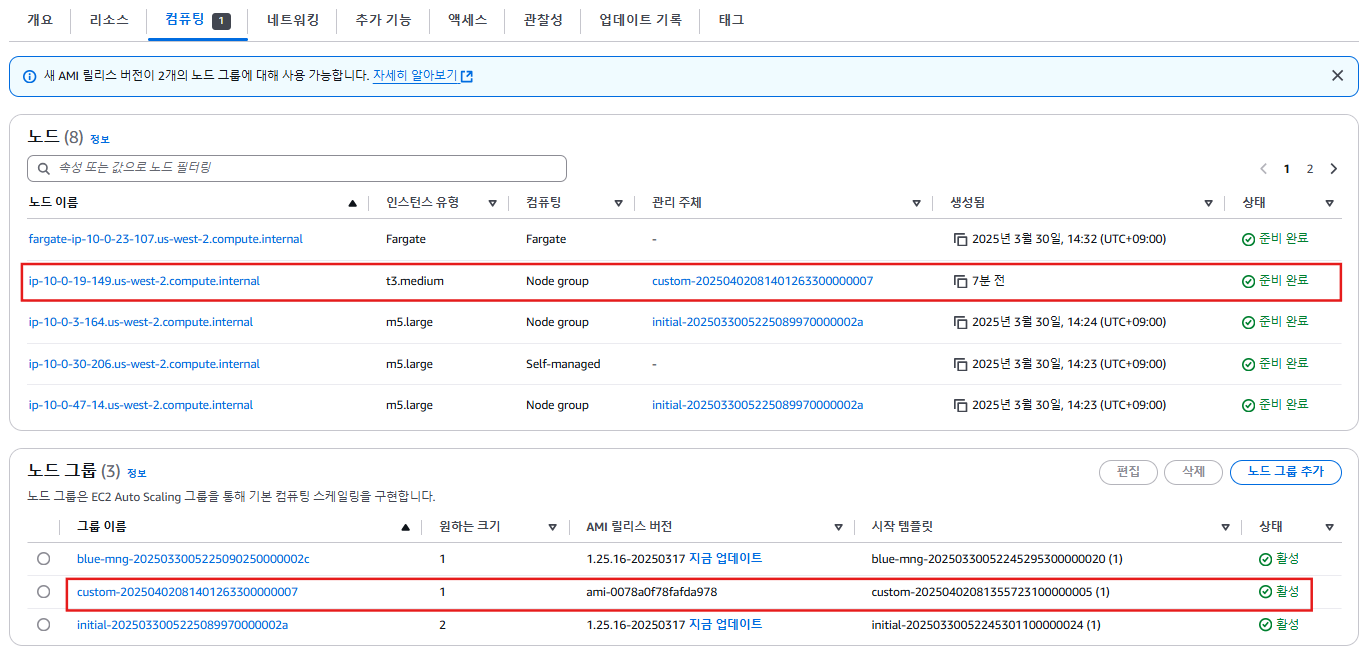

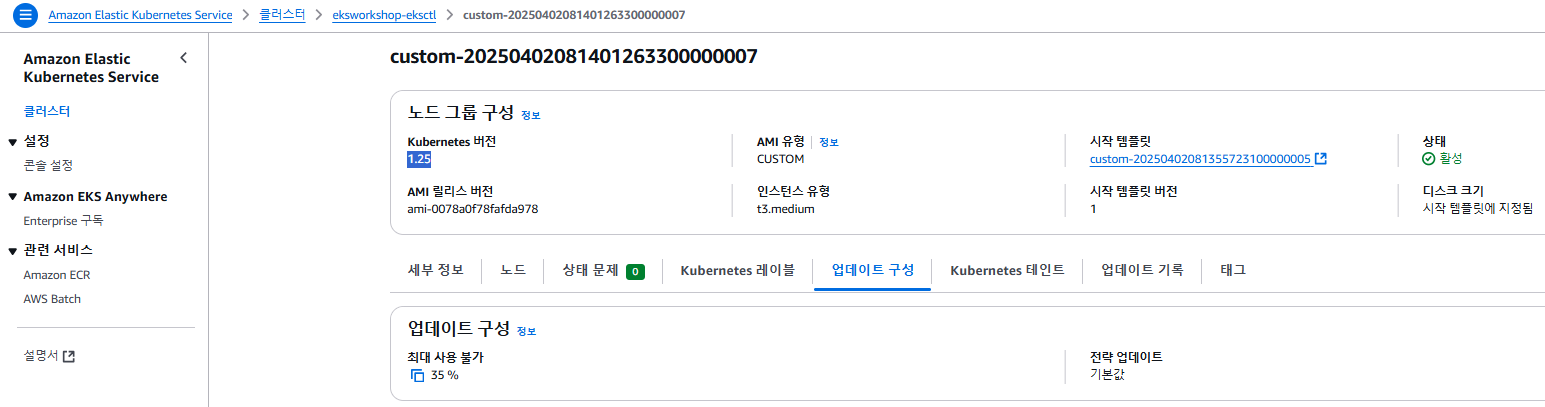

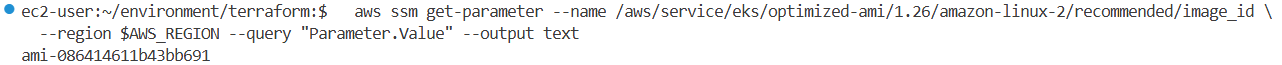

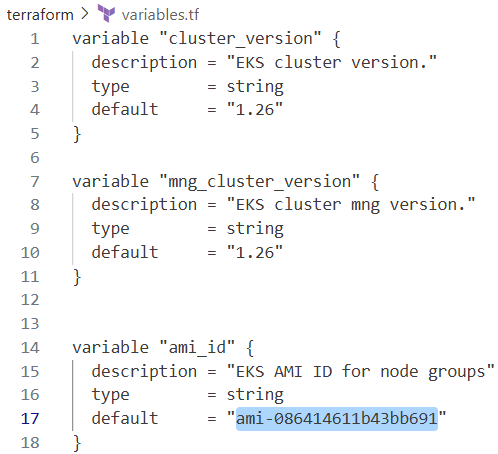

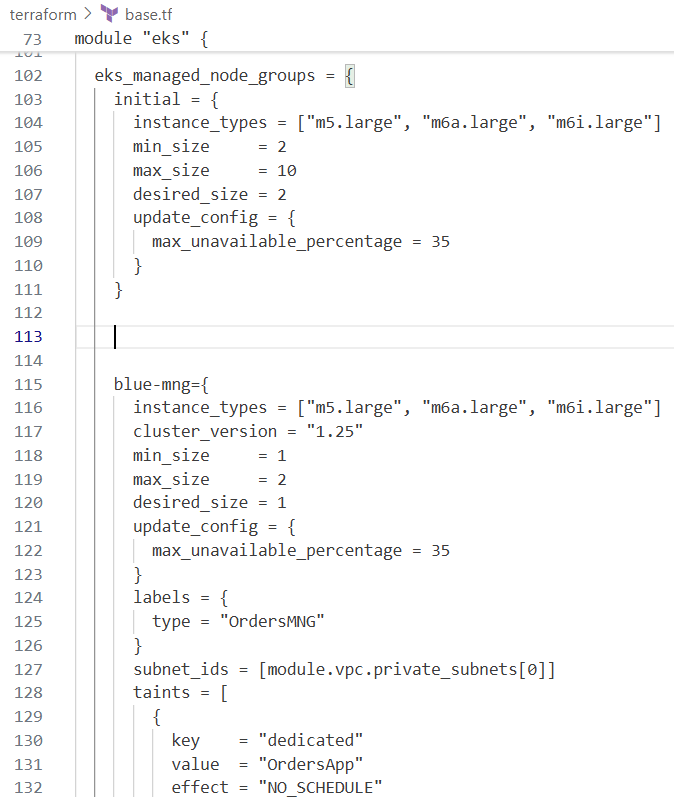

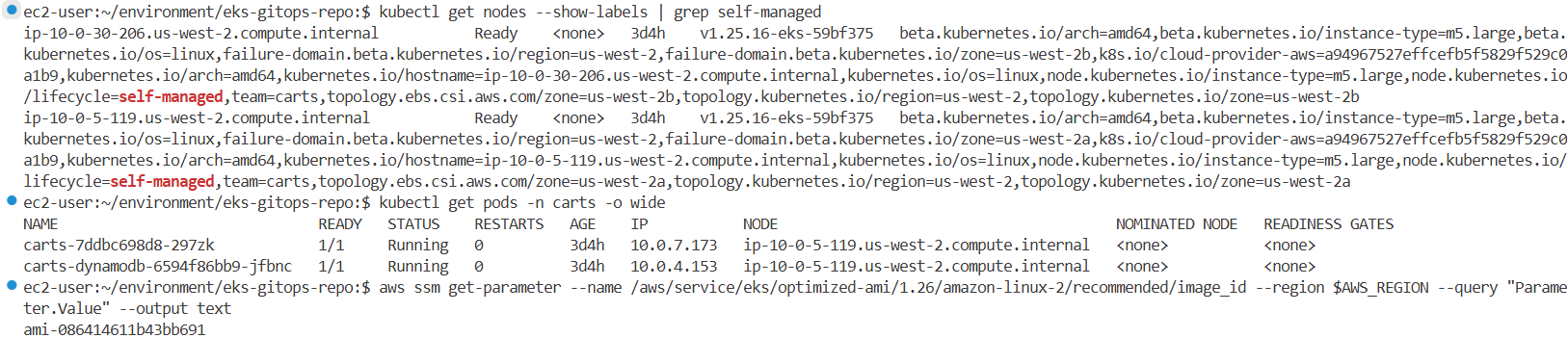

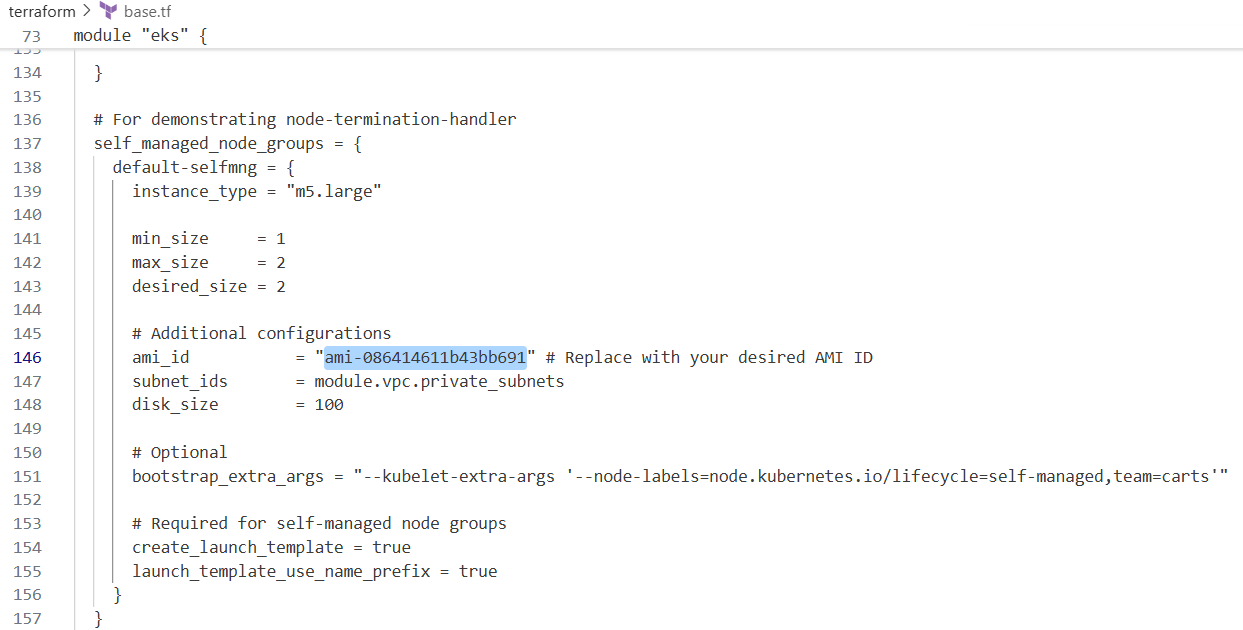

Update ami_id in variables.tf

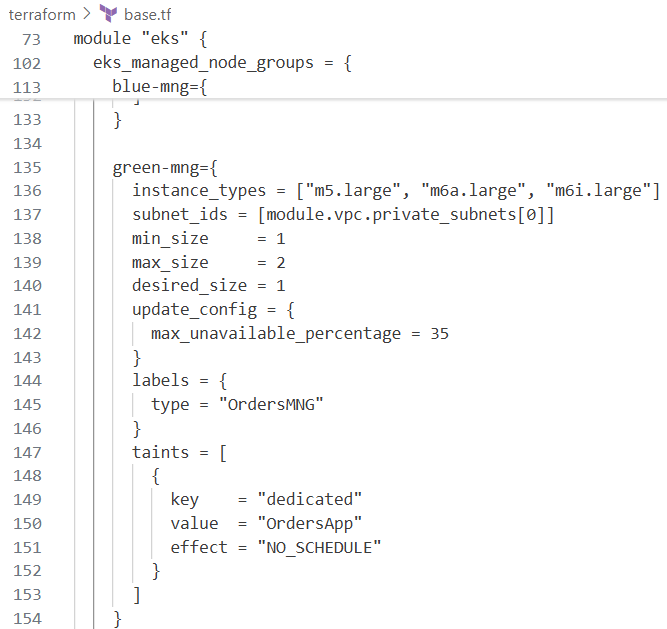

Add the following code to base.tf

custom = {

instance_types = ["t3.medium"]

min_size = 1

max_size = 2

desired_size = 1

update_config = {

max_unavailable_percentage = 35

}

ami_id = try(var.ami_id)

enable_bootstrap_user_data = true

}

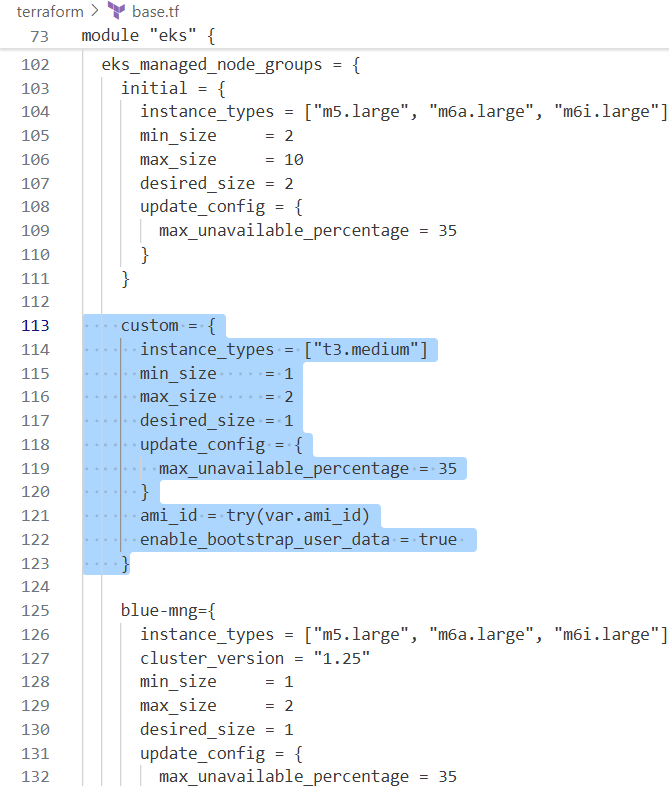

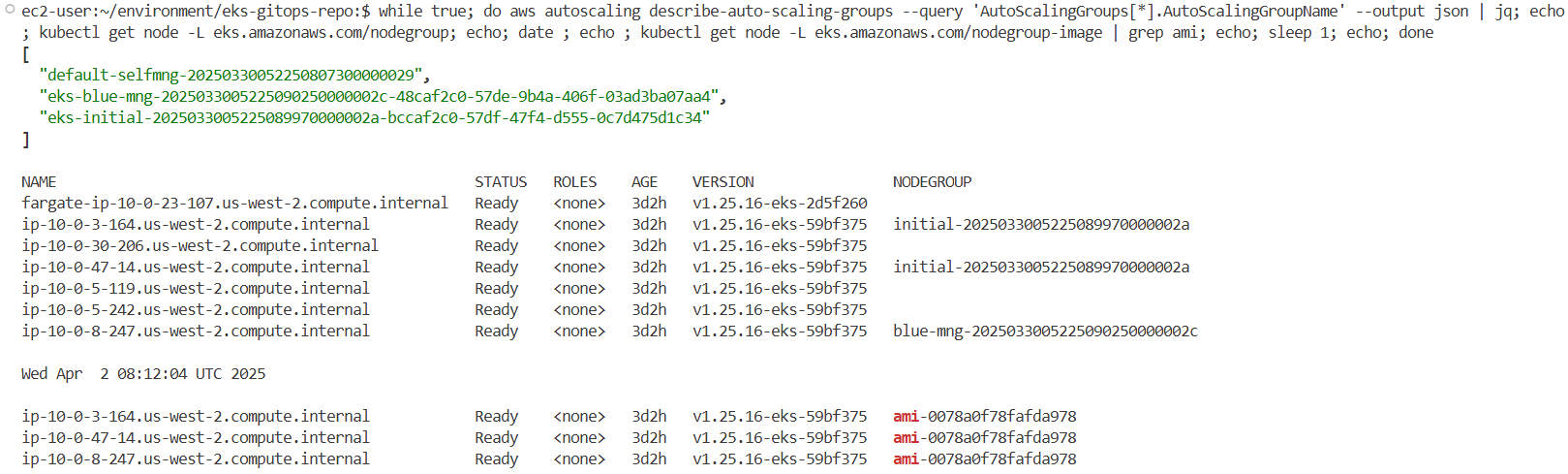

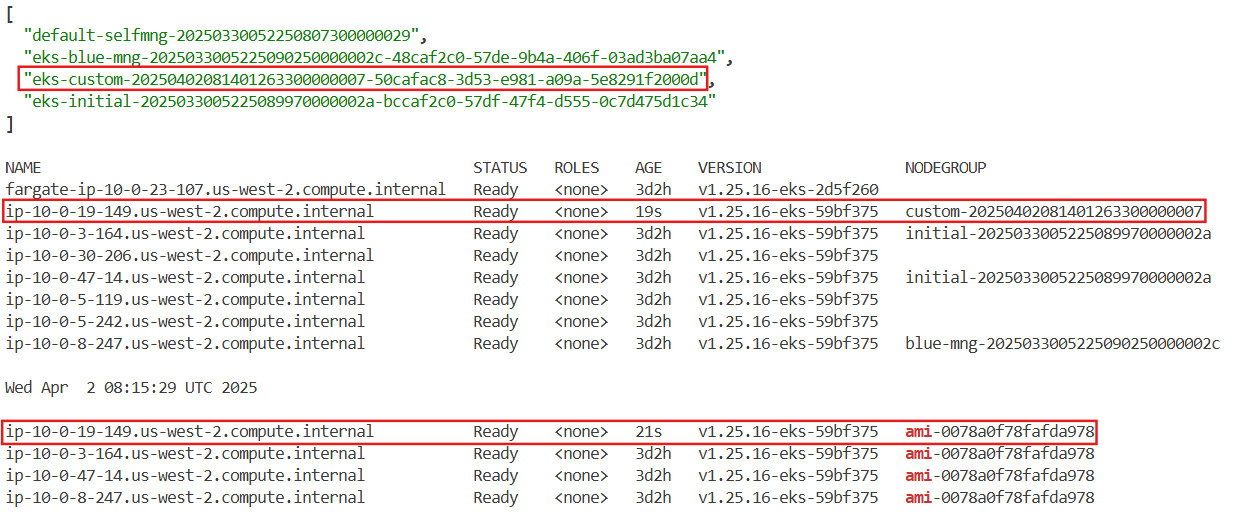

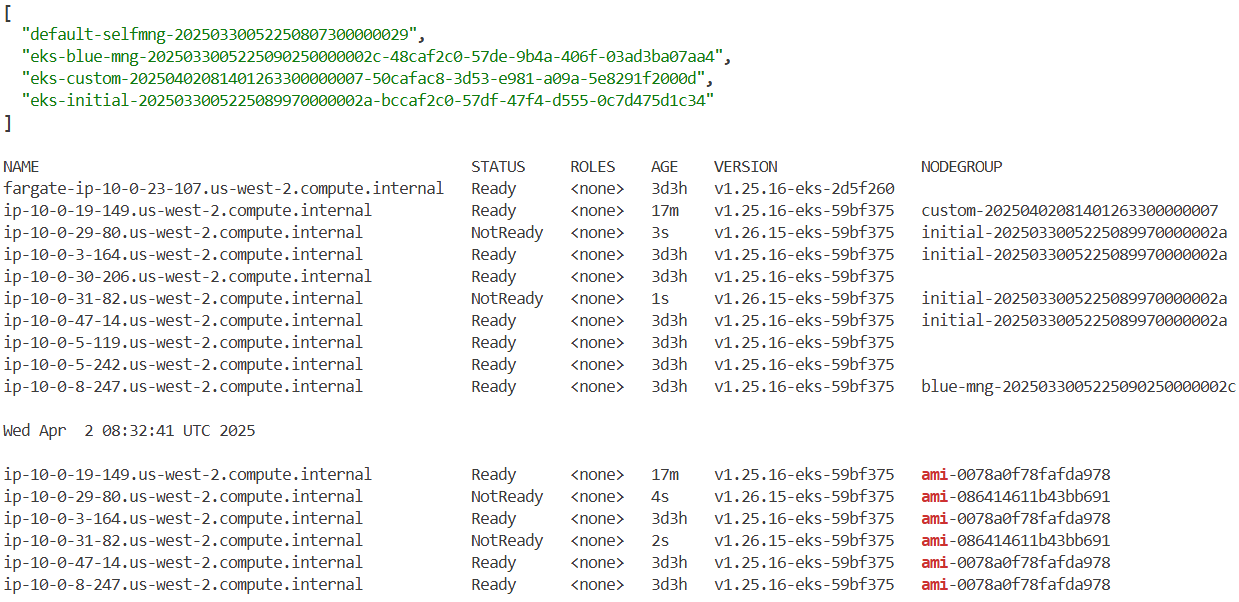

# Monitoring

aws autoscaling describe-auto-scaling-groups --query 'AutoScalingGroups[*].AutoScalingGroupName' --output json | jq

kubectl get node -L eks.amazonaws.com/nodegroup

kubectl get node -L eks.amazonaws.com/nodegroup-image | grep ami

while true; do aws autoscaling describe-auto-scaling-groups --query 'AutoScalingGroups[*].AutoScalingGroupName' --output json | jq; echo ; kubectl get node -L eks.amazonaws.com/nodegroup; echo; date ; echo ; kubectl get node -L eks.amazonaws.com/nodegroup-image | grep ami; echo; sleep 1; echo; done

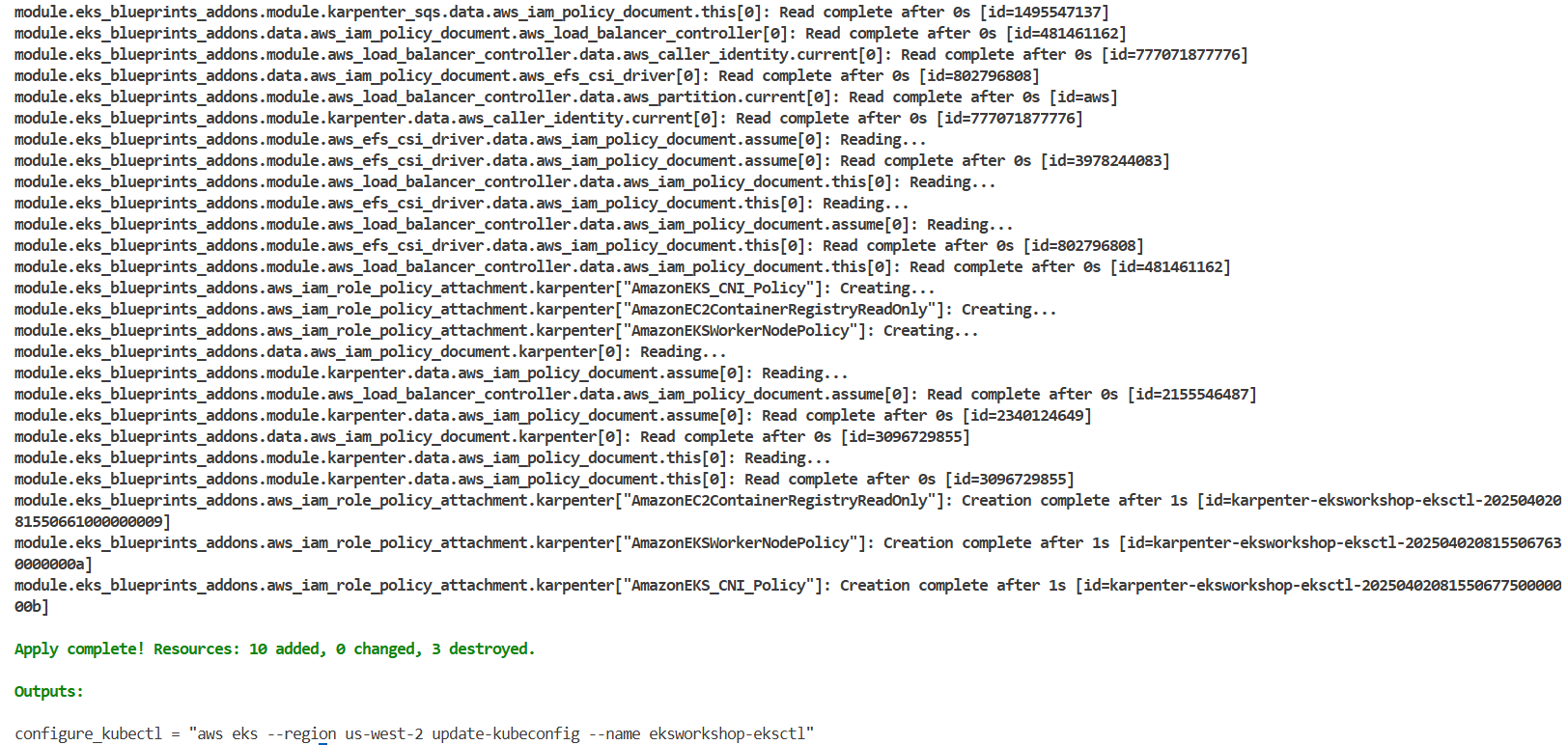

terraform apply -auto-approve

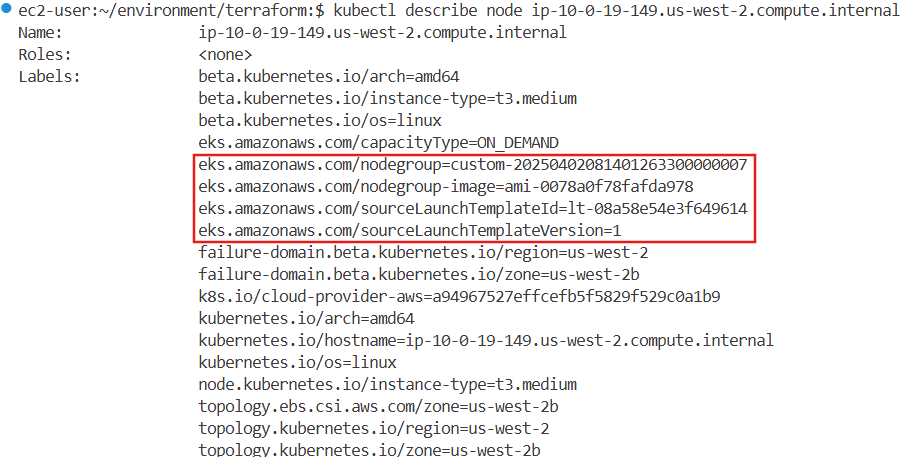

kubectl describe node ip-10-0-44-132.us-west-2.compute.internal

...

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/instance-type=t3.medium

beta.kubernetes.io/os=linux

eks.amazonaws.com/capacityType=ON_DEMAND

eks.amazonaws.com/nodegroup=custom-20250325154855579500000007

eks.amazonaws.com/nodegroup-image=ami-0078a0f78fafda978

eks.amazonaws.com/sourceLaunchTemplateId=lt-0db2ddc3fdfb0afb1

eks.amazonaws.com/sourceLaunchTemplateVersion=1

...

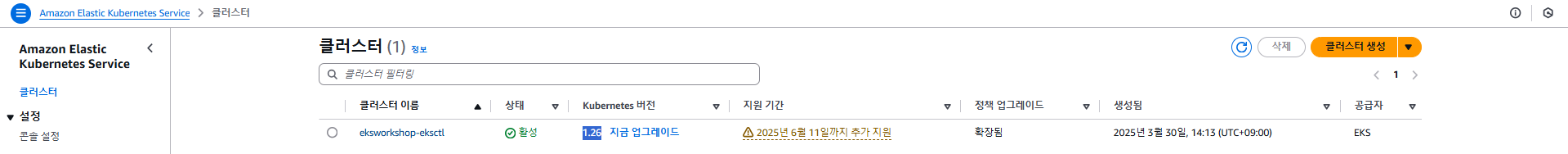

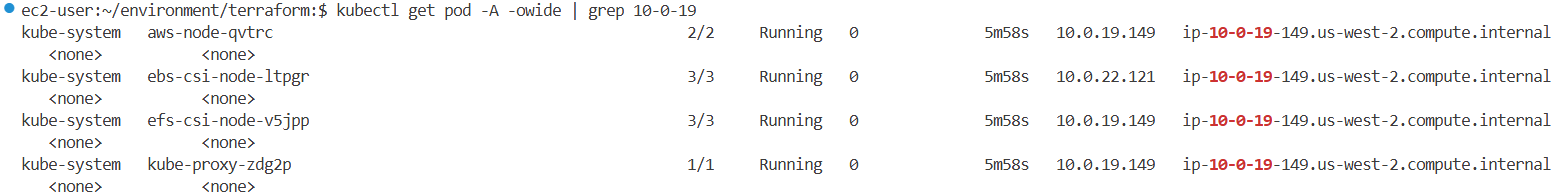

kubectl get pod -A -owide | grep 10-0-44-132