Ignite Cluster persistence일 때, 서버 하나 다운 시 cache partition has been lostPart Exception 해결 방안!

5

Ignite 운영

목록 보기

7/7

1. Version

💬

- OS : CentOS Linux release 7.8.2003(Core)

- Ignite : 2.11.0

- Docker : 19.03.13

2. 문제원인 및 방안

❓ 원인

- persistence일 시 모든 데이터 영역이 디스크에 데이터를 유지하도록 구성이 되어 있는데 묶여있던 노드중 하나가 죽으면 파티션 손실이 일어난다.

💡 해결방안

- 실패한 노드를 포함하여

모든 노드를시작하고클러스터를 활성화하면 된다.

3. 문제 발생 전 완전한 상태 확인

📜 cluster 구성 확인

bash-4.4# ./control.sh --baseline

Control utility [ver. 2.11.0#20210911-sha1:8f3f07d3]

2021 Copyright(C) Apache Software Foundation

User: root

Time: 2022-01-04T01:37:45.958

Command [BASELINE] started

Arguments: --baseline

--------------------------------------------------------------------------------

Cluster state: active

Current topology version: 3

Baseline auto adjustment disabled: softTimeout=300000

Current topology version: 3 (Coordinator: ConsistentId=510933a8-51bb-4816-9405-084c2b142037, Address=172.39.0.1, Order=1)

Baseline nodes:

ConsistentId=node1, Address=172.39.0.1, State=ONLINE, Order=1

ConsistentId=node2, Address=172.17.0.1, State=ONLINE, Order=3

ConsistentId=node3, Address=172.17.0.1, State=ONLINE, Order=2

--------------------------------------------------------------------------------

Number of baseline nodes: 3

Other nodes not found.

Command [BASELINE] finished with code: 0

Control utility has completed execution at: 2022-01-04T01:37:47.063

Execution time: 1105 ms

📜 메모리 로그 확인

[04:28:17,766][INFO][grid-timeout-worker-#54][IgniteKernal]

Metrics for local node (to disable set 'metricsLogFrequency' to 0)

^-- Node [id=888b30be, uptime=01:05:00.342]

^-- Cluster [hosts=3, CPUs=56, servers=3, clients=0, topVer=5, minorTopVer=1]

^-- Network [addrs=[0:0:0:0:0:0:0:1%lo, node1], discoPort=34447, commPort=47100]

^-- CPU [CPUs=24, curLoad=0.1%, avgLoad=0.07%, GC=0%]

^-- Heap [used=69MB, free=92.9%, comm=981MB]

^-- Off-heap memory [used=0MB, free=100%, allocated=51738MB]

^-- Page memory [pages=35]

^-- sysMemPlc region [type=internal, persistence=true, lazyAlloc=false,

... initCfg=40MB, maxCfg=100MB, usedRam=0MB, freeRam=99.98%, allocRam=99MB, allocTotal=0MB]

^-- default region [type=default, persistence=true, lazyAlloc=true,

... initCfg=256MB, maxCfg=51538MB, usedRam=0MB, freeRam=100%, allocRam=51538MB, allocTotal=0MB]

^-- metastoreMemPlc region [type=internal, persistence=true, lazyAlloc=false,

... initCfg=40MB, maxCfg=100MB, usedRam=0MB, freeRam=99.93%, allocRam=0MB, allocTotal=0MB]

^-- TxLog region [type=internal, persistence=true, lazyAlloc=false,

... initCfg=40MB, maxCfg=100MB, usedRam=0MB, freeRam=100%, allocRam=99MB, allocTotal=0MB]

^-- volatileDsMemPlc region [type=user, persistence=false, lazyAlloc=true,

... initCfg=40MB, maxCfg=100MB, usedRam=0MB, freeRam=100%, allocRam=0MB]

^-- Ignite persistence [used=0MB]

^-- Outbound messages queue [size=0]

^-- Public thread pool [active=0, idle=0, qSize=0]

^-- System thread pool [active=0, idle=7, qSize=0]

^-- Striped thread pool [active=0, idle=24, qSize=0]

4. node 1개 down 시 나머지 로그

📜 로그 확인

[02:30:45,193][INFO][tcp-disco-msg-worker-[7c71bed1 192.168.124.249:34447]-#2-#79][TcpDiscoverySpi] New next node [newNext=TcpDiscoveryNode [id=253529d5-1385-4747-9ed8-b4d54c6ac8f2, consistentId=node1, addrs=ArrayList [0:0:0:0:0:0:0:1%lo, 127.0.0.1, 172.17.0.1, 172.19.0.1, 172.21.0.1, 192.168.122.1, node3], sockAddrs=HashSet [/0:0:0:0:0:0:0:1%lo:34447, /127.0.0.1:34447, /172.17.0.1:34447, /172.19.0.1:34447, /192.168.124.238:34447, /172.21.0.1:34447, /192.168.122.1:34447], discPort=34447, order=1, intOrder=1, lastExchangeTime=1641346132657, loc=false, ver=2.11.0#20210911-sha1:8f3f07d3, isClient=false]]

[02:30:45,198][INFO][disco-event-worker-#82][GridDiscoveryManager] Node left topology: TcpDiscoveryNode [id=7c71bed1-ab29-4148-88b2-1804bc647368, consistentId=node3, addrs=ArrayList [0:0:0:0:0:0:0:1%lo, 127.0.0.1, 172.17.0.1, 172.18.0.1, 172.19.1.1, 172.20.1.1, 172.21.0.1, 172.22.0.1, 172.23.0.1, 172.25.0.1, 172.39.0.1, 192.168.122.1, 192.168.124.249], sockAddrs=HashSet [/172.17.0.1:34447, /192.168.124.249:34447, /172.18.0.1:34447, /172.19.1.1:34447, /172.20.1.1:34447, /172.21.0.1:34447, /172.22.0.1:34447, /172.23.0.1:34447, /172.25.0.1:34447, /0:0:0:0:0:0:0:1%lo:34447, /127.0.0.1:34447, /172.39.0.1:34447, /192.168.122.1:34447], discPort=34447, order=3, intOrder=3, lastExchangeTime=1641346152609, loc=false, ver=2.11.0#20210911-sha1:8f3f07d3, isClient=false]

[02:30:45,200][INFO][disco-event-worker-#82][GridDiscoveryManager] Topology snapshot [ver=4, locNode=d46d500d, servers=2, clients=0, state=ACTIVE, CPUs=40, offheap=75.0GB, heap=2.0GB, aliveNodes=[TcpDiscoveryNode [id=253529d5-1385-4747-9ed8-b4d54c6ac8f2, consistentId=node1, isClient=false, ver=2.11.0#20210911-sha1:8f3f07d3], TcpDiscoveryNode [id=d46d500d-dd8d-4583-bcb6-4c089f1660ff, consistentId=node2, isClient=false, ver=2.11.0#20210911-sha1:8f3f07d3]]]

[02:30:45,200][INFO][disco-event-worker-#82][GridDiscoveryManager] ^-- Baseline [id=0, size=3, online=2, offline=1]

[02:30:45,210][WARNING][grid-nio-worker-tcp-comm-7-#46%TcpCommunicationSpi%][TcpCommunicationSpi] Failed to shutdown socket: null

java.nio.channels.ClosedChannelException

at sun.nio.ch.SocketChannelImpl.shutdownOutput(SocketChannelImpl.java:797)

at sun.nio.ch.SocketAdaptor.shutdownOutput(SocketAdaptor.java:407)

at org.apache.ignite.internal.util.IgniteUtils.close(IgniteUtils.java:4231)

at org.apache.ignite.internal.util.nio.GridNioServer$AbstractNioClientWorker.closeKey(GridNioServer.java:2784)

at org.apache.ignite.internal.util.nio.GridNioServer$AbstractNioClientWorker.close(GridNioServer.java:2835)

at org.apache.ignite.internal.util.nio.GridNioServer$AbstractNioClientWorker.close(GridNioServer.java:2794)

at org.apache.ignite.internal.util.nio.GridNioServer$AbstractNioClientWorker.bodyInternal(GridNioServer.java:2157)

at org.apache.ignite.internal.util.nio.GridNioServer$AbstractNioClientWorker.body(GridNioServer.java:1910)

at org.apache.ignite.internal.util.worker.GridWorker.run(GridWorker.java:120)

at java.lang.Thread.run(Thread.java:748)

[02:30:45,255][INFO][exchange-worker-#84][time] Started exchange init [topVer=AffinityTopologyVersion [topVer=4, minorTopVer=0], crd=false, evt=NODE_LEFT, evtNode=7c71bed1-ab29-4148-88b2-1804bc647368, customEvt=null, allowMerge=false, exchangeFreeSwitch=true]

[02:30:45,261][INFO][exchange-worker-#84][GridDhtPartitionsExchangeFuture] Finished waiting for partition release future [topVer=AffinityTopologyVersion [topVer=4, minorTopVer=0], waitTime=0ms, futInfo=NA, mode=LOCAL]

[02:30:45,271][INFO][exchange-worker-#84][GridDhtPartitionsExchangeFuture] Finish exchange future [startVer=AffinityTopologyVersion [topVer=4, minorTopVer=0], resVer=AffinityTopologyVersion [topVer=4, minorTopVer=0], err=null, rebalanced=true, wasRebalanced=true]

[02:30:45,276][WARNING][exchange-worker-#84][GridDhtPartitionTopologyImpl] Detected lost partitions [grp=SQL_PUBLIC_TF_ASSET, parts=[2, 4, 6, 8-10, 14-15, 20-21, 23, 30, 33-35, 37, 48, 53, 62, 65, 70, 75-76, 78-79, 84, 88, 91, 94, 97, 101, 104, 106, 110, 113-114, 118, 120-121, 123-125, 127, 131, 136-137, 139, 141, 143-144, 146, 148, 150, 154, 160, 163, 168, 170, 172, 176-177, 181, 187, 192, 200-201, 210, 212, 215, 224, 226, 228-229, 231, 235-237, 240, 242-243, 247-249, 255-258, 262, 266, 268-269, 277-278, 280, 283-287, 290, 292, 298-299, 303, 311, 316-317, 321-322, 324, 327, 334, 339, 347, 349, 351-352, 355, 358-359, 363-364, 366-367, 375, 379, 390-391, 394, 399, 401, 403, 405, 407-408, 410, 414, 416, 418, 423-424, 429, 439, 443, 446-447, 449, 452, 461, 467, 471-472, 476-477, 479, 482, 485-487, 491, 494, 497-499, 501, 504, 507, 509-510, 512, 516, 518, 523, 527, 531, 534, 536-537, 541-544, 549, 552, 554-556, 559-560, 564, 566, 570, 578-580, 582, 588, 591, 594-595, 597-599, 601-602, 609, 613, 619, 622, 624, 630-632, 638, 651, 653-654, 657-658, 661, 664, 666, 670, 673, 676, 678, 682, 684, 686, 688, 690, 692-693, 696, 700, 707, 711, 715-716, 718, 721, 723-726, 732, 736, 739, 743, 745, 749-750, 753, 756, 765, 767, 769, 771, 774, 778-780, 782-783, 785, 793-794, 796, 799, 801-802, 805, 811, 813-814, 816-817, 820, 822, 824-826, 828, 831-833, 835, 838, 841-843, 845, 847, 851, 854, 863-865, 867, 872, 875, 878, 883, 888-889, 892-894, 896, 898, 900, 902, 904-907, 909, 912, 914, 917, 919-920, 923, 928, 930, 935, 938-939, 942, 944, 949-951, 954, 956, 959-961, 963, 966, 969-970, 973, 976, 983, 985-986, 988-989, 991-994, 998-999, 1002, 1005, 1015, 1021-1022], topVer=AffinityTopologyVersion [topVer=4, minorTopVer=0]]

[02:30:45,278][INFO][exchange-worker-#84][GridDhtPartitionsExchangeFuture] Completed partition exchange [localNode=d46d500d-dd8d-4583-bcb6-4c089f1660ff, exchange=GridDhtPartitionsExchangeFuture [topVer=AffinityTopologyVersion [topVer=4, minorTopVer=0], evt=NODE_LEFT, evtNode=TcpDiscoveryNode [id=7c71bed1-ab29-4148-88b2-1804bc647368, consistentId=node3, addrs=ArrayList [0:0:0:0:0:0:0:1%lo, 127.0.0.1, 172.17.0.1, 172.18.0.1, 172.19.1.1, 172.20.1.1, 172.21.0.1, 172.22.0.1, 172.23.0.1, 172.25.0.1, 172.39.0.1, 192.168.122.1, 192.168.124.249], sockAddrs=HashSet [/172.17.0.1:34447, /192.168.124.249:34447, /172.18.0.1:34447, /172.19.1.1:34447, /172.20.1.1:34447, /172.21.0.1:34447, /172.22.0.1:34447, /172.23.0.1:34447, /172.25.0.1:34447, /0:0:0:0:0:0:0:1%lo:34447, /127.0.0.1:34447, /172.39.0.1:34447, /192.168.122.1:34447], discPort=34447, order=3, intOrder=3, lastExchangeTime=1641346152609, loc=false, ver=2.11.0#20210911-sha1:8f3f07d3, isClient=false], rebalanced=true, done=true, newCrdFut=null], topVer=AffinityTopologyVersion [topVer=4, minorTopVer=0]]

[02:30:45,278][INFO][exchange-worker-#84][GridDhtPartitionsExchangeFuture] Exchange timings [startVer=AffinityTopologyVersion [topVer=4, minorTopVer=0], resVer=AffinityTopologyVersion [topVer=4, minorTopVer=0], stage="Waiting in exchange queue" (0 ms), stage="Exchange parameters initialization" (0 ms), stage="Determine exchange type" (4 ms), stage="Preloading notification" (0 ms), stage="After states restored callback" (1 ms), stage="WAL history reservation" (0 ms), stage="Finalize update counters" (2 ms), stage="Detect lost partitions" (10 ms), stage="Exchange done" (1 ms), stage="Total time" (18 ms)]

[02:30:45,278][INFO][exchange-worker-#84][GridDhtPartitionsExchangeFuture] Exchange longest local stages [startVer=AffinityTopologyVersion [topVer=4, minorTopVer=0], resVer=AffinityTopologyVersion [topVer=4, minorTopVer=0], stage="Affinity initialization (exchange-free switch on fully-rebalanced topology) [grp=SQL_PUBLIC_TF_ASSET]" (4 ms) (parent=Determine exchange type), stage="Affinity initialization (exchange-free switch on fully-rebalanced topology) [grp=asset-mapper-cache]" (3 ms) (parent=Determine exchange type), stage="Affinity initialization (exchange-free switch on fully-rebalanced topology) [grp=ignite-sys-cache]" (2 ms) (parent=Determine exchange type)]

[02:30:45,284][INFO][exchange-worker-#84][time] Finished exchange init [topVer=AffinityTopologyVersion [topVer=4, minorTopVer=0], crd=false]

[02:30:45,288][INFO][exchange-worker-#84][GridCachePartitionExchangeManager] Skipping rebalancing (nothing scheduled) [top=AffinityTopologyVersion [topVer=4, minorTopVer=0], force=false, evt=NODE_LEFT, node=7c71bed1-ab29-4148-88b2-1804bc647368]5. node 1개 down 시 쿼리 날렸을 때 로그

📜 로그 확인

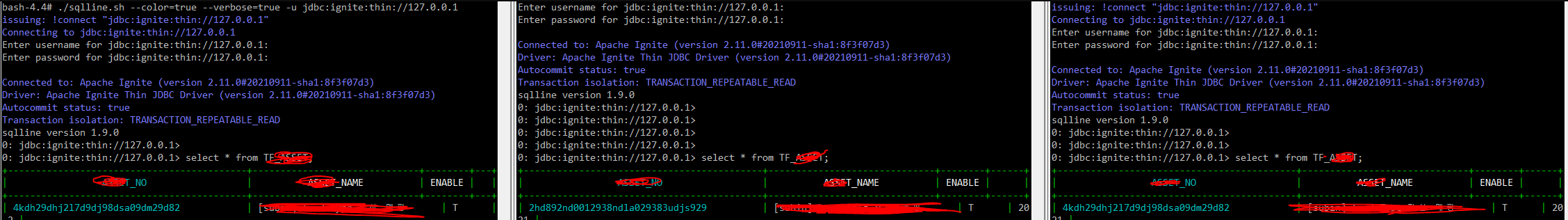

Failed to execute query because cache partition has been lostPart

캐시 파티션이 손실되었기 때문에 쿼리를 실행하지 못했습니다.

0: jdbc:ignite:thin://127.0.0.1> select * from TF_ASSET;

Error: class org.apache.ignite.internal.processors.cache.CacheInvalidStateException: Failed to execute query because cache partition has been lostPart [cacheName=SQL_PUBLIC_TF_ASSET, part=512] (state=50000,code=1)

java.sql.SQLException: class org.apache.ignite.internal.processors.cache.CacheInvalidStateException: Failed to execute query because cache partition has been lostPart [cacheName=SQL_PUBLIC_TF_ASSET, part=512]

at org.apache.ignite.internal.jdbc.thin.JdbcThinConnection.sendRequest(JdbcThinConnection.java:1009)

at org.apache.ignite.internal.jdbc.thin.JdbcThinStatement.execute0(JdbcThinStatement.java:234)

at org.apache.ignite.internal.jdbc.thin.JdbcThinStatement.execute(JdbcThinStatement.java:560)

at sqlline.Commands.executeSingleQuery(Commands.java:1054)

at sqlline.Commands.execute(Commands.java:1003)

at sqlline.Commands.sql(Commands.java:967)

at sqlline.SqlLine.dispatch(SqlLine.java:734)

at sqlline.SqlLine.begin(SqlLine.java:541)

at sqlline.SqlLine.start(SqlLine.java:267)

at sqlline.SqlLine.main(SqlLine.java:206)

6. 해결 방안

💬 해결 방안

- down된 node 뿐만 아니라 모든 node 재 기동

- 재 기동 후 모든 node 클러스터 활성화

- 클러스터 활성화 node 토폴로지 재 설정

📜 기동 후 클러스터 활성화

bash-4.4# ./control.sh --activate

Control utility [ver. 2.11.0#20210911-sha1:8f3f07d3]

2021 Copyright(C) Apache Software Foundation

User: root

Time: 2022-01-04T08:50:41.905

Command [ACTIVATE] started

Arguments: --activate

--------------------------------------------------------------------------------

Command deprecated. Use --set-state instead.

Cluster activated

Command [ACTIVATE] finished with code: 0

Control utility has completed execution at: 2022-01-04T08:50:42.766

Execution time: 861 ms📜 토폴로지 재 설정

bash-4.4# ./control.sh --baseline

Control utility [ver. 2.11.0#20210911-sha1:8f3f07d3]

2021 Copyright(C) Apache Software Foundation

User: root

Time: 2022-01-04T09:26:02.544

Command [BASELINE] started

Arguments: --baseline

--------------------------------------------------------------------------------

Cluster state: active

Current topology version: 9

Baseline auto adjustment disabled: softTimeout=300000

Current topology version: 9 (Coordinator: ConsistentId=0a52df82-6b3b-42ab-87bc-1a92353d0bce, Address=172.17.0.1, Order=3)

Baseline nodes:

ConsistentId=0a52df82-6b3b-42ab-87bc-1a92353d0bce, Address=172.17.0.1, State=ONLINE, Order=3

ConsistentId=5b6f0965-a991-44b9-90c8-b8cde4079dac, Address=172.39.0.1, State=ONLINE, Order=7

ConsistentId=87f64d4e-752d-475b-a67b-f79508c63caf, Address=172.17.0.1, State=ONLINE, Order=9

--------------------------------------------------------------------------------

Number of baseline nodes: 3

Other nodes not found.

Command [BASELINE] finished with code: 0

Control utility has completed execution at: 2022-01-04T09:26:03.642

Execution time: 1098 ms

bash-4.4# ./control.sh --baseline set 0a52df82-6b3b-42ab-87bc-1a92353d0bce,5b6f0965-a991-44b9-90c8-b8cde4079dac,87f64d4e-752d-475b-a67b-f79508c63caf

Warning: the command will perform changes in baseline.

Press 'y' to continue . . . y

Control utility [ver. 2.11.0#20210911-sha1:8f3f07d3]

2021 Copyright(C) Apache Software Foundation

User: root

Time: 2022-01-04T09:26:16.247

Command [BASELINE] started

Arguments: --baseline set 0a52df82-6b3b-42ab-87bc-1a92353d0bce,5b6f0965-a991-44b9-90c8-b8cde4079dac,87f64d4e-752d-475b-a67b-f79508c63caf

--------------------------------------------------------------------------------

Cluster state: active

Current topology version: 9

Baseline auto adjustment disabled: softTimeout=300000

Current topology version: 9 (Coordinator: ConsistentId=0a52df82-6b3b-42ab-87bc-1a92353d0bce, Address=172.17.0.1, Order=3)

Baseline nodes:

ConsistentId=0a52df82-6b3b-42ab-87bc-1a92353d0bce, Address=172.17.0.1, State=ONLINE, Order=3

ConsistentId=5b6f0965-a991-44b9-90c8-b8cde4079dac, Address=172.39.0.1, State=ONLINE, Order=7

ConsistentId=87f64d4e-752d-475b-a67b-f79508c63caf, Address=172.17.0.1, State=ONLINE, Order=9

--------------------------------------------------------------------------------

Number of baseline nodes: 3

Other nodes not found.

Command [BASELINE] finished with code: 0

Control utility has completed execution at: 2022-01-04T09:26:17.941

Execution time: 1694 ms

7. 적용 후

📜 적용 후 쿼리

- 적용 후 다시 잘 되는것을 확인할 수 있다.

📚 참고

블로그 포스팅을 정말 깔끔하게 하시는 것 같습니다 부럽습니다