
SW Course: Machine Learning 1007 (3)

1. BeautifulSoup and tags

In Jupyter, pressing Tab autocompletes names.

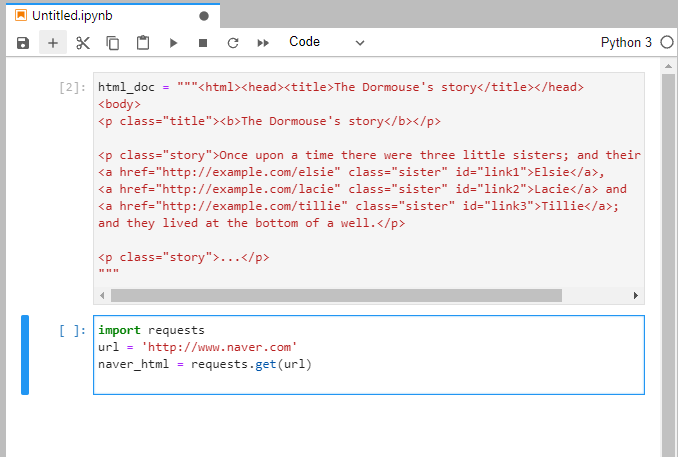

Writing the source in Jupyter. The first step fetches the page HTML with requests:

import requests

url = 'http://www.naver.com'
naver_html = requests.get(url).text  # response body as a string
print(naver_html)

Installing BeautifulSoup

$ pip install beautifulsoup4
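After installing, you can quickly check that the package works by parsing a small HTML string and reading tags back. The snippet below is made up for illustration. It shows `find()`, the difference between `.string` and `.text`, and attribute access, which later sections rely on.

```python
from bs4 import BeautifulSoup

# A small, made-up HTML snippet so the example runs offline
html = """
<html>
  <body>
    <h1 id="main-title">  Hello, Soup  </h1>
    <p class="intro">First <b>bold</b> paragraph</p>
    <a href="https://example.com" title="Example">link</a>
  </body>
</html>
"""

soup = BeautifulSoup(html, 'html.parser')

h1 = soup.find('h1')               # first <h1> tag
print(h1.string.strip())           # .string works when the tag holds a single string

p = soup.find('p', class_='intro')
print(p.string)                    # None: <p> has several children (text, <b>, text)
print(p.text)                      # all nested text joined together

a = soup.find('a')
print(a['href'], a.get('title'))   # attribute access; .get() returns None if missing
```

Because `.string` returns `None` as soon as a tag has more than one child, `.text` (or `get_text()`) is the safer choice when the markup might contain nested tags.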

Selector types and examples

Go to the blog post
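You can try the common CSS selector forms with `select()`, which returns a list, and `select_one()`, which returns the first match or `None`. The markup below is invented. Its class names only loosely echo the exchange-rate page.

```python
from bs4 import BeautifulSoup

html = """
<div id="chart">
  <table>
    <tr class="row"><td class="tit"><a href="/usd">USD</a></td><td class="sale">1,350.50</td></tr>
    <tr class="row"><td class="tit"><a href="/jpy">JPY</a></td><td class="sale">905.10</td></tr>
  </table>
  <p><span><a href="/deep">nested link</a></span></p>
</div>
"""
soup = BeautifulSoup(html, 'html.parser')

# Tag name: every <a> in the document
print(len(soup.select('a')))                        # 3
# Class (.name): elements with class="sale"
print([td.text for td in soup.select('.sale')])     # ['1,350.50', '905.10']
# Id (#name): the element with id="chart"
print(soup.select_one('#chart').name)               # div
# Child (>): <a> that is a direct child of td.tit
print([a.text for a in soup.select('td.tit > a')])  # ['USD', 'JPY']
# Child fails here because <a> sits inside <span>, not directly inside <p>
print(len(soup.select('p > a')))                    # 0
# Descendant (space): <a> anywhere inside <p>, however deep
print([a.text for a in soup.select('p a')])         # ['nested link']
# Attribute: <a> whose href is exactly "/jpy"
print(soup.select_one('a[href="/jpy"]').text)       # JPY
```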

strip(): removes leading and trailing whitespace.
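Here is a short illustration on a string that looks like a scraped price cell; the value is made up. `strip()` only trims the two ends. A character in the middle, such as the thousands comma, has to be removed with `replace()` before `float()` can parse the number.

```python
raw = '  \n 1,350.50 \t'

print(repr(raw.strip()))    # both ends trimmed
print(repr(raw.lstrip()))   # left end only
print(repr(raw.rstrip()))   # right end only

# strip() alone is not enough for float(): the comma is still there
try:
    float(raw.strip())
except ValueError as e:
    print('ValueError:', e)

# strip the ends, drop the thousands separator, then convert
value = float(raw.strip().replace(',', ''))
print(value)  # 1350.5
```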

2. Naver exchange rates

import requests
from bs4 import BeautifulSoup

url = 'https://finance.naver.com/marketindex/exchangeList.naver'
res = requests.get(url).text
soup = BeautifulSoup(res, 'html.parser')

name = []   # currency names
price = []  # rates from the td.sale cells

result = soup.select('td.tit > a')
# print(result)
for a in result:
    # print(type(a.string))
    # print(a.string.strip())
    name.append(a.string.strip())

result1 = soup.select('td.sale')
for td in result1:
    # find ',' and replace it with '' (nothing) so float() can parse the number
    value = float(td.string.strip().replace(',', ''))
    print(value)
    price.append(value)

# b = a.string.strip()
# print(b)
# print(name)
# print(price)

items = list(zip(name, price))  # pair each currency name with its rate
print(items)

3. CGV movie titles

import requests
from bs4 import BeautifulSoup

url1 = 'http://www.cgv.co.kr/movies/'
res1 = requests.get(url1).text
soup1 = BeautifulSoup(res1, 'html.parser')

result2 = soup1.select('strong.title')  # <strong class="title"> elements
for strong in result2:
    print(strong.text)

4. Bugs chart titles

import requests
from bs4 import BeautifulSoup as bs

url = 'https://music.bugs.co.kr/chart/track/realtime/total'
res = requests.get(url).text  # html
soup = bs(res, "html.parser")

musicdata = soup.select('#CHARTrealtime > table > tbody > tr')  # one <tr> per song
musiclist = []
for item in musicdata:
    musictitle = item.select_one('th > p > a')  # song title
    musistion = item.select_one('td > p > a')   # singer
    if musictitle is None or musistion is None:
        continue  # skip rows that lack the expected markup
    musiclist.append((musictitle.text.strip(), musistion.text.strip()))
    # print('--------------------------')
    # print('Title - ' + musictitle.text.strip())
    # print('Singer - ' + musistion.text.strip())

# enumerate(..., start=1) replaces a manual a = a + 1 counter
for a, i in enumerate(musiclist, start=1):
    print('------------------------')
    print(a)
    print(i)

5. Loading Naver news

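import requests  # used only by the commented-out alternative below
import urllib.request
from urllib.parse import urljoin
from bs4 import BeautifulSoup

url = 'https://news.naver.com/main/read.naver?mode=LSD&mid=shm&sid1=100&oid=366&aid=0000765143'
# res = requests.get(url).text  # html
res = urllib.request.urlopen(url)
soup = BeautifulSoup(res, 'html.parser')

news = soup.select('ul.list_txt li a')
# print(len(news))
# print(news)

news_list = []
for n in news:
    news_dic = {}
    title = n['title'].strip()
    # print('Title:', title, '\n')
    news_dic['title'] = title

    # use a separate name so the page URL in `url` is not overwritten;
    # urljoin leaves absolute links unchanged and resolves relative ones
    article_url = urljoin(url, n['href'])
    article_res = urllib.request.urlopen(article_url)
    article_soup = BeautifulSoup(article_res, 'html.parser')
    content = article_soup.select_one('#articleBodyContents')
    if content is None:
        continue  # article body not found with this selector
    # print(content.text.replace('동영상 뉴스', '').strip())
    # remove the '동영상 뉴스' ("video news") label, then trim whitespace
    news_dic['content'] = content.text.replace('동영상 뉴스', '').strip()
    news_list.append(news_dic)

print(news_list)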
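You can test the link-collecting step offline before making real requests. This is a minimal sketch under stated assumptions:

- The list markup is `ul.list_txt li a`, as in the code above.
- The titles, hrefs, and base URL are hypothetical placeholders, not real Naver pages.
- `a.get('title', '')` is used so that a link without a `title` attribute gives an empty string instead of a `KeyError`.
- `urllib.parse.urljoin` turns both relative and absolute `href` values into full URLs.

```python
from urllib.parse import urljoin
from bs4 import BeautifulSoup

# Hypothetical list-page markup; the real page may differ
list_html = """
<ul class="list_txt">
  <li><a href="/article/1" title=" First headline ">First</a></li>
  <li><a href="https://news.example.com/article/2" title="Second headline">Second</a></li>
  <li><a href="/article/3">No title attribute</a></li>
</ul>
"""
base_url = 'https://news.example.com/list'  # placeholder base URL

soup = BeautifulSoup(list_html, 'html.parser')
news_list = []
for a in soup.select('ul.list_txt li a'):
    news_list.append({
        # .get() returns '' instead of raising KeyError when title is missing
        'title': a.get('title', '').strip(),
        # absolute hrefs pass through; relative ones resolve against base_url
        'url': urljoin(base_url, a['href']),
    })

for item in news_list:
    print(item)
```

Once the list of URLs looks right, each URL can be fetched and parsed for its body, as in the loop above.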