# Solving the XOR Problem with Deep Learning

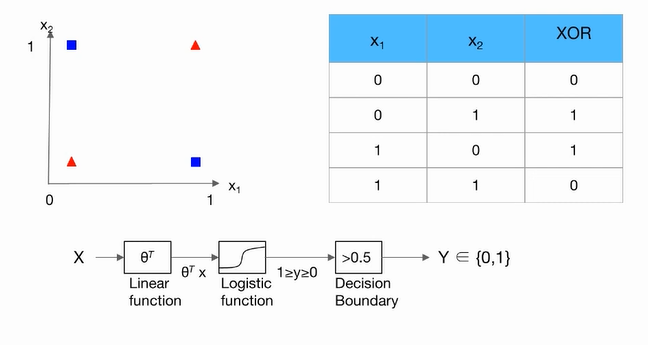

XOR

0,0 -> 0(-)

0,1 -> 1(+)

1,0 -> 1(+)

1,1 -> 0(-)

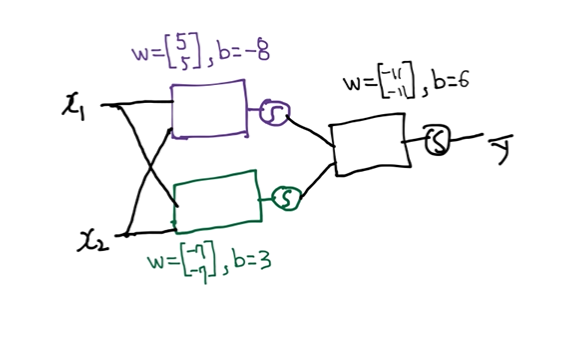

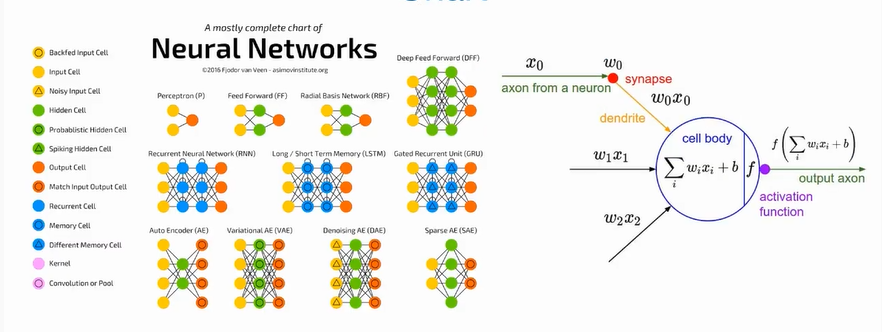

Neural Net

: Proposed as a way to solve the XOR problem

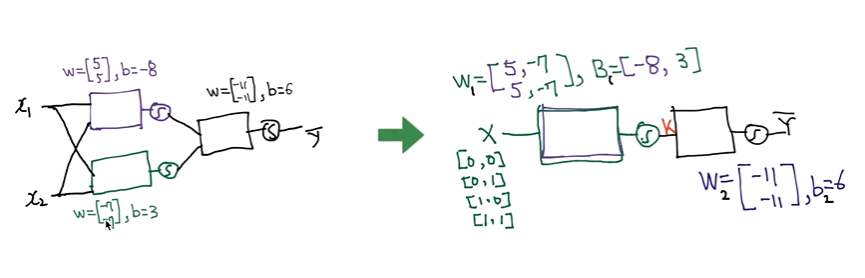

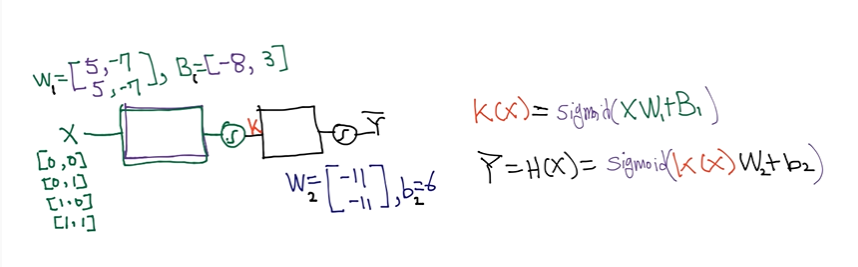

Forward propagation

NN

network : programming code

    K = tf.sigmoid(tf.matmul(X, W1) + b1)           # hidden layer output
    hypothesis = tf.sigmoid(tf.matmul(K, W2) + b2)  # final prediction

# Training a Deep Network (backpropagation)

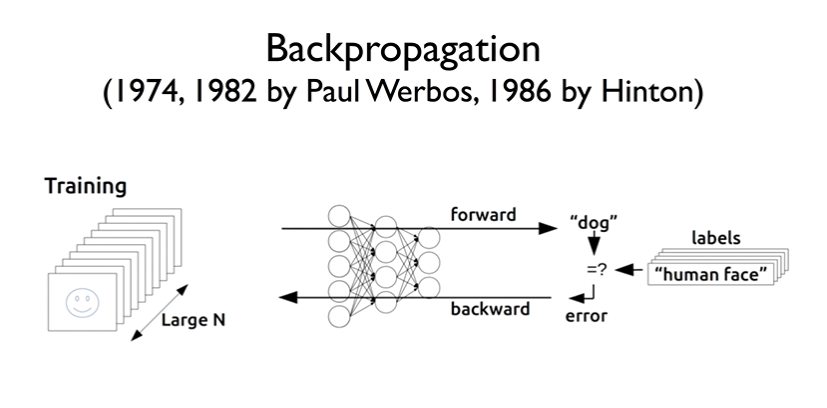

How can we learn W1, W2, B1, B2 from training data?

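: How can the network learn them mechanically, that is, automatically?

: Use the gradient descent algorithm, which requires derivatives

: In a neural network the derivatives become complicated, so they are hard to compute directly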

Backpropagation

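: An algorithm that resolves this difficulty for gradient descent by working backward from the output and obtaining each derivative with the chain rule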
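As a rough illustration of the idea, the sketch below computes the gradients of a small 2-2-1 sigmoid network by hand with the chain rule, compares them with finite-difference estimates, and then takes one gradient descent step. The parameter values, the flat `params` layout, the step size of 0.1, and every function name are assumptions made for this example; none of them come from the lecture code.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Illustrative parameters of a 2-2-1 network, flattened into one list:
# [w11, w12, w21, w22, b1, b2, v1, v2, c]
# hidden_j = sigmoid(x1*w1j + x2*w2j + bj), output = sigmoid(h1*v1 + h2*v2 + c)
params = [0.5, -0.3, 0.8, 0.2, 0.1, -0.1, 0.7, -0.6, 0.05]
XOR = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]

def forward(p, x):
    w11, w12, w21, w22, b1, b2, v1, v2, c = p
    h1 = sigmoid(x[0] * w11 + x[1] * w21 + b1)
    h2 = sigmoid(x[0] * w12 + x[1] * w22 + b2)
    y = sigmoid(h1 * v1 + h2 * v2 + c)
    return h1, h2, y

def loss(p):
    """Mean binary cross-entropy over the four XOR rows."""
    total = 0.0
    for x, t in XOR:
        _, _, y = forward(p, x)
        total += -(t * math.log(y) + (1 - t) * math.log(1 - y))
    return total / len(XOR)

def backprop(p):
    """Gradients of loss(p), obtained by applying the chain rule backward."""
    grads = [0.0] * 9
    v1, v2 = p[6], p[7]
    for x, t in XOR:
        h1, h2, y = forward(p, x)
        dz = (y - t) / len(XOR)          # d loss / d output pre-activation
        dh1 = dz * v1 * h1 * (1 - h1)    # d loss / d hidden 1 pre-activation
        dh2 = dz * v2 * h2 * (1 - h2)    # d loss / d hidden 2 pre-activation
        grads[0] += dh1 * x[0]           # w11
        grads[1] += dh2 * x[0]           # w12
        grads[2] += dh1 * x[1]           # w21
        grads[3] += dh2 * x[1]           # w22
        grads[4] += dh1                  # b1
        grads[5] += dh2                  # b2
        grads[6] += dz * h1              # v1
        grads[7] += dz * h2              # v2
        grads[8] += dz                   # c
    return grads

def numerical(p, eps=1e-6):
    """Central finite differences, used only to check backprop."""
    out = []
    for i in range(len(p)):
        up, down = p[:], p[:]
        up[i] += eps
        down[i] -= eps
        out.append((loss(up) - loss(down)) / (2 * eps))
    return out

analytic = backprop(params)
numeric = numerical(params)
max_diff = max(abs(a - n) for a, n in zip(analytic, numeric))
print("max difference between backprop and finite differences:", max_diff)

# One gradient descent step using the backprop gradients
learning_rate = 0.1
updated = [p - learning_rate * g for p, g in zip(params, analytic)]
print("loss before:", loss(params), "after one step:", loss(updated))
```

The finite-difference check needs two loss evaluations per parameter, while the backward pass gets all nine derivatives from a single sweep, which is why backpropagation scales to larger networks.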

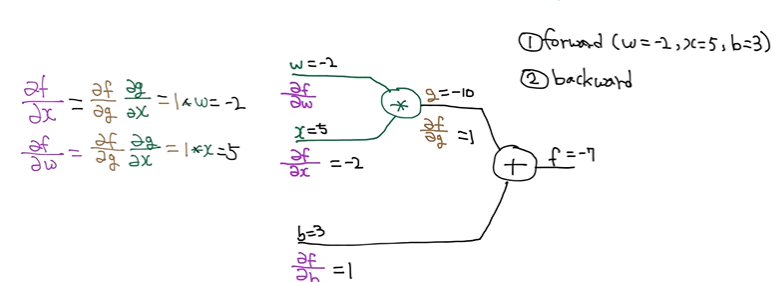

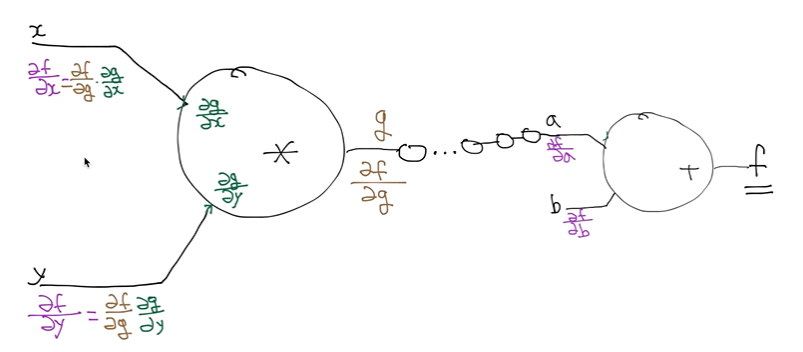

Back propagation (chain rule)

f = Wx + b

g = Wx

f = g + b

: We want to know how much W, x, and b each affect f, which is expressed as derivatives

1) forward (W = -2, x = 5, b = 3)

2) backward

: For more complicated graphs, start the calculation from the last node
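The two passes above can be worked through in a few lines. This is a minimal sketch using the values W = -2, x = 5, b = 3 from the notes; the variable names such as `df_dg` are chosen here for illustration.

```python
# 1) Forward pass with the values from the notes
W, x, b = -2.0, 5.0, 3.0
g = W * x          # g = Wx
f = g + b          # f = g + b

# 2) Backward pass: local derivatives first, then the chain rule
df_dg = 1.0        # f = g + b, so df/dg = 1
df_db = 1.0        # f = g + b, so df/db = 1
dg_dW = x          # g = Wx, so dg/dW = x
dg_dx = W          # g = Wx, so dg/dx = W
df_dW = df_dg * dg_dW   # chain rule: df/dW = df/dg * dg/dW
df_dx = df_dg * dg_dx   # chain rule: df/dx = df/dg * dg/dx

print("g =", g, "f =", f)
print("df/dW =", df_dW, "df/dx =", df_dx, "df/db =", df_db)
```

With these inputs the forward pass gives g = -10 and f = -7, and the backward pass gives df/dW = 5, df/dx = -2, and df/db = 1.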

Tensorflow - TensorBoard

: The computation is built as a graph so that backpropagation can be carried out

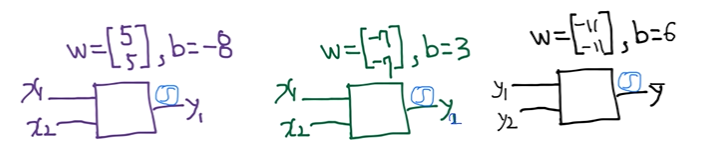

# Neural Nets XOR

Logistic Regression : XOR

: A single logistic regression cannot solve the XOR problem, because the two classes are not linearly separable
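One way to see this limit concretely is a brute-force search. The sketch below tries every weight and bias on a coarse grid for a single linear unit (a logistic regression predicts 1 exactly when its linear score is positive) and reports the best number of XOR rows it gets right. The grid range and the helper name `correct_count` are assumptions for this example.

```python
import itertools

XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

def correct_count(w1, w2, b):
    """Number of XOR rows a single linear unit classifies correctly."""
    hits = 0
    for (x1, x2), label in XOR:
        # sigmoid(z) > 0.5 is equivalent to z > 0
        predicted = 1 if w1 * x1 + w2 * x2 + b > 0 else 0
        hits += int(predicted == label)
    return hits

# Candidate weights and biases from -5.0 to 5.0 in steps of 0.5
grid = [i / 2 for i in range(-10, 11)]
best = max(correct_count(w1, w2, b)
           for w1, w2, b in itertools.product(grid, repeat=3))
print("best rows correct:", best, "of", len(XOR))
```

The search tops out at 3 of 4 rows. A finer grid would not help: getting all four rows right would require b <= 0, w1 + b > 0, w2 + b > 0, and w1 + w2 + b <= 0 at the same time, which is contradictory.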

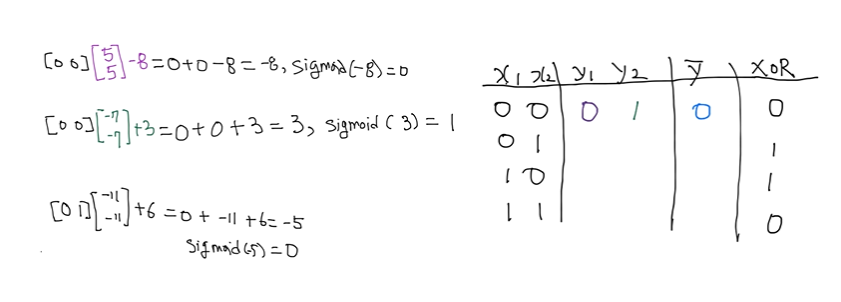

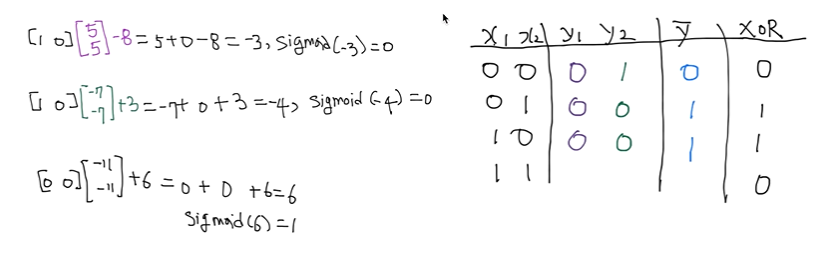

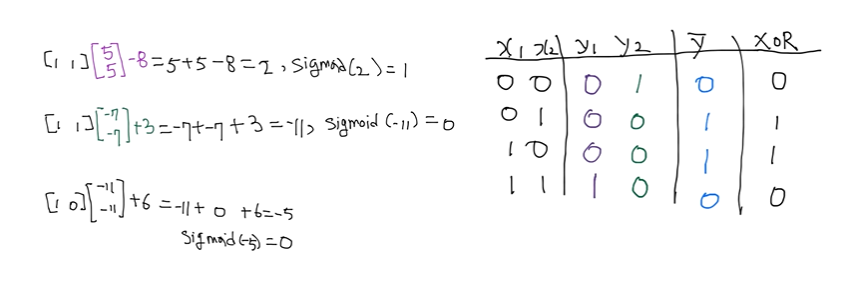

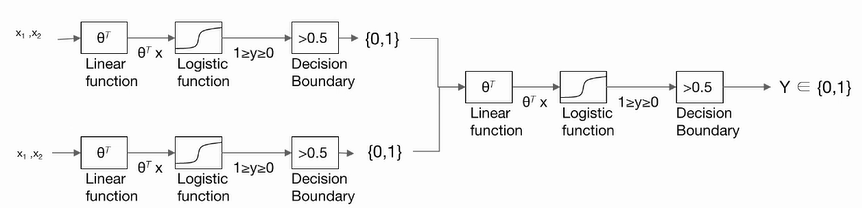

Data sets : Forward propagation

: The XOR problem can be solved with three logistic regression units.

: See the forward propagation notes above
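To make the three-unit idea concrete, here is a small forward pass in plain Python. The weights are hand-picked for illustration and are an assumption of this sketch, not values taken from the notes or learned by training; the helper names `unit` and `xor_net` are also hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def unit(x1, x2, w1, w2, b):
    """One logistic regression unit."""
    return sigmoid(w1 * x1 + w2 * x2 + b)

def xor_net(x1, x2):
    k1 = unit(x1, x2, 5.0, 5.0, -8.0)        # close to 1 only for (1, 1)
    k2 = unit(x1, x2, -7.0, -7.0, 3.0)       # close to 1 only for (0, 0)
    return unit(k1, k2, -11.0, -11.0, 6.0)   # close to 1 when neither fires

predictions = [round(xor_net(x1, x2)) for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]]
print(predictions)
```

The first two units each carve off one corner of the input square, and the third unit outputs 1 only when neither corner detector is active, which reproduces the XOR table: 0, 1, 1, 0.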

Neural Net : 2 layer

Tensorflow Code

    def neural_net(features):
        layer1 = tf.sigmoid(tf.matmul(features, W1) + b1)  # W1=[2,1], b1=[1]
        layer2 = tf.sigmoid(tf.matmul(features, W2) + b2)  # W2=[2,1], b2=[1]
        # Join the two unit outputs into one [batch, 2] tensor before the last unit
        hypothesis = tf.sigmoid(tf.matmul(tf.concat([layer1, layer2], -1), W3) + b3)  # W3=[2,1], b3=[1]
        return hypothesis

Neural Net : Vector

: See the NN notes above

: The two logistic regression units are combined into a single component, which gives a 2-layer neural net

Tensorflow Code

    def neural_net(features):
        layer = tf.sigmoid(tf.matmul(features, W1) + b1)     # W1=[2,2], b1=[2]
        hypothesis = tf.sigmoid(tf.matmul(layer, W2) + b2)   # W2=[2,1], b2=[1]
        return hypothesis

Neural Net : Chart

: Logistic regression units can be combined to build neural networks
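The following sketch checks, in plain Python, that two separate units with [2,1] weights produce the same outputs as one layer with a [2,2] weight matrix whose columns are those two weight vectors. The numeric values and function names are illustrative assumptions, not parameters from the lecture.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Two separate units, each with weights of shape [2, 1] (illustrative values)
W1, b1 = [0.4, -0.9], 0.2
W2, b2 = [1.3, 0.6], -0.5

def separate_units(x):
    layer1 = sigmoid(x[0] * W1[0] + x[1] * W1[1] + b1)
    layer2 = sigmoid(x[0] * W2[0] + x[1] * W2[1] + b2)
    return [layer1, layer2]   # plays the role of tf.concat([layer1, layer2], -1)

# The same two units stored as one [2, 2] matrix: column j holds unit j's weights
W = [[W1[0], W2[0]],
     [W1[1], W2[1]]]
b = [b1, b2]

def matrix_layer(x):
    return [sigmoid(x[0] * W[0][j] + x[1] * W[1][j] + b[j]) for j in range(2)]

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
same = all(separate_units(x) == matrix_layer(x) for x in inputs)
print("identical outputs for all four inputs:", same)
```

Stacking the units into one matrix changes only how the parameters are stored, which is why the vectorized version needs a single `tf.matmul` per layer.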

Summary Code (Eager)

    import numpy as np
    import matplotlib.pyplot as plt
    %matplotlib inline
    import tensorflow as tf
    import tensorflow.contrib.eager as tfe

    # TensorFlow 1.x API: eager execution has to be enabled explicitly
    tf.enable_eager_execution()
    tf.set_random_seed(777)  # for reproducibility
    print(tf.__version__)

    x_data = [[0, 0],
              [0, 1],
              [1, 0],
              [1, 1]]
    y_data = [[0],
              [1],
              [1],
              [0]]

    # A single batch that holds all four XOR rows
    dataset = tf.data.Dataset.from_tensor_slices((x_data, y_data)).batch(len(x_data))

    def preprocess_data(features, labels):
        features = tf.cast(features, tf.float32)
        labels = tf.cast(labels, tf.float32)
        return features, labels

    W1 = tf.Variable(tf.random_normal([2, 1]), name='weight1')
    b1 = tf.Variable(tf.random_normal([1]), name='bias1')
    W2 = tf.Variable(tf.random_normal([2, 1]), name='weight2')
    b2 = tf.Variable(tf.random_normal([1]), name='bias2')
    W3 = tf.Variable(tf.random_normal([2, 1]), name='weight3')
    b3 = tf.Variable(tf.random_normal([1]), name='bias3')

    def neural_net(features):
        layer1 = tf.sigmoid(tf.matmul(features, W1) + b1)
        layer2 = tf.sigmoid(tf.matmul(features, W2) + b2)
        layer3 = tf.concat([layer1, layer2], -1)
        layer3 = tf.reshape(layer3, shape=[-1, 2])
        hypothesis = tf.sigmoid(tf.matmul(layer3, W3) + b3)
        return hypothesis

    def loss_fn(hypothesis, labels):
        # Binary cross-entropy
        cost = -tf.reduce_mean(labels * tf.log(hypothesis) + (1 - labels) * tf.log(1 - hypothesis))
        return cost

    optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.01)

    def accuracy_fn(hypothesis, labels):
        predicted = tf.cast(hypothesis > 0.5, dtype=tf.float32)
        accuracy = tf.reduce_mean(tf.cast(tf.equal(predicted, labels), dtype=tf.float32))
        return accuracy

    def grad(features, labels):
        with tf.GradientTape() as tape:
            # loss_fn takes (hypothesis, labels)
            loss_value = loss_fn(neural_net(features), labels)
        return tape.gradient(loss_value, [W1, W2, W3, b1, b2, b3])

    EPOCHS = 50000

    for step in range(EPOCHS):
        for features, labels in tfe.Iterator(dataset):
            features, labels = preprocess_data(features, labels)
            # grad expects the raw features, not the network output
            grads = grad(features, labels)
            optimizer.apply_gradients(grads_and_vars=zip(grads, [W1, W2, W3, b1, b2, b3]))
            if step % 5000 == 0:
                print("Iter: {}, Loss: {:.4f}".format(step, loss_fn(neural_net(features), labels)))

    x_data, y_data = preprocess_data(x_data, y_data)
    test_acc = accuracy_fn(neural_net(x_data), y_data)
    print("Testset Accuracy: {:.4f}".format(test_acc))

# TensorBoard XOR

Tensorboard for XOR NN

: To use TensorBoard, run the commands below

    $ pip install tensorboard
    $ tensorboard --logdir=./logs/xor_logs

Eager Execution

    writer = tf.contrib.summary.create_file_writer("./logs/xor_logs")
    writer.set_as_default()  # summaries are written to the default writer
    with tf.contrib.summary.record_summaries_every_n_global_steps(1):
        tf.contrib.summary.scalar('loss', cost)

Keras

    tb_hist = tf.keras.callbacks.TensorBoard(log_dir="./logs/xor_logs", histogram_freq=0, write_graph=True, write_images=True)
    model.fit(x_data, y_data, epochs=5000, callbacks=[tb_hist])