Multi-variable linear regression - Full Code

import tensorflow as tf

# data and label

x1 = [73., 93., 89., 96., 73.]

x2 = [80., 88., 91., 98., 66.]

x3 = [75., 93., 90., 100., 70.]

Y = [152., 185., 100., 196., 142.]

# random weights

w1 = tf.Variable(tf.random.normal([1]))

w2 = tf.Variable(tf.random.normal([1]))

w3 = tf.Variable(tf.random.normal([1]))

b = tf.Variable(tf.random.normal([1]))

learning_rate = 0.000001

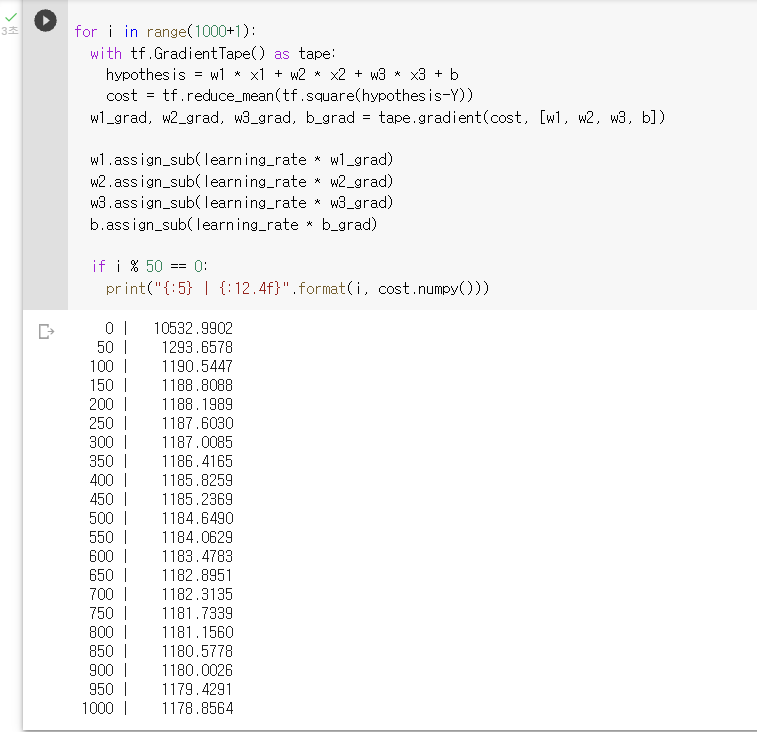

for i in range(1000 + 1):  # gradient descent
    # tf.GradientTape() records the operations needed to differentiate the cost
    with tf.GradientTape() as tape:
        hypothesis = w1 * x1 + w2 * x2 + w3 * x3 + b
        cost = tf.reduce_mean(tf.square(hypothesis - Y))

    # calculate the gradients of the cost
    w1_grad, w2_grad, w3_grad, b_grad = tape.gradient(cost, [w1, w2, w3, b])

    # update w1, w2, w3 and b
    w1.assign_sub(learning_rate * w1_grad)
    w2.assign_sub(learning_rate * w2_grad)
    w3.assign_sub(learning_rate * w3_grad)
    b.assign_sub(learning_rate * b_grad)

    if i % 50 == 0:
        print("{:5} | {:12.4f}".format(i, cost.numpy()))

> Execution result

Matrix - Full Code

import tensorflow as tf

import numpy as np

data = np.array([
    # x1, x2, x3, y
    [73., 80., 75., 152.],
    [93., 88., 93., 185.],
    [89., 91., 90., 100.],
    [96., 98., 100., 196.],
    [73., 66., 70., 142.]
], dtype=np.float32)

# slice data

X = data[:, :-1]

Y = data[:,[-1]]

W = tf.Variable(tf.random.normal([3,1]))

b = tf.Variable(tf.random.normal([1]))

learning_rate = 0.000001

# hypothesis, prediction function

def predict(x):
    # use the argument x, not the global X
    return tf.matmul(x, W) + b

n_epochs = 2000

for i in range(n_epochs + 1):
    # record the gradient of the cost function
    with tf.GradientTape() as tape:
        cost = tf.reduce_mean(tf.square(predict(X) - Y))

    # calculate the gradients of the cost
    W_grad, b_grad = tape.gradient(cost, [W, b])

    # update parameters (W and b)
    W.assign_sub(learning_rate * W_grad)
    b.assign_sub(learning_rate * b_grad)

    if i % 100 == 0:
        print("{:5} | {:10.4f}".format(i, cost.numpy()))
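As a cross-check on what `tape.gradient` returns for this cost, the gradients of the mean squared error can also be written out by hand. The following is a minimal NumPy-only sketch under that assumption, not the TensorFlow code itself. `W_grad` and `b_grad` mirror the names in the loop, while `rng`, `costs`, `error`, the fixed seed, and the use of float64 are assumptions added for illustration.

```python
import numpy as np

data = np.array([
    # x1, x2, x3, y
    [73., 80., 75., 152.],
    [93., 88., 93., 185.],
    [89., 91., 90., 100.],
    [96., 98., 100., 196.],
    [73., 66., 70., 142.],
], dtype=np.float64)

X = data[:, :-1]   # shape (5, 3)
Y = data[:, [-1]]  # shape (5, 1)

rng = np.random.default_rng(0)  # fixed seed: an assumption, only for repeatability
W = rng.normal(size=(3, 1))
b = rng.normal(size=(1,))
learning_rate = 0.000001

costs = []
for i in range(2000 + 1):
    error = X @ W + b - Y                     # prediction minus label, shape (5, 1)
    costs.append(float(np.mean(error ** 2)))  # mean squared error

    # hand-derived gradients of mean(error ** 2)
    W_grad = 2.0 * (X.T @ error) / len(X)     # shape (3, 1)
    b_grad = 2.0 * error.mean(axis=0)         # shape (1,)

    W -= learning_rate * W_grad
    b -= learning_rate * b_grad

print(costs[0], costs[-1])
```

If the update rule is right, the last recorded cost should be lower than the first, which is the same behaviour the printed cost column in the TensorFlow loop is meant to show.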

- slice data

X = data[:, :-1]

Y = data[:, [-1]]

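: The part before the comma indexes rows, and the part after it indexes columns.

: A colon with nothing written before or after it means from the beginning to the end, that is, all of the data.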
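The slicing rules above can be checked with a small NumPy sketch. It reuses the `data` array from the full code. The names `y_flat` and `pred`, and the all-zero placeholder weights, are assumptions introduced only for illustration. It also shows why the label column is selected with `[-1]` (a list) rather than `-1`: the list form keeps the result two-dimensional, so it lines up with the `(5, 1)` output of the matrix product.

```python
import numpy as np

data = np.array([
    # x1, x2, x3, y
    [73., 80., 75., 152.],
    [93., 88., 93., 185.],
    [89., 91., 90., 100.],
    [96., 98., 100., 196.],
    [73., 66., 70., 142.],
], dtype=np.float32)

X = data[:, :-1]      # all rows, every column except the last
Y = data[:, [-1]]     # all rows, last column selected with a list (stays 2-D)
y_flat = data[:, -1]  # all rows, last column selected with an integer (becomes 1-D)

print(X.shape, Y.shape, y_flat.shape)  # (5, 3) (5, 1) (5,)

# Placeholder weights (hypothetical, zeros) just to get a prediction of the right shape
pred = X @ np.zeros((3, 1), dtype=np.float32)

# Subtracting the 1-D labels broadcasts to (5, 5) instead of (5, 1)
print((pred - Y).shape, (pred - y_flat).shape)  # (5, 1) (5, 5)
```

With the 1-D labels the subtraction still runs without an error, so the cost would be computed over a `(5, 5)` array and come out wrong without any warning.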